Radeon HD 6970 And 6950 Review: Is Cayman A Gator Or A Crock?

Last month, Nvidia launched its GeForce GTX 580, but we told you to hold off on buying it. A week ago, Nvidia launched GeForce GTX 570 and we again said "wait." AMD's Cayman was our impetus. Were Radeon HD 6970 and 6950 worth the wait? Read on for more!

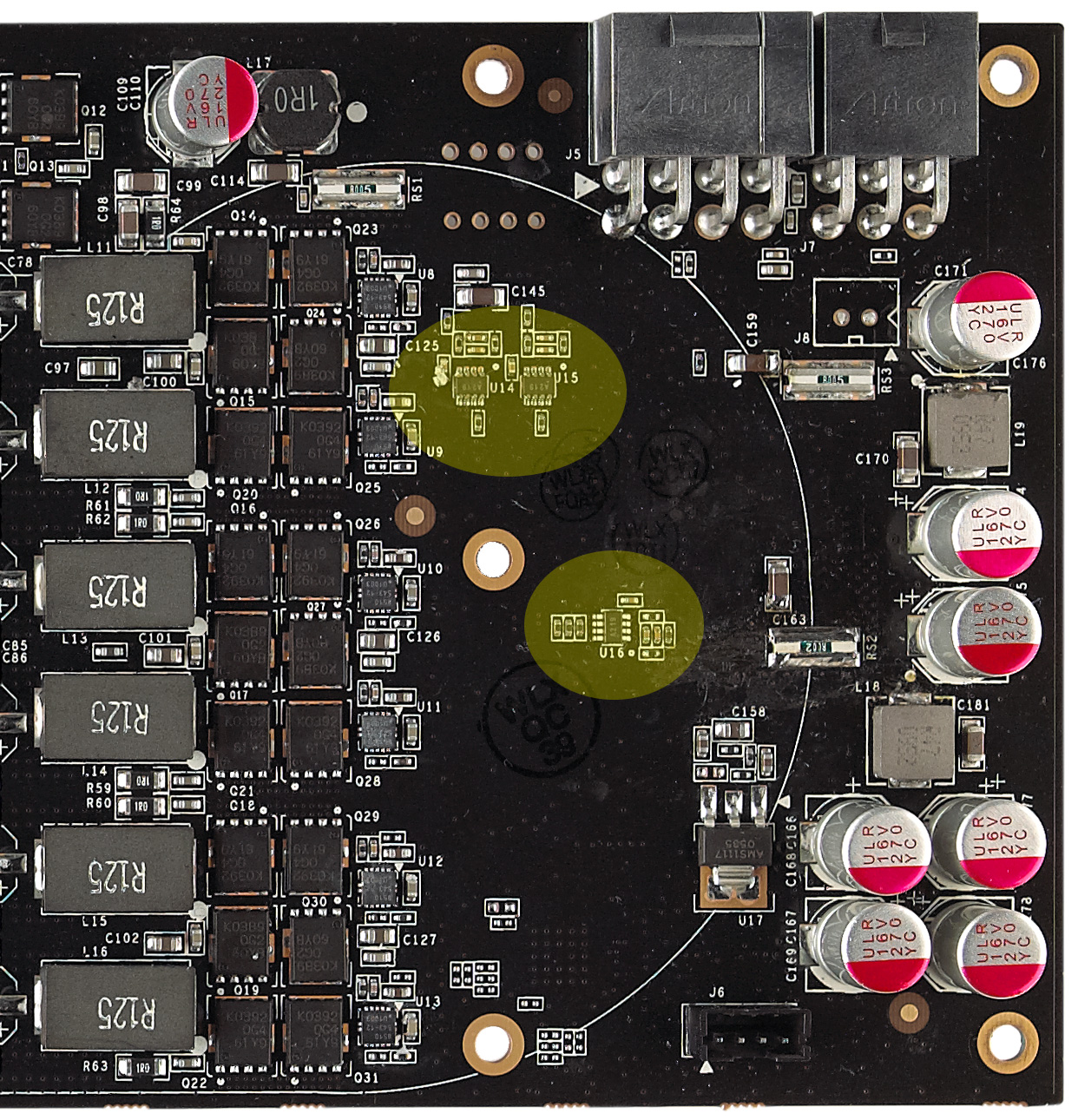

PowerTune: Changing The Way You Overclock

Then

Over time, AMD and Nvidia have integrated specific capabilities to help their hardware cope with the rigors of taxing applications and then gracefully scale back when the load isn’t as high.

Under extreme duress, usually in a piece of software like FurMark specifically written to apply atypically-intense workloads, both companies are able to throttle voltages and clock rates to protect against an unsustainable thermal situation. Additionally, they’ve incorporated protection mechanisms for voltage regulator circuitry that’ll also drop GPU clocks if an overvoltage occurs, even before the graphics processor heats up.

At the other end of the spectrum, Radeon and GeForce boards spin down when they’re not being taxed. This translates to significant power savings (not to mention better thermal and acoustic properties). The flexibility to scale up and down like this is what makes it possible to drop a desktop-class piece of silicon into a notebook and still end up with a useable system.

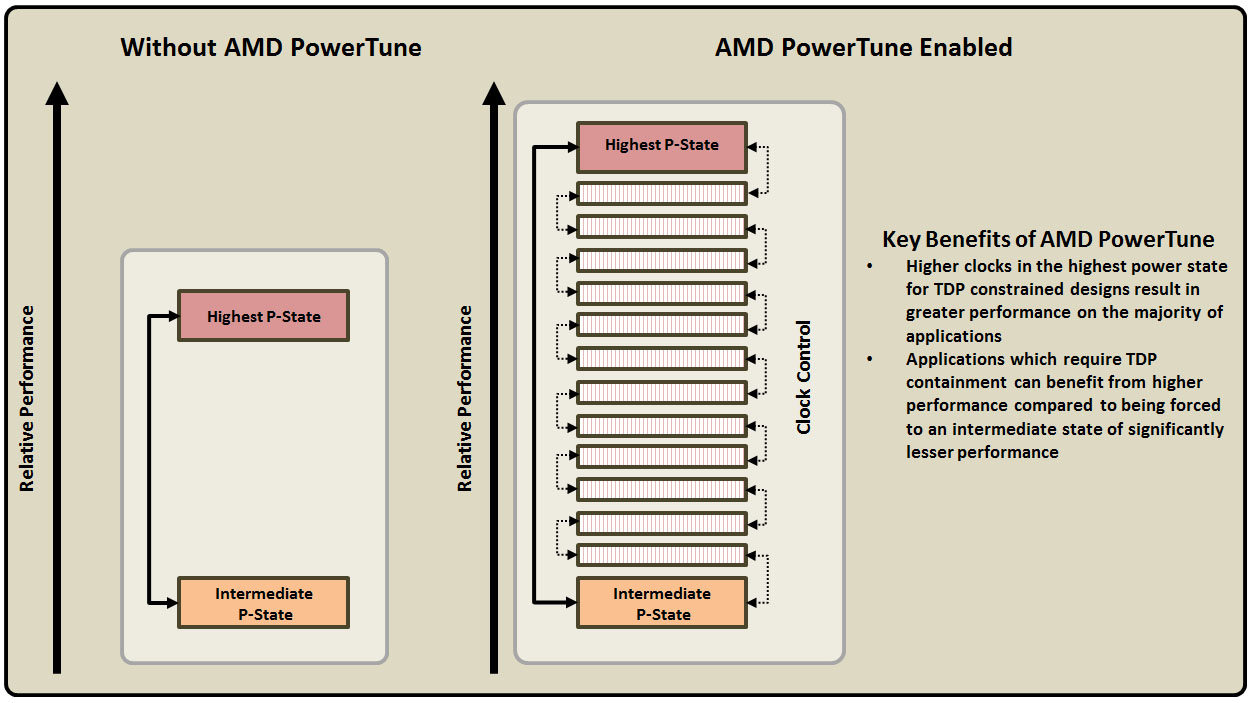

AMD’s suite of power management technologies has, for many generations, gone by the name of PowerPlay (apropos, given ATI’s origins in Toronto). It’s best known on the mobile side, because that’s where thermal constraints most affect what a given GPU can do. But PowerPlay is fairly rudimentary in the grand scheme of things. It supports an idle state, a peak power state, and intermediate states for things like video playback. However, each voltage/frequency combination is static, like rungs on a ladder.

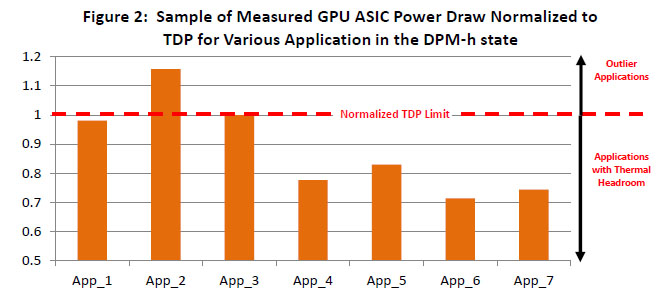

The problem is that applications don’t all behave the same way. So, even if a GPU is in its highest performance state, a piece of software like FurMark might trigger 260 W of power draw, while an application like Crysis pushes the card to consume 220 W. If you’re designing a graphics board, you can’t set the clocks and voltages with Crysis in mind; you have to make sure it’s stable in FurMark, too. That sort of worst-case combination of factors is what goes into the thermal design power we so often cite in our reviews.

A company like AMD or Nvidia defines a thermal design power for an entire graphics card, representing maximum power draw for reliable operation of the GPU, voltage regulation, memory, and so on. When they set the voltage and clock rate for that top P-state, three things are being taken into consideration: the TDP, the highest stable frequency at a given voltage, and the power characteristics of applications, which end up determining draw under full load, since some push hardware much harder than others.
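To make the worst-case problem concrete, here is a rough Python sketch of how a single fixed clock ends up being dictated by the most demanding application. The 260 W and 220 W figures are the ones from the example above; the linear power-versus-clock model, the 800 MHz reference clock, and the 240 W board limit are assumptions made purely for illustration, not AMD or Nvidia numbers.

```python
# Sketch of the static worst-case clock selection described above.
# ASSUMPTIONS (not from AMD or the article): board power scales linearly
# with engine clock at a fixed voltage, the reference clock is 800 MHz,
# and the board limit is 240 W. Only the 260 W / 220 W figures come from
# the article's example.

REF_CLOCK_MHZ = 800.0
TDP_W = 240.0
APP_POWER_AT_REF_W = {"FurMark": 260.0, "Crysis": 220.0}


def max_clock_under_tdp(power_at_ref_w, tdp_w=TDP_W, ref_clock_mhz=REF_CLOCK_MHZ):
    """Highest clock at which one application stays within the TDP."""
    return ref_clock_mhz * tdp_w / power_at_ref_w


def static_shipping_clock(app_power_at_ref_w, tdp_w=TDP_W):
    """A single fixed clock has to be safe for the most demanding application."""
    return min(max_clock_under_tdp(p, tdp_w) for p in app_power_at_ref_w.values())


shipping_mhz = static_shipping_clock(APP_POWER_AT_REF_W)
crysis_mhz = max_clock_under_tdp(APP_POWER_AT_REF_W["Crysis"])
print(f"static clock: {shipping_mhz:.0f} MHz")
print(f"Crysis alone could run at: {crysis_mhz:.0f} MHz")
print(f"left on the table for Crysis: {crysis_mhz - shipping_mhz:.0f} MHz")
```

Under these made-up numbers, the FurMark-style load sets the shipping clock, and the lighter game runs well below what the same power budget would allow.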


Now, in some cases, you might have to artificially cap a GPU’s performance to get it to fit within a given power envelope; this is particularly common in notebooks. Similarly, it might become necessary to limit clock speed to fit within the PCI Express specification. Sadly, the result could be that you limit the performance of World of Warcraft because you have to cap clocks with 3DMark Vantage’s Perlin Noise test in mind, preventing instability when that test runs. Suddenly, it makes a lot more sense why both GPU manufacturers hate programs like FurMark and OCCT so much. Those "outlier" apps, as they call them, artificially hobble what their cards can do.

At the end of the day, you have this situation where graphics cards are protected from damage. But the protection mechanism hammers performance in the name of safety. And if you’re running an application that doesn’t reach the board’s power limit, then you wind up leaving it underutilized—that’s performance left on the table.

Now

AMD claims that its PowerTune technology uses dynamic TDP management to address both “power problems” that GPU vendors face.

Instead of scaling up and down static power states, PowerTune dynamically calculates a GPU engine clock based on current power draw—right up to its highest possible state. Should you dial in an overclock and run an application that pushes the card beyond its TDP, PowerTune is supposed to keep the GPU in its highest P-state, but cut back on power use by dynamically reducing clock speed.
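As a rough illustration of that behavior, the Python sketch below clamps the engine clock whenever an estimated power figure exceeds a cap, and otherwise leaves the requested clock alone. This is not AMD's algorithm: the function name, the linear power-versus-clock model at a fixed voltage, the 250 W cap, and the power trace are all hypothetical.

```python
# Toy model of a power-based clock clamp, loosely following the description
# above. ASSUMPTIONS: power scales linearly with clock at a fixed voltage,
# the cap is 250 W, and the per-interval power estimates are made up.

def clamped_clock(requested_mhz, est_power_at_requested_w, power_cap_w):
    """Return the requested clock, scaled down only if the estimate exceeds the cap."""
    if est_power_at_requested_w <= power_cap_w:
        return requested_mhz
    return requested_mhz * power_cap_w / est_power_at_requested_w


REQUESTED_MHZ = 880.0   # the 6970's factory engine clock, per the article
POWER_CAP_W = 250.0     # hypothetical cap
trace_w = [180.0, 220.0, 260.0, 300.0, 240.0]  # hypothetical estimates at 880 MHz

clocks = [clamped_clock(REQUESTED_MHZ, p, POWER_CAP_W) for p in trace_w]
for power, clock in zip(trace_w, clocks):
    print(f"{power:5.0f} W estimated -> {clock:6.1f} MHz")
```

The point of the sketch is that light intervals run at the full clock and only the intervals that would exceed the cap get slowed, rather than the whole card being clocked for the heaviest case.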

This is not to say that PowerTune will prevent you from crashing if you get too aggressive on your overclock. We tried upping the Radeon HD 6970’s clocks in AMD’s Catalyst Control Center software, keeping PowerTune at its factory setting, and still managed to get Just Cause 2 and Metro 2033 to crater. Also, it’s worth noting that actually using PowerTune is akin to overclocking. Should your shiny new 6000-series card’s death turn out to be PowerTune-related, a warranty won’t cover it. Sounds a little like Nissan equipping its GT-R with launch control, and then denying warranty claims when someone pops the tranny. Nevertheless, you’ve been warned.

How does PowerTune help performance? Well, rather than designing the Radeon HD 6000s with worst-case applications in mind, AMD is able to dial in a higher core clock at the factory (880 MHz in the case of the 6970) and rely on PowerTune to modulate performance down in the applications that would have previously forced the company to ship at, say, 750 MHz.

How It Works

So, let’s say you’re overclocking your card, leaving PowerTune at its default setting in AMD’s driver. If you run an application that wasn’t TDP-constrained by the default clock, and still isn’t constrained by the higher clock, you’ll see the scaling you expected. If the application wasn’t TDP-limited before the overclock, but does cross that threshold afterward, you’ll realize a smaller performance boost. Finally, if the application was already pushing the card’s TDP, overclocking isn’t going to get you anything extra—PowerTune was already modulating performance.
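Those three outcomes fall out of even a very simple model. The Python sketch below assumes power scales linearly with clock at a fixed voltage and that performance tracks the effective clock; the 950 MHz overclock, the 250 W cap, and the per-application power figures are hypothetical values chosen only to land in each of the three cases.

```python
# Three overclocking outcomes under a power cap, using a toy linear model.
# ASSUMPTIONS: power is proportional to clock at fixed voltage, performance
# is proportional to effective clock, and all wattages here are invented.

STOCK_MHZ = 880.0
OC_MHZ = 950.0
CAP_W = 250.0


def effective_clock(target_mhz, power_at_stock_w, stock_mhz=STOCK_MHZ, cap_w=CAP_W):
    """Clock actually sustained once the power cap is enforced."""
    power_at_target_w = power_at_stock_w * target_mhz / stock_mhz
    if power_at_target_w <= cap_w:
        return target_mhz
    return target_mhz * cap_w / power_at_target_w


def overclock_gain(power_at_stock_w):
    """Fractional speedup from the overclock for one application."""
    before = effective_clock(STOCK_MHZ, power_at_stock_w)
    after = effective_clock(OC_MHZ, power_at_stock_w)
    return after / before - 1.0


apps = {
    "never capped": 200.0,            # under the cap before and after
    "capped only after OC": 240.0,    # crosses the cap once overclocked
    "already capped": 270.0,          # over the cap even at stock
}
for name, watts in apps.items():
    print(f"{name}: {overclock_gain(watts) * 100:.1f}% gain")
```

In this model the uncapped application sees the full clock increase, the borderline one sees part of it, and the application that was already power-limited sees nothing.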

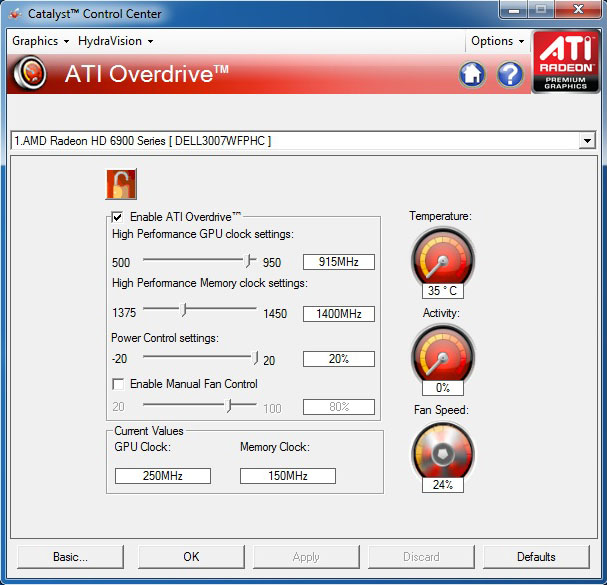

AMD gives you a way around this, though. In the Catalyst Control Center, under the AMD Overdrive tab, there’s a PowerTune slider that goes from -20% to +20%. Sliding down the scale reins in maximum TDP, helping you save energy at the cost of performance. Moving the other direction creates thermal headroom, allowing higher performance in apps that might have been capped previously.
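In effect, the slider scales the power limit the card is allowed to reach. A minimal Python sketch of that mapping follows; the -20% to +20% range is from the Overdrive tab described above, while the function name and the 250 W base cap are assumptions for illustration only.

```python
# Mapping a slider percentage onto a power cap.
# ASSUMPTIONS: the slider scales the cap linearly, and the 250 W base value
# is a placeholder, not a figure from AMD.

SLIDER_MIN_PCT = -20
SLIDER_MAX_PCT = 20


def adjusted_power_cap(base_cap_w, slider_pct):
    """Scale the base cap by the slider setting, rejecting out-of-range values."""
    if not SLIDER_MIN_PCT <= slider_pct <= SLIDER_MAX_PCT:
        raise ValueError(f"slider must be within {SLIDER_MIN_PCT}..{SLIDER_MAX_PCT}%")
    return base_cap_w * (100 + slider_pct) / 100.0


for pct in (-20, 0, 20):
    print(f"{pct:+d}% -> {adjusted_power_cap(250.0, pct):.0f} W cap")
```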

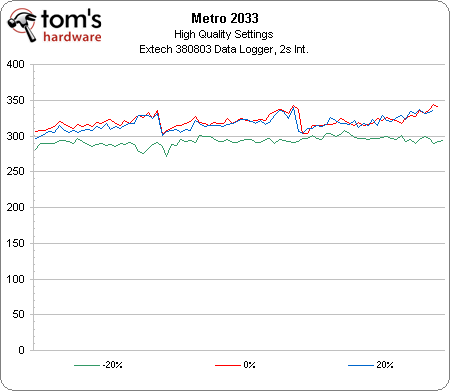

In order to test this out, we fired up a few games to spot check PowerTune’s behavior, eventually settling on Metro 2033—the same app we use to log power consumption later in this piece. We also dialed in a slight overclock on our Radeon HD 6970 (915/1400 MHz). With the slider set to -20%, we saw 48.54 frames per second at the game’s High detail setting. At the default 0%, performance jumped to 56.43 FPS. At +20%, performance increased slightly to 57.33 FPS.
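For a sense of scale, the short Python snippet below converts those three frame rates into percentage changes relative to the default slider position. The only inputs are the measurements quoted above; the variable and function names are ours.

```python
# Relative change in Metro 2033 frame rate versus the default (0%) slider
# setting, using the three results reported above.

fps_by_slider = {-20: 48.54, 0: 56.43, 20: 57.33}


def pct_change_vs_default(slider_pct, results=fps_by_slider):
    """Percentage difference in FPS compared with the 0% setting."""
    return (results[slider_pct] / results[0] - 1.0) * 100.0


for pct in (-20, 20):
    print(f"slider {pct:+d}%: {pct_change_vs_default(pct):+.1f}% FPS vs. default")
```

Dropping the slider to -20% costs roughly 14% of the frame rate, while raising it to +20% adds less than 2%.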

The logged power chart tells the tale. Dropping the PowerTune slider clearly pulls down peak power use (the difference is about 27 W on average). But because Metro is already using a lot of power, there isn’t any headroom left for the card to drive extra performance.

In a sense, AMD has already extracted much of the overclocking headroom you might have otherwise pursued in order to make the 6900-series cards more competitive. You can use the PowerTune slider to make more headroom available, but at the end of the day, the gains you see will be application-dependent.

AMD says that PowerTune is a silicon-level feature enabled by counters placed throughout the GPU. It works in real time without relying on driver or application support. It’s programmable, too, so you can expect it to make a reappearance when Cayman is turned into a mobile part called Blackcomb.


Annisman Thanks for the review Angelini, these new naming schemes are hurting my head, sometimes the only way to tell (at a quick glance) which AMD card matches up to what Nvidia card, is by comparing the prices, which I think is bad for the average consumer.

rohitbaran These cards are to GTX 500 series what 4000 series was to GTX 200. Not the fastest at their time but offer killer performance and feature set for the price. I too expected 6900 to be close to GTX 580, but it didn't turn out that way. Still, it is the card I have waited for to upgrade. Right in my budget.

notty22 AMD's top card is about a draw with the gtx 570.

Pricing is in line.

Gives AMD only hold outs buying options, Nvidia already offered

Merry Christmas

IzzyCraft Sorry all i read was this

"This helps catch AMD up to Nvidia. However, Intel has something waiting in the wings that’ll take both graphics companies by surprise. In a couple of weeks, we'll be able to tell you more." and now i'm fixated to weather or not intel's gpu's can actually commit to proper playback.

andrewcutter but from what i read at hardocp, though it is priced alongside the 570, 6970 was benched against the 580 and they were trading blows... So toms has it at par with 570 but hard has it on par with 580.. now im confused because if it can give 580 perfomance or almost 580 performance at 570 price and power then this one is a winner. Sim a 6950 was trading blows with 570 there. So i am very confused

sgt bombulous This is hilarious... How long ago was it that there were ATI fanboys blabbering "The 6970 is gonna be 80% faster than the GTX 580!!!". And then reality hit...