World Of Warcraft: Cataclysm--Tom's Performance Guide

Ready for the launch of Blizzard's World of Warcraft: Cataclysm expansion tomorrow? Is your PC? We test 24 different graphics cards from AMD and Nvidia, CPUs from AMD and Intel, and compare DirectX 9 to DirectX 11, showing you which settings to use.

Nvidia: High-End Cards At Ultra Quality

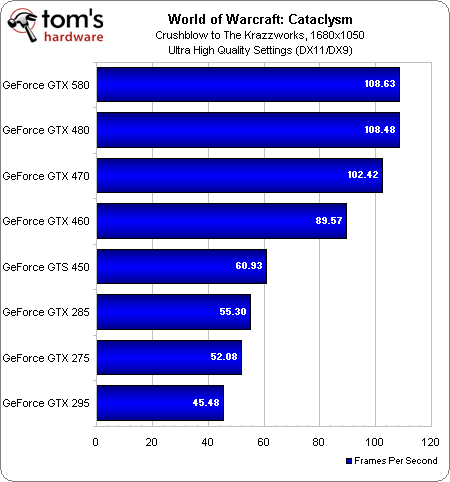

Thanks in part to performance gains enabled by DirectX 11 (more on that shortly), all of Nvidia's GeForce GTX 400-series cards can play Cataclysm at Ultra quality. The DX10-capable GeForce GTX 200-series boards don't enjoy the same performance boost, and so they occupy the bottom half of our charts.

Once again, we'll point out a problem with the second dual-GPU card in our little benchmark-fest. The GeForce GTX 295, like AMD's Radeon HD 5970, sees no gains from its second graphics processor. In fact, it even runs slower than a single-GPU GeForce GTX 275 at all three resolutions. Nvidia claims SLI should function normally, but the settings we were told enable proper scaling did little to improve performance.

The order here is somewhat predictable. If you've purchased a GeForce GTX 580, you're probably wondering why it isn't beating the GeForce GTX 480 (or even 470) by much of a margin. The answer is that the top three cards are being limited by the performance of our overclocked Core i7-980X. It'll take more than a mainstream resolution and Ultra quality settings to push these boards harder.

Nvidia's GeForce GTX 460 remains a solid choice here, as it puts down benchmark results that are even faster than AMD's Radeon HD 5870.

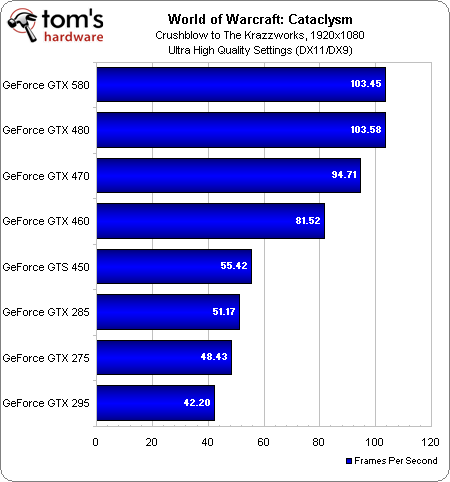

We're still CPU-bound up top at 1920x1080. The GeForce GTX 460 still looks like the best value here. And are we the only ones amazed that a GeForce GTS 450, benefiting from DirectX 11 support, is able to beat the GeForce GTX 285? And poor GeForce GTX 295...after spending so much on a flagship board, that last-place finish has to hurt.

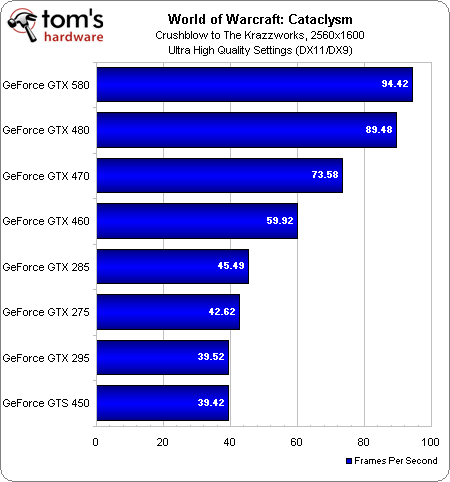

Finally, a high resolution puts some distance between the fastest two boards--though it's decidedly not large enough to justify upgrading from one card to the other (perhaps our anti-aliasing tests will show more of a difference).

A more intense graphics load pushes the mainstream GeForce GTS 450 down to the bottom of the stack here. Optimizations to the API are only able to help when there's not a bottleneck elsewhere, and the card's limited shading muscle is what holds it back when the resolution increases.

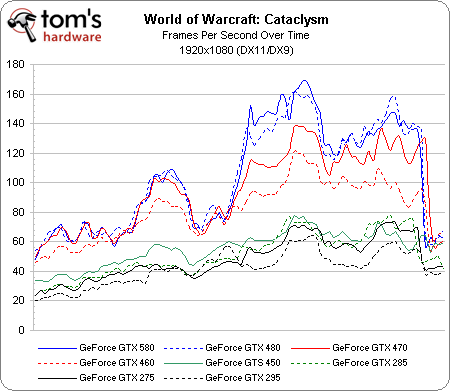

Here's that run at 1920x1080, plotted over time. There's a massive gap between the DirectX 11-capable cards and last generation's DX10 boards (with the exception of the GTS 450, which hangs out at the top of that latter bunch).

Odem Kind of unfortunate to see that if I had gone with an i5 750 instead of a 955, I'd be seeing more fps. Although the money I saved for the same frames in most other games leaves me happy.

WoW only uses 2 cores by default. However, you can configure it to "quasi" use more cores. You have to manually edit your config.wtf and change the variable SET processAffinityMask "3" (3 is the default, meaning 2 cores) to the following values for the respective processors:

i7 Quadcore with HT - 85

Any Quadcore chips with no HT - 15

i5 Quadcore which does not have HT as far as I know - 15

i5 Dualcore with HT - 5

Dualcore with HT - 5

Dualcore without HT - 5

AMD tricore - 7

There used to be a blue post explaining the settings and how to calculate it for different cores. But the old forums got wiped.
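The values listed above look like bitmasks, and they can be reproduced with a few lines of Python. This is only a sketch under two assumptions that the comment does not confirm: that `processAffinityMask` is a plain bitmask in which bit n enables logical processor n, and that Hyper-Threading siblings are numbered adjacently (0/1, 2/3, and so on). The helper names `affinity_mask` and `one_thread_per_core` are hypothetical and exist only for illustration.

```python
def affinity_mask(logical_cpus):
    """Return an integer with one bit set per logical CPU index."""
    mask = 0
    for cpu in logical_cpus:
        mask |= 1 << cpu
    return mask


def one_thread_per_core(physical_cores, threads_per_core=1):
    """Select the first logical CPU of each physical core.

    Assumption: sibling threads of one core have adjacent indices,
    so stepping by threads_per_core skips the second thread of each core.
    """
    total_logical = physical_cores * threads_per_core
    return affinity_mask(range(0, total_logical, threads_per_core))


if __name__ == "__main__":
    print(one_thread_per_core(4, 2))  # quad-core with HT: bits 0, 2, 4, 6
    print(one_thread_per_core(4))     # quad-core without HT: bits 0-3
    print(one_thread_per_core(3))     # tri-core: bits 0-2
    print(one_thread_per_core(2, 2))  # dual-core with HT: bits 0 and 2
    print(one_thread_per_core(2))     # dual-core without HT: bits 0 and 1
```

Under these assumptions the script prints 85, 15, 7, 5, and 3, which matches the listed values for every case except the dual-core without HT, where this reading gives the default of 3 rather than 5.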

sudeshc Not that big a fan of WoW, but still happy to see that they keep in mind that people have low-end hardware too.

SpadeM I'm impressed, if Chris went to all that trouble to benchmark the new expansion for a mmorpg in such great detail it can mean only 2 things:

1. Chris is a closet WoW-player

2. Really bored

With that said, i really do hope to see more of these articles, albeit with a more demanding title on the bench, even if it's from a "lesser" developer/publisher combo.

PS: I do hope ppl appreciate my sense of humor :P

dirtmountain Damn fine job Mr. Angelini, the most comprehensive hardware guide I've ever seen for WoW. This will save me hours, if not days of time when talking to players about their systems. Much appreciated.

Bluescreendeath The Intel CPU scaling part was lacking...i7 980X at 3.7GHz? For WoW? Really?

And why only Corei CPUs? Where are all the Core2s? 75% of Intel users still use Core2s and 775s!

voicu83 i hate you so much tom's hardware ... now i have to go buy an intel proc instead of my phenom ii x4 :D ... and add a dx11 board on top of it ... oh well, there goes my santa's gift :P

Moneyloo Simply astounded by the time and effort that must have gone into this piece. It also makes me greatly look forward to my new Maingear desktop arriving on the 23rd just in time for Christmas. Dual OC gtx580s in sli with a corei7 FTW. Ultra everything here I come!

cangelini (quoting SpadeM): "I'm impressed, if Chris went to all that trouble to benchmark the new expansion for a mmorpg in such great detail it can mean only 2 things: 1. Chris is a closet WoW-player 2. Really bored. With that said, i really do hope to see more of these articles, albeit with a more demanding title on the bench, even if it's from a "lesser" developer/publisher combo. PS: I do hope ppl appreciate my sense of humor"

It's a little easier to talk about WoW since I've been playing it for way too long, but I definitely want to see us doing more comprehensive coverage of demanding titles on launch day. It's all a matter of trying to convince the software guys to give a hardware site early access to the game. That's the hard part :)

mitch074 With hardware-accelerated cursor now enabled, OpenGL has finally become usable in WoW; was there any testing done on that? Not only does it sometimes give a boost to Nvidia cards, it's also the 'default' setting for Linux players - incidentally, the ones who were asking for the feature for a while.