Zotac Sonix NVMe SSD Review: Our First E7 Tests


Mixed Workloads And Steady State

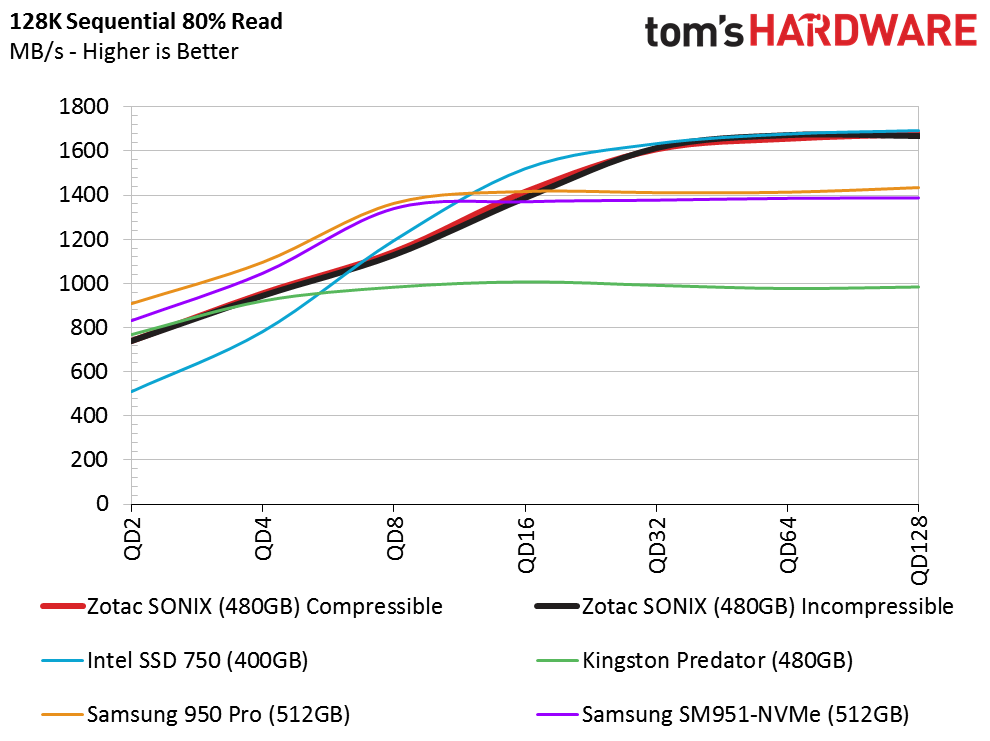

80 Percent Sequential Mixed Workload

Our mixed workload testing is described in detail here, and our steady state tests are described here.

Even before Phison finishes optimizing its controller ahead of an official launch, the Sonix performs well in our mixed sequential workloads. It's much more competitive against existing SSDs than it appeared to be when we tested with 100 percent reads or writes.

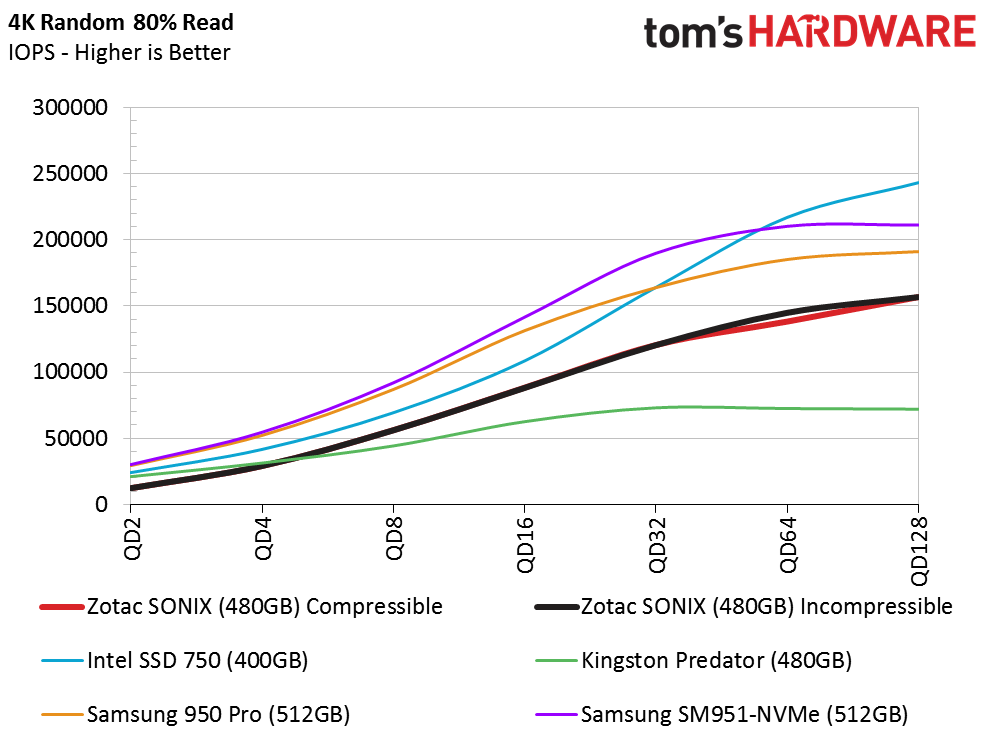

80 Percent Random Mixed Workload

Phison has historically struggled with mixed random workloads, though we know it's a major focus for 2016. The 480GB Sonix fares better against other NVMe-attached drives, even if we still see low I/O throughput at the queue depths most desktop users experience.

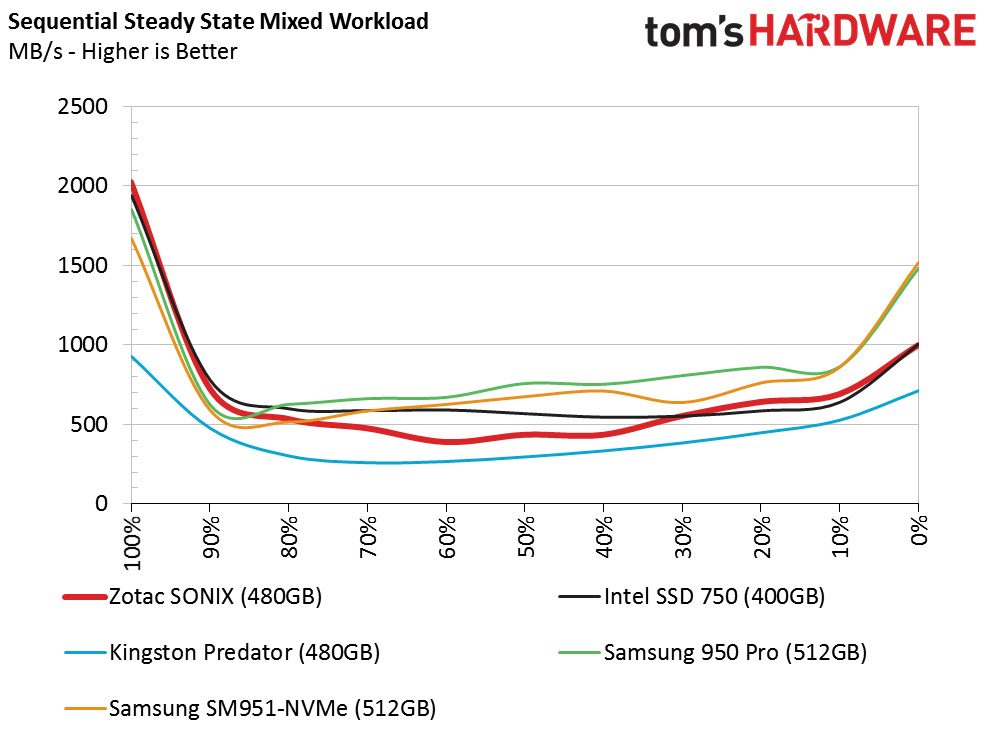

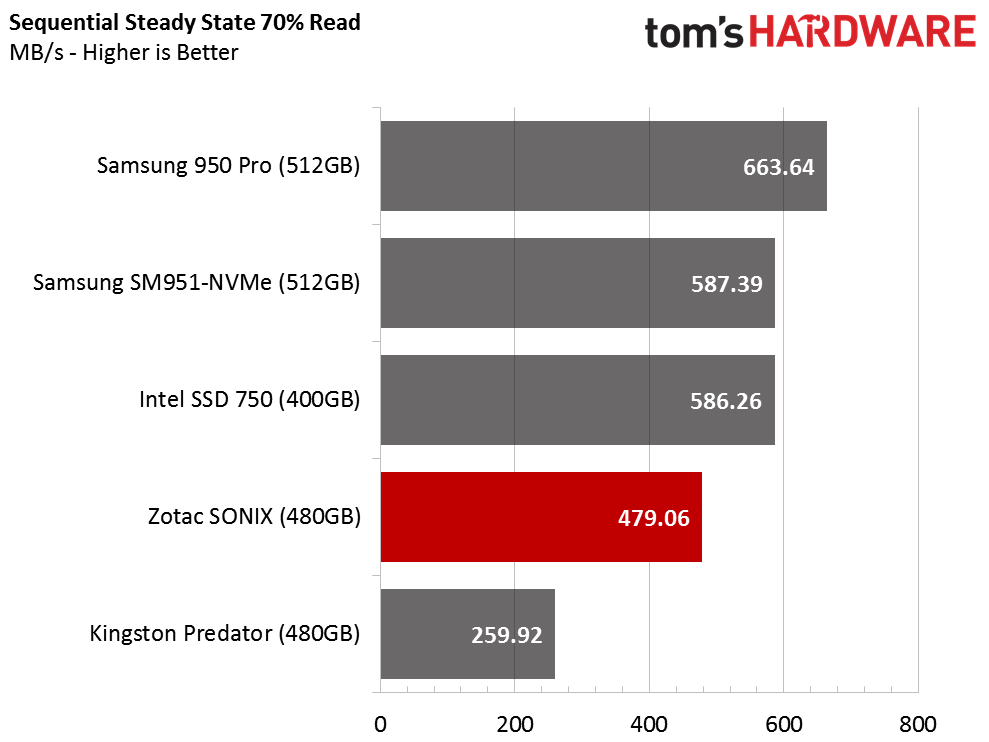

Sequential Steady State

NVMe has big implications for the workstation market, where tons of bandwidth and low latency improve the performance of storage-bound applications. Here, we're looking at sequential transfers in a steady state condition. The far left of the graph represents 100 percent reads; moving right, the blend shifts toward writes in 10 percent increments.

Most professional users work from secondary storage, so it's easy to hit a 50/50 mix while editing large multimedia files. Working with CAD files produces a different mix. Ultimately, reading this chart requires understanding how your own workload behaves.

We're most concerned with two specific data points. Desktop applications are often characterized by an 80/20 blend of reads and writes, while workstations shift right a bit to 70/30. Even enthusiasts won't see steady state conditions, though, thanks to the way wear-leveling and garbage collection algorithms work. You'd have to fill your drive up completely to get there.
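To make the chart's x-axis concrete, the Python sketch below enumerates the read/write blends in 10 percent steps and marks the 80/20 desktop and 70/30 workstation points. It also estimates blended bandwidth with a deliberately idealized model (a weighted harmonic mean of pure-read and pure-write throughput). That model, the function names, and the bandwidth figures are hypothetical assumptions for illustration, not our test methodology or measured results.

```python
# Hypothetical sketch: enumerate the read/write blends in the steady-state
# sequential chart (100 percent reads on the left, 10 percent steps toward
# writes) and estimate blended bandwidth with a simple idealized model.
# ASSUMPTION: the drive serves reads and writes one after another, so time per
# byte is a weighted sum of pure-read and pure-write time per byte. Real
# controllers overlap work, so treat this as illustration only.


def mix_points(step_percent: int = 10) -> list[tuple[int, int]]:
    """Return (read %, write %) pairs from 100/0 down to 0/100."""
    return [(r, 100 - r) for r in range(100, -1, -step_percent)]


def blended_bandwidth(read_mbps: float, write_mbps: float, read_percent: int) -> float:
    """Idealized blended throughput in MB/s (weighted harmonic mean)."""
    r = read_percent / 100
    w = 1 - r
    return 1 / (r / read_mbps + w / write_mbps)


if __name__ == "__main__":
    # Hypothetical pure-read and pure-write figures, not measured data.
    READ_MBPS, WRITE_MBPS = 2000.0, 800.0
    for read_pct, write_pct in mix_points():
        label = ""
        if (read_pct, write_pct) == (80, 20):
            label = "  <- typical desktop blend"
        elif (read_pct, write_pct) == (70, 30):
            label = "  <- typical workstation blend"
        bw = blended_bandwidth(READ_MBPS, WRITE_MBPS, read_pct)
        print(f"{read_pct:3d}/{write_pct:<3d} {bw:7.1f} MB/s{label}")
```

The harmonic mean is chosen because mixing reads and writes by bytes adds their per-byte times. A drive that handles reads and writes in parallel would beat this estimate.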

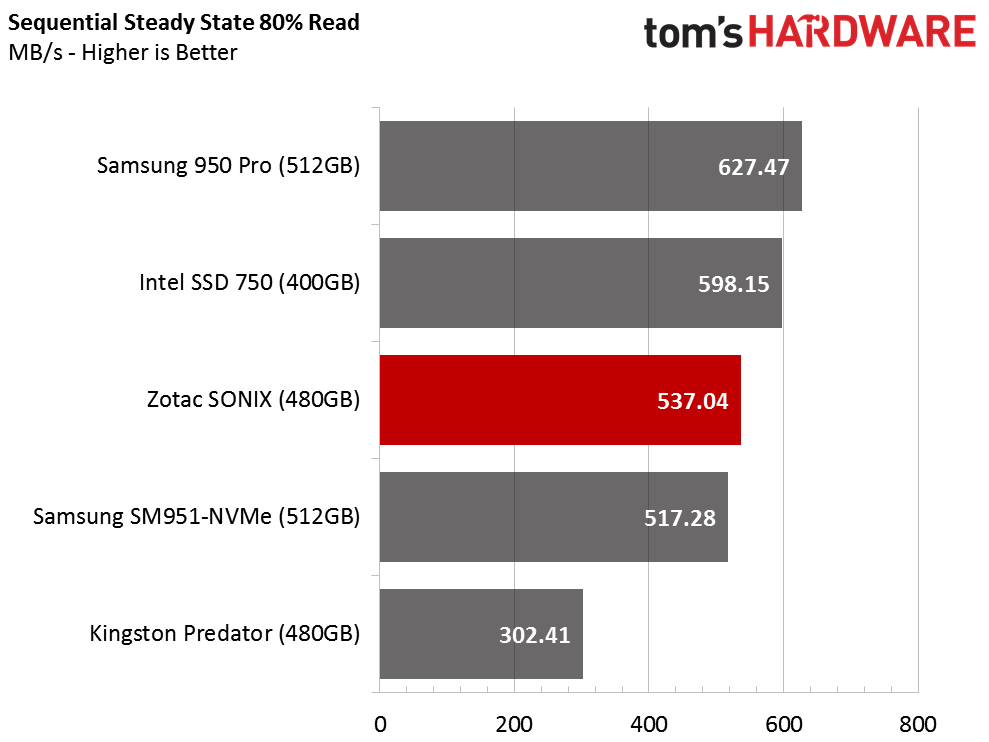

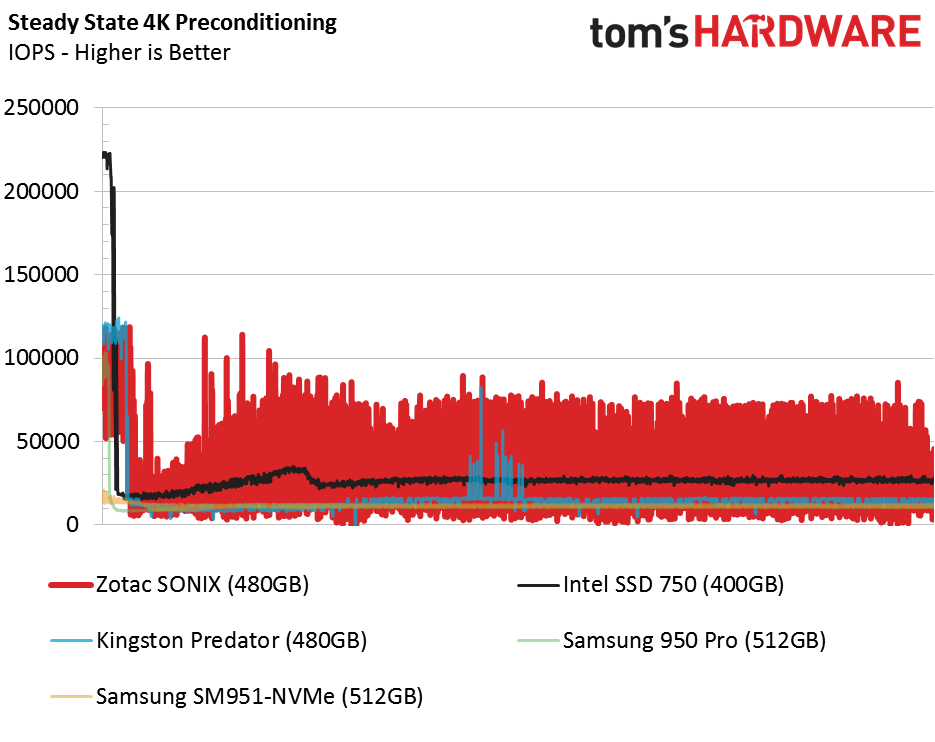

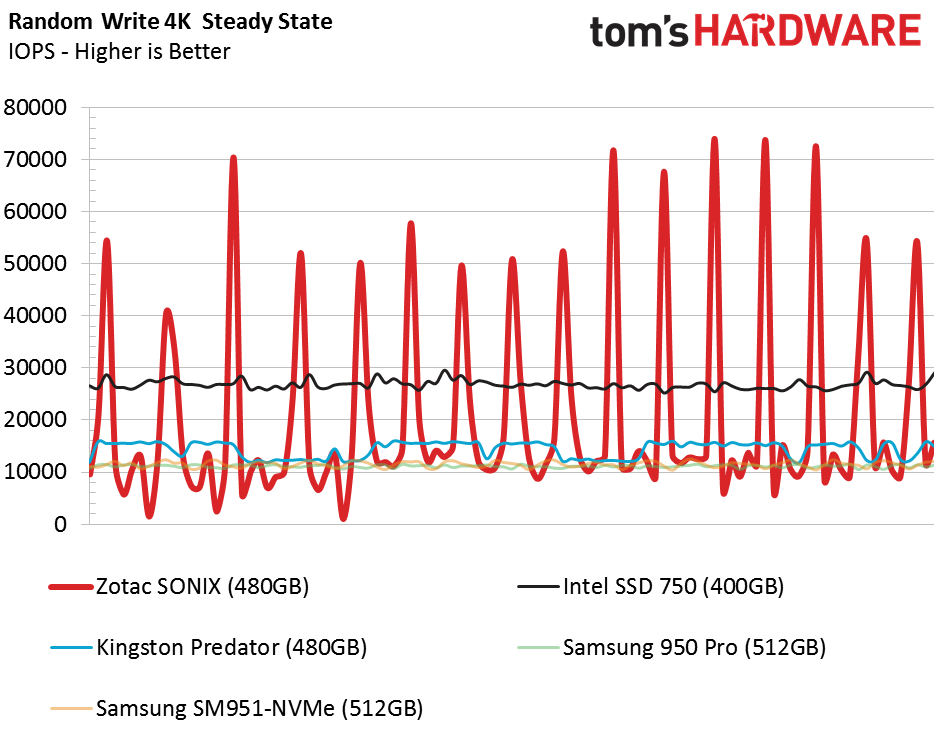

Random Write Steady State

The same goes for inducing steady state with random writes; it's just not going to happen on a desktop. Good thing, too. This is a particularly low-performance condition in which the drive is already completely full of data as additional 4KB random writes keep hitting it. It's an enterprise-oriented test most relevant to database servers.
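A quick back-of-the-envelope calculation shows why a desktop rarely gets there. The Python sketch below counts the 4KB writes needed to cover a 480GB drive once and how long that takes at a fixed daily write volume. The decimal capacity, the 4096-byte block size, and the 20GB/day figure are assumptions chosen for illustration. Reaching steady state requires writing at least this much, because the drive has to be full before new writes start landing on occupied space.

```python
# Back-of-the-envelope sketch of why random-write steady state is unlikely on
# a desktop. ASSUMPTIONS: decimal (vendor) capacity, 4KB = 4096-byte writes,
# and a hypothetical daily write volume; none of these are measurements.


def writes_to_fill(capacity_bytes: int, block_bytes: int = 4096) -> int:
    """Number of block-sized writes needed to cover the user capacity once."""
    return capacity_bytes // block_bytes


def days_to_fill(capacity_bytes: int, bytes_written_per_day: int) -> float:
    """Days of writing at a fixed daily volume to write the capacity once."""
    return capacity_bytes / bytes_written_per_day


if __name__ == "__main__":
    capacity = 480 * 10**9  # the 480GB Sonix, in decimal bytes
    daily = 20 * 10**9      # hypothetical 20GB of host writes per day
    print(f"4KB writes to fill once: {writes_to_fill(capacity):,}")
    print(f"days to fill once at 20GB/day: {days_to_fill(capacity, daily):.0f}")
```

Under these assumptions it prints 117,187,500 writes and 24 days. That is just to fill the drive once, before any sustained overwriting begins.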


But this metric does expose the lowest random performance you'll see from a drive, and we really want to see the smallest possible deviations between minimums and maximums. Intel's 400GB SSD 750 gives us what we're looking for, signaling it'd be a great candidate for a RAID 0 array. The Sonix, on the other hand, yields inconsistent I/O performance.
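One way to put a number on "deviations between minimums and maximums" is sketched below in Python. It assumes a log of per-interval IOPS samples and reports a min-to-max ratio and a coefficient of variation. Both metric names and the sample values are illustrative assumptions, not the review's own scoring method or measured data.

```python
# Minimal sketch of quantifying consistency in a steady-state random-write log.
# ASSUMPTION: input is a list of per-interval IOPS samples; the metrics are
# illustrative, not the review's methodology, and the samples are made up.
from statistics import mean, pstdev


def consistency_report(iops_samples: list[float]) -> dict[str, float]:
    lo, hi = min(iops_samples), max(iops_samples)
    avg = mean(iops_samples)
    return {
        "min": lo,
        "max": hi,
        # 1.0 means the slowest interval matched the fastest (perfectly flat).
        "min_to_max_ratio": lo / hi,
        # Population standard deviation relative to the mean; lower is steadier.
        "coefficient_of_variation": pstdev(iops_samples) / avg,
    }


if __name__ == "__main__":
    steady = [20000, 20500, 19800, 20200, 20100]
    erratic = [5000, 32000, 8000, 27000, 12000]
    for name, samples in (("steady", steady), ("erratic", erratic)):
        report = consistency_report(samples)
        print(name, {k: round(v, 3) for k, v in report.items()})
```

A drive like the one described as a good RAID 0 candidate would score a ratio close to 1.0 and a low coefficient of variation. An inconsistent drive scores the opposite on both.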


Chris Ramseyer was a senior contributing editor for Tom's Hardware. He tested and reviewed consumer storage.


2Be_or_Not2Be Is it too much to ask that all of your graphs keep the same colors for each product? On one page the Intel 750 is light blue; on the next, it's black. Consistency here would help the reader.

CRamseyer The different colors in the first and later parts of the review are a one-off. We added the compressible/incompressible tests early in the review, so the colors shifted. In the future, I'll just use a red dotted line when adding something special.

Kewlx25 Correction:

"For a bit of context, AHCI operates on one command at a time, but can queue up to 32 of them. NVMe can operate on as many as 256 commands simultaneously, and each of those commands can queue another 256."

https://en.wikipedia.org/wiki/NVM_Express#Comparison_with_AHCI

NVMe: 65535 queues; 65536 commands per queue
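The arithmetic behind this correction can be checked directly. The Python sketch below multiplies queue count by queue depth. It uses AHCI's single 32-entry command queue and the NVMe ceilings from the linked comparison, and compares them with the article's original 256 x 256 figure. These are protocol limits only; real controllers and drivers typically expose far fewer queues.

```python
# Arithmetic sketch of the maximum outstanding commands implied by each
# protocol's limits. AHCI: one command queue, 32 entries deep. NVMe (per the
# linked comparison): up to 65535 queues, up to 65536 commands per queue.
# These are protocol ceilings, not what a given drive actually supports.

AHCI_QUEUES, AHCI_DEPTH = 1, 32
NVME_QUEUES, NVME_DEPTH = 65535, 65536


def max_outstanding(queues: int, depth: int) -> int:
    """Upper bound on commands in flight: number of queues times queue depth."""
    return queues * depth


if __name__ == "__main__":
    print("AHCI:", max_outstanding(AHCI_QUEUES, AHCI_DEPTH))               # 32
    print("NVMe:", f"{max_outstanding(NVME_QUEUES, NVME_DEPTH):,}")        # 4,294,901,760
    print("Original 256 x 256 figure:", max_outstanding(256, 256))         # 65536
```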

CRamseyer You are correct; that was my fault. I built a test that does 256/256 to see how it works. I'll make the correction.

josejones Good article, Chris Ramseyer.

"Real-world software rarely pushes fast storage devices to their limits"

Chris, I am curious: what holds NVMe SSDs back from reaching their full potential, and what needs to come together for them to get there? Will Kaby Lake and the new 200-series chipset (Union Point) motherboards help get better performance out of the new NVMe and Optane SSDs? I've heard we need a far bigger bus, too. I am holding out for an NVMe SSD that will actually reach the claimed 32 Gb/s, or close to it, minus overhead.
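For context on the "32 Gb/s" figure, the Python sketch below assumes a PCIe 3.0 x4 link, which is the typical interface for NVMe drives of this generation. It computes the raw link rate and the rate after 128b/130b line encoding. Protocol overhead such as packet headers and flow control is not modeled here, so delivered throughput is lower still.

```python
# Sketch of where a "32 Gb/s" figure comes from, ASSUMING a PCIe 3.0 x4 link.
# PCIe 3.0 signals at 8 GT/s per lane and uses 128b/130b line encoding.
# Packet/protocol overhead (TLP headers, flow control) is NOT modeled, so
# real-world throughput lands lower than these numbers.

GT_PER_S_PER_LANE = 8e9   # PCIe 3.0 raw transfer rate per lane
LANES = 4
ENCODING = 128 / 130      # 128 payload bits per 130 bits on the wire

raw_gbps = GT_PER_S_PER_LANE * LANES / 1e9
after_encoding_gbps = raw_gbps * ENCODING
after_encoding_gbytes_per_s = after_encoding_gbps / 8

print(f"raw: {raw_gbps:.1f} Gb/s")                                   # 32.0 Gb/s
print(f"after encoding: {after_encoding_gbps:.2f} Gb/s")             # 31.51 Gb/s
print(f"after encoding: {after_encoding_gbytes_per_s:.2f} GB/s")     # 3.94 GB/s
```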

TbsToy So Chris, what is your opinion on which of these drives should be used where? Workstation, PC desktop, laptop, etc.: which drive in this group is the most suitable for which machine?

W.P.

TbsToy So Chris, which of these drives do you consider to be the most useful with which type of machine: server, workstation, PC, or laptop?

W.P.

Kewlx25 (replying to josejones's question above):

This is my semi-educated guess.

1) The storage chips need to be faster, but they are pretty fast.

2) Controllers need to be faster: less overhead, better command concurrency, etc.

3) There is a latency vs. throughput issue. If most programs make one request at a time and wait for the response before making the next, you need really low latency to get high bandwidth. On the other hand, if a program makes many concurrent requests, it multiplies its theoretical peak bandwidth.

It's a similar issue to why TCP has a transmit window: waiting for a response over a high-latency link slows you down. The main difference is that TCP pushes data, while reading from a drive pulls it.
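The latency vs. throughput point follows from Little's law: throughput equals the number of requests in flight divided by the time each one takes. TCP's window follows the same rule, because data in flight equals bandwidth times round-trip time. The Python sketch below assumes a constant, hypothetical 100-microsecond latency per 4KB read. Real SSDs only hold latency roughly steady up to some queue depth.

```python
# Minimal sketch of latency vs. throughput using Little's law:
# throughput (IOPS) = outstanding requests / latency per request.
# ASSUMPTION: latency stays constant as queue depth rises, which real SSDs
# only approximate up to a point; the latency value is hypothetical.


def iops(queue_depth: int, latency_s: float) -> float:
    """Requests completed per second with queue_depth requests always in flight."""
    return queue_depth / latency_s


def bandwidth_mb_s(queue_depth: int, latency_s: float, block_bytes: int = 4096) -> float:
    """Throughput in MB/s for fixed-size requests."""
    return iops(queue_depth, latency_s) * block_bytes / 1e6


if __name__ == "__main__":
    latency = 100e-6  # hypothetical 100 microseconds per 4KB read
    for qd in (1, 4, 32):
        print(f"QD{qd:<2} -> {iops(qd, latency):>9,.0f} IOPS, "
              f"{bandwidth_mb_s(qd, latency):7.1f} MB/s")
```

Under that assumption, a program issuing one request at a time tops out at 10,000 IOPS (about 41 MB/s). Keeping 32 requests in flight reaches 320,000 IOPS. The only ways to go faster at QD1 are to cut latency or to issue more requests at once.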

CRamseyer Optane will change things a bit because it lowers QD1 latency. It will make your computer feel faster, improving the user experience.

Beyond that, we need a complete overhaul to effectively utilize NVMe in regular computers. Software needs to request more data at the same time. The Windows file systems (other than ReFS) are all aging. We need a big shift in software across the board. It's the same story with video games and other software right now: nothing pushes the limits of the hardware. VR could change that, but I suspect we are still five years away from VR for anyone other than enthusiasts.

TbsToy: NVMe accelerates all tasks by lowering latency. We're starting to see the tech ship in notebooks from MSI and Lenovo. Custom desktops from companies like Maingear and AVA sell with NVMe as well. I would say use it wherever you find a place. The Samsung NVMe drives sell for a very small premium over the SATA-based 850 Pro. You get workstation and, in some cases, enterprise-level performance for the rare instances when the load gets that high.
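For software that "reaches out for more data at the same time," the Python sketch below shows one pattern. It issues several positional reads concurrently from a thread pool instead of one after another, which lets the OS keep more requests outstanding. It assumes a POSIX system, since `os.pread` is not available on Windows. The 64KB request size and worker count are hypothetical choices. This illustrates the idea only and does not describe how any particular application or file system works.

```python
# Minimal sketch of issuing reads concurrently instead of serially.
# ASSUMPTIONS: a POSIX system (os.pread is unavailable on Windows), a thread
# pool as the concurrency mechanism, and a hypothetical 64KB request size.
import os
import tempfile
from concurrent.futures import ThreadPoolExecutor

CHUNK = 64 * 1024  # hypothetical request size


def read_chunk(fd: int, index: int) -> bytes:
    # pread takes an explicit offset, so threads can share one descriptor safely.
    return os.pread(fd, CHUNK, index * CHUNK)


def read_concurrently(path: str, workers: int = 8) -> bytes:
    size = os.path.getsize(path)
    chunks = (size + CHUNK - 1) // CHUNK
    fd = os.open(path, os.O_RDONLY)
    try:
        with ThreadPoolExecutor(max_workers=workers) as pool:
            # map() returns results in request order even though reads overlap.
            parts = pool.map(lambda i: read_chunk(fd, i), range(chunks))
            return b"".join(parts)
    finally:
        os.close(fd)


if __name__ == "__main__":
    data = os.urandom(1_000_000)
    with tempfile.NamedTemporaryFile(delete=False) as f:
        f.write(data)
        path = f.name
    try:
        assert read_concurrently(path) == data
        print("concurrent read matches", len(data), "bytes")
    finally:
        os.remove(path)
```

Threads work here because `os.pread` releases Python's global interpreter lock while it waits on I/O. A freshly written file will likely be served from the page cache, so this demo checks correctness rather than drive performance.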

jt AJ (replying to CRamseyer's comment above):

CPUs are hitting a wall because software isn't written to take advantage of new instructions, which is why we see only about 5% improvement in CPU performance year after year. That's also because of Microsoft Windows' legacy support, which is why so much old software still works on new versions of Windows.

When software is programmed to take advantage of CPUs' new instructions, we'll see a huge jump in CPU performance, which would also mean more data pulled from the SSD overall, just faster.