AMD Unveils EPYC 'Milan' 7003 CPUs, Zen 3 Comes to 64-Core Server Chips

From Rome to Milan

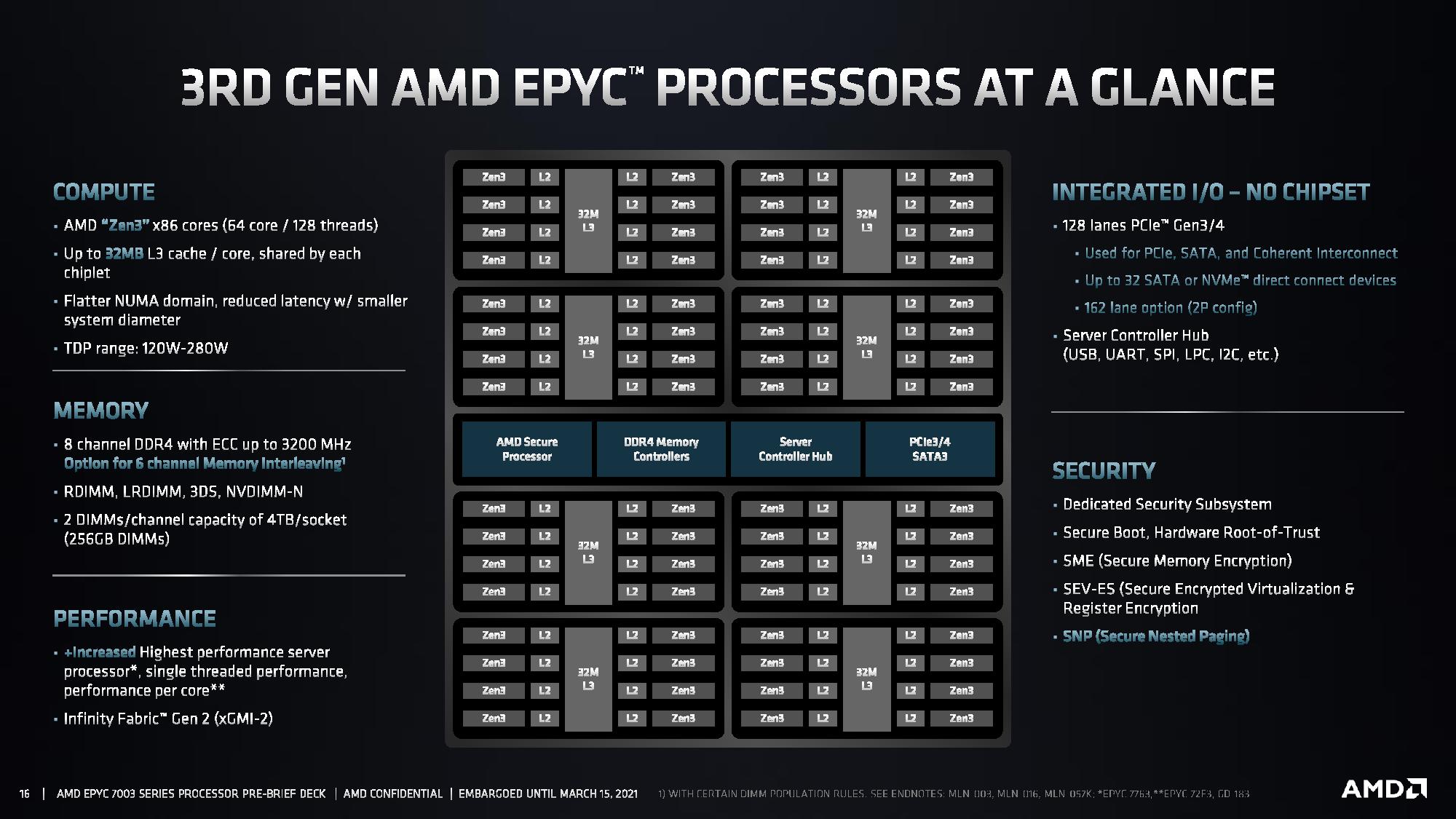

AMD unveiled its EPYC 7003 'Milan' processors today, claiming that the chips, which bring the company's Zen 3 architecture to the server market for the first time, take the lead as the world's fastest server processors, led by the flagship 64-core, 128-thread EPYC 7763. Like the rest of the Milan lineup, this chip is fabbed on the 7nm process and is drop-in compatible with existing servers. AMD claims it brings up to twice the performance of Intel's competing Xeon Cascade Lake Refresh chips in HPC, cloud, and enterprise workloads, all while offering a vastly better price-to-performance ratio.

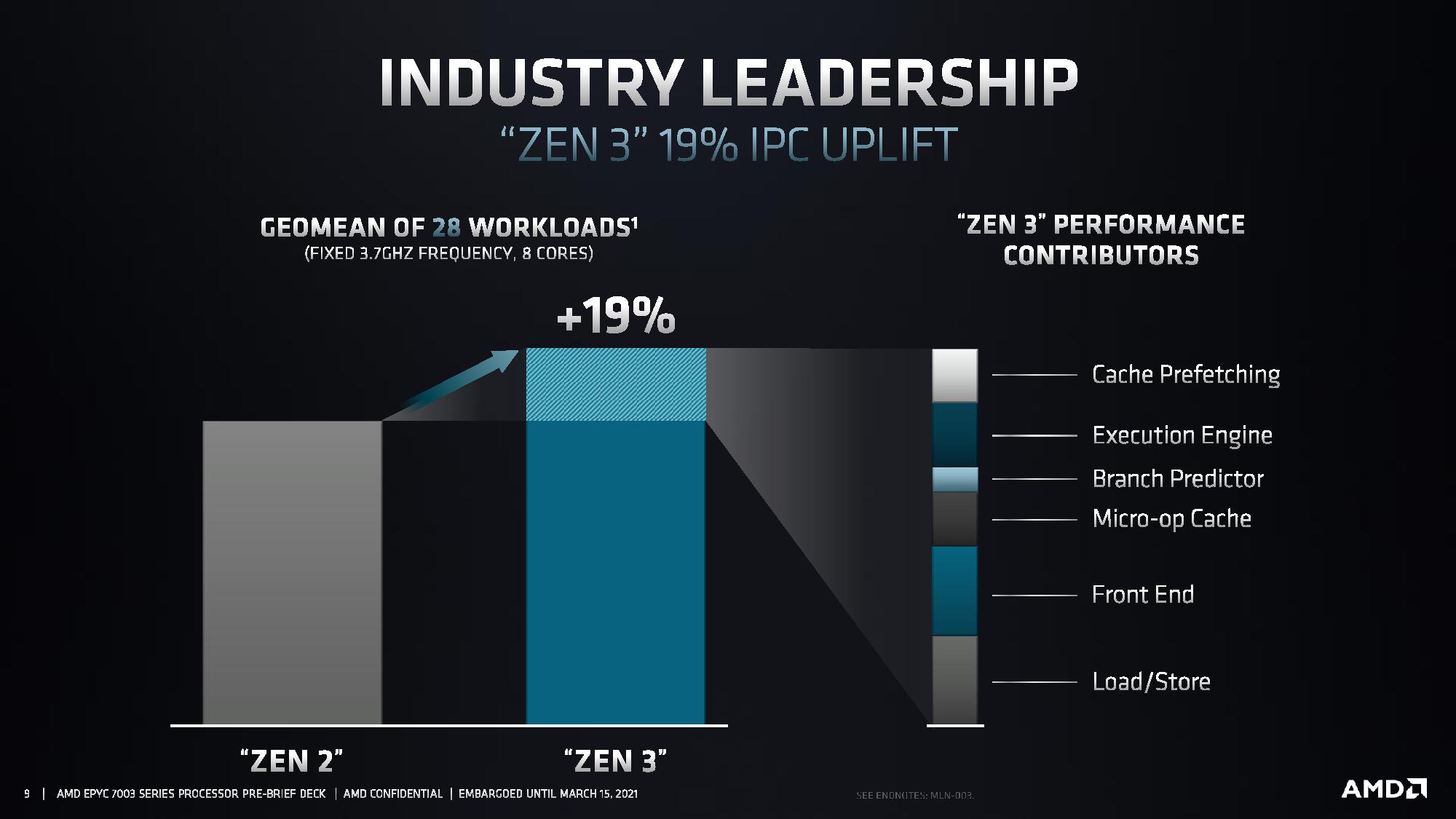

Milan's agility lies in the Zen 3 architecture and its chiplet-based design. This microarchitecture brings many of the same benefits that we've seen with AMD's Ryzen 5000 series chips that dominate the desktop PC market, like a 19% increase in IPC and a larger unified L3 cache. Those attributes, among others, help improve AMD's standing against Intel's venerable Xeon lineup in key areas, like single-threaded work, and offer a more refined performance profile across a broader spate of applications.

The other attractive features of the EPYC lineup are still present, too, like enhanced security, leading memory bandwidth, and the PCIe 4.0 interface. AMD also continues its general approach of offering all features with all of its chips, as opposed to Intel's strict de-featuring that it uses to segment its product stack. As before, AMD also offers single-socket P-series models, while its standard lineup is designed for dual-socket (2P) servers.

The Milan launch promises to reignite the heated data center competition. Today marks the EPYC Milan processors' official launch, but AMD actually began shipping the chips to cloud service providers and hyperscale customers last year. Overall, the EPYC Milan processors look to be exceedingly competitive against Intel's Xeon Cascade Lake Refresh chips.

Like AMD, Intel has also been shipping to its largest customers; the company recently told us that it has already shipped 115,000 Ice Lake chips since the end of last year. Intel also divulged a few details about its Ice Lake Xeons at Hot Chips last year; we know the company has a 32-core model in the works, and it's rumored that the series tops out at 40 cores. As such, Ice Lake will obviously change the competitive landscape when it comes to the market.

AMD has chewed away desktop PC and notebook market share at an amazingly fast pace, but the data center market is a much tougher market to crack. While this segment represents the golden land of high-volume and high-margin sales, the company's slow and steady gains lag its radical advance in the desktop PC and notebook markets.

Much of that boils down to the staunchly risk-averse customers in the enterprise and data center. These customers prize a mix of factors beyond the standard measuring sticks of performance and price-to-performance ratios, such as compatibility, security, supply predictability, reliability, serviceability, engineering support, and deeply-integrated OEM-validated platforms. To cater to the broader set of enterprise customers, AMD's Milan launch also carries a heavy focus on broadening AMD's hardware and software ecosystems, including full-fledged enterprise-class solutions that capitalize on the performance and TCO benefits of the Milan processors.

AMD's existing EPYC Rome processors already hold the lead in performance-per-socket and pricing, easily outstripping Intel's Xeon at several key price points. Given AMD's optimizations, Milan will obviously extend that lead, at least until the Ice Lake debut. Let's see how the hardware stacks up.

AMD EPYC 7003 Series Milan Specifications and Pricing

| Model | Cores / Threads | Base / Boost (GHz) | L3 Cache (MB) | TDP (W) | 1K Unit Price |
|---|---|---|---|---|---|
| EPYC Milan 7763 | 64 / 128 | 2.45 / 3.5 | 256 | 280 | $7,890 |
| EPYC Milan 7713 | 64 / 128 | 2.0 / 3.675 | 256 | 225 | $7,060 |
| EPYC Rome 7H12 | 64 / 128 | 2.6 / 3.3 | 256 | 280 | ? |
| EPYC Rome 7742 | 64 / 128 | 2.25 / 3.4 | 256 | 225 | $6,950 |
| EPYC Milan 7663 | 56 / 112 | 2.0 / 3.5 | 256 | 240 | $6,366 |
| EPYC Milan 7643 | 48 / 96 | 2.3 / 3.6 | 256 | 225 | $4,995 |
| EPYC Milan 75F3 | 32 / 64 | 2.95 / 4.0 | 256 | 280 | $4,860 |
| EPYC Milan 7453 | 28 / 56 | 2.75 / 3.45 | 64 | 225 | $1,570 |
| Xeon Gold 6258R | 28 / 56 | 2.7 / 4.0 | 38.5 | 205 | $3,651 |
| EPYC Milan 74F3 | 24 / 48 | 3.2 / 4.0 | 256 | 240 | $2,900 |
| EPYC Rome 7F72 | 24 / 48 | 3.2 / ~3.7 | 192 | 240 | $2,450 |
| Xeon Gold 6248R | 24 / 48 | 3.0 / 4.0 | 35.75 | 205 | $2,700 |
| EPYC Milan 7443 | 24 / 48 | 2.85 / 4.0 | 128 | 200 | $2,010 |
| EPYC Rome 7402 | 24 / 48 | 2.8 / 3.35 | 128 | 180 | $1,783 |
| EPYC Milan 73F3 | 16 / 32 | 3.5 / 4.0 | 256 | 240 | $3,521 |
| EPYC Rome 7F52 | 16 / 32 | 3.5 / ~3.9 | 256 | 240 | $3,100 |
| Xeon Gold 6246R | 16 / 32 | 3.4 / 4.1 | 35.75 | 205 | $3,286 |
| EPYC Milan 7343 | 16 / 32 | 3.2 / 3.9 | 128 | 190 | $1,565 |
| EPYC Rome 7302 | 16 / 32 | 3.0 / 3.3 | 128 | 155 | $978 |
| EPYC Milan 72F3 | 8 / 16 | 3.7 / 4.1 | 256 | 180 | $2,468 |
| EPYC Rome 7F32 | 8 / 16 | 3.7 / ~3.9 | 128 | 180 | $2,100 |
| Xeon Gold 6250 | 8 / 16 | 3.9 / 4.5 | 35.75 | 185 | $3,400 |

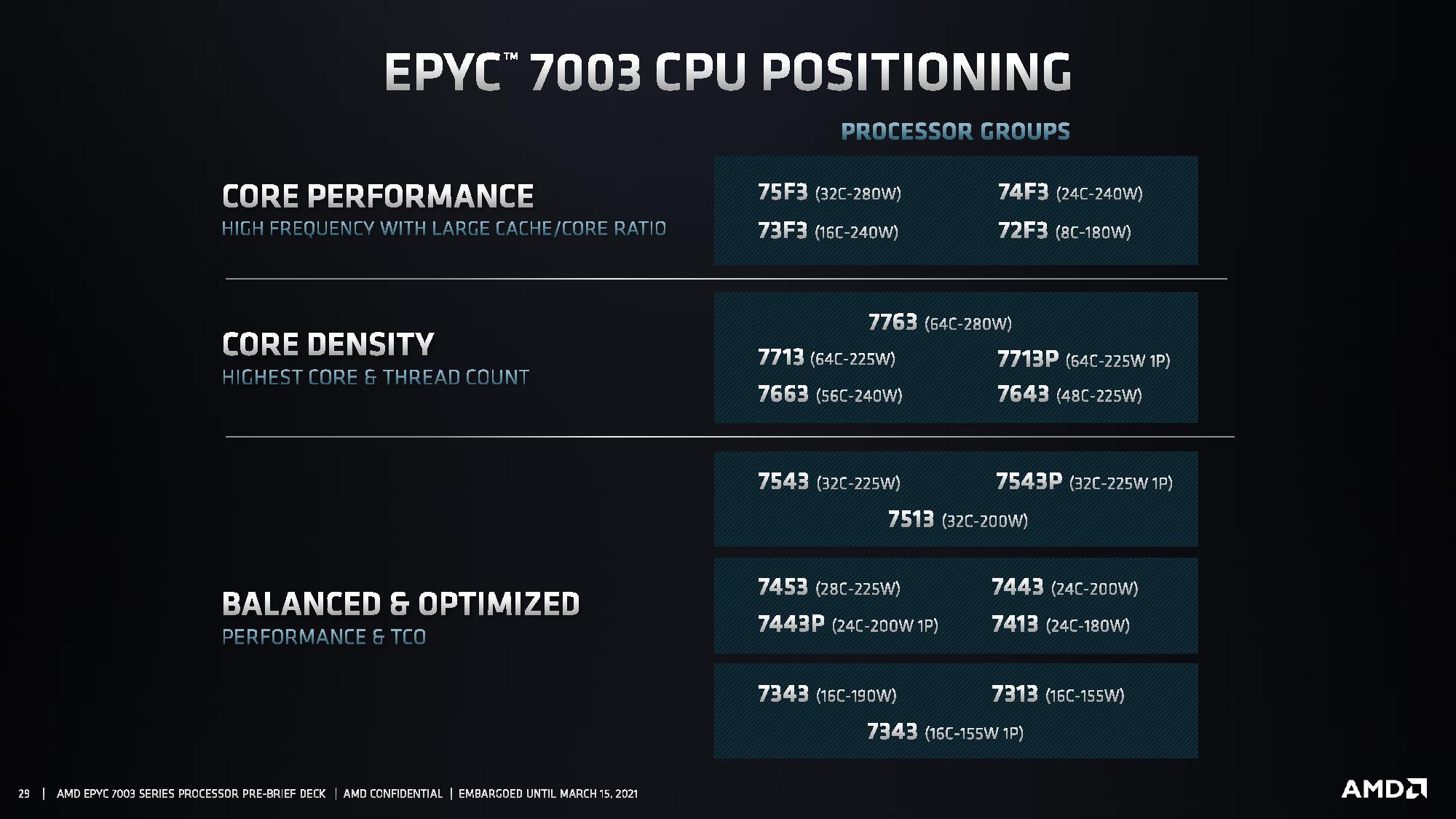

AMD released a total of 19 EPYC Milan SKUs today, but we've winnowed that down to key price bands in the table above. We have the full list of the new Milan SKUs later in the article.

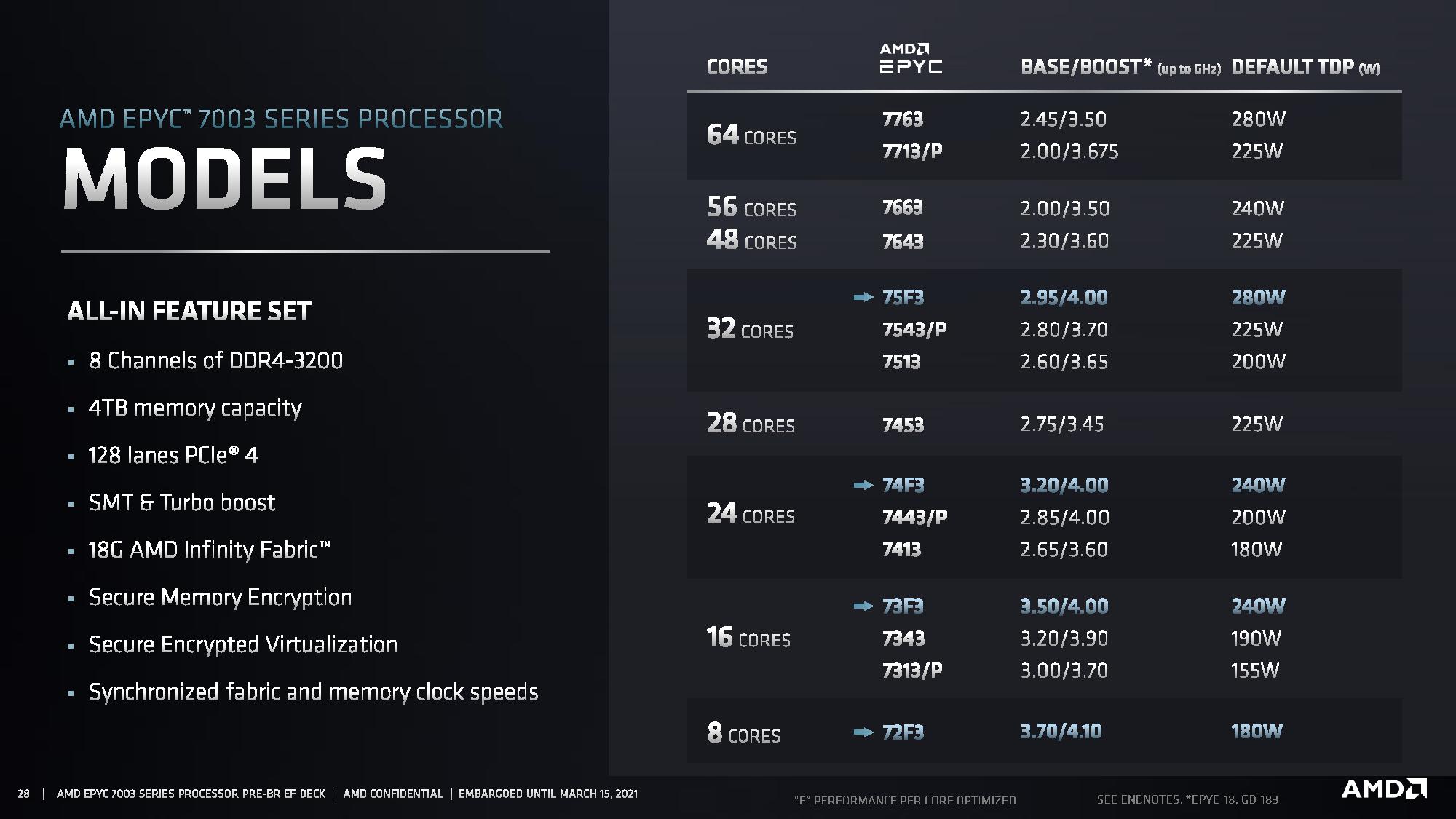

As with the EPYC Rome generation, Milan spans from eight to 64 cores, while Intel's Cascade Lake Refresh tops out at 28 cores. All Milan models support simultaneous multithreading (two threads per core), up to eight channels of DDR4-3200 memory, 4TB of memory capacity, and 128 lanes of PCIe 4.0 connectivity. AMD supports both standard single- and dual-socket platforms, with the P-series chips slotting in for single-socket servers (we have those models in the expanded list below). The chips are drop-in compatible with the existing Rome socket.

AMD added frequency-optimized 8-, 16-, and 24-core F-series models to the Rome lineup last year, helping the company boost its performance in frequency-bound workloads, like databases, that Intel has typically dominated. Those models return for Milan, now topping out at 32 cores, with a heavy focus on higher clock speeds, cache capacities, and TDPs compared to the standard models. AMD also added a highly-clocked 64-core 7H12 model for HPC workloads to the Rome lineup, but this time it simply worked that higher-end class of chip into its standard Milan stack.

As such, the 64-core 128-thread EPYC 7763 comes with a 2.45 / 3.5 GHz base/boost frequency paired with a 280W TDP. This flagship part also comes armed with 256MB of L3 cache and supports a configurable TDP that can be adjusted to accommodate any TDP from 225W to 280W.

The 7763 marks the peak TDP rating for the Milan series, but the company has a 225W 64-core 7713 model that supports a TDP range of 225W to 240W for more mainstream applications.

All Milan models come with a default TDP rating (listed above), but they can operate between a lower minimum (cTDP Min) and a higher maximum (cTDP Max) threshold, allowing quite a bit of configurability within the product stack. We have the full cTDP ranges for each model listed in the expanded spec list below.
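To make the cTDP windows concrete, here is a minimal Python sketch that checks whether a target power setting falls inside a given model's range. The wattages come from the expanded spec list in this article; the helper names (`is_valid_ctdp`, `headroom`) are our own illustrative inventions, not part of any AMD tool, and how cTDP is actually configured depends on the platform.

```python
# cTDP windows (watts) copied from the expanded spec list in this article.
# The dictionary layout and function names are hypothetical, for illustration only.
CTDP_RANGES = {
    "7763": {"default": 280, "min": 225, "max": 280},
    "7713": {"default": 225, "min": 225, "max": 240},
    "7413": {"default": 180, "min": 165, "max": 200},
    "7313": {"default": 155, "min": 155, "max": 180},
}


def is_valid_ctdp(model: str, watts: int) -> bool:
    """Return True if `watts` falls inside the model's cTDP Min..Max window."""
    limits = CTDP_RANGES[model]
    return limits["min"] <= watts <= limits["max"]


def headroom(model: str) -> int:
    """Watts available above the default TDP before reaching cTDP Max."""
    limits = CTDP_RANGES[model]
    return limits["max"] - limits["default"]


print(is_valid_ctdp("7763", 240))  # inside the 7763's 225W-280W window
print(is_valid_ctdp("7713", 280))  # above the 7713's 240W ceiling
print(headroom("7413"))            # 200W max minus 180W default
```

Note that the flagship 7763 already ships at its ceiling, so its configurability only runs downward, while parts like the 7413 can be pushed above their default rating.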

Milan's adjustable TDPs now allow customers to tailor for different thermal ranges, and Forrest Norrod, AMD's SVP and GM of the data center and embedded solutions group, says that the shift in strategy comes from the lessons learned from the first F- and H-series processors. These 280W processors were designed for systems with robust liquid cooling, which tends to add quite a bit of cost to the platform, but OEMs were surprisingly adept at engineering air-cooled servers that could fully handle the heat output of those faster models. As such, AMD decided to add a 280W 64-core model to the standard lineup and expanded the ability to manipulate TDP ranges across its entire stack.

AMD also added new 28- and 56-core options with the EPYC 7453 and 7663, respectively. Norrod explained that AMD had noticed that many of its customers had optimized their applications for Intel's top-of-the-stack servers that come with multiples of 28 cores. Hence, AMD added new models that would mesh well with those optimizations to make it easier for customers to port over applications optimized for Xeon platforms. Naturally, AMD's 28-core's $1,570 price tag looks plenty attractive next to Intel's $3,651 asking price for its own 28-core part.
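For a rough sense of that gap, the sketch below divides each chip's 1K-unit list price by its core count. It uses only the prices and core counts quoted in this article and deliberately ignores clock speeds, cache, platform costs, and real-world discounts, so treat it as back-of-the-envelope arithmetic rather than a value verdict.

```python
# 1K-unit prices and core counts as listed in this article's spec table.
chips = {
    "EPYC 7453": {"cores": 28, "price_usd": 1570},
    "Xeon Gold 6258R": {"cores": 28, "price_usd": 3651},
}


def price_per_core(chip: dict) -> float:
    """Return list price divided by physical core count."""
    return chip["price_usd"] / chip["cores"]


for name, chip in chips.items():
    print(f"{name}: ${price_per_core(chip):.2f} per core")
```

By this measure the EPYC 7453 works out to roughly $56 per core against roughly $130 per core for the Xeon Gold 6258R.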

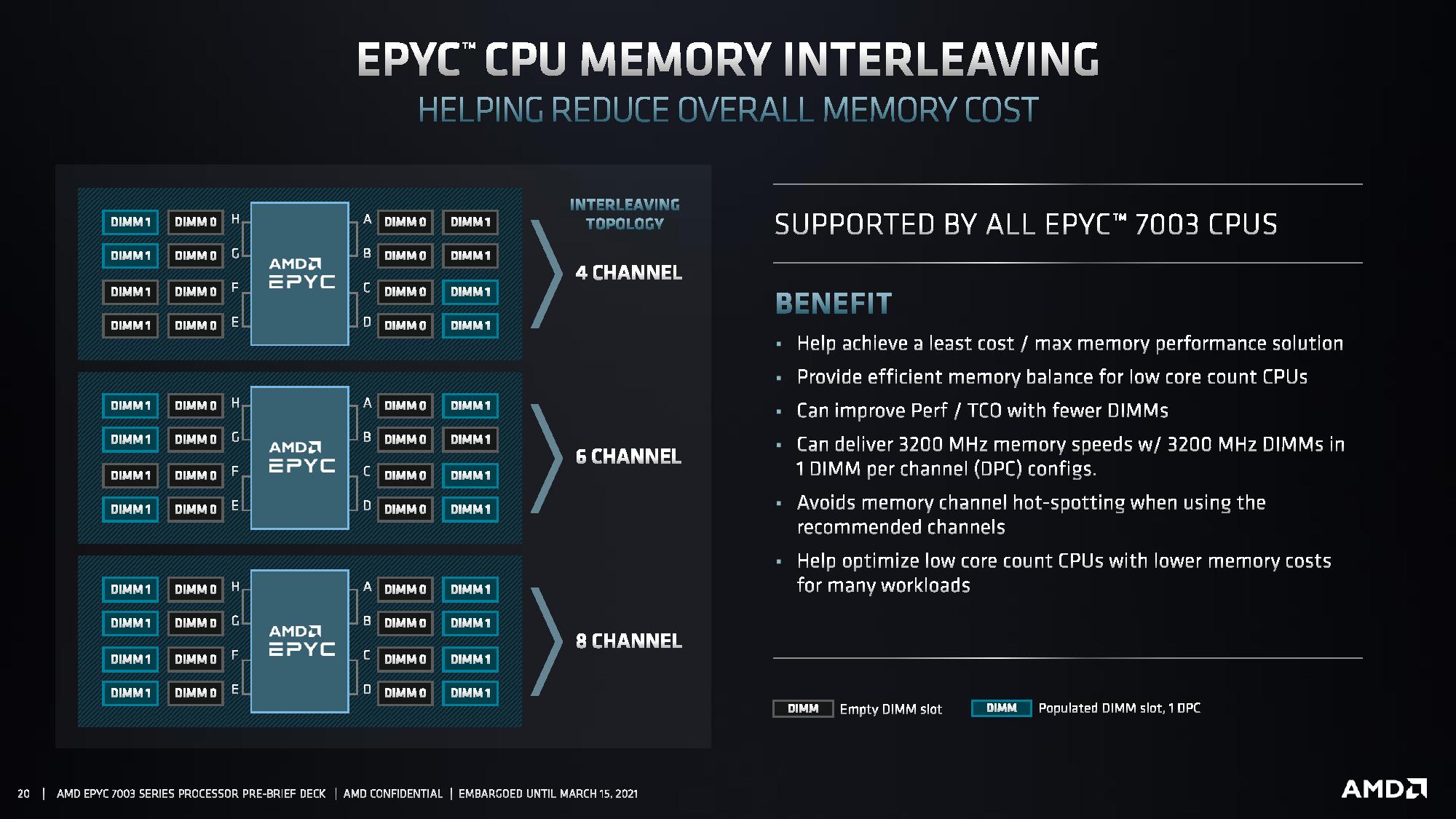

AMD made a few other adjustments to the product stack based on customer buying trends, like reducing three eight-core models to one F-series variant, and removing a 12-core option entirely. AMD also added support for six-way memory interleaving on all models to lower costs for workloads that aren't sensitive to memory throughput.

Overall, Milan offers similar TDP ranges, memory, and PCIe support at any given core count as its predecessors but comes with higher clock speeds, performance, and pricing.

Milan also comes with the performance uplift granted by the Zen 3 microarchitecture. Higher IPC and frequencies, not to mention more refined boost algorithms that extract the utmost performance within the thermal confines of the socket, help improve Milan's performance in the lightly-threaded workloads where Xeon has long held an advantage. The higher per-core performance translates to faster performance in threaded workloads, too.

Meanwhile, the larger unified L3 cache results in a simplified topology that ensures broader compatibility with standard applications, thus removing the lion's share of the rare eccentricities that we've seen with prior-gen EPYC models.

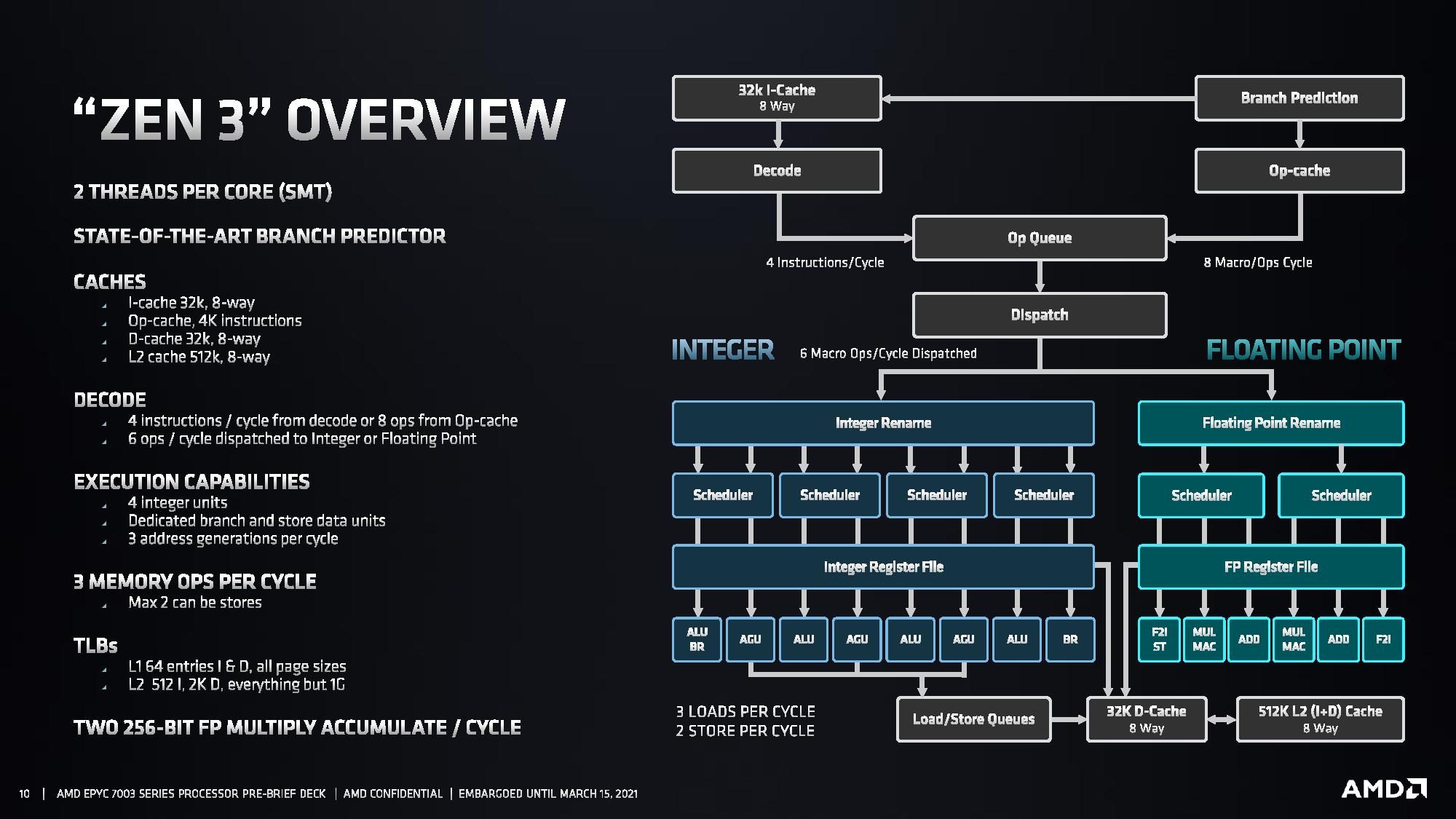

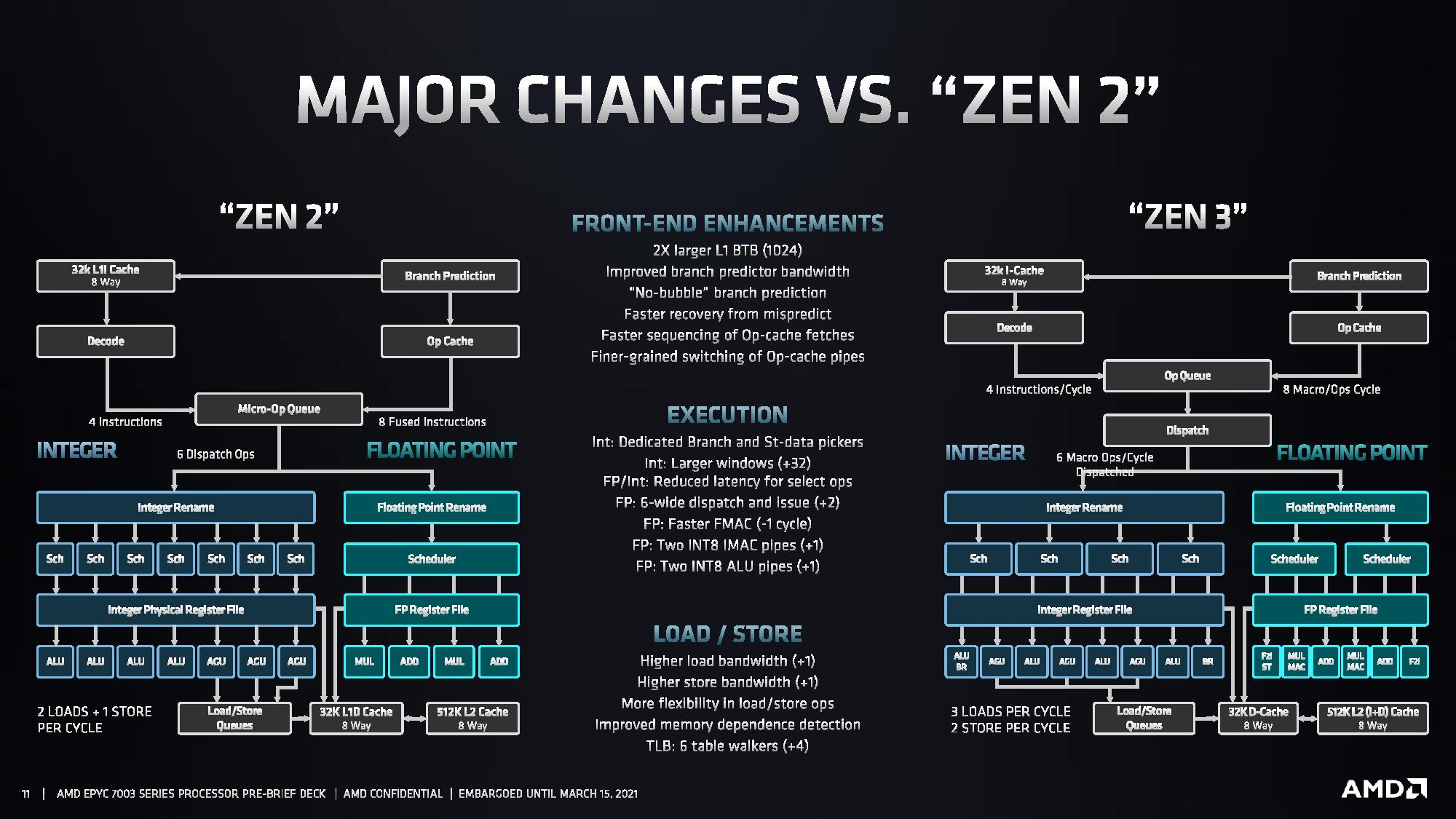

The Zen 3 microarchitecture brings the same fundamental advantages that we've seen with the desktop PC and notebook models, like reduced memory latency, doubled INT8 and floating point performance, and higher integer throughput.

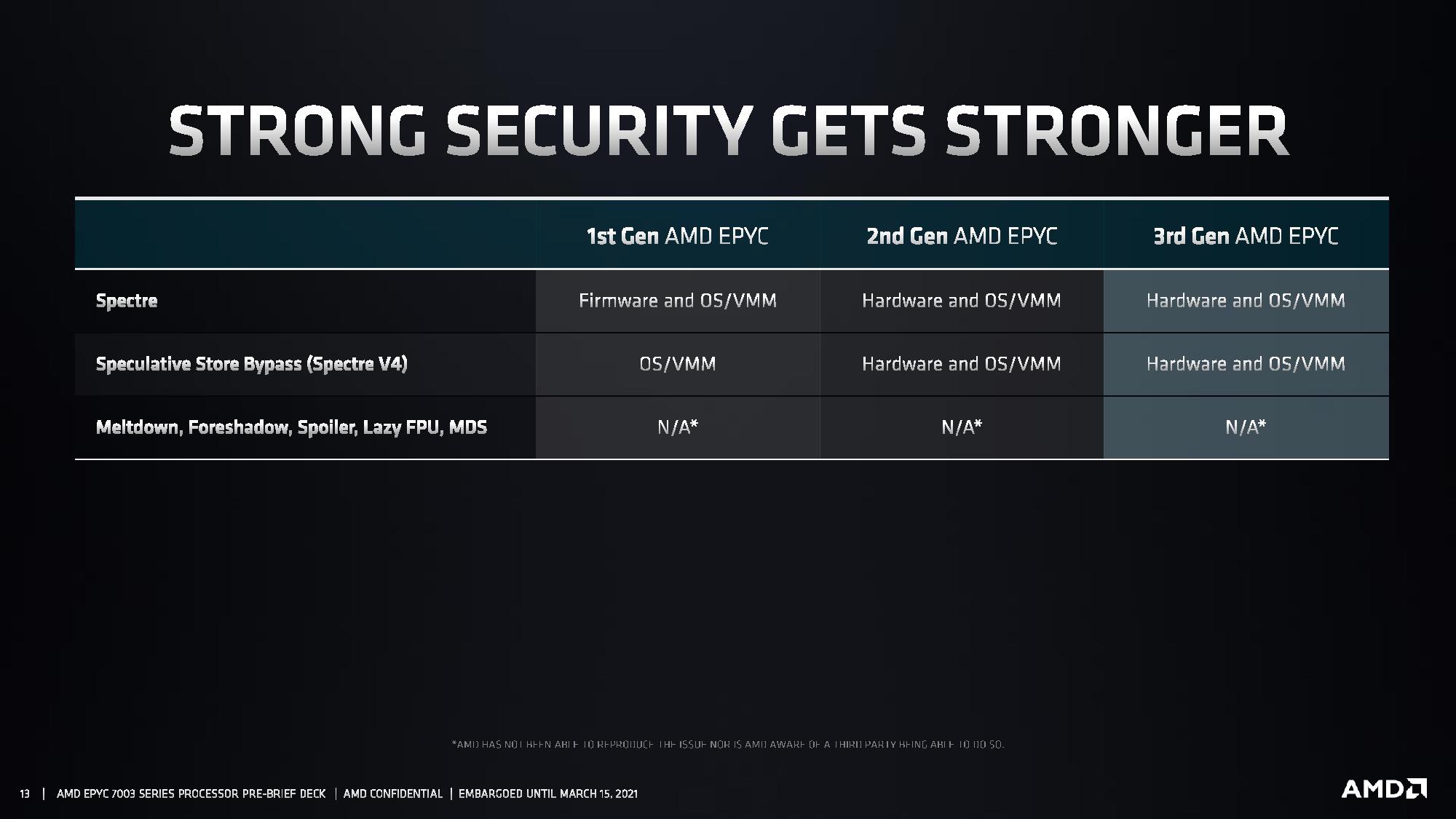

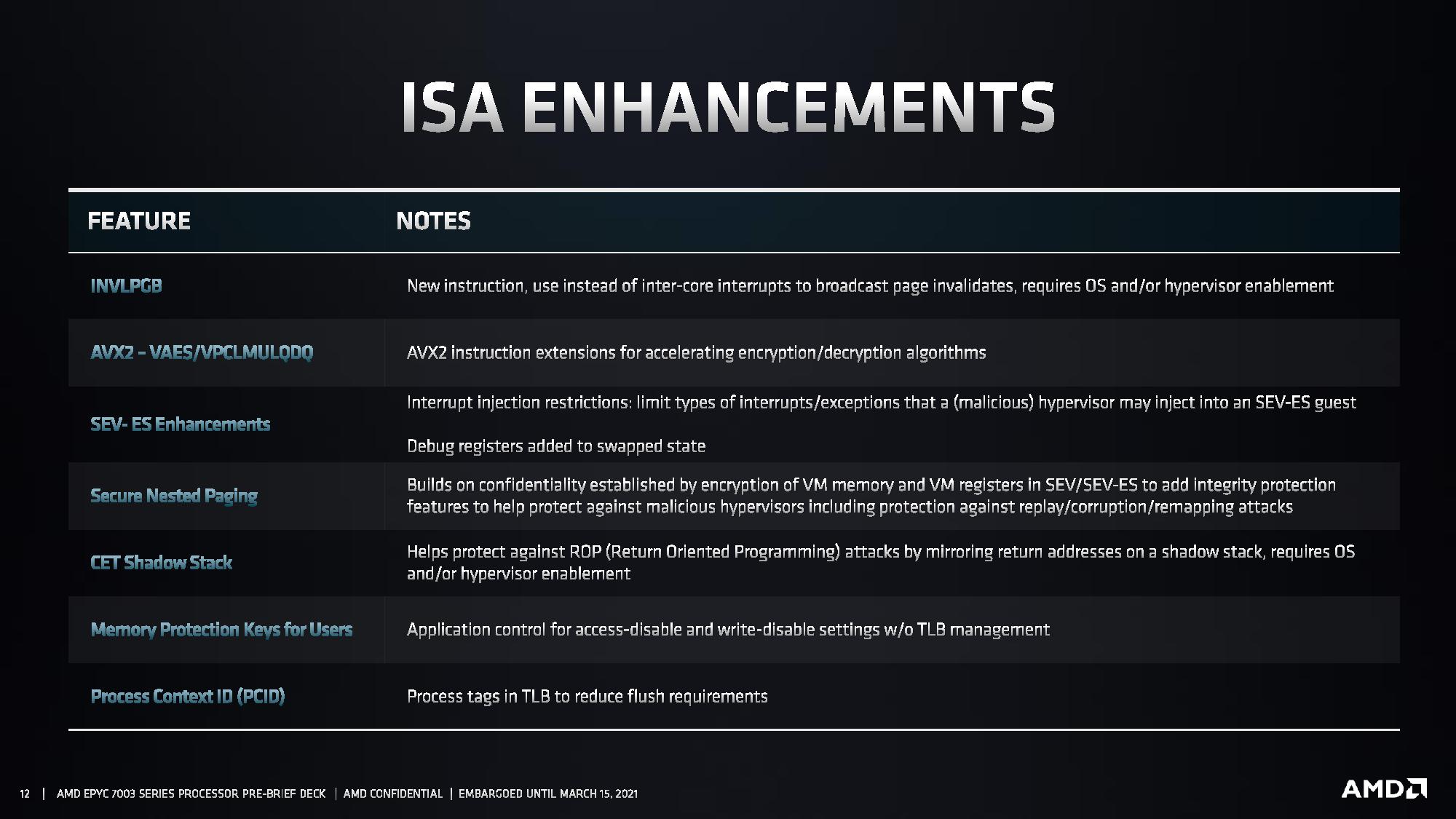

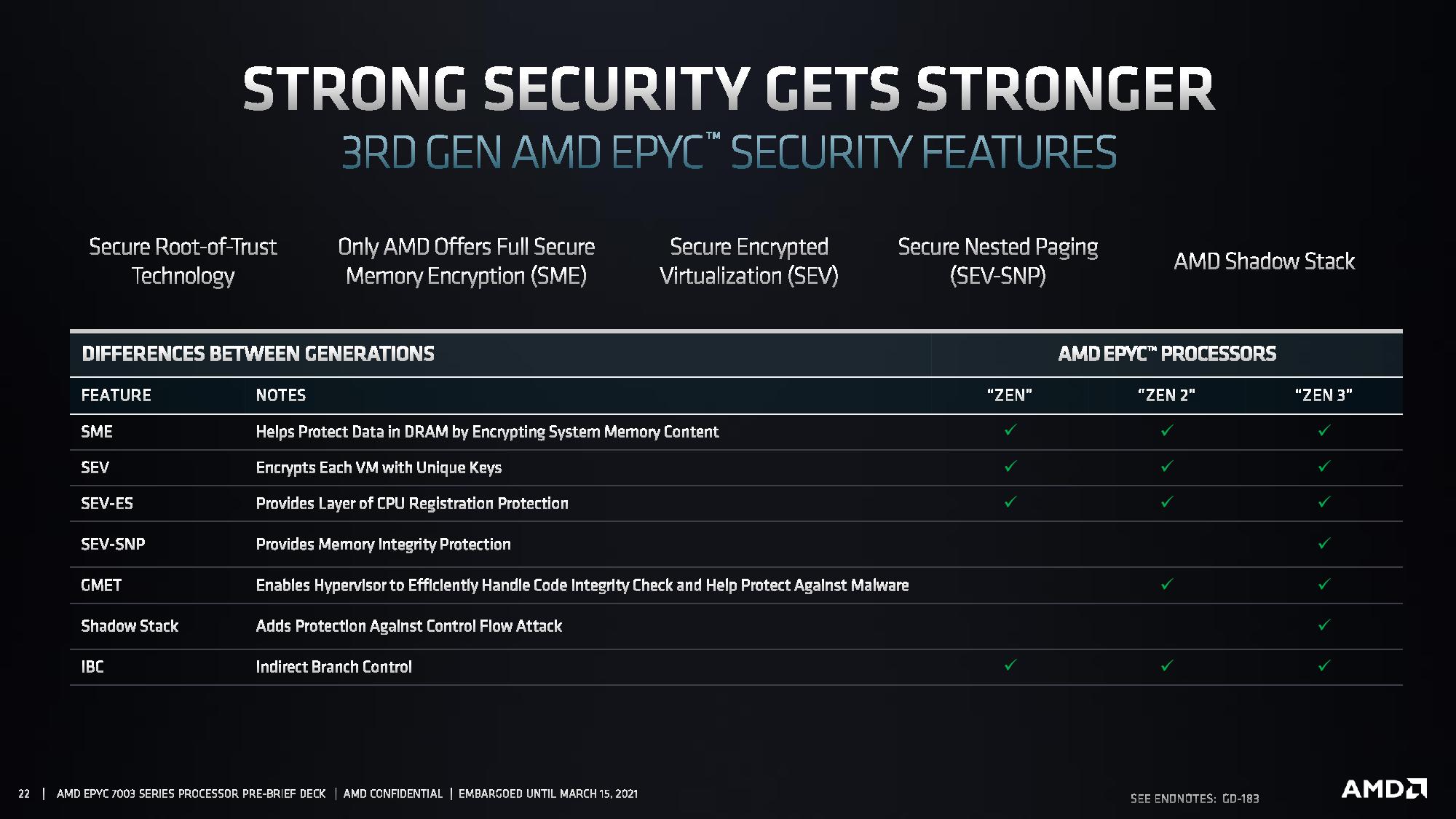

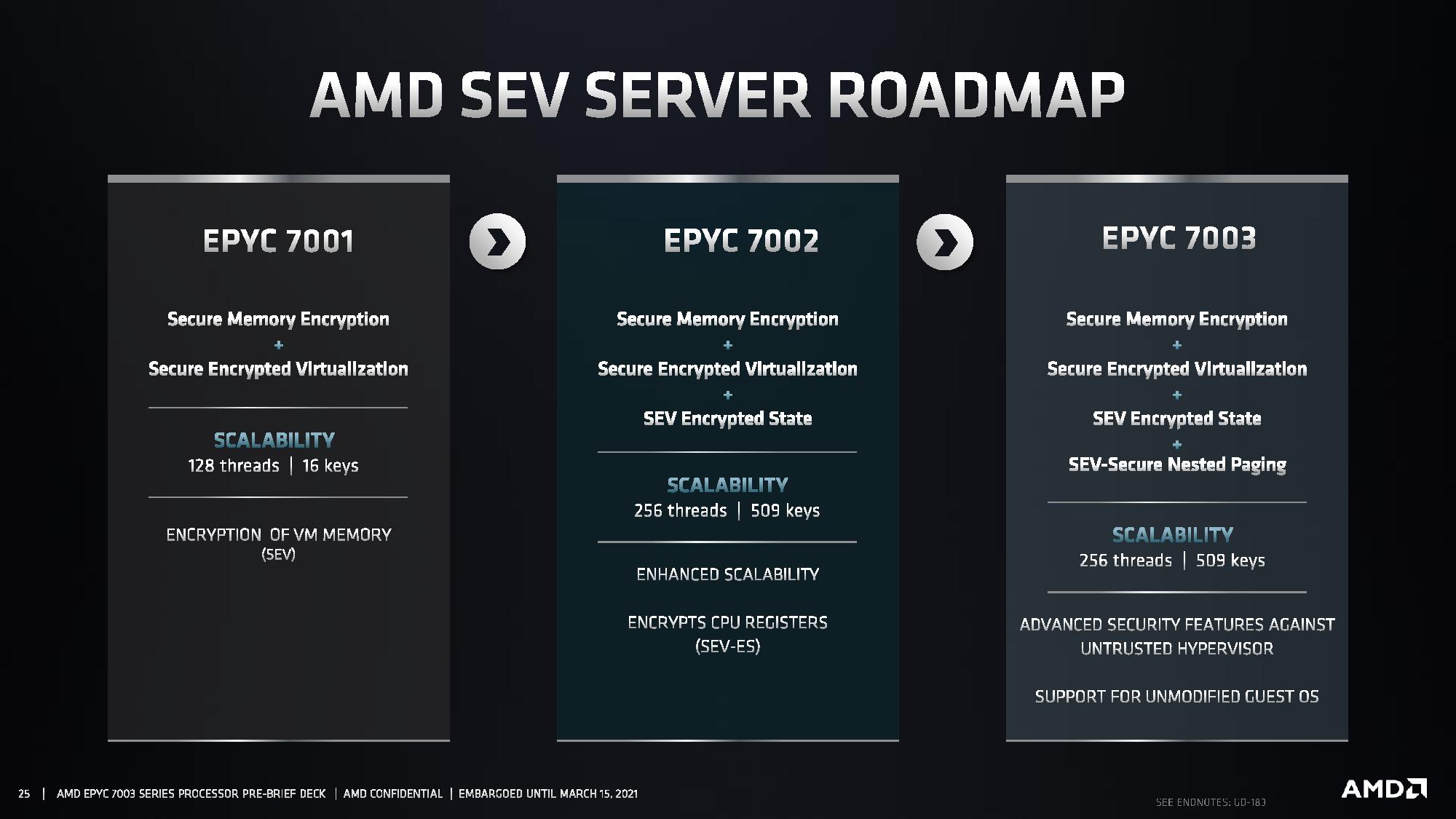

AMD also added support for memory protection keys, AVX2 support for VAES/VPCLMULQDQ instructions, bolstered security for hypervisors and VM memory/registers, added protection against return-oriented programming attacks, and made a just-in-time update to the Zen 3 microarchitecture to provide in-silicon mitigation for the Spectre vulnerability (among other enhancements listed in the slides above). As before, Milan remains unimpacted by other major security vulnerabilities, like Meltdown, Foreshadow, and Spoiler.

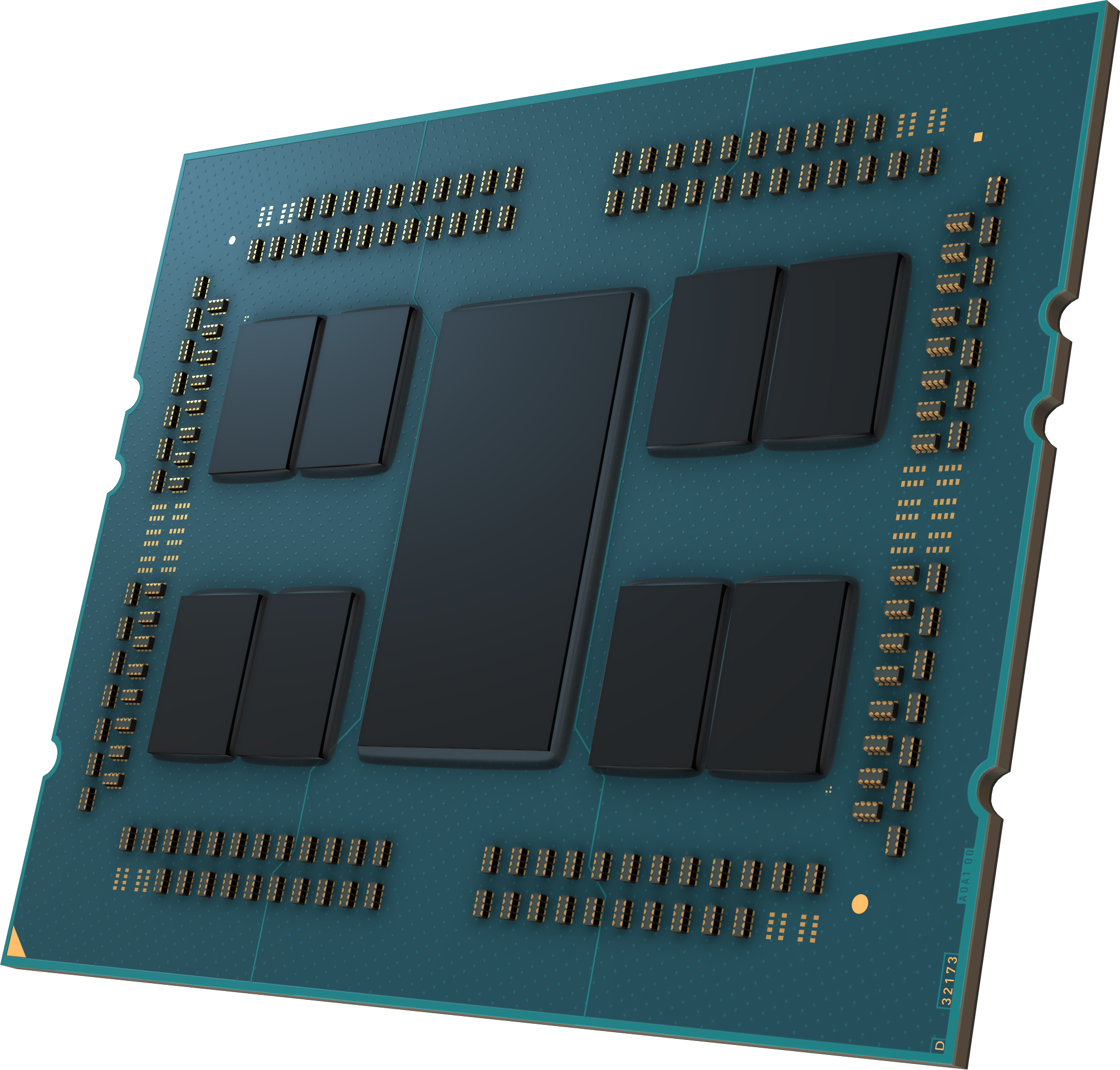

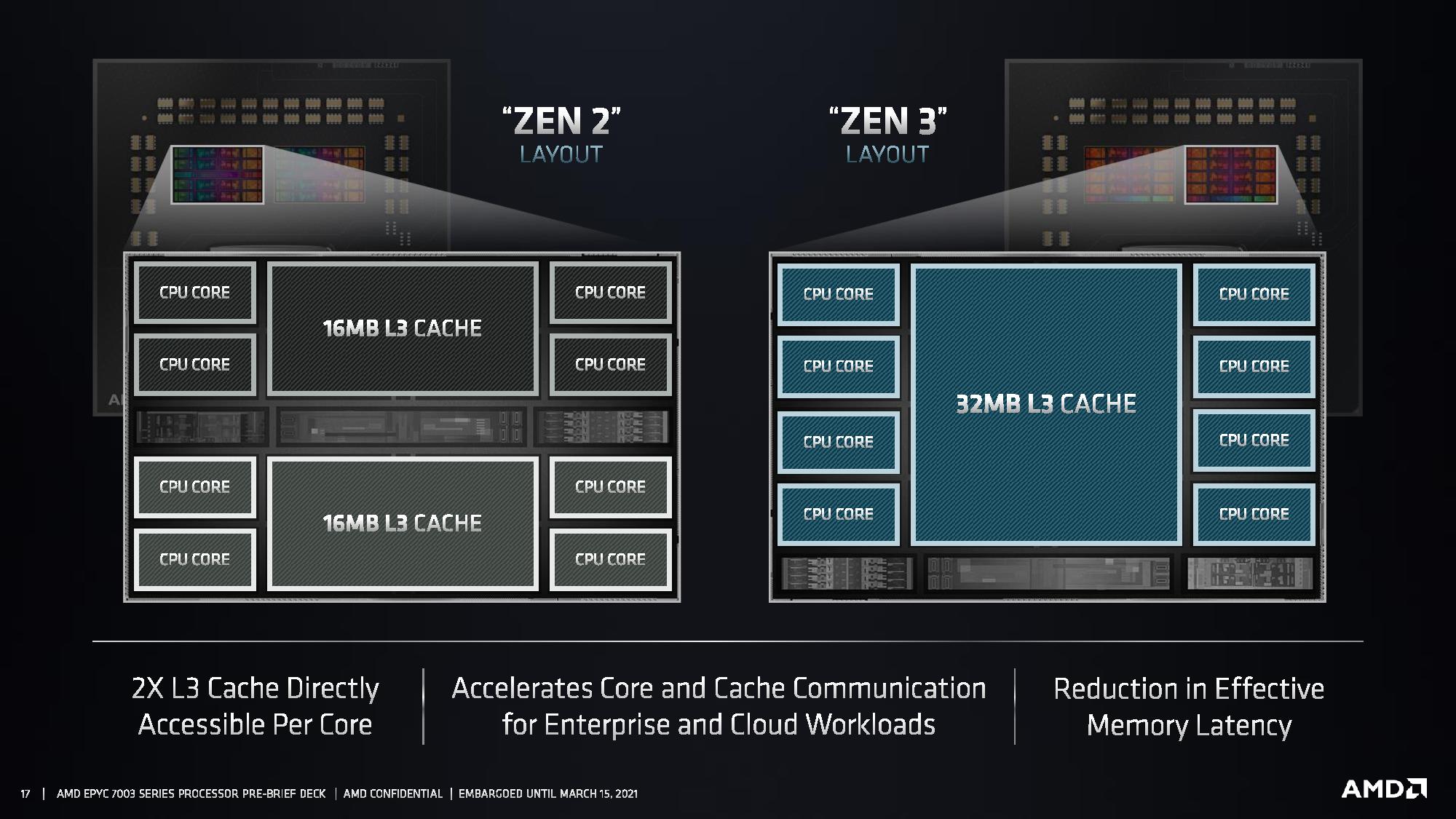

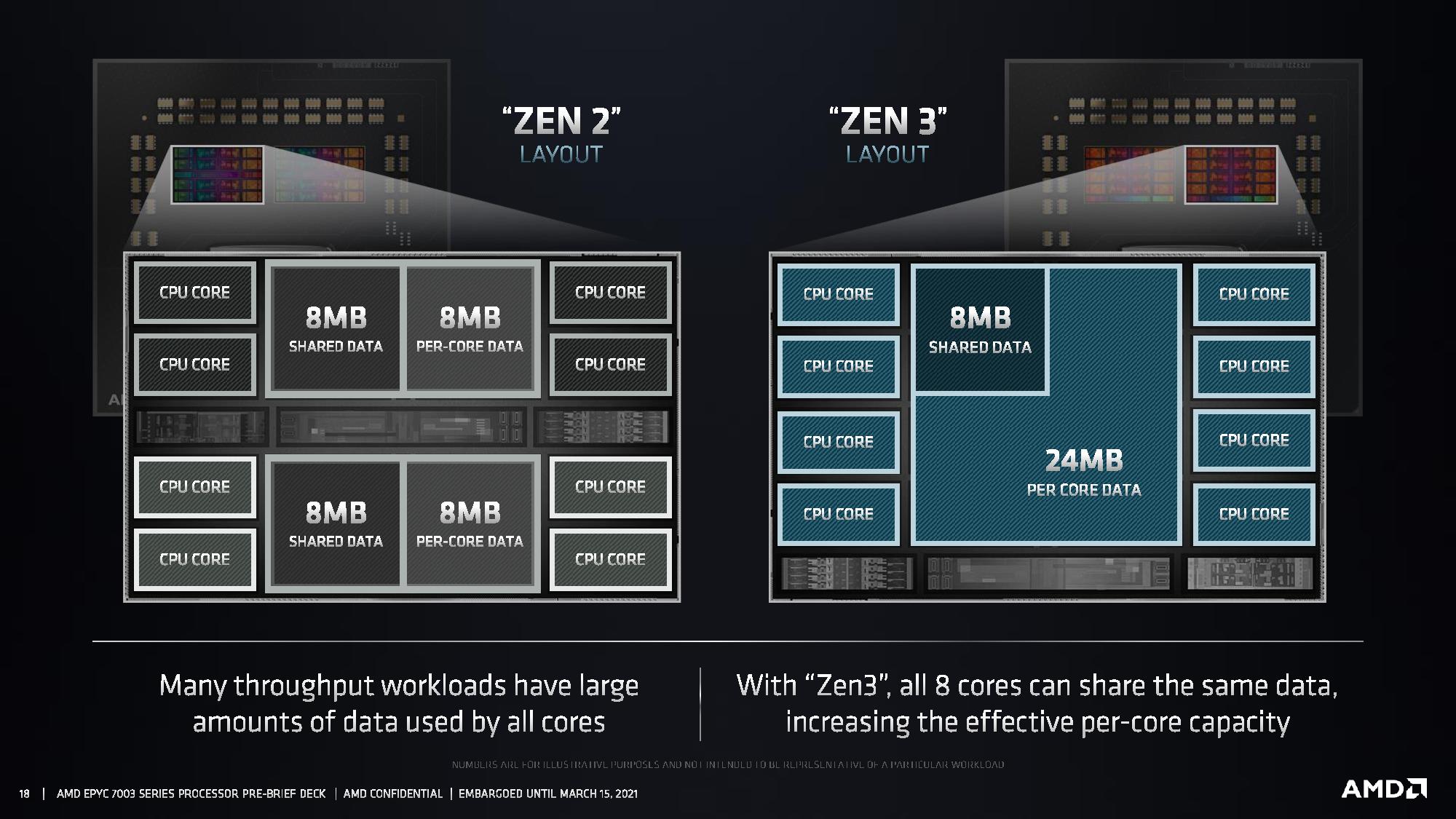

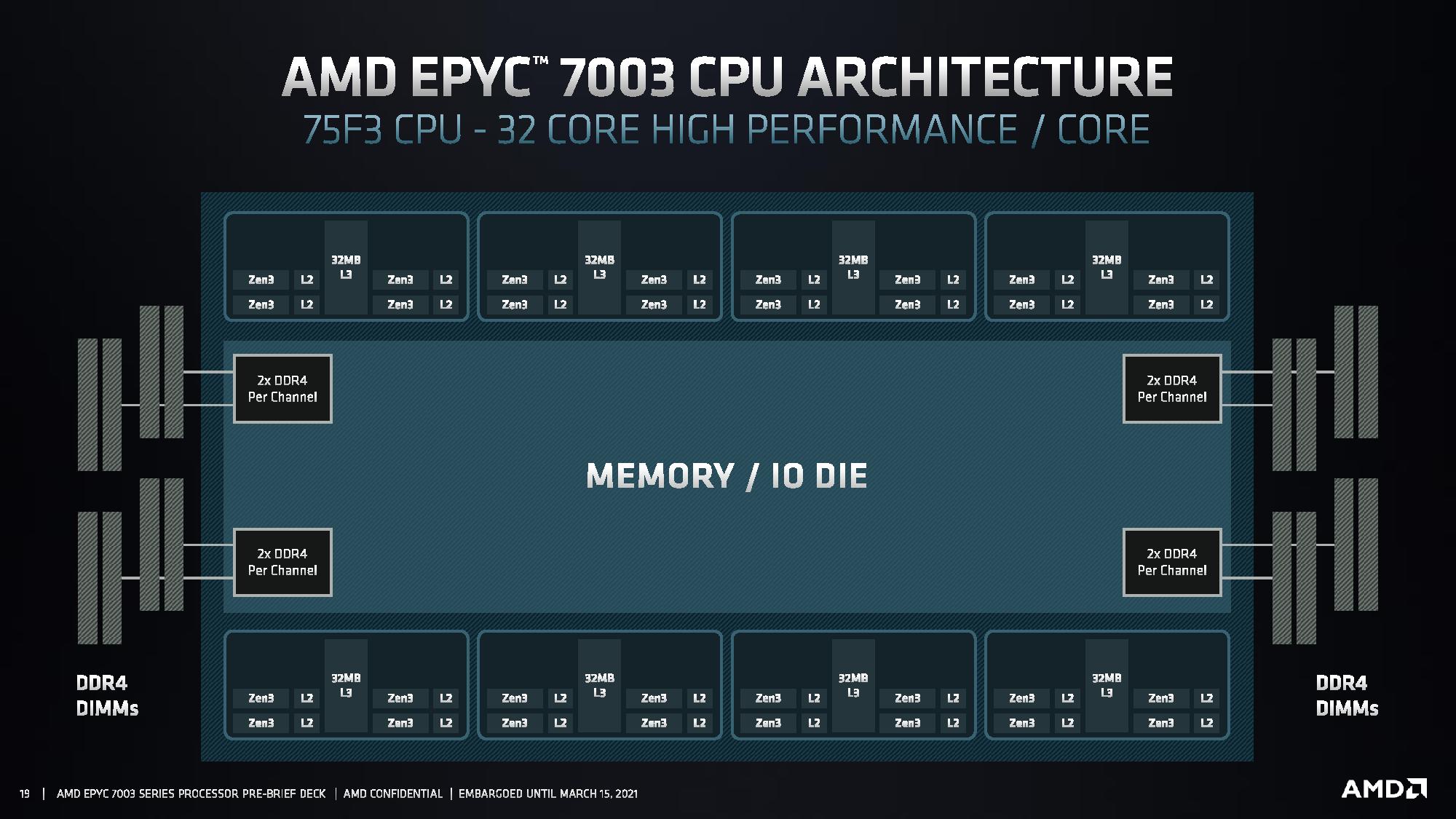

The EPYC Milan SoC adheres to the same (up to) nine-chiplet design as the Rome models and is drop-in compatible with existing second-gen EPYC servers. Just like the consumer-oriented chips, Core Complex Dies (CCDs) based on the Zen 3 architecture feature eight cores tied to a single contiguous 32MB slice of L3 cache, which stands in contrast to Zen 2's two four-core CCXes per CCD, each with its own 16MB slice. The new arrangement gives all eight cores direct access to the full 32MB of L3 cache, reducing latency.

This design also increases the amount of cache available to a single core, thus boosting performance in multi-threaded applications and enabling lower-core count Milan models to have access to significantly more L3 cache than Rome models. The improved core-to-cache ratio boosts performance in HPC and relational database workloads, among others.
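To put numbers on the core-to-cache ratio, the sketch below divides total L3 capacity by core count for a few like-for-like Rome and Milan parts. The figures come straight from the spec table earlier in this article; the function name is our own. Keep in mind this is a simple average across the package: a given core can only reach the L3 on its own chiplet, so the result is a comparison aid, not a statement of what one core can address.

```python
# (cores, total L3 in MB) taken from the spec table earlier in this article.
models = {
    "EPYC Rome 7F32": (8, 128),
    "EPYC Milan 72F3": (8, 256),
    "EPYC Rome 7F72": (24, 192),
    "EPYC Milan 74F3": (24, 256),
    "EPYC Milan 7763": (64, 256),
}


def l3_per_core_mb(cores: int, l3_mb: int) -> float:
    """Average L3 capacity per core in MB (total L3 divided by core count)."""
    return l3_mb / cores


for name, (cores, l3_mb) in models.items():
    print(f"{name}: {l3_per_core_mb(cores, l3_mb):.2f} MB of L3 per core")
```

The eight-core comparison shows the shift most clearly: the Rome 7F32 averages 16MB per core, while the Milan 72F3 doubles that to 32MB, whereas the 64-core 7763 averages 4MB per core.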

Second-gen EPYC models supported either 8- or 4-channel memory configurations, but Milan adds support for 6-channel interleaving, allowing customers that aren't memory bound to use less system RAM to reduce costs. The 6-channel configuration supports the same DDR4-3200 specification for single DIMM per channel (1DPC) implementations. This feature is enabled across the full breadth of the Milan stack, but AMD sees it as most beneficial for models with lower core counts.
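The trade-off between channel count and throughput is easy to estimate. The sketch below computes theoretical peak memory bandwidth per socket for 4-, 6-, and 8-channel DDR4-3200 configurations, assuming the standard 64-bit (8-byte) data path per DDR4 channel with ECC bits excluded. These are ceiling figures under that assumption, not measured results; sustained bandwidth will be lower.

```python
DDR4_3200_MT_PER_S = 3200  # mega-transfers per second for DDR4-3200
BYTES_PER_TRANSFER = 8     # assumption: 64-bit data path per channel, ECC excluded


def peak_bandwidth_gbs(channels: int) -> float:
    """Theoretical peak bandwidth in GB/s (decimal) for one socket."""
    mb_per_s = channels * DDR4_3200_MT_PER_S * BYTES_PER_TRANSFER
    return mb_per_s / 1000


for channels in (4, 6, 8):
    print(f"{channels} channels: {peak_bandwidth_gbs(channels):.1f} GB/s")
```

Under these assumptions, a six-channel configuration gives up a quarter of the eight-channel peak (153.6 GB/s versus 204.8 GB/s) in exchange for populating two fewer DIMMs per socket.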

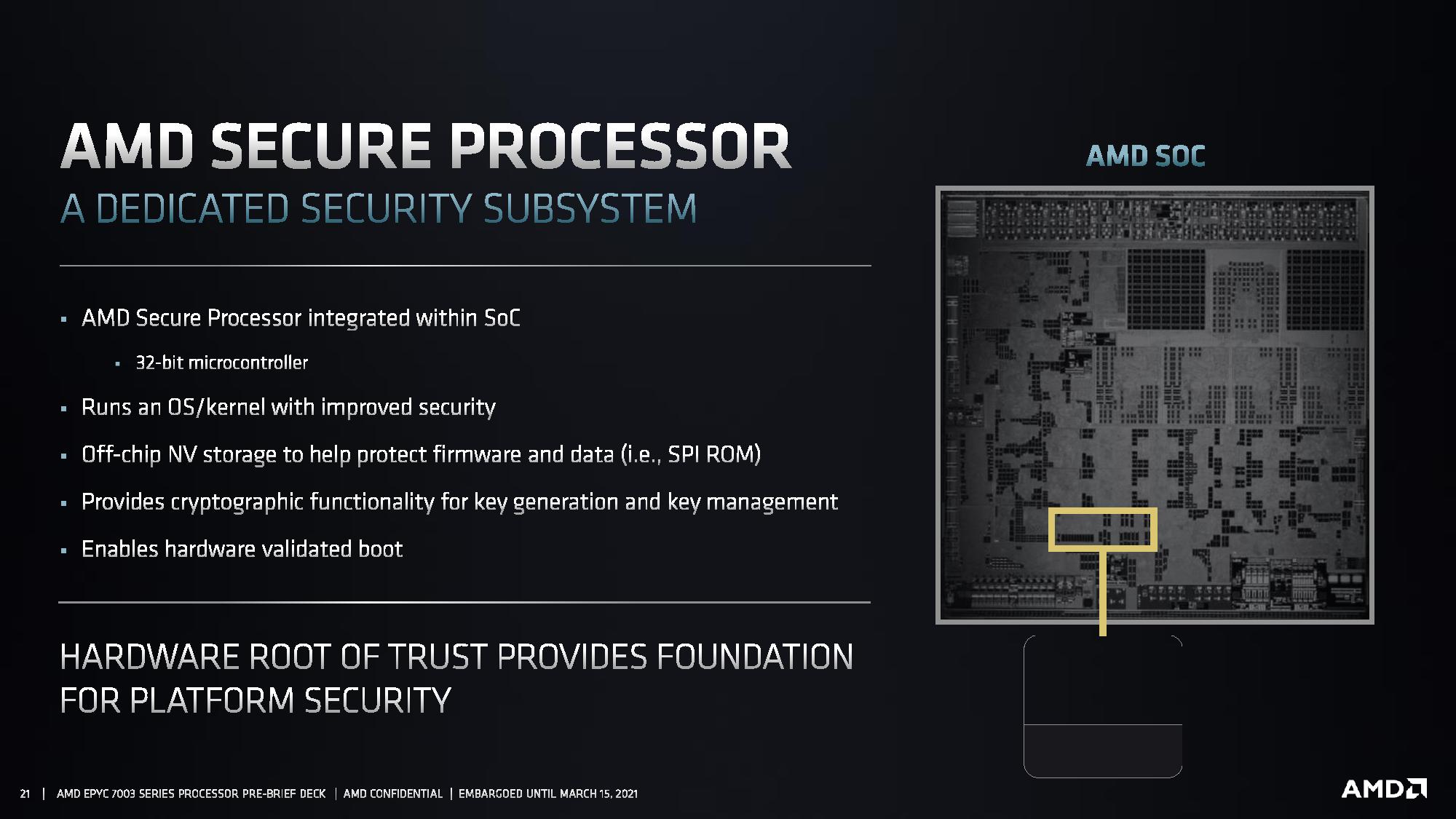

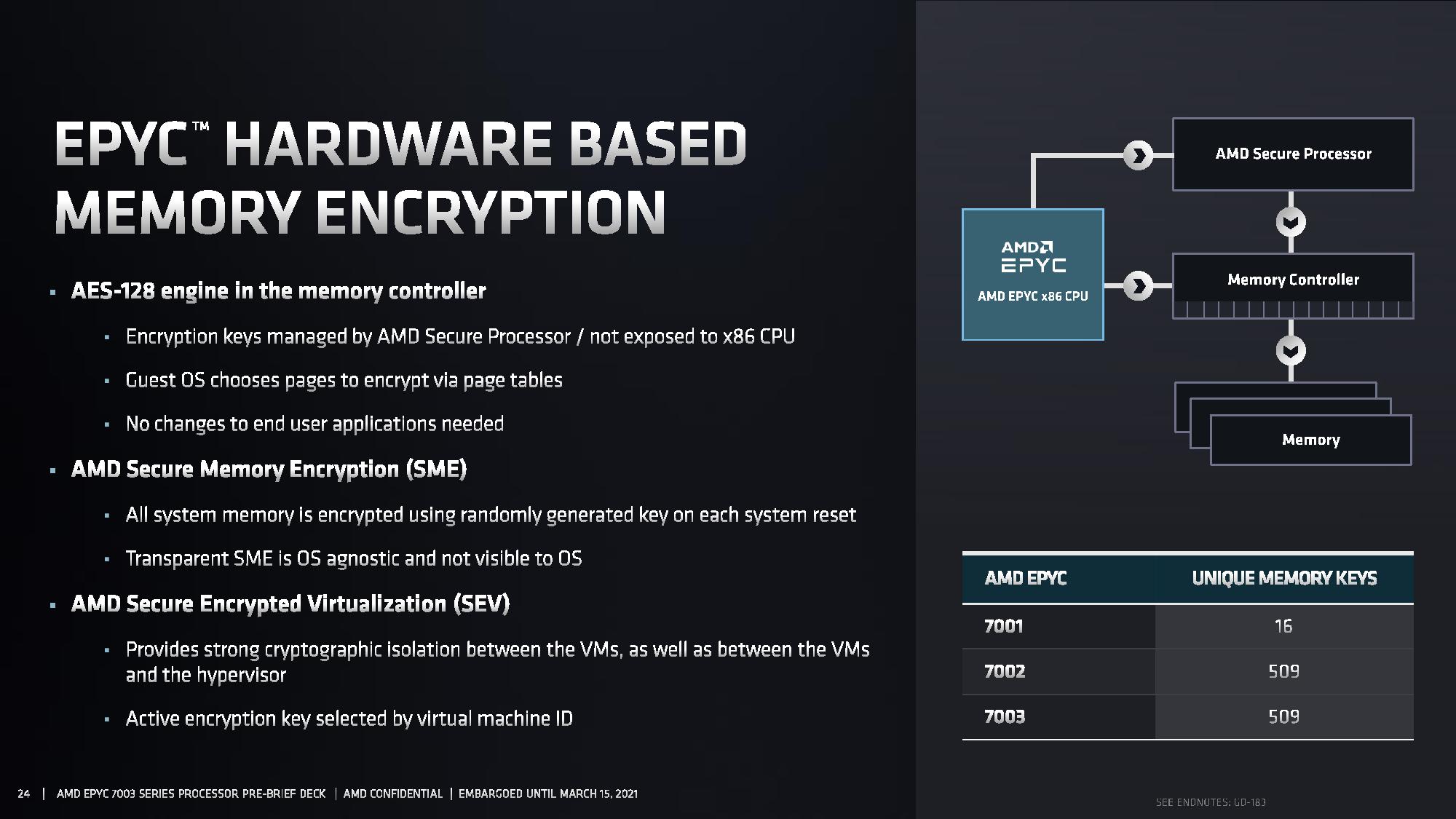

Milan also features the same 32-bit AMD Secure Processor in the I/O Die (IOD) that manages cryptographic functionality, like key generation and management for AMD's hardware-based Secure Memory Encryption (SME) and Secure Encrypted Virtualization (SEV) features. These are key advantages over Intel's Cascade Lake processors, but Ice Lake will bring its own memory encryption features to bear. AMD's Secure Processor also manages its hardware-validated boot feature.

AMD EPYC Milan Performance

AMD provided its own performance projections based on its internal testing. However, as with all vendor-provided benchmarks, we should view these with the appropriate level of caution. We've included the testing footnotes at the end of the article.

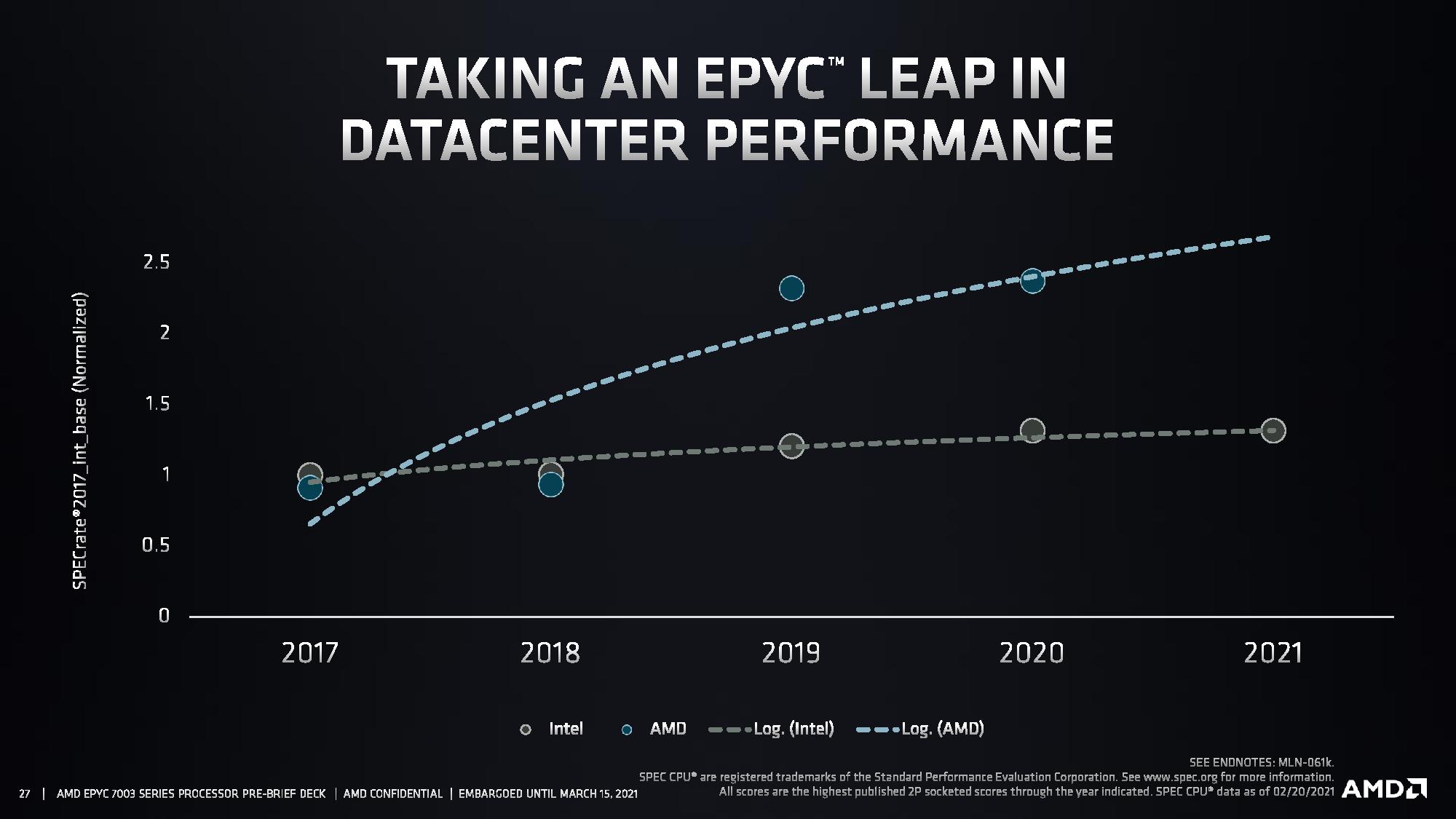

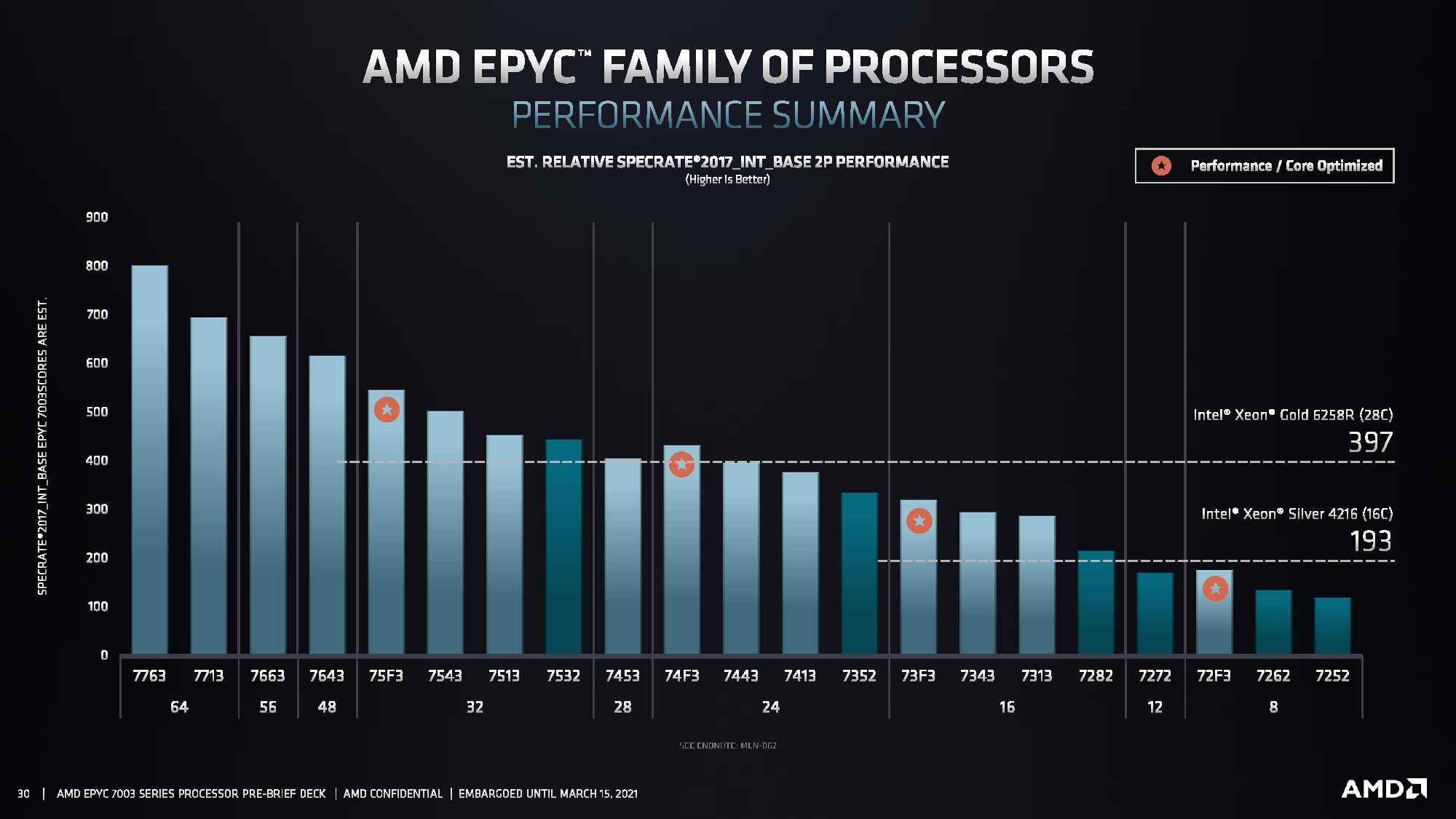

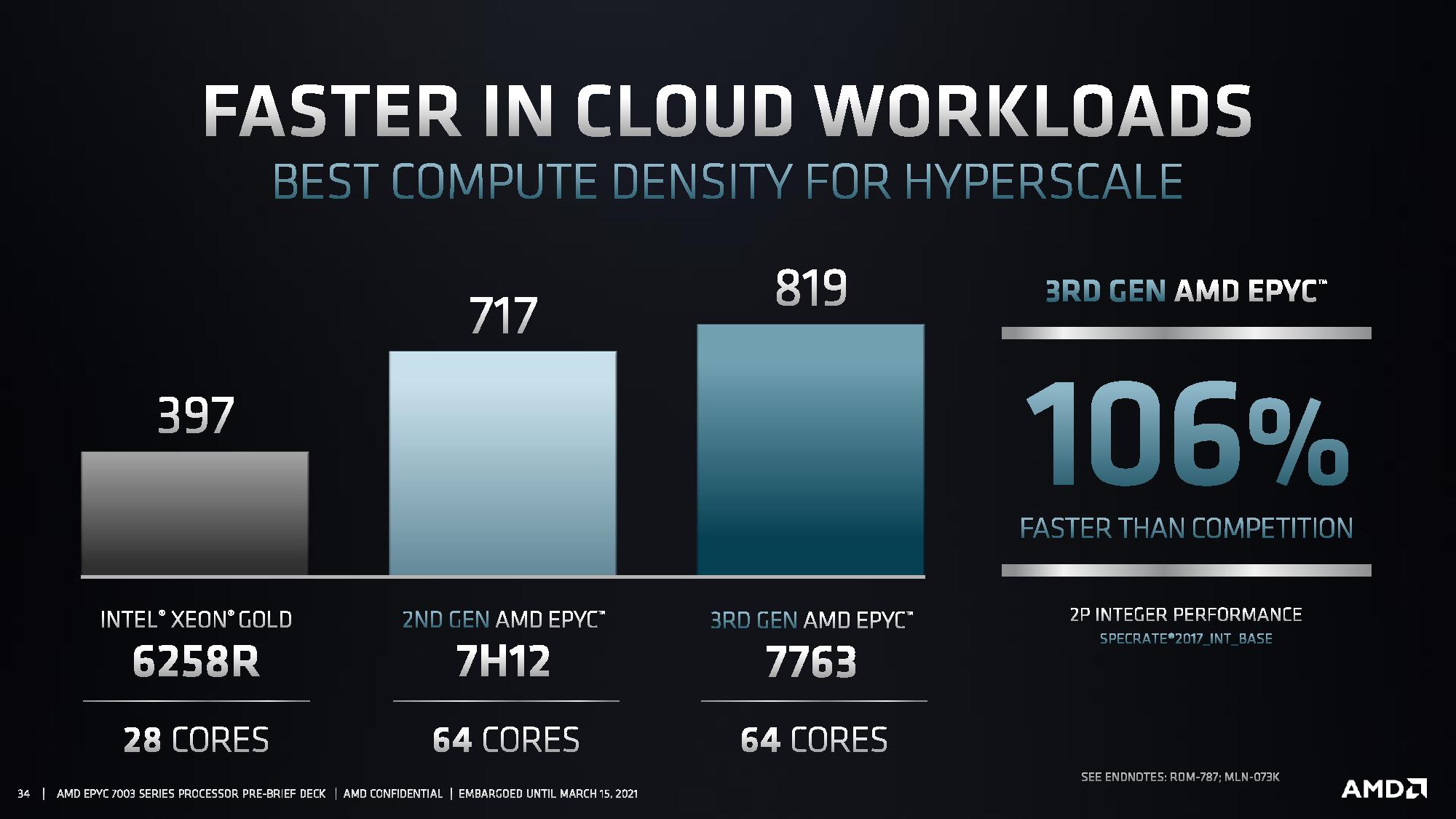

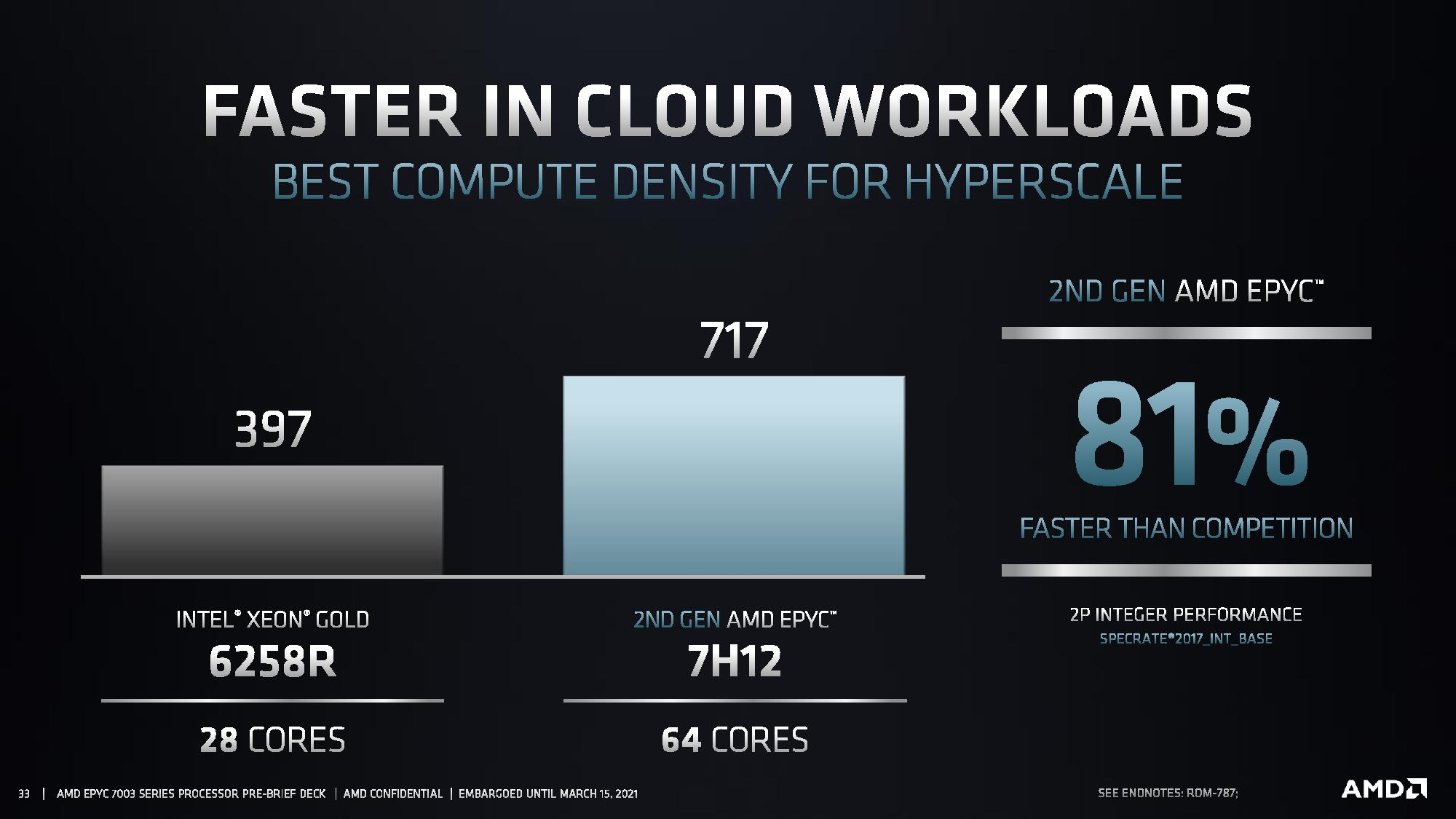

AMD claims the Milan chips are the fastest server processors for HPC, cloud, and enterprise workloads. The first slide outlines AMD's progression compared to Intel in SPECrate2017_int_base over the last few years, highlighting its continued trajectory of significant generational performance improvements. The second slide outlines how SPECrate2017_int_base scales across the Milan product stack, with Intel's best published scores for two key Intel models, the 28-core 6258R and 16-core 4216, added for comparison.

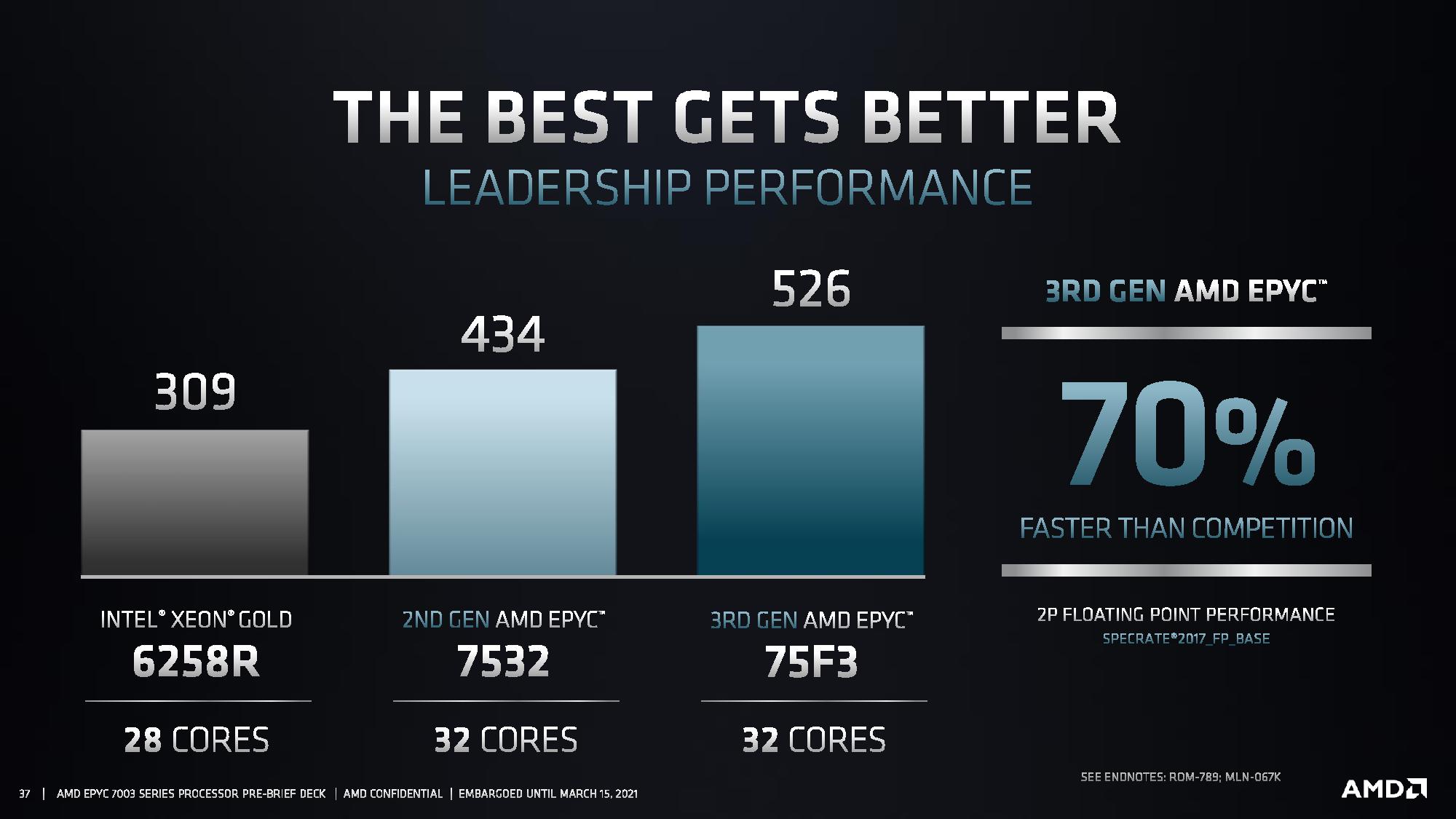

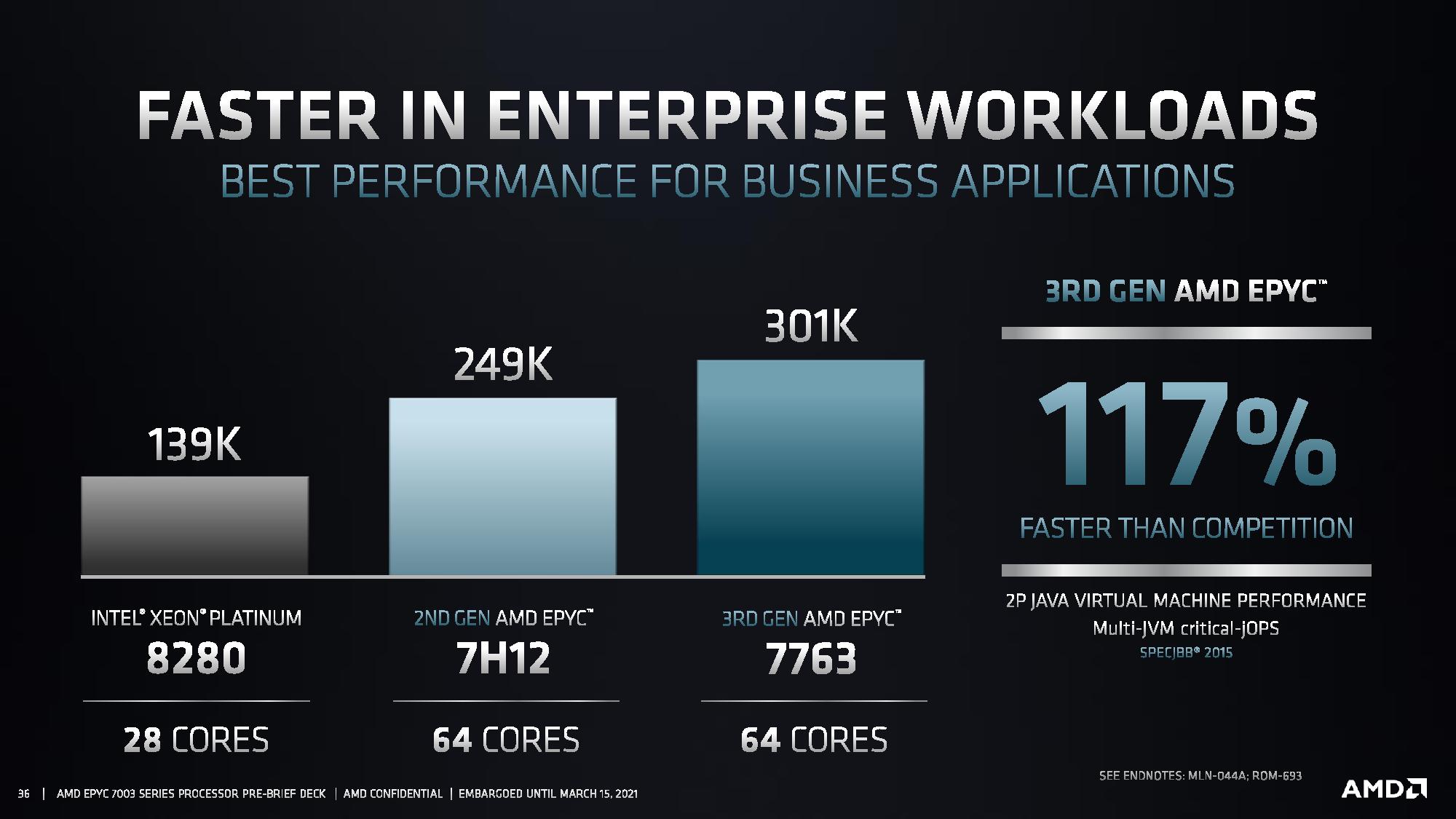

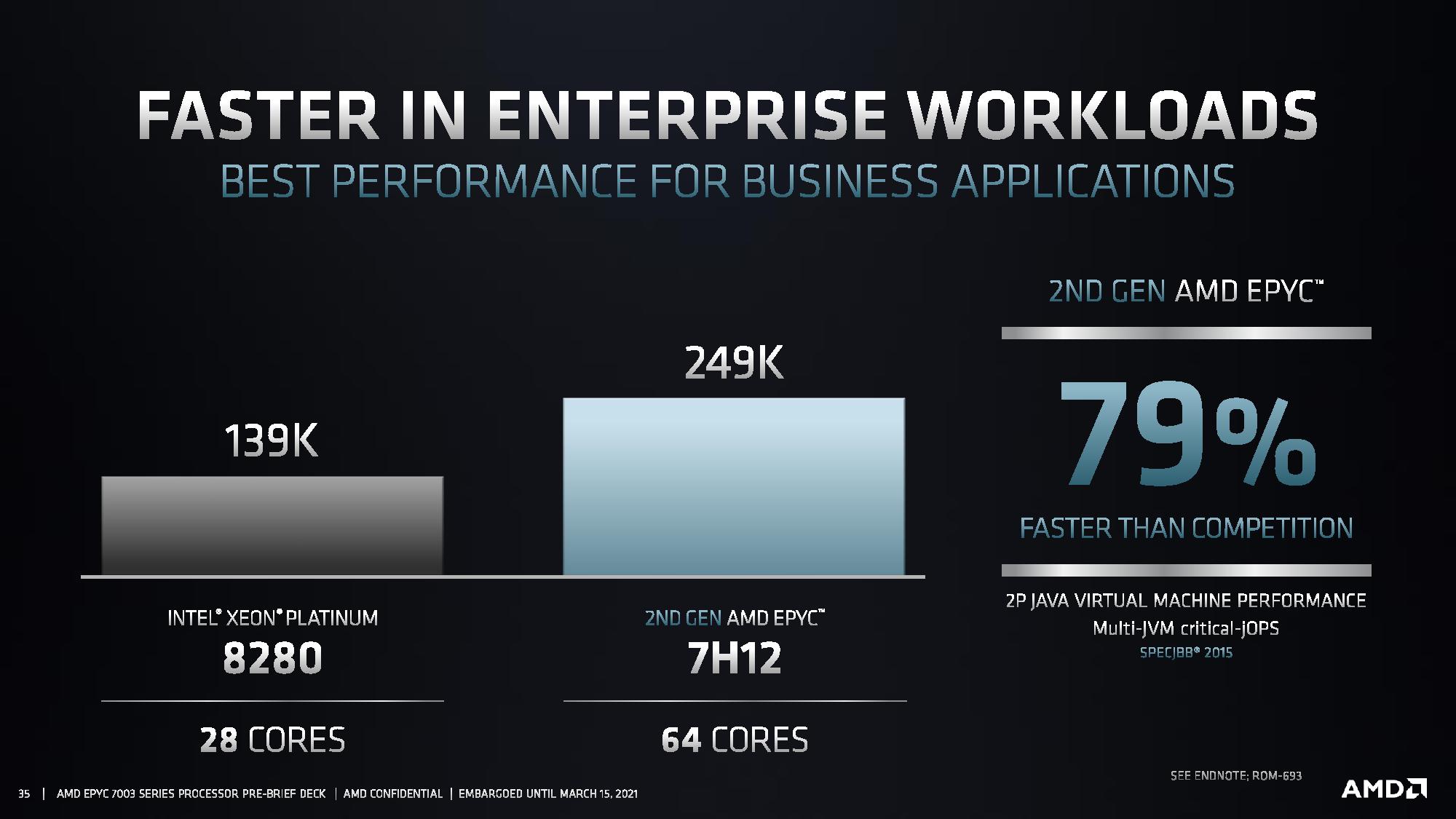

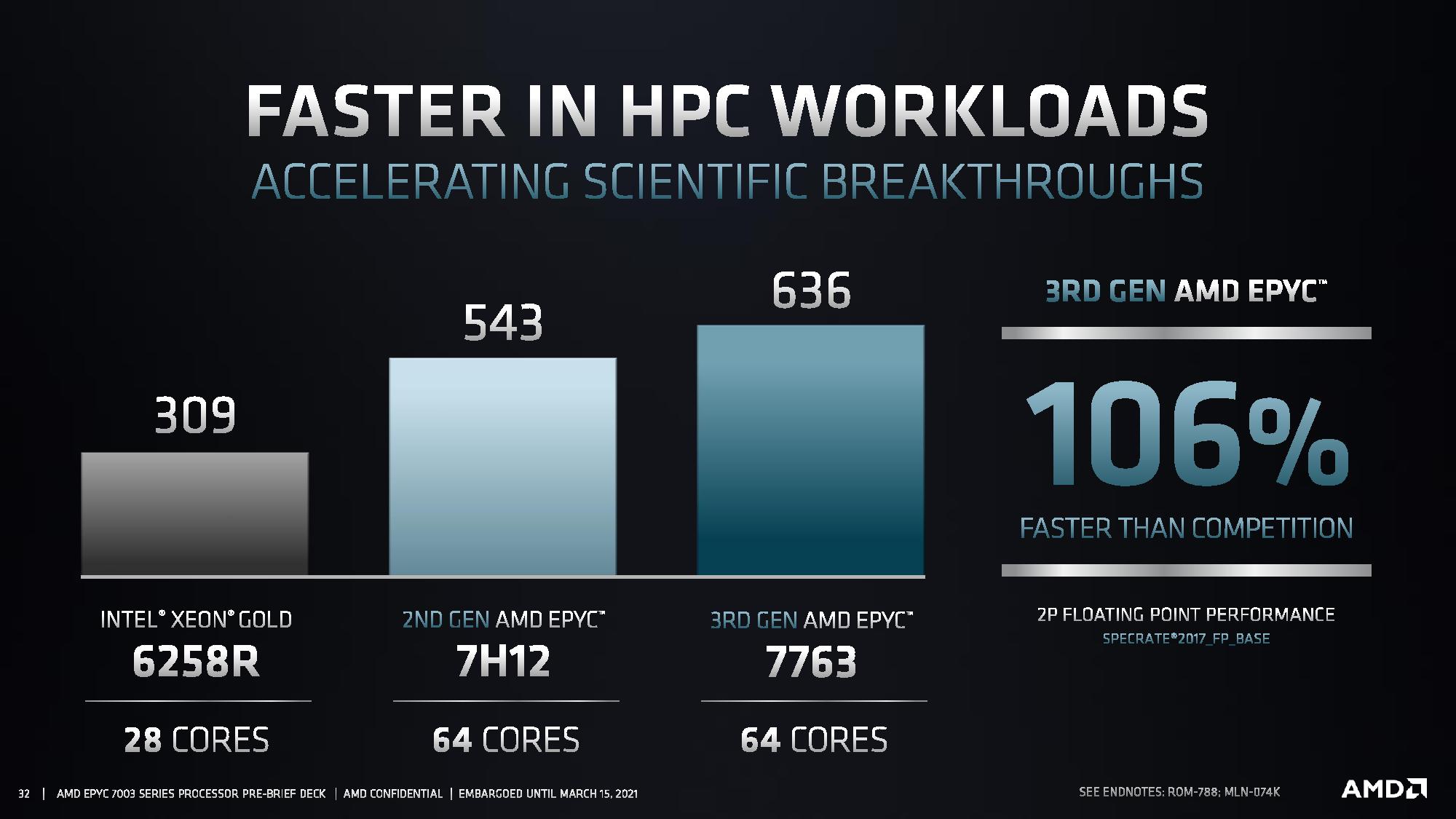

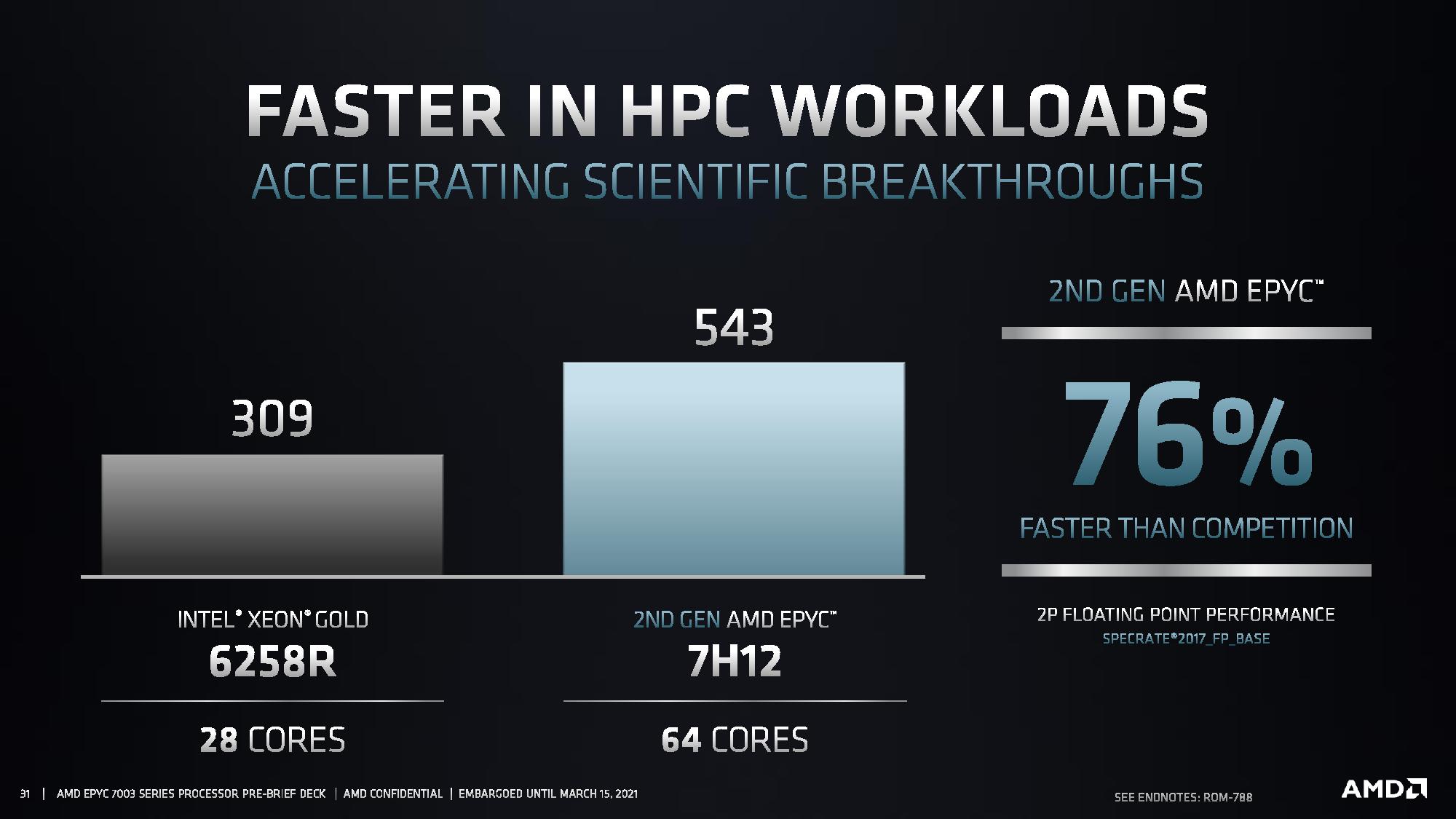

Moving on to a broader spate of applications, AMD says existing two-socket 7H12 systems already hold an easy lead over Xeon in the SPEC2017 floating point tests, but the Milan 7763 widens the gap to a 106% advantage over the Xeon 6258R. AMD uses this comparison for the two top-of-the-stack chips, but be aware that this is a bit lopsided: The 6258R carries a tray price of $3,651 compared to the 7763's $7,890 asking price. AMD also shared benchmarks comparing the two in SPEC2017 integer tests, claiming a similar 106% speedup. In SPECjbb 2015 tests, which AMD uses as a general litmus test for enterprise workloads, AMD claims 117% more performance than the 6258R.
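To see why the pricing matters for this comparison, the sketch below folds AMD's claimed 106% advantage into a simple performance-per-dollar ratio using the two list prices quoted above. The function name is our own, and the math considers only chip list price: it ignores server, memory, power, and licensing costs, all of which feed into real TCO.

```python
def perf_per_dollar_ratio(perf_advantage_pct: float,
                          price_a: float,
                          price_b: float) -> float:
    """Ratio of chip A's performance-per-dollar to chip B's.

    perf_advantage_pct is A's claimed advantage over B (106 means 2.06x).
    """
    perf_ratio = 1 + perf_advantage_pct / 100
    price_ratio = price_a / price_b
    return perf_ratio / price_ratio


# AMD's claimed 106% lead for the EPYC 7763 ($7,890) over the Xeon 6258R ($3,651).
ratio = perf_per_dollar_ratio(106, 7890, 3651)
print(f"EPYC 7763 perf-per-dollar vs. Xeon 6258R: {ratio:.2f}x")
```

On chip list price alone, the result lands slightly below 1.0: the 7763 is about 2.06x faster by AMD's numbers but about 2.16x more expensive. Any value advantage for the flagship therefore has to come from density and platform-level savings rather than from the processor price itself.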

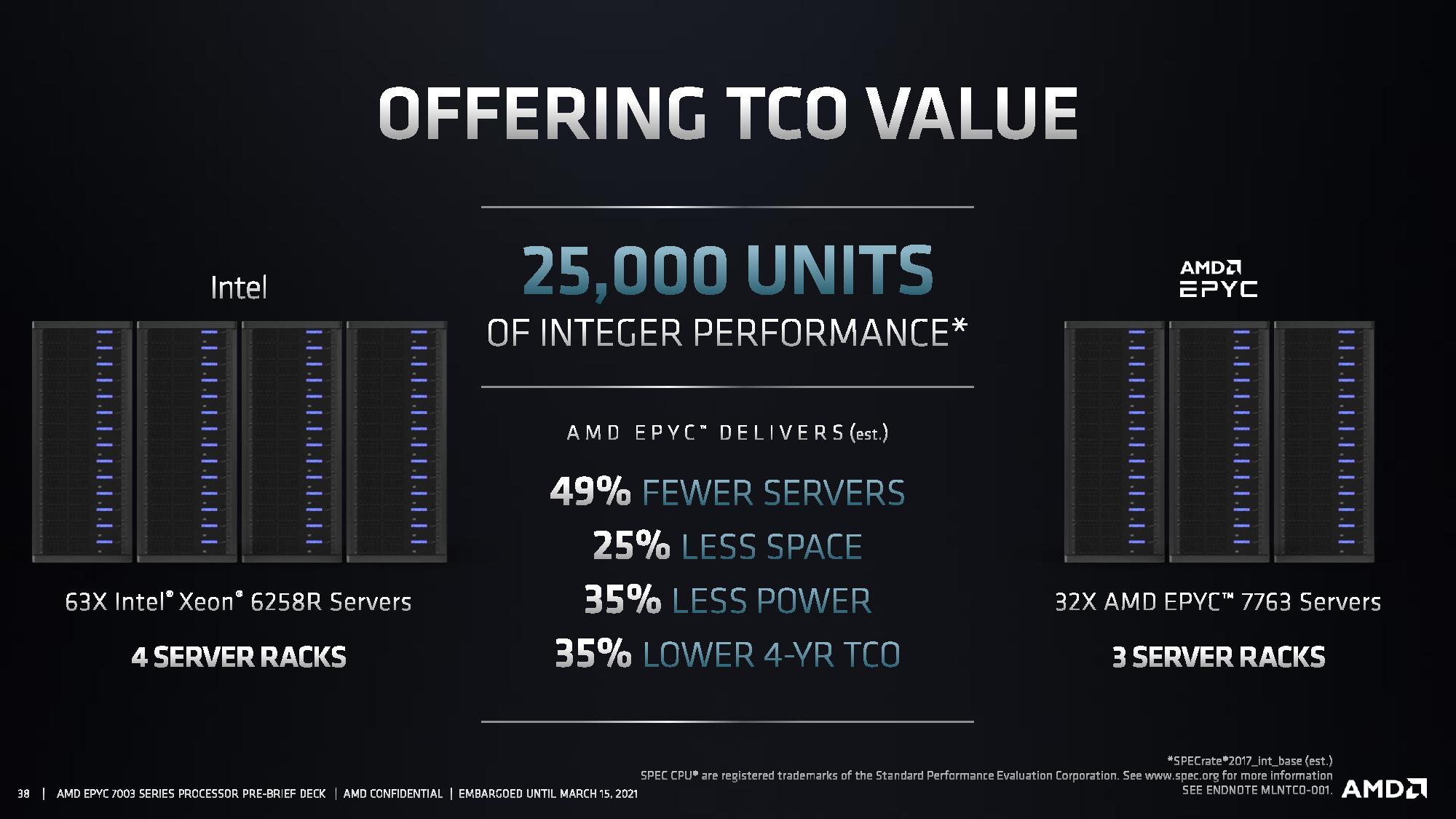

The company also shared a few test results showing performance in the middle of its product stack, claiming that its 32-core part also outperforms Intel's 28-core 6258R. AMD says this translates to improved TCO for customers: lower pricing and higher compute density mean fewer servers, lower space requirements, and lower overall power consumption.

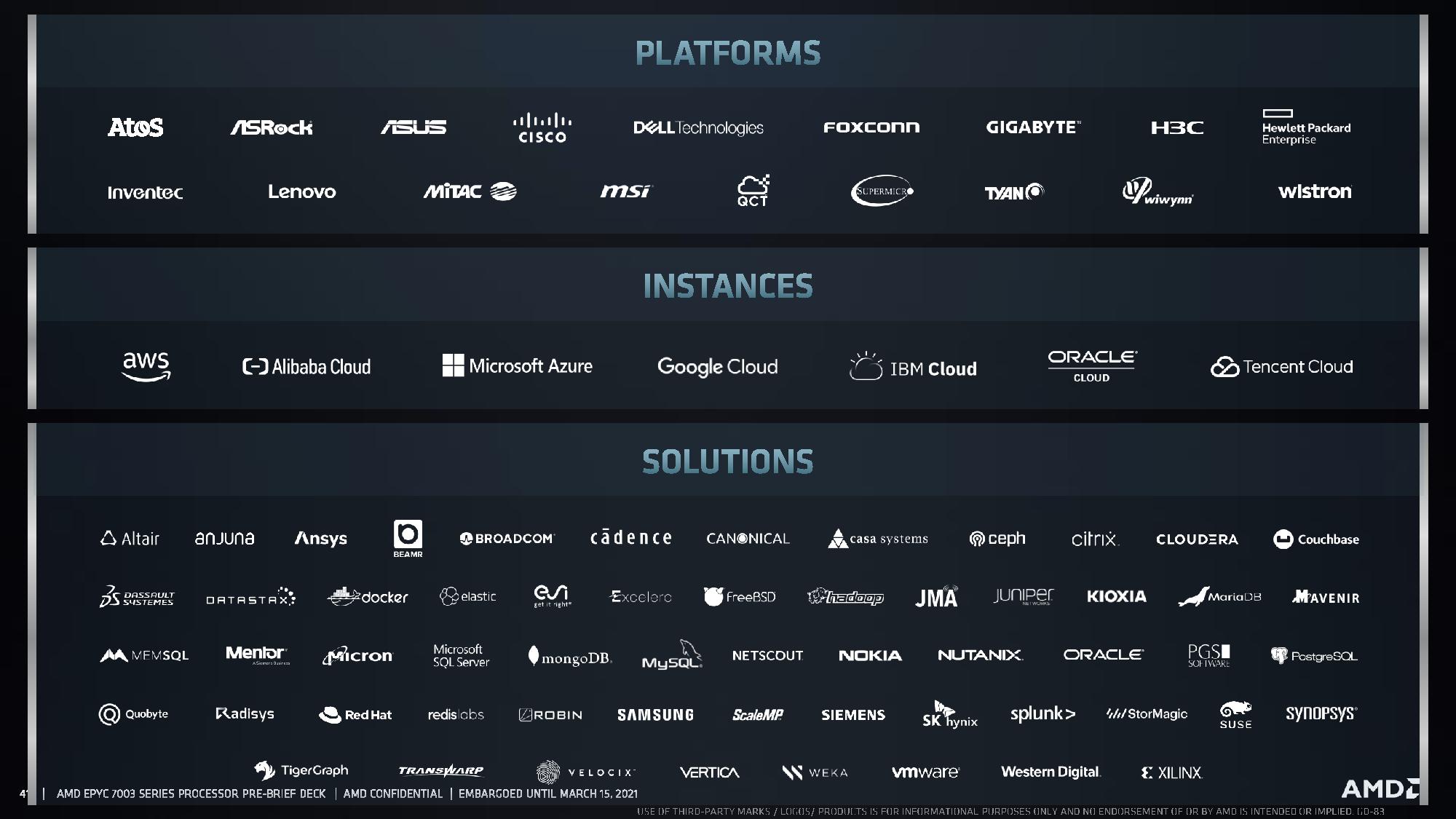

Finally, AMD has a broad range of ecosystem partners with fully-validated platforms available from top-tier OEMs like Dell, HP, and Lenovo, among many others. These platforms are fed by a broad constellation of solutions providers as well. AMD also has an expansive list of instances available from leading cloud service providers like AWS, Azure, Google Cloud, and Oracle, to name a few.

| Model # | Cores | Threads | Base Freq (GHz) | Max Boost Freq (up to GHz) | Default TDP (W) | cTDP Min (W) | cTDP Max (W) | L3 Cache (MB) | DDR Channels | Max DDR Freq (1DPC) | PCIe 4.0 | 1Ku Pricing |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 7763 | 64 | 128 | 2.45 | 3.50 | 280 | 225 | 280 | 256 | 8 | 3200 | x128 | $7,890 |
| 7713 | 64 | 128 | 2.00 | 3.68 | 225 | 225 | 240 | 256 | 8 | 3200 | x128 | $7,060 |
| 7713P | 64 | 128 | 2.00 | 3.68 | 225 | 225 | 240 | 256 | 8 | 3200 | x128 | $5,010 |
| 7663 | 56 | 112 | 2.00 | 3.50 | 240 | 225 | 240 | 256 | 8 | 3200 | x128 | $6,366 |
| 7643 | 48 | 96 | 2.30 | 3.60 | 225 | 225 | 240 | 256 | 8 | 3200 | x128 | $4,995 |
| 75F3 | 32 | 64 | 2.95 | 4.00 | 280 | 225 | 280 | 256 | 8 | 3200 | x128 | $4,860 |
| 7543 | 32 | 64 | 2.80 | 3.70 | 225 | 225 | 240 | 256 | 8 | 3200 | x128 | $3,761 |
| 7543P | 32 | 64 | 2.80 | 3.70 | 225 | 225 | 240 | 256 | 8 | 3200 | x128 | $2,730 |
| 7513 | 32 | 64 | 2.60 | 3.65 | 200 | 165 | 200 | 128 | 8 | 3200 | x128 | $2,840 |
| 7453 | 28 | 56 | 2.75 | 3.45 | 225 | 225 | 240 | 64 | 8 | 3200 | x128 | $1,570 |
| 74F3 | 24 | 48 | 3.20 | 4.00 | 240 | 225 | 240 | 256 | 8 | 3200 | x128 | $2,900 |
| 7443 | 24 | 48 | 2.85 | 4.00 | 200 | 165 | 200 | 128 | 8 | 3200 | x128 | $2,010 |
| 7443P | 24 | 48 | 2.85 | 4.00 | 200 | 165 | 200 | 128 | 8 | 3200 | x128 | $1,337 |
| 7413 | 24 | 48 | 2.65 | 3.60 | 180 | 165 | 200 | 128 | 8 | 3200 | x128 | $1,825 |
| 73F3 | 16 | 32 | 3.50 | 4.00 | 240 | 225 | 240 | 256 | 8 | 3200 | x128 | $3,521 |
| 7343 | 16 | 32 | 3.20 | 3.90 | 190 | 165 | 200 | 128 | 8 | 3200 | x128 | $1,565 |
| 7313 | 16 | 32 | 3.00 | 3.70 | 155 | 155 | 180 | 128 | 8 | 3200 | x128 | $1,083 |
| 7313P | 16 | 32 | 3.00 | 3.70 | 155 | 155 | 180 | 128 | 8 | 3200 | x128 | $913 |
| 72F3 | 8 | 16 | 3.70 | 4.10 | 180 | 165 | 200 | 256 | 8 | 3200 | x128 | $2,468 |

Thoughts

AMD's general launch today gives us a good picture of the company's data center chips moving forward, but we won't know the full story until Intel releases the formal details of its 10nm Ice Lake processors.

The volume ramp for both AMD's EPYC Milan and Intel's Ice Lake has been well underway for some time, and both lineups have been shipping to hyperscalers and CSPs for several months. The HPC and supercomputing space also tends to receive early silicon, so it also serves as a solid general litmus test for the future of the market. AMD's EPYC Milan has already enjoyed brisk uptake in those segments, and given that Intel's Ice Lake hasn't been at the forefront of as many HPC wins, it's easy to assume, by a purely subjective measure, that Milan could hold some advantages over Ice Lake.

Intel has already slashed its pricing on server chips to remain competitive with AMD's EPYC onslaught. It's easy to imagine that the company will lean on its incumbency and all the advantages that entails, like its robust Server Select platform offerings, wide software optimization capabilities, platform adjacencies like networking, FPGA, and Optane memory, along with aggressive pricing to hold the line.

AMD has obviously prioritized its supply of server processors during the pandemic-fueled supply chain disruptions and explosive demand that we've seen over the last several months. It's natural to assume that the company has been busy building Milan inventory for the general launch. We spoke with AMD's Forrest Norrod, and he tells us that the company is taking steps to ensure that it has an adequate supply for its customers with mission-critical applications.

One thing is clear, though. Both x86 server vendors benefit from a rapidly expanding market, but ARM-based servers have become more prevalent than we've seen in the recent past. For now, the bulk of the ARM uptake seems limited to cloud service providers, like AWS with its Graviton 2 chips. In contrast, uptake is slow in the general data center and enterprise due to the complexity of shifting applications to the ARM architecture. Continuing and broadening uptake of ARM-based platforms could begin to change that paradigm in the coming years, though, as x86 faces its most potent threat in recent history. Both x86 vendors will need a steady cadence of big performance improvements in the future to hold the ARM competition at bay.

Unfortunately, we'll have to wait for Ice Lake to get a true view of the competitive x86 landscape over the next year. That means the jury is still out on just what the data center will look like as AMD works on its next-gen Genoa chips and Intel readies Sapphire Rapids.

Paul Alcorn is the Editor-in-Chief for Tom's Hardware US. He also writes news and reviews on CPUs, storage, and enterprise hardware.