R600: Finally DX10 Hardware from ATI

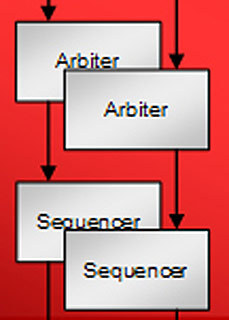

Sequencing

Associated with each arbiter is a sequencer. It is essentially a small program counter with associated state that records which thread (say, thread "x") has won arbitration, and it then executes that thread next on the SIMD array. As an example, say a shader has 12 instructions that must execute on the ALU before it needs to do a texture fetch. The sequencer executes that segment of the thread until the thread goes to sleep again, and the thread stays asleep until it wins arbitration for the texture fetch. When it wins, the sleeping thread is revived and can process the next step in its instruction stream. Even if it wins arbitration for the texture fetch immediately after finishing the first 12 instructions, it then sleeps until the data comes back, so as not to waste ALU clock cycles.
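
To make the sleep/wake cycle concrete, here is a minimal Python sketch of one arbiter and sequencer feeding a single SIMD. It is a toy model under stated assumptions, not ATI's design. The `ShaderThread` class and `run_sequencer` function are hypothetical. Each thread's program is reduced to a list of ALU instruction counts separated by texture fetches, and every fetch takes a fixed `fetch_latency`. The arbitration policy is simply "first ready thread wins."

```python
from dataclasses import dataclass


@dataclass
class ShaderThread:
    """Hypothetical thread: ALU instruction counts between texture fetches."""
    name: str
    segments: list          # e.g. [12, 12] = 12 ALU ops, fetch, 12 ALU ops
    segment: int = 0        # index of the next segment to execute
    state: str = "ready"    # "ready", "sleeping" or "done"
    wake_at: int = 0        # cycle when the pending fetch returns


def run_sequencer(threads, fetch_latency):
    """Toy arbiter + sequencer for one SIMD.

    Returns (total_cycles, trace); trace lists (start_cycle, thread, alu_cycles).
    """
    cycle = 0
    trace = []
    while any(t.state != "done" for t in threads):
        # Revive sleeping threads whose texture data has come back.
        for t in threads:
            if t.state == "sleeping" and t.wake_at <= cycle:
                t.state = "ready"
        ready = [t for t in threads if t.state == "ready"]
        if not ready:
            cycle += 1  # nothing can run: the ALU idles this cycle
            continue
        winner = ready[0]  # assumed policy: first ready thread wins arbitration
        alu_cycles = winner.segments[winner.segment]
        trace.append((cycle, winner.name, alu_cycles))
        cycle += alu_cycles
        winner.segment += 1
        if winner.segment == len(winner.segments):
            winner.state = "done"
        else:
            # Issue the texture fetch, then sleep until the data returns.
            winner.state = "sleeping"
            winner.wake_at = cycle + fetch_latency
    return cycle, trace


threads = [ShaderThread("A", [12, 12]), ShaderThread("B", [12, 12])]
total, trace = run_sequencer(threads, fetch_latency=20)
print(total, trace)
```

In this example, each of two threads runs two 12-instruction segments, and each fetch takes 20 cycles. Thread A runs at cycle 0 and thread B at cycle 12. The ALU then idles from cycle 24 to 32 while both threads sleep, and the job finishes at cycle 56. More threads would fill that idle gap, which is why the hardware wants many threads in flight.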

With this design it is common to have many threads sleeping, because the hardware can process many different threads on the fly. While each thread sleeps, other threads that have won arbitration for a particular resource are executing, which helps keep the hardware fully utilized. What happens if there are more threads than resources? This would be common, since there should be at least two threads in flight on each SIMD, which means that 50% of the available work is not executing at any given moment. A situation can then arise in which there are more sleeping threads than there is physical local cache space to hold them. This is where virtualization comes in.

Virtualization

All of the shader caches are virtualized. Previous products had a limited amount of pixel shader instruction storage: 64 scalar and 64 vector instructions on R300 and R400. The 5xx series raised that to 1,500 instructions. R600 still has a physical shader instruction cache, but it completely virtualizes it. Sleeping shaders still need to reside at some physical memory address, but the total number of instructions is made "virtually" unlimited. 2^32 distinct words can be addressed, which would amount to roughly a 4 GB shader. Counting loops, the total number of instructions executed is virtually limitless, much as it is for a looping program on a CPU.

The typical flow for a thread executing a particular shader is as follows. The shader's instructions are first fetched from system memory or other addressable memory. They are then brought into a cache and executed much as a CPU would execute them, although what happens after that is much more complicated. Because the caches are fully associative, they can hold all of the instructions for all of the shaders at the same time. A CPU typically runs only two or three instruction streams. R600, by contrast, has many different shaders in flight, each executing a section of its code at a time.
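
The virtualized instruction cache behaves much like a CPU cache sitting in front of virtual memory. The sketch below is a minimal model that assumes a fully associative cache with least-recently-used (LRU) replacement. ATI has not described R600's replacement policy here. The class name, the 16-word line size and the `fetch_line` backing function are all hypothetical. Because any line can occupy any slot, the only capacity limit is the number of lines. A miss fetches the line from memory and evicts the least recently used line if the cache is full.

```python
from collections import OrderedDict


class FullyAssociativeCache:
    """Toy fully associative instruction cache with LRU replacement.

    Any memory line may occupy any slot; capacity is limited only by num_lines.
    """

    def __init__(self, fetch_line, num_lines, line_words=16):
        self.fetch_line = fetch_line    # callable: line number -> list of words
        self.num_lines = num_lines
        self.line_words = line_words
        self.lines = OrderedDict()      # line number -> words, least recent first
        self.hits = 0
        self.misses = 0

    def read(self, address):
        line, offset = divmod(address, self.line_words)
        if line in self.lines:
            self.hits += 1
            self.lines.move_to_end(line)         # mark as most recently used
        else:
            self.misses += 1
            if len(self.lines) >= self.num_lines:
                self.lines.popitem(last=False)   # evict least recently used line
            self.lines[line] = self.fetch_line(line)
        return self.lines[line][offset]


def fetch_line(line, line_words=16):
    """Hypothetical backing memory: every word simply holds its own address."""
    start = line * line_words
    return list(range(start, start + line_words))


cache = FullyAssociativeCache(fetch_line, num_lines=2)
words = [cache.read(a) for a in (0, 5, 16, 40, 0)]
print(words, cache.hits, cache.misses)
```

Here the cache has room for two lines, and the program reads addresses 0, 5, 16, 40 and 0. That sequence produces one hit and four misses. The read at address 40 evicts line 0, so the final read of address 0 has to be fetched again. A real shader cache would be far larger. The point is only that the program size is bounded by the backing address space, not by the cache.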

There is also a constant cache. Constants are values used in the shaders that are not sent down into the shader with every draw command or primitive. They can be values such as 1.0 or 2.0, pi, e or anything else. These are the "semi-static variables" of a program. Constants, like instructions, are cached. Under DX9, constants also had to fit into a fixed amount of physical memory. With DX10, this was virtualized once again. DX10 can use a tremendous number of constants (up to 16 constant buffers of 4,096 four-component constants each, per shader stage), so ATI cached the whole system. Constants are faulted in as they are needed.
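
Demand faulting of constants can be sketched the same way. This minimal model assumes the DX10 per-stage limits: 16 constant buffers of 4,096 float4 constants, or 16 × 4,096 × 16 bytes = 1 MiB. It also assumes a hypothetical fault granularity of 64 constants per page. The `ConstantStore` class is illustrative and does not model eviction. Only the pages a shader actually reads are copied into the cache.

```python
NUM_BUFFERS = 16             # DX10 constant buffer slots per shader stage
CONSTANTS_PER_BUFFER = 4096  # float4 constants per DX10 constant buffer
PAGE_CONSTANTS = 64          # hypothetical fault granularity, not an R600 figure


class ConstantStore:
    """Toy demand-faulted constant cache (no eviction modeled)."""

    def __init__(self, buffers):
        self.buffers = buffers   # buffers[b][i] -> (x, y, z, w) in memory
        self.resident = {}       # (buffer, page) -> cached constants
        self.faults = 0

    def load(self, buffer, index):
        page = index // PAGE_CONSTANTS
        key = (buffer, page)
        if key not in self.resident:
            # Fault: copy just this page from memory into the cache.
            self.faults += 1
            start = page * PAGE_CONSTANTS
            self.resident[key] = self.buffers[buffer][start:start + PAGE_CONSTANTS]
        return self.resident[key][index % PAGE_CONSTANTS]


buffers = [
    [(float(b), float(i), 0.0, 1.0) for i in range(CONSTANTS_PER_BUFFER)]
    for b in range(NUM_BUFFERS)
]
store = ConstantStore(buffers)
for buf, idx in [(0, 0), (0, 1), (0, 63), (0, 64), (15, 4095)]:
    store.load(buf, idx)
total_pages = NUM_BUFFERS * CONSTANTS_PER_BUFFER // PAGE_CONSTANTS
print(store.faults, total_pages - len(store.resident))
```

Suppose a shader reads constants 0, 1, 63 and 64 of buffer 0 and constant 4,095 of buffer 15. That triggers just three faults and leaves 1,021 of the 1,024 possible pages untouched.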

Why Do All Of That Work? Efficiency, Parallelism And Latencies

Why does ATI keep so many threads in flight and arbitrate all of the resources? Couldn't the hardware simply do the next thing and put it away, one item at a time? The reality is that there is latency to deal with: it takes time to do work or fetch something. Suppose the shader says "go fetch a texture." Even on a cache hit, the hardware needs to do a level of detail (LOD) computation on the texture address, do a cache check, get the data from the cache, filter that data and then bring it back. That process takes at least a dozen cycles. On a cache miss, the request has to go out to memory. Hundreds of cycles can be spent going to DRAM and back, and the effective wait can stretch to thousands of cycles.
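
The average cost of a texture fetch is a weighted mix of the hit and miss paths. With hit rate h, expected latency = h × t_hit + (1 - h) × t_miss. The Python sketch below uses hypothetical stage costs that add up to the dozen-cycle hit latency mentioned above, plus a hypothetical 400-cycle DRAM round trip. None of these numbers are ATI figures.

```python
# Hypothetical cycle costs for a texture fetch that hits in cache.
HIT_STAGES = {
    "lod_computation": 2,
    "cache_tag_check": 2,
    "cache_read": 4,
    "filter": 4,
}
DRAM_ROUND_TRIP = 400  # hypothetical extra cycles when the fetch misses


def expected_fetch_latency(hit_rate):
    """Average cycles per fetch: h * t_hit + (1 - h) * t_miss."""
    if not 0.0 <= hit_rate <= 1.0:
        raise ValueError("hit_rate must be between 0 and 1")
    t_hit = sum(HIT_STAGES.values())   # 12 cycles with the numbers above
    t_miss = t_hit + DRAM_ROUND_TRIP   # a miss still pays the hit-path stages
    return hit_rate * t_hit + (1.0 - hit_rate) * t_miss


for h in (1.0, 0.9, 0.5):
    print(f"hit rate {h:.0%}: {expected_fetch_latency(h):.1f} cycles")
```

Even at a 90% hit rate, the average comes out to about 52 cycles. Roughly 41 of those cycles come from the 10% of fetches that miss. This is why stalling the ALU on every fetch would be so costly.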

Nor is this single unit the only one asking for a texture. Other parts of the hardware, such as the raster back end, are initiating memory fetches at the same time. According to ATI, there can be over "80 read clients," and each wants something from memory. There is a lot of memory bandwidth: R600 has about 105 GB per second with GDDR3 and 160 GB per second with GDDR4.
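
Peak bandwidth is the bus width in bytes times the transfer rate. R600 has a 512-bit external memory bus, so each transfer moves 64 bytes. The sketch below assumes GDDR3 at 828 MHz (1.656 billion transfers per second), which reproduces the roughly 105 GB/s figure. It also back-solves the transfer rate implied by 160 GB/s. The even split across 80 read clients is a hypothetical simplification.

```python
BUS_WIDTH_BITS = 512               # R600's external memory interface
BYTES_PER_TRANSFER = BUS_WIDTH_BITS // 8


def peak_bandwidth_gbs(gigatransfers_per_s):
    """Peak bandwidth (GB/s) = bytes per transfer * billions of transfers per second."""
    return BYTES_PER_TRANSFER * gigatransfers_per_s


def implied_rate_gts(bandwidth_gbs):
    """Transfer rate needed on this bus to reach a given bandwidth."""
    return bandwidth_gbs / BYTES_PER_TRANSFER


gddr3 = peak_bandwidth_gbs(1.656)   # assumed 828 MHz GDDR3, two transfers per clock
gddr4_rate = implied_rate_gts(160.0)
per_client = gddr3 / 80             # hypothetical even split across 80 read clients
print(f"{gddr3:.1f} GB/s, {gddr4_rate:.2f} GT/s, {per_client:.2f} GB/s per client")
```

On these assumptions, GDDR3 gives about 106 GB/s. The 160 GB/s GDDR4 figure implies about 2.5 billion transfers per second on the same bus. An even split would leave each of 80 clients only about 1.3 GB/s.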


Even with so much bandwidth, many clients are each asking for a large share of it, so it is typically not enough. It is important for the clients to keep asking, but to be efficient, it is necessary NOT to answer them right away. Instead, the requests need to be organized in ways that are efficient for the memory, such as serving together the requests that fall on an already open DRAM page. The trick is to keep memory efficiency very high so as to stay close to peak bandwidth. That requires an efficient memory access pattern, and it also requires the system to be very tolerant of latency. Any unit that sends a request to the memory controller, or to anything else outside the shader, has to be built to wait. As mentioned before, it is common for units to wait dozens, hundreds or even thousands of cycles for data.
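
Keeping requests on open DRAM pages can be illustrated with a toy single-bank model, with all cycle costs hypothetical. A request to the currently open row is cheap. A request to a different row pays for closing the old row and opening the new one. The hypothetical `reorder_by_row` function groups pending requests by row, in order of first arrival. This is a much simplified take on row-hit-first scheduling. It ignores multiple banks, fairness and starvation limits.

```python
ROW_HIT_CYCLES = 4    # hypothetical cost when the row is already open
ROW_MISS_CYCLES = 20  # hypothetical cost to close the old row and open a new one


def service_cycles(requests):
    """Cycles for one DRAM bank to serve (row, column) requests in order."""
    open_row = None
    cycles = 0
    for row, _column in requests:
        if row == open_row:
            cycles += ROW_HIT_CYCLES
        else:
            cycles += ROW_MISS_CYCLES
            open_row = row
    return cycles


def reorder_by_row(requests):
    """Group pending requests by row, rows ordered by first arrival.

    sorted() is stable, so requests to the same row keep their arrival order.
    """
    first_seen = {}
    for i, (row, _column) in enumerate(requests):
        first_seen.setdefault(row, i)
    return sorted(requests, key=lambda req: first_seen[req[0]])


# Two clients interleaving requests to rows 1 and 2 of the same bank.
pending = [(1, 0), (2, 0), (1, 1), (2, 1), (1, 2), (2, 2)]
print(service_cycles(pending), service_cycles(reorder_by_row(pending)))
```

Six requests that alternate between rows 1 and 2 cost 120 cycles in arrival order, because every one of them reopens a row. Grouped by row, they cost only 56 cycles. The price is that the requests to row 2 now wait longer, which is why the rest of the chip has to tolerate latency.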

To hide latency, the ideal is to do a lot of work in parallel. Sometimes the hardware finishes one piece of work and knows it will have to wait before it can do the next section. As soon as that happens, the work is put to sleep (even if the wait is only a few dozen cycles), and the hardware finds another piece of work to do. With hundreds of threads working in parallel, the hardware should almost always have something to do.

In theory it should be easy to move on to the next piece of work, and the next, continually. All the while, the hardware keeps arbitrating and sequencing work into the best-fit arrangement to keep things flowing efficiently. If there is a pause, the hardware can store a thread's state, put it to sleep and work on something else. When the sleeping work is revived, it comes back ready to finish what it was waiting for. With enough threads in flight, keeping the units supplied with work should, in theory, be no problem.
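
How many threads are "enough"? Suppose each thread runs W ALU cycles and then waits L cycles for a fetch. One thread then keeps the ALU busy only W / (W + L) of the time, so roughly (W + L) / W threads are needed to cover the wait. The simulation below is a minimal sketch of that idea under simplified assumptions: one ALU, a fixed fetch latency and identical threads. The hypothetical `alu_utilization` function always runs the thread that has been ready the longest.

```python
def alu_utilization(num_threads, alu_cycles, fetch_latency, total_cycles=10_000):
    """Fraction of cycles one ALU is busy when every thread alternates
    `alu_cycles` of work with a fetch that takes `fetch_latency` cycles."""
    ready_at = [0] * num_threads   # cycle at which each thread can run again
    cycle = 0
    busy = 0
    while cycle < total_cycles:
        # Run the thread that has been ready the longest.
        t = min(range(num_threads), key=lambda i: ready_at[i])
        if ready_at[t] > cycle:
            cycle = ready_at[t]    # every thread is asleep: the ALU idles
            continue
        run = min(alu_cycles, total_cycles - cycle)
        busy += run
        cycle += run
        ready_at[t] = cycle + fetch_latency   # issue the fetch and sleep
    return busy / total_cycles


def predicted_utilization(num_threads, alu_cycles, fetch_latency):
    """Steady-state estimate: min(1, N * W / (W + L))."""
    return min(1.0, num_threads * alu_cycles / (alu_cycles + fetch_latency))


for n in (1, 2, 4, 8):
    print(n, round(alu_utilization(n, 12, 36), 3), predicted_utilization(n, 12, 36))
```

Take W = 12 and L = 36. One thread keeps the ALU busy only about a quarter of the time, and two threads about half. Four threads keep it fully busy.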

A CPU can only process a few threads at a time, while graphics processors can simultaneously accommodate thousands of threads. ATI sets its workload at 16 pixels per thread. Multiply that by the number of threads, and at any given moment there can be tens of thousands of pixels in the shaders. With such an abundance of parallel work, masking latency should not be a concern. That is the art of parallel processing, and it is why GPUs hit their theoretical numbers more easily than CPUs do.
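
The same reasoning explains why tens of thousands of pixels end up in flight. By Little's law, the amount of work in flight must equal throughput times latency. The sketch below takes the article's 16 pixels per thread and pairs it with hypothetical figures (64 pixels per clock and a 500-cycle latency) purely to show the arithmetic.

```python
import math

PIXELS_PER_THREAD = 16  # from the article


def pixels_in_flight(threads):
    return threads * PIXELS_PER_THREAD


def threads_needed(latency_cycles, pixels_per_cycle):
    """Little's law: work in flight = throughput * latency, rounded up to whole threads."""
    pixels = latency_cycles * pixels_per_cycle
    return math.ceil(pixels / PIXELS_PER_THREAD)


# Hypothetical figures: 64 pixels per clock, 500-cycle average latency.
threads = threads_needed(latency_cycles=500, pixels_per_cycle=64)
print(threads, pixels_in_flight(threads))
```

Under those assumptions, about 2,000 threads (32,000 pixels) are needed in flight, squarely in the tens of thousands.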