Nvidia's CUDA: The End of the CPU?

The Theory: CUDA from the Hardware Point of View

If you’re a faithful reader of Tom’s Hardware, the architecture of the latest Nvidia GPUs won’t be unfamiliar to you. If not, we advise you to do a little homework. With CUDA, Nvidia presents its architecture in a slightly different way and exposes certain details that hadn’t been revealed before now.

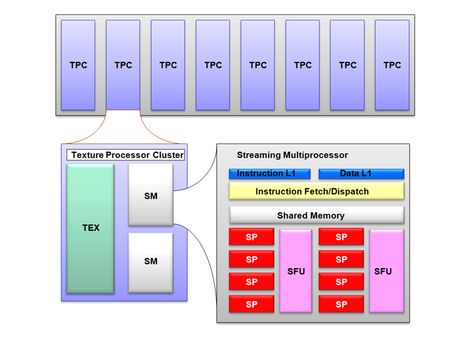

As you can see above, Nvidia’s Shader Core is made up of several clusters that Nvidia calls Texture Processor Clusters. An 8800GTX, for example, has eight clusters, an 8800GTS six, and so on. Each cluster is made up of a texture unit and two streaming multiprocessors. These processors consist of a front end that reads, decodes, and launches instructions, and a backend made up of a group of eight calculating units and two SFUs (Special Function Units), where the instructions are executed in SIMD fashion: the same instruction is applied to all the threads in the warp. Nvidia calls this mode of execution SIMT (single instruction, multiple threads).

It’s important to point out that the backend operates at double the frequency of the front end. In practice, then, the part that executes the instructions appears to be twice as “wide” as it actually is (that is, as a 16-way SIMD unit instead of an eight-way one). The streaming multiprocessors operate as follows: at each cycle, the front end selects a warp that is ready for execution and launches an instruction. Applying that instruction to all 32 threads in the warp takes the backend four cycles, but since the backend runs at double the frequency of the front end, only two cycles elapse from the front end’s point of view. So, to avoid leaving the front end idle for one cycle and to maximize use of the hardware, the ideal is to alternate instruction types every cycle: a classic instruction on one cycle and an SFU instruction on the next.
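The cycle counts quoted above follow from simple arithmetic, which can be checked with a short sketch. This is only a back-of-the-envelope model built from the figures in the text (32 threads per warp, eight calculating units and a 2x backend clock per multiprocessor, two multiprocessors per cluster); the function names are illustrative and do not correspond to anything in CUDA itself.

```python
# Rough model of the issue timing described in the article.
# All constants are taken from the text; the names are hypothetical.
WARP_SIZE = 32        # threads in a warp
UNITS_PER_SM = 8      # calculating units per streaming multiprocessor
SMS_PER_CLUSTER = 2   # streaming multiprocessors per cluster
CLOCK_RATIO = 2       # backend frequency divided by front-end frequency


def backend_cycles_per_instruction() -> int:
    """Backend cycles needed to apply one instruction to a whole warp."""
    return WARP_SIZE // UNITS_PER_SM


def frontend_cycles_per_instruction() -> int:
    """The same duration, counted in (slower) front-end cycles."""
    return backend_cycles_per_instruction() // CLOCK_RATIO


def apparent_simd_width() -> int:
    """Width the backend appears to have when seen from the front end."""
    return UNITS_PER_SM * CLOCK_RATIO


def calculating_units(clusters: int) -> int:
    """Total calculating units for a chip with the given cluster count."""
    return clusters * SMS_PER_CLUSTER * UNITS_PER_SM


if __name__ == "__main__":
    print(backend_cycles_per_instruction())   # 4
    print(frontend_cycles_per_instruction())  # 2
    print(apparent_simd_width())              # 16
    print(calculating_units(8))               # 128 (8800GTX, eight clusters)
    print(calculating_units(6))               # 96 (8800GTS, six clusters)
```

Because one warp instruction keeps the calculating units busy for two front-end cycles, the front end has a spare issue slot every other cycle, which is why interleaving an SFU instruction is described as the ideal case.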

Each multiprocessor also has a certain amount of resources that should be understood in order to make the best use of them. Each has a small memory area called Shared Memory, 16 KB in size per multiprocessor. This is not a cache memory: the programmer has a free hand in its management. As such, it’s like the Local Store of the SPUs on Cell processors. This detail is particularly interesting, and demonstrates that CUDA is indeed a set of software and hardware technologies. This memory area is not used for pixel shaders; as Nvidia says, tongue in cheek, “We dislike pixels talking to each other.”
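Since the programmer manages Shared Memory by hand, it helps to see how quickly 16 KB is used up. The sketch below is a rough budgeting aid, not part of CUDA: it assumes 4-byte floats and a hypothetical kernel in which each block of threads keeps one tile of floats in Shared Memory, and it ignores every other limit (registers, thread counts, any space the runtime may reserve).

```python
# Budgeting sketch for the 16 KB of Shared Memory per multiprocessor.
# The tile sizes and helper names are hypothetical illustrations.
SHARED_MEMORY_BYTES = 16 * 1024   # per multiprocessor, from the article
FLOAT_BYTES = 4                   # assumed 32-bit floating-point values


def floats_that_fit(budget: int = SHARED_MEMORY_BYTES) -> int:
    """How many 32-bit floats fit in the given budget."""
    return budget // FLOAT_BYTES


def tile_bytes(rows: int, cols: int, element_bytes: int = FLOAT_BYTES) -> int:
    """Bytes needed to hold a rows x cols tile."""
    return rows * cols * element_bytes


def blocks_that_fit(bytes_per_block: int,
                    budget: int = SHARED_MEMORY_BYTES) -> int:
    """Blocks that fit on one multiprocessor, by Shared Memory alone."""
    if bytes_per_block <= 0:
        raise ValueError("bytes_per_block must be positive")
    return budget // bytes_per_block


if __name__ == "__main__":
    print(floats_that_fit())                    # 4096
    print(blocks_that_fit(tile_bytes(16, 16)))  # 16 (1 KB per block)
    print(blocks_that_fit(tile_bytes(64, 64)))  # 1 (exactly 16 KB)
    print(blocks_that_fit(tile_bytes(64, 65)))  # 0 (tile too large)
```

The last case is the one to watch for: a tile only slightly larger than the budget does not fit at all, because nothing spills over automatically the way it would with a cache.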




CUDA software enables GPUs to do tasks normally reserved for CPUs. We look at how it works and its real and potential performance advantages.


Well, if the technology was used just to play games, yes, it would be crap tech. Spending billions just so we can play Quake doesn't make much sense ;)


dariushro: The best thing that could happen is for M$ to release an API similar to DirectX for developers. That way both ATI and Nvidia can support the API.

dmuir: And no mention of OpenCL? I guess there aren't a lot of details about it yet, but I find it surprising that you look to M$ for a unified API (who have no plans to do so that we know of), when Apple has already announced that they'll be releasing one next year. (Unless I've totally misunderstood things...)

neodude007: I'm not gonna bother reading this article. I just thought the title was funny, seeing as how Nvidia claims CUDA in NO way replaces the CPU and that is simply not their goal.

LazyGarfield: I'd like it better if DirectX weren't used. Anyways, NV wants to sell CUDA, so why would they change to DX ,-)

I think the best way to go for MS is to announce support for OpenCL, like Apple. That way it will make things a lot easier for the developers, and it makes MS look good to support the open standard.


Shadow703793, quoting Mr Roboto: "Very interesting. I'm anxiously awaiting the RapiHD video encoder. Everyone knows how long it takes to encode a standard definition video, let alone an HD or multiple HD videos. If a 10x speedup can materialize from the CUDA API, let's just say it's more than welcome. I understand from the launch of the GTX280 and GTX260 that Nvidia has a broader outlook for the use of these GPUs. However, I don't buy it fully, especially when they cost so much to manufacture and use so much power. The GTX 280 has been reported as using upwards of 300 W. That doesn't translate to that much money in electrical bills over a span of a year, but nevertheless it's still moving backwards. Also, don't expect the GTX series to come down in price anytime soon. The 8800GTX and its 384-bit bus is a prime example of how much these devices cost to make. Unless CUDA becomes standardized, it's just another niche product fighting against other niche products from ATI and Intel. On the other hand, though, I was reading on AnandTech that Nvidia is sticking 4 of these cards (each with 4 GB RAM) in a 1U form factor using CUDA to create ultra-cheap supercomputers. For the scientific community this may be just what they're looking for. Maybe I was misled into believing that these cards were for gaming and anything else would be an added benefit. With the price and power consumption this makes much more sense now."

Agreed. Also, I predict in a few years we will have a Linux distro that will run mostly on a GPU.