Early Verdict

Radeon RX Vega 56 has the potential to be a GeForce GTX 1070 killer if AMD can make enough of them to hit its pricing target. Although the card uses a lot of power, kicks out quite a bit of heat, and makes more noise than the competition under load, performance-oriented enthusiasts will like the way it stacks up in our 1440p benchmarks.

Pros

- Faster than GeForce GTX 1070 FE
- ~30% lower power than Vega 64
- Great 1440p performance

Cons

- Higher power consumption than GTX 1070
- Cooling fan is loud under load
- Uncertain value given volatile pricing


Introduction

Flagships always attract the most attention. They’re big, bad, and expensive, and enthusiasts love fawning over high-end hardware. But in the hours leading up to AMD’s Radeon RX Vega 64 launch, the company seemed prescient of its top model’s fate. Radeon RX Vega 56 landed as we were wrapping up a story that’d be published in English, French, German, and Italian with a note: “…we ask that you consider prioritizing your coverage of Radeon RX Vega 56.”

The wheels were already in motion and we couldn’t add the derivative board in time for AMD’s embargo. So, we planned a review to focus solely on Vega 56 with even more data. And we’re glad we did. Not only did we have a chance to expand our testing, but we also saw Radeon RX Vega 64 launch, sell out in minutes, and then slowly trickle back into stock at substantially higher prices.

Of course, it’s not uncommon to see new hardware sell out quickly and then start commanding a premium. It happens all of the time, particularly in the high-end space. The competition has a 15-month head start and plenty of product in the channel, though. There’s no room for an air-cooled Radeon RX Vega 64 beyond $500—it simply doesn’t get recommended over GeForce GTX 1080. AMD needs its flagship right where it launched…or lower.

Lower is the realm of Radeon RX Vega 56, which AMD tells us will sell for $400. But if you think today’s issues with Vega 64 availability are going to get better come Vega 56, then you’re sorely mistaken. AMD wants to sell as many of its 12.5-billion-transistor Vega 10 GPUs as possible on $500 (or higher) cards. Unless it’s having a serious yield issue, it’s almost inconceivable that we’d see more Vega 56 cards on launch day.

Will Radeon RX Vega 56 be worth waiting for then, once it’s readily in stock at AMD’s recommended price? Now that’s a question we can sink our teeth into.

Specifications

Meet Radeon RX Vega 56

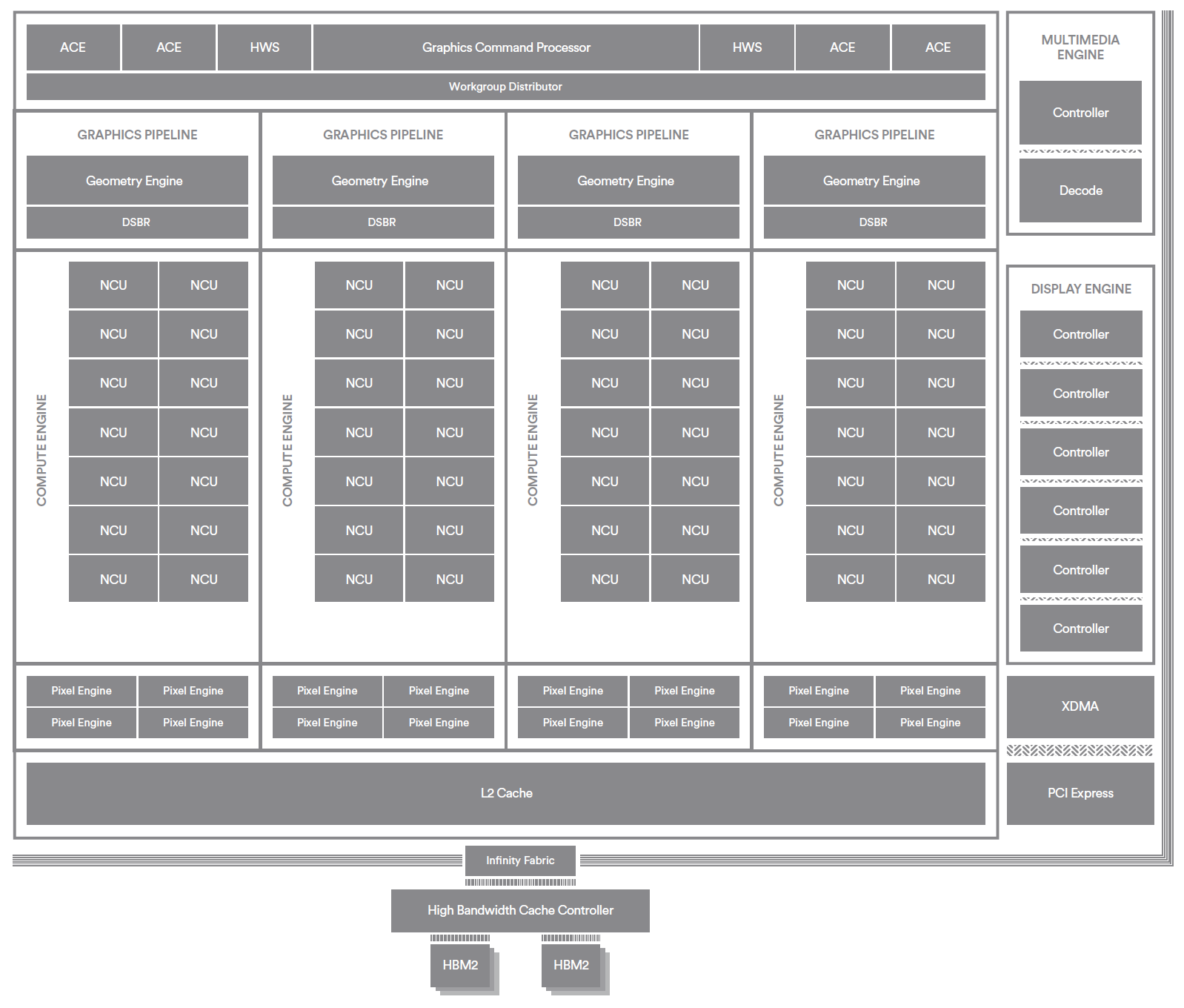

Radeon RX Vega 56 utilizes the same Vega 10 processor found in Vega 64. It’s a 486 mm² behemoth sporting 12.5 billion transistors manufactured on GlobalFoundries’ 14LPP platform. You’ll still find four Shader Engines under the hood, each with its own geometry processor and draw stream binning rasterizer.

But rather than 64 active Compute Units across those Shader Engines, AMD turns off two CUs per Shader Engine, leaving 56 enabled across the GPU. Given 64 Stream processors and four texture units per CU, you get 3584 Stream processors and 224 texture units, or ~88% of Vega 10’s NCU resources. This configuration takes another hit to SP and texturing throughput in the form of lower base and typical boost clock rates. Radeon RX Vega 56's base is 1156 MHz compared to Vega 64's 1274 MHz, while Vega 56's boost frequency is rated at 1471 MHz versus Vega 64's 1546 MHz. Using AMD’s peak compute performance figures, that knocks theoretical SP performance down from 12.7 TFLOPS on the air-cooled Vega 64 to 10.5 TFLOPS.
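The arithmetic behind those resource counts and the 10.5 TFLOPS figure can be reproduced with a short Python sketch. It assumes the usual convention that each Stream processor retires one fused multiply-add (two floating-point operations) per clock. The function and variable names are ours for illustration and do not come from any AMD tool.

```python
# Per-CU resources as described in the text above.
SP_PER_CU = 64    # Stream processors per Compute Unit
TMU_PER_CU = 4    # texture units per Compute Unit


def shader_resources(compute_units):
    """Return (stream processors, texture units) for a given CU count."""
    return compute_units * SP_PER_CU, compute_units * TMU_PER_CU


def peak_fp32_tflops(stream_processors, clock_mhz):
    """Theoretical FP32 rate, assuming one FMA (2 FLOPs) per SP per clock."""
    return stream_processors * 2 * clock_mhz * 1e6 / 1e12


vega56_sps, vega56_tmus = shader_resources(56)
vega56_base_tflops = peak_fp32_tflops(vega56_sps, 1156)   # rated base clock
vega56_boost_tflops = peak_fp32_tflops(vega56_sps, 1471)  # rated boost clock
cu_fraction = 56 / 64  # share of Vega 10's CUs left enabled

print(f"Vega 56: {vega56_sps} SPs, {vega56_tmus} texture units")
print(f"Enabled CU share: {cu_fraction:.1%}")
print(f"Peak FP32 at boost: {vega56_boost_tflops:.1f} TFLOPS")
```

These are peak numbers. The card only reaches them if it actually sustains its rated boost clock under load.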


Each of Vega 10's Shader Engines sports four render back-ends capable of 16 pixels per clock cycle, yielding 64 ROPs. These render back-ends become clients of the L2, as we already know. That L2 is now 4MB in size, whereas Fiji included 2MB of L2 capacity (already a doubling of Hawaii’s 1MB L2). Ideally, this means the GPU goes out to HBM2 less often, reducing Vega 10’s reliance on external bandwidth. Since Vega 10’s clock rates on the 56-CU card can get up to ~40% higher than Fiji’s, while memory bandwidth actually drops by 102 GB/s, a larger cache should do even more to help prevent bottlenecks here than on the flagship.

Adoption of HBM2 allows AMD to halve the number of memory stacks on its interposer compared to Fiji, cutting an aggregate 4096-bit bus to 2048 bits. And yet, rather than the 4GB ceiling that dogged Radeon R9 Fury X, RX Vega 56 comfortably offers 8GB using 4-hi stacks, just like Vega 64. A 1.6 Gb/s data rate facilitates a 410 GB/s bandwidth figure, exceeding what either the GeForce GTX 1070 or 1080 has available using GDDR5 or GDDR5X, respectively. Still, it's a little surprising that AMD carves out ~15% of its throughput budget relative to Vega 64, given the emphasis on memory all the way back to Hawaii (512-bit aggregate bus) and Fiji (HBM enabling 512 GB/s). We have to imagine that a 3584-shader configuration at up to almost 50%-higher clocks than Radeon R9 Fury won't scale as well as it could with a beefier memory subsystem.
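For readers who want to check the bandwidth numbers, here is a minimal Python sketch of the standard bus-width-times-data-rate calculation. Two inputs are assumptions not stated in this section: Fiji's 1.0 Gb/s per-pin rate (implied by its 4096-bit bus and 512 GB/s) and Vega 64's 1.89 Gb/s rate (taken from AMD's published specification). The helper name is ours.

```python
def bandwidth_gbs(bus_width_bits, data_rate_gbps):
    """Peak memory bandwidth in GB/s: pins x per-pin rate (Gb/s) / 8 bits per byte."""
    return bus_width_bits * data_rate_gbps / 8


vega56_bw = bandwidth_gbs(2048, 1.6)   # two HBM2 stacks at 1.6 Gb/s
vega64_bw = bandwidth_gbs(2048, 1.89)  # assumption: AMD's published Vega 64 rate
fiji_bw = bandwidth_gbs(4096, 1.0)     # assumption: implied by 512 GB/s over 4096 bits

drop_vs_fiji = fiji_bw - vega56_bw
cut_vs_vega64 = 1 - vega56_bw / vega64_bw

print(f"Vega 56: {vega56_bw:.1f} GB/s")
print(f"Drop versus Fiji: {drop_vs_fiji:.1f} GB/s")
print(f"Reduction versus Vega 64: {cut_vs_vega64:.1%}")
```

The same function covers GDDR5 and GDDR5X cards if you plug in their bus widths and per-pin rates.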

| Model | Cooling Type | BIOS Mode | Power Profile: Power Save | Power Profile: Balanced | Power Profile: Turbo |
|---|---|---|---|---|---|
| RX Vega 56 | Air | Primary | 150W | 165W | 190W |
| RX Vega 56 | Air | Secondary | 135W | 150W | 173W |

Like Vega 64, Radeon RX Vega 56 offers two on-board BIOSes, each with three corresponding driver-based power profiles. The primary firmware in its default Balanced mode imposes a GPU power limit of 165W. Turbo loosens the reins to 190W, while Power Save pulls Vega 10 back to 150W. The second BIOS is eco-friendlier, defining 135W, 150W, and 173W limits for the three power profiles. Of course, board power is higher, and AMD defines just one specification for this: 210W. That’s 71% of Radeon RX Vega 64’s board power, reflecting the combined impact of fewer active resources and lower GPU/memory frequencies.
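To see how far each profile moves the GPU power limit from its Balanced default, the six limits can be put into a small lookup table. This Python sketch is illustrative only: the dictionary keys are hypothetical labels rather than driver settings, and Vega 64's 295W board power is an assumption taken from AMD's published specification, not from the text above.

```python
# GPU power limits in watts, keyed by BIOS and driver power profile.
# Key names are hypothetical labels, not real driver identifiers.
GPU_POWER_LIMITS_W = {
    "primary": {"power_save": 150, "balanced": 165, "turbo": 190},
    "secondary": {"power_save": 135, "balanced": 150, "turbo": 173},
}

VEGA56_BOARD_POWER_W = 210
VEGA64_BOARD_POWER_W = 295  # assumption: AMD's published air-cooled spec


def change_vs_balanced(bios):
    """Return each profile's limit as a fractional change from Balanced."""
    limits = GPU_POWER_LIMITS_W[bios]
    balanced = limits["balanced"]
    return {name: watts / balanced - 1 for name, watts in limits.items()}


for bios in GPU_POWER_LIMITS_W:
    for profile, delta in change_vs_balanced(bios).items():
        print(f"{bios:9s} {profile:10s} {delta:+.1%}")

board_power_ratio = VEGA56_BOARD_POWER_W / VEGA64_BOARD_POWER_W
print(f"Vega 56 board power vs. Vega 64: {board_power_ratio:.0%}")
```

On either BIOS, Turbo raises the limit by roughly 15% and Power Save lowers it by about 9 to 10%.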

Look, Feel & Connectors

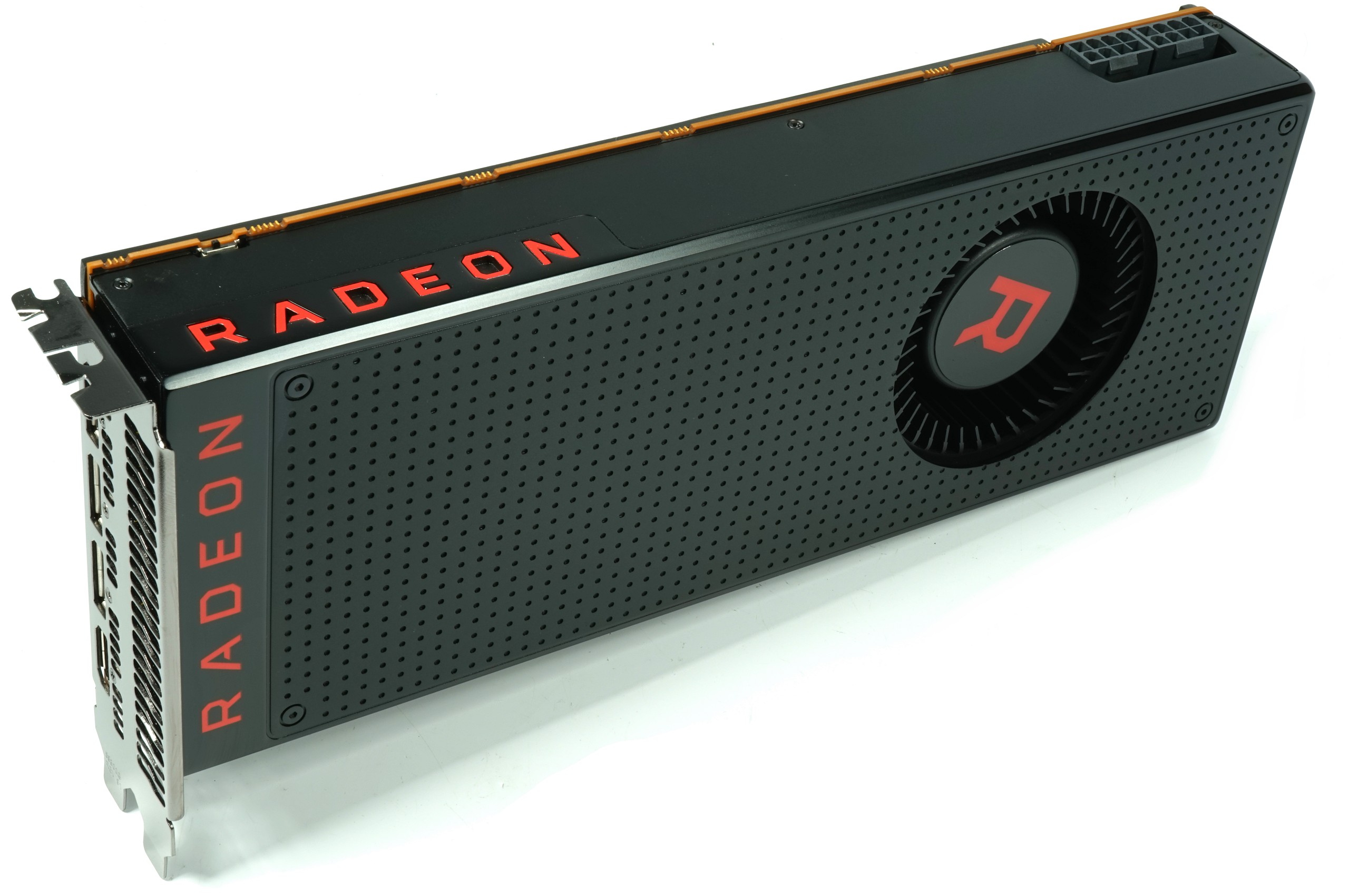

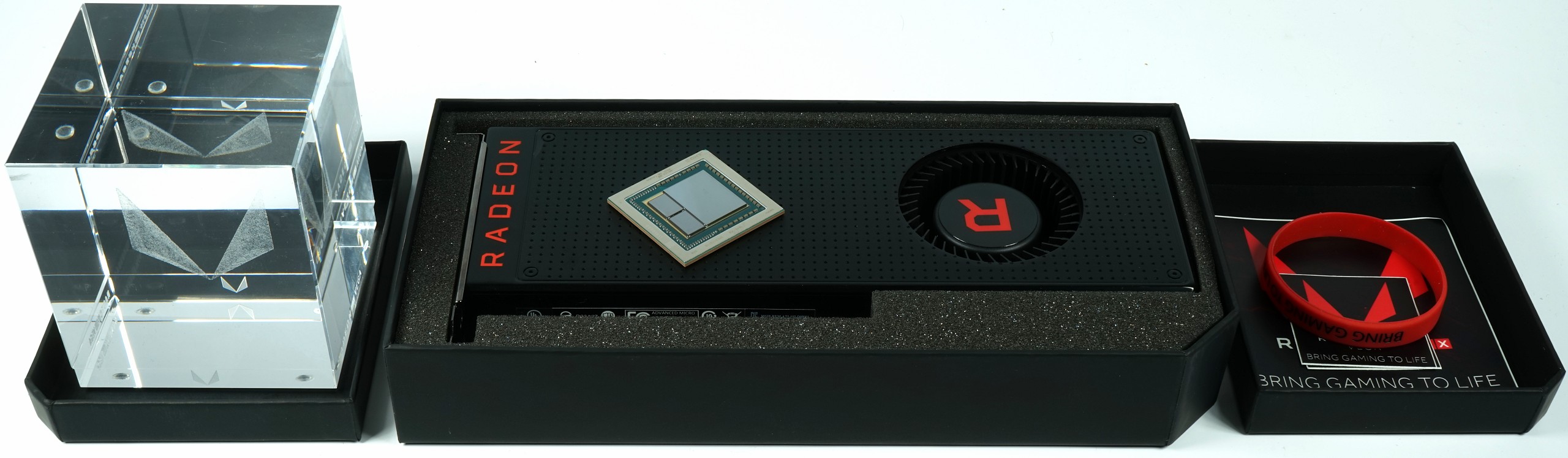

AMD’s RX Vega 56 weighs in at 1064g, which makes it 14g heavier than the Frontier Edition. Its length is 26.8cm (from bracket to end of cover), its height is 10.5cm (from top of motherboard slot to top of cover), and its depth is 3.8cm. This makes it a true dual-slot graphics card, even though the backplate does add another 0.4cm to the back.

Both the cover and the backplate are made of black anodized aluminum, giving the card a cool and high-quality feel. The surface texture is achieved using simple cold forming that preceded the aluminum’s anodization. All of the screws are painted matte black. The red Radeon logo on the front is printed, and provides the only splash of color.

The top of the card is dominated by two eight-pin PCIe power connectors and the red Radeon logo, which lights up. There’s also a two-position switch that allows access to the aforementioned secondary BIOS optimized for lower power consumption and its corresponding driver-based power profiles. These make the card quieter, cooler, and, of course, a bit slower.

The end of the card is closed and includes mounting holes that are a common sight on workstation graphics cards. The powder-coated matte black slot bracket is home to three DisplayPort connectors and one HDMI 2.0 output. There is no DVI interface, which was a smart choice since it allows for much better airflow. The slot bracket doubles as the exhaust vent, after all.


- kjurden: What a crock! I didn't realize that Tom's hardware pandered to the iNvidiot's. AMD VEGA GPU's have rightfully taken the performance crown!

- Martell1977: Vega 56 vs GTX 1070, Vega goes 6-2-2 = Winner Vega!

Good job AMD, hopefully next gen you can make more headway in power efficiency. But this is a good card, even beats the factory OC 1070.

- Wisecracker: Thanks for the hard work and in-depth review -- any word on Vega Nano?

Some 'Other Guys' (Namer Gexus?) were experimenting on under-volting and clock-boosting with interesting results. It's not like you guys don't have enough to do, already, but an Under-Volt-Off Smack Down between AMD and nVidia might be fun for readers ...

- pavel.mateja: No undervolting tests?

https://translate.google.de/translate?sl=de&tl=en&js=y&prev=_t&hl=de&ie=UTF-8&u=https://www.hardwareluxx.de/index.php/artikel/hardware/grafikkarten/44084-amd-radeon-rx-vega-56-und-vega-64-im-undervolting-test.html&edit-text=

- 10tacle: 20112576 said: "What a crock! I didn't realize that Tom's hardware pandered to the iNvidiot's. AMD VEGA GPU's have rightfully taken the performance crown!"

Yeah Tom's Hardware does objective reviewing. If there are faults with something, they will call them out like the inferior VR performance over the 1070. This is not the National Inquirer of tech review sites like WCCTF. There are more things to consider than raw FPS performance and that's what we expect to see in an honest objective review.

Guru3D's conclusion with caveats:

"For PC gaming I can certainly recommend Radeon RX Vega 56. It is a proper and good performance level that it offers, priced right. It's a bit above average wattage compared to the competitions product in the same performance bracket. However much more decent compared to Vega 64."

Tom's conclusion with caveats:

"Even when we compare it to EVGA’s overclocked GeForce GTX 1070 SC Gaming 8GB (there are no Founders Edition cards left to buy), Vega 56 consistently matches or beats it. But until we see some of those forward-looking features exposed for gamers to enjoy, Vega 56’s success will largely depend on its price relative to GeForce GTX 1070."

^^And that's the truth. If prices of the AIB cards coming are closer to the GTX 1080, then it can't be considered a better value. This is not AMD's fault of course, but that's just the reality of the situation. You can't sugar coat it, you can't hide it, and you can't spin it. Real money is real money. We've already seen this with the RX 64 prices getting close to GTX 1080 Ti territory.

With that said, I am glad to see Nvidia get direct competition from AMD again in the high end segment since Fury even though it's a year and four months late to the party. In this case, the reference RX 56 even bests an AIB Strix GTX 1070 variant in most non-VR games. That's promising for what's going to come with their AIB variants. The question now is what's looming on the horizon in an Nvidia response with Volta. We'll find out in the coming months.

- shrapnel_indie: We've seen what they can do in a factory blower configuration. Are board manufacturers allowed to take 64 and 56 and do their own designs and cooling solutions, where they can potentially coax more out of it (power usage aside)? Or are they stuck with this configuration as Fury X and Fury Nano were stuck?

- 10tacle: No, there will be card vendors like ASUS, Gigabyte, and MSI who will have their own cooling. Here's a review of an ASUS RX 64 Strix Gaming:

http://hexus.net/tech/reviews/graphics/109078-asus-radeon-rx-vega-64-strix-gaming/

- pepar0: 20112412 said: "Radeon RX Vega 56 should be hitting store shelves with 3584 Stream processors and 8GB of HBM2. Should you scramble to snag yours or shop for something else?"

Will any gamers buy this card ... will any gamers GET to buy this card? Hot, hungry, noisy and expensive due to the crypto currency mining craze was not what this happy R290 owner had in mind.
