Sapphire Toxic HD 7970 GHz Edition Review: Gaming On 6 GB Of GDDR5

Sapphire gives its new flagship graphics card 6 GB of very fast memory, compared to the mere 3 GB on AMD's reference card. Does this give Sapphire's Toxic HD 7970 GHz Edition a real-world speed boost? We connect it to an epic six-screen array to find out.

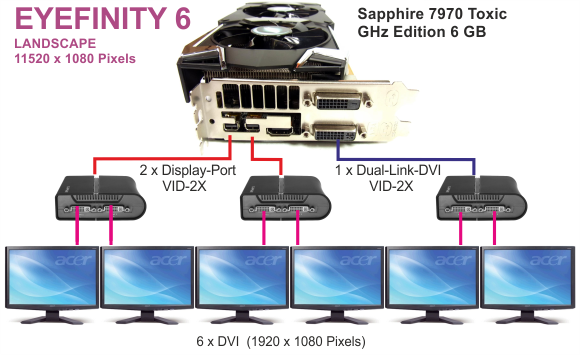

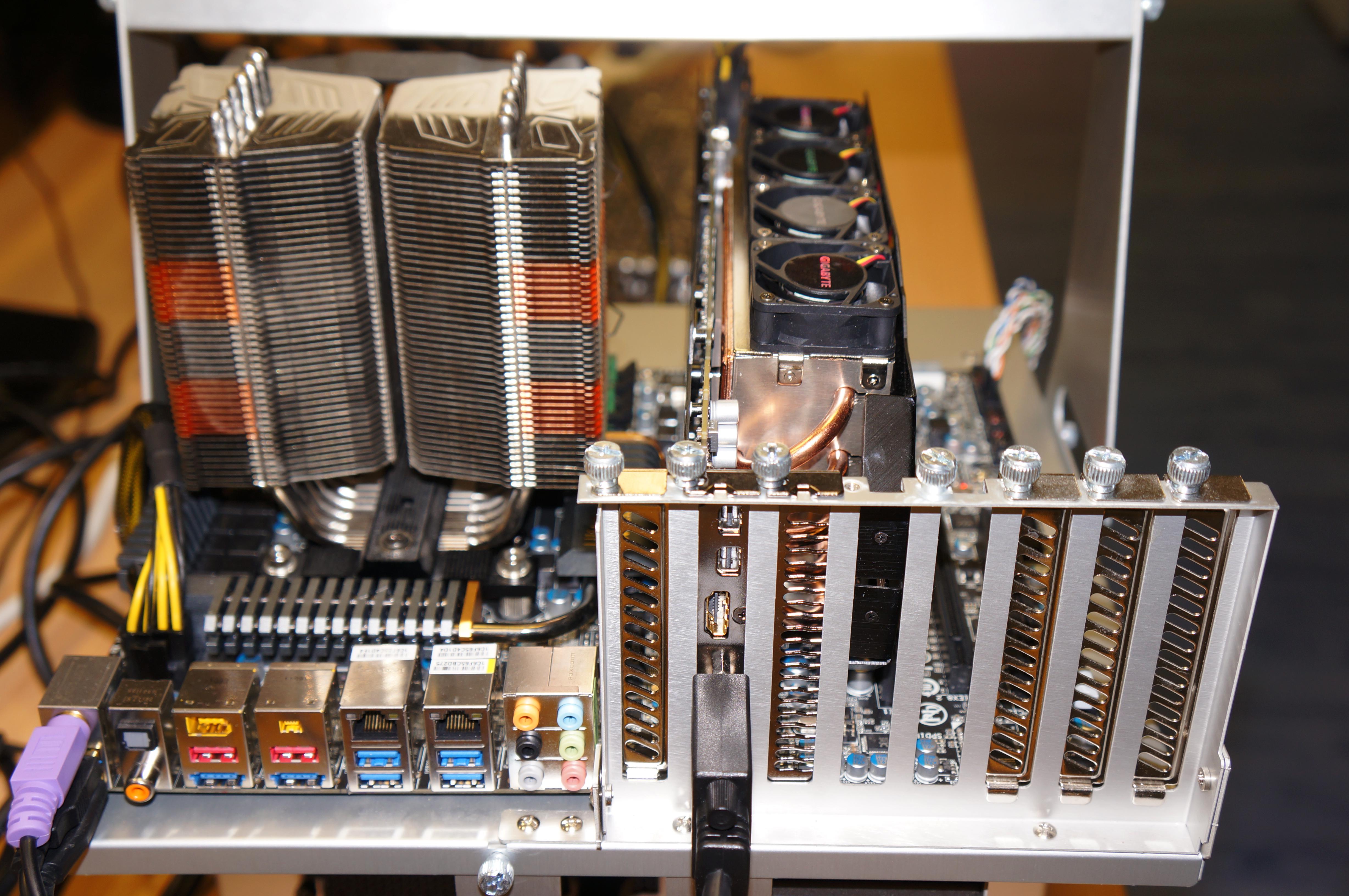

Setting Up And Benchmarking Eyefinity 6

As a result of the way our splitters work, the monitors could only be set up in two configurations: ultra-widescreen with an 11520x1080 resolution, or three rows of two monitors stacked on top of each other, resulting in 3840x3240. The second option just looked weird. So, we used the ultra-widescreen arrangement.
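As a quick sanity check on those two layouts, here is a minimal sketch of the arithmetic. It assumes six identical 1920x1080 panels (inferred from the 11520x1080 figure) and ignores bezel compensation; the function names are made up for illustration.

```python
# Assumption: every monitor is a 1920x1080 panel (inferred from 11520 / 6 = 1920).
PANEL_WIDTH = 1920
PANEL_HEIGHT = 1080


def array_resolution(columns, rows, panel_width=PANEL_WIDTH, panel_height=PANEL_HEIGHT):
    """Return (width, height) in pixels of a bezel-free grid of identical panels."""
    return columns * panel_width, rows * panel_height


def pixel_count(columns, rows):
    """Total pixels the GPU has to render per frame for the given grid."""
    width, height = array_resolution(columns, rows)
    return width * height


if __name__ == "__main__":
    print(array_resolution(6, 1))  # (11520, 1080): six panels side by side
    print(array_resolution(2, 3))  # (3840, 3240): two columns, three rows
    print(pixel_count(6, 1) // pixel_count(1, 1))  # 6
```

Either way, the card renders the same roughly 12.4 million pixels per frame, six times the load of a single 1080p screen. Only the aspect ratio changes between the two layouts.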

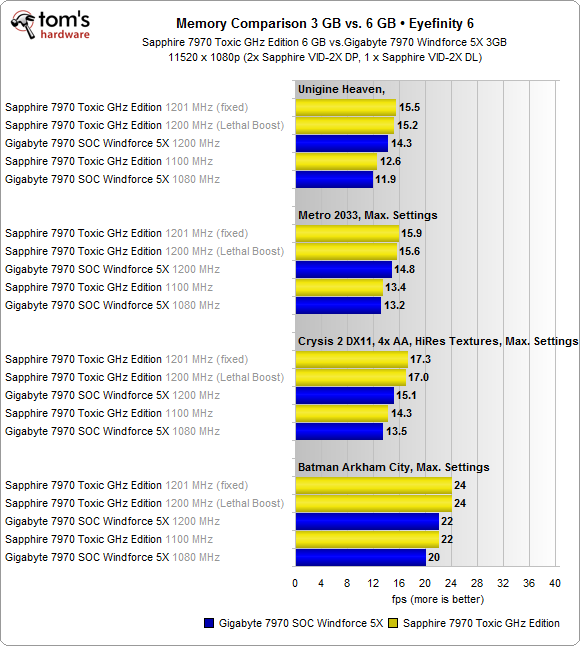

Although that looks like a fairly simple setup, few games will actually run at a resolution as crazy as 11520x1080. We were able to test with one synthetic metric and three real-world titles, comparing the performance of 3 and 6 GB cards at different clock rates.

Based on these frame rates, it's pretty clear that one card can't drive six monitors with playable performance, at least not without massively lowering the graphics settings. And if you're forced to do that, does it even make sense to spend so much money?

At similar GPU frequencies, Sapphire's Toxic HD 7970 GHz Edition 6 GB is faster than Gigabyte's Radeon HD 7970 Super Overclock due to its faster graphics memory. The difference shrinks if we set both cards to operate at the same clock rates.

The lesson we learned is that 6 GB, on its own, doesn't really affect performance, even at the insane resolutions needed to take advantage of that much memory. The additional 3 GB is almost pointless today, then, particularly since one card isn't fast enough to push playable frame rates in popular games.

Unfortunately, one of our Sapphire Vid-2X splitters stopped working before we could switch over and try the 2x3 monitor setup. It seemed as though 30 degrees Celsius (86 degrees Fahrenheit) in a full room on a hot summer day was too much for it. Clearly, heat problems aren’t exclusive to graphics cards.

We carried on with just four monitors. We were unable to repeat the benchmarks later at a similar event because Sapphire needed its card back.


Igor Wallossek wrote a wide variety of hardware articles for Tom's Hardware, with a strong focus on technical analysis and in-depth reviews. His contributions have spanned a broad spectrum of PC components, including GPUs, CPUs, workstations, and PC builds. His insightful articles provide readers with detailed knowledge to make informed decisions in the ever-evolving tech landscape.

Youngmind: The 6gb of memory might not have much of an effect with only a single card, but I wonder if it will have a larger impact if you use it in configurations with more graphics cards, such as tri-crossfire and quad-crossfire? If people are willing to spend so much money on monitors, I think they'd be willing to spend a lot of money on tri/quad graphics card configurations.

robthatguyx (replying to Youngmind): I think this would perform much better with a trifire. If one reference 7970 can handle 3 screens, then 3 of these could easily eat 6 screens, in my opinion.

palladin9479 (replying to Youngmind):

Seeing as in both SLI and CFX memory contents are copied to each card, you would practically need that much for ridiculously large screen playing. One card cannot handle multiple screens as this was designed for; you need at least two for a x4 screen and three for a x6 screen. The golden rule seems to be two screens per high-end card.

tpi2007, quoting Youngmind's comment above:

This.

Quoting BigMack70: "Would be very interested in seeing this in crossfire at crazy resolutions compared to a pair of 3GB cards in crossfire to see if the vram helps in that case."

And this.

Tom's Hardware, if you are going to be reviewing a graphics card with 6 GB of VRAM you have to review at least two of them in Crossfire. VRAM is not cumulative, so using two regular HD 7970 3 GB in Crossfire still means that you only have a 3 GB framebuffer, so for high resolutions with multiple monitors, 6 GB might make the difference.

So, are we going to get an update to this review? As it is, it is useless. Make a review with at least two of those cards with three 30" 1600p monitors. That is the kind of setup someone considering buying one of those cards will have. And that person won't buy just one card. Those cards with 6 GB of VRAM were made to be used at least in pairs. I'm surprised Sapphire didn't tell you guys that in the first place. In any case, you should have figured it out.

FormatC, quoting tpi2007: "Tom's Hardware, if you are going to be reviewing a graphics card with 6 GB of VRAM you have to review at least two of them in Crossfire."

Sapphire was unfortunately not able to send two cards. That's annoying, but not our problem. And: two of these cards are deadly for my ears ;)

In reply to tpi2007's comment above: Why not go to the uber-extreme and have crossfire X (4 GPUs) with six 2500X1600 monitors and crank up the AA to 4x super sampling to prove it once and for all?

freggo (replying to FormatC):

Thanks for the review. The noise demo alone helps in making a purchase decision.

No sale!

Anyone know why no card has been designed to be turned OFF (0 watts!) when idle, with the system switching to internal graphics for just desktop stuff or simple tasks?

Then applications like Photoshop, Premiere or the ever popular Crysis could 'wake up' the card and have the system switch over.

Or are there cards like that?

FormatC: For a noise comparison between overclocked Radeon HD 7970 cards, take a look at this:

http://www.tomshardware.de/Tahiti-XT2-HD-7970-X-X-Edition,testberichte-241091-6.html

dudewitbow (replying to freggo):

I think that has been applied to laptops, but not on the desktop scene. One of the reasons why I would think it's not as useful on the desktop is that even if your build has stuff off, the PSU is least efficient when near 0% load, so no matter what, you're still going to burn electricity just by having the computer on. All GPUs nowadays have downclocking features when not under load (my 7850 downclocks to 300 MHz at idle), but I wouldn't think cards will go all the way to 0.