23 Years Of Supercomputer Evolution

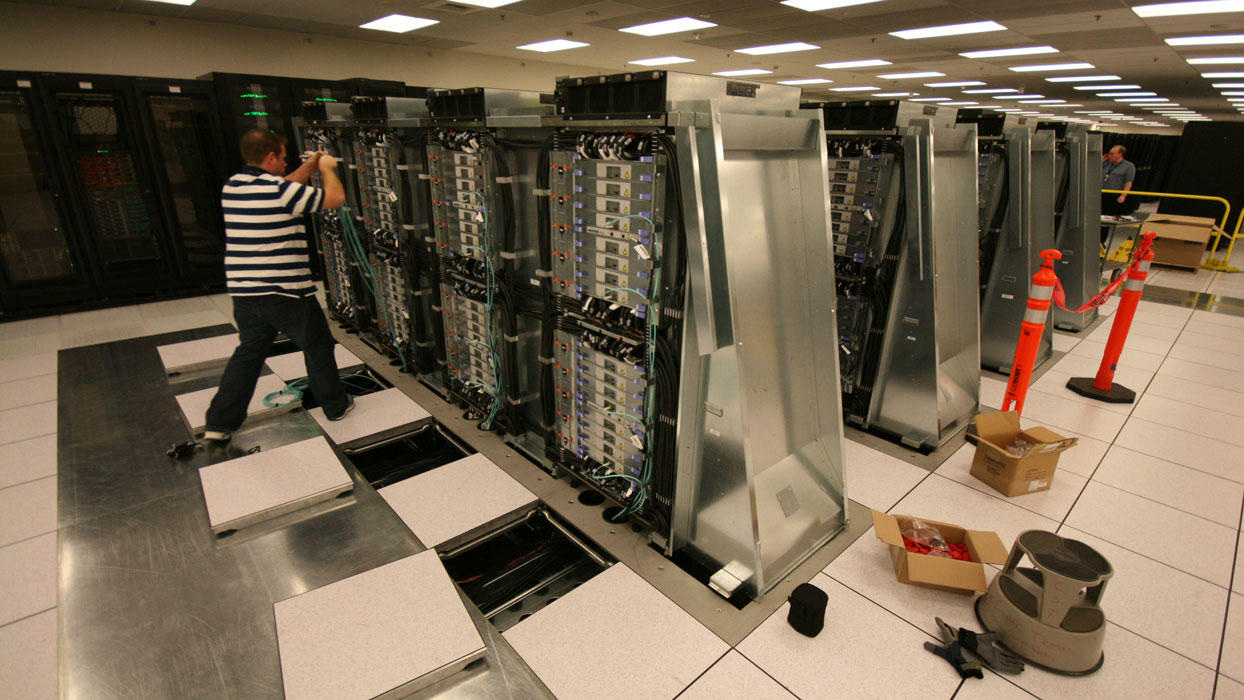

June 2012: Sequoia BlueGene/Q

In June 2012, the Sequoia BlueGene/Q became the first supercomputer to surpass 1.5 million cores. Despite having more than twice as many cores as the K Computer, it consumed considerably less power (7,890 kW).

The system consisted of 16-core PowerPC processors clocked at 1.6 GHz and was the first supercomputer to exceed 20 PFlops of theoretical peak performance. In practice, it achieved 16 PFlops under Linpack. The machine was installed at Lawrence Livermore National Laboratory, a US Department of Energy facility. It was also significant because it marked the return of the United States to the top of the TOP500 list.

November 2012: Cray XK7 (Titan)

In November 2012, IBM was beaten again, this time by the Cray XK7-based Titan. This system contained almost 300,000 Opteron 6274 processor cores and more than 260,000 NVIDIA K20x accelerator cores. It marked the first time AMD processors had powered the world's fastest supercomputer since Jaguar, which led the list in June 2010.

Its theoretical peak of roughly 27 PFlops exceeded the BlueGene/Q's 20 PFlops, but the gap was far narrower in practice: Titan was rated at 17.6 PFlops under Linpack, edging out the BlueGene/Q. It consumed roughly 8,209 kW of power and was installed at the US Department of Energy's Oak Ridge National Laboratory.

Another significant event in the November 2012 top 10 was the first appearance of a supercomputer built with Intel's Xeon Phi coprocessors.

June 2013: Tianhe-2 (MilkyWay-2)

In June 2013, China took back the lead with a supercomputer that broke several records. The Tianhe-2 exceeded 50 PFlops of theoretical computational power (54.9 PFlops). It also exceeded 33 PFlops of real-world performance under Linpack, nearly double what the second-place Cray XK7 was capable of.

To achieve this performance, Tianhe-2 uses approximately 3.12 million cores, breaking the record for the most cores in a supercomputer. It also proved to be the most power-hungry supercomputer, consuming in excess of 17,000 kW (17,808 kW).


Tianhe-2 is installed at the National University of Defense Technology. The system surprised observers by beginning operations two years ahead of schedule. Each node in the Tianhe-2 consists of two 12-core Xeon E5-2692 processors clocked at 2.2 GHz and three Xeon Phi 31S1P compute cards, which deliver the majority of the performance. It remained the world's fastest supercomputer until June 2016.
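As a rough cross-check of the core count, the node layout above can be multiplied out. This is only a sketch: the figure of 57 cores per Xeon Phi 31S1P card is an assumption that does not appear in the article, and the variable names are illustrative.

```python
# Per-node layout as described in the article.
XEONS_PER_NODE = 2
CORES_PER_XEON = 12
PHIS_PER_NODE = 3

# Assumption (not stated in the article): each Xeon Phi 31S1P has 57 cores.
CORES_PER_PHI = 57

# The article's "approximately 3.12 million cores".
TOTAL_CORES = 3_120_000

cpu_cores_per_node = XEONS_PER_NODE * CORES_PER_XEON
phi_cores_per_node = PHIS_PER_NODE * CORES_PER_PHI
cores_per_node = cpu_cores_per_node + phi_cores_per_node

# Node count implied by the total, under the assumption above.
implied_nodes = TOTAL_CORES // cores_per_node

# Share of cores that sit on the Xeon Phi cards (a core count, not a FLOPS share).
phi_core_share = phi_cores_per_node / cores_per_node

print(f"cores per node: {cores_per_node}")
print(f"implied node count: {implied_nodes}")
print(f"share of cores on Xeon Phi cards: {phi_core_share:.1%}")
```

Under that assumption, most of the cores in each node sit on the coprocessor cards, which is at least consistent with the statement that the Xeon Phi cards deliver the majority of the performance. Core share is only a proxy, since the two core types are not equally fast.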

June 2016: Sunway TaihuLight

In June 2016, China’s new Sunway TaihuLight overtook the Tianhe-2 as the world’s fastest supercomputer. Following the Tianhe-2’s development, the U.S. government restricted the sale of server-grade Intel processors to China in an attempt to give the U.S. time to build a new supercomputer capable of surpassing the Tianhe-2. As a result, China was unable to obtain significant numbers of Intel processors to upgrade the Tianhe-2 or build a successor, so the TaihuLight instead uses ShenWei RISC CPUs developed by China’s National Research Center of Parallel Computer Engineering and Technology (NRCPC).

The TaihuLight contains 40,960 ShenWei SW26010 processors, one in each node. Each SW26010 contains 260 cores, for a total of 10,649,600 cores. The supercomputer has a theoretical peak of approximately 125 petaflops and scores 93 petaflops under Linpack, making it roughly three times faster than the Tianhe-2. It is also far more efficient than the Tianhe-2: it consumes just 15.3 megawatts of power, a full 2.5 megawatts less, while performing roughly three times the work.
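The arithmetic behind these comparisons can be sketched in a few lines. All inputs are the figures quoted in the article (the Tianhe-2 Linpack score is given only as "exceeded 33 PFlops", so 33.0 is treated as a lower bound); the function names and dictionary layout are illustrative.

```python
# Figures as quoted in the article; Tianhe-2's Linpack score is a lower bound.
systems = {
    "Tianhe-2": {"linpack_pflops": 33.0, "peak_pflops": 54.9, "power_mw": 17.808},
    "Sunway TaihuLight": {"linpack_pflops": 93.0, "peak_pflops": 125.0, "power_mw": 15.3},
}


def total_cores(processors: int, cores_per_processor: int) -> int:
    """Total core count for a machine built from identical processors."""
    return processors * cores_per_processor


def gflops_per_watt(linpack_pflops: float, power_mw: float) -> float:
    """PFlops per MW equals GFlops per W, since both scale by 1e6."""
    return linpack_pflops / power_mw


def linpack_efficiency(linpack_pflops: float, peak_pflops: float) -> float:
    """Fraction of the theoretical peak that Linpack actually reaches."""
    return linpack_pflops / peak_pflops


taihulight_cores = total_cores(40_960, 260)
print(f"TaihuLight cores: {taihulight_cores:,}")

for name, s in systems.items():
    gfw = gflops_per_watt(s["linpack_pflops"], s["power_mw"])
    eff = linpack_efficiency(s["linpack_pflops"], s["peak_pflops"])
    print(f"{name}: {gfw:.2f} GFlops/W, {eff:.0%} of peak under Linpack")
```

Performance per watt is the more telling number here: on these figures the TaihuLight delivers more than three times the Linpack throughput of the Tianhe-2 for each watt consumed.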


-

adamovera Archived comments are found here: http://www.tomshardware.com/forum/id-2844227/years-supercomputer-evolution.html

-

bit_user SW26010 is basically a rip-off of the Cell architecture that everyone hated programming so much that it never had a successor.

It might get fast Linpack benchies, but I don't know how much else will run fast on it. I'd be surprised if they didn't have a whole team of programmers just to optimize Linpack for it.

I suspect Sunway TaihuLight was done mostly for bragging rights, as opposed to maximizing usable performance. On the bright side, I'm glad they put emphasis on power savings and efficiency.

-

bit_user BTW, nowhere in the article you linked does it support your claim that "the U.S. government restricted the sale of server-grade Intel processors in China in an attempt to give the U.S. time to build a new supercomputer capable of surpassing the Tianhe-2."

-

bit_user aldaia only downvotes because I'm right. If I'm wrong, prove it.

Look, we all know China will eventually dominate all things. I'm just saying this thing doesn't pwn quite as the top line numbers would suggest. It's a lot of progress, nonetheless.

BTW, China's progress would be more impressive, if it weren't tainted by the untold amounts of industrial espionage. That makes it seem like they can only get ahead by cheating, even though I don't believe that's true.

And if they want to avoid future embargoes by the US, EU, and others, I'd recommend against such things as massive DDoS attacks on sites like GitHub.

-

g00ey

I'd assume that what "everyone" hated was to have to maintain software (i.e. games) for very different architectures. Maintaining a game for PS3, Xbox 360 and PC, which all have their own architectures, apparently is more of a hurdle than if they were all Intel-based, or whatever architecture you have. At least the Xbox 360 had DirectX...

In the heyday of PowerPC, developers liked it better than the Intel architecture, particularly assembler developers. Today, it may not have that "fancy" stuff such as AVX, SSE etc., but it probably is quite capable for computations. Benchmarks should be able to give some indications...

-

bit_user

g00ey said: I'd assume that what "everyone" hated was to have to maintain software (i.e. games) for very different architectures.

I'm curious how you got from developers hating Cell programming, to game companies preferring not to support different platforms, and why they would then single out the PS3 for criticism, when this problem was hardly new. This requires several conceptual leaps from my original statement, as well as assuming I have even more ignorance of the matter than you've demonstrated. I could say I'm insulted, but really I'm just annoyed.

No, you're way off base. Cell was painful to program, because the real horsepower is in the vector cores (so-called SPEs), but they don't have random access to main memory. Instead, they have only a tiny bit of scratch pad RAM, and must DMA everything back and forth from main memory (or their neighbors). This means virtually all software for it must be effectively written from scratch and tuned to queue up work efficiently, so that the vector cores don't waste loads of time doing nothing while data is being copied around. Worse yet, many algorithms inherently depend on random access and perform poorly on such an architecture.

In terms of programming difficulty, the gap in complexity between it and multi-threaded programming is at least as big as that separating single-threaded and multi-threaded programming. And that assumes you're starting from a blank slate - not trying to port existing software to it. I think it's safe to say it's even harder than GPU programming, once you account for performance tuning.

Architectures like this are good at DSP, dense linear algebra, and not a whole lot else. The main reason they were able to make it work in a games console is because most game engines really aren't that different from each other and share common, underlying libraries. And as game engines and libraries became better tuned for it, the quality of PS3 games improved noticeably. But HPC is a different beast, which is probably why IBM never tried to follow it with any successors.
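The constraint described here can be mimicked with a toy model. This is a hedged sketch, not Cell code: `MainMemory`, `dma_get`, and the four-item `LOCAL_STORE_ITEMS` capacity are hypothetical names and values invented for illustration, and the "DMA" is just a counted list copy.

```python
from typing import List, Sequence

LOCAL_STORE_ITEMS = 4  # hypothetical scratchpad capacity, tiny on purpose


class MainMemory:
    """Main memory that a worker core may only read through explicit block copies."""

    def __init__(self, data: Sequence[float]) -> None:
        self._data = list(data)
        self.transfers = 0  # number of simulated DMA operations issued

    def dma_get(self, start: int, count: int) -> List[float]:
        """Copy a contiguous block into the caller's local store."""
        if count > LOCAL_STORE_ITEMS:
            raise ValueError("block does not fit in the local store")
        self.transfers += 1
        return self._data[start:start + count]


def streamed_sum_of_squares(mem: MainMemory, n: int) -> float:
    """Dense, sequential access: each transfer fills the whole local store."""
    total = 0.0
    for start in range(0, n, LOCAL_STORE_ITEMS):
        count = min(LOCAL_STORE_ITEMS, n - start)
        local = mem.dma_get(start, count)
        total += sum(x * x for x in local)
    return total


def gathered_sum(mem: MainMemory, indices: Sequence[int]) -> float:
    """Scattered access: a naive version needs one transfer per element."""
    total = 0.0
    for i in indices:
        total += mem.dma_get(i, 1)[0]
    return total


data = [float(i) for i in range(16)]
mem = MainMemory(data)

dense = streamed_sum_of_squares(mem, len(data))
dense_transfers = mem.transfers

mem.transfers = 0
sparse = gathered_sum(mem, [15, 0, 7, 3, 12, 9, 1, 14])
sparse_transfers = mem.transfers

print(f"dense: 16 elements in {dense_transfers} transfers")
print(f"scattered: 8 elements in {sparse_transfers} transfers")
```

The dense loop amortizes each transfer over a full block, which is why DSP and dense linear algebra suit this kind of design. The scattered loop pays for a transfer on every element, and real code would need to be restructured to avoid that.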

g00ey said: In the heyday of PowerPC, developers liked it better than the Intel architecture, particularly assembler developers. Today, it may not have that "fancy" stuff such as AVX, SSE etc., but it probably is quite capable for computations. Benchmarks should be able to give some indications...

I'm not even sure what you're talking about, but I'd just point out that both Cell and the Xbox 360's CPUs were derived from PowerPC. And PPC did have AltiVec, which had some advantages over MMX & SSE.

-

alidan

Ah, the Cell... From listening to devs talk about it, and from an MIT lecture, the main problems with it were these:

1) Sony refused to give out proper documentation. They wanted their games to get progressively better as the console aged, performance-wise and graphically, so what better way than to kneecap devs?

2) From what I understand about the architecture, and I'm not going to say this right, you had one core devoted to the OS/DRM, then you had the rest devoted to games, with one core disabled on each chip to keep yields up (something from the early days of the PS3). Then you had to program the games while thinking about which core the crap executed on. All in all, a nightmare to work with.

If a game was made PS3 first, it would port fairly well across consoles, but most games were made Xbox first, and porting to PS3 was a nightmare.

-

bit_user

alidan said: 2) From what I understand about the architecture, and I'm not going to say this right, you had one core devoted to the OS/DRM, then you had the rest devoted to games, with one core disabled on each chip to keep yields up (something from the early days of the PS3).

It's slightly annoying that they took an 8 + 1 core CPU and turned it into a 6 + 1 core CPU, but I doubt anyone was too bothered about that.

I think PS4 launched with only 4 of the 8 cores available for games (or maybe it was 5/8?). Recently, they unlocked one more. I wonder how many the PS4 Neo will allow.

alidan said: Then you had to program the games while thinking about which core the crap executed on. All in all, a nightmare to work with. If a game was made PS3 first, it would port fairly well across consoles, but most games were made Xbox first, and porting to PS3 was a nightmare.

This is part of what I was saying. Again, the reason why it mattered which core was that the memory model was so restrictive. Each SPE plays in its own sandbox, and has to schedule any copies to/from other cores or main memory. Most multi-core CPUs don't work this way, as it's too much burden to place on software, with the biggest problem being that it prevents one from using any libraries that weren't written to work this way.

Now, if you write your software that way, you can port it to Xbox 360/PC/etc. by simply taking the code that'd run on the SPEs and putting it in a normal userspace thread. The DMA operations can be replaced with memcpy calls (and, with a bit more care, you could even avoid some copying).

Putting it in more abstract terms, the Cell strictly enforces a high degree of data locality. Taking code written under that constraint and porting it to a less constrained architecture is easy. Going the other way is hard.
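The easy direction of that port can be sketched as follows. This is an illustration under stated assumptions rather than real console code: `kernel`, `worker`, and `run` are hypothetical names, the list slices stand in for the memcpy calls mentioned above, and Python threads show only the structure (they will not speed up CPU-bound work).

```python
from concurrent.futures import ThreadPoolExecutor
from typing import List


def kernel(local_in: List[float]) -> List[float]:
    """Touches private data only, as code written for a local store must."""
    return [2.0 * x + 1.0 for x in local_in]


def worker(shared_in: List[float], shared_out: List[float], start: int, stop: int) -> None:
    local_in = shared_in[start:stop]    # was a DMA "get"; now a plain copy
    local_out = kernel(local_in)        # unchanged compute code
    shared_out[start:stop] = local_out  # was a DMA "put"; now a plain copy


def run(shared_in: List[float], workers: int = 4) -> List[float]:
    """Split the input into disjoint chunks and process each in an ordinary thread."""
    n = len(shared_in)
    shared_out = [0.0] * n
    chunk = max(1, (n + workers - 1) // workers)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        futures = [
            pool.submit(worker, shared_in, shared_out, start, min(start + chunk, n))
            for start in range(0, n, chunk)
        ]
        for future in futures:
            future.result()  # re-raise any exception from a worker
    return shared_out


data = [float(i) for i in range(10)]
result = run(data, workers=3)
print(result)
```

Because each worker only ever reads and writes its own chunk, nothing in `kernel` had to change. Going the other way would mean first finding every place where ordinary code reaches into shared memory.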

-

alidan

As for turning cores off, that's largely to do with yields. Later on in the console's cycle they unlocked cores, so some systems that didn't have bad chips got slightly better performance in some games than other consoles. At least on PS3, that meant all of nothing, as it had a powerful CPU but the GPU was bottlenecking it. That's the opposite of the 360, where the CPU was the bottleneck, at least if I remember the systems right.