SSD Deathmatch: Crucial's M500 Vs. Samsung's 840 EVO

Micron's consumer products division, Crucial, wasn't the first brand to introduce a 1 TB SSD. But it was the first to sell one for less than a fortune, and it sports some snazzy new features to boot. We got our hands on the entire line-up to test.

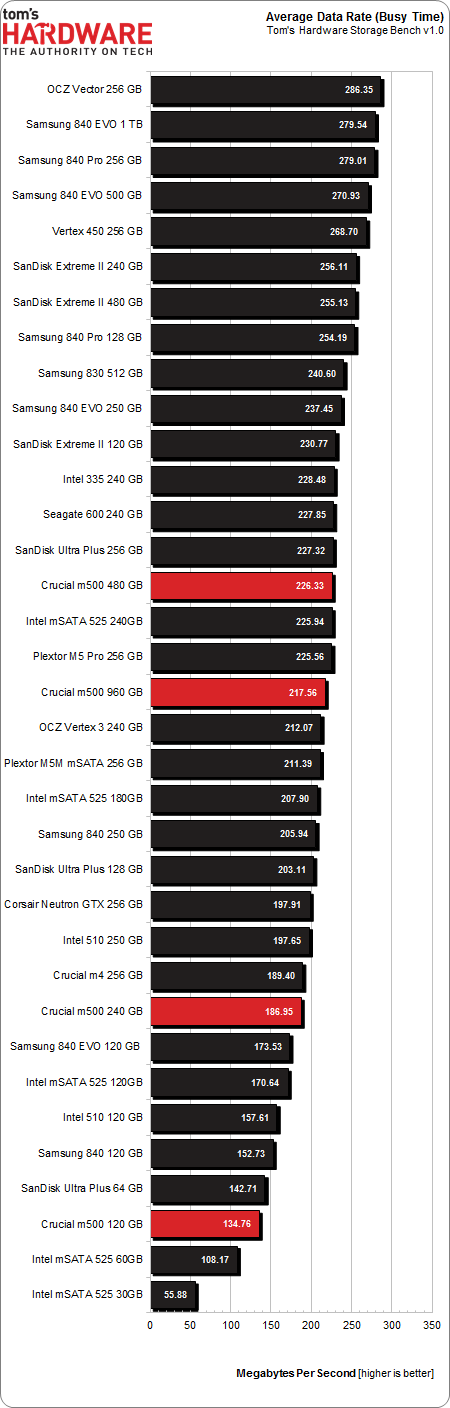

Results: Tom's Storage Bench v1.0

Storage Bench v1.0 (Background Info)

Our Storage Bench incorporates all of the I/O from a trace recorded over two weeks. The process of replaying this sequence to capture performance gives us a bunch of numbers that aren't really intuitive at first glance. Most idle time gets expunged, leaving only the time that each benchmarked drive was actually busy working on host commands. So, by dividing the amount of data exchanged during the trace by that busy time, we arrive at an average data rate (in MB/s) metric we can use to compare drives.
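As a rough illustration of how that figure falls out, here is a minimal Python sketch. The function name, the decimal-megabyte convention, and the sample numbers are assumptions chosen for illustration; they are not values taken from our trace.

```python
def average_data_rate_mb_s(bytes_transferred: int, busy_time_s: float) -> float:
    """Average data rate in MB/s: data moved divided by drive busy time.

    Assumes 1 MB = 1,000,000 bytes (an assumption, not stated in the article).
    """
    if busy_time_s <= 0:
        raise ValueError("busy time must be positive")
    return bytes_transferred / 1_000_000 / busy_time_s


# Hypothetical example: 200 GB exchanged while the drive was busy for 1,000 seconds.
rate = average_data_rate_mb_s(200_000_000_000, 1000.0)
print(f"{rate:.1f} MB/s")
```

Because idle time is excluded from the denominator, a drive that finishes the same work with less busy time earns a higher figure, even though the amount of data is identical.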

It's not quite a perfect system. The original trace captures the TRIM command in transit, but since the trace is played on a drive without a file system, TRIM wouldn't work even if it were sent during the trace replay (which, sadly, it isn't). Still, trace testing is a great way to capture periods of actual storage activity, a great companion to synthetic testing like Iometer.

Incompressible Data and Storage Bench v1.0

Also worth noting is the fact that our trace testing pushes incompressible data through the system's buffers to the drive getting benchmarked. So, when the trace replay plays back write activity, it's writing largely incompressible data. If we run our storage bench on a SandForce-based SSD, we can monitor the SMART attributes for a bit more insight.

| Mushkin Chronos Deluxe 120 GB SMART Attributes | RAW Value Increase |
|---|---|
| #242 Host Reads (in GB) | 84 GB |
| #241 Host Writes (in GB) | 142 GB |
| #233 Compressed NAND Writes (in GB) | 149 GB |

Host reads are greatly outstripped by host writes to be sure. That's all baked into the trace. But with SandForce's inline deduplication/compression, you'd expect that the amount of information written to flash would be less than the host writes (unless the data is mostly incompressible, of course). For every 1 GB the host asked to be written, Mushkin's drive is forced to write 1.05 GB.
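The 1.05 figure is simply NAND writes divided by host writes, a ratio commonly called write amplification. A minimal sketch of that arithmetic, using the two SMART deltas from the table (the function name is our own, chosen for illustration):

```python
def nand_to_host_write_ratio(nand_writes_gb: float, host_writes_gb: float) -> float:
    """GB written to flash for every 1 GB the host asked to write."""
    if host_writes_gb <= 0:
        raise ValueError("host writes must be positive")
    return nand_writes_gb / host_writes_gb


# SMART #233 (149 GB to NAND) over SMART #241 (142 GB from the host).
ratio = nand_to_host_write_ratio(149, 142)
print(f"{ratio:.2f}")  # 1.05
```

A ratio below 1.0 would indicate that the controller's compression was shrinking the data before it reached the flash; a value slightly above 1.0, as here, is consistent with largely incompressible data.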

If our trace replay were just writing easy-to-compress zeros out of the buffer, we'd see writes to NAND as a fraction of host writes. Pushing incompressible data instead puts the tested drives on a more equal footing, regardless of the controller's ability to compress data on the fly.


Average Data Rate

The Storage Bench trace generates more than 140 GB worth of writes during testing. Obviously, this tends to penalize drives smaller than 180 GB and reward those with more than 256 GB of capacity.

The M500s handle their business in this metric, but hardly turn in spectacular performances. The 120 GB M500 gets dinged right out of the gate because of its capacity, though not as severely as, say, the 30 GB Intel SSD 525. It's even slower than the three-bit-per-cell-based Samsung 840 120 GB.

All three larger M500s mix it up in the middle of the pack. For instance, the 240 GB drive reports back that it's 2.55 MB/s slower than the previous-gen m4. The 960 GB model is next-highest, just behind Plextor's 256 GB M5 Pro. We really want to know why the M500s fall in line the way they do, though.

Busy time accumulates when the SSD performs a task initiated by the host. So, when the operating system asks a drive to read or write, measured busy time increases. Take the accumulated busy time and the amount of data read/written by the trace, and you get busy time expressed as a far easier-to-understand MB/s figure. Unfortunately, busy time and the MB/s number generated with it aren't really good at measuring higher queue depth performance.

The corner case testing tells us that the M500s really stand apart from each other as queue depth increases. However, all of these SSDs are basically the same in the background I/O generated by Windows, your Web browser, or most other mainstream applications. It's only when we're presented with lots of reads and writes in a short time that the mid-range and high-end drives stand apart from each other. To test that, we need another metric.


Someone Somewhere: I think you mixed up the axis on the read vs write delay graph. It doesn't agree with the individual ones after, or the writeup. -

Someone Somewhere: Even 3bpc SSDs should last you a good ten years...

The SSD 840 is rated for 1000 P/E cycles, though it's been seen doing more like ~3000. At 10GB/day, a 240GB would last for 24,000 days, or about 766 years, and that's using the 1K figure.

You're free to waste money if you want, but SLC now has little place outside write-heavy DB storage.

EDIT: Screwed up by an order of magnitude. -

cryan (quoting post 11306005): "I think you mixed up the axis on the read vs write delay graph. It doesn't agree with the individual ones after, or the writeup."

You are totally correct! You win a gold star, because I didn't even notice. Thanks for catching it, and it should be fixed now.

Regards,

Christopher Ryan

-

cryan (quoting post 11306034): "I would only buy an SSD that uses SLC memory. I don't want to buy a new drive every year or so."

Not only are consumer workloads completely gentle on SSDs, but modern controllers are super awesome at extending NAND longevity. I was able to burn through 3000+ P/E cycles on the Samsung 840 last year, and it's only rated at 1,000 P/E cycles or so. You'd have to put almost 1 TB a day on a 120 GB Samsung 840 TLC to kill it in a year, assuming it didn't die from something else first.

Regards,

Christopher Ryan

-

Someone Somewhere: I'd like to see some sources on that - for starters, I don't think the 840 has been out for a year, and it was the first to commercialize 3bpc NAND.

You may be thinking of the controller failures some of the Sandforce drives had, which are completely unrelated to the type of NAND used. -

mironso: Well, I must agree with Someone Somewhere. I would also like to see sources for this statement: "Yes, in theory they last 10 years, in practise they last a year or so."

I would like to see whether TH can take an SSD, write 10 GB/day to it, and see how long it keeps working.

After reading this article, I think that Crucial's M500 hit the jackpot. We'll see Samsung's response. And that's very good for the end consumer. -

edlivian: It was sad that they did not include the Samsung 830 128 GB and Crucial m4 128 GB in the results; those were the most popular SSDs last year. -

Someone Somewhere: You can also find tens of thousands of people not complaining about their SSD failing. It's called selection bias.

Show me a report with a reasonable sample size (more than a couple of dozen drives) that says they have >50% annual failures.

A couple of years ago Tom's posted this: http://www.tomshardware.com/reviews/ssd-reliability-failure-rate,2923.html

The majority of failures were firmware-caused by early Sandforce drives. That's gone now.

EDIT: Missed your post. First off, that's a perfect example of self-selection. Secondly, those who buy multiple SSDs will appear to have n times the actual failure rate, because if any fail they all appear to fail. Thirdly, that has nothing to do with whether or not it is a 1bpc or 3bpc SSD - that's what you started off with.

This doesn't fix the problem of audience self-selection

-

Someone Somewhere: You were however trying to stop other people buying them...

Sounds a bit like a sore loser argument, unfortunately.

SSDs aren't perfect, but they generally do live long enough to not be a problem. Most of the failures have been overcome by now too.

Just realised there's an error in my original post - off by a factor of ten. Should have been 66 years. -

warmon6 (quoting post 11306841): "I am not talking about Samsung SSD-s, I am talking about SSDs in general. And I am not going to provide any sources because SSDs fail all the time after a year or so. That is the reality. You can find, on the internet, people complaining about their SSD failing. There are a lot of them...

Also, SLC based SSD-s are usually "enterprise", so they are designed for reliability and not performance, and they don't use some bollocks, overclocked to the point of failure, controllers. And have better optimised firmware..."

Tell that to all the people on this forum still running the Intel X25-M that launched all the way back in 2008, and my Samsung 830 that's been working just fine for over a year...

See, what you're paying attention to is the loudest group of SSD owners: the owners that have failed SSDs.

It's the classic "if someone has a problem, they're going to be the one that you hear, and the quiet group isn't having the problem" issue.

Those that don't have issues (such as myself) don't mention our SSDs and are probably complaining about something else that has failed.