Nvidia Claims Arm Grace CPU Superchip 2X Faster, 2.3X More Efficient Than Intel Ice Lake

Nvidia's Arm chips get the benchmark treatment

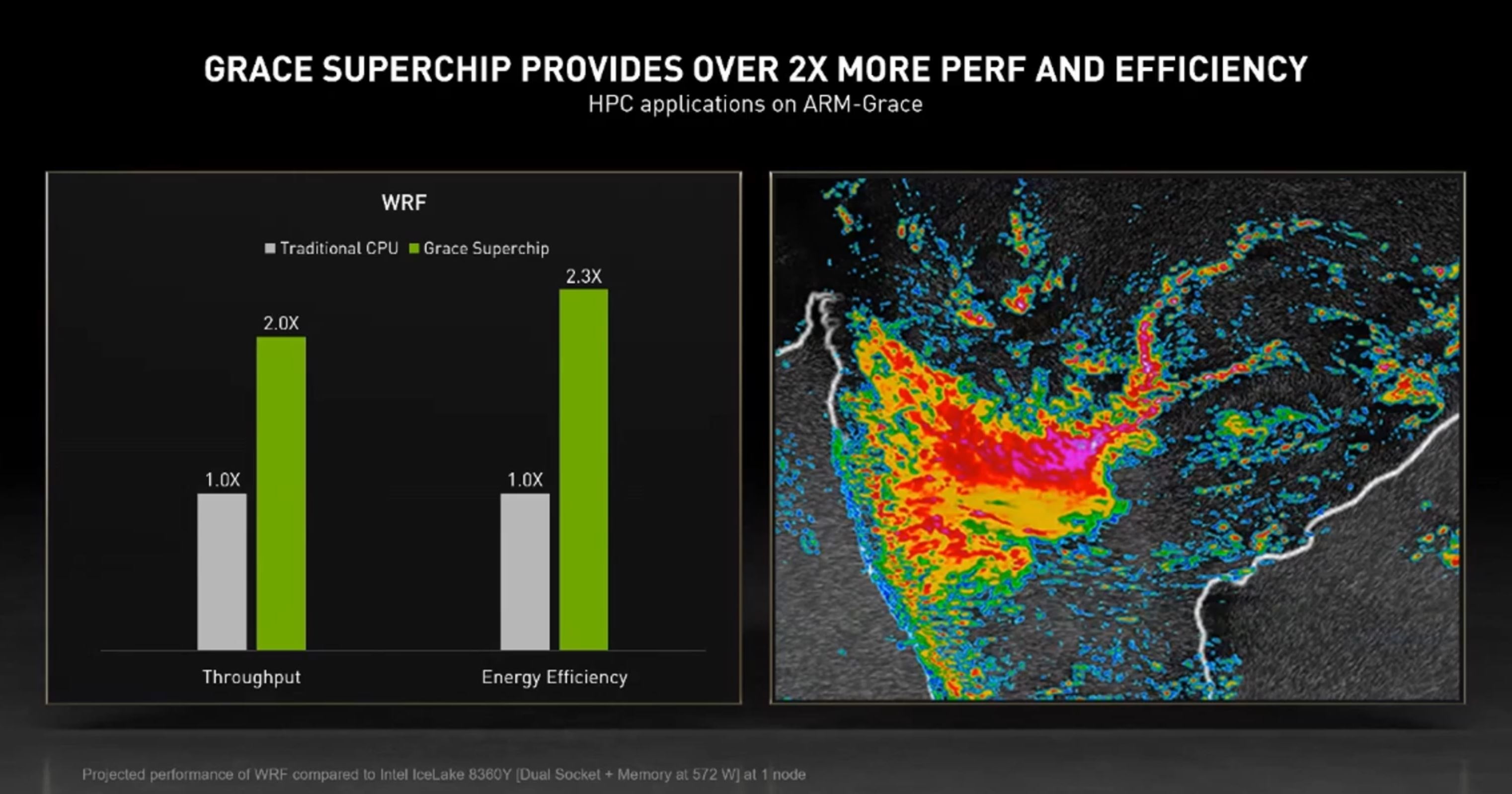

Nvidia unveiled its new 144-core Grace CPU Superchip, its first CPU-only Arm chip designed for the data center, back at GTC. Nvidia shared a benchmark against AMD's EPYC to claim a 1.5X lead, but that wasn't very useful because it was against a previous-gen model. However, we found a benchmark of Grace versus Intel's Ice Lake buried in a GTC presentation from Nvidia's vice president of its Accelerated Computing business unit, Ian Buck. This benchmark claims Grace is 2X faster and 2.3X more energy-efficient than Intel's current-gen Ice Lake in a Weather Research and Forecasting (WRF) model commonly used in HPC.

Nvidia's first benchmark claimed that Grace is 1.5X faster in the SPECrate_2017 benchmark than two previous-gen 64-core EPYC Rome 7742 processors and that it will deliver twice the power efficiency of today's server chips when it arrives in early 2023. However, those benchmarks compare against previous-gen chips: the Rome chips will be four years old when Grace arrives next year, and AMD already has its faster EPYC Milan shipping. Given the comparison to Rome, we can expect Nvidia's Grace to be on par with the newer Milan in both performance and performance-per-watt. However, even that comparison doesn't really matter; AMD's EPYC Genoa will be available in 2023, and it will be faster still.

That makes Nvidia's comparison against Intel's current-gen Ice Lake a bit more interesting. Even though Intel will have its Sapphire Rapids available by 2023, the comparison below at least gets us a generation closer. (Beware: this is a vendor-provided benchmark result based on a simulation of the Grace CPU, so take Nvidia's claims with a grain of salt.)

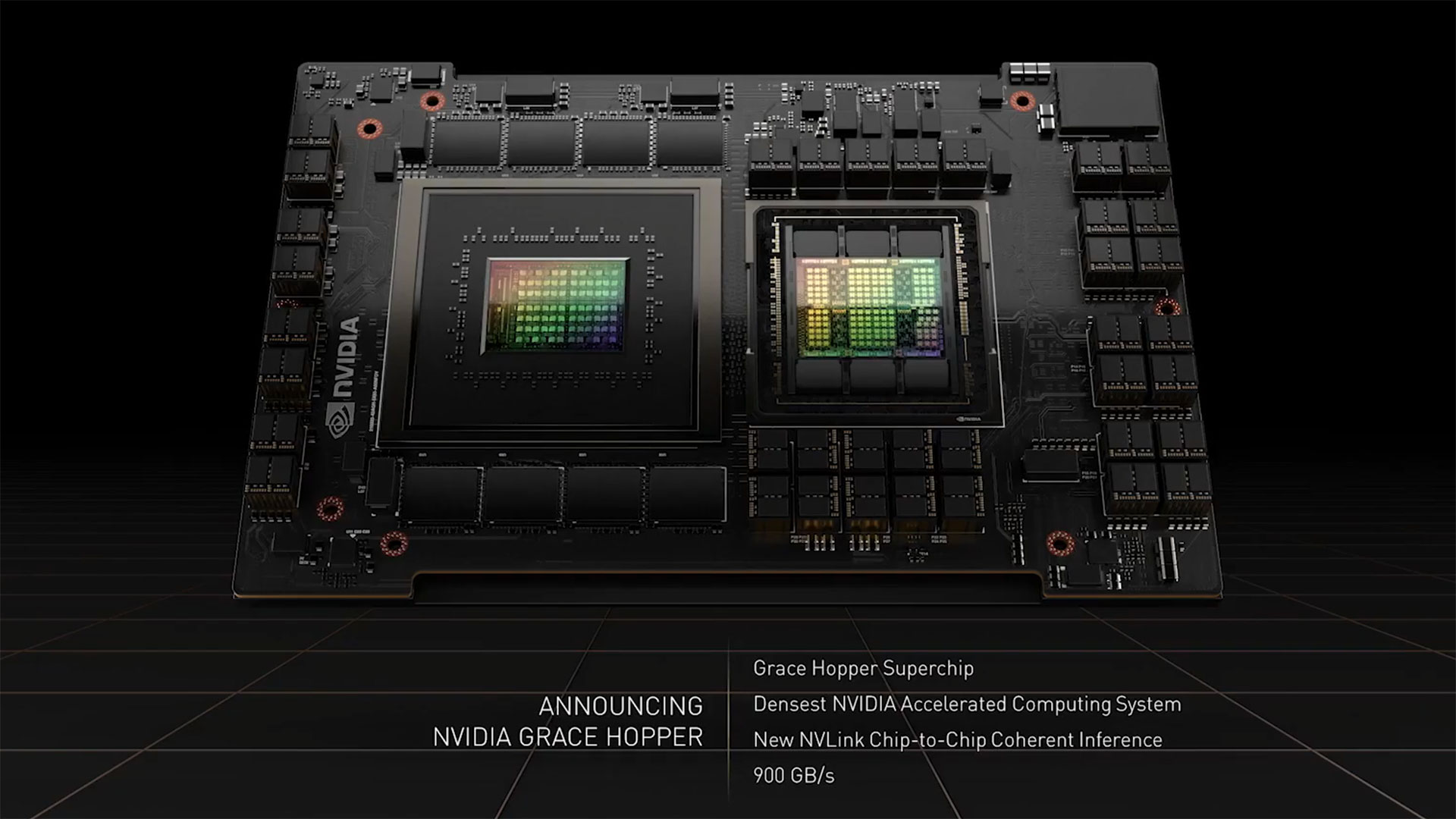

As a reminder, Nvidia's Grace CPU Superchip is an Arm v9 Neoverse (N2 Perseus) processor with 144 cores spread across two dies joined by Nvidia's newly branded NVLink-C2C interconnect, which delivers 900 GB/s of throughput and memory coherency. In addition, the chip uses 1TB of LPDDR5X ECC memory that delivers up to 1TB/s of memory bandwidth, twice that of other data center processors that will support DDR5 memory.

And make no mistake, that enhanced memory throughput plays right to the strengths of the Grace CPU Superchip in the Weather Research and Forecasting (WRF) model above. Nvidia says that its simulations of the 144-core Grace chip show that it will be 2X faster and provide 2.3X the power efficiency of two 36-core 72-thread Intel 'Ice Lake' Xeon Platinum 8360Y processors in the WRF simulation. That means we're seeing 144 Arm threads (each on a physical core), facing off with 144 x86 threads (two threads per physical core).
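The two headline ratios also imply figures Nvidia did not state directly. The following is a minimal sketch, assuming "2.3X the power efficiency" means performance per watt on the same WRF run as the 2X speedup; the function names are illustrative and do not come from Nvidia's material.

```python
def implied_power_ratio(speedup: float, efficiency_gain: float) -> float:
    """Relative power draw implied by a speedup and a perf-per-watt gain.

    efficiency = performance / power, so power = performance / efficiency.
    """
    return speedup / efficiency_gain


def per_unit_speedup(speedup: float, units_new: int, units_old: int) -> float:
    """Speedup normalized by the number of cores or threads on each side."""
    return speedup * units_old / units_new


if __name__ == "__main__":
    speedup = 2.0      # Nvidia's claimed WRF speedup (simulated)
    efficiency = 2.3   # Nvidia's claimed efficiency gain (assumed perf/watt)

    grace_cores = 144        # one thread per physical core
    xeon_cores = 2 * 36      # two Xeon Platinum 8360Y
    xeon_threads = 2 * 72    # two threads per physical core

    print(f"implied power ratio: {implied_power_ratio(speedup, efficiency):.2f}")
    print(f"per-thread speedup:  {per_unit_speedup(speedup, grace_cores, xeon_threads):.2f}")
    print(f"per-core speedup:    {per_unit_speedup(speedup, grace_cores, xeon_cores):.2f}")
```

Under those assumptions, the claim works out to Grace drawing roughly 0.87 times the power of the dual-Xeon setup, with a 2X advantage per thread but roughly parity per physical core in this one workload.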

The various permutations of WRF are real-world workloads commonly used for benchmarking, and many of the modules have been ported over for GPU acceleration with CUDA. We followed up with Nvidia about this specific benchmark, and the company says this module hasn't yet been ported over to GPUs, so it is CPU-centric. Additionally, it is very sensitive to memory bandwidth, giving Grace a leg up in both performance and efficiency. Nvidia's estimates are "based on standard NCAR WRF, version 3.9.1.1 ported to Arm, for the IB4 model (a 4km regional forecast of the Iberian peninsula)."

Grace's tremendous memory throughput will pay dividends in performance and also in energy efficiency because the increased throughput reduces the number of inactive cycles by keeping the greedy cores fed with data. The chips also use lower-power LPDDR5X compared to Ice Lake's DDR4.
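To put the bandwidth gap in rough numbers, here is a back-of-the-envelope sketch of theoretical peak memory bandwidth. The Ice Lake configuration (eight channels of DDR4-3200 per socket) is an assumption that does not come from Nvidia's slide, and the helper name is hypothetical; sustained bandwidth in practice is lower than these peak figures on both sides.

```python
def peak_bandwidth_gbs(channels: int, mega_transfers_per_s: int,
                       bytes_per_transfer: int = 8) -> float:
    """Theoretical peak bandwidth in GB/s for one socket.

    Each 64-bit DDR channel moves 8 bytes per transfer.
    """
    return channels * mega_transfers_per_s * bytes_per_transfer / 1000


if __name__ == "__main__":
    # Assumption (not from the article): 8 channels of DDR4-3200 per socket.
    xeon_pair = 2 * peak_bandwidth_gbs(channels=8, mega_transfers_per_s=3200)
    grace = 1000.0  # "up to 1TB/s", per the article

    print(f"two Ice Lake sockets: {xeon_pair:.1f} GB/s peak")
    print(f"Grace / dual Xeon:    {grace / xeon_pair:.2f}x")
```

Under these assumptions, the peak-bandwidth ratio comes out to about 2.4X, somewhat larger than the claimed 2X speedup, which fits a workload that is sensitive to memory bandwidth without being entirely bound by it.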

However, Grace likely won't have as much of an advantage against Intel's upcoming Sapphire Rapids — these chips support DDR5 memory and also have variants with HBM memory that could help counter Grace's strengths in some memory-bandwidth-starved applications. AMD also has its Milan-X with 3D-stacked L3 cache (3D V-Cache) that benefits some workloads, and we expect the company will make similar SKUs for the EPYC Genoa family.

It's telling that Nvidia used benchmarks showing a 1.5X gain over AMD's prior-gen EPYC Rome for its headline comparisons at GTC and in its press releases rather than its larger 2X gain over Intel's current-gen Ice Lake, which it buried in a GTC presentation. Given that AMD is the leader in the data center, perhaps Nvidia felt that even managing to beat AMD's previous-gen chips was more impressive than taking down Intel's current-gen finest.

Either way, Nvidia still has a use for Intel's silicon. For example, Nvidia's Jensen Huang told us during a recent roundtable that "[...] If not for Intel's CPUs in our Omniverse computers that are coming up, we wouldn't be able to do the digital twin simulations that rely so deeply on the single-threaded performance that they're really good at."

In fact, those very Nvidia OVX servers use two of Intel's 32-core Ice Lake 8362 processors apiece, and they're obviously selected because they are more agile in single-threaded work than AMD's EPYC, at least for this specific use case. Interestingly, Nvidia has yet to share any projections of Grace's prowess in single-threaded work, instead preferring to show off its sheer threaded heft for now.

There will certainly be interesting times ahead as a new and very serious contender enters the data center CPU race, this time with a specialized Arm design that's tightly integrated with what is fast becoming the most important number cruncher of all in the data center: the GPU.

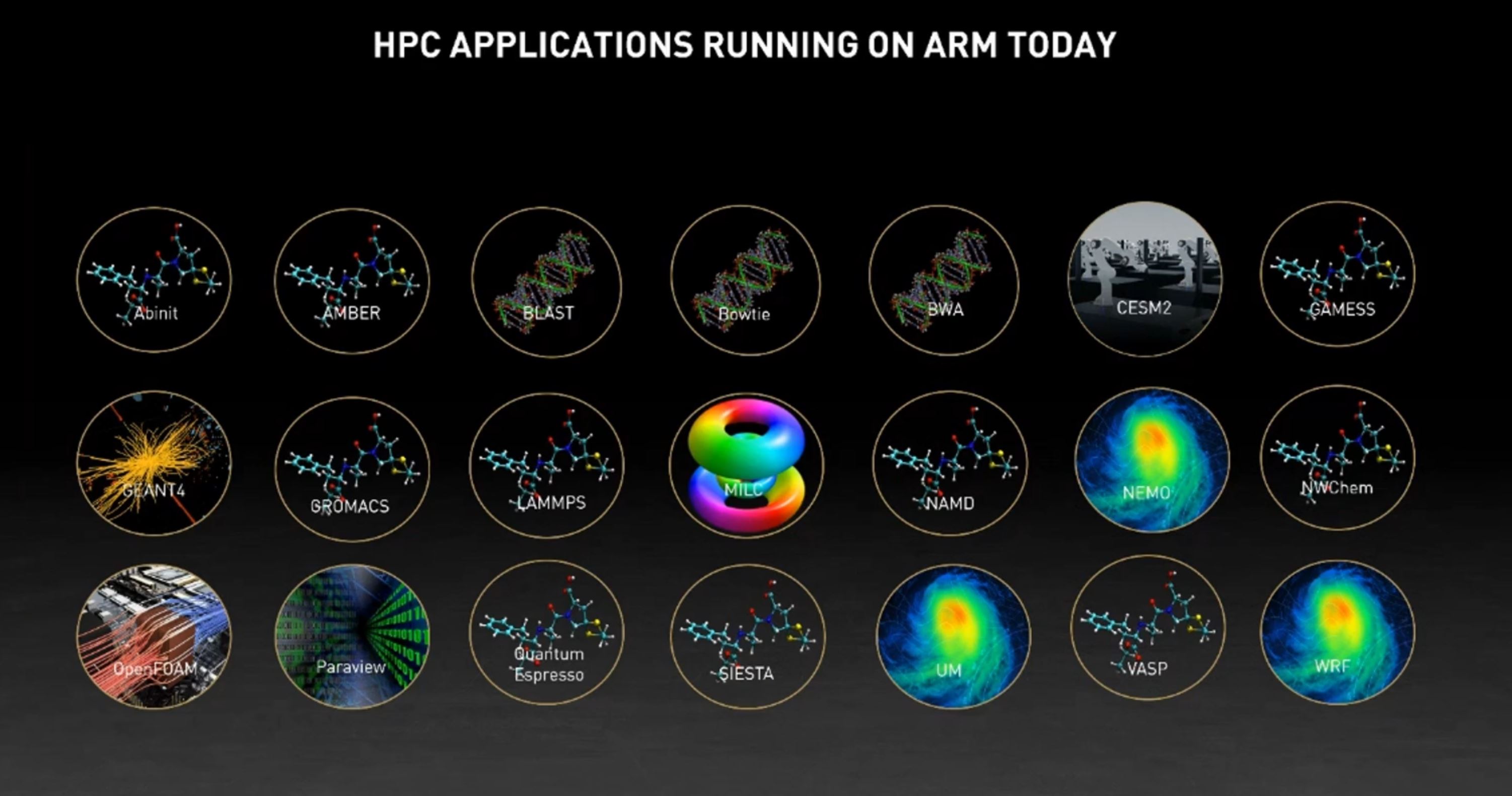

Overall, Nvidia claims the Grace CPU Superchip will be the fastest processor on the market when it ships in early 2023 for a wide range of applications, like hyperscale computing, data analytics, and scientific computing. Regardless of how well Nvidia's Grace CPU Superchip performs relative to the other data center chips in 2023, there will certainly be plenty of choice in the years ahead, specifically for the myriad of HPC workloads shown below that already run on Arm. Given the recent explosion of new Arm-based chips in the data center, we expect this list to grow quickly.


Paul Alcorn is the Editor-in-Chief for Tom's Hardware US. He also writes news and reviews on CPUs, storage, and enterprise hardware.


ezst036: As an ARM chip, I'm more interested in how Grace stacks up against another ARM chip.

Apple's M1. (Max/Ultra/etc) Looking forward to benchmarks some day.

haribos: ARM chips from Nvidia are going to surpass x86 CPUs from Intel and AMD in data centers like how Apple Silicon has surpassed them in consumer desktops and laptops.

jkflipflop98:

haribos said: ARM chips from Nvidia are going to surpass x86 CPUs from Intel and AMD in data centers like how Apple Silicon has surpassed them in consumer desktops and laptops.

Except that's only true if you read the headlines and nothing else. Apple hasn't surpassed Intel at anything. Just like ARM hasn't surpassed x86 at anything.

Liquidrider:

haribos said: ARM chips from Nvidia are going to surpass x86 CPUs from Intel and AMD in data centers like how Apple Silicon has surpassed them in consumer desktops and laptops.

If you believe that then you are ignoring the industry standards. AMD's Epyc line-up is on a whole new playing field, which is probably why Nvidia isn't comparing ARM to them. While Industry does change Nvidia has a long road ahead. Switching from x86 to ARM isn't like turning on a switch.

And I would love to hear your explanation of how Apple surpassed Intel & AMD in the consumer market? Surpass in what exactly? Windows still dominates the consumer market by a wide margin.

spongiemaster:

Liquidrider said: If you believe that then you are ignoring the industry standards. AMD's Epyc line-up is on a whole new playing field, which is probably why Nvidia isn't comparing ARM to them.

Except Nvidia did compare their chip to Epyc during the original announcement. The ones they currently use in their DGX systems. Seems unlikely Nvidia would replace the Epyc CPU's in their current system with slower ARM CPU's.

saltweaver:

"The various permutations of WRF are real-world workloads commonly used for benchmarking, and many of the modules have been ported over for GPU acceleration with CUDA."

I'm very interested to see if Sapphire Rapids will use the same GPU acceleration with the new Intel OneAPI and how this will compare with CUDA.

jp7189:

spongiemaster said: Except Nvidia did compare their chip to Epyc during the original announcement. The ones they currently use in their DGX systems. Seems unlikely Nvidia would replace the Epyc CPU's in their current system with slower ARM CPU's.

Of course they would. More Nvidia components equals more profit to them.

jp7189:

haribos said: ARM chips from Nvidia are going to surpass x86 CPUs from Intel and AMD in data centers like how Apple Silicon has surpassed them in consumer desktops and laptops.

Chuckle even their best simulations are showing a loss in reality. 144 Grace cores vs 72 <real> intel cores. 2x the cores = 2x the performance. BUT intel has a much slower socket to socket interface and much slower RAM. That tells us that the <future> fundamental Grace core is slower than existing Intel cores.

JamesJones44:

Liquidrider said: If you believe that then you are ignoring the industry standards. AMD's Epyc line-up is on a whole new playing field, which is probably why Nvidia isn't comparing ARM to them. While Industry does change Nvidia has a long road ahead. Switching from x86 to ARM isn't like turning on a switch.

And I would love to hear your explanation of how Apple surpassed Intel & AMD in the consumer market? Surpass in what exactly? Windows still dominates the consumer market by a wide margin.

What "Industrial standard" are you speaking of? In the server/backend/cloud/DataCenter world ARM has been supported for a long time, almost all software developed today for the server land runs on both x86 and AArch. If you question it, go ahead and take a look at Docker Hub and see how many containers of top software run on both.

x86 in server land is in real trouble due to the performance per watt issue. Every watt AWS, G-Cloud, Azure saves is money in the bank. Desktop land is a different story, but in server land there is no "standard". Just look at how much market share it has lost to ARM already there since 2020. Heck we even have customers with private DataCenter running ARM now, something that 3 years ago would have been crazy to think of. I'm sorry, but the days of required architecture are over, it's either best performance or best performance per watt and that is all that matters now.

Kamen Rider Blade:

JamesJones44 said: x86 in server land is in real trouble due to the performance per watt issue. Every watt AWS, G-Cloud, Azure saves is money in the bank. Desktop land is a different story, but in server land there is no "standard". Just look at how much market share it has lost to ARM already there since 2020. Heck we even have customers with private DataCenter running ARM now, something that 3 years ago would have been crazy to think of. I'm sorry, but the days of required architecture are over, it's either best performance or best performance per watt and that is all that matters now.

Server Revenue says otherwise. Team x86 is doing fine and the gap is growing between Team x86 & Non-x86

Non-x86 Server Revenue is on a Downward Trend:

IBM eats up a sizable portion of the Non-x86 Server Revenue.