Robotaxis Use Nvidia Data Centers to Constantly Improve

A reminder that Nvidia is bigger than Ampere.

Tech enthusiasts tend to think of Nvidia in terms of PC components like the RTX 3000 line and gaming services like GeForce Now, but these are far from the company's only moneymakers, especially during the pandemic. There's also Nvidia's enterprise-level hardware, specifically its data center business. Nvidia's data centers have been at the center of its story this year as the pandemic has pushed businesses to move more of their operations into the cloud. But according to a blog post the company published earlier today, its data centers might also soon be bringing their calculations to the streets.

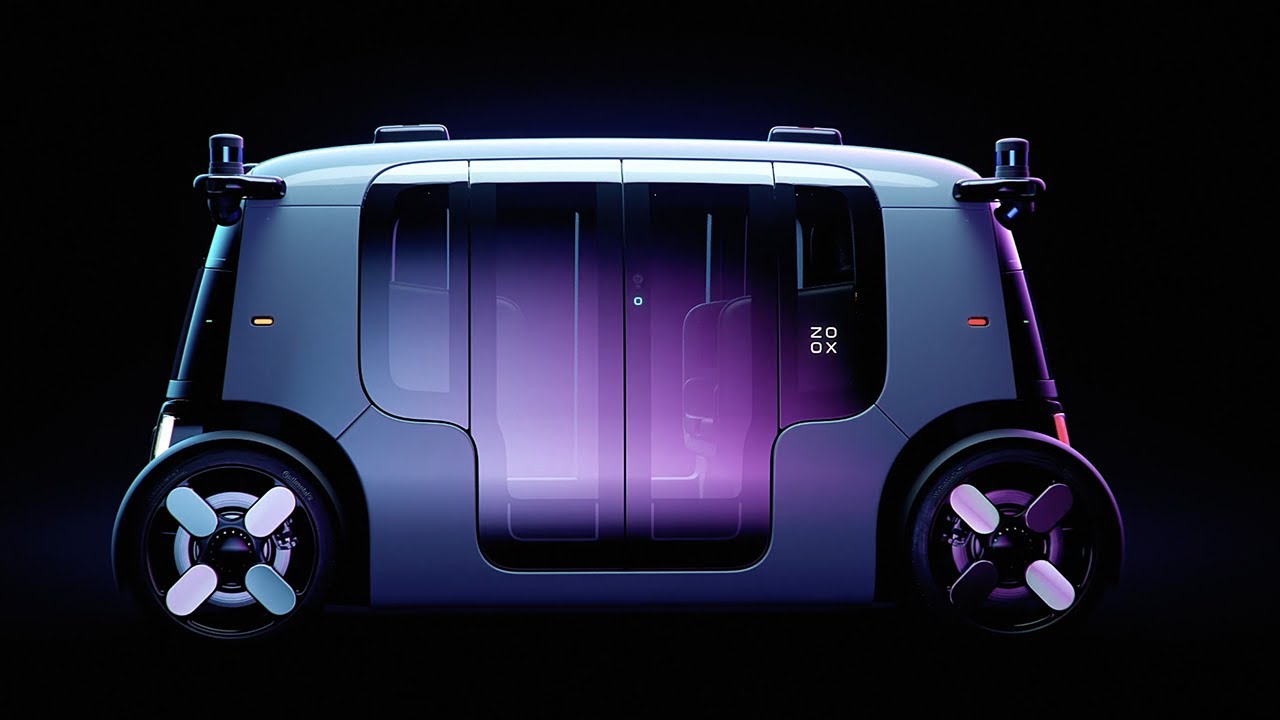

Earlier this week, Amazon's Zoox, which develops driverless taxis, posted an in-depth breakdown of its latest robotaxi prototype. While the robotaxi isn't publicly available yet, a testing fleet has been built and is currently driving on both closed courses and certain public roads. The Zoox robotaxi sets itself apart from competitors with a symmetrical design that has no true front or back, which gives riders an identical experience regardless of where they sit and eliminates the need to reverse. It also has built-in touchscreens for communicating with the taxi and four-wheel steering for maneuvering into tight spaces without parallel parking. Most importantly, it runs an Nvidia-powered machine learning algorithm that draws on cameras, radar, and lidar to constantly improve.

According to Nvidia, Zoox has been working directly with the company since at least 2017 to bring a Level 5 autonomous vehicle (the highest tier of self-driving car, requiring no human intervention) to the streets. Zoox is also an alumnus of Nvidia Inception, the company's incubator program for startups focused on AI and data science.

So how does Zoox's algorithm work? Essentially, while individual robotaxis use their many sensors to drive safely in the moment, achieving a 360-degree field of view around all four corners of the vehicle, that data also gets sent back to an Nvidia-equipped data center, where it helps refine the company's machine learning algorithm. That data is then used to improve the driving AI, and updates are beamed out to the fleet over the air every few weeks.

A human hand is also involved.

“As Zoox drives more, it gets smarter by collecting more data,” Zoox Senior Vice President of Software Ashu Rege explained in Zoox's video breakdown. “As we encounter more incidents, we will process that data offline, and our engineers will obviously take a look at what situations it was able to handle well, what it wasn’t able to handle as well, and that gets folded into our development process where we would train our AI better.”

Zoox is currently testing and training its cars in San Francisco and Las Vegas, two dense cities it hopes will help its AI learn to handle the unusual edge cases drivers can encounter. There's no word yet on when the service will launch.

“The next step after today is to continue testing, both on private roads and public roads, and get to market in a dense urban environment,” Zoox CEO Aicha Evans explained.

For Nvidia’s part, its blog post calls Zoox “the only proven, high-performance solution for robotaxis.”

Unfortunately, all of this information is still fairly vague and mostly meant to sell customers on the concept rather than explain in depth how Zoox's AI learns or how it leverages Nvidia data centers. But it's a good reminder that, regardless of how many consumer launches it bungles, Nvidia's tendrils reach deep into the tech world.


Michelle Ehrhardt is an editor at Tom's Hardware. She's been following tech since her family got a Gateway running Windows 95, and is now on her third custom-built system. Her work has appeared in publications like Paste, The Atlantic, and Kill Screen, just to name a few. She also holds a master's degree in game design from NYU.