AgileX In-Depth: PCIe Gen 5, DDR5, HBM3 and Optane DIMM Support

Any-to-Any Integration and Memory Coherency

Xeon Memory Coherency

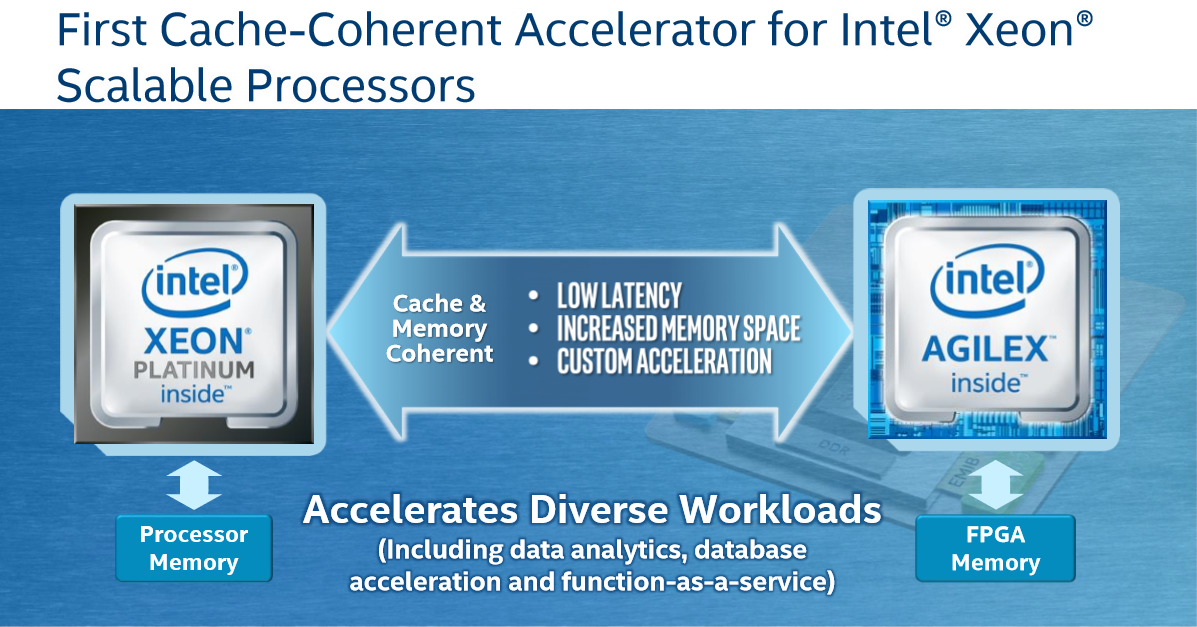

Intel says that AgileX will be the first memory-coherent accelerator for Xeon Scalable processors.

Over the last year, Intel has introduced Programmable Acceleration Cards (PACs) containing an FPGA connected to the CPU via PCIe, the FPGA analogue of a graphics card. That is where the accelerator-for-Xeon part comes from. What is new here is memory (or cache) coherency, which lets the CPU and FPGA share a common memory space. That reduces overhead, latency and data movement, and allows the FPGA and Xeon to work on the same data in parallel.

Intel says coherency is very important and will drive a number of new applications in both the network and the cloud, like database acceleration, infrastructure offload applications, and functions-as-a-service.

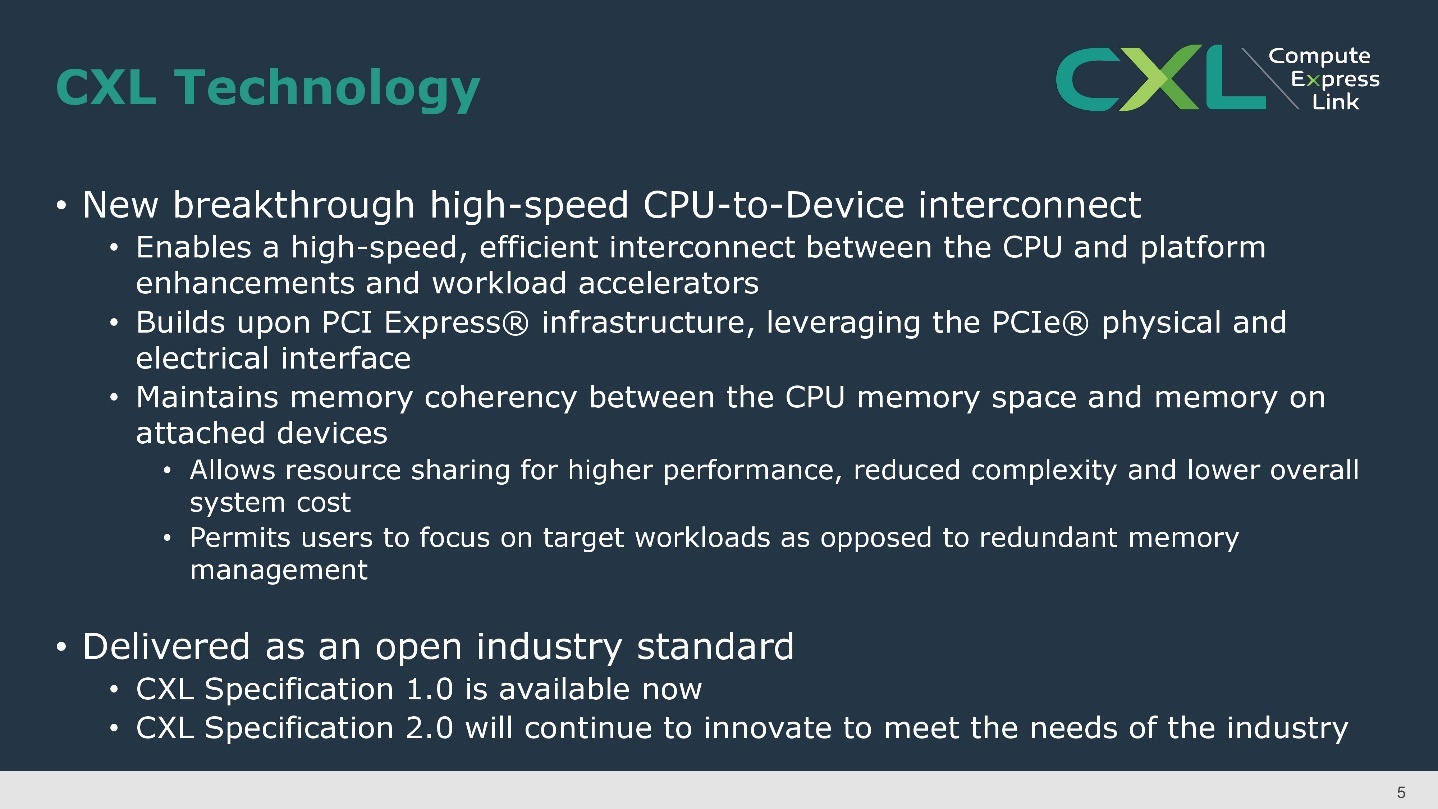

Memory coherency is made possible by the Compute Express Link (CXL) interconnect (announced last month). To recap briefly, this is a new protocol, similar in nature to NVLink, OpenCAPI, Gen-Z, and CCIX, that runs over the PCIe electrical interface (PCIe 5.0 in the case of CXL) and serves as a high-bandwidth, low-latency interconnect between the CPU and accelerators like GPUs and FPGAs. It can also attach other kinds of accelerators, and even memory.

To sum up, the advantages of memory coherency and CXL are resource sharing for higher performance, reduced overhead and latency, reduced software stack complexity and, lastly, lower overall system cost because the technology is plug-and-play. As a side note, Intel today uses its Ultra Path Interconnect (UPI) to connect multiple Xeons.

Any-to-Any Integration

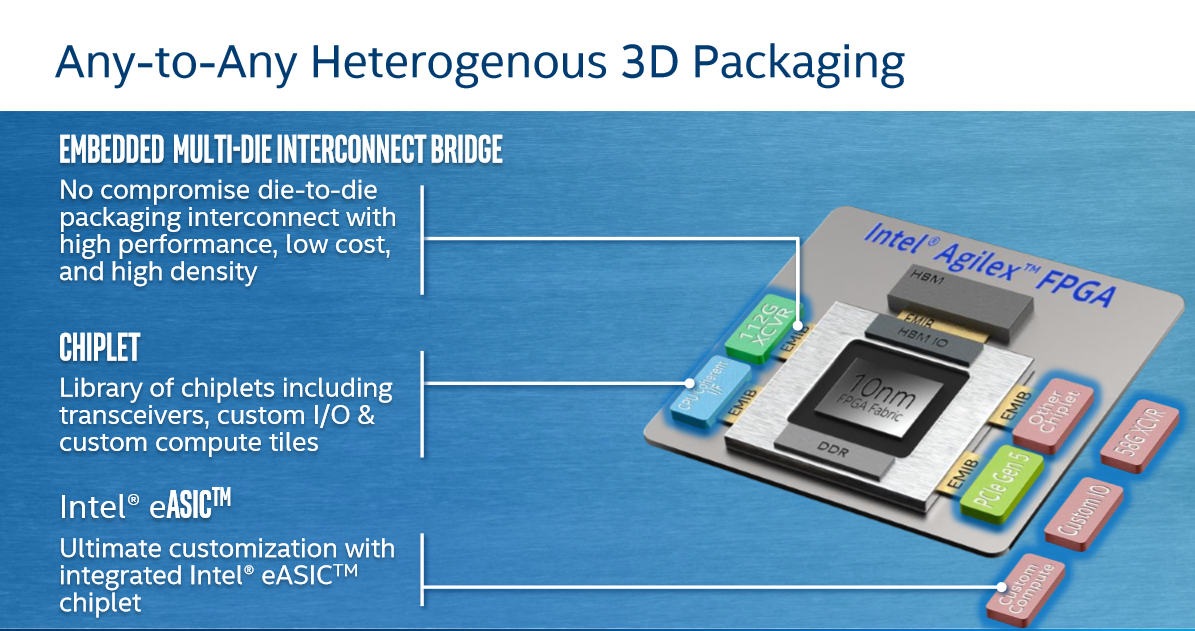

True to Intel's design goals with chiplets, the AgileX FPGA consists of a central monolithic die connected to smaller dies (chiplets) that provide several essential functions. Any-to-any integration means Intel can integrate any chiplet from any foundry.

The Intel AgileX block diagram gives a few interesting examples of the versatility of this approach. The FPGA fabric itself is still monolithic (although that might change when 3D Foveros chip stacking comes into the picture), but Intel outlines several examples of chiplets. As an aside, Stratix 10 supports six chiplets, so this diagram with five chiplets probably does not show the maximum number Intel can attach to the central AgileX die.

Support for transceivers and HBM remains, but Intel bumped them up to 112G and HBM3, respectively. There is also a chiplet for PCIe 5.0 connectivity, and this example really demonstrates the agility (pun intended) of AgileX. PCIe 4.0 products arrive this year, but AgileX supports PCIe 5.0 via an Intel-produced chiplet. This option will not be available at launch, but Intel can add the capability later. That will significantly enhance the platform's capabilities over time, as PCIe 5.0 is also required for memory coherency. This is possible because PCIe 5.0 is backwards compatible with PCIe 4.0 and because EMIB uses its own protocol (called AIB) for communication between the FPGA die and the chiplet.
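For a sense of scale, the step from PCIe 4.0 to PCIe 5.0 can be estimated with a back-of-the-envelope calculation. The sketch below uses the standard per-lane signaling rates (16 GT/s and 32 GT/s) and 128b/130b line encoding. The x16 link width is an assumption for illustration, not something Intel disclosed for AgileX, and the figures ignore packet and protocol overhead, so real throughput will be lower.

```python
# Raw per-direction bandwidth of a PCIe link, before protocol overhead.
# Assumptions (not from Intel's AgileX disclosure): an x16 link, and the
# standard per-lane rates of 16 GT/s (PCIe 4.0) and 32 GT/s (PCIe 5.0).

ENCODING_EFFICIENCY = 128 / 130  # 128b/130b line encoding, used since PCIe 3.0

TRANSFER_RATE_GT_S = {"PCIe 4.0": 16, "PCIe 5.0": 32}


def link_bandwidth_gb_s(generation: str, lanes: int = 16) -> float:
    """Return the raw one-direction bandwidth in GB/s for the given link."""
    gigabits_per_second = TRANSFER_RATE_GT_S[generation] * lanes * ENCODING_EFFICIENCY
    return gigabits_per_second / 8  # bits -> bytes


if __name__ == "__main__":
    for gen in TRANSFER_RATE_GT_S:
        print(f"{gen} x16: {link_bandwidth_gb_s(gen):.1f} GB/s per direction")
```

Under these assumptions, an x16 link works out to roughly 31.5 GB/s per direction for PCIe 4.0 and roughly 63 GB/s for PCIe 5.0, so the later chiplet would double the raw link bandwidth.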

Chiplets can also consist of custom compute tiles, custom I/O, and the CPU coherent interface (I/F). Dies with higher core counts, using CPUs from the Cortex-A7x series, analog and digital converters, and “optical chiplets” are also planned. All those chiplets reside next to the FPGA in the same chip package. Xilinx has chosen Versal for its 7nm branding, but Intel's FPGA is arguably far more versatile.
