The Myths Of Graphics Card Performance: Debunked, Part 2

HDTVs, Display Size And Anti-Aliasing

HDTVs Versus PC Displays

Myth: My 120/240/480Hz HDTV is better for gaming than a corresponding 60Hz PC display.

Except for 4K displays, almost all HDTVs are limited in resolution to a maximum of 1920x1080. PC displays can go up to 3840x2160.

PC displays can currently accept inputs at up to 144Hz, while televisions are limited to 60Hz. Don't be fooled by 120, 240 or 480Hz marketing. Those televisions still accept only 60Hz input signals; they reach their higher advertised refresh rates through frame interpolation, which typically adds lag. That doesn't really matter for regular TV content. But as we've already shown, lag beyond a certain threshold does matter for gaming.

Compared to PC displays, HDTV input lag can be massive (50 ms, sometimes even 75 ms). Added to all the other sources of lag in a system, that is almost certainly noticeable. If you really must play on an HDTV, make sure its "game mode" is enabled. You may also want to disable its 120Hz setting entirely; it'll only make your favorite title look worse. That is not to say that all HDTVs are bad for gaming; some PC gaming-friendly screens do exist. In general, though, you'll get better value for your money with a PC monitor, unless, of course, watching TV and movies is your primary consideration and you don't have room for two displays.
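To see why display lag of this size adds up, it can help to sum a hypothetical end-to-end chain. The sketch below is illustrative only: the 50 ms and 75 ms HDTV figures come from the paragraph above, while the 8 ms input-sampling delay, the two queued frames at 60 fps and the 5 ms PC-monitor lag are assumptions, not measurements.

```python
# Hypothetical input-lag budget. Only the HDTV display-lag figures (50 and
# 75 ms) come from the article; every other value is an assumption.

def frame_time_ms(fps: float) -> float:
    """Time to produce one frame at a given frame rate, in milliseconds."""
    return 1000.0 / fps

def total_lag_ms(display_lag_ms: float, fps: float = 60.0,
                 input_ms: float = 8.0, queued_frames: int = 2) -> float:
    """Sum assumed lag contributors: input sampling (input_ms), frames queued
    in the render pipeline (queued_frames) and the display's own processing."""
    return input_ms + queued_frames * frame_time_ms(fps) + display_lag_ms

budgets = {
    "PC monitor (assumed 5 ms)": total_lag_ms(5.0),
    "HDTV, 50 ms": total_lag_ms(50.0),
    "HDTV, 75 ms": total_lag_ms(75.0),
}
for name, lag in budgets.items():
    print(f"{name}: {lag:.1f} ms total")
```

With these assumed values, the 50 ms HDTV nearly doubles the end-to-end total compared with the assumed 5 ms monitor; the display is the only term that changes.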

Bigger Isn't Always Better

Myth: A larger display is better.

When it comes to displays, one particular measure appears to trump all others: size, specifically the diagonal length measured in inches, such as 24", 27" or 30".

That metric worked well for standard-definition TVs, and it still works for modern high-definition televisions, which accept signals at a fixed, predefined resolution. The same does not hold true for PC displays.

Beyond size, a PC display's primary specification is its native resolution, expressed as the number of horizontal pixels times the number of vertical pixels. Full HD is 1920x1080. The highest-resolution PC displays commercially available have an Ultra HD resolution of 3840x2160, or four times the pixels of Full HD. The side-by-side screen captures in the accompanying picture illustrate the comparison. Note the "Level Up" caption on the left side; that's one of many small UI bugs you'll have to tolerate as an early adopter of 4K, should you choose to go that route.


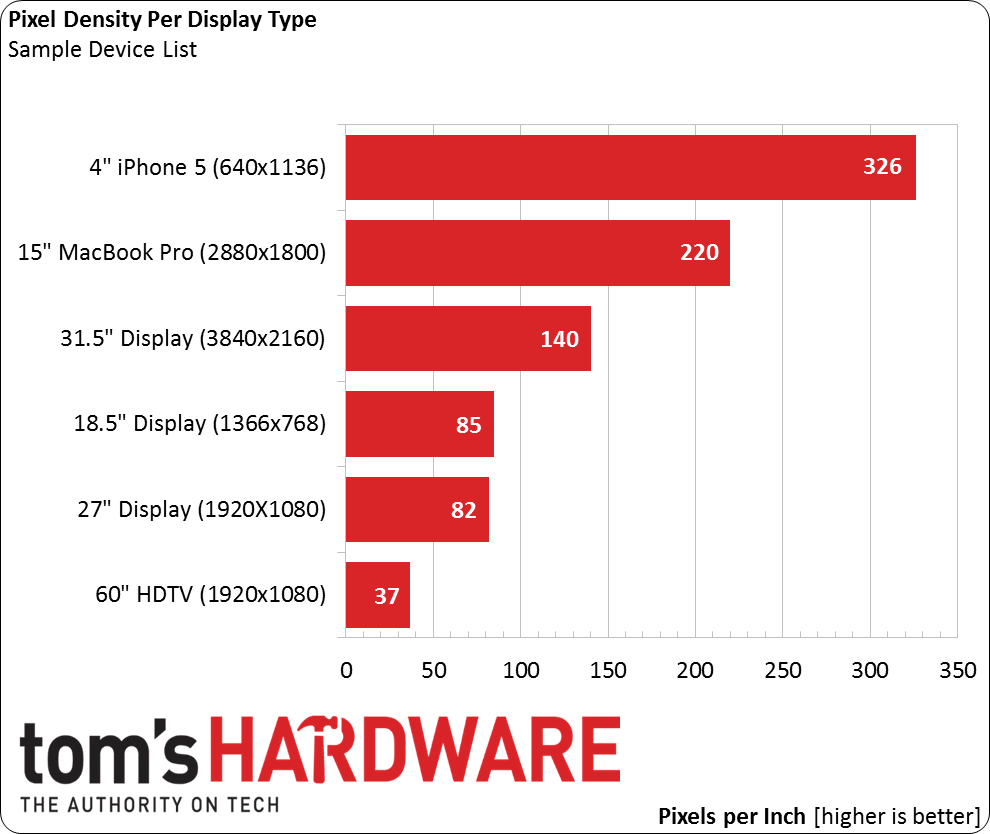
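The "four times" figure is simple arithmetic on native pixel counts. This minimal sketch just multiplies width by height for the two resolutions named above; the function name is our own.

```python
# Total native pixel count for the two resolutions discussed above.
def pixel_count(width: int, height: int) -> int:
    """Number of pixels a panel displays natively."""
    return width * height

full_hd = pixel_count(1920, 1080)   # 2,073,600 pixels
ultra_hd = pixel_count(3840, 2160)  # 8,294,400 pixels
ratio = ultra_hd / full_hd
print(f"Ultra HD has {ratio:.0f}x the pixels of 1920x1080")
```

Doubling both dimensions quadruples the pixel count, which is why 3840x2160 is "four times" 1920x1080 rather than twice.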

The monitor’s resolution in relation to its viewable diagonal determines its pixel density. With the advent of Retina-class mobile devices, the more standard measure of pixels-per-inch ("ppi") has often been replaced by "pixels per degree", a more general measure that takes into account not only pixel density, but also viewing distance. In a discussion of PC displays, where the viewing distance is fairly standard, we can stick with pixels per inch, though.
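Both measures can be computed directly. Pixels per inch is the panel's diagonal in pixels divided by its diagonal in inches; pixels per degree multiplies that by the number of inches one degree of visual angle covers at a given viewing distance (2 × d × tan 0.5°, measured at the screen center). The sketch below assumes a 24-inch 1080p panel, a 28-inch 2160p panel and a 24-inch viewing distance purely for illustration.

```python
import math

def ppi(width_px: int, height_px: int, diagonal_in: float) -> float:
    """Pixels per inch: the panel's diagonal in pixels over its diagonal in inches."""
    return math.hypot(width_px, height_px) / diagonal_in

def pixels_per_degree(ppi_value: float, distance_in: float) -> float:
    """Pixels spanning one degree of visual angle at the screen center.
    At distance d, one degree covers 2 * d * tan(0.5 deg) inches."""
    inches_per_degree = 2 * distance_in * math.tan(math.radians(0.5))
    return ppi_value * inches_per_degree

# Panel sizes and the 24-inch viewing distance below are illustrative assumptions.
desk_24_1080p = ppi(1920, 1080, 24)
desk_28_2160p = ppi(3840, 2160, 28)
print(f"24-inch 1080p: {desk_24_1080p:.1f} ppi, "
      f"{pixels_per_degree(desk_24_1080p, 24):.1f} px/deg at 24 in")
print(f"28-inch 2160p: {desk_28_2160p:.1f} ppi, "
      f"{pixels_per_degree(desk_28_2160p, 24):.1f} px/deg at 24 in")
```

Because pixels per degree is ppi scaled by viewing distance, the same panel looks "denser" the farther away you sit.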

Steve Jobs said that 300ppi was something of a magic number for a device held 10-12 inches from the eye, and there was much debate afterwards over his claim's accuracy. The figure held up, though, and today it is a generally accepted reference for high-resolution displays.

As you can see, PC displays still have a way to go in terms of pixel density. But if you can trade a smaller display for a higher resolution, all else being equal, you should almost always do so, unless for some reason you tend to look at your display from farther away than other PC users.
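One way to quantify the viewing-distance caveat: since pixels per degree is proportional to ppi times distance, you can solve for the distance at which a desktop panel matches 300ppi viewed from 12 inches (the upper end of the 10-12 inch range above). The panel sizes below are assumptions chosen for illustration.

```python
import math

def ppi(width_px: int, height_px: int, diagonal_in: float) -> float:
    """Pixels per inch from native resolution and diagonal size."""
    return math.hypot(width_px, height_px) / diagonal_in

def matching_distance_in(panel_ppi: float, ref_ppi: float = 300.0,
                         ref_distance_in: float = 12.0) -> float:
    """Viewing distance at which a panel offers the same pixels per degree as
    ref_ppi viewed from ref_distance_in. Pixels per degree is proportional to
    ppi * distance, so the distance scales with ref_ppi / panel_ppi."""
    return ref_distance_in * ref_ppi / panel_ppi

for label, (w, h, diag) in {"24-inch 1080p": (1920, 1080, 24),
                            "28-inch 2160p": (3840, 2160, 28)}.items():
    d = matching_distance_in(ppi(w, h, diag))
    print(f"{label}: about {d:.0f} inches")
```

Under this simple model, a 24-inch 1080p panel only reaches the same angular density at roughly 39 inches, while a 28-inch 2160p panel gets there at about 23 inches, closer to a typical desk distance.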

The Upsides And Downsides Of Higher Resolutions

Higher resolutions mean more pixels on-screen. While more pixels generally mean a sharper image, they also impose a heavier load on your GPU. As a result, it’s quite common to upgrade a machine’s display and GPU together, as higher-resolution panels typically require more powerful GPUs to maintain the same frame rate.
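A crude way to see the extra GPU load is to count pixels per second. The sketch below assumes a purely pixel-bound workload, which real games rarely are (geometry, CPU limits and fixed per-frame costs do not scale with resolution), so treat its frame-rate estimate as a rough lower-bound illustration rather than a prediction.

```python
def pixels_per_second(width: int, height: int, fps: float) -> float:
    """Pixels the GPU must produce each second at a given resolution and frame rate."""
    return width * height * fps

def pixel_bound_fps(base_fps: float, base_res: tuple, new_res: tuple) -> float:
    """Frame rate at new_res if throughput in pixels per second stayed constant.
    A crude first-order model: real games rarely scale this linearly."""
    base_pixels = base_res[0] * base_res[1]
    new_pixels = new_res[0] * new_res[1]
    return base_fps * base_pixels / new_pixels

print(pixels_per_second(1920, 1080, 60))                # 1080p at 60 fps
print(pixel_bound_fps(60, (1920, 1080), (3840, 2160)))  # same GPU at 2160p
```

Under that assumption, a card that manages 60 fps at 1080p would fall to 15 fps at 2160p, which is why display and GPU upgrades so often go together.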

The good news is that running higher resolutions reduces the need for heavy (and GPU-intensive) anti-aliasing. Although it’s still present, and quite evident in moving scenes as "shimmering", aliasing at higher resolutions is much less noticeable than at lower resolutions. That's good, since the cost of AA also increases proportionally with resolution.

But all this anti-aliasing talk calls for further explanation.

Not All Antialiasing Algorithms Are Created Equal

Myth: FXAA/MLAA is better than MSAA or CSAA/EQAA/TXAA/CFAA…wait, what do these even mean?

…and…

Myth: FXAA/MLAA and MSAA are alternatives to each other.

Anti-aliasing is another topic muddled by consumer confusion, and justifiably so. The huge number of technologies and acronyms (all similar to each other), compounded by marketing hype, makes the landscape difficult to navigate. Furthermore, games like Rome II and BioShock Infinite don't make it clear what type of anti-aliasing they implement, leaving you to scratch your head. We'll try to help.

There really are just two mainstream categories of anti-aliasing: multi-sampling and post-processing techniques. Both aim to address the same image quality problem, but they work very differently in practice and introduce dissimilar tradeoffs. A further category of experimental approaches to anti-aliasing also exists, but it is rarely implemented in games.

You’ll occasionally encounter techniques that are obsolete (most notably SSAA due to its extreme computational cost, surviving only as the notorious "ubersampling" setting in The Witcher 2) or haven’t really taken off (Nvidia's SLI AA). Also, certain sub-techniques deal with transparent textures in MSAA settings. They’re not separate AA technologies, but rather an adaptation of MSAA. We will not discuss any of them in detail here today.

Again, without going into the details, the table below highlights the differences between the two mainstream categories of anti-aliasing, along with the experimental hybrids. The A/B classes are not industry standards, but rather our attempt at simplification.

| Class | Generic/Third-Party Names | AMD-Specific Implementation | Nvidia-Specific Implementation |
|---|---|---|---|
| Class A+, Experimental: Hybrid multi-sample, post-processing and temporal-filtered techniques | SMAA, CMAA (typically variants of MLAA) | None | TXAA (partially) |
| Class A, Premium: Rendering-based (multi-sampling) techniques | MSAA (Multi-Sample Anti-Aliasing) | CFAA, EQAA | CSAA, QSAA |
| Class B, Value: Post-processing-based techniques | PPAA (Image-Based Post-Process Anti-Aliasing) | MLAA | FXAA |

The advantage of MSAA techniques, especially higher sample-count ones, is that they are arguably better at retaining sharpness. MLAA/FXAA, in comparison, make a scene look “softer” or a little “blurred”. MSAA's increased quality, however, comes at a very high cost in video memory usage and fill rate, since more samples need to be rendered and stored. Depending on the application, on-board memory may simply be insufficient, or the performance impact of MSAA may be too great. Thus, we refer to MSAA as a Class A – Premium AA technique.

Class A+: Combining Class A and Class B Antialiasing?

Most people tend to think of MSAA and FXAA/MLAA as alternatives to each other. In reality, since one is a render-based technique and the other is a post-processing technique, both can be turned on at the same time. The actual benefits of doing so are debatable, though, because there are tradeoffs (e.g., lower sharpness than MSAA alone, but anti-aliasing of transparent textures, which MSAA does not handle). Attempts to combine the two techniques more effectively, while adding a beneficial temporal filter, do exist, although they haven't taken off yet. SMAA is a notable one, and Intel's CMAA is the latest (see the Intel article linked below). These techniques, which we could classify as "A+", vary greatly in quality and cost, but at their higher settings can carry an even higher memory and computational cost than comparable MSAA.

Put more simply, Class A multi-sampling techniques process additional samples beyond the display's native resolution. The number of additional samples is typically expressed as a factor; for example, you might see 4x MSAA. The higher the factor, the larger the quality improvement, and the larger the impact on graphics memory and frame rate.
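The memory cost of the sample factor can be sketched as a back-of-the-envelope calculation, together with the "resolve" step that averages each pixel's samples into one output color. This is a simplified model under stated assumptions: 4 bytes of color plus 4 bytes of depth/stencil per sample, no buffer compression (real GPUs compress these targets), and a plain box-filter resolve. It also ignores that hardware MSAA typically shades once per pixel and copies that color into each covered sample.

```python
def msaa_target_bytes(width: int, height: int, samples: int,
                      color_bytes: int = 4, depth_bytes: int = 4) -> int:
    """Uncompressed size of a multisampled color + depth/stencil target.
    Assumes 4-byte color and 4-byte depth/stencil per sample; real GPUs
    compress these buffers, so treat the result as an upper-bound sketch."""
    return width * height * samples * (color_bytes + depth_bytes)

def resolve(pixel_samples):
    """Box-filter resolve: each output pixel is the mean of its samples.
    A pixel on a triangle edge ends up partially covered, giving an
    intermediate value instead of a hard stair step."""
    return [sum(samples) / len(samples) for samples in pixel_samples]

MIB = 1024 * 1024
for samples in (1, 2, 4, 8):
    size = msaa_target_bytes(3840, 2160, samples) / MIB
    print(f"{samples}x at 3840x2160: {size:.0f} MiB")

# Three pixels along an edge with 4 samples each (1.0 = object, 0.0 = background).
print(resolve([[1, 1, 1, 1], [1, 1, 0, 0], [0, 0, 0, 0]]))
```

In this model, memory grows linearly with both pixel count and sample factor, so 4x MSAA at 3840x2160 needs sixteen times the target size of no AA at 1920x1080.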

Class B – Value techniques, by contrast, are applied after a scene has been rendered in a raster format. They use almost no memory (see the hard data on this point in Part 1 of our series) and are much faster than Class A techniques, imposing a significantly lower frame rate impact. Most gamers who can already run a title at a given resolution should be able to enable these algorithms and realize a degree of image quality benefit. That's why we're referring to MLAA/FXAA as Class B – Value anti-aliasing technologies. Class B techniques do not rely on additional samples and, as such, there is no such thing as 2x FXAA or 4x MLAA. They're either on or off.
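The general idea of post-processing AA can be illustrated with a deliberately toy filter. It is not FXAA or MLAA, both of which use far more elaborate edge searches; it simply reads a finished row of luminance values, detects high-contrast pixels and blends them with their neighbors. Note that it has no sample-count parameter, and because it only reads the final image, it could just as well run on an image that was already MSAA-resolved. The threshold value is an arbitrary assumption.

```python
def post_process_aa(row, threshold=0.25):
    """Toy edge blur over a finished 1D row of luminance values (0.0 to 1.0).
    Pixels whose contrast with a neighbor exceeds `threshold` are replaced by a
    1-2-1 weighted average of themselves and their neighbors. Only the final
    image is read: no extra samples, so there is no '2x' or '4x' setting."""
    out = list(row)
    for i in range(1, len(row) - 1):
        left, center, right = row[i - 1], row[i], row[i + 1]
        if max(abs(center - left), abs(center - right)) > threshold:
            out[i] = (left + 2 * center + right) / 4
    return out

aliased_edge = [0.0, 0.0, 0.0, 1.0, 1.0, 1.0]
print(post_process_aa(aliased_edge))
```

The hard step becomes a ramp, which also shows where the "softer" look comes from: the filter cannot tell a geometric edge from legitimate fine detail, so it blurs both.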

As the table shows, AMD and Nvidia each implement MSAA and post-processing AA (MLAA and FXAA, respectively) in their own proprietary ways. While image quality may vary slightly between them, the main AA classes don't substantially change. Just keep in mind that AMD's MLAA is a higher-cost but somewhat higher-quality algorithm compared to Nvidia's FXAA. MLAA also uses a bit more graphics memory (see our Rome II data in Part 1 as an example), while FXAA does not require additional graphics memory.

I personally feel that MSAA at 4K is overkill. I'd rather protect the frame rate, which can get perilously low at 3840x2160, than turn on eye candy. Besides, FXAA and MLAA work well enough at 4K. The point is that, while MSAA is almost necessary at lower resolutions for optimal fidelity, its value becomes increasingly subjective as pixels become denser.

We have an in-depth article discussing AA that you may want to read if you're interested in learning more. If you want additional information on state-of-the-art AA techniques, we'd point you to this possibly biased but well-written article from Intel.

Choosing Between High Refresh Rates And Low Display Latency Or Better Color Accuracy And Wider Viewing Angles

Larger panels tend to come in two varieties: twisted nematic (TN), which tends to be faster but typically offers lower color accuracy and suffers from limited viewing angles, and in-plane switching (IPS), which responds more slowly but improves color reproduction and widens the available viewing angles.

Although I have two IPS-based displays that I love, gamers are often advised to buy fast TN panels, ideally with 120/144Hz refresh rates and 1-2 ms G2G response times. Gaming displays that refresh faster feel smoother. They exhibit less lag and often include more advanced features, too. One such capability we hope to see more of in 2015 is G-Sync, which eliminates the tradeoff between running with v-sync on or off. Refer back to our G-Sync Technology Preview: Quite Literally A Game Changer for a deep-dive.

For reasons that we'll explain on the next page, there are no 4K (2160p) panels able to support 120Hz, and there probably won’t be for a while. Gaming displays, we believe, will settle in the 1080p to 1440p range for the next couple of years. Also, IPS panels operating at 120Hz are practically non-existent. I hesitate to recommend the Yamakasi Catleap Q270 "2B Extreme OC", which relies on overclocking to reach its performance level and has a relatively slow 6.5 ms G2G response time.
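Why does a 6.5 ms response time count as slow here? At high refresh rates, the interval between refreshes shrinks, so a quoted G2G transition eats a larger share of each frame. The sketch below treats the manufacturer's G2G figure at face value and assumes the overclocked Catleap runs at 120Hz; the 2 ms at 144Hz case uses the upper end of the 1-2 ms TN range mentioned above.

```python
def frame_interval_ms(refresh_hz: float) -> float:
    """Time between refreshes, in milliseconds."""
    return 1000.0 / refresh_hz

def transition_share(response_ms: float, refresh_hz: float) -> float:
    """Fraction of each refresh interval taken by a quoted G2G pixel transition."""
    return response_ms / frame_interval_ms(refresh_hz)

for hz in (60, 120, 144):
    print(f"{hz} Hz: {frame_interval_ms(hz):.2f} ms per refresh")

# 6.5 ms G2G at an assumed 120 Hz vs. 2 ms G2G at 144 Hz
print(f"{transition_share(6.5, 120):.0%} vs {transition_share(2.0, 144):.0%}")
```

By this simple measure, the 6.5 ms panel spends roughly three quarters of every 120Hz refresh mid-transition, versus under a third for a 2 ms TN panel at 144Hz.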

The 1080p resolution remains your best value bet, since Asus' PG278Q ROG Swift offers roughly 78% more pixels (2560x1440) but sells for several times as much money. At 1080p, high-end (120/144Hz) gaming displays start at $280. Asus' 24" VG248QE isn't cheap. However, it received our prestigious Smart Buy award in Asus VG248QE: A 24-Inch, 144 Hz Gaming Monitor Under $300. Valid alternatives in the 1080p class are BenQ's XL2420Z/XL2720T and Philips' 242G5DJEB.
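For reference, here is the pixel-count comparison between the PG278Q's 2560x1440 native resolution and 1080p, as a one-function sketch (the function name is our own).

```python
def extra_pixels_percent(width: int, height: int,
                         base_width: int = 1920, base_height: int = 1080) -> float:
    """How many more pixels a resolution has than the base, in percent."""
    return (width * height / (base_width * base_height) - 1) * 100

print(f"2560x1440: {extra_pixels_percent(2560, 1440):.0f}% more pixels than 1080p")
```

The same function gives 300% for 3840x2160, i.e. four times the pixels of 1080p.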

If you're on a tighter budget, it becomes necessary to sacrifice 120Hz support. Don’t despair, though. There are still plenty of fast 60Hz 1080p displays starting at roughly $110. In that price range, try to shop for a panel with a 5 ms response time. Among the many viable options, Acer’s G246HLAbd is quite popular at $140.

