Adata Demos Next-Gen Memory: CAMM, CXL, and MR-DIMM Modules

Adata shows off next-generation memory modules at Computex.

A number of all-new memory module standards targeting different applications have emerged in the last couple of years. The introduction of new modules presents both challenges and opportunities for module makers, so Adata used Computex 2023 to show that it is ready to produce CAMM, CXL, and MR-DIMM modules for client and server applications.

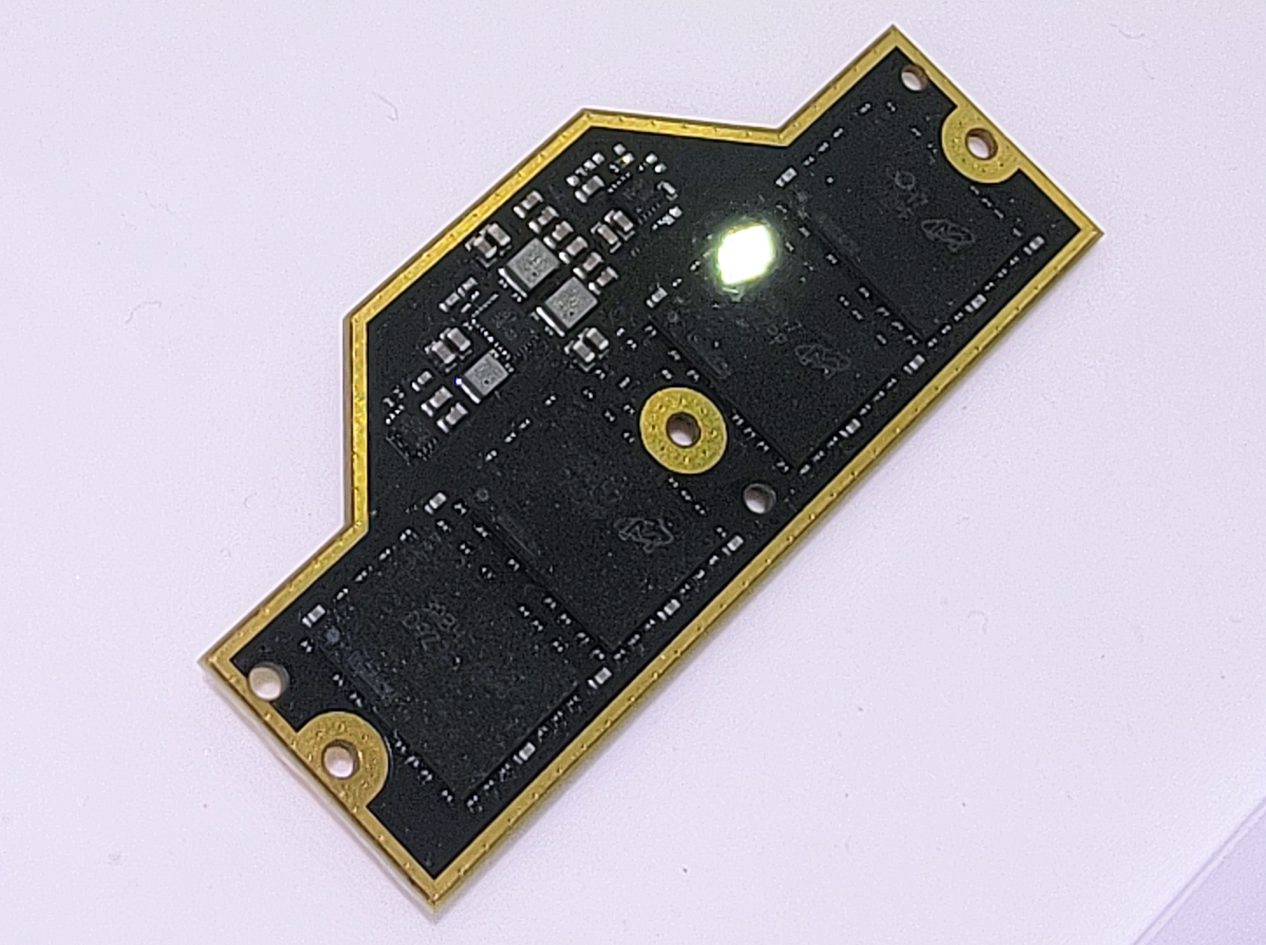

The Compression Attached Memory Module (CAMM) specification is to be finalized only sometime in the second half of 2023, but Adata is already demonstrating a sample at the trade show. It is noteworthy that Adata's CAMM module looks quite a bit different from the one used by Dell today, but this is not particularly surprising, as Dell's modules predate JEDEC approval of the standard.

For those not familiar, CAMMs are projected to replace SO-DIMMs in ultra-thin laptops and other small form-factor applications. Their advantages include a simplified connection between memory ICs and memory controllers (which allows CAMMs to use both module-optimized DDR5 chips and LPDDR5 chips optimized for point-to-point interconnects), simplified dual-channel connectivity (every module enables dual-channel operation by default), higher density, and a lower Z-height.

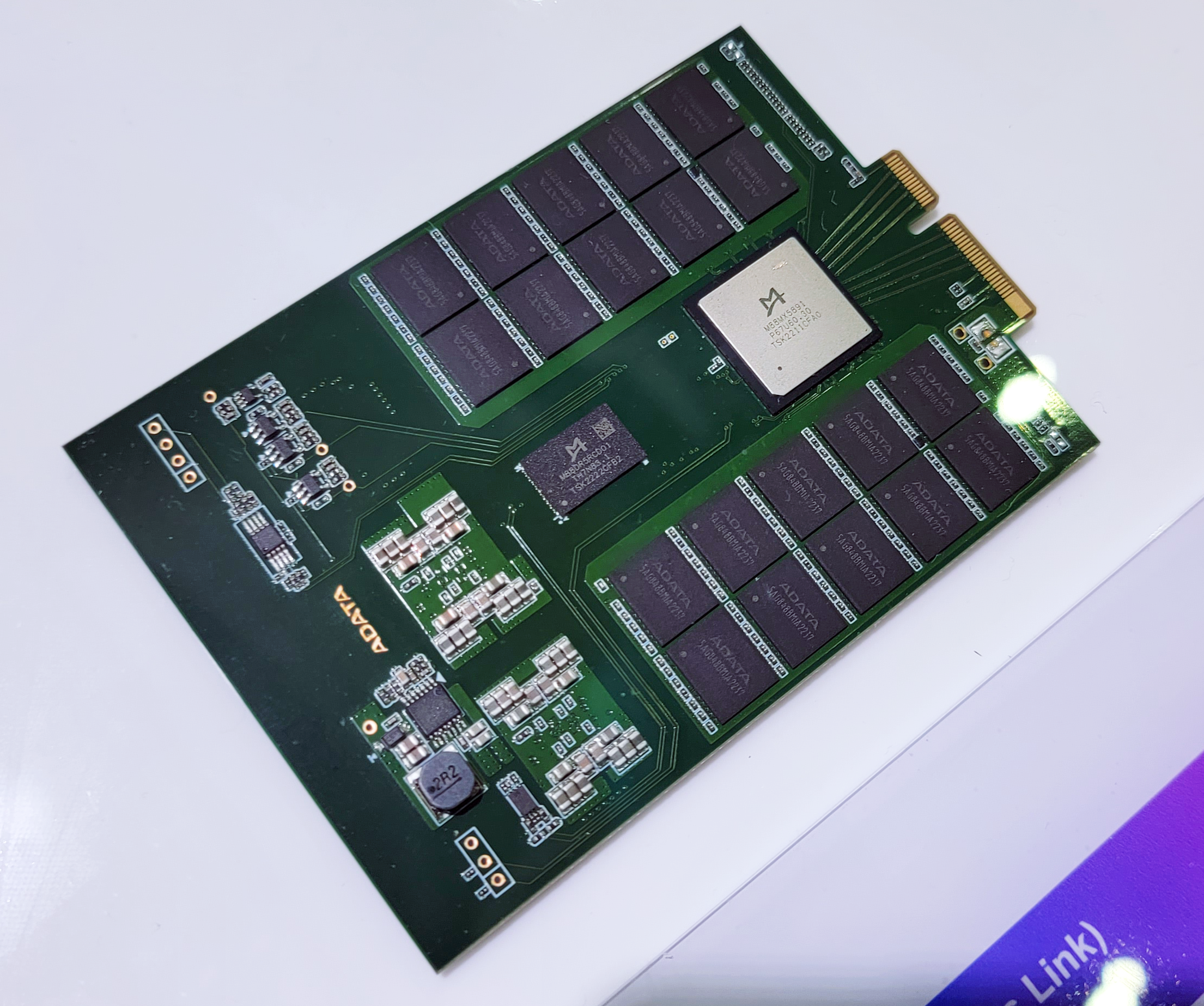

Another module that Adata is showing off is a CXL 1.1-compliant memory device in an E3.S form factor with a PCIe 5.0 x4 interface. This module carries 3D NAND memory that can act as an expansion of system memory for servers, and it is meant to provide a relatively inexpensive way to expand system memory over PCIe for machines that need it.

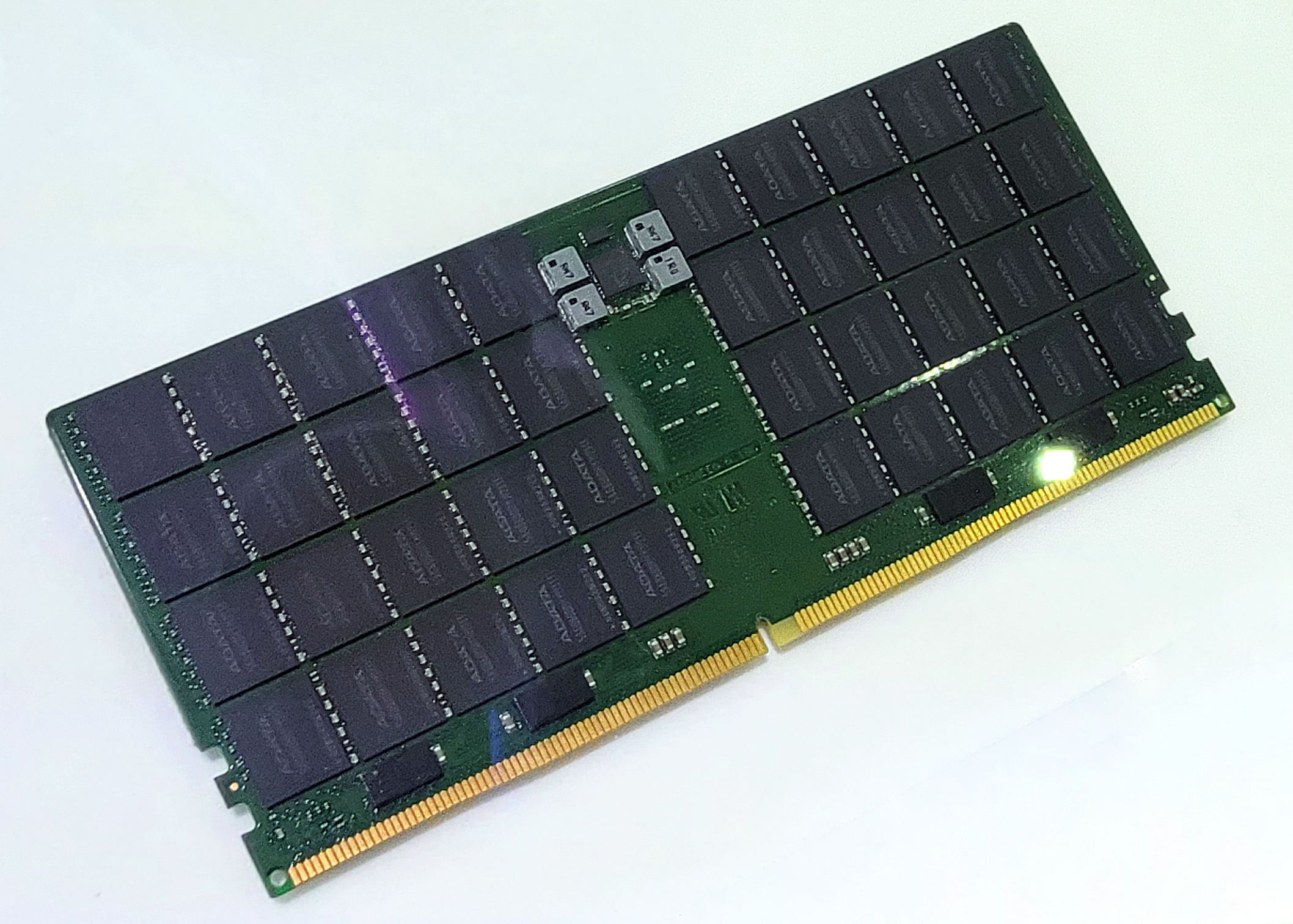

Yet another server-oriented module that Adata is showing off at the trade show is an MR-DIMM (multi-ranked buffered DIMM). MR-DIMMs are projected to be next-generation buffered memory modules for servers (along with MCR-DIMMs). These modules are expected to be supported by next-generation CPUs from AMD and Intel (including Granite Rapids CPUs) and to tangibly increase the performance and capacity of memory subsystems by essentially combining two memory modules into one.

The modules are set to start at 8,400 MT/s (based on information from Adata) and then scale to a data transfer rate of 17,600 MT/s. Meanwhile, Adata claims that the modules will be available in 16GB, 32GB, 64GB, 128GB, and 192GB capacities.
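For a rough sense of what these data rates mean, peak bandwidth can be estimated as transfers per second multiplied by bytes per transfer. The sketch below assumes a 64-bit data path per module (as on a standard DDR5 DIMM, excluding ECC bits); that width is an assumption, not a figure from Adata, and the function name is purely illustrative.

```python
def peak_bandwidth_gbs(mt_per_s: int, bus_width_bits: int = 64) -> float:
    """Theoretical peak bandwidth in decimal GB/s for one module.

    bus_width_bits=64 is an assumed data-path width, not an Adata spec.
    """
    bytes_per_transfer = bus_width_bits / 8
    # MT/s * bytes per transfer = MB/s; divide by 1000 for GB/s.
    return mt_per_s * bytes_per_transfer / 1000


for rate in (8400, 17600):
    print(f"{rate} MT/s -> {peak_bandwidth_gbs(rate):.1f} GB/s")
```

Under that assumption, the starting and target speeds work out to roughly 67 GB/s and 141 GB/s per module. These are theoretical ceilings; sustained bandwidth in a real system would be lower.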

Anton Shilov is a contributing writer at Tom’s Hardware. Over the past couple of decades, he has covered everything from CPUs and GPUs to supercomputers and from modern process technologies and latest fab tools to high-tech industry trends.

- Paul Alcorn, Editor-in-Chief

thestryker

I'm really curious what the latency penalty on MR/MCR-DIMM memory looks like, as from that picture a potential client version wouldn't have to be larger than current DDR5. This could be a way to increase memory bandwidth a fair bit without needing huge overclocks/shifting to a 256-bit memory bus.

Kamen Rider Blade

MR-DIMM (Multi-Ranked Buffered DIMM) is what I envisioned for the future of server DIMMs, where DIMMs will exceed 2x physical rows.

bit_user

thestryker said: I'm really curious what the latency penalty on MR/MCR-DIMM memory looks like

I think I read that it's an additional cycle, at the memory bus speed. I'm not sure if that's relative to Registered DIMMs (which already add a ~1 cycle penalty), but I would assume so.

The bigger issue is probably that they increase the burst size from 64 bytes to 128 bytes. Unless the two halves of that burst can come from different addresses, that would make the practical speedup you'd get from them sub-linear (too often, we only want 64 bytes from a given address, due to how CPU caches currently work).
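The sub-linear speedup concern can be put into numbers with a toy model. The sketch below assumes the raw bandwidth doubles (the article's "two modules in one" description), that every burst delivers 128 bytes, and that only some fraction of bursts can use the second 64-byte half. The function name and parameters are hypothetical, and the model ignores latency, prefetching, and everything else a real memory system does.

```python
def useful_speedup(p_both_halves_used: float,
                   raw_speedup: float = 2.0,
                   line_bytes: int = 64,
                   burst_bytes: int = 128) -> float:
    """Speedup in *useful* bytes delivered, relative to 64-byte bursts.

    p_both_halves_used: fraction of bursts where the second half of the
    burst is also wanted (e.g. sequential streaming). Assumed input.
    """
    # The first cache line is always wanted; the rest only sometimes.
    useful_bytes = line_bytes + p_both_halves_used * (burst_bytes - line_bytes)
    return raw_speedup * useful_bytes / burst_bytes


for p in (0.0, 0.5, 1.0):
    print(f"second half used {p:.0%} of the time -> {useful_speedup(p):.2f}x")
```

In this model the useful speedup is simply 1 + p: no gain at all if the extra 64 bytes are never wanted, and the full 2x only for purely streaming access.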

thestryker said: This could be a way to increase memory bandwidth a fair bit without needing huge overclocks/shifting to a 256 bit memory bus.

It's a good stop-gap on the way towards greater use of in-package memory. That's where you're going to see non-linear increases in memory bandwidth.

In-package memory is an inevitability. Not only because memory bandwidth hasn't kept pace with compute scaling, but also because it's necessary for efficiency reasons. And even that won't be enough. Longer-term, we're probably looking at hybrid die stacks of compute + DRAM.

thestryker

bit_user said: It's a good stop-gap on the way towards greater use of in-package memory. That's where you're going to see non-linear increases in memory bandwidth.

Yeah, that's my line of thinking.

bit_user said: In-package memory is an inevitability. Not only because memory bandwidth hasn't kept pace with compute scaling, but also because it's necessary for efficiency reasons. And even that won't be enough. Longer-term, we're probably looking at hybrid die stacks of compute + DRAM.

I'm mostly curious what client desktop looks like in this circumstance, because I agree completely this is where it's going. The benefits of integration outweigh the problems in the case of laptops, but I'm not sure about desktop. I assume that we'll likely see more integration in servers and they'll use CXL for capacity, but I'm not sure that will work on client desktop unless we see changes in connectivity (which I'd welcome).

bit_user

thestryker said: I'm mostly curious what client desktop looks like in this circumstance, because I agree completely this is where it's going. The benefits of integration outweigh the problems in the case of laptops, but I'm not sure about desktop. I assume that we'll likely see more integration in servers and they'll use CXL for capacity, but I'm not sure that will work on client desktop unless we see changes in connectivity (which I'd welcome).

Look at what Apple achieved with in-package DRAM. The M1 Ultra came with 128 GB of DRAM, which is as much as you could put in an x86 desktop platform... until 48 GB DIMMs came along and elevated them to 192 GB. Then again, you could probably just put those same dies in-package, increasing it to match. So, capacity isn't necessarily the kind of problem you might think it is.

If you want to scale beyond that, then some form of slower, external DRAM will be necessary. It could be a conventional DIMM, but I think it will eventually shift to CXL.mem. If external DRAM is integrated via page-migration, then the latency impact of CXL (vs. directly-connected DIMM) should be negligible. The advantage of CXL is that you have the flexibility to use the same lanes for either memory, storage, or I/O. So, it's a lot more versatile than the current split where some pins on the CPU are dedicated to PCIe and others to DDR5.

thestryker

bit_user said: Look at what Apple achieved with in-package DRAM. The M1 Ultra came with 128 GB of DRAM, which is as much as you could put in a x86 desktop platform... until 48 GB DIMMs came along and elevated them to 192 GB. Then again, you could probably just put those same dies in-package, increasing it to match. So, capacity isn't necessarily the kind of problem you might think it is.

FWIW you can't use DDR5 in package as the chip capacities are only 16/24Gb (max spec is 64Gb). LPDDR5/5X currently are 144/128Gb (I wasn't able to find max spec) which still would require a minimum of 8 chips, which takes up considerable package space.
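The chip-count estimate in thestryker's comment follows from simple arithmetic: gigabytes times eight, divided by the per-device density in gigabits, rounded up. A minimal sketch, using the 128 GB target from the M1 Ultra example and the densities quoted in the comment (the function name is illustrative, and the densities are taken from the comment as-is, not verified against vendor datasheets):

```python
import math


def devices_needed(target_gbytes: int, device_gbits: int) -> int:
    """Minimum number of DRAM devices to reach target_gbytes of capacity."""
    return math.ceil(target_gbytes * 8 / device_gbits)


# Densities (in Gbit) as quoted in the comment above.
for label, gbits in (("DDR5 16Gb", 16), ("DDR5 24Gb", 24),
                     ("LPDDR5X 128Gb", 128), ("LPDDR5 144Gb", 144)):
    print(f"{label}: {devices_needed(128, gbits)} devices for 128 GB")
```

For 128 GB this gives 64 or 43 devices at the quoted DDR5 densities versus 8 at either LPDDR density, which is the gap the comment is pointing at.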

bit_user

thestryker said: FWIW you can't use DDR5 in package as the chip capacities are only 16/24Gb (max spec is 64Gb). LPDDR5/5X currently are 144/128Gb (I wasn't able to find max spec) which still would require minimum of 8 chips which takes up considerable package space.

Yeah, the M1 Ultra has a 1024-bit memory interface, so that implies 16. But, that's just what Apple did in 2021. Nvidia's new Grace has 512 GB of LPDDR5X, and I'm pretty sure it just uses a 512-bit data bus. If we look at HBM, Intel's Xeon Max has up to 64 GB in 4 stacks.

In spite of how I was talking, I'm not really worried about capacity. The lowest-memory Apple M1 has just 8 GB of memory, and that's shared between the CPU and GPU. To make it work, they use hardware memory compression and swap. For basic stuff, it's fine.

The way it's looking like CXL.mem would work is as a faster substitute for swapping. So, you migrate pages to/from the CXL module(s) on demand. Ideally, this would have some level of hardware support. An interesting difference between swapping to a storage device and CXL.mem is that it's not too painful to access data on the memory module without migrating to faster, in-package memory. You'd only want to migrate it if it starts getting a lot of accesses.
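The "only migrate it if it gets a lot of accesses" idea can be illustrated with a toy two-tier model. This is a sketch under stated assumptions, not how any real operating system or CXL controller works: the class name, the fixed access-count threshold, and the absence of a capacity limit or eviction policy for the fast tier are all hypothetical simplifications.

```python
from collections import Counter


class TieredMemory:
    """Toy model: pages start in a slow (CXL-like) tier and are promoted
    to the fast tier after a fixed number of accesses (assumed policy)."""

    def __init__(self, promote_after: int = 3):
        self.promote_after = promote_after
        self.fast = set()      # pages currently in the fast tier
        self.hits = Counter()  # access counts for slow-tier pages

    def access(self, page: int) -> str:
        """Return which tier served this access."""
        if page in self.fast:
            return "fast"
        # Slow-tier pages remain directly accessible without migration.
        self.hits[page] += 1
        if self.hits[page] >= self.promote_after:
            self.fast.add(page)  # promote; later accesses hit the fast tier
        return "slow"


mem = TieredMemory(promote_after=3)
trace = [1, 1, 2, 1, 1, 3]
print([mem.access(p) for p in trace])
```

In this trace, page 1 is served from the slow tier three times, crosses the threshold, and is served from the fast tier on its fourth access, while pages 2 and 3 are touched once each and never migrate.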

thestryker

bit_user said: The way it's looking like CXL.mem would work is as a faster substitute for swapping. So, you migrate pages to/from the CXL module(s) on demand. Ideally, this would have some level of hardware support. An interesting difference between swapping to a storage device and CXL.mem is that it's not too painful to access data on the memory module without migrating to faster, in-package memory. You'd only want to migrate it, if it starts getting a lot of accesses.

CXL implementations have been the most interesting part of forthcoming hardware to me. I'm mostly looking forward to MTL just to see the specifics on how Intel is using it within the CPU itself.

bit_user

thestryker said: CXL implementations have been the most interesting part of forthcoming hardware to me. I'm mostly looking forward to MTL just to see the specifics on how Intel is using it within the CPU itself.

Huh? I saw them mention that it uses UCIe, but that has a "raw" mode. CXL feels a little heavy-weight for the kind of chiplet interconnects on Meteor Lake. Did you see them say they're using it, or is that just conjecture?

thestryker

bit_user said: Huh? I saw them mention that it uses UCIe, but that has a "raw" mode. CXL feels a little heavy-weight for the kind of chiplet interconnects on Meteor Lake. Did you see them say they're using it, or is that just conjecture?

Hot Chips last year is when they talked about it. I don't recall if they specified where all they were using it, but they're using the protocol over their own interconnects. I caught it while watching Ian Cutress doing live commentary.

I forgot Chips and Cheese covered it and they're saying IGP: https://chipsandcheese.com/2022/09/10/hot-chips-34-intels-meteor-lake-chiplets-compared-to-amds/