Expansion card lets you insert 512GB of extra DDR5 memory into your PCIe slot — CXL 2.0 AIC designed for TRX50 and W790 workstation motherboards

Get Tom's Hardware's best news and in-depth reviews, straight to your inbox.


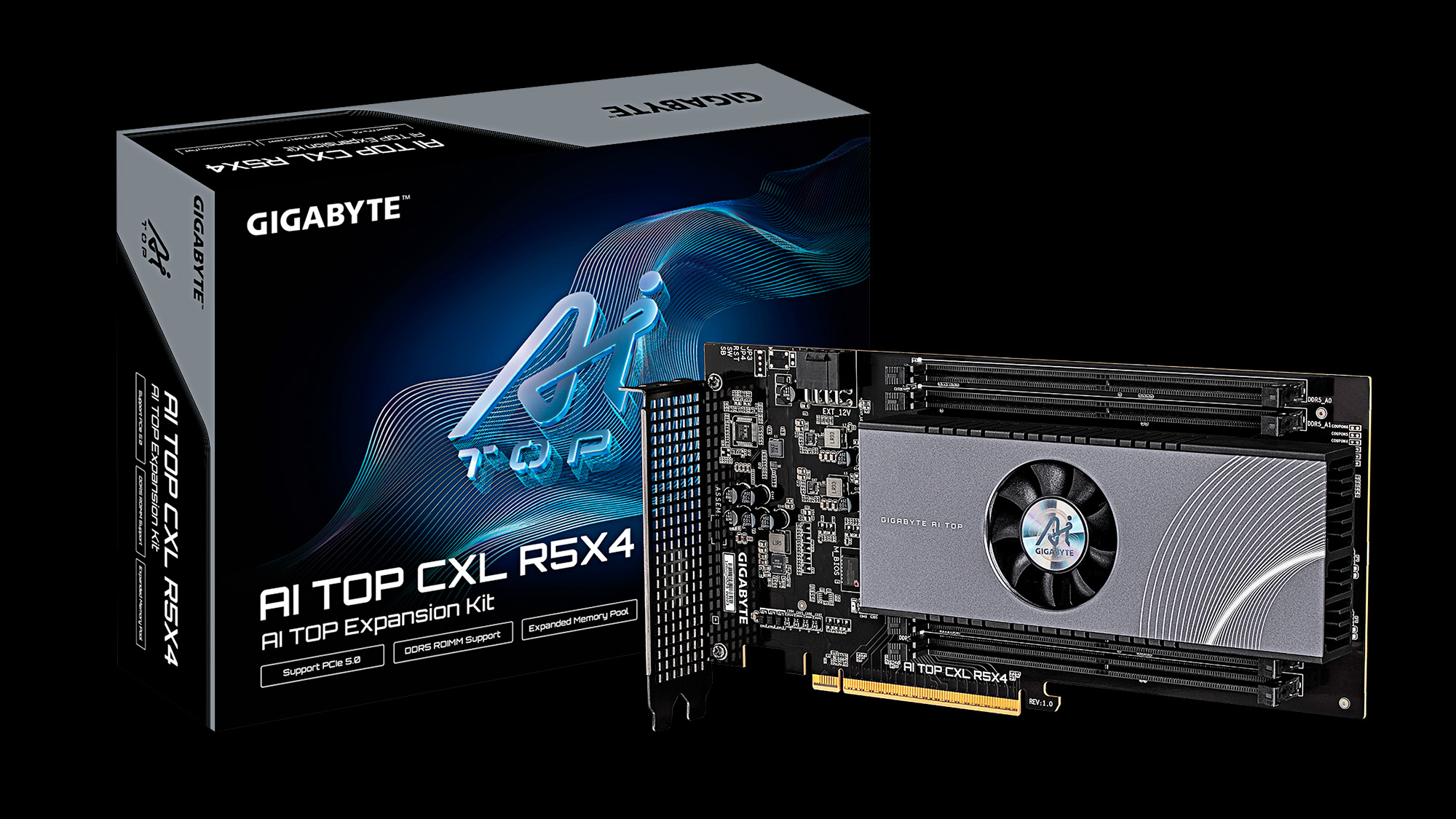

Workstation motherboards already have access to an immense amount of memory. However, additional memory can often be beneficial. Gigabyte has introduced the AI Top CXL R5X4, a specialized add-in card (AIC) designed to further increase the memory capacity of the company's TRX50 AI Top and W790 AI Top motherboards.

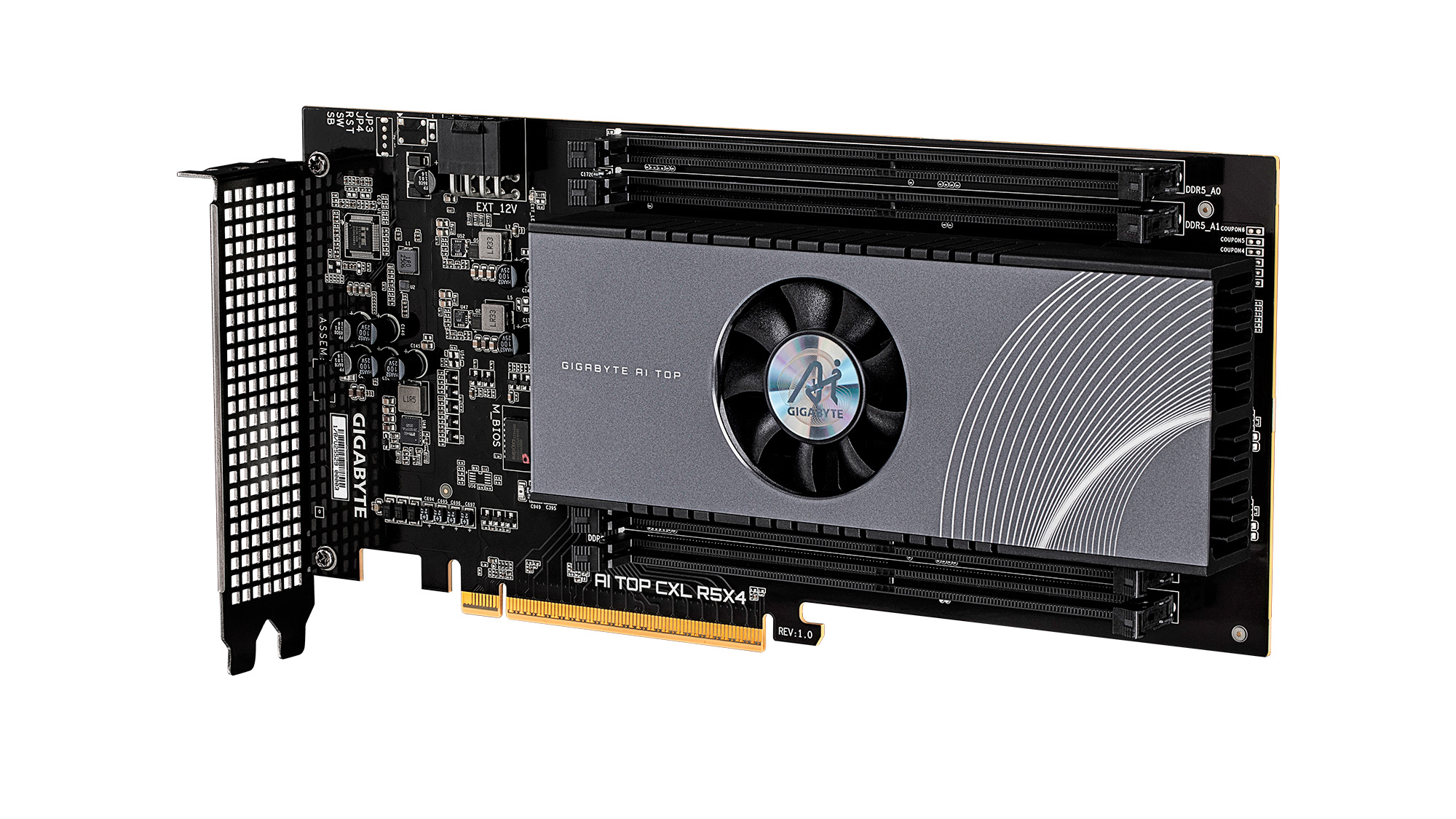

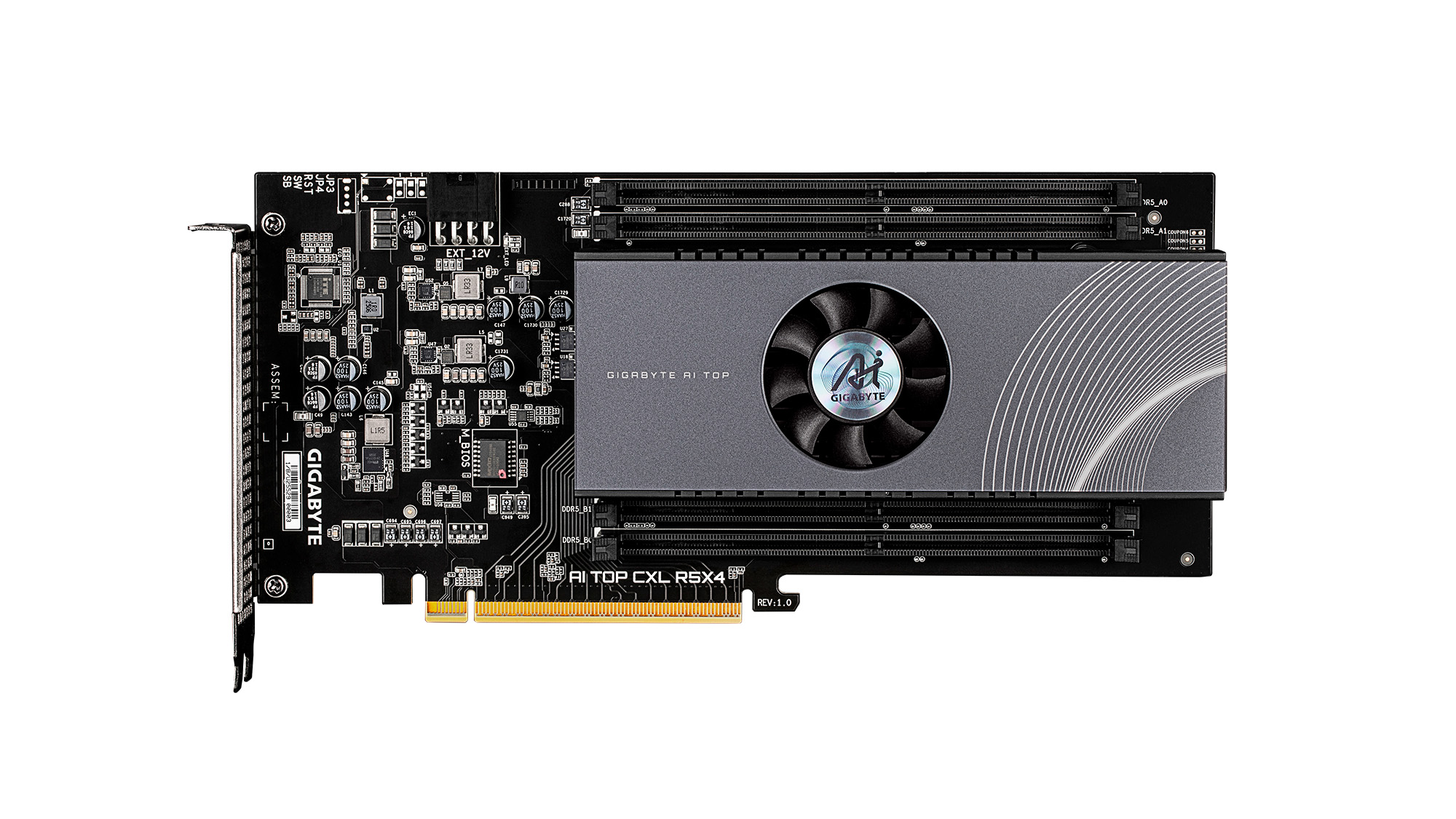

The AI Top CXL R5X4 may initially be mistaken for an entry-level gaming graphics card if you don't catch the presence of the memory slots. That's because the AIC, which measures 4.7 x 10 inches (12 x 25.4 cm), slots into a regular PCIe 5.0 x16 expansion slot. The AI Top CXL R5X4, which features a 16-layer HDI PCB, is based around the Microchip PM8712 controller, which is cooled by what Gigabyte calls the "CXL Thermal Armor," a full-metal thermal design. It also includes an AIO fan for active cooling, which expels air from the shroud to cool the nearby memory slots.

Other CXL solutions, such as the Samsung CMM-D modules, come with a fixed amount of memory. Gigabyte's AI Top CXL R5X4, however, features four DDR5 memory slots that support RDIMMs, allowing you to choose the amount of memory you want and accommodate future upgrades. With each slot supporting up to 128GB, the total memory capacity can reach 512GB on the AI Top CXL R5X4.

Gigabyte has not specified the supported data rate for the card. For reference, some RDIMMs have achieved data rates of up to DDR5-8800. The QVL for the AI Top CXL R5X4, last updated on April 25, shows support for DDR5 memory kits with data rates up to DDR5-6800.

While the AI Top CXL R5X4 is installed in a PCIe 5.0 x16 expansion slot, the card communicates with your system using the CXL 2.0/1.1 protocol, so you will need a CXL-capable platform such as the AMD TRX50 or Intel W790 chipset. Gigabyte specifically advertises the AI Top CXL R5X4 for the brand's TRX50 AI Top and W790 AI Top motherboards. However, we don't see any reason why the AIC wouldn't work on any motherboard with PCIe 5.0 and CXL support.

As far as power requirements go, the AIC relies on a standard 8-pin EXT12V power connector. Gigabyte has conveniently placed a small indicator LED beside the power connector to show whether the cable is plugged in correctly.

Gigabyte hasn't revealed when the AI Top CXL R5X4 (12ME-ATCXL54-101AR) will be available or how much it will cost.



Zhiye Liu is a news editor, memory reviewer, and SSD tester at Tom’s Hardware. Although he loves everything that’s hardware, he has a soft spot for CPUs, GPUs, and RAM.

-

bit_user

The article said: we don't see any reason why the AIC wouldn't work on any motherboard with PCIe 5.0 and CXL support.

The only other x86 machines that support CXL are the larger-socket Xeons from the Sapphire Rapids generation (2022) and beyond.

OS support for this should be interesting. Linux has had tiered memory support for a long time, but I don't know what kind of shape it's in. I hope they send Phoronix one of these to benchmark, though it's perhaps unlikely.
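On Linux, CXL Type 3 memory that has been onlined as system RAM typically shows up as a NUMA node that has memory but no CPUs (`numactl --hardware` will list it alongside the regular nodes). The sketch below is a minimal illustration, assuming the standard sysfs layout under `/sys/devices/system/node` (`nodeN/cpulist` and `nodeN/meminfo`); the function name `memory_only_nodes` is hypothetical, and the root path is a parameter so it can be pointed at a test fixture. A CPU-less node is only a hint, not proof, that the memory sits behind CXL.

```python
import os
import re


def memory_only_nodes(root="/sys/devices/system/node"):
    """Return {node_id: MemTotal in KiB} for NUMA nodes that have memory but no CPUs.

    Assumes the usual Linux sysfs layout (nodeN/cpulist, nodeN/meminfo).
    CPU-less nodes with memory are how CXL-attached RAM often appears.
    """
    result = {}
    if not os.path.isdir(root):
        return result
    for entry in sorted(os.listdir(root)):
        match = re.fullmatch(r"node(\d+)", entry)
        if not match:
            continue  # skip files such as "online" or "has_cpu"
        node_dir = os.path.join(root, entry)
        with open(os.path.join(node_dir, "cpulist")) as f:
            cpus = f.read().strip()  # empty string means no CPUs on this node
        total_kib = 0
        with open(os.path.join(node_dir, "meminfo")) as f:
            for line in f:
                # Lines look like: "Node 2 MemTotal:  134217728 kB"
                parts = line.split()
                if len(parts) >= 4 and parts[2] == "MemTotal:":
                    total_kib = int(parts[3])
        if not cpus and total_kib > 0:
            result[int(match.group(1))] = total_kib
    return result


if __name__ == "__main__":
    for node, kib in memory_only_nodes().items():
        print(f"node{node}: {kib / 1024**2:.1f} GiB, no CPUs (possibly CXL memory)")
```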

I wonder how close you can get to the nominal PCIe 5 rate of 64 GB/s in each direction.
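For reference, the nominal figure falls out of PCIe 5.0's 32 GT/s per-lane signaling rate and its 128b/130b line encoding. This accounts only for encoding overhead, so real-world throughput will land somewhat lower once packet headers, flow control, and other protocol overhead are included:

$$
B_{\text{x16}} = \frac{32\ \text{GT/s} \times 16\ \text{lanes} \times \tfrac{128}{130}}{8\ \text{bits/byte}} \approx 63.0\ \text{GB/s per direction}
$$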

Alvar "Miles" Udell

The article said: However, we don't see any reason why the AIC wouldn't work on any motherboard with PCIe 5.0 and CXL support.

I can give you a very good reason: most motherboards outside of HEDT markets like Threadripper don't have x16/x16 PCIe 5.0.

bit_user

Alvar Miles Udell said: I can give you a very good reason: Most motherboards outside of HEDT markets like Threadripper don't have x16/x16 5.0

Many desktop motherboards have featured PCIe 5.0 x16 since 2021 (when Intel launched LGA 1700 with it), but those don't support CXL.

Amdlova

Alvar Miles Udell said: I can give you a very good reason: Most motherboards outside of HEDT markets like Threadripper don't have x16/x16 5.0

But you have 300x NVMe ports :S

Phillip0web

Wouldn't this be a lot slower than regular memory on the board? Isn't PCIe 5 slower?

bit_user

Phillip0web said: Wouldn't this be a lot slower than regular memory on the board?

For sure, it would. According to this, CXL latency is expected to be 170-250 ns.

https://www.servethehome.com/compute-express-link-cxl-latency-how-much-is-added-at-hc34/

I think CXL 3.0 cuts down on that. But it won't work any miracles.
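A rough way to see why page placement matters is a weighted-average latency model: if a fraction h of memory accesses is served from local DRAM and the rest from CXL memory, the effective latency is h * t_local + (1 - h) * t_cxl. The sketch below uses the 170-250 ns CXL range cited above and assumes about 100 ns for local DRAM; that local figure is an illustrative assumption, not a measurement, and the model ignores caches, queuing, and bandwidth limits.

```python
def effective_latency_ns(local_fraction, local_ns, cxl_ns):
    """Weighted-average access latency for a two-tier (local DRAM + CXL) memory system."""
    if not 0.0 <= local_fraction <= 1.0:
        raise ValueError("local_fraction must be between 0 and 1")
    return local_fraction * local_ns + (1.0 - local_fraction) * cxl_ns


if __name__ == "__main__":
    LOCAL_NS = 100.0  # assumption: typical local DRAM latency, for illustration only
    for cxl_ns in (170.0, 250.0):  # CXL latency range cited in the thread
        for h in (0.5, 0.9, 0.99):
            latency = effective_latency_ns(h, LOCAL_NS, cxl_ns)
            print(f"CXL {cxl_ns:.0f} ns, {h:.0%} of accesses local -> {latency:.1f} ns")
```

The takeaway is that keeping the hot working set in local DRAM matters far more than the raw CXL latency: at a 99% local hit rate, even 250 ns CXL memory adds only a few nanoseconds on average in this simple model.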

Probably the main way that something like this would be used is page-migration. Pages can be swapped into local DRAM, either on first access or as they start to heat up. Cold pages can be demoted, sort of like virtual memory.
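The promotion/demotion idea can be illustrated with a toy two-tier model: pages accumulate access counts, the hottest pages are kept in a small fast tier (local DRAM) at the end of each epoch, and everything else is demoted to the slow tier (CXL memory). This is a simplified sketch under assumed parameters, not how the Linux kernel or the linked paper actually implements tiering; the class name `TieredMemory` and the halve-every-epoch decay policy are hypothetical choices made for illustration.

```python
from collections import Counter


class TieredMemory:
    """Toy two-tier memory: a small fast tier (local DRAM) and a large slow tier (CXL).

    At each rebalance, the hottest pages are promoted into DRAM and the rest are
    demoted. Access counts are halved every epoch so idle pages cool off.
    """

    def __init__(self, fast_capacity):
        self.fast_capacity = fast_capacity  # number of pages that fit in local DRAM
        self.fast = set()                   # pages currently resident in local DRAM
        self.heat = Counter()               # recent access counts per page

    def access(self, page):
        """Record an access and return which tier served it."""
        self.heat[page] += 1
        return "dram" if page in self.fast else "cxl"

    def rebalance(self):
        """End of epoch: keep the hottest pages in DRAM, demote the rest, decay heat."""
        hottest = {page for page, _ in self.heat.most_common(self.fast_capacity)}
        promoted = hottest - self.fast
        demoted = self.fast - hottest
        self.fast = hottest
        # Halve every counter so old activity fades; drop pages that reach zero.
        self.heat = Counter({p: c // 2 for p, c in self.heat.items() if c // 2 > 0})
        return promoted, demoted


if __name__ == "__main__":
    mem = TieredMemory(fast_capacity=2)
    for page in [1, 1, 1, 2, 2, 3]:
        mem.access(page)  # everything starts in the CXL tier
    promoted, demoted = mem.rebalance()
    print(sorted(promoted), sorted(demoted))  # [1, 2] []
```

Real systems sample accesses (for example via page-table scanning or hardware hints) rather than counting every one, but the promote-hot/demote-cold loop is the same basic shape.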

Here's a resource I found, concerning the topic. I haven't really looked at it, so I have no idea whether you'd find it helpful. It does at least touch on all the keywords and core concepts.

https://jiyuan.is/papers/asplos25-m5.pdf

Phillip0web said: Isn't pcie 5 slower?

As for the bandwidth question, PCIe 5.0 x16 is on the order of how fast a desktop dual-channel memory setup runs. Yes, it's a bit slower if you're accessing mostly in one direction. Of course, servers (currently) have up to 12-channel memory subsystems. However, the nice thing about CXL is that it scales. So, if you have a server platform with 64 CXL lanes, you can add 4 of these cards and get 4x linear scaling.
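To put rough numbers on that comparison: DDR5 moves 8 bytes per transfer on each 64-bit channel, so peak bandwidth is the data rate (MT/s) times 8 bytes times the number of channels. The sketch below compares a PCIe 5.0 x16 link (nominal, after 128b/130b encoding) with a dual-channel DDR5-6400 desktop and a 12-channel DDR5-4800 server. Those specific data rates are illustrative assumptions, the four-card line assumes perfectly linear scaling, and every figure is a theoretical peak rather than a measurement.

```python
def pcie_gb_per_s(gt_per_s, lanes, encoding=128 / 130):
    """Nominal PCIe bandwidth in GB/s, one direction, counting only line-encoding overhead."""
    return gt_per_s * lanes * encoding / 8


def ddr_gb_per_s(mt_per_s, channels, bytes_per_transfer=8):
    """Theoretical peak DRAM bandwidth in GB/s, assuming 64-bit (8-byte) channels."""
    return mt_per_s * bytes_per_transfer * channels / 1000


if __name__ == "__main__":
    link = pcie_gb_per_s(32, 16)  # PCIe 5.0: 32 GT/s per lane, x16 link
    rows = [
        ("PCIe 5.0 x16, one direction", link),
        ("PCIe 5.0 x16, both directions", 2 * link),
        ("Dual-channel DDR5-6400 (assumed desktop)", ddr_gb_per_s(6400, 2)),
        ("12-channel DDR5-4800 (assumed server)", ddr_gb_per_s(4800, 12)),
        ("Four x16 cards, one direction (ideal scaling)", 4 * link),
    ]
    for label, gbps in rows:
        print(f"{label:<48}{gbps:7.1f} GB/s")
```

Note that DRAM bandwidth is shared between reads and writes, while the PCIe figure applies independently to each direction. That is why a mostly one-directional workload sees roughly 63 GB/s from the card against about 102 GB/s for the assumed dual-channel setup, while a balanced read/write mix narrows the gap.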

What's really cool is that later revisions of CXL support switching. So, it lets you aggregate large amounts of memory in a pool that might be larger than the total amount of DRAM you could connect directly to the CPUs.

What's even better is that CXL defines cache-coherent access between host CPUs and accelerators, meaning a GPU could pull data directly from these cards without having to go through the host CPU. Cache-coherent means that the CPU can update data on the card without having to worry about whether the GPU will see the latest version (or vice versa).
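The memory-pooling idea that switching enables can be pictured as a shared pool of capacity that is handed out to hosts and reclaimed at run time. The toy allocator below is only a conceptual sketch: the class and method names and host names are hypothetical, capacities are in GB to match the article, and real pooling involves the CXL fabric manager, device partitioning, and OS memory hot-plug, none of which are modeled here.

```python
class MemoryPool:
    """Toy model of a switch-attached memory pool shared by several hosts.

    Hosts borrow capacity and give it back later; the pool never hands out
    more than it physically has.
    """

    def __init__(self, capacity_gb):
        self.capacity_gb = capacity_gb
        self.leases = {}  # host name -> GB currently assigned to that host

    @property
    def free_gb(self):
        return self.capacity_gb - sum(self.leases.values())

    def borrow(self, host, gb):
        """Assign gb of pooled memory to host; return False if the pool is short."""
        if gb <= 0 or gb > self.free_gb:
            return False
        self.leases[host] = self.leases.get(host, 0) + gb
        return True

    def give_back(self, host, gb):
        """Return up to gb from host's lease to the pool; return the amount released."""
        if gb <= 0:
            return 0
        held = self.leases.get(host, 0)
        released = min(held, gb)
        if released == held:
            self.leases.pop(host, None)  # lease fully returned
        else:
            self.leases[host] = held - released
        return released


if __name__ == "__main__":
    pool = MemoryPool(capacity_gb=2048)  # e.g., four hypothetical 512GB cards behind a switch
    print(pool.borrow("hpc-a", 1536))    # True
    print(pool.borrow("hpc-b", 1024))    # False: only 512GB left
    pool.give_back("hpc-a", 1024)
    print(pool.borrow("hpc-b", 1024))    # True
    print(pool.free_gb)                  # 512
```

The point of the sketch is the run-time reassignment: capacity that one host no longer needs can be lent to another without moving any physical DIMMs.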

abufrejoval

bit_user said: For sure, it would. According to this, CXL latency is expected to be 170-250 ns.

Reminds me of how putting DRAM on plug-in cards used to be the 'normal' thing.

RAM always took lots of space, even when it was very low capacity: a 64KB magnetic-core memory card was a full-sized Unibus card in a PDP-11. Those invented the 19" racks.

S-100 µ-computer systems also put RAM into separate slots by default; the CPU card really just had the CPU with its required support circuitry.

Apple ]

bit_user said: Probably the main way that something like this would be used is page-migration. Pages can be swapped into local DRAM, either on first access or as they start to heat up. Cold pages can be demoted, sort of like virtual memory.

Here's a resource I found, concerning the topic. I haven't really looked at it, so I have no idea whether you'd find it helpful. It does at least touch on all the keywords and core concepts.

https://jiyuan.is/papers/asplos25-m5.pdf

CXL has a very long-term vision, and with that come the shortcomings you get when you try to design very far ahead.

One of the main drivers was that in a typical HPC environment, you may find one machine with plenty of leftover RAM, running a workload with high locality and a small memory footprint, while another really needs to access a huge dataset with poor locality but can't afford swapping to disk, or even to NV-RAM, without slowing down by orders of magnitude.

So CXL allows PCIe-compatible scale-out, but within a multi-master (multi-root) environment that changes allocations at run time. The idea is to lend or borrow resources like RAM to and from other machines in the same data center, especially when they are close by, or to use one of the optical interconnects CXL was envisioned for to overcome the energy and latency issues of copper that mount as distances grow. It's also designed to fit with UCIe, which carries PCIe semantics and abstractions between dies in a package, so scaling can be done both up and down.

Of course, it's not just RAM: storage, GPUs, or any other type of PCIe device could be elevated via CXL to out-of-the-box sharing and connectivity, but that adds layers, abstractions, and indirections, all of which cost raw speed and power.

That is at least one reason why proprietary links like NVLink, or whatever the Chinese are cooking up, aim either for better performance or for a smaller patent pool to pay licenses to.

And yes, either your application is directly aware of the resources and their topology and migrates resources like storage (including RAM) to wherever they best suit the workload, or it requires proxies/agents to do that for it. Traditionally that would be an OS responsibility; in scale-out it's a new layer, because you can't teach 1970s Unixoids with a God complex new tricks.

bit_user said: As for the bandwidth question, PCIe 5.0 x16 is on the order of how fast a desktop dual-channel memory setup runs. Yes, a bit slower, if you're only accessing in mostly one direction. Of course, servers (currently) have up to 12-channel memory subsystems. However, the nice thing about CXL is that it scales. So, if you have a server platform, with 64 CXL lanes, you can add 4 of these cards and get 4x linear scaling.

What's really cool is that later revisions of CXL support switching. So, it lets you aggregate large amounts of memory in a pool that might be larger than the total amount of DRAM you could connect directly to the CPUs.

What's even better is that CXL defines cache-coherent access between host CPUs and accelerators, meaning a GPU could pull data directly from these cards, without having to go to the host CPU. Cache-coherent means that the CPU can update data on the card, without having to worry about whether the GPU will see the latest version (or vice versa).

Yes, all that rich functionality with all the proper abstractions... and the overhead that implies. Nvidia will claim it's all futile, wasted effort and that their proprietary fabric is the only path worth following; the hyperscalers cook up their own secret sauces or even build their own optical switching interconnects (Google).

For you as a consumer, I'd say these CXL RAM cards will only become interesting once they wind up used and really cheap on eBay.

Alvar "Miles" Udell

bit_user said: Many desktop motherboards have featured PCIe 5.0 x16 since 2021 (when Intel launched LGA 1700 with it), but those don't support CXL.

But not x16/x16: no board other than Threadripper or EPYC supports it, and even there not every CPU does. Even then, not all of them support CXL, and even on boards that do, not every PCIe slot supports CXL, as with the Gigabyte TRX50 AI TOP.

Even if desktop boards did expose CXL support, you'd be limiting the performance of anything stronger than a 5090 by running it at x8 (TechPowerUp's PCIe scaling test shows the 5090 is -just- at the limit of PCIe 5.0 x8), as well as hampering the performance of the AIC.

das_stig

Maybe we need to go back in time...

1. The motherboard was just lots of expansion slots.

2. Cartridge-style CPU sockets that conform to an industry-standard connection, to support Intel, AMD, ARM, Nvidia, and Chinese chips.

3. A couple of dedicated memory slots for memory riser cards.

4. A couple of dedicated NVMe riser card slots.

5. Various x16, x8, and x4 slots to plug in whatever controller you need: audio, Wi-Fi, LAN, SATA, SAS. In my opinion, x1 slots are obsolete.

If you don't need audio, Wi-Fi, or 10 USB ports, why have them eating up PCIe lanes and so on?

Imagine a dual-socket E-ATX board with all those lovely slots to fill?

Amdlova

@das_stig My old P8Z68-V has more expansion support than my new H670 board... but the new one has 3 NVMe slots... just the cables to make those NVMe slots useful would cost as much as two boards. The old system is more responsive; it doesn't have tons of CPU power or I/O, but it's enough to put the 13600T to sleep!