Nvidia's first CPU+GPU chips come to AI workstation desktop PCs — Grace Hopper Superchip systems start at $41,500

Yes, it can run Crysis.

Although Nvidia's flagship CPU+GPU chip, the GH200, is intended for data centers and AI, GPTshop.ai sells it as part of an AI workstation in a desktop form factor. Anyone can now get Nvidia's Grace CPU and Hopper GPU, provided they have at least $41,500 for the base model of the GH200 system.

A GH200-powered desktop workstation looks like complete overkill on paper. The Grace CPU half of GH200 has 72 cores and comes with 480GB of LPDDR5X memory, which isn't quite as much as the 1TB you can install on Threadripper 7000, but it's still a respectable amount. The show's real star is the Hopper-based H200 chip, which measures a massive 814 mm² and has 16,896 CUDA cores. Interestingly, GPTshop.ai offers both the HBM3 version of GH200 and the newer HBM3e version, which includes more VRAM.

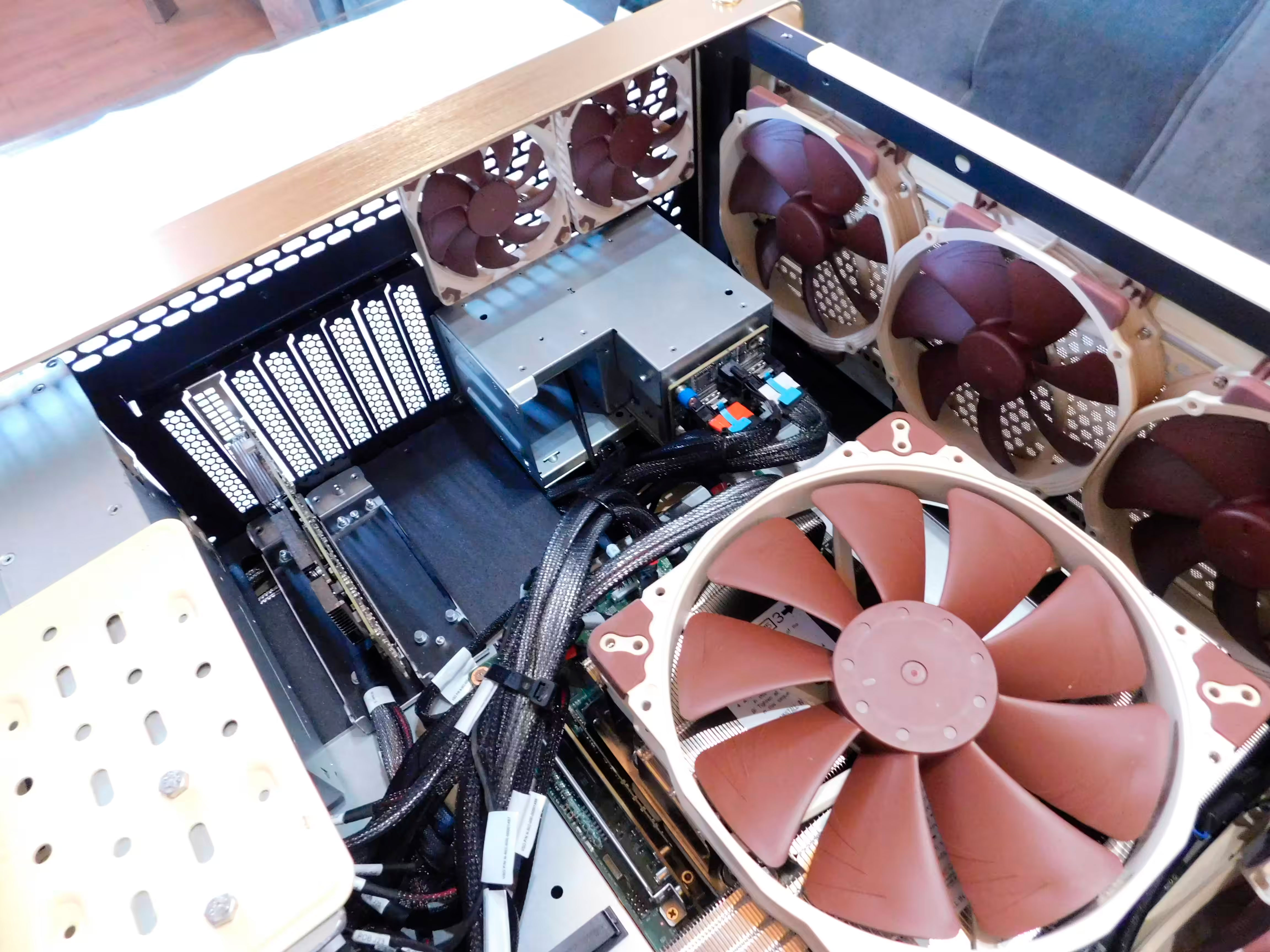

The system also features Noctua fans and cooling. Optional add-ons include Nvidia's Bluefield-3 and ConnectX-7 networking cards, 8TB SSDs, 30TB HDDs, a mouse and keyboard, and even an RTX 4060, presumably for graphics output or access to ray tracing, which is one of the few features that Hopper doesn't support. Your only OS option is Ubuntu Server; Windows isn't a great operating system for Arm CPUs, let alone an Arm data center CPU.

Regarding performance for this GH200-equipped system, we can be confident that its CPU is quite potent. Linux-focused publication Phoronix tested the workstation desktop's Grace CPU in various workloads. Although Grace was slower on average than top-end chips from Intel and AMD, it won more benchmarks than any other chip: 15 against Emerald Rapids and 13 against Bergamo and Genoa, out of 23 benchmarks.

Meanwhile, we can't be sure of how well the H200 half of the device will perform as the Phoronix review didn't test the GPU. Still, the chip's on-paper specifications strongly imply it's a heavy hitter, which isn't surprising since there's so much demand for H200 (and pretty much every AI-capable GPU these days, for that matter).

Although $50,000 is certainly no small amount of change for a workstation desktop, it looks reasonable considering the H100, the predecessor of the H200, goes for $40,000 on its own. For $50,000, getting a faster GPU plus a top-end server CPU inside a prebuilt system sounds like a pretty good deal.


Matthew Connatser is a freelance writer for Tom's Hardware US. He writes articles about CPUs, GPUs, SSDs, and computers in general.

-

ezst036 Well, with Apple no longer selling $50,000 computers someone had to step in and fill that gap! I just went to the Apple website, the highest I can push up an Apple Pro tower with every hardware upcharge is around $12,000.

Nvidia was even kind enough to put it into a sort of cheese grating case. Albeit, gold-ish instead of silver. (The Nvidia tower looks closer to the towers from a decade ago IMHO)

-

vanadiel007 Well, even if I had a lot of greenbacks to burn I would refuse to purchase this because of the poor cable management.

If you pay +$40,000 for a desktop computer it should look like it, and not look like it's wired up by a 5 year old.

-

williamcll Systemsincode said: do they sell the case separate? its lovely.

The Case is an InWin Dubil

-

BoobleKooble ezst036 said: Well, with Apple no longer selling $50,000 computers someone had to step in and fill that gap! I just went to the Apple website, the highest I can push up an Apple Pro tower with every hardware upcharge is around $12,000.

Nvidia was even kind enough to put it into a sort of cheese grating case. Albeit, gold-ish instead of silver. (The Nvidia tower looks closer to the towers from a decade ago IMHO)

tons of companies sell such systems, at least in terms of price tag.

If you are trying to tie the price tag to the ecosystem of standardized component based systems that are recognizable to normal consumer or low end workstation experienced users (ie built around socketed CPU and PCIE bus) most extremely expensive systems derive their price tag from comically expensive accelerator cards

for example, the tippy tip toppest range of Xilinx FPGA accelerators usually commands ~$100,000 per part price at any given point in time.

Thus you could easily build >$100,000 system simply by incorporating exactly 1 such card into its design.