Nvidia's Grace server CPU trades blows with AMD and Intel in detailed review -- outperforms Bergamo, Genoa, and Emerald Rapids in over half of the benchmarks

Slower on average though.

Nvidia's Grace server CPU appears to be very competitive, according to Phoronix's review of the GH200, which includes a single Grace chip. Although Nvidia's 72-core Arm CPU lagged behind AMD's and Intel's flagships in overall performance, it won more individual benchmarks than it lost against both the top-end Epyc 9754 and the Xeon Platinum 8592+. With more optimization for the Arm architecture, Grace could prove to be a very potent datacenter processor.

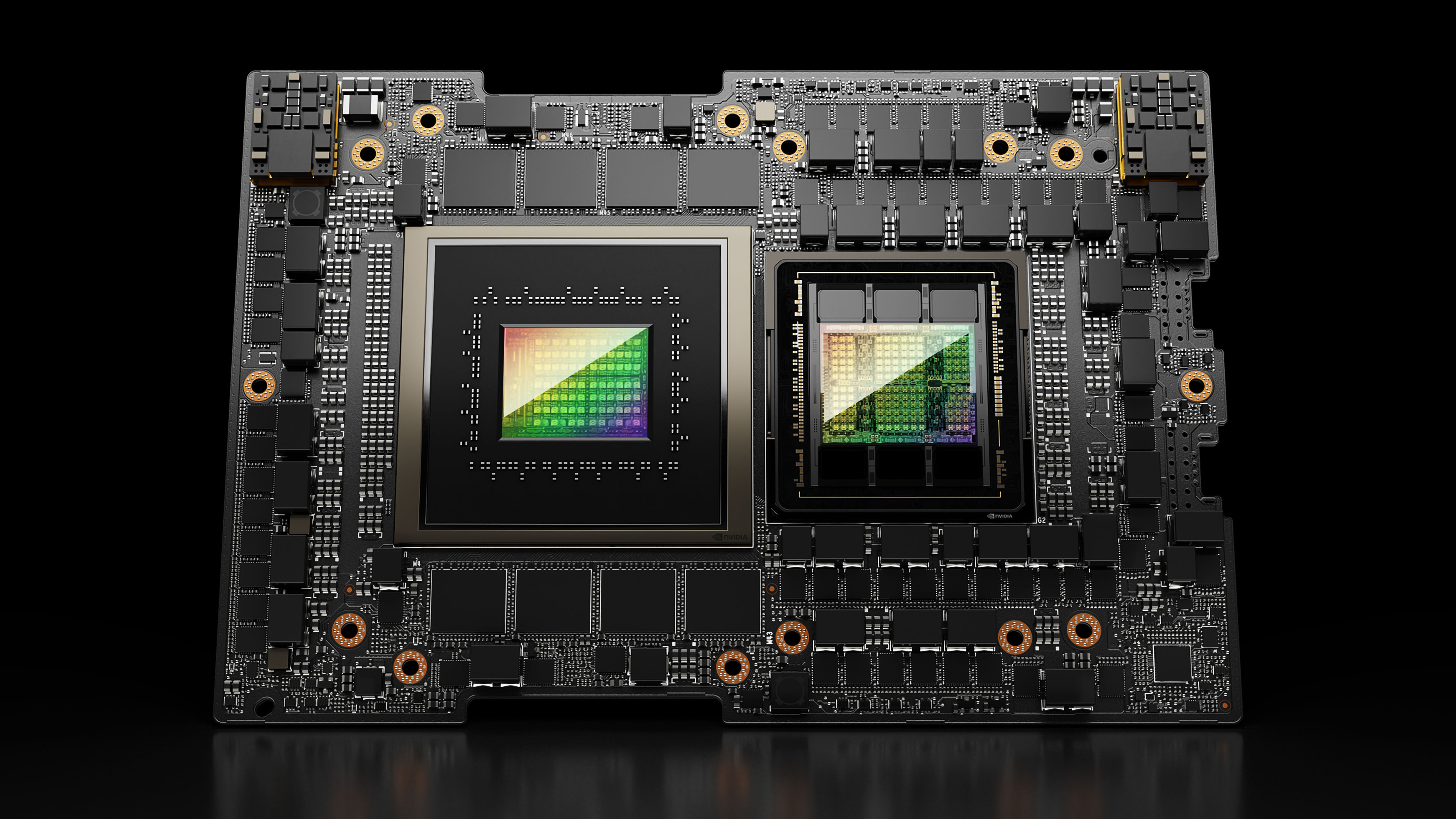

GH200 includes a Hopper GPU and a 72-core Grace CPU with 480GB of LPDDR5X RAM. Since Nvidia doesn't sell single Grace chips on their own, GH200 is really the only device that can be tested to ascertain the performance of just one Grace CPU. Phoronix obtained access to a GH200 via GPTshop.ai, but only remotely. No power statistics were exposed to the remote computer, and since the publication couldn't see power draw from the wall, no power figures are quoted in the review.

The benchmarks were conducted in Linux, the most common server operating system. The review includes comparisons to many different CPUs, including dual-socket setups. In the tables below, we've taken the results comparing Grace to AMD's flagship Bergamo-based Epyc 9754 and Intel's top-end Emerald Rapids Xeon Platinum 8592+.

| Benchmark (higher is better) | Grace-Hopper GH200 | Epyc 9754 | Xeon Platinum 8592+ |
| --- | --- | --- | --- |
| High Performance Conjugate Gradient | 41.69 | 25.89 | 35.42 |
| Algebraic Multi-Grid Benchmark 1.2 | 1,997,929,111 | 2,291,049,667 | 1,839,912,667 |
| LULESH 2.0.3 | 23,185.18 | 22,356.75 | 39,468.91 |
| Xmrig 6.18.1 | 17,253 | 29,356.1 | 40,381.2 |
| John The Ripper 2023.03.14 | 68,817 | 204,828 | 178,108 |
| ACES DGEMM 1.0 | 17.94 | 43.68 | 29.14 |
| GraphicsMagick 1.3.38 Sharpen | 1,363 | 924 | 749 |
| GraphicsMagick 1.3.38 Enhance | 1,761 | 1,451 | 1,192 |
| Graph500 3.0 Median | 1,239,790,000 | 1,147,090,000 | 1,238,670,000 |
| Graph500 3.0 Max | 1,315,650,000 | 1,184,510,000 | 1,304,200,000 |
| Stress-NG 0.16.04 Matrix | 512,759.08 | 552,067.04 | 301,894.53 |
| Stress-NG 0.16.04 Matrix 3D | 17,483.02 | 8,009.21 | 13,854.38 |

These tests were all measured in different units, ranging from GFLOPs to calculations per second to points, and in each of them a higher score is better. Most of Grace's losses are contained in this spread of benchmarks, which is why the CPU might not look that impressive at first glance. Still, there are workloads where Grace has big leads, like High Performance Conjugate Gradient and GraphicsMagick.

| Benchmark | Grace-Hopper GH200 | Epyc 9754 | Xeon Platinum 8592+ |
| --- | --- | --- | --- |
| Rodinia 3.1 (Lower is better) | 30.31 | 25.15 | 39.89 |
| NWChem 7.0.2 (Lower is better) | 1,403.5 | 1,700.8 | 1,850.8 |
| Xcompact3d Incompact3d (Lower is better) | 254.49 | 493.5 | 323.53 |
| Xcompact3d Incompact3d (Lower is better) | 9.81 | 9.03 | 10.18 |
| Godot Compilation 4.0 (Lower is better) | 139.1 | 118.25 | 111.96 |
| Primesieve 8.0 (Lower is better) | 35.49 | 21.76 | 49.06 |
| Helsing 1.0-beta (Lower is better) | 67.61 | 48.95 | 84.95 |
| DuckDB 0.9.1 IMDB (Lower is better) | 92.08 | 147.6 | 96.87 |
| DuckDB 0.9.1 TPC-H Parquet (Lower is better) | 148.76 | 177.13 | 134.73 |
| RawTherapee (Lower is better) | 46.72 | 66.13 | 45.53 |
| Timed Gem 5 Compilation 23.0.1 (Lower is better) | 180.62 | 208.58 | 174.18 |
| Overall Average Performance (Higher is better) | 2,175.03 | 2,459.11 | 2,242.9 |

Grace picks up more steam in this second set of tests scored on completion time, where lower is better. In the end, the single Grace chip racks up 15 wins against Emerald Rapids and 13 wins against both Bergamo and Genoa (which isn't included in the table, but the results are very similar). There were even some cases where Nvidia's server CPU beat AMD's or Intel's in dual-socket systems. Grace was also very fast compared to Ampere's aging Altra Max M128-30, which also uses Arm.
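The win counts can be checked against the rows shown in the two tables. The sketch below is only an illustration: it transcribes the table values, treats the first table as higher-is-better and the second as lower-is-better, and leaves out the overall average row. The shortened benchmark names and the `grace_wins` helper are our own labels, not anything from Phoronix, and Genoa is not covered because its results are not in the tables.

```python
# Rows transcribed from the article's two tables:
# (name, Grace GH200, Epyc 9754, Xeon Platinum 8592+).
HIGHER_IS_BETTER = [
    ("HPCG", 41.69, 25.89, 35.42),
    ("AMG 1.2", 1_997_929_111, 2_291_049_667, 1_839_912_667),
    ("LULESH 2.0.3", 23_185.18, 22_356.75, 39_468.91),
    ("Xmrig 6.18.1", 17_253, 29_356.1, 40_381.2),
    ("John The Ripper", 68_817, 204_828, 178_108),
    ("ACES DGEMM 1.0", 17.94, 43.68, 29.14),
    ("GraphicsMagick Sharpen", 1_363, 924, 749),
    ("GraphicsMagick Enhance", 1_761, 1_451, 1_192),
    ("Graph500 Median", 1_239_790_000, 1_147_090_000, 1_238_670_000),
    ("Graph500 Max", 1_315_650_000, 1_184_510_000, 1_304_200_000),
    ("Stress-NG Matrix", 512_759.08, 552_067.04, 301_894.53),
    ("Stress-NG Matrix 3D", 17_483.02, 8_009.21, 13_854.38),
]
LOWER_IS_BETTER = [
    ("Rodinia 3.1", 30.31, 25.15, 39.89),
    ("NWChem 7.0.2", 1_403.5, 1_700.8, 1_850.8),
    ("Xcompact3d (first row)", 254.49, 493.5, 323.53),
    ("Xcompact3d (second row)", 9.81, 9.03, 10.18),
    ("Godot Compilation 4.0", 139.1, 118.25, 111.96),
    ("Primesieve 8.0", 35.49, 21.76, 49.06),
    ("Helsing 1.0-beta", 67.61, 48.95, 84.95),
    ("DuckDB IMDB", 92.08, 147.6, 96.87),
    ("DuckDB TPC-H Parquet", 148.76, 177.13, 134.73),
    ("RawTherapee", 46.72, 66.13, 45.53),
    ("Timed Gem5 Compilation", 180.62, 208.58, 174.18),
]

EPYC_9754, XEON_8592 = 1, 2  # column index within each row's scores


def grace_wins(rival_column):
    """Count the rows where Grace (column 0) beats the chosen rival."""
    wins = 0
    for _name, *scores in HIGHER_IS_BETTER:
        wins += scores[0] > scores[rival_column]
    for _name, *scores in LOWER_IS_BETTER:
        wins += scores[0] < scores[rival_column]
    return wins


total = len(HIGHER_IS_BETTER) + len(LOWER_IS_BETTER)
print(f"vs Epyc 9754: {grace_wins(EPYC_9754)} of {total}")
print(f"vs Xeon 8592+: {grace_wins(XEON_8592)} of {total}")
```

On these 23 rows the tally comes out to 13 wins against the Epyc 9754 and 15 against the Xeon Platinum 8592+, which matches the figures quoted above.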

However, because many of Grace's losses were pretty big, it trails on average: the Emerald Rapids-powered Xeon Platinum 8592+ comes out about 3% ahead, and the Bergamo-based Epyc 9754 is about 13% faster, with the Genoa-based Epyc 9654 ahead by a similar margin. According to Phoronix, "there still are some workloads not too well optimized for AArch64 [Arm]," which is a key reason why when Grace lost, it often lost by a large margin.
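Percentages like these depend on which chip is taken as the baseline, so "X is 13% faster" and "Grace is 13% slower" are not the same statement. The short sketch below works through the arithmetic using the overall averages from the table; the function names are ours and purely illustrative.

```python
# Overall average scores from the table (higher is better).
GRACE = 2175.03
EPYC_9754 = 2459.11
XEON_8592 = 2242.9


def percent_faster(score, baseline):
    """How far `score` is above `baseline`, as a percentage of the baseline."""
    return (score / baseline - 1.0) * 100.0


def percent_slower(score, baseline):
    """How far `score` is below `baseline`, as a percentage of the baseline."""
    return (1.0 - score / baseline) * 100.0


for name, rival in (("Epyc 9754", EPYC_9754), ("Xeon 8592+", XEON_8592)):
    print(
        f"{name}: {percent_faster(rival, GRACE):.1f}% faster than Grace; "
        f"Grace is {percent_slower(GRACE, rival):.1f}% slower"
    )
```

With Grace as the baseline, the Epyc 9754 is about 13.1% faster and the Xeon about 3.1% faster; with the rival as the baseline, Grace is about 11.6% and 3.0% slower, respectively.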

It's hard to evaluate how good Grace will be as a server CPU based solely on performance, though, as efficiency is also a key metric. However, we know that the Grace superchip combining two Grace CPUs has a TDP of 500 watts, implying that a single Grace likely isn't using anything more than 250 watts. Early benchmarks for the superchip certainly suggest it's very efficient, which will probably also be true for single-chip configurations.


Matthew Connatser is a freelancing writer for Tom's Hardware US. He writes articles about CPUs, GPUs, SSDs, and computers in general.


bit_user:

The Geomean shows a single, 72-core Grace achieving 2175, while a single, 96-core AMD EPYC 9654 achieves 2499. So, that's 14.9% faster with 33.3% more cores (and 166.7% more threads).

What's even more impressive is how well it does against the 128-core/256-thread Zen 4C-based Bergamo (EPYC 9754), which is only 13.1% faster with 77.8% more cores (255.6% more threads).

Not bad, Grace!
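One rough way to restate bit_user's comparison is to divide each geomean by the core count. The sketch below does that with the figures from the comment and from the article's table (2,459 for the EPYC 9754, rounded). Score per core is our own crude normalization, not a metric Phoronix reports, and it ignores SMT, clock speeds and power.

```python
# (geomean score, physical cores), taken from the comment and the article's table.
SYSTEMS = {
    "Grace": (2175, 72),
    "EPYC 9654": (2499, 96),
    "EPYC 9754": (2459, 128),
}


def score_per_core(score, cores):
    """Geomean points per physical core; a deliberately crude normalization."""
    return score / cores


for name, (score, cores) in SYSTEMS.items():
    print(f"{name}: {score_per_core(score, cores):.2f} points per core")
```

By this measure Grace lands at roughly 30 points per core, against about 26 for the EPYC 9654 and about 19 for the EPYC 9754.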

Gururu, replying to bit_user:

Is there a particular test that informs best a linear relationship between score and number of cores? I wonder if there is some kind of diminishing return.

bit_user, replying to Gururu:

This is a good question and I'm not aware of anyone having done that analysis. I think Michael (the author at Phoronix) knows which tests tend to scale well.

An interesting distinction might also be whether they scale well to multiple, physical CPUs. That subset is easy to eyeball by just looking for tests where 2P configurations score about double (or half) of a 1P test setup. Among these, I see:

- Algebraic Multi-Grid Benchmark 1.2
- Xcompact3d Incompact3d 2021-03-11 - Input: input.i3d 193 Cells Per Direction
- LULESH 2.0.3
- Xmrig 6.18.1 - Variant: Monero - Hash Count: 1M
- John The Ripper 2023.03.14 - Test: bcrypt
- Primesieve 8.0 - Length: 1e13
- Helsing 1.0-beta - Digit Range: 14 digit
- Stress-NG 0.16.04 - Test: Matrix Math

I think categorizing the benchmarks in Phoronix Test Suite (which allegedly takes a couple months to run in its entirety!), based on things like multi-processor scalability, sensitivity to memory bottlenecks, intensity of disk I/O, etc. would be fertile ground for someone to tackle. This would also let you easily run just such a sub-category, based on what aspect you want to stress.

It would also be interesting to do some clustering analysis of the benchmarks in PTS, so that you could skip those which tend to be highly-correlated and run just the minimal subset needed to fully-characterize a system.

Something else that tends to come up is how heavily a benchmark uses vector instructions and whether it contains hand-optimized codepaths for certain architectures.

As PTS is an open source project, it might be possible to add some of these things (or, at least enough logging that some of these metrics can be computed), but I have no idea how open Michael might be for such contributions to be upstreamed.
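The "eyeball" check described in this comment, looking for tests where a 2P result is about double (or half) the 1P result, could be automated along these lines. This is only a sketch: the function names and the 15% tolerance are assumptions of ours, and the numbers in the usage lines are made-up placeholders rather than Phoronix results.

```python
def scaling_factor(one_p, two_p, higher_is_better=True):
    """Speedup of the 2P result over the 1P result (2.0 means perfect scaling)."""
    return two_p / one_p if higher_is_better else one_p / two_p


def scales_across_sockets(one_p, two_p, higher_is_better=True, tolerance=0.15):
    """True if the 2P speedup is within `tolerance` (as a fraction) of 2x."""
    factor = scaling_factor(one_p, two_p, higher_is_better)
    return abs(factor - 2.0) <= 2.0 * tolerance


# Placeholder values for illustration only, not measured data.
print(scales_across_sockets(100.0, 195.0))                        # throughput nearly doubles
print(scales_across_sockets(100.0, 120.0))                        # throughput barely moves
print(scales_across_sockets(60.0, 31.0, higher_is_better=False))  # run time nearly halves
```

Tagging each test with a flag like this would be one way to build the "scales across sockets" sub-category the comment proposes.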

K.A.R, replying to bit_user:

It's still slower than the 9554 which has 64c.

bit_user, replying to K.A.R:

Yes, the GeoMean is indeed lower (which is what I was talking about).

There are some outliers, though. In each direction, to be fair, but things like Xmrig (Monero) seem like they'd probably have some x86 optimizations and maybe not ones for ARM. John The Ripper (bcrypt) and ACES DGEMM seem like other cases where a few, well-placed optimizations could tip the balance more in ARM's favor.

It would be nice if Phoronix would be able to exclude benchmarks with x86-specific optimizations that don't also have ARM-optimized paths. However, given some comments in that article, it's clear this was not done.

That said, I think the V2 still does better on energy-efficiency than Zen 4, according to the prior article. Ultimately, Grace is just there as a support chip for Hopper. So, I think it doesn't need the best per-core performance, in order to serve that role - efficiency is more important.

Also, Grace can scale up to at least 32 CPUs per server. As far as I know the most x86 will scale is in the top of the Xeon range, where you can build only 8P systems. EPYC only scales to 2P.

Pierce2623, replying to bit_user:

He definitely knows which tests tend to scale well to many cores or even multiple sockets because he often makes those annotations in his reviews. He also knows which tests are more bandwidth dependent etc. He literally benchmarks hardware for a living. He’s good at it, too.

bit_user, replying to Pierce2623:

Pierce2623 said: He definitely knows which tests tend to scale well to many cores or even multiple sockets because he often makes those annotations in his reviews. He also knows which tests are more bandwidth dependent etc.

I know he has a sense of these things, but that doesn't mean his knowledge is complete.

Pierce2623 said: He literally benchmarks hardware for a living. He’s good at it, too.

He lacks transparency in which benchmarks he uses for which hardware reviews - certainly a way the results could be biased. Having classifications and categories of benchmarks would be a way to provide greater transparency, more alignment between different benchmark runs (as well as with & among user submissions), and reduce suspicions of influencing the results.

K.A.R, replying to bit_user:

Most software is optimized and designed for x86, so the benchmark is realistic.

bit_user, replying to K.A.R:

This claim is starting to get pretty tired.

Ever since the smartphone revolution got going, lots of common software libraries & tools have been getting optimized for ARM. Then, we started to see companies like Ampere, Applied Micro, Cavium, and even Qualcomm (i.e. their aborted Centriq product) start developing ARM-based server CPUs, with optimizations of server software for ARM beginning to trickle in. That trickle turned into a stream, when ARM launched its Neoverse initiative, back in late 2018.

The next big milestone is probably back in March 2020, when Amazon rolled out Graviton 2 - and with very competitive pricing. This fed in even more ARM optimizations.

In the embedded & self-driving space, Nvidia (along with most others, I think) has used ARM in its self-driving SoCs.

Even HPC felt ARM's presence, when Fugaku topped the charts, using Fujitsu's custom A64FX ARM core and no GPUs!

Finally, Windows/ARM has been brewing for probably the better part of the past decade, which should be focusing more attention on ARM optimizations of client-specific packages.

By now, we're many years into the ARM revolution. There are still some packages yet to receive ARM optimizations, but they're probably in the minority of the ones commonly in use.

I think your claim is past its sell-by date. x86 stalwarts really need to come up with a new rallying cry, or risk getting laughed off the field.

TerryLaze, replying to bit_user:

bit_user said: This claim is starting to get pretty tired.

bit_user said: It would be nice if Phoronix would be able to exclude benchmarks with x86-specific optimizations that don't also have ARM-optimized paths. However, given some comments in that article, it's clear this was not done.

Good thing you don't bring it up then...

You can't have it both ways. Sure, everything that can be optimized for ARM has been, but there are still plenty of things that can't be optimized, which is why you wanted Phoronix to exclude them.