Intel Xeon Platinum 8176 Scalable Processor Review

The Mesh Topology & UPI

The Scalable Processor family features fundamental changes to the web of interconnects that facilitate intra-processor and inter-socket communication. We covered Skylake-X's topology in Intel Introduces New Mesh Architecture For Xeon And Skylake-X Processors. We also followed up in our Core i9-7900X review with more details, adding fabric latency and bandwidth tests to our analysis. More recently, though, Intel gave us extra info to flavor our take on the mesh.

The Old Way

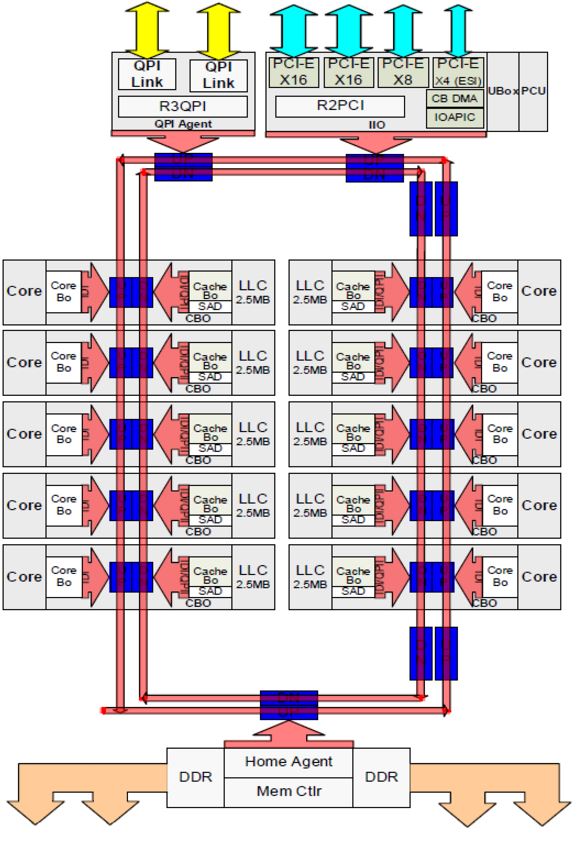

Processor interconnects move data between key elements, such as cores, memory, and I/O controllers. Several techniques have been used over the years for intra-processor communication. Intel's ring bus served as a cornerstone of the company's designs since Nehalem in 2008. Unfortunately, the ring bus cannot scale indefinitely; it becomes less viable as core counts, memory channels, and I/O increase.

With the ring bus, data travels a circuitous route to reach its destination. As you might imagine, adding stops on the bus amplifies latency. At a certain point, Intel had to split its larger die into two ring buses to combat those penalties. That created its own scheduling complexities, though, as the buffered switches facilitating communication between the rings added a five-cycle penalty.

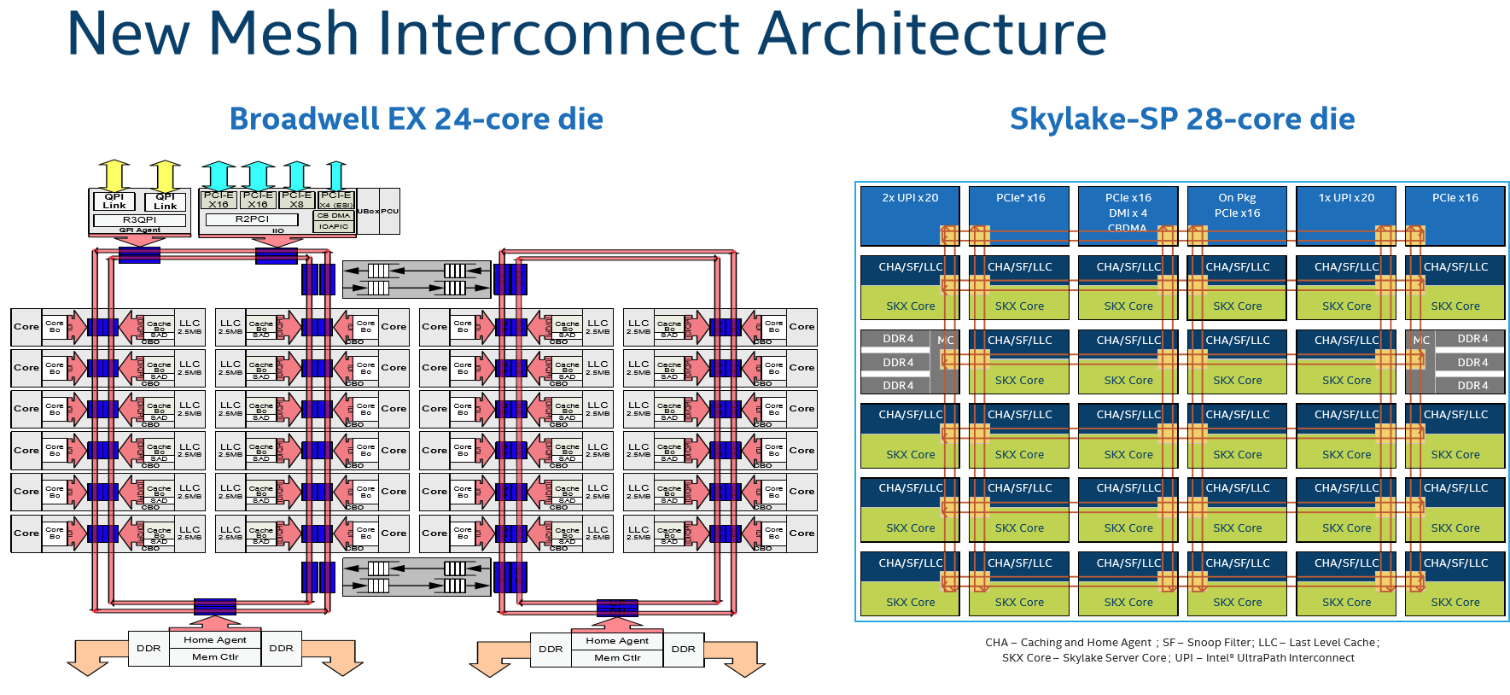

In the second slide, you can see Intel's mesh topology compared side-by-side to Broadwell-EX's dual ring bus design.

Enter The Mesh

Intel's 2D mesh architecture made its debut on the company's Knights Landing products. Those processors feature up to 72 cores, so we know from practice that the mesh is scalable.

For Skylake-SP, a six-column arrangement of cores would have necessitated three separate ring buses, introducing more buffered switches into the design and making it untenable. Instead, the mesh consists of rows and columns of bi-directional interconnects between cores, caches, and I/O controllers.

As you can see, the latency-killing buffered switches are absent. The ability to stair-step data through the cores allows for much more complex, and purportedly efficient, routing.
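To put rough numbers on the scaling argument, here is a minimal sketch that compares the average hop count on a single bidirectional ring against a 2D mesh with the same number of stops. It is a toy model under our own assumptions: every stop is treated identically, every hop costs the same, and traffic takes the shortest path. It does not reproduce Intel's actual floorplan or the dual-ring arrangement with buffered switches, and the function names are ours.

```python
from itertools import product


def ring_avg_hops(stops: int) -> float:
    """Average shortest-path hops between distinct stops on a bidirectional ring."""
    total = 0
    pairs = 0
    for a in range(stops):
        for b in range(stops):
            if a != b:
                d = abs(a - b)
                # Data can travel either way around the ring.
                total += min(d, stops - d)
                pairs += 1
    return total / pairs


def mesh_avg_hops(rows: int, cols: int) -> float:
    """Average Manhattan-distance hops between distinct nodes of a rows x cols mesh."""
    nodes = list(product(range(rows), range(cols)))
    total = 0
    pairs = 0
    for (r1, c1), (r2, c2) in product(nodes, nodes):
        if (r1, c1) != (r2, c2):
            total += abs(r1 - r2) + abs(c1 - c2)
            pairs += 1
    return total / pairs


if __name__ == "__main__":
    # Hypothetical example: 36 stops arranged as one ring or as a 6x6 grid.
    print(f"ring, 36 stops: {ring_avg_hops(36):.2f} hops on average")
    print(f"mesh, 6x6:      {mesh_avg_hops(6, 6):.2f} hops on average")
```

In this simplified model, the 36-stop ring averages a little over nine hops while the 6x6 mesh averages four, and the gap widens as stops are added. Real latency also depends on clock rates, contention, and routing policy, none of which are modeled here.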

Intel claims its 2D mesh runs at a lower voltage and frequency than the ring bus, yet still provides higher bandwidth and lower latency. The mesh operates at roughly 1.8 to 2.4 GHz to save power, though the exact figure varies by model. This allows the company to dedicate more of its power budget to completing useful work without sacrificing intra-die throughput. After all, mesh bandwidth also affects memory and cache performance.

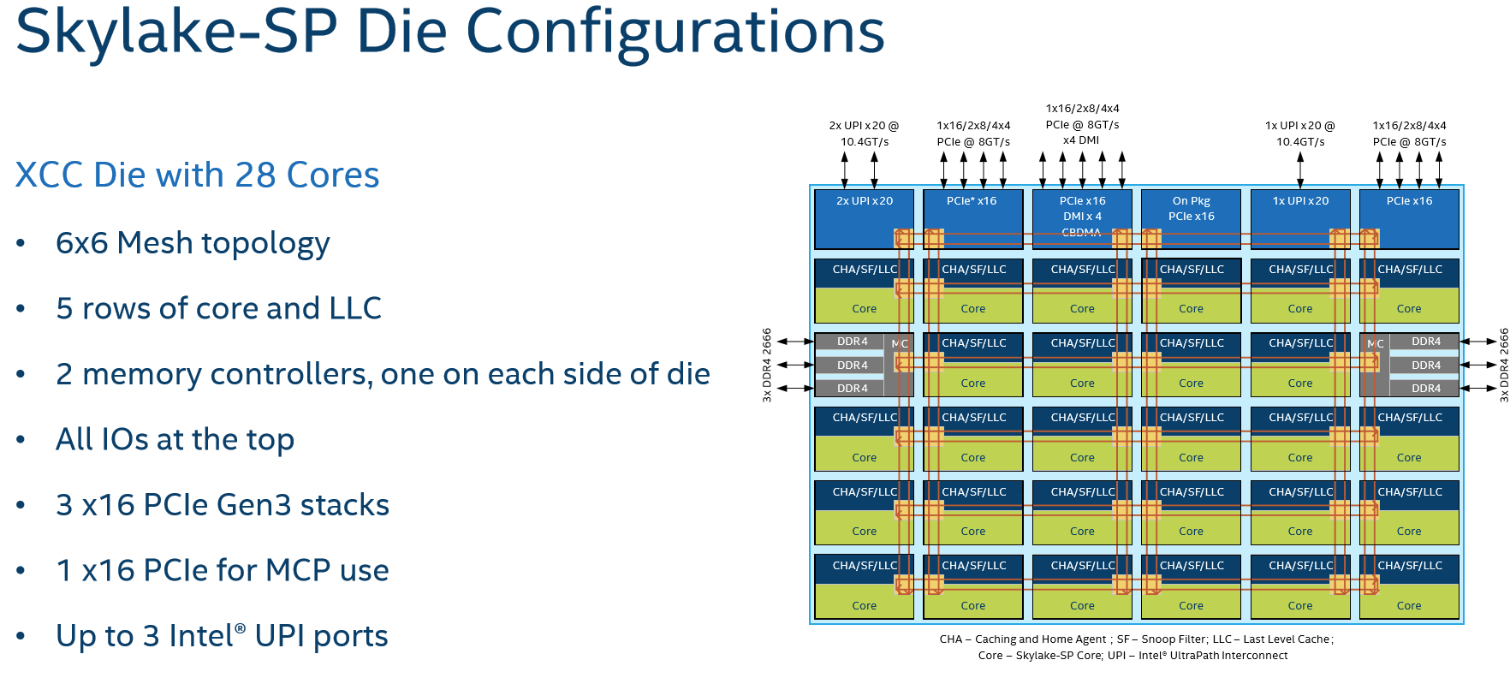

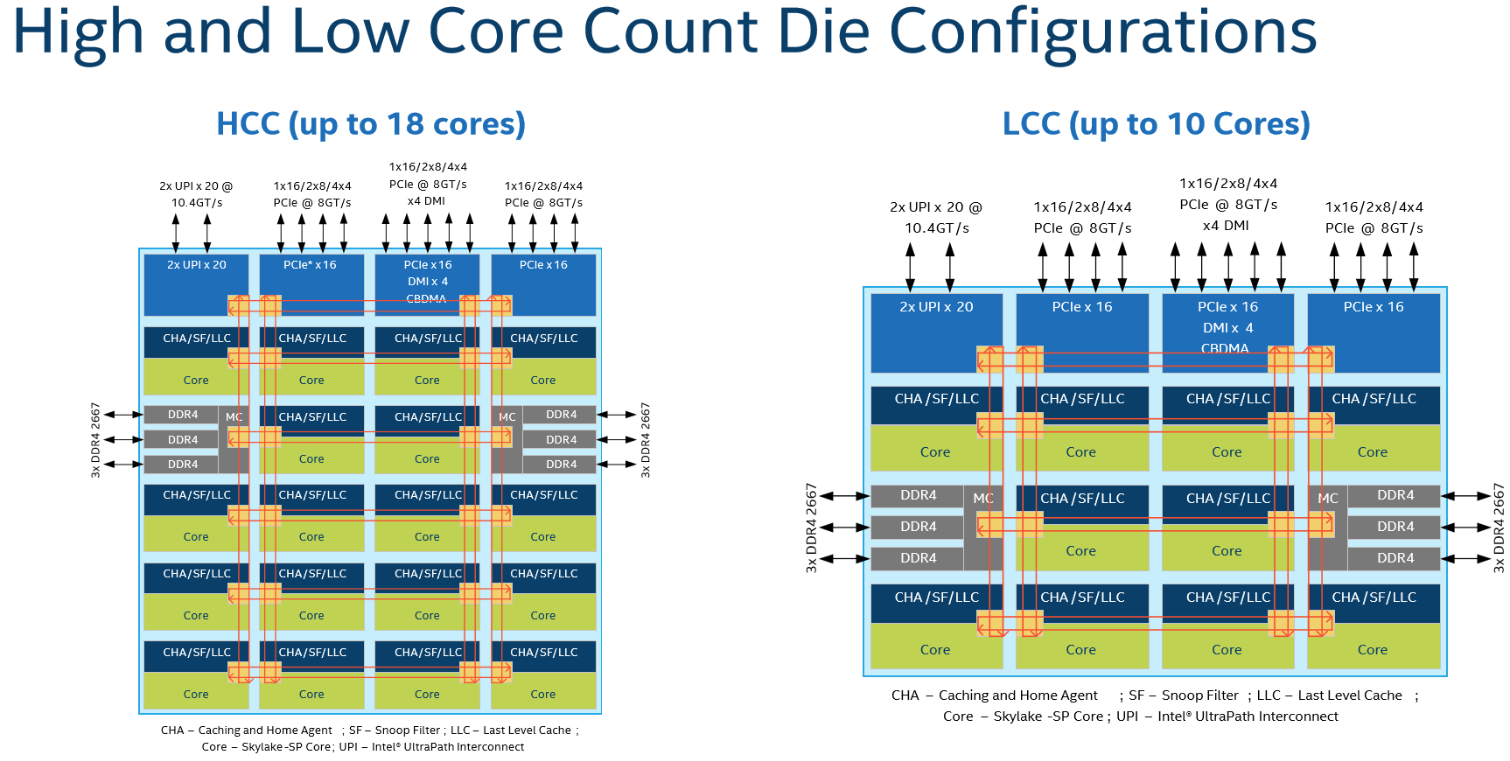

Intel constructs its Xeon Scalable Processors from different dies made up of varying core configurations. The XCC (extreme core count) die features 28 cores, the HCC (high core count) arrangement has 18 cores, and the LCC (low core count) implementation has ten. Intel isn't sharing transistor counts or die sizes at this time.

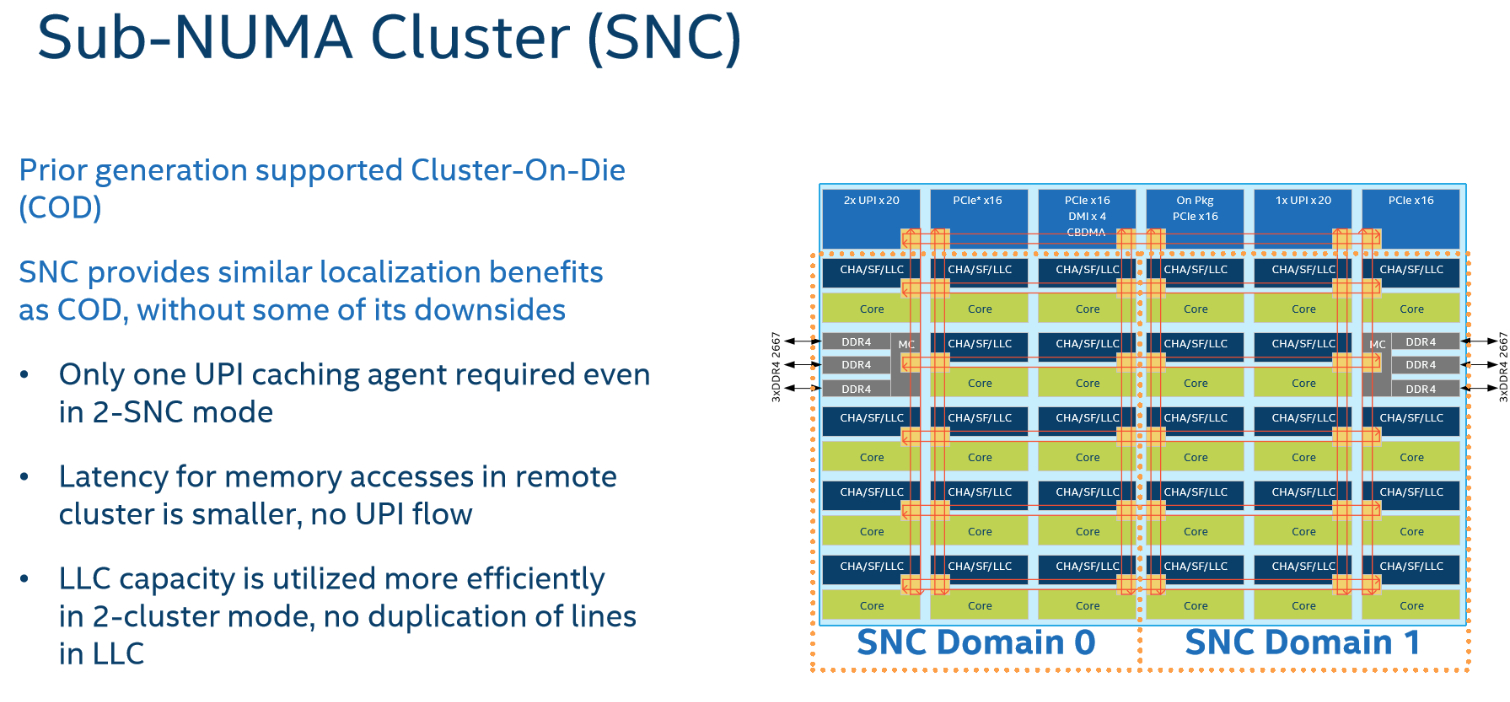

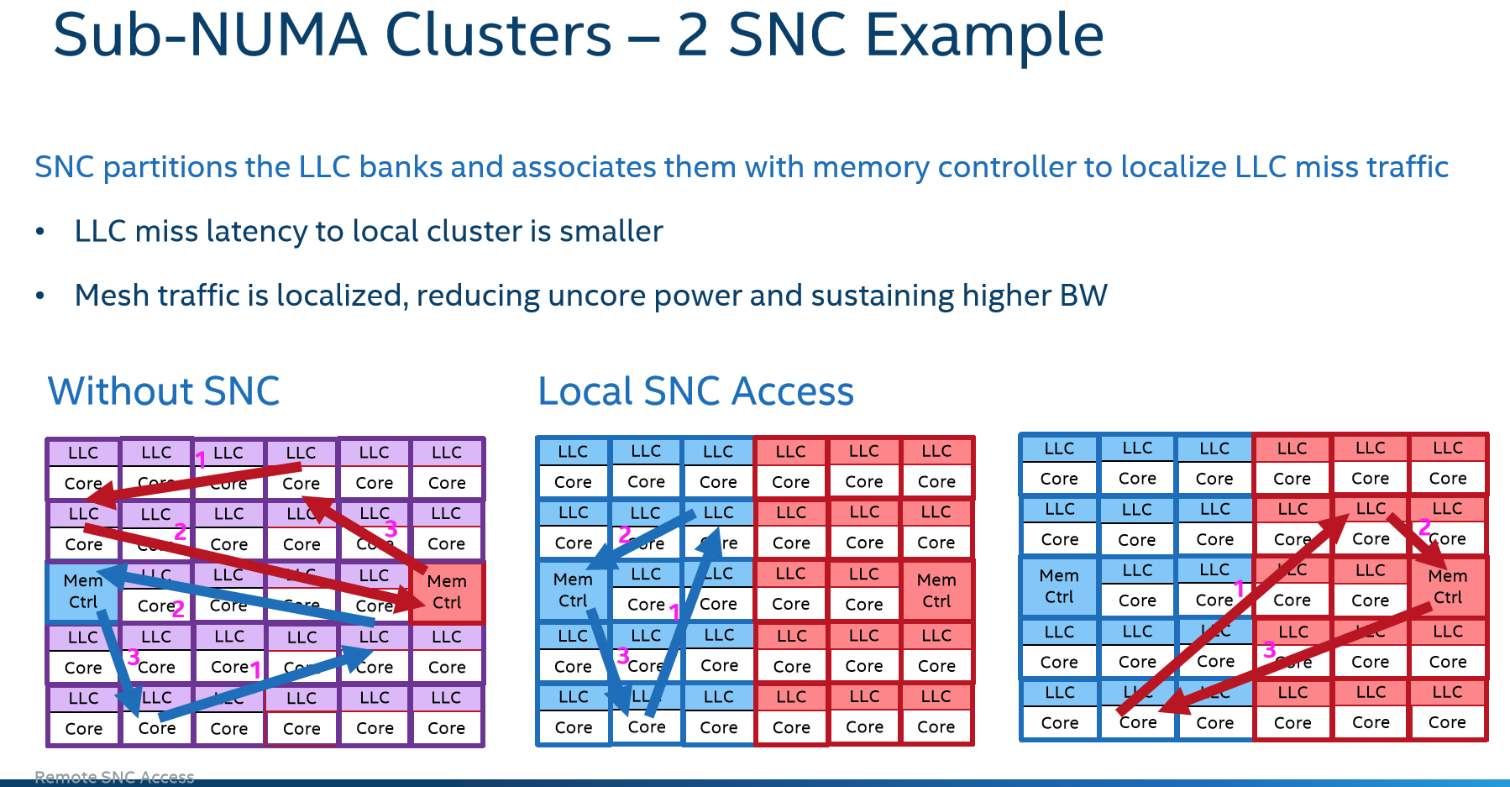

The columns of cores are mirror images of each other, with mesh pathways running along the right and left sides of the mirrored cores. This increases the distance between every other column of cores, which translates to more cycles required for horizontal data traversal. For instance, it requires one hop/cycle to move data vertically to the next core's cache, but moving horizontally from the second column to the third column requires three cycles. Intel's Sub-NUMA Clustering (SNC) feature can split the processor into two separate NUMA domains, reducing the latency impact of traversing the mesh to cores/cache farther away. This is similar to the Cluster On Die (COD) tactic Intel used to bifurcate two ring buses on Broadwell-EP processors. SNC provides increased performance in some scenarios, but Intel says the gain isn't as pronounced with the mesh architecture.
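The hop costs described above can be sketched as a small cycle-count model. Only two figures come from Intel's description: one cycle per vertical hop, and three cycles to cross from the second column to the third. Everything else is our assumption, including the alternating 1/3/1/3/1 pattern for the remaining column crossings, the five-row grid, and the idea of approximating an SNC domain as one half of the columns. Treat it as an illustration of why a smaller domain shortens worst-case traversal, not as a description of how SNC actually partitions the die.

```python
from itertools import product

# Cycles to cross from column i to column i+1 (0-indexed) on a six-column die.
# Only the 3-cycle cost between the second and third columns (index 1) is from
# the source; the alternating pattern for the other crossings is an assumption.
HORIZONTAL_COST = [1, 3, 1, 3, 1]
VERTICAL_COST = 1  # one cycle per vertical hop, per the source


def traversal_cycles(src, dst):
    """Cycles to move data from tile src to tile dst; tiles are (row, column)."""
    (r1, c1), (r2, c2) = src, dst
    lo, hi = sorted((c1, c2))
    horizontal = sum(HORIZONTAL_COST[lo:hi])
    vertical = abs(r1 - r2) * VERTICAL_COST
    return horizontal + vertical


def worst_case_cycles(rows, columns):
    """Largest traversal cost between any two tiles in the given columns."""
    tiles = list(product(range(rows), columns))
    return max(traversal_cycles(a, b) for a, b in product(tiles, tiles))


if __name__ == "__main__":
    rows = 5  # hypothetical row count, chosen only for illustration
    print("second -> third column:", traversal_cycles((0, 1), (0, 2)))
    print("whole die worst case:  ", worst_case_cycles(rows, range(6)))
    print("half die worst case:   ", worst_case_cycles(rows, range(3)))
```

Under these assumptions, the worst-case trip across the full grid costs 13 cycles, while the worst case within a three-column half costs 8.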

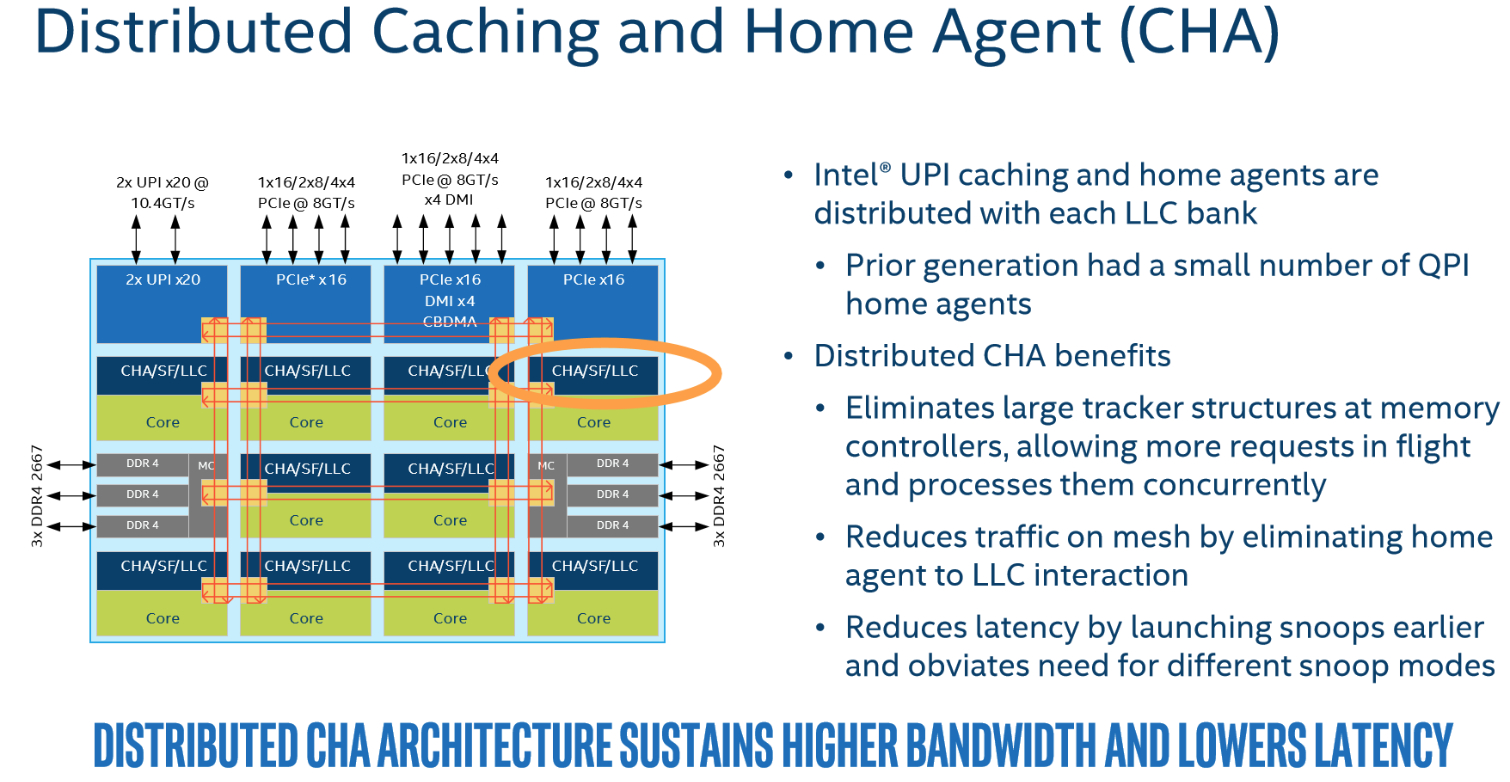

In the past, the caching and home agent (CHA) resided at the bottom of each ring. Intel changed the CHA to a distributed design to mirror the distributed L3 cache. This helps minimize communication traffic between the LLC and home agent, reducing latency.

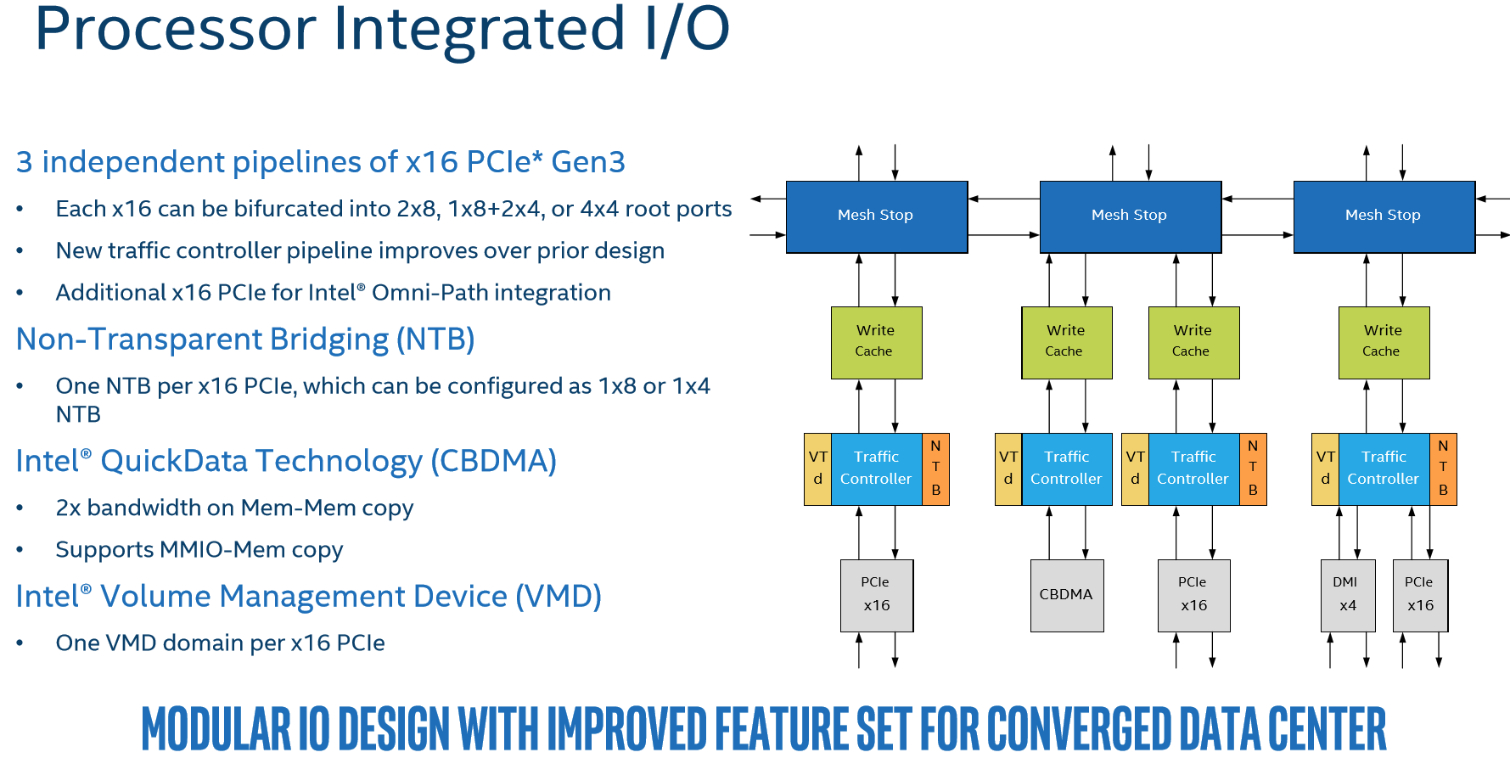

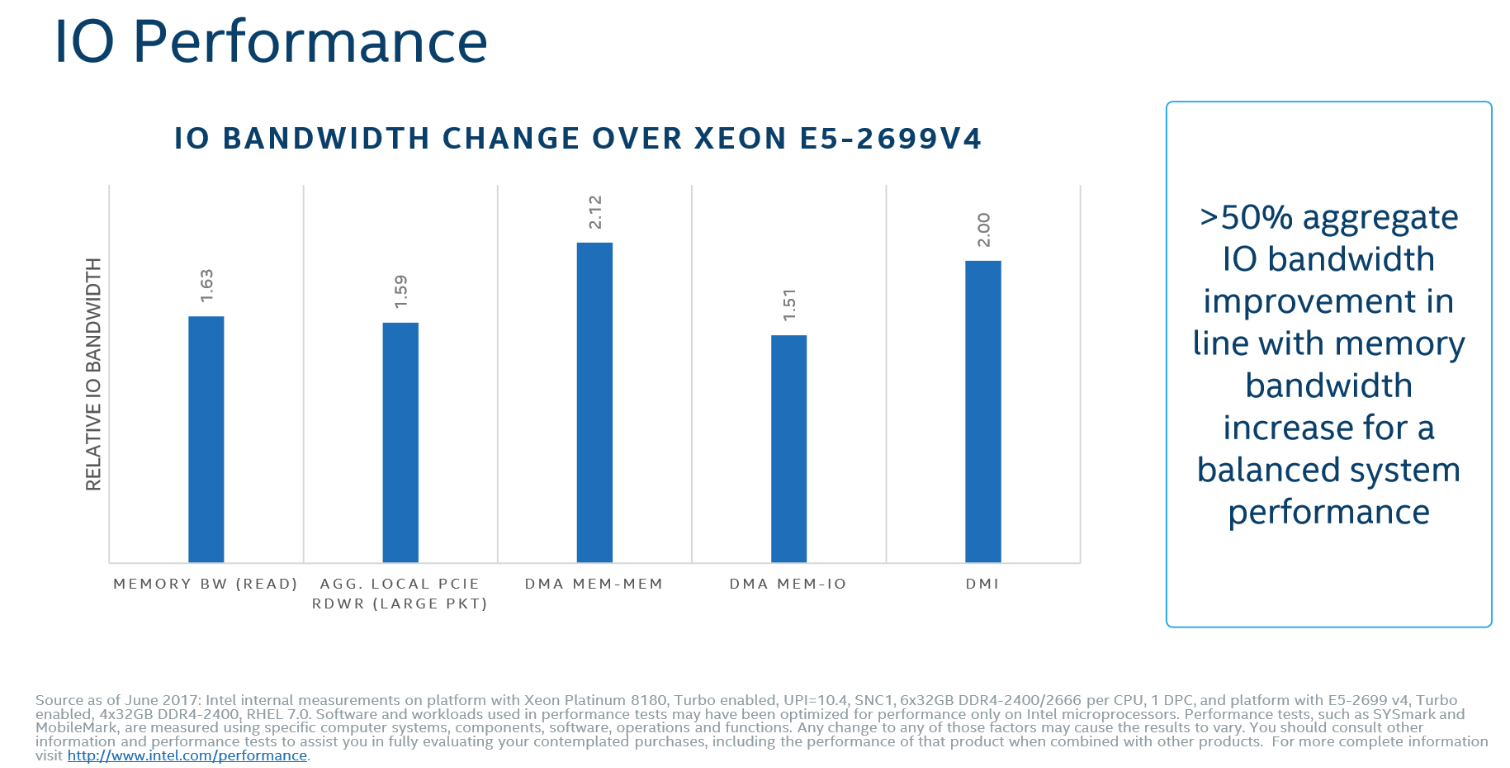

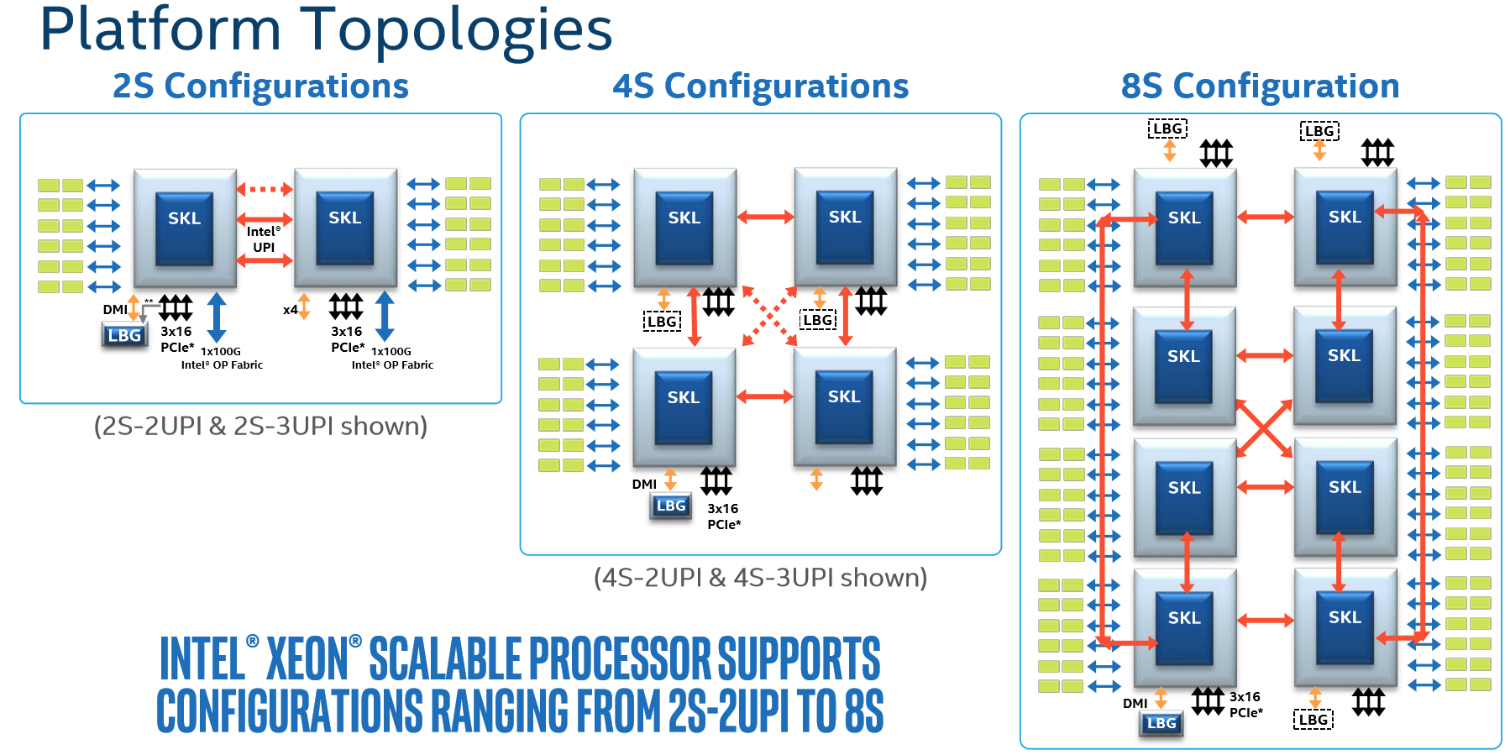

With the Broadwell design, all PCIe traffic flowed into the ring bus at a single point, creating a bottleneck. In Skylake, Intel carves the integrated I/O up into three separate 16-lane PCIe pipelines that reside at the top of different columns (totaling 12 root ports). The modular design provides multiple points of entry, alleviating the prior choke point. Along with other improvements, that provides up to 50% more aggregate I/O bandwidth. Two UPI links also share a single entry point at the top of a column. Models with a third UPI link have an additional entry point. Integrated Omni-Path models also feature an additional dedicated x16 PCIe link, so the networking addition doesn't consume any of the normal lanes exposed to the user.

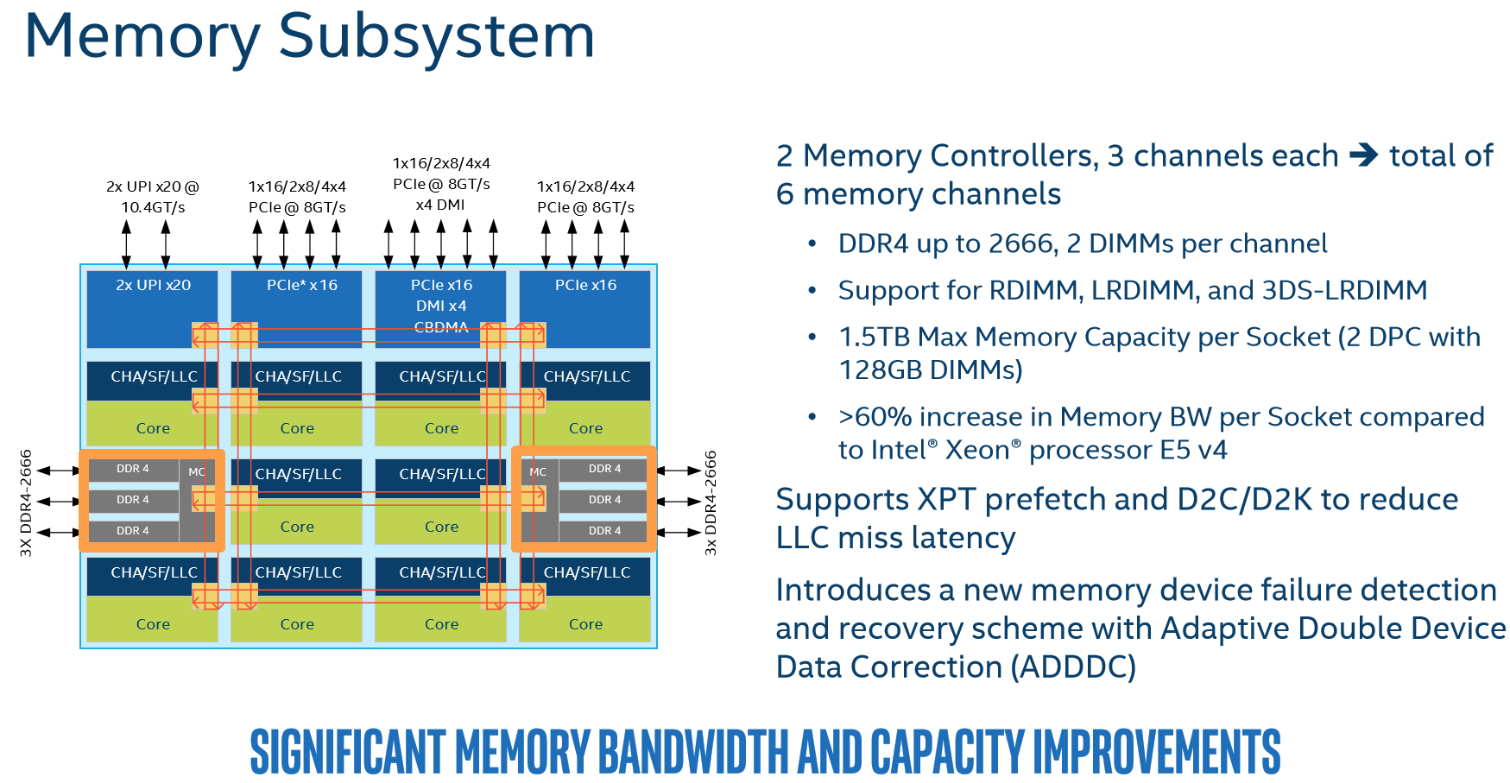

Intel moved its two memory controllers, armed with three channels each, to opposing sides of the die. You can populate up to two DIMMs per channel (DPC). The 2DPC limitation is a step back from Broadwell's 3DPC support, but of course Intel offsets that with 50% more total channels. You can slot in up to 768GB of memory with regular models or 1.5TB with "M" models. Notably, Intel doesn't impose a lower data rate if you install more DIMMs per channel (1DPC, 2DPC). In contrast, Broadwell processors slowed memory transfers as you added modules to a given channel. That created performance/capacity trade-offs.
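The channel and capacity figures above reduce to simple arithmetic, sketched below. The Broadwell channel count of four is inferred from the "50% more total channels" statement, and the per-DIMM sizes are derived by dividing the quoted maximum capacities across all slots; neither number is stated directly in Intel's materials as quoted here. The function name is ours.

```python
def dimm_slots(controllers: int, channels_per_controller: int, dimms_per_channel: int) -> int:
    """Total DIMM slots one socket can address."""
    return controllers * channels_per_controller * dimms_per_channel


# Skylake-SP: two controllers, three channels each, two DIMMs per channel.
skylake_channels = 2 * 3
skylake_slots = dimm_slots(2, 3, 2)

# Broadwell: four channels (inferred from "50% more total channels"), 3DPC.
broadwell_channels = 4
broadwell_slots = broadwell_channels * 3

channel_gain = skylake_channels / broadwell_channels - 1

# Per-DIMM size needed to reach the quoted per-socket maximums.
regular_dimm_gb = 768 / skylake_slots       # regular models, 768GB
m_model_dimm_gb = 1536 / skylake_slots      # "M" models, 1.5TB

if __name__ == "__main__":
    print(f"Skylake-SP: {skylake_channels} channels, {skylake_slots} slots")
    print(f"Broadwell:  {broadwell_channels} channels, {broadwell_slots} slots")
    print(f"Channel gain: {channel_gain:.0%}")
    print(f"DIMM size for 768GB: {regular_dimm_gb:.0f}GB")
    print(f"DIMM size for 1.5TB: {m_model_dimm_gb:.0f}GB")
```

The slot count per socket works out the same for both generations (12), so the move from 3DPC to 2DPC trades DIMMs per channel for more channels rather than reducing the number of modules you can install.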

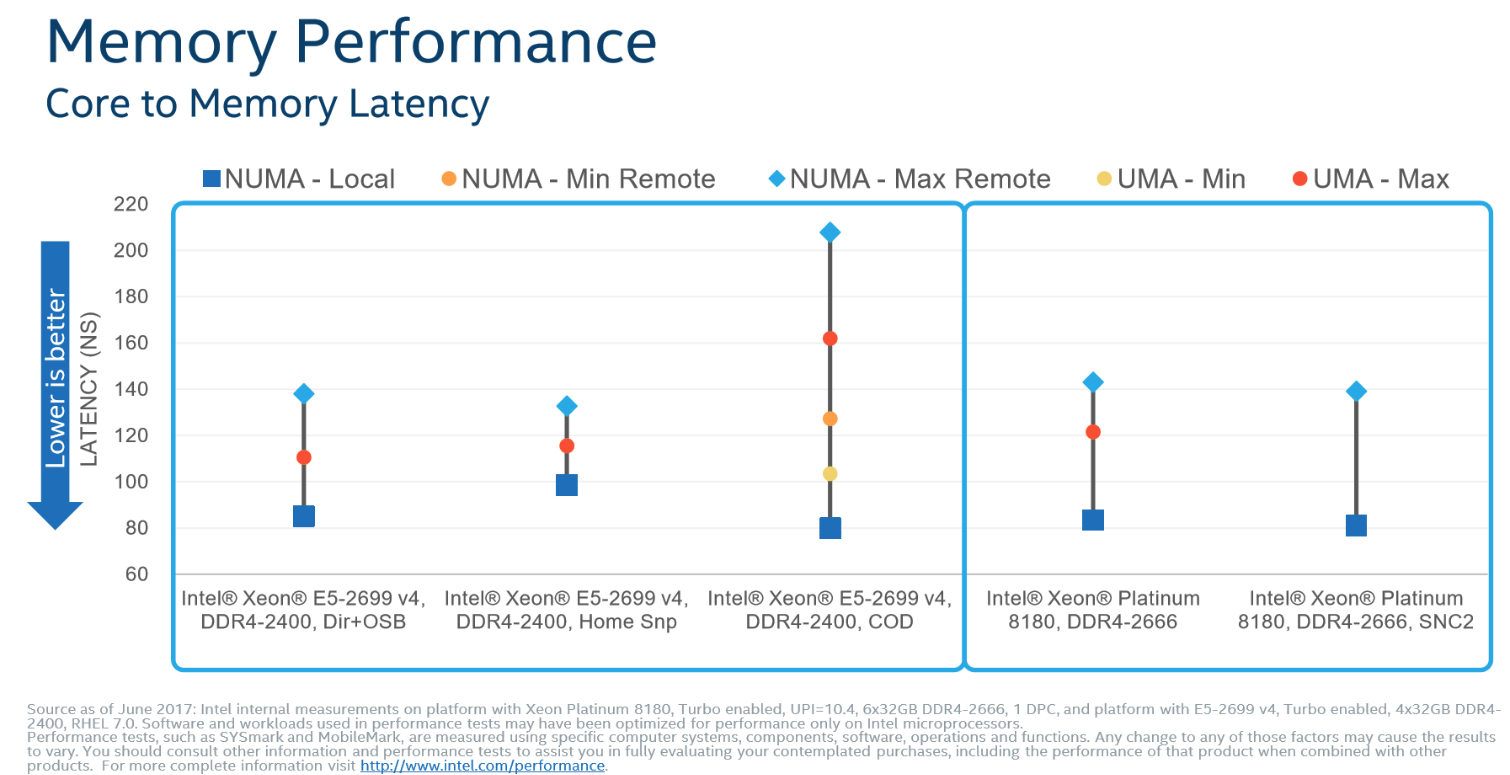

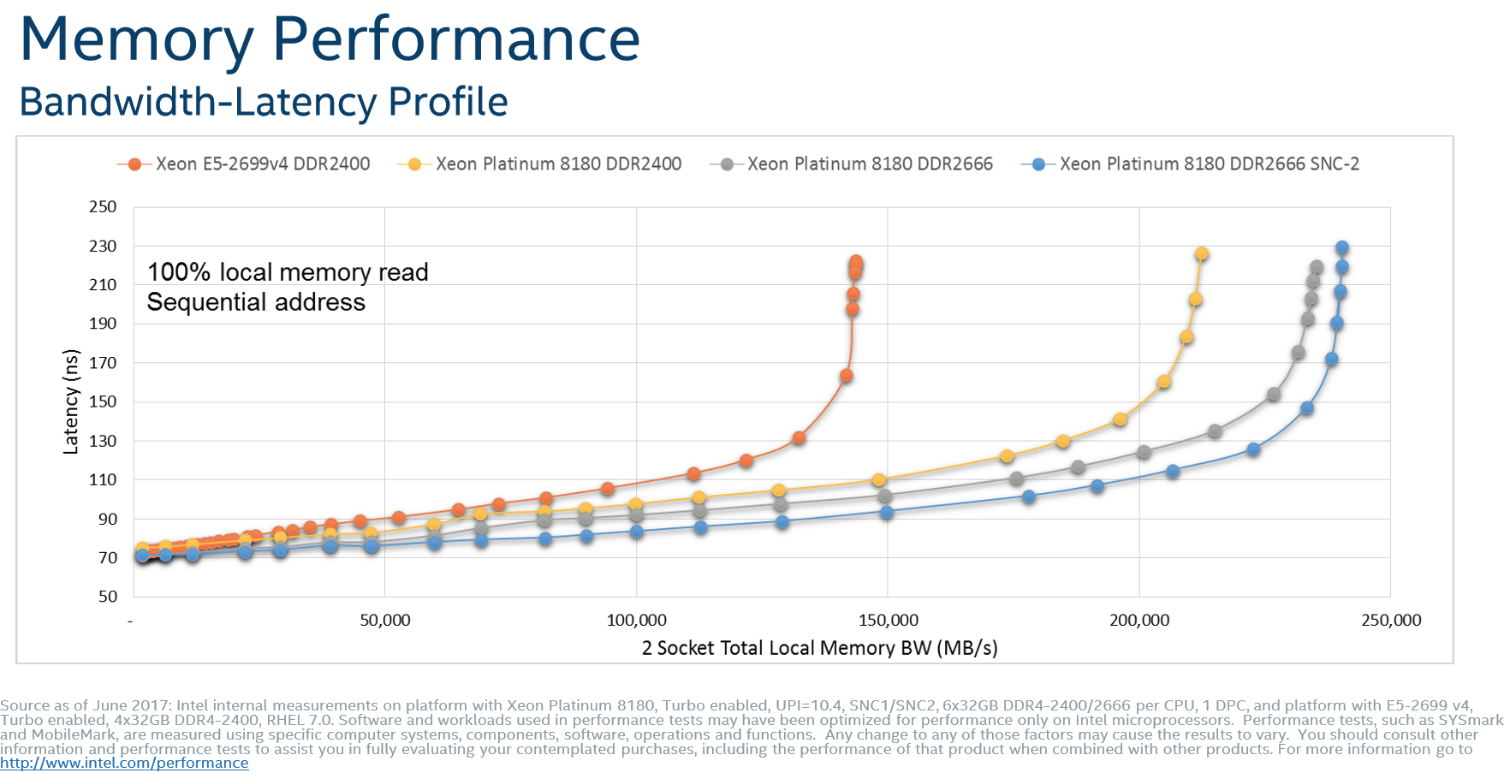

Overall, Intel's memory subsystem enhancements provide a massive gain to potential capacity and practical latency/throughput, as we'll see in our tests.

Ultra Path Interconnect (UPI)

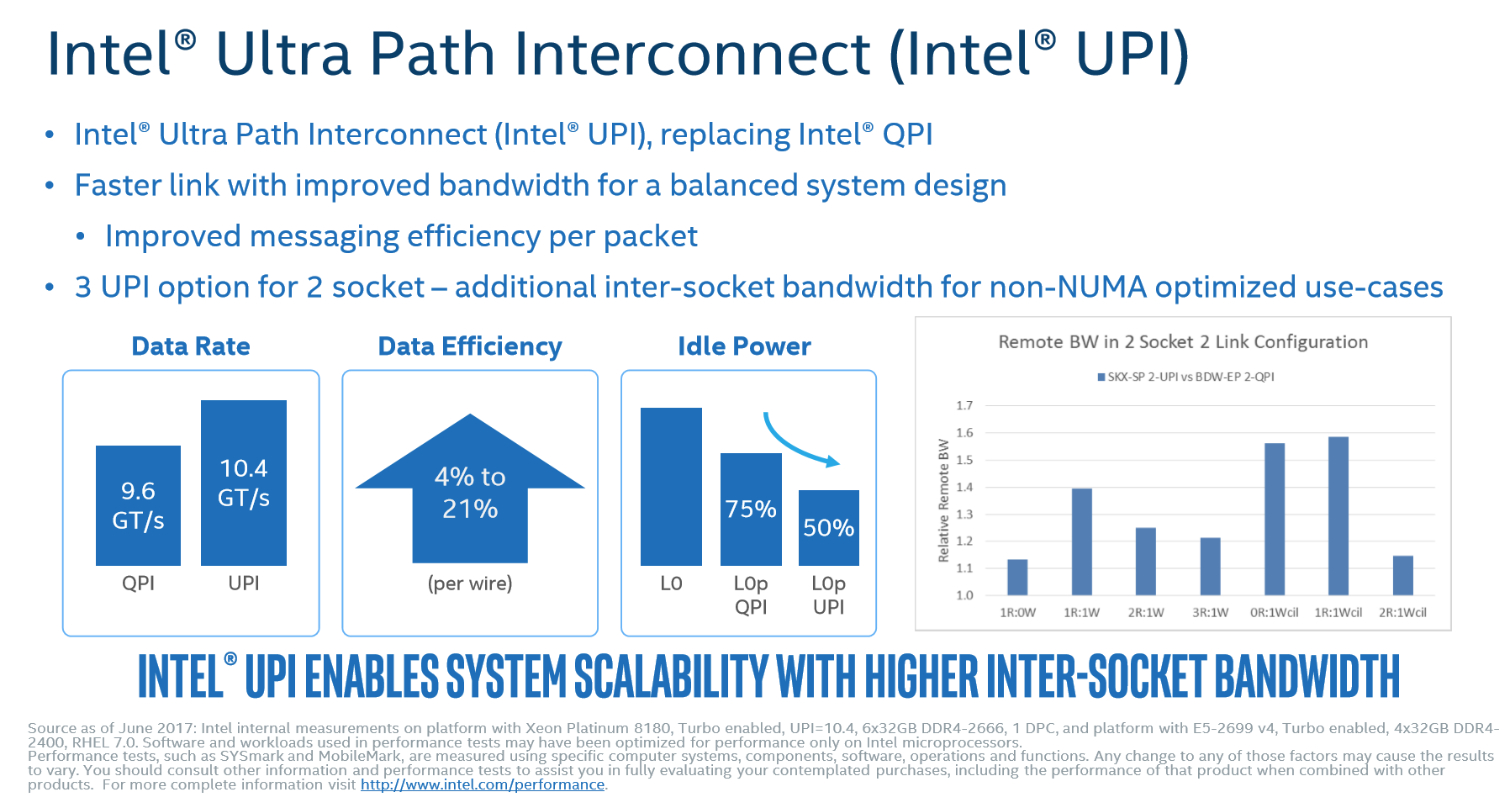

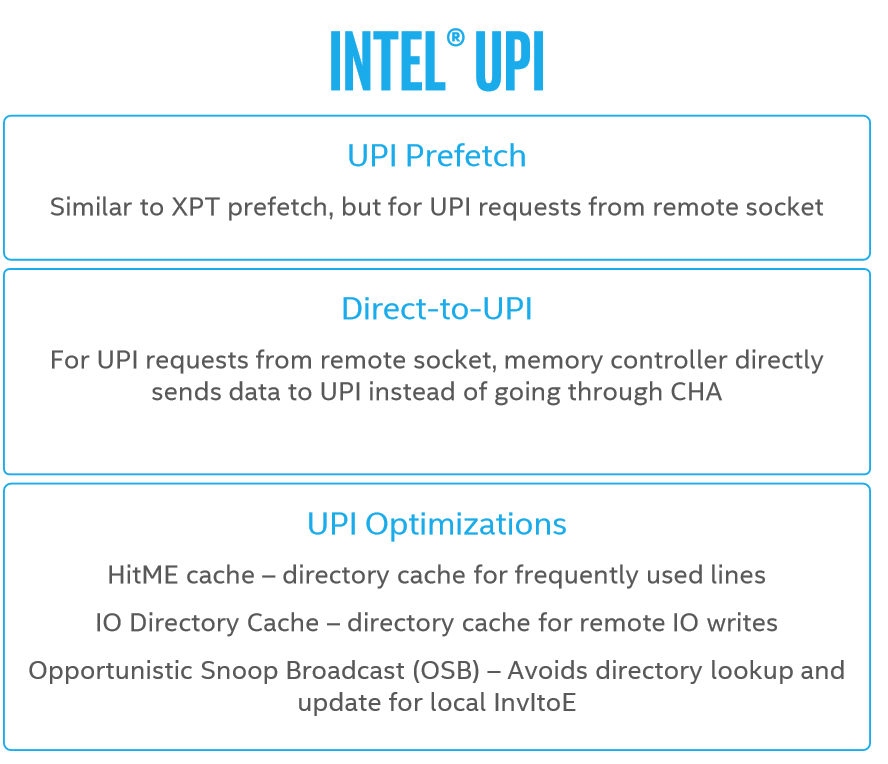

The new Ultra Path Interconnect (UPI) replaces the QuickPath Interconnect (QPI). Intel optimized its pathway between nodes because per-socket memory and I/O throughput were increasing so drastically, reaching the limits of QPI's scalability. As with most interconnects, the company could have simply increased the QPI's voltage and frequency to enable faster communication, but still would have faced a point of diminishing returns.

As such, Intel developed UPI to improve the data rate and power efficiency. QPI required static provisioning of resources at the target before it serviced message requests, whereas UPI takes a more adaptive approach: it launches requests that wait for resources to become available at the target. The distributed CHA allows for more in-flight messages to the target, which improves efficiency. A host of other improvements help enable a 4% to 21% increase in transfer efficiency per wire (at the same speed). The end result is an increase from 9.6 GT/s to 10.4 GT/s without excessive power consumption.

Increased bandwidth speeds communication, but it also has other beneficial side effects. Much like QPI, UPI also features an L0p (low power) state that reduces throughput during periods of low activity to save power. The increased efficiency allows for even lower L0p power consumption.

Paul Alcorn is the Editor-in-Chief for Tom's Hardware US. He also writes news and reviews on CPUs, storage, and enterprise hardware.