Intel Xeon Platinum 8176 Scalable Processor Review


Power Consumption

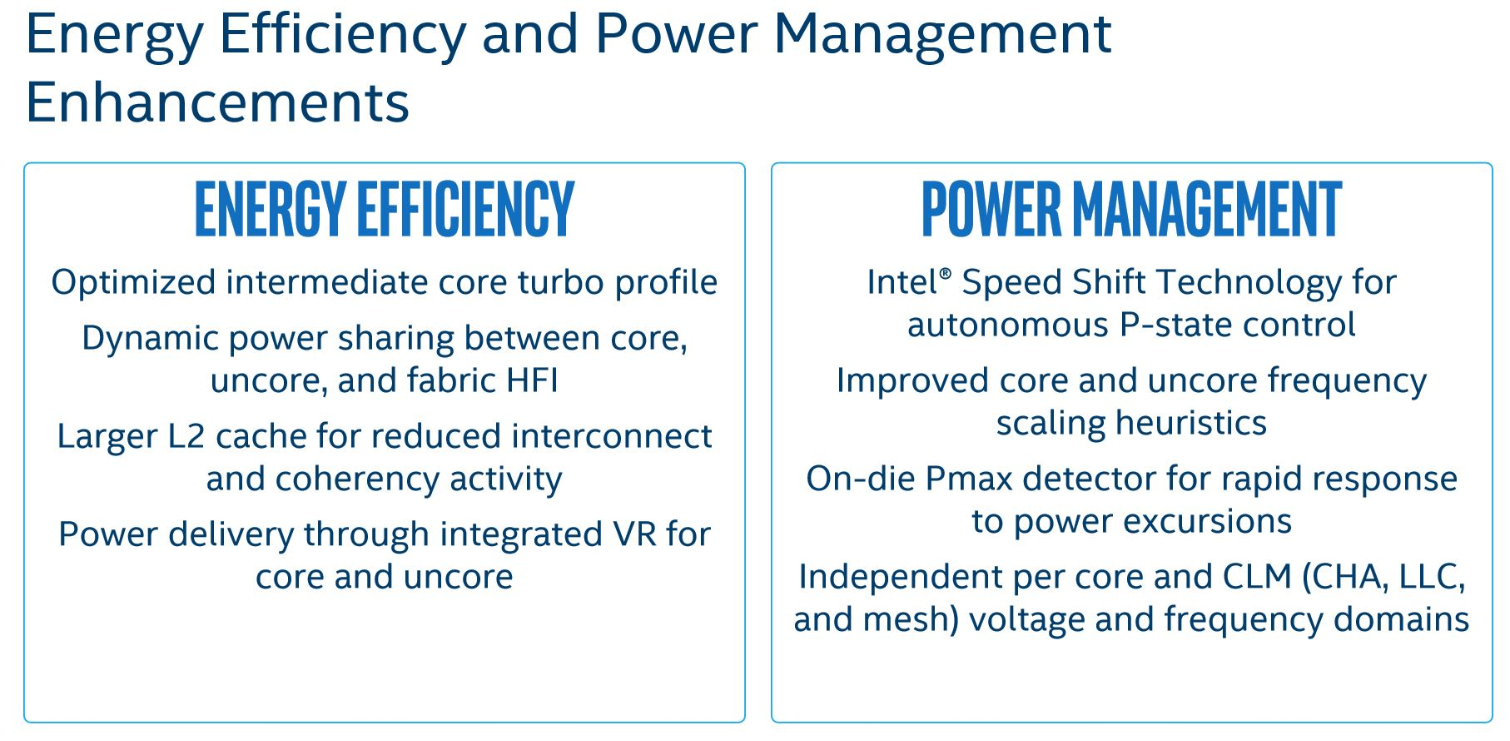

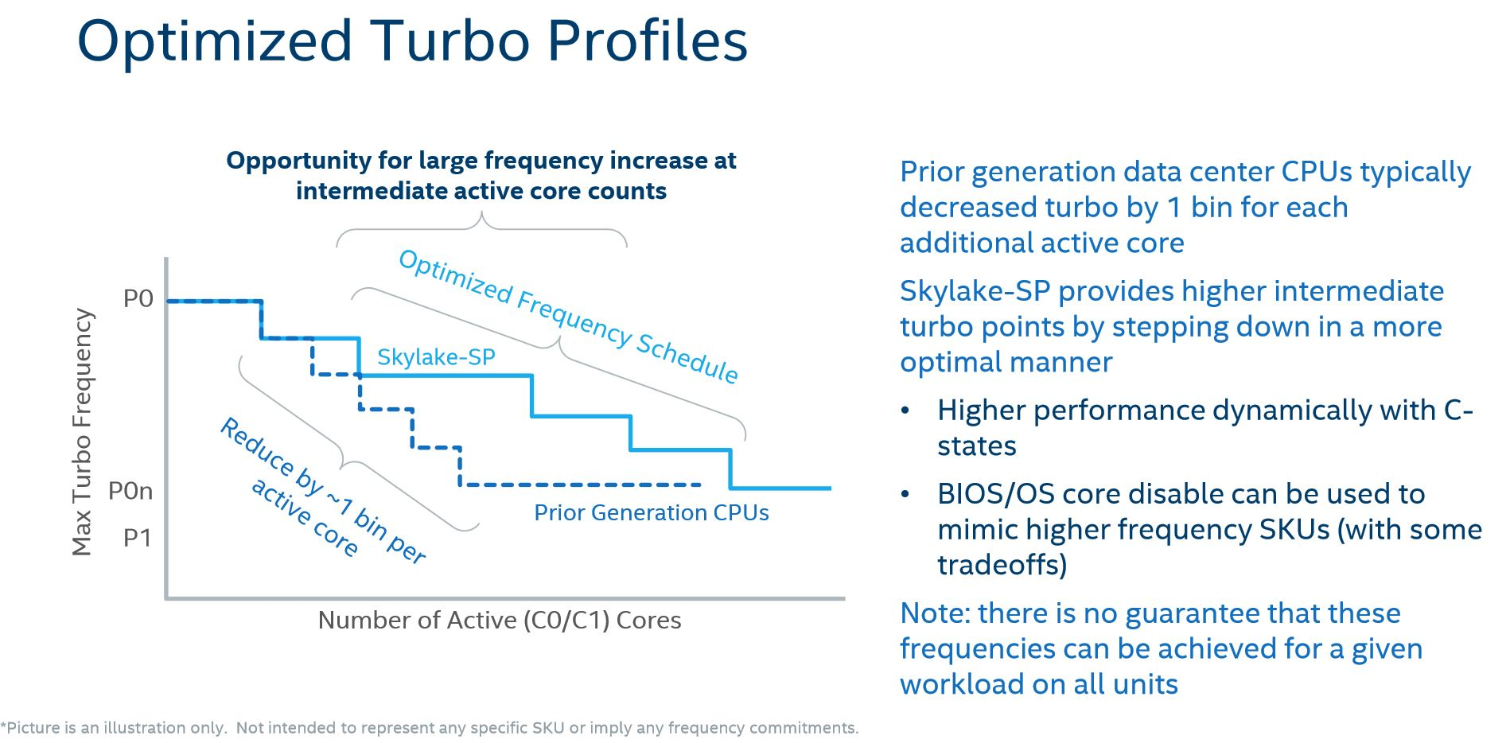

Skylake Power Optimizations

Power consumption is of critical importance in the data center. It's an ongoing expense that must be factored into total cost of ownership and managed accordingly. It also correlates directly with waste heat, which has to be removed by cooling systems that consume still more power.

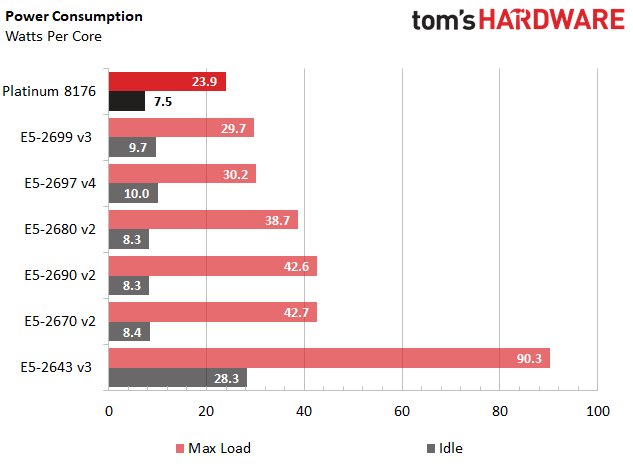

One of the best ways to reduce costs is to get more useful work done per watt. Intel's Scalable Processor family improves efficiency over prior generations by delivering higher performance while using less power per core.

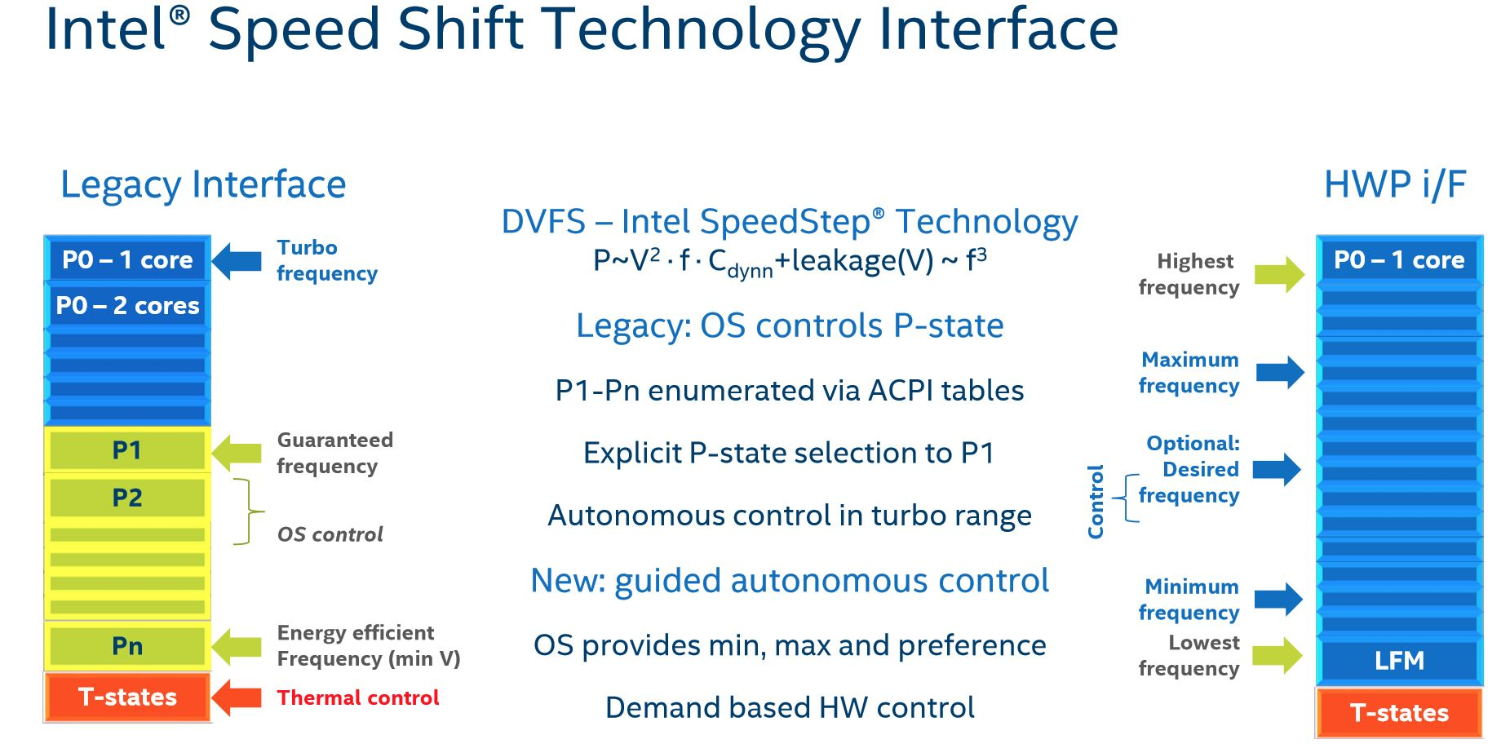

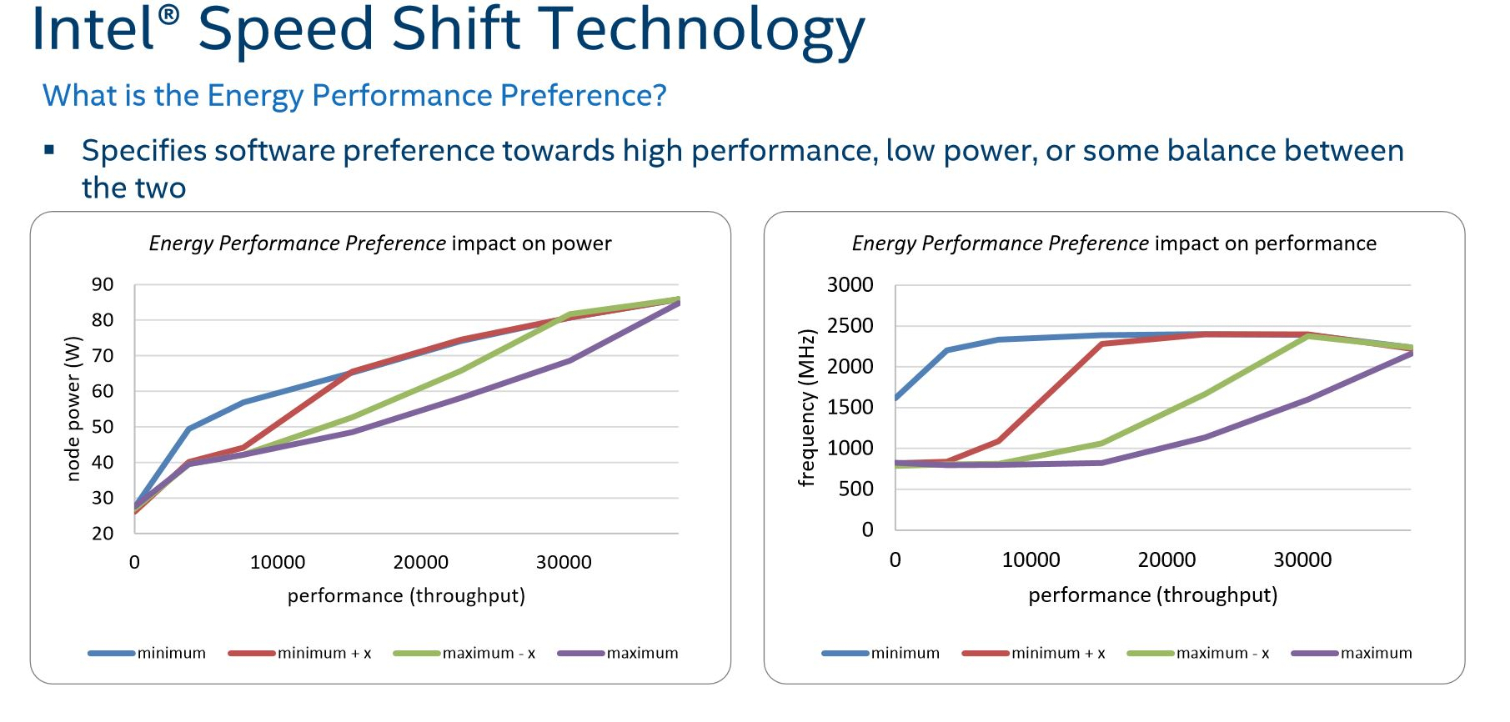

Much like mainstream Skylake-based chips, the new Xeons employ Speed Shift technology, which cedes control of power states to the processor rather than relying on constant, high-latency P-state commands from the operating system. The OS instead defines preferences, such as minimum and maximum performance levels, and the processor handles the fine-grained adjustments itself. An expanded set of P-states lets the processor control frequency and voltage more granularly, which saves power and, because the processor no longer waits on the operating system, accelerates response time.
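To make the OS-versus-hardware split concrete, here is a minimal Python sketch of the request the OS hands to the processor under Speed Shift (Intel's documentation calls the underlying mechanism Hardware P-states, or HWP). The register name IA32_HWP_REQUEST, its address 0x774, and the bit layout below come from our reading of Intel's Software Developer's Manual, not from this review, so treat them as assumptions and check the SDM before relying on them. In practice an OS driver (Linux's intel_pstate, for example) writes this register, so you'd rarely build it by hand.

```python
from dataclasses import dataclass

# Assumption: MSR address and field layout follow Intel's SDM description of
# IA32_HWP_REQUEST; verify against the SDM for your specific processor.
IA32_HWP_REQUEST = 0x774

# (field name, bit offset, bit width)
_FIELDS = (
    ("min_perf", 0, 8),           # lowest performance level the OS will accept
    ("max_perf", 8, 8),           # highest performance level the OS will accept
    ("desired_perf", 16, 8),      # 0 = let the hardware choose autonomously
    ("epp", 24, 8),               # energy/performance preference: 0 = performance, 255 = efficiency
    ("activity_window", 32, 10),  # window over which hardware observes activity (0 = hardware decides)
    ("package_control", 42, 1),   # 1 = defer to the package-level request register
)


@dataclass
class HwpRequest:
    min_perf: int
    max_perf: int
    desired_perf: int = 0
    epp: int = 128
    activity_window: int = 0
    package_control: int = 0

    def pack(self) -> int:
        """Combine the preference fields into one 64-bit register value."""
        raw = 0
        for name, offset, width in _FIELDS:
            value = getattr(self, name)
            if not 0 <= value < (1 << width):
                raise ValueError(f"{name}={value} does not fit in {width} bits")
            raw |= value << offset
        return raw

    @classmethod
    def unpack(cls, raw: int) -> "HwpRequest":
        """Split a raw register value back into its fields."""
        return cls(**{name: (raw >> offset) & ((1 << width) - 1)
                      for name, offset, width in _FIELDS})


# The OS sets bounds and a preference; the processor picks P-states within them.
request = HwpRequest(min_perf=8, max_perf=40, epp=128)
print(hex(request.pack()))  # 0x80002808
```

The performance values 8 and 40 are arbitrary illustrations; real limits come from the processor's reported HWP capabilities.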

This is a step forward from the Hardware Controlled Power Management (HWPM) feature in Broadwell-EP. Among other optimizations, Intel implemented independent per-core voltage and frequency domains, and the processor can also dynamically manage key uncore components like the mesh topology and shared L3 cache. The larger L2 cache also reduces the number of requests sent to the LLC. Each of those requests requires a trip across the mesh, and because all data movement consumes power, fewer requests mean lower power consumption.
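The L2 argument boils down to proportionality: every L2 miss becomes an LLC request that crosses the mesh, so the energy spent on that traffic scales with the miss count. The toy Python model below illustrates the relationship; the access count, miss rates, and per-request energy are hypothetical placeholders, not figures published by Intel or measured in this review.

```python
def mesh_traffic_energy_j(l2_accesses: int, l2_miss_rate: float,
                          joules_per_llc_request: float) -> float:
    """Toy model: energy spent sending L2 misses across the mesh to the LLC."""
    if not 0.0 <= l2_miss_rate <= 1.0:
        raise ValueError("miss rate must be between 0 and 1")
    llc_requests = l2_accesses * l2_miss_rate  # each L2 miss becomes one LLC request
    return llc_requests * joules_per_llc_request


# Hypothetical inputs: same workload, but the larger L2 halves the miss rate.
ACCESSES = 1_000_000_000
ENERGY_PER_REQUEST_J = 1e-9  # placeholder, not a measured value
small_l2 = mesh_traffic_energy_j(ACCESSES, 0.20, ENERGY_PER_REQUEST_J)
large_l2 = mesh_traffic_energy_j(ACCESSES, 0.10, ENERGY_PER_REQUEST_J)
print(f"mesh energy saved: {1 - large_l2 / small_l2:.0%}")
```

The model takes the miss rate as an input rather than predicting it; how much a larger L2 actually lowers that rate depends heavily on the workload.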

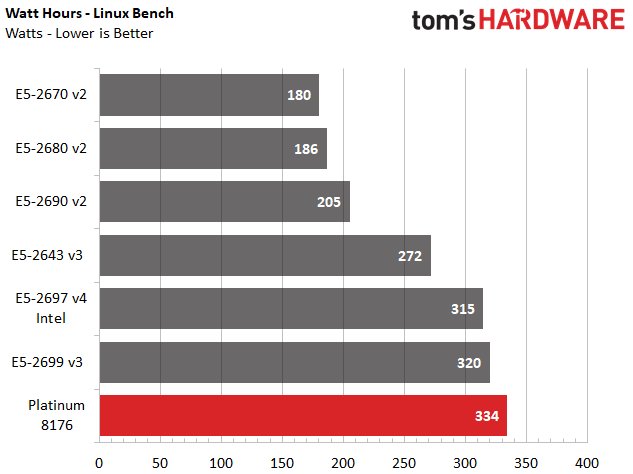

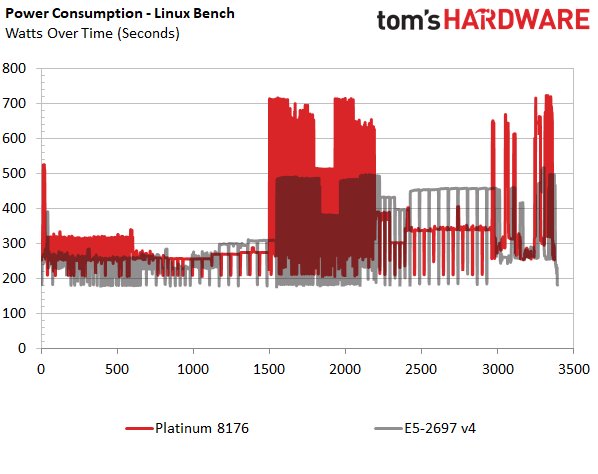

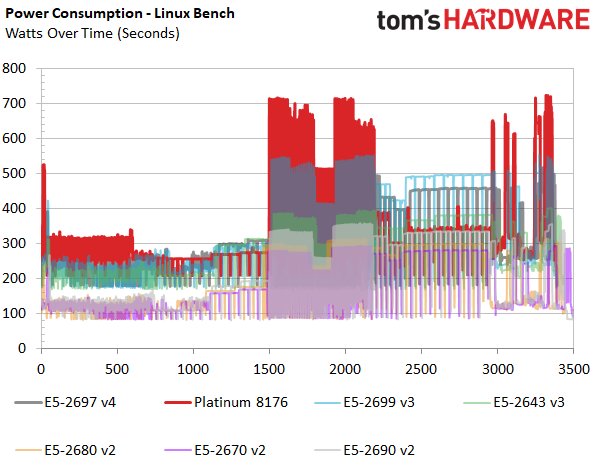

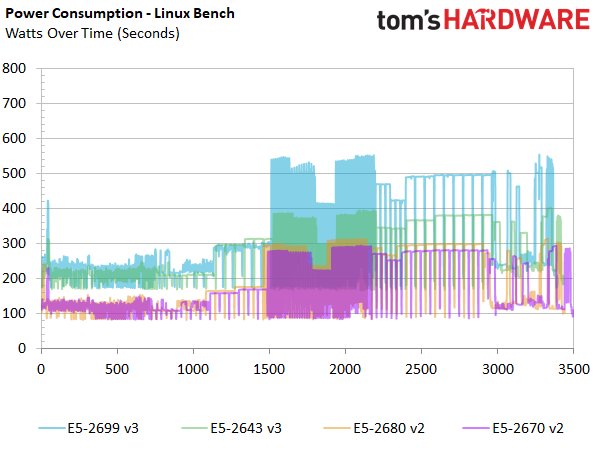

Linux-Bench Power Consumption

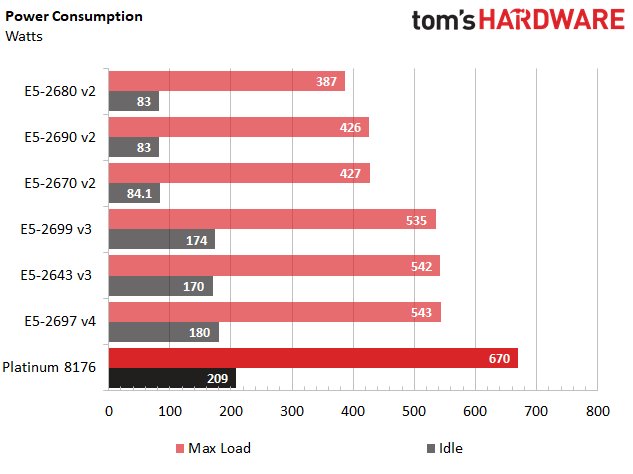

We logged platform power consumption during our Linux-Bench run, so these measurements also include the effects of DRAM and power supply efficiency.

We think factoring in the amount of work performed per watt provides the best view of overall power efficiency. Generalizing remains a challenge, though. Because the Scalable Processor family covers such a wide range of target markets and workloads, it's hard to identify a handful of tests relevant to everyone. As such, consider these results a basic indicator of overall power consumption.
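As a rough sketch of how a work-per-watt figure can be derived from logged platform power, the Python below divides a throughput-style benchmark score (higher is better) by the average power drawn during the run. The function name and inputs are our own illustration, not the review's exact methodology; for time-to-completion tests, you'd divide the work done by the energy consumed instead.

```python
from statistics import fmean
from typing import Sequence


def work_per_watt(score: float, power_samples_w: Sequence[float]) -> float:
    """Throughput score divided by the average platform power during the run."""
    if not power_samples_w:
        raise ValueError("need at least one power sample")
    return score / fmean(power_samples_w)


# Hypothetical example: a score of 1,000 at an average of 500 W.
print(work_per_watt(1000.0, [400.0, 600.0]))  # 2.0
```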

The 8176's lofty power consumption stems from its 10 additional cores compared to the most similar previous-gen CPU. Those cores do get more done per watt, though, particularly in threaded benchmarks. For applications that aren't as aggressively parallelized, it's best to buy a processor with fewer cores that can operate at higher clock rates. Intel's "M" processors offer the best mix of core counts and per-core performance, but they're premium products with matching price tags.


Watt Hours

The Platinum wields about 56% more cores than Intel's E5-2699 v3 (28 versus 18), yet it consumes only 14 more watt-hours during the Linux-Bench script. That's impressive, especially once you factor in its higher performance.
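The comparison above rests on two small calculations, sketched here in Python: the core-count increase (28 versus 18 cores), and how a watt-hour total falls out of power logging, assuming one reading per second as our lab records. The one-hour trace in the usage lines is hypothetical.

```python
from typing import Sequence


def percent_more(new: float, old: float) -> float:
    """Percentage increase of `new` over `old`."""
    return (new - old) / old * 100.0


def watt_hours(samples_w: Sequence[float], interval_s: float = 1.0) -> float:
    """Energy from evenly spaced power readings (rectangle rule).

    Each reading is assumed to hold for `interval_s` seconds; the resulting
    watt-seconds (joules) are converted to watt-hours by dividing by 3600.
    """
    return sum(samples_w) * interval_s / 3600.0


print(f"{percent_more(28, 18):.1f}% more cores")  # 55.6% more cores
# Hypothetical trace: a steady 360 W for one hour of one-second samples.
print(watt_hours([360.0] * 3600))  # 360.0
```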

Power Maximum Load And Idle

Our enterprise lab records power draw every second. During the Linux-Bench tests, we captured multiple high-draw bursts that lasted only one second, cresting at 711W. We use the same sampling interval during our Linpack tests, but because of the 8176's lower AVX frequencies, we recorded only a 670W peak.
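With one reading per second, a one-second burst is simply a sample that clears some threshold while its neighbors don't. The Python sketch below reports the peak reading and the indices of such isolated excursions; the 650W threshold and the sample trace are hypothetical, and a spike shorter than the sampling interval may not show up in this kind of log at all.

```python
from typing import List, Sequence, Tuple


def peak_and_bursts(samples_w: Sequence[float],
                    threshold_w: float) -> Tuple[float, List[int]]:
    """Return the peak reading and indices of one-sample bursts above threshold."""
    if not samples_w:
        raise ValueError("need at least one sample")
    last = len(samples_w) - 1
    bursts = [
        i for i, w in enumerate(samples_w)
        if w >= threshold_w
        and (i == 0 or samples_w[i - 1] < threshold_w)     # not preceded by a high sample
        and (i == last or samples_w[i + 1] < threshold_w)  # not followed by one
    ]
    return max(samples_w), bursts


# Hypothetical trace with two isolated spikes above 650 W.
trace = [500.0, 520.0, 700.0, 515.0, 680.0, 505.0]
print(peak_and_bursts(trace, threshold_w=650.0))  # (700.0, [2, 4])
```

Sustained stretches above the threshold still count toward the peak but are not reported as one-second bursts.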

The 8176's extra 10 cores lead to higher overall power draw at idle and under full load. The second chart, though, which divides total consumption by core count, shows that Intel's 8176 uses far less power per core than the company's previous-gen CPUs. That paints a nice picture of improved efficiency.
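The per-core metric is a straight division, sketched below in Python. One hedge: this section doesn't state whether the test platform carried one socket or two, so the hypothetical `sockets` parameter makes that count explicit. And because we log platform power, the result also folds in DRAM and power-supply losses rather than isolating the cores themselves.

```python
def watts_per_core(total_w: float, cores_per_socket: int, sockets: int = 1) -> float:
    """Platform power divided by the total number of physical cores."""
    cores = cores_per_socket * sockets
    if cores <= 0:
        raise ValueError("core count must be positive")
    return total_w / cores


# Hypothetical reading: the same 560 W spread over one vs. two 28-core sockets.
print(watts_per_core(560.0, 28))             # 20.0
print(watts_per_core(560.0, 28, sockets=2))  # 10.0
```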


Paul Alcorn is the Editor-in-Chief for Tom's Hardware US. He also writes news and reviews on CPUs, storage, and enterprise hardware.

-

the nerd 389 Reply

Do these CPUs have the same thermal issues as the i9 series?

I know these aren't going to be overclocked, but the additional CPU temps introduce a number of non-trivial engineering challenges that would result in significant reliability issues if not taken into account.

Specifically, as thermal resistance to the heatsink increases, the thermal resistance to the motherboard drops with the larger socket and more pins. This means more heat will be dumped into the motherboard's traces. That could raise the temperatures of surrounding components to a point that reliability is compromised. This is the case with the Core i9 CPUs.

See the comments here for the numbers:

http://www.tomshardware.com/forum/id-3464475/skylake-mess-explored-thermal-paste-runaway-power.html -

Snipergod87 Reply

Wouldn't be surprised if they did, but also wouldn't be surprised if Intel used solder on these. It's also important to note that servers have much more airflow than your standard desktop, enabling better cooling all around, from the CPU to the VRMs. Server boards are designed for cooling, not aesthetics and stylish heatsink designs. -

InvalidError Reply

19926080 said: the thermal resistance to the motherboard drops with the larger socket and more pins. This means more heat will be dumped into the motherboard's traces.

That heat has to go from the die, through solder balls, the multi-layer CPU carrier substrate, those tiny contact fingers and, finally, the solder joints on the PCB. The thermal resistance from die to motherboard will still be over an order of magnitude worse than from the die to the heatsink, so the heat that path carries is less than what the VRM phases are already sinking into the motherboard's power and ground planes. I wouldn't worry about it.

-

bit_user Reply

"The 28C/56T Platinum 8176 sells for no less than $8719"

Actually, the big customers don't pay that much, but still... For that, it had better be made of platinum!

That's $311.39 per core!

The otherwise identical CPU jumps to a whopping $11722, if you want to equip it with up to 1.5 TB of RAM instead of only 768 GB.

Source: http://ark.intel.com/products/120508/Intel-Xeon-Platinum-8176-Processor-38_5M-Cache-2_10-GHz -

Kennyy Evony Reply

jowen3400 said: Can this run Crysis?

Jowen, did you just come up to a Ferrari and ask if it has a hitch for your grandma's trailer? -

bit_user Reply

19927274 said: W8 on ebay/aliexpress for $100

I wouldn't trust an $8k server CPU I got for $100. I guess if they're legit pulls from upgrades, you could afford to go through a few at that price to find one that works. Maybe they'd be so cheap because somebody already cherry-picked the good ones.

Still, has anyone had any luck with such heavily discounted server CPUs? Let's limit it to Sandy Bridge or newer. -

JamesSneed Reply

19927188 said: The 28C/56T Platinum 8176 sells for no less than $8719

That is still dirt cheap for a high-end server. An Oracle EE database license is going to run $200K+ on a server like this one. This is nothing in the grand scheme of things.

-

bit_user Reply

19927866 said: An Oracle EE database license is going to be 200K+ on a server like this one. This is nothing in the grand scheme of things.

A lot of people don't have such high software costs. In many cases, the software is mostly home-grown and open source (or like 100%, if you're Google).