Intel Xeon Platinum 8176 Scalable Processor Review


Test Platforms & How We Test

The Processors

We know, we know. This is another all-Intel line-up in an enterprise-oriented processor review. That's a natural side effect of the company's ~99.6% market share. Until AMD's EPYC processors become widely available, there really aren't any suitable x86 alternatives. However, we have a nice selection of Ivy Bridge, Haswell, and Broadwell-EP processors to document Intel's steady march of improvements up to the Xeon Platinum 8176.

The Test Platforms

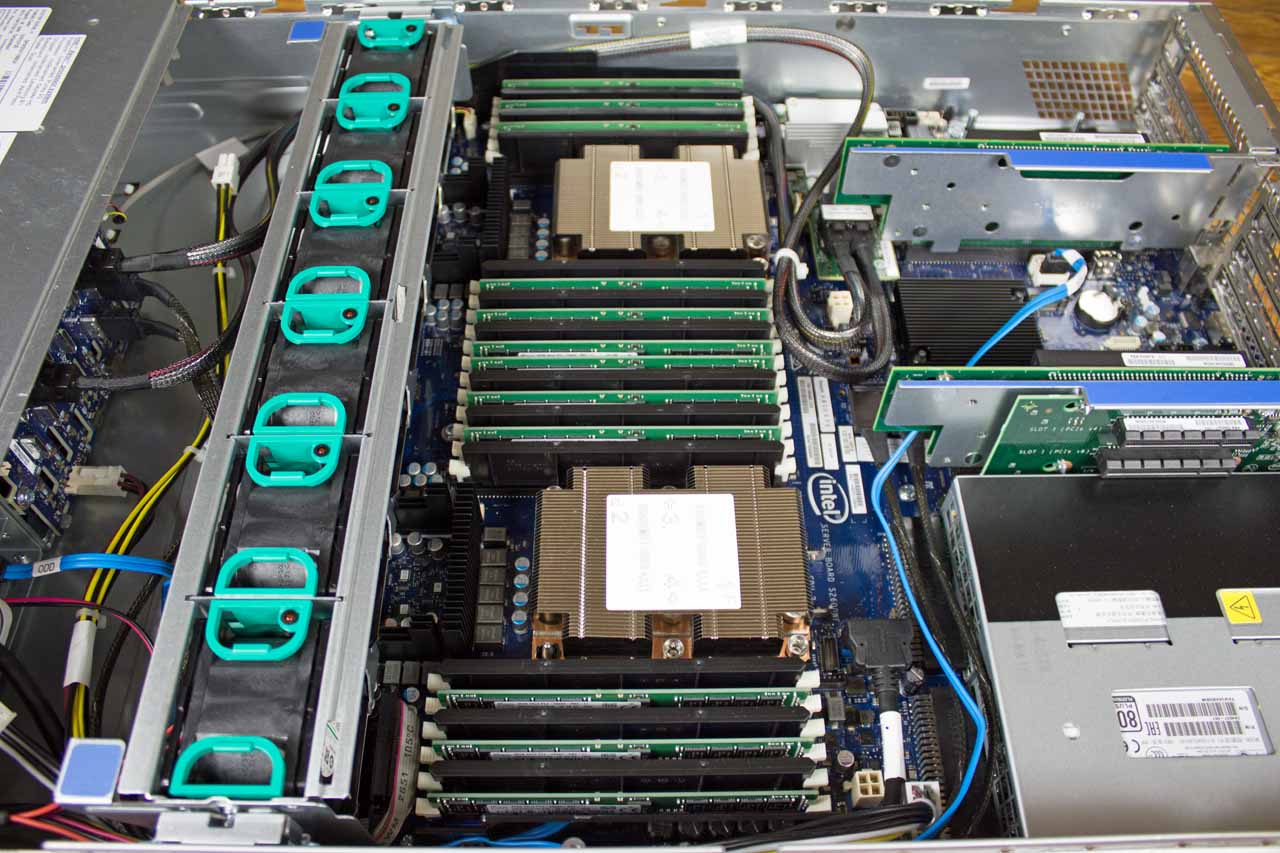

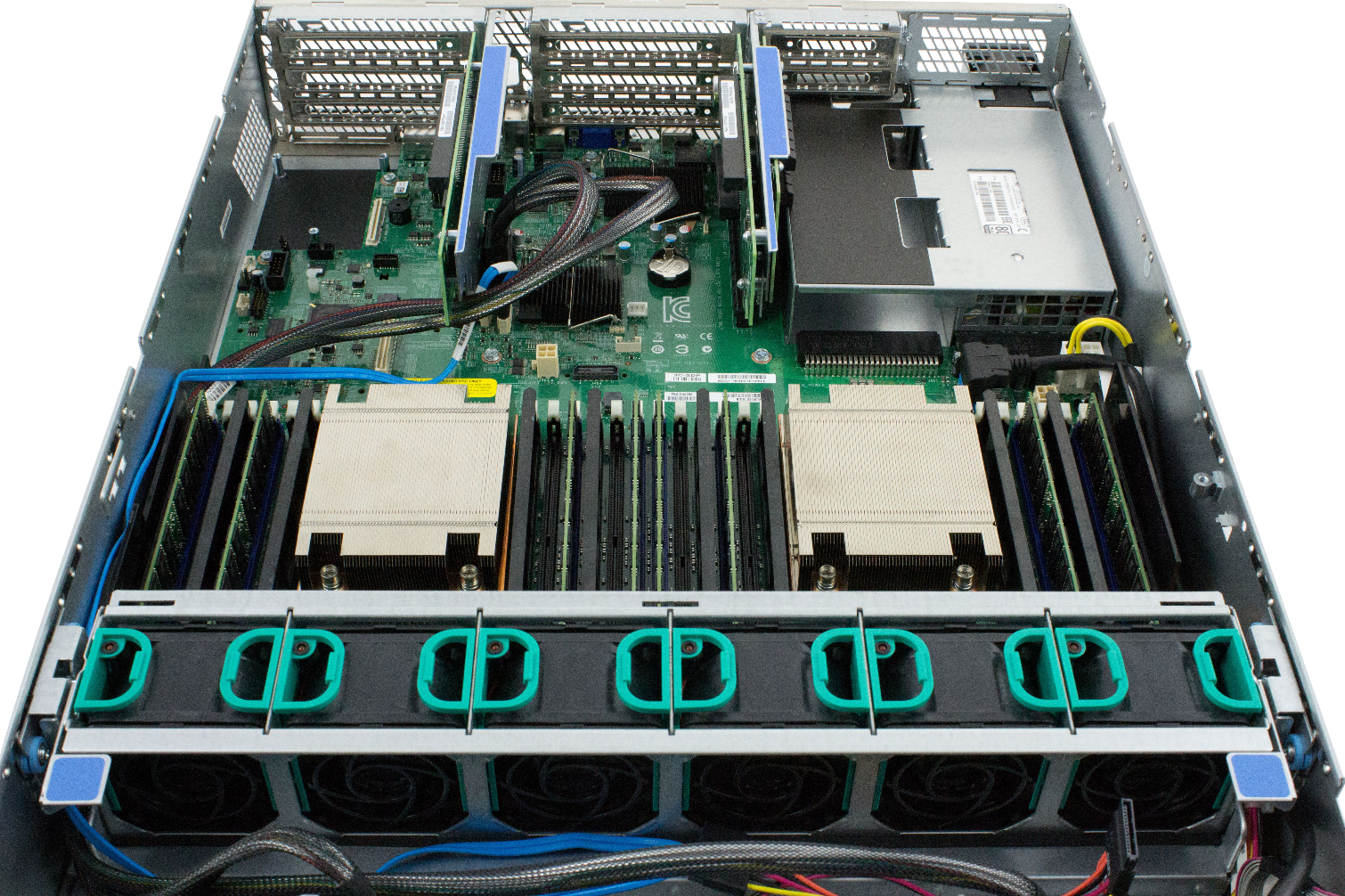

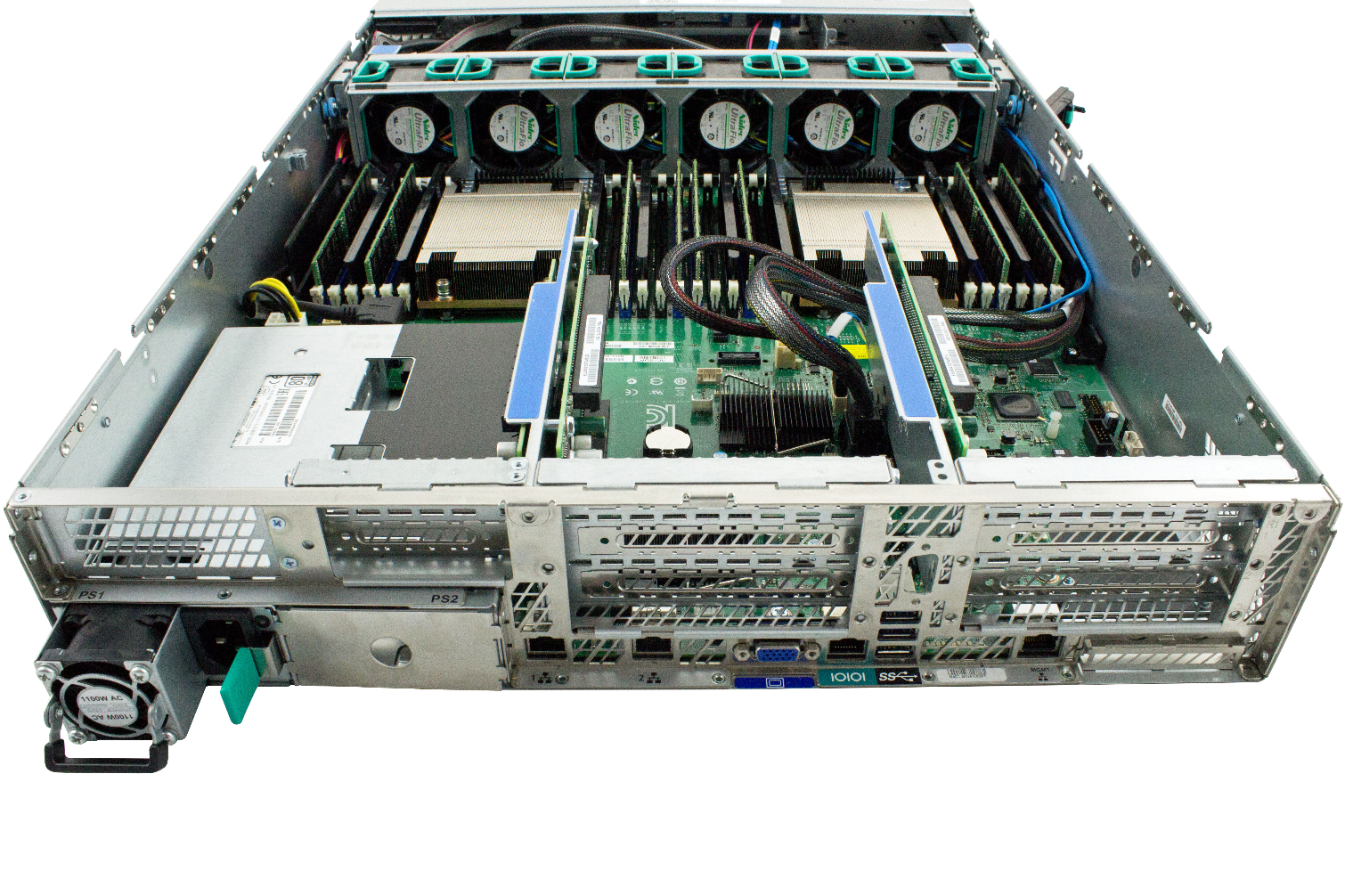

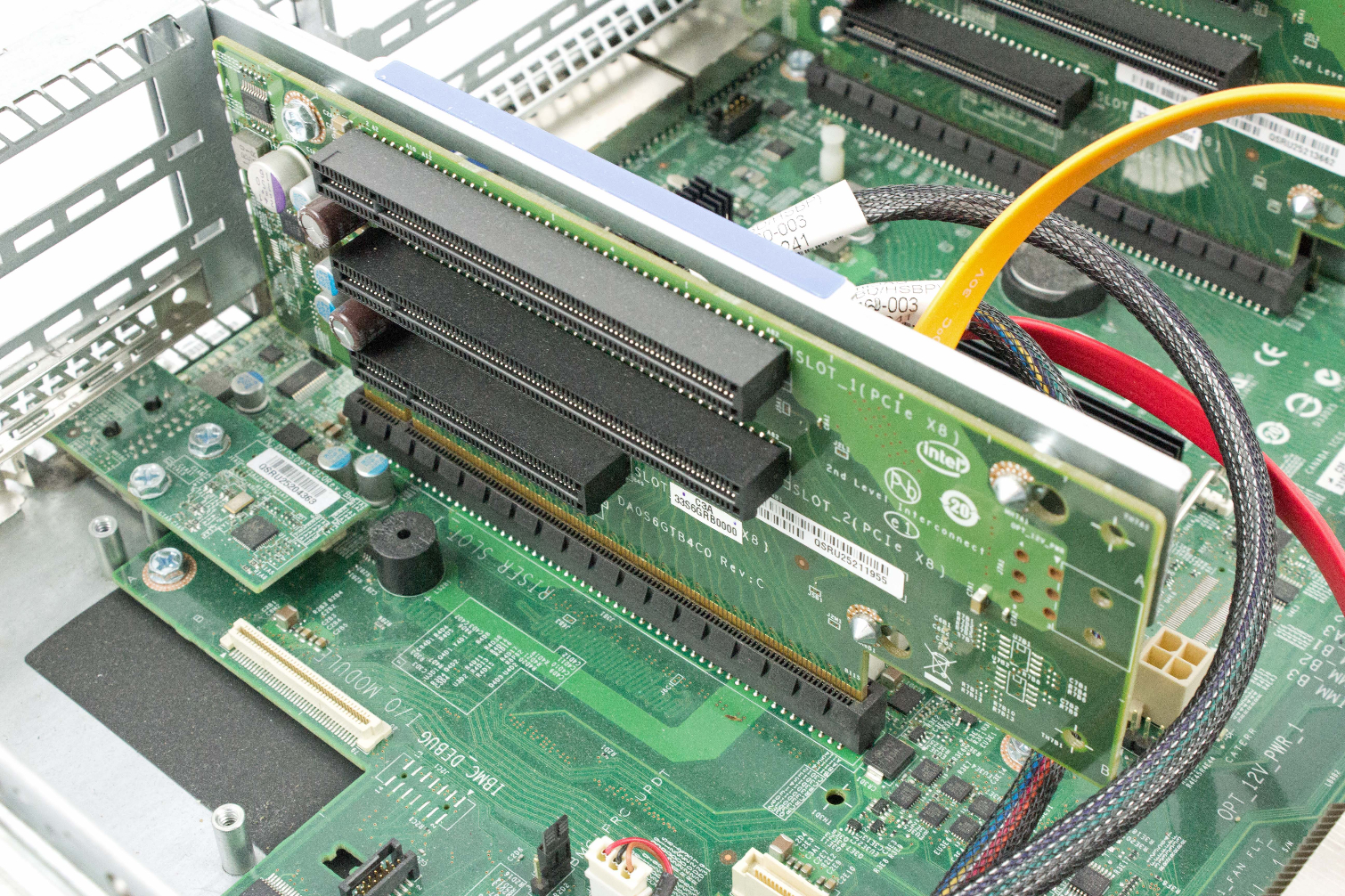

- Intel Purley S2P2SY3Q Server

Intel sent a Server System S2P2SY3Q test platform powered by a dual-socket Intel Server Board 2600WF. Two Xeon Platinum 8176 processors, which feature 28 cores and 56 threads apiece, are complemented by twelve 32GB SK hynix DDR4-2666 DIMMs. That provides a total of 56C/112T and 384GB of memory. The Software Development Platform includes two redundant 80 PLUS 1100W power supplies. The PSUs, like the fans, are hot-pluggable to avoid downtime due to component failure.

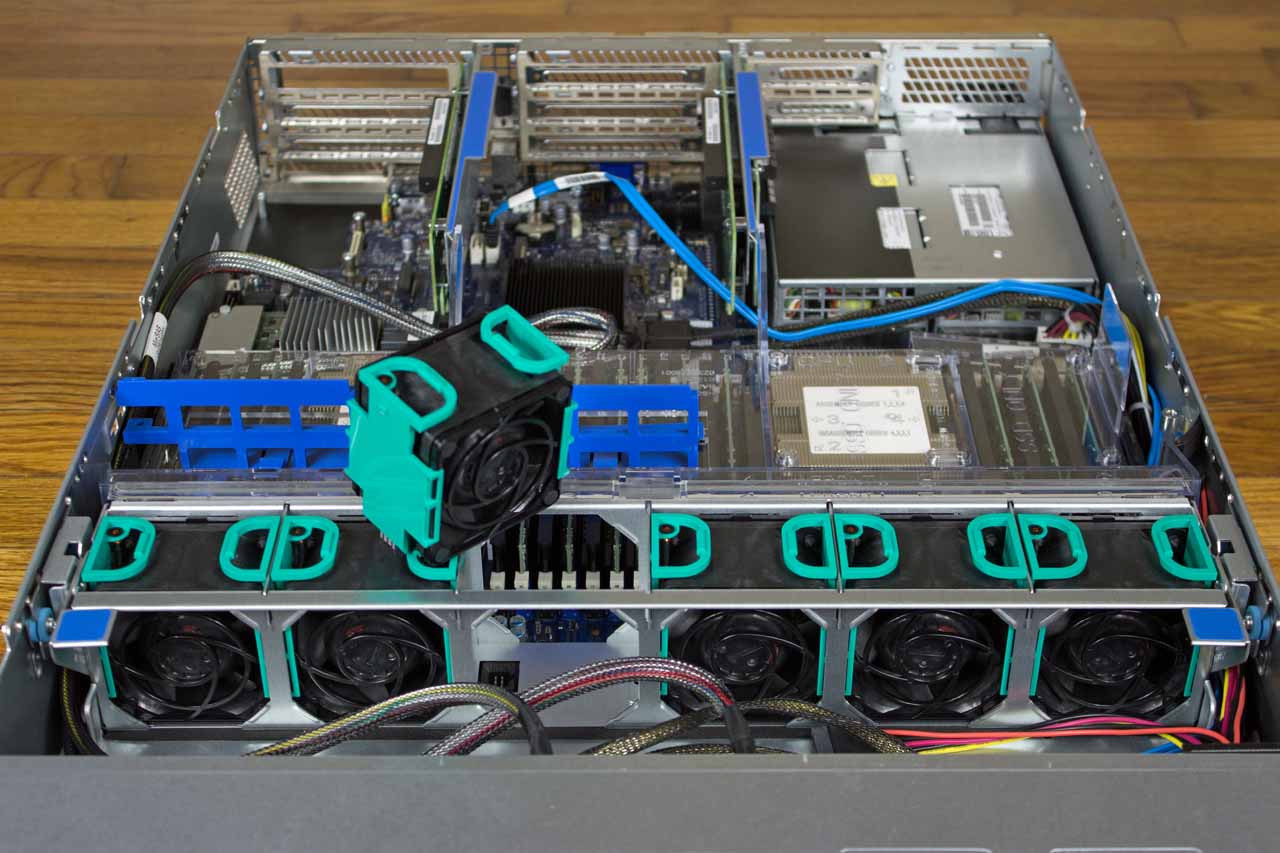

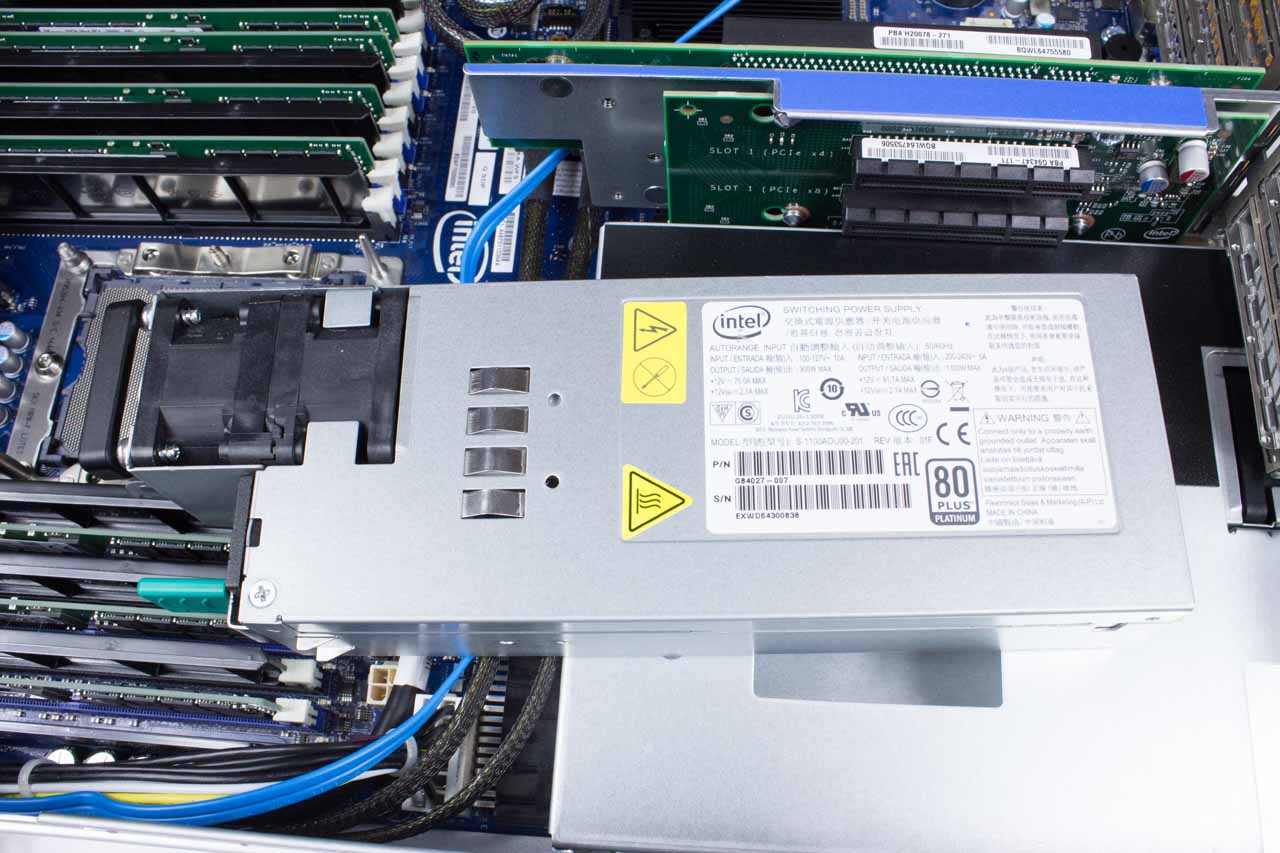

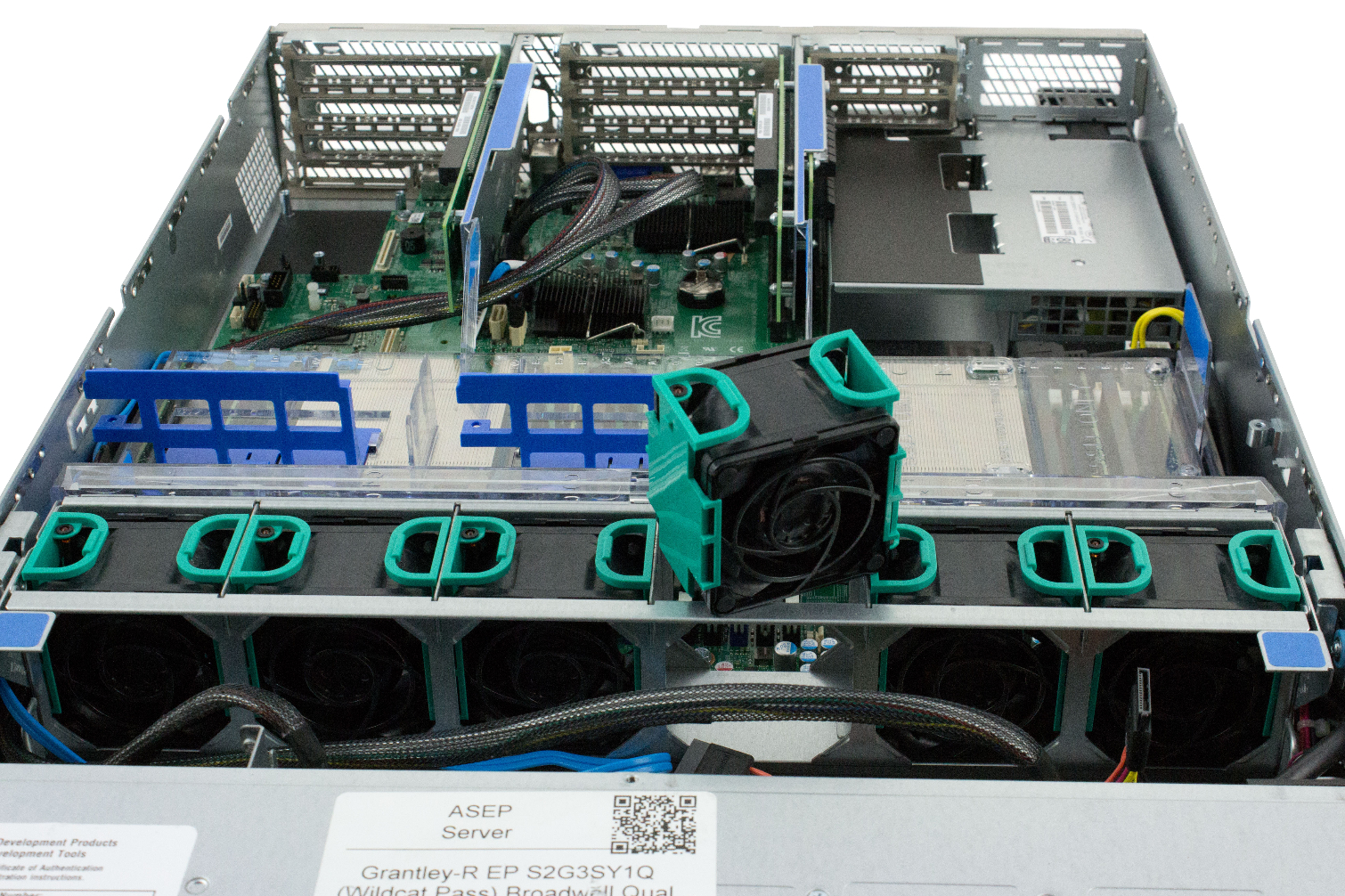

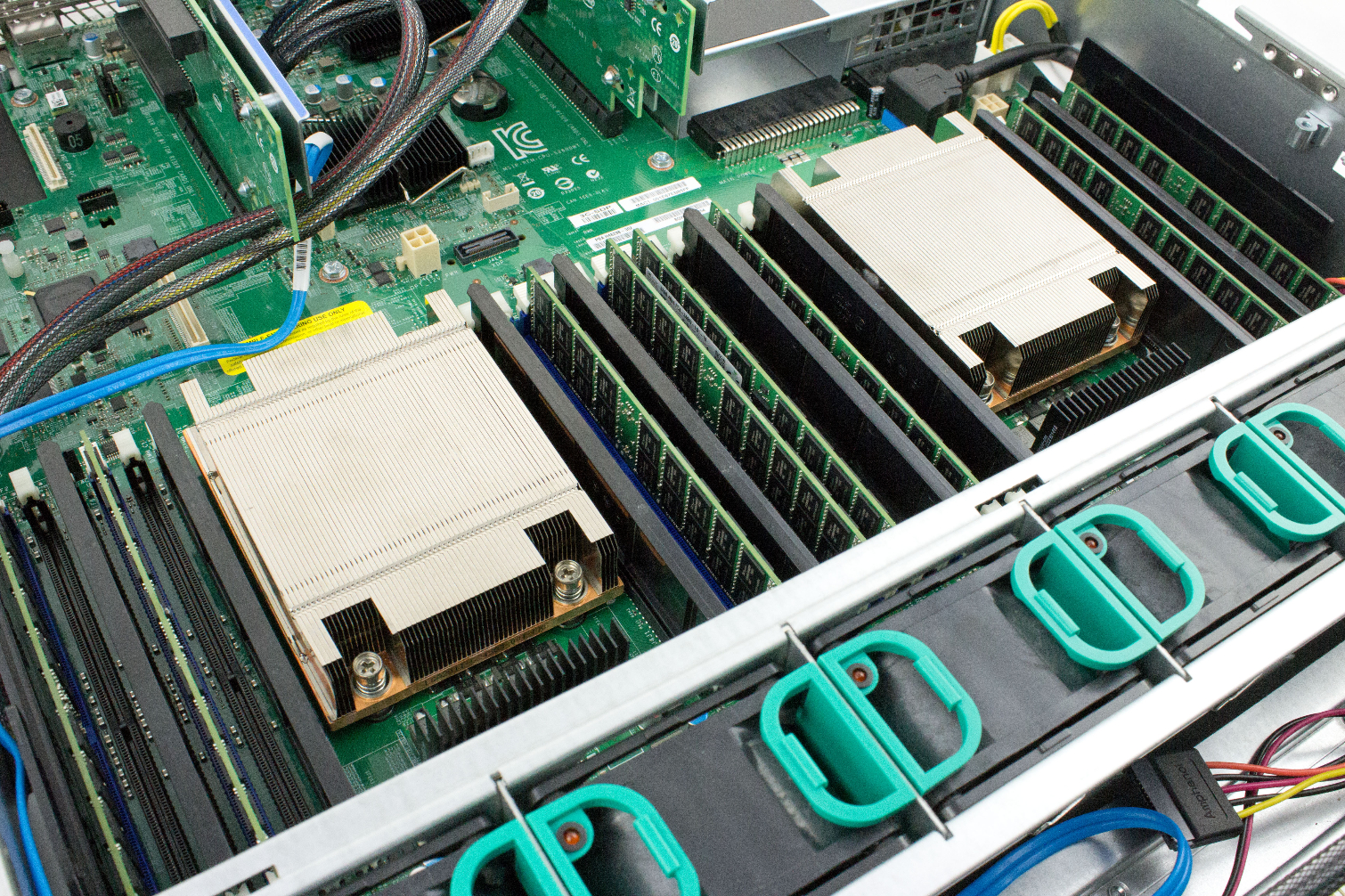

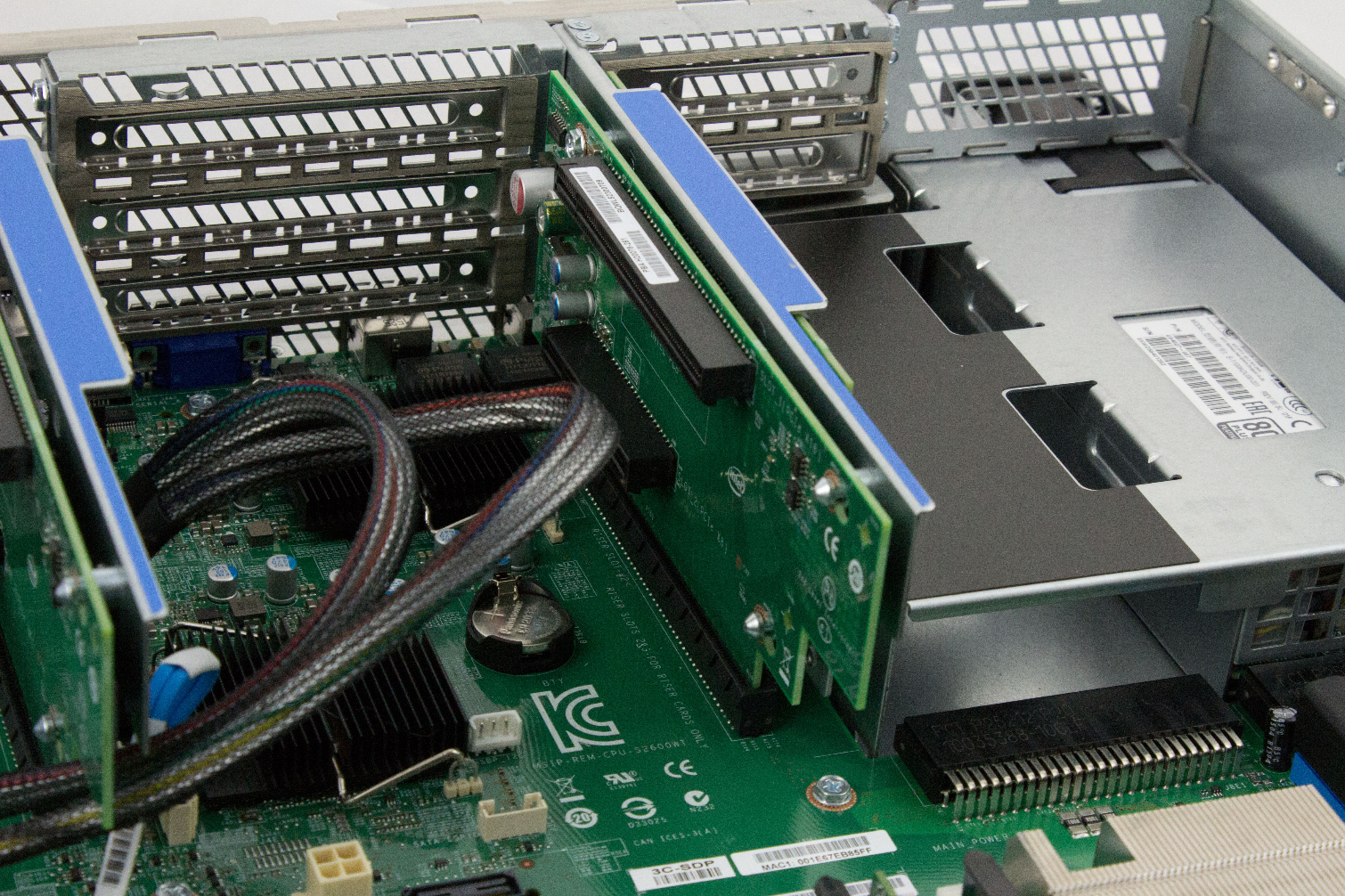

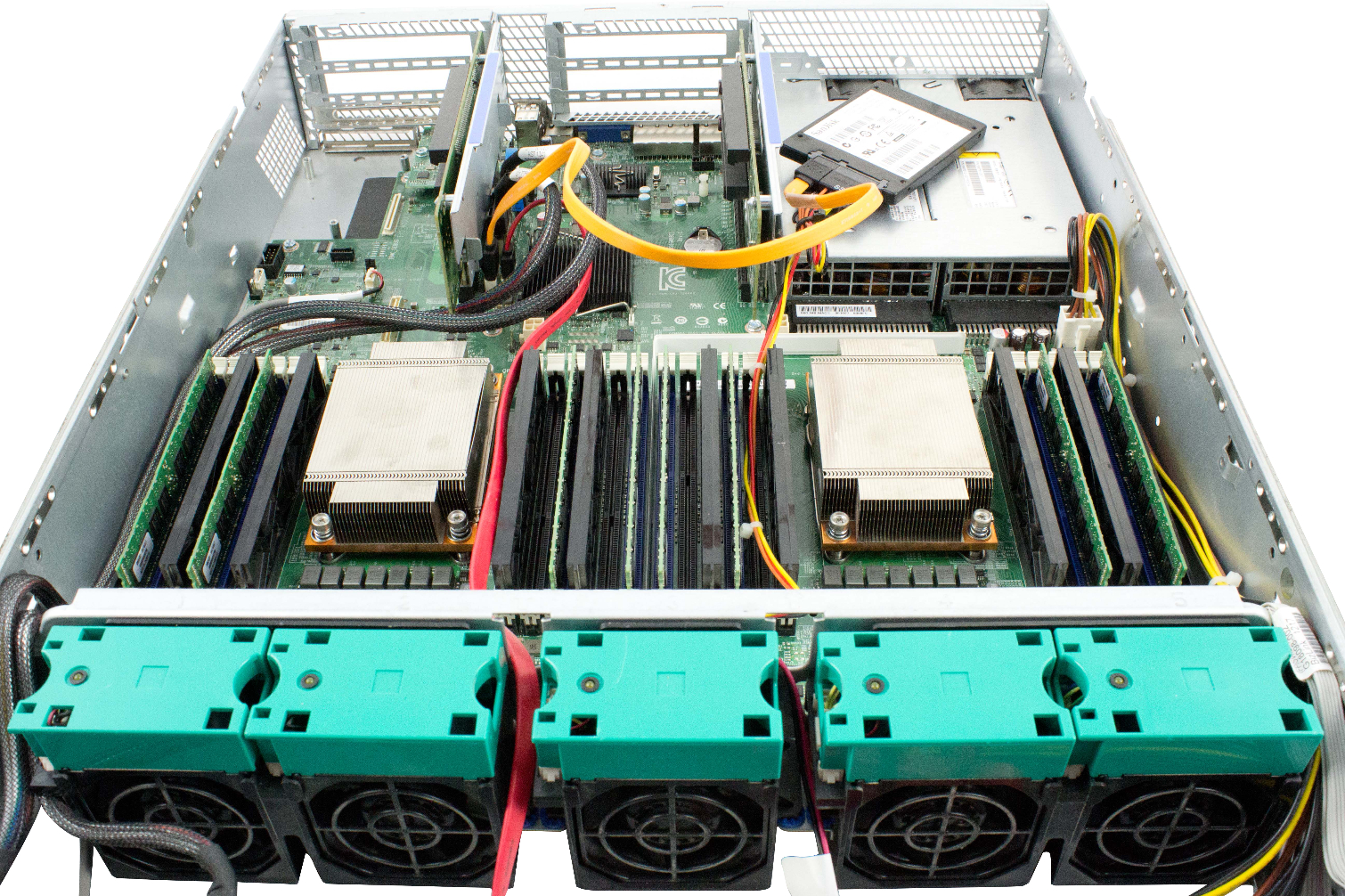

- Intel Wildcat Pass S2G3SY1Q Server

We tested the Broadwell-EP-based Xeon E5-2697 v4, the Haswell-EP-based Xeon E5-2699 v3, and the E5-2643 v3 on an Intel Software Development Platform server. The pre-production Grantley-R EP S2G3SY1Q (Wildcat Pass) Broadwell Qualification 2U test bed originally came with two Xeon E5-2697 v4 CPUs with 18 Hyper-Threaded cores and 45MB of shared cache apiece.

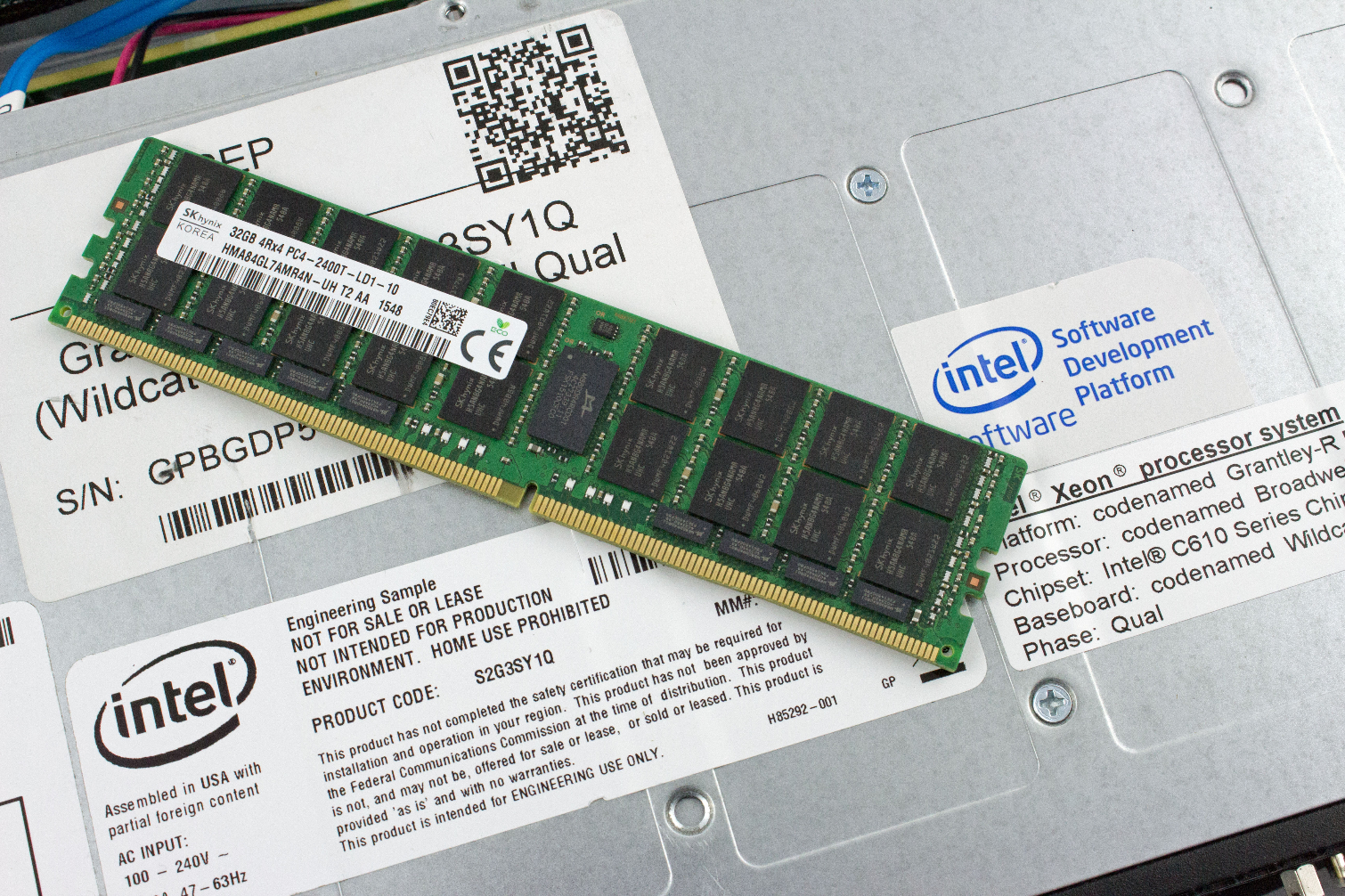

The test platform features Intel's C610 chipset family and includes eight 32GB SK hynix DDR4-2400 DIMMs (HMA84GL7AMR4N-UH). Intel provides this server for use as a software development platform; it's not designed for use in a production environment. As such, it lacks some of the features that facilitate redundancy, such as dual PSUs. One of the PSU bays is covered, but the other houses a single 900W power supply.

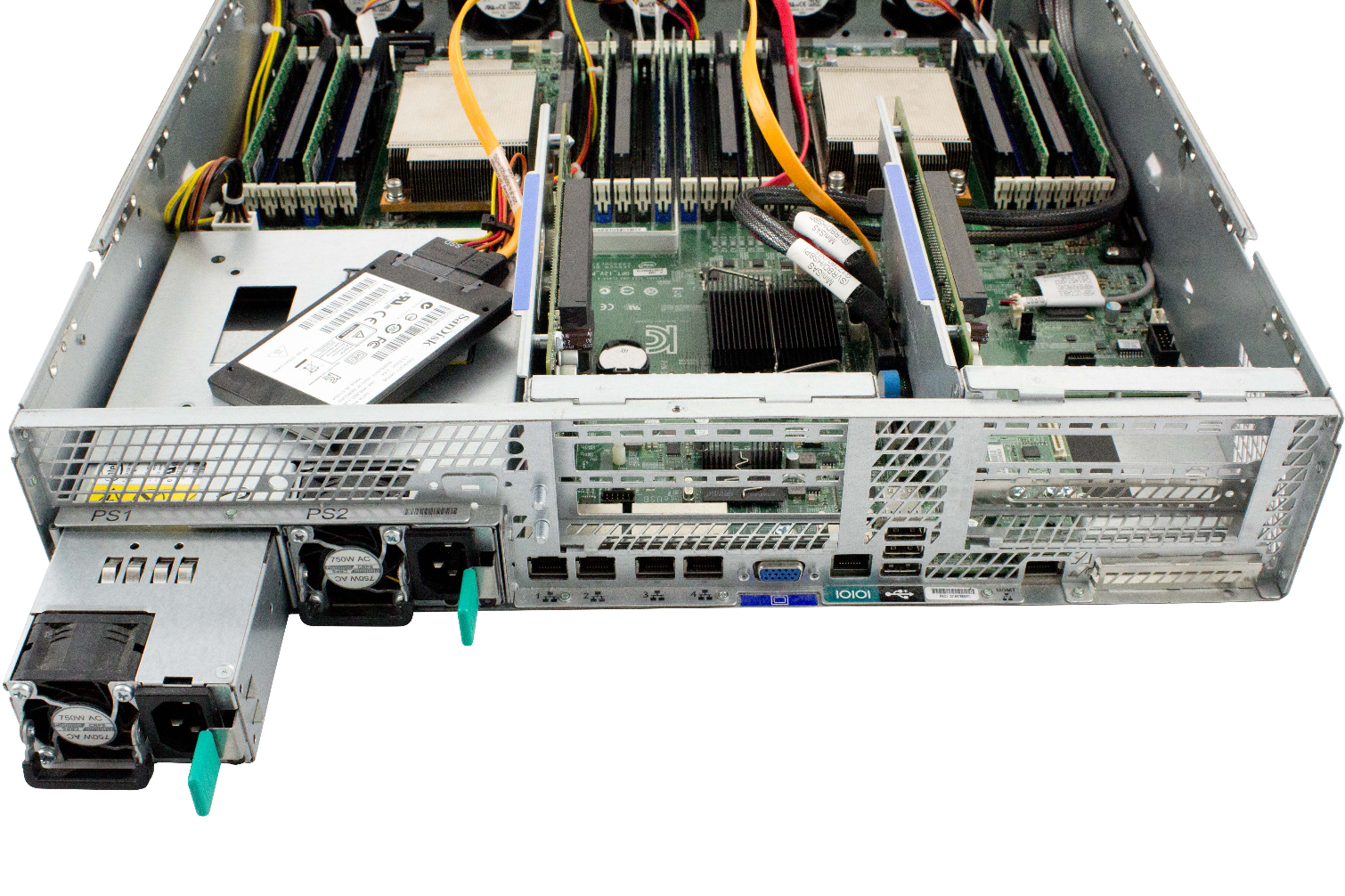

- Intel Server System R2208GZ4GC

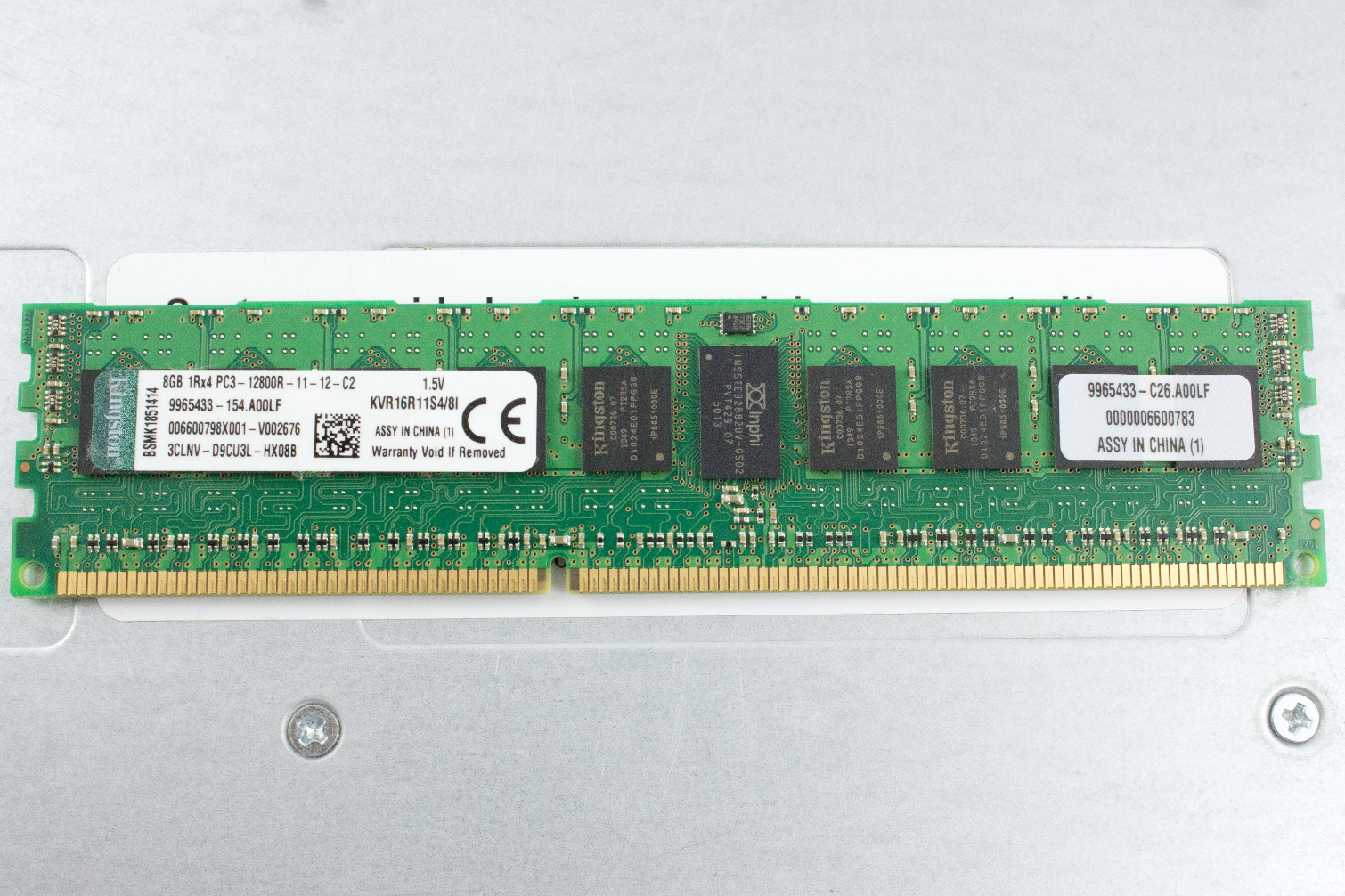

We tested the Ivy Bridge-based (v2) CPUs in Intel's Server System R2208GZ4GC, which features the S2600GZ motherboard (C602 chipset) housed in a production-class chassis with the requisite redundant and hot-swappable fans, along with dual hot-swappable 750W power supplies. We installed 64GB of Kingston DDR3-1600 memory in 8GB modules.
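The core, thread, and memory totals in these platform descriptions are simple products of the per-socket and per-DIMM figures. The minimal Python sketch below recomputes them as a sanity check. The `Platform` class and its field names are hypothetical, Hyper-Threading is assumed enabled (two threads per core), the Wildcat Pass entry assumes its original pair of E5-2697 v4 chips, and the R2208GZ4GC entry leaves the core count blank because the specific v2 CPUs aren't named here.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Platform:
    """Hypothetical record of one test server's CPU and memory configuration."""
    name: str
    sockets: int
    cores_per_socket: Optional[int]  # None when the CPU model isn't specified
    dimms: int
    dimm_gb: int
    threads_per_core: int = 2  # assumption: Hyper-Threading enabled

    def memory_gb(self) -> int:
        # Total installed memory = DIMM count x capacity per DIMM
        return self.dimms * self.dimm_gb

    def summary(self) -> str:
        mem = f"{self.memory_gb()}GB"
        if self.cores_per_socket is None:
            return f"{self.name}: cores not specified, {mem}"
        cores = self.sockets * self.cores_per_socket
        threads = cores * self.threads_per_core
        return f"{self.name}: {cores}C/{threads}T, {mem}"


PLATFORMS = [
    # Two 28-core Xeon Platinum 8176 chips, twelve 32GB DIMMs
    Platform("S2P2SY3Q, 2x Xeon Platinum 8176", 2, 28, 12, 32),
    # Original configuration: two 18-core E5-2697 v4 chips, eight 32GB DIMMs
    Platform("S2G3SY1Q, 2x Xeon E5-2697 v4", 2, 18, 8, 32),
    # 64GB installed as 8GB modules -> 64 // 8 = 8 DIMMs;
    # sockets assumed dual, unused here since cores aren't given
    Platform("R2208GZ4GC, Ivy Bridge v2", 2, None, 64 // 8, 8),
]

if __name__ == "__main__":
    for p in PLATFORMS:
        print(p.summary())
```

Running it prints one line per platform: core and thread totals where the CPUs are known, and installed memory for all three.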

How We Test

We benchmarked the servers with the open source Linux-Bench script, which is available on Linux-Bench.com and GitHub. ServeTheHome and others in the open source community maintain it. The suite runs from an Ubuntu 14.04 LiveCD, booted either from local storage or through a KVM-over-IP connection. The script installs dependencies and runs several well-known independent open source benchmarks that characterize CPU performance.

Most enterprise deployments are built for specific needs and workloads, and as tempting as application testing is, there are far too many variables for the results to apply to more than a small subset of users. The benchmarks in this article encompass several industry-standard tools that quantify performance trends, but it's worth noting that optimized deployments could unlock even more performance.


Paul Alcorn is the Editor-in-Chief for Tom's Hardware US. He also writes news and reviews on CPUs, storage, and enterprise hardware.

-

the nerd 389: Do these CPUs have the same thermal issues as the i9 series?

I know these aren't going to be overclocked, but the additional CPU temps introduce a number of non-trivial engineering challenges that would result in significant reliability issues if not taken into account.

Specifically, as thermal resistance to the heatsink increases, the thermal resistance to the motherboard drops with the larger socket and more pins. This means more heat will be dumped into the motherboard's traces. That could raise the temperatures of surrounding components to a point that reliability is compromised. This is the case with the Core i9 CPUs.

See the comments here for the numbers:

http://www.tomshardware.com/forum/id-3464475/skylake-mess-explored-thermal-paste-runaway-power.html -

Snipergod87, quoting the nerd 389: "Do these CPUs have the same thermal issues as the i9 series?"

Wouldn't be surprised if they did, but also wouldn't be surprised if Intel used solder on these. Also, it is important to note that servers have much more airflow than your standard desktop, enabling better cooling all around, from the CPU to the VRMs. Server boards are designed for cooling as well, not aesthetics and stylish heatsink designs. -

InvalidError, quoting the nerd 389: "the thermal resistance to the motherboard drops with the larger socket and more pins. This means more heat will be dumped into the motherboard's traces."

That heat has to go from the die, through solder balls, the multi-layer CPU carrier substrate, those tiny contact fingers and, finally, the solder joints on the PCB. The thermal resistance from die to motherboard will still be over an order of magnitude worse than from die to heatsink, and the heat taking that path is less than what the VRM phases are sinking into the motherboard's power and ground planes. I wouldn't worry about it.

-

bit_user, quoting the review: "The 28C/56T Platinum 8176 sells for no less than $8719"

Actually, the big customers don't pay that much, but still... For that, it had better be made of platinum!

That's $311.39 per core!

The otherwise identical CPU jumps to a whopping $11722, if you want to equip it with up to 1.5 TB of RAM instead of only 768 GB.

Source: http://ark.intel.com/products/120508/Intel-Xeon-Platinum-8176-Processor-38_5M-Cache-2_10-GHz -

Kennyy Evony, quoting jowen3400: "Can this run Crysis?"

Jowen, did you just come up to a Ferrari and ask if it has a hitch for your grandma's trailer? -

bit_user, quoting another reader: "W8 on eBay/AliExpress for $100"

I wouldn't trust an $8k server CPU I got for $100. I guess if they're legit pulls from upgrades, you could afford to go through a few at that price to find one that works. Maybe they're so cheap because somebody already cherry-picked the good ones.

Still, has anyone had any luck with such heavily discounted server CPUs? Let's limit it to Sandy Bridge or newer. -

JamesSneed, quoting bit_user: "That's $311.39 per core!"

That is still dirt cheap for a high-end server. An Oracle EE database license is going to be $200K+ on a server like this one. This is nothing in the grand scheme of things.

-

bit_user, quoting JamesSneed: "An Oracle EE database license is going to be 200K+ on a server like this one. This is nothing in the grand scheme of things."

A lot of people don't have such high software costs. In many cases, the software is mostly home-grown and open source (or like 100%, if you're Google).