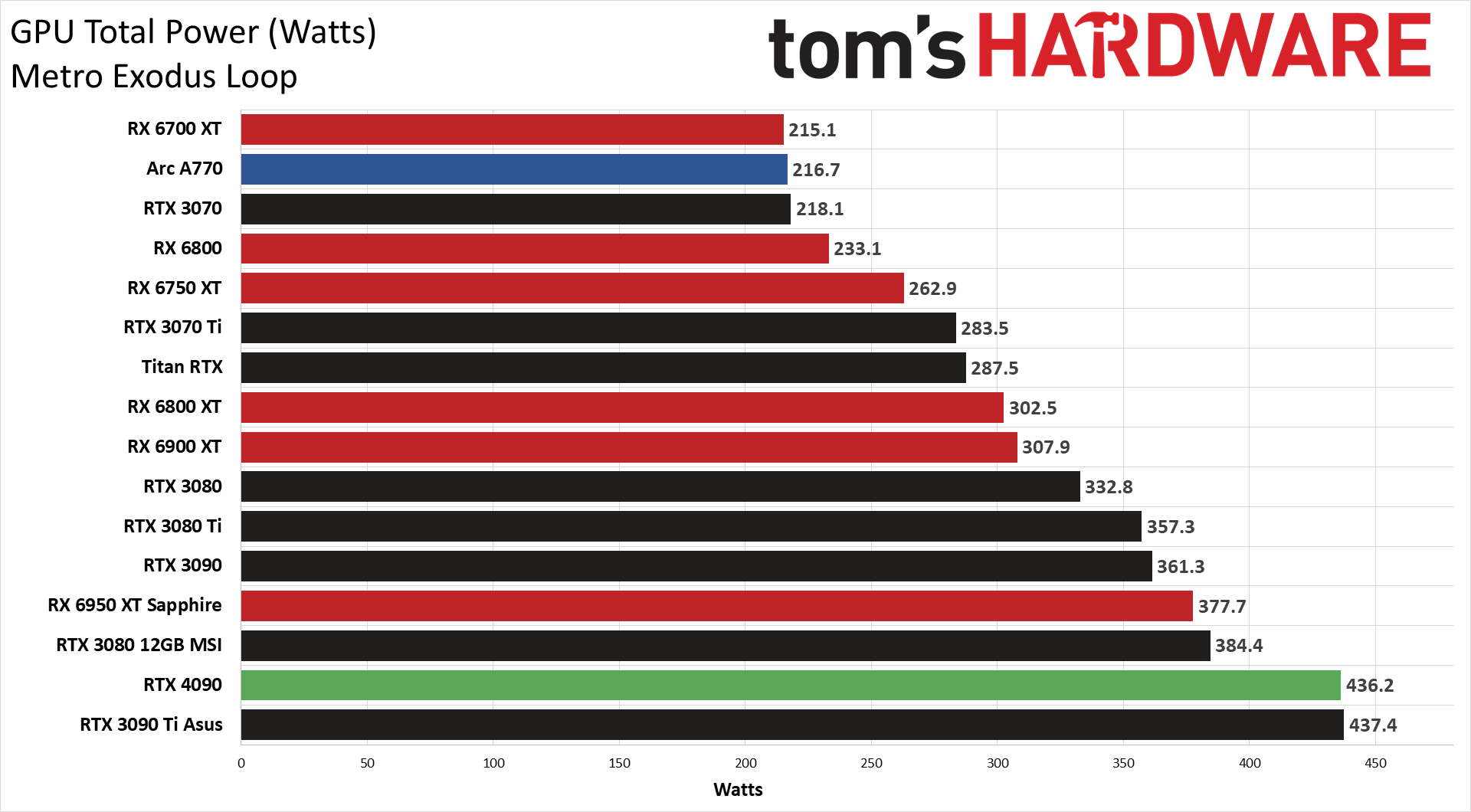

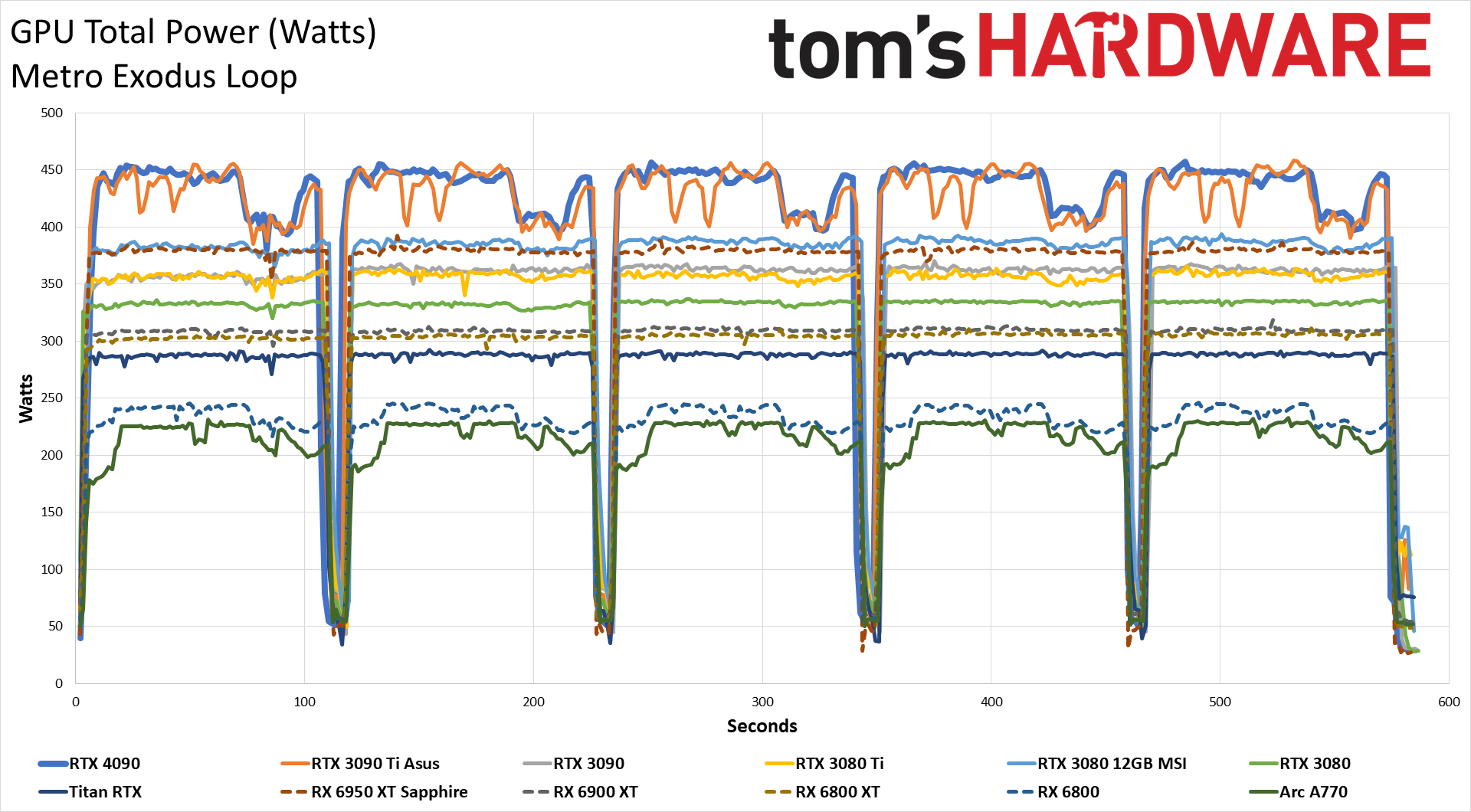

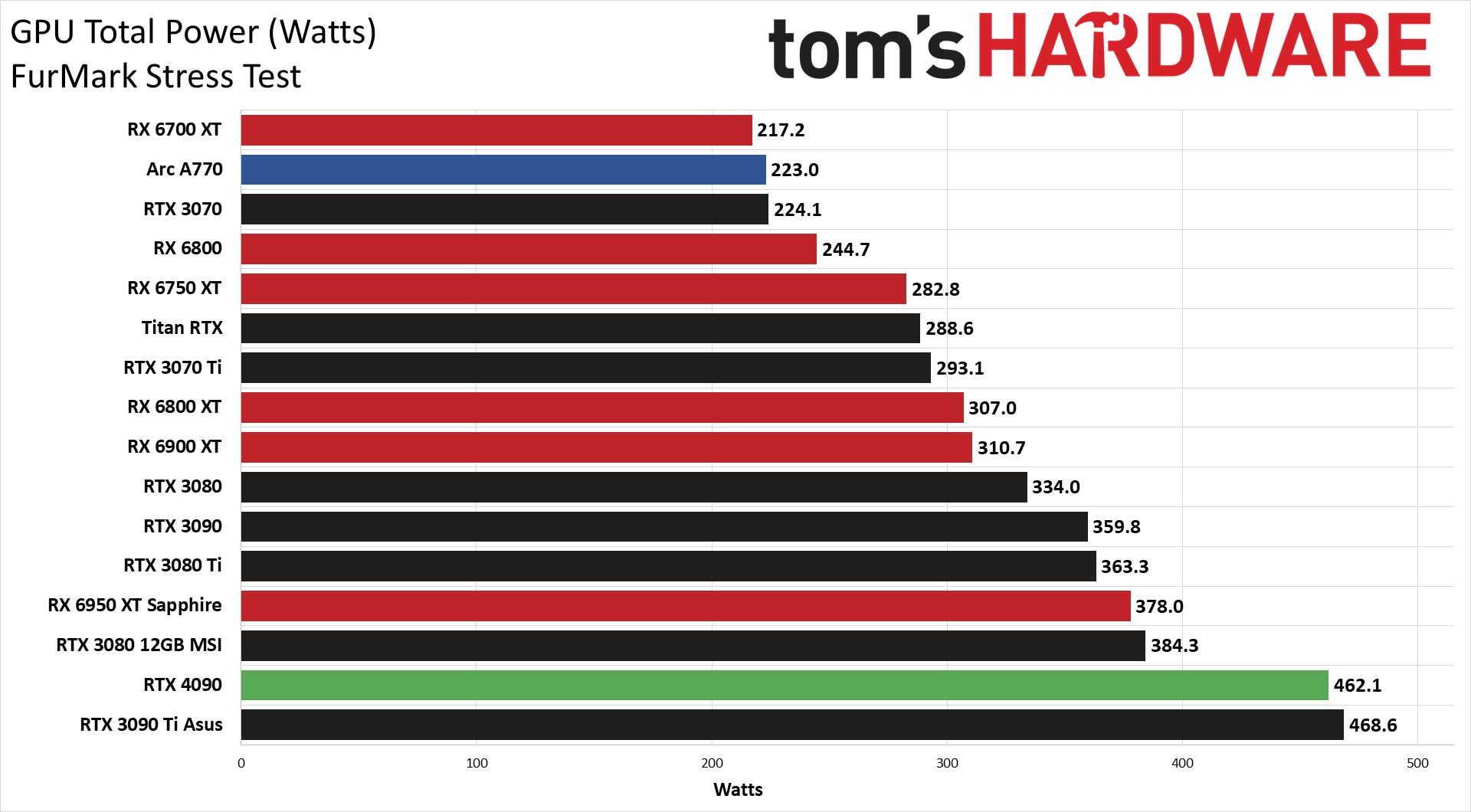

We measure real-world power consumption using Powenetics testing hardware and software. We capture in-line GPU power consumption by collecting data while looping Metro Exodus (the original, not the enhanced version) and while running the FurMark stress test. Our test PC remains the same old Core i9-9900K as we've used previously, to keep results consistent.

For the RTX 4090 Founders Edition, our previous settings of 2560x1440 ultra in Metro and 1600x900 in FurMark clearly weren't pushing the GPU hard enough. Power draw was well under the rated 450W TBP, so we increased the resolution and settings to 4K Extreme for Metro and 1440p for FurMark. The following charts are intended to represent worst-case power consumption, temperatures, and so on, so we check other settings to ensure we're pushing the GPUs as hard as reasonably possible.

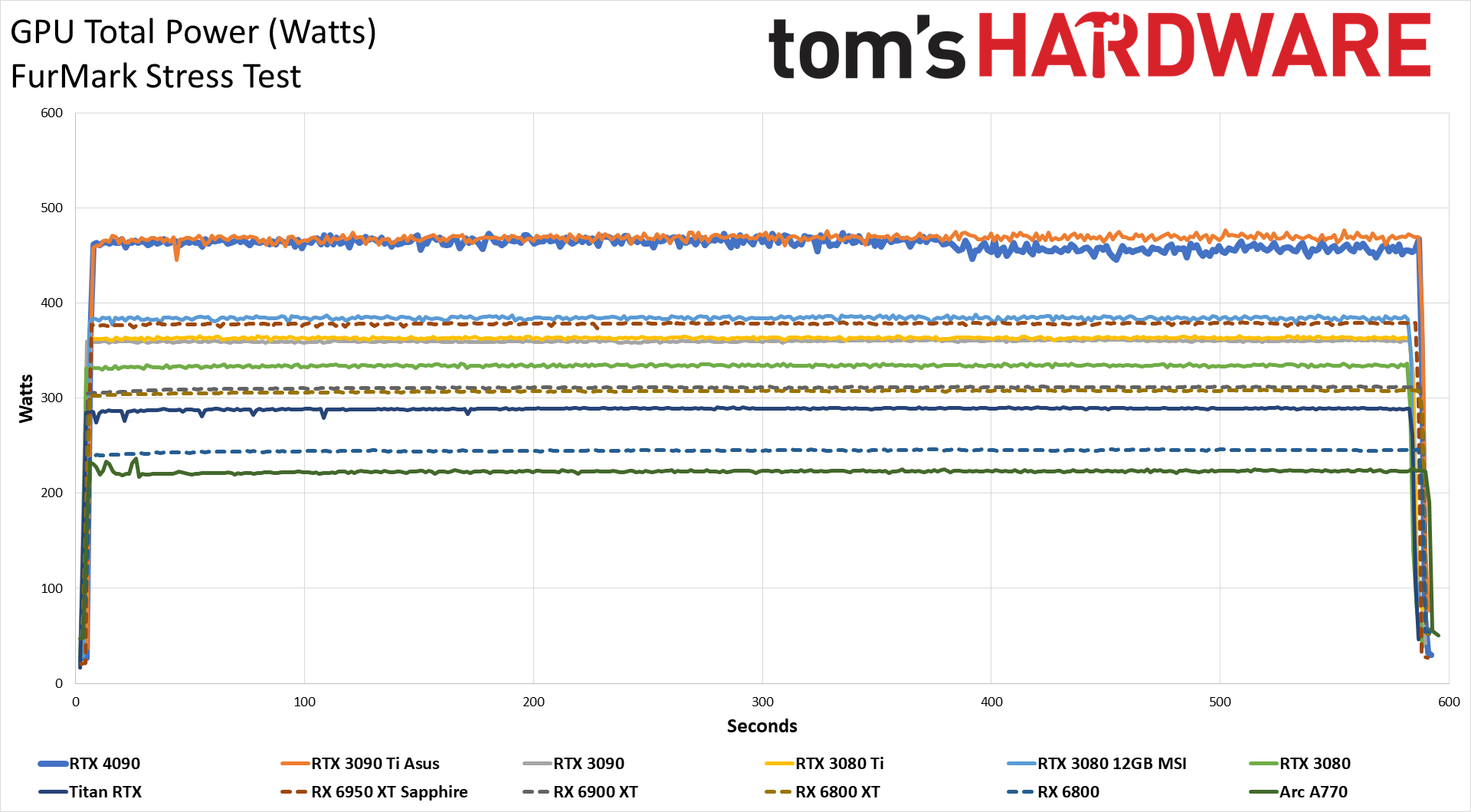

Compared to the Asus RTX 3090 Ti, the power results are very similar. That makes sense, as both cards have a 450W TBP. Considering you're getting at least 50% higher performance with the 4090 (at 4K), that shows the core architecture is quite a bit more efficient than Ampere. Both the 4090 and 3090 Ti average just under 440W in Metro Exodus, and 460W–470W in FurMark. Interestingly, the 4090 power draw does take a dip after about six minutes running FurMark, but we don't see a corresponding drop in clock or fan speeds.
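As a rough illustration of the efficiency point, the sketch below computes relative performance per watt. The function name and the normalized scores are assumptions for illustration only: the 3090 Ti is set to 1.0, the 4090 to 1.5 (the "at least 50% higher" figure at 4K), and both cards are taken at roughly 440W, as in Metro Exodus.

```python
def perf_per_watt(relative_perf: float, watts: float) -> float:
    """Return performance per watt for a normalized performance score."""
    if watts <= 0:
        raise ValueError("watts must be positive")
    return relative_perf / watts


# Assumed inputs: normalized 4K performance and approximate Metro Exodus power.
rtx_3090_ti = perf_per_watt(1.0, 440.0)
rtx_4090 = perf_per_watt(1.5, 440.0)

# With equal power draw, the efficiency gain equals the performance gain.
efficiency_gain = rtx_4090 / rtx_3090_ti
print(f"RTX 4090 efficiency relative to RTX 3090 Ti: {efficiency_gain:.2f}x")
```

Because the two cards draw nearly the same power, any performance uplift translates almost directly into a performance-per-watt uplift.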

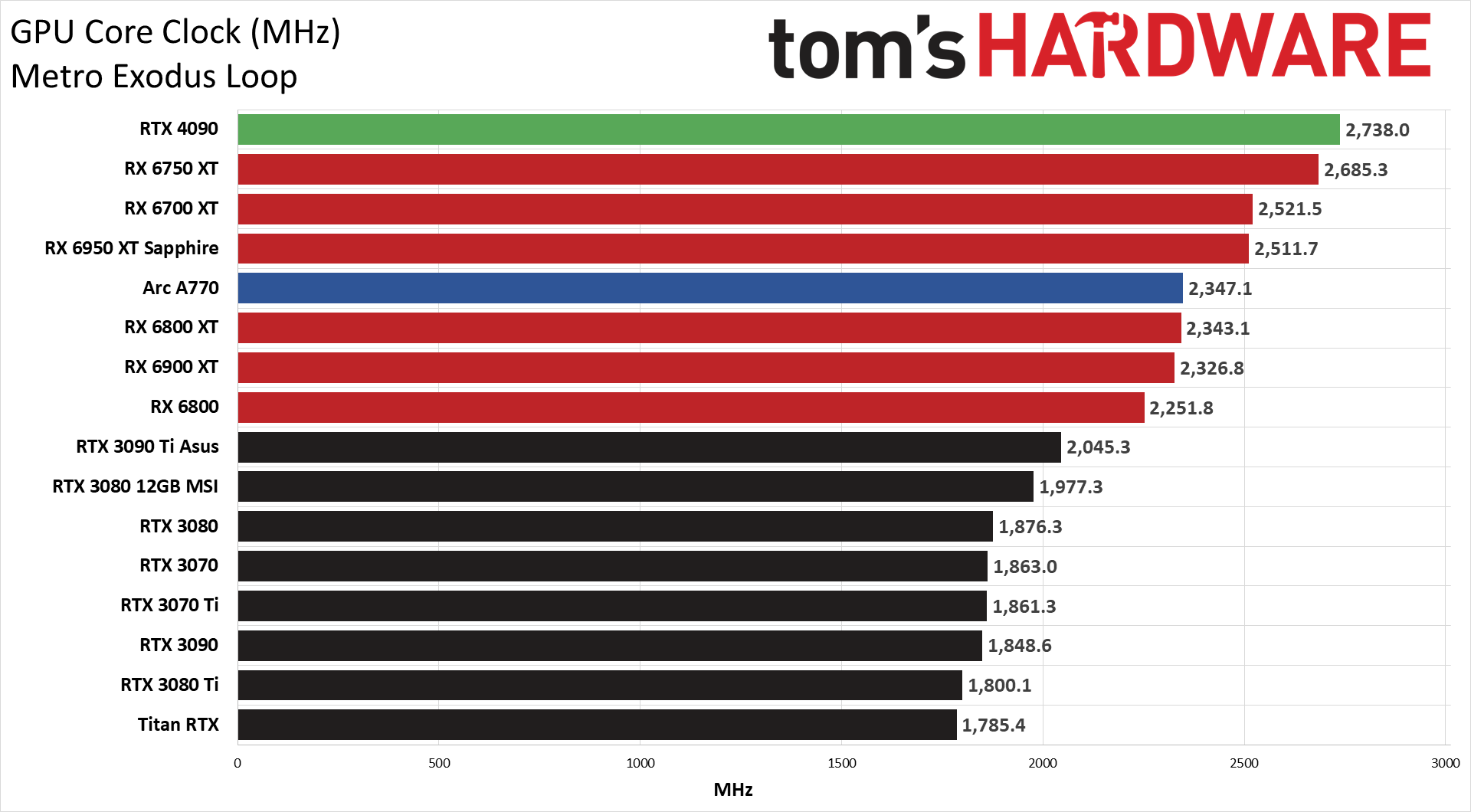

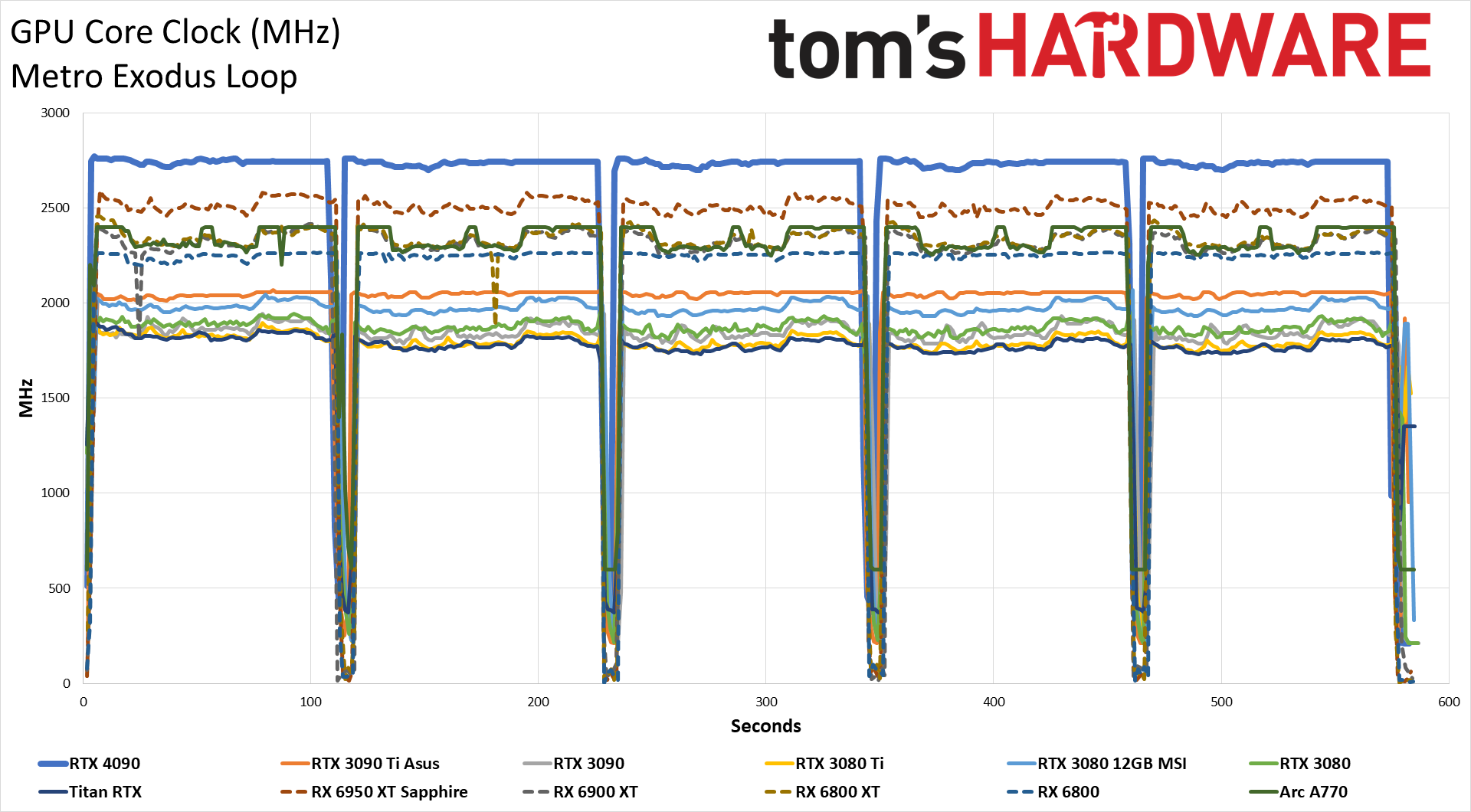

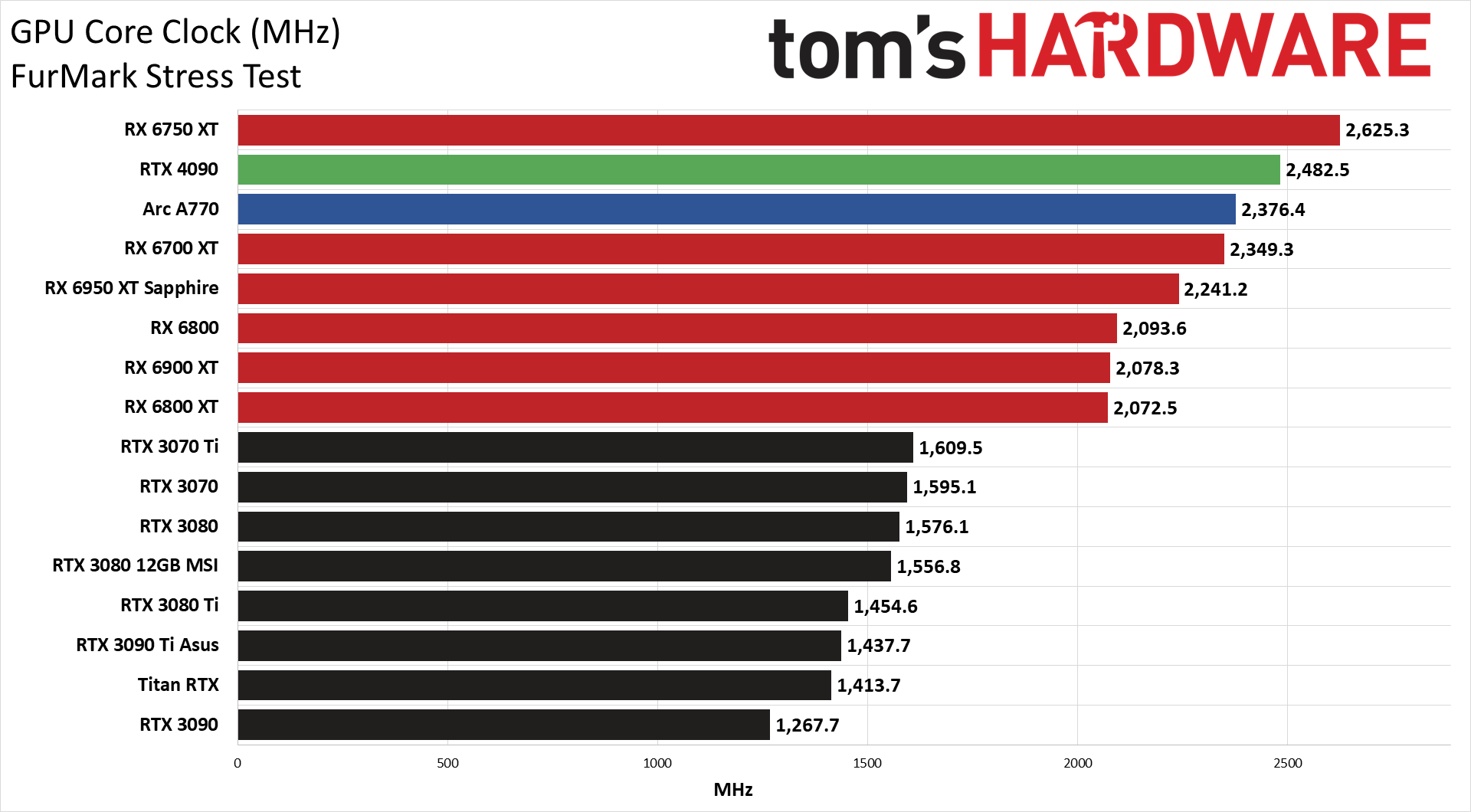

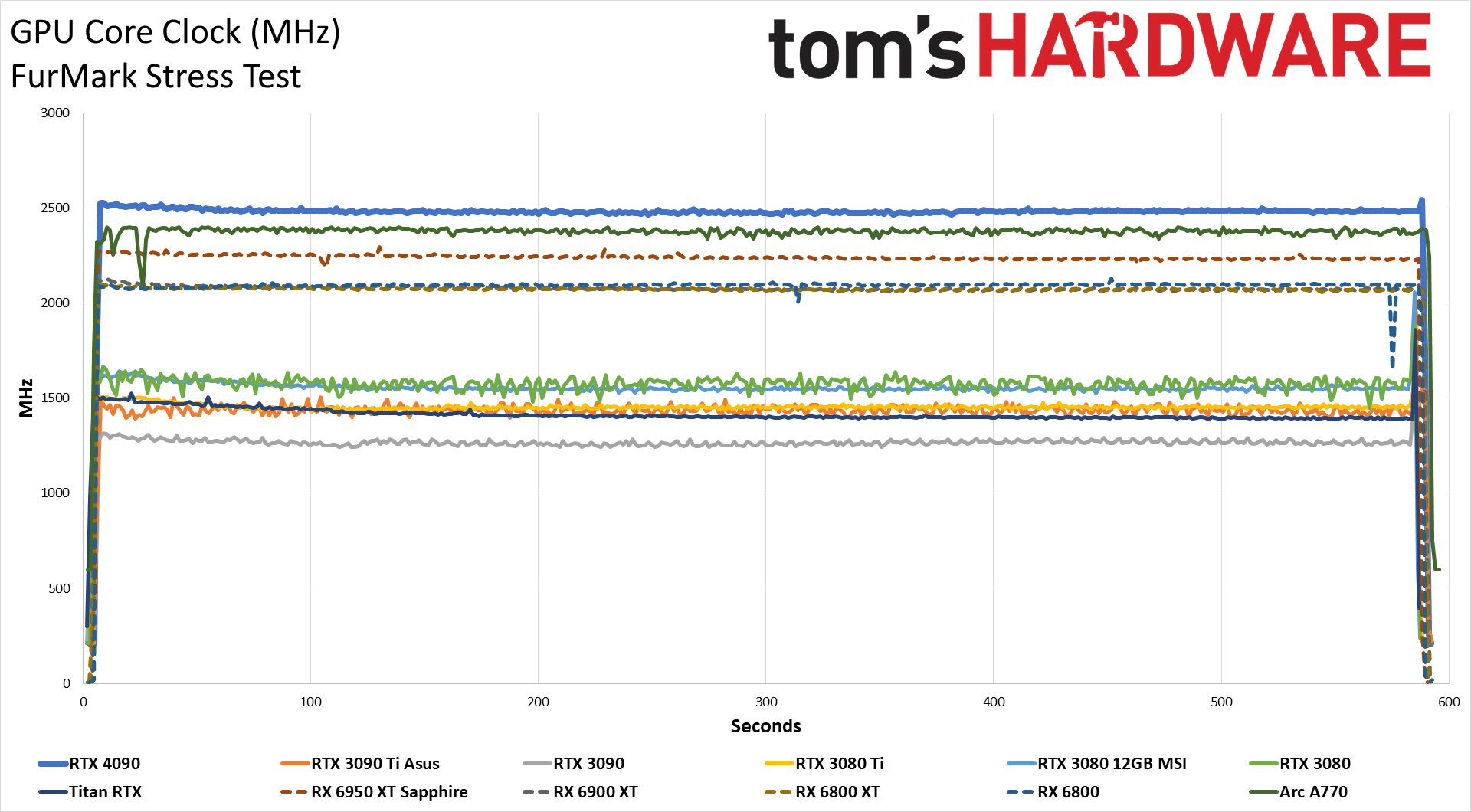

GPU clocks are a massive improvement over any prior Nvidia GPU architecture. The RTX 4090 has an official boost clock of 2520 MHz, though Nvidia GPUs often exceed their rated boost clocks in gaming workloads. In Metro Exodus, as well as many of the other games we've checked, typical GPU clocks are in the 2750 MHz range. That's higher than even AMD's RX 6750 XT, and there are indications we could push those clocks even higher if we're willing to raise the power limit. FurMark does drop the 4090 to just under 2.5 GHz, but the other Nvidia GPUs throttle even more, with the 3090 Ti as an example running at just 1.44 GHz.

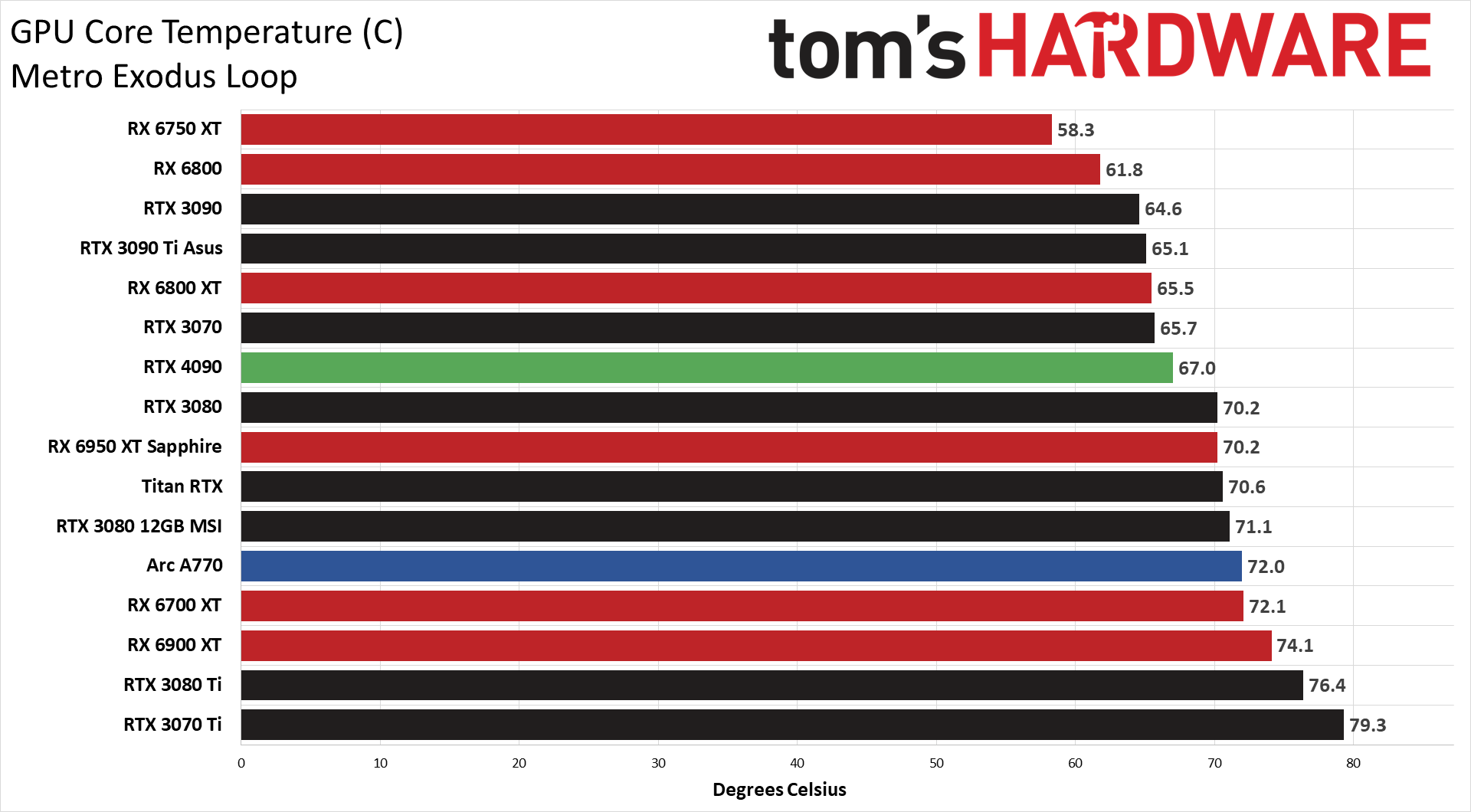

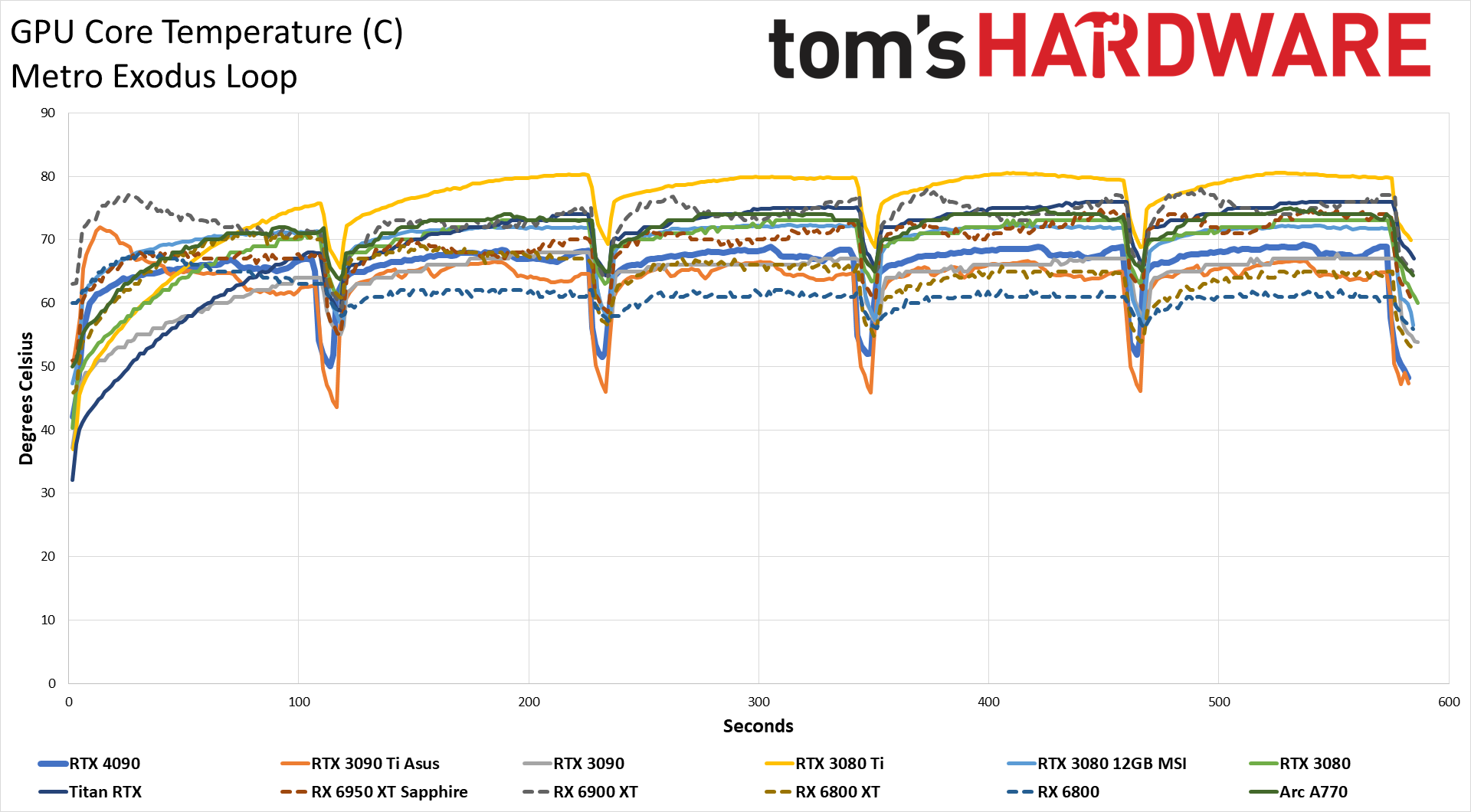

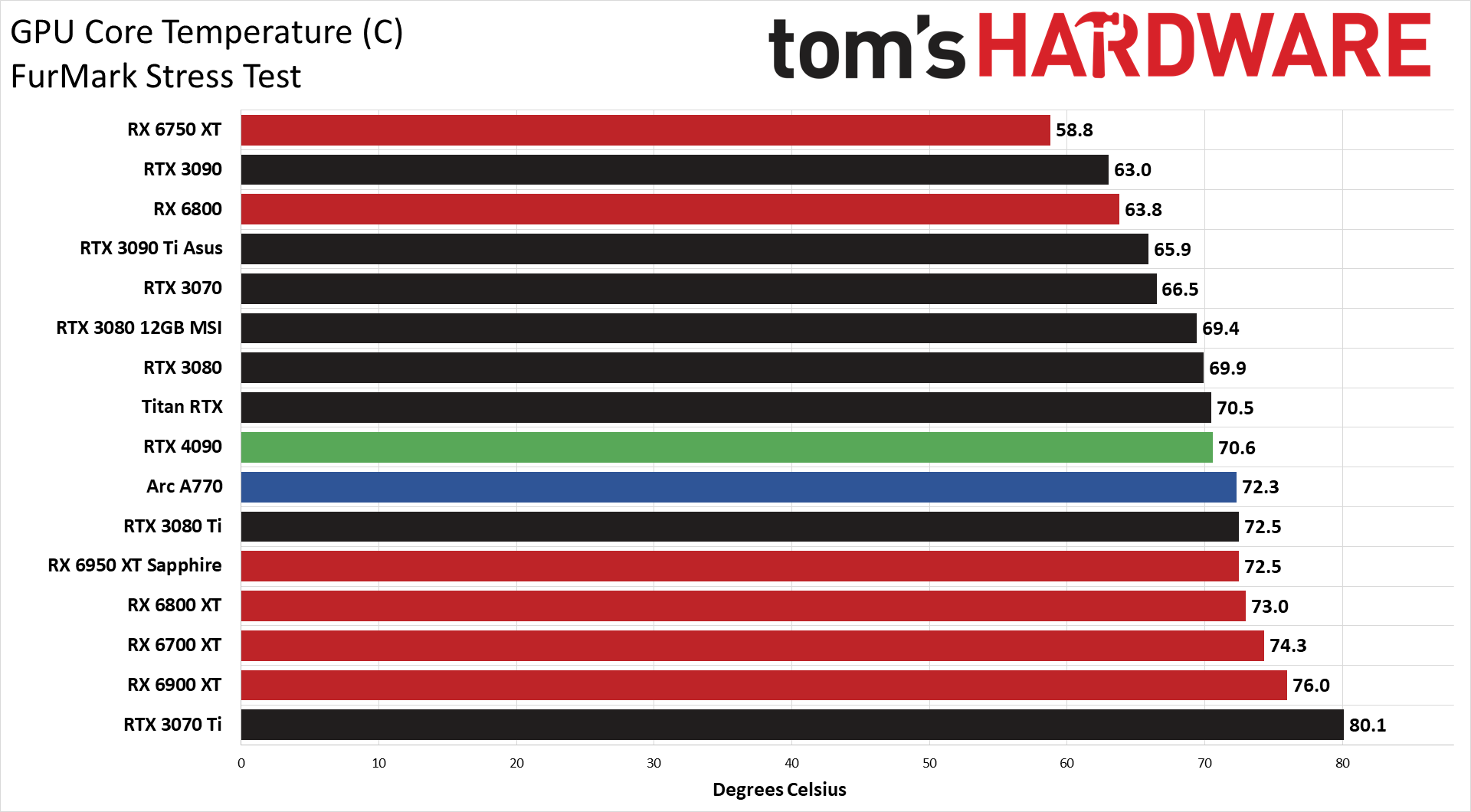

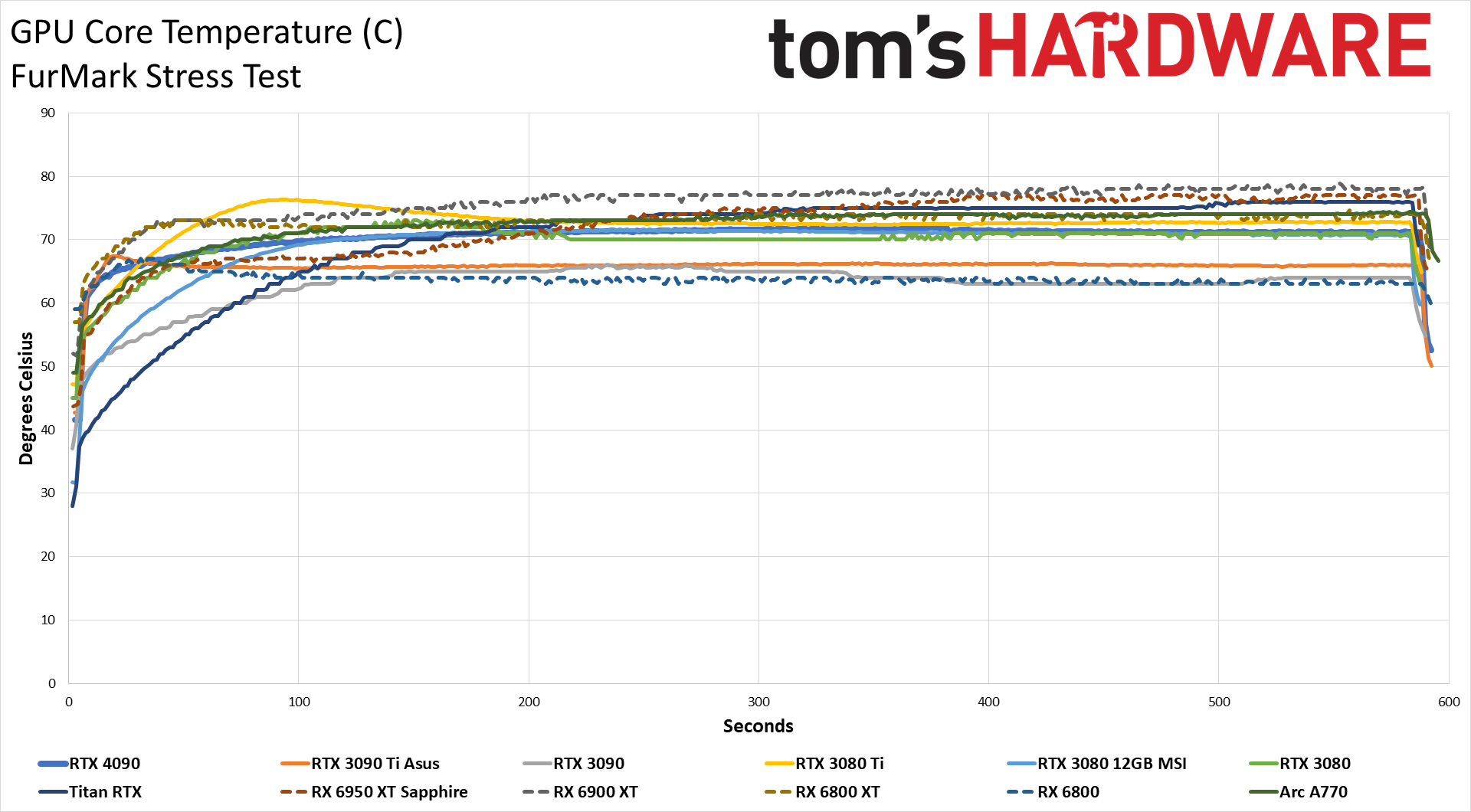

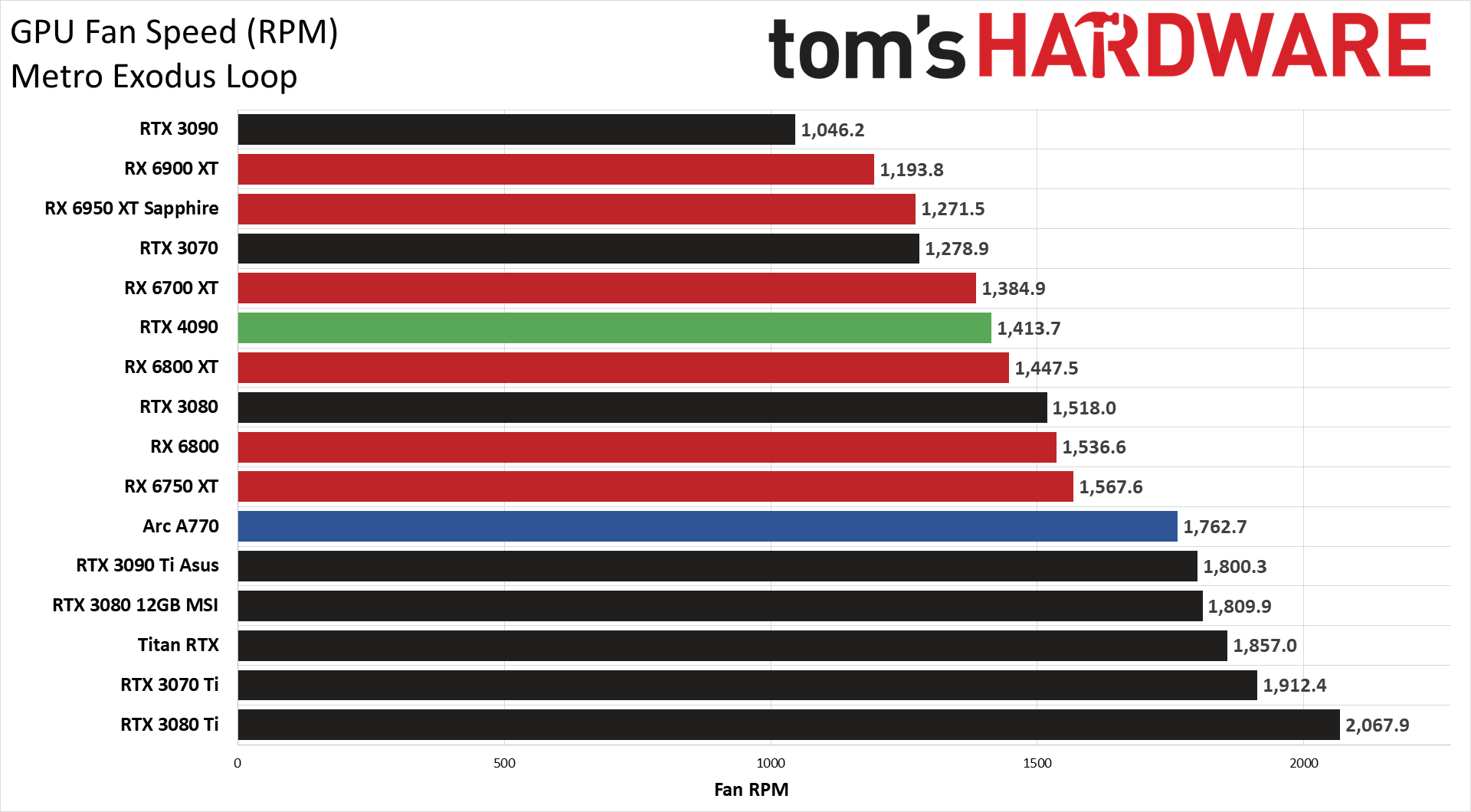

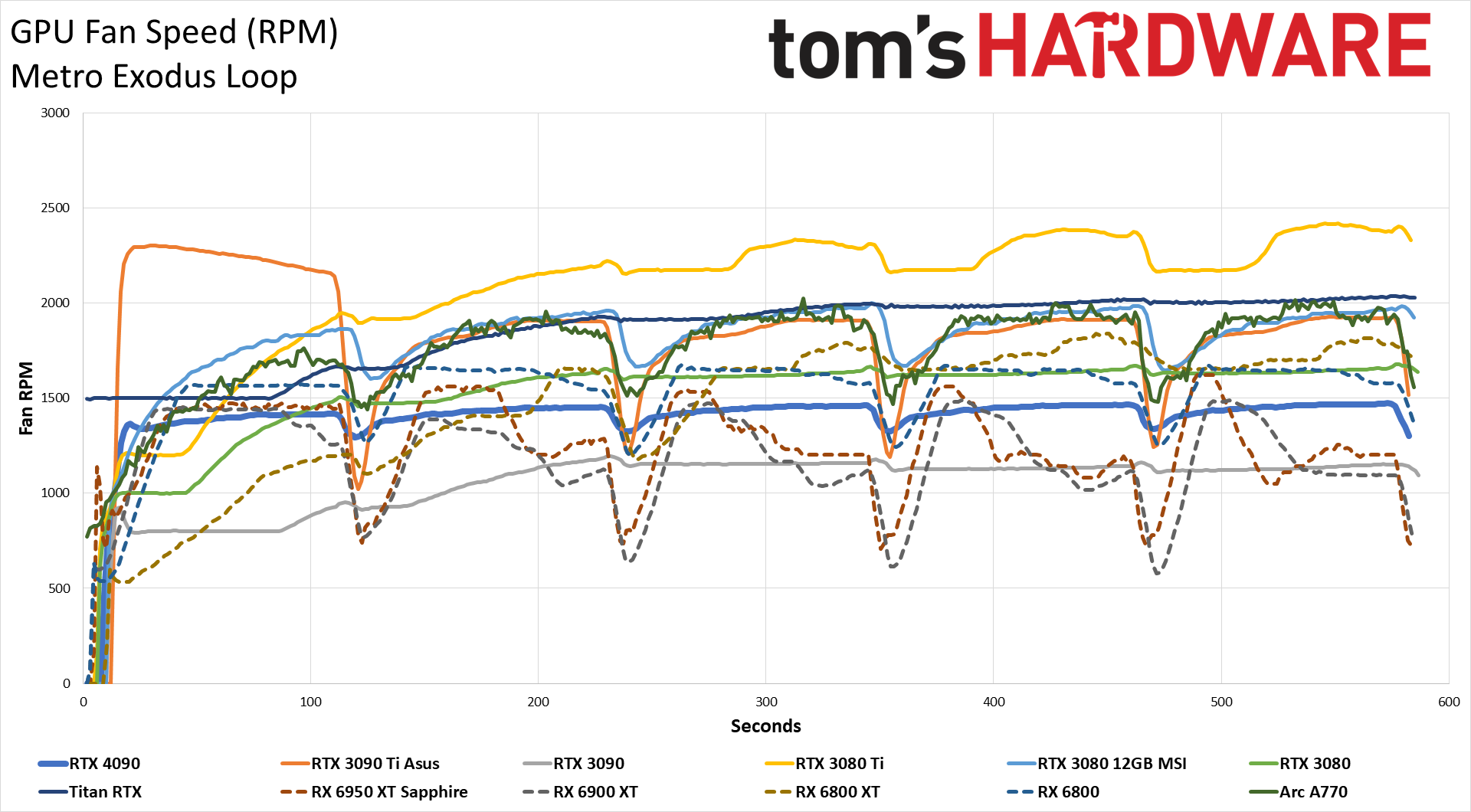

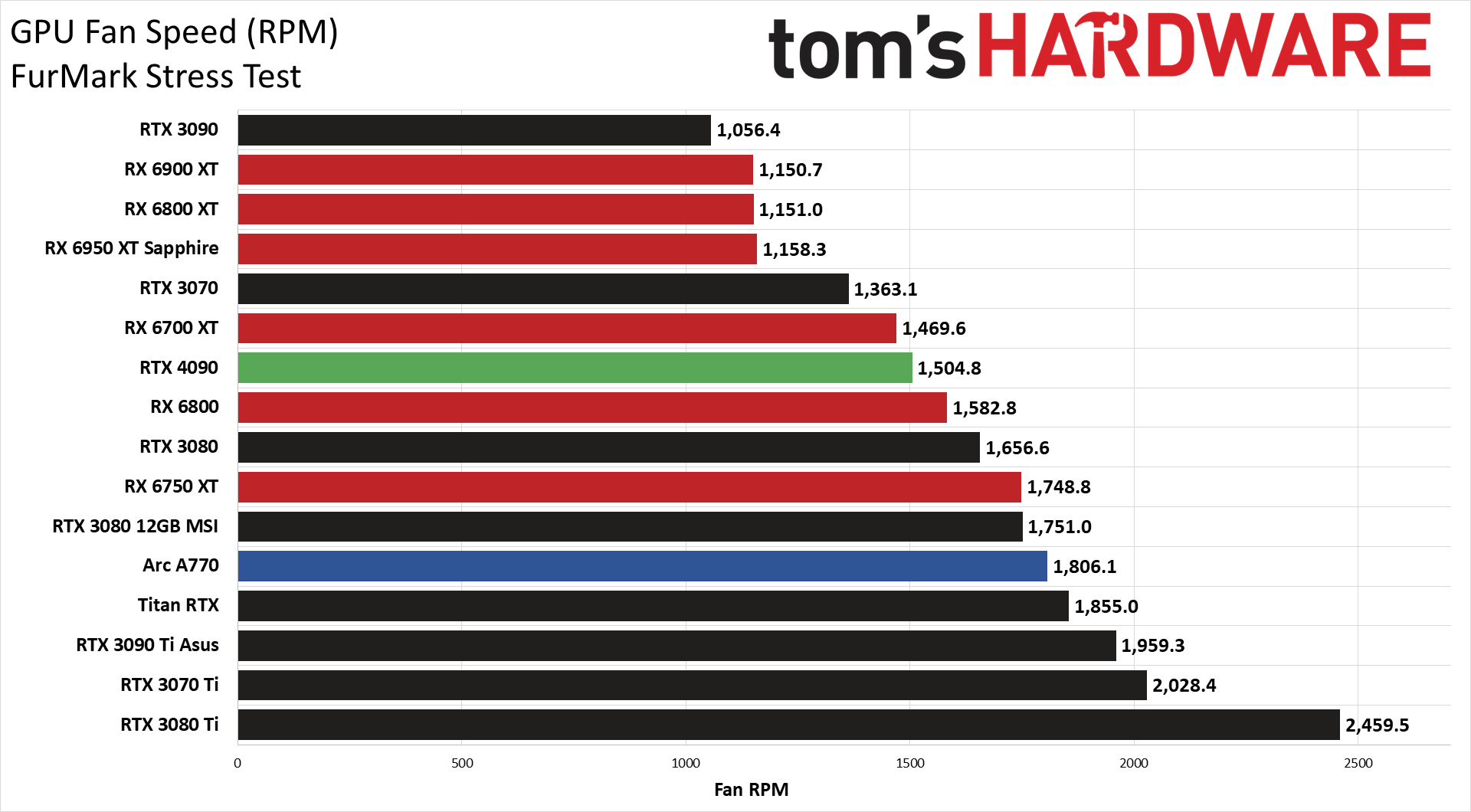

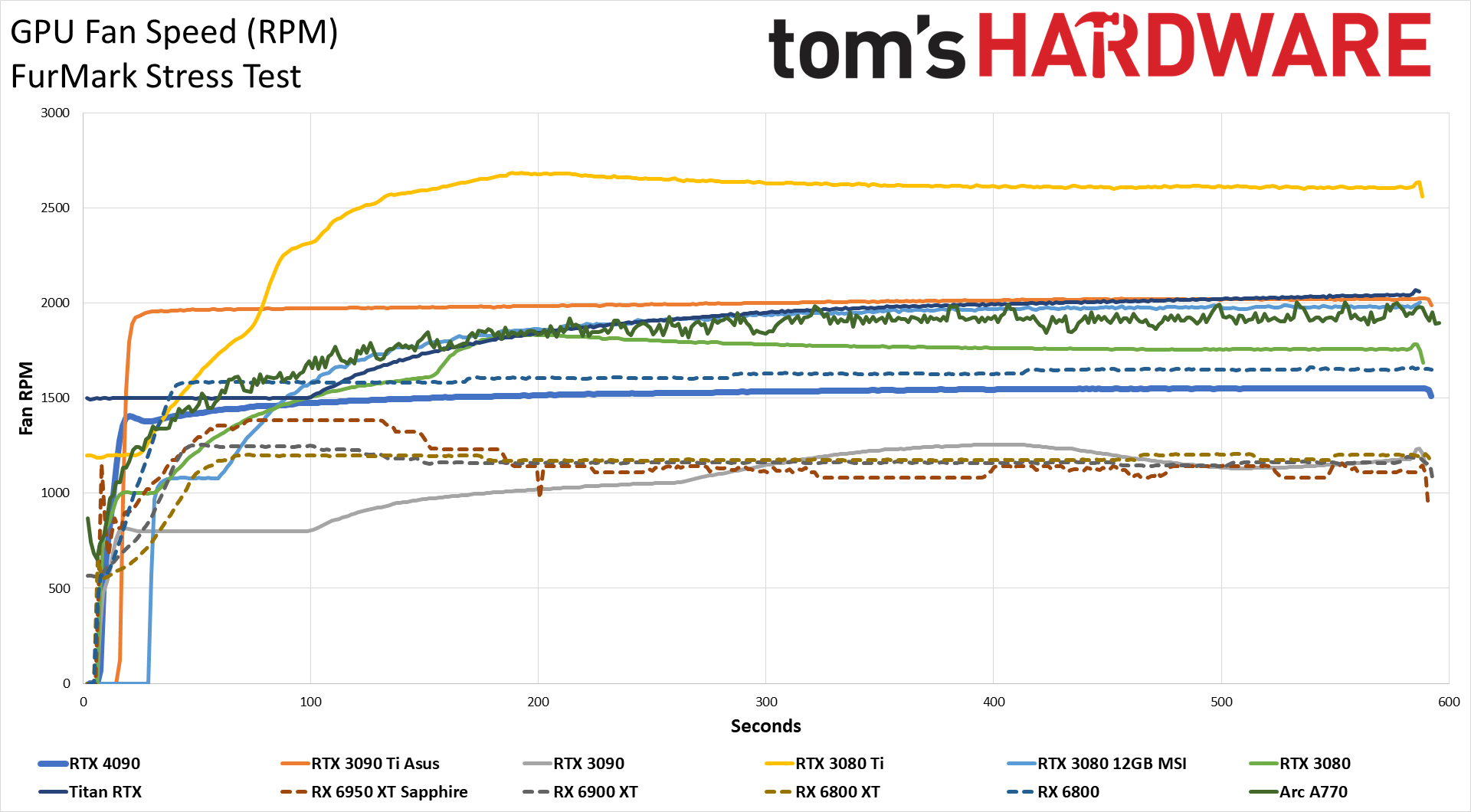

We were impressed with the RTX 3090 Founders Edition at launch, as it had incredibly low noise levels and temperatures — at least while gaming. Cryptocurrency mining was another matter, and the Founders Edition cards routinely hit 110C on their GDDR6X memory before throttling kicked in. But for gaming, the 3090 was usually very cool and quiet. The 3090 Ti from Asus had to ramp up fan speed quite a bit in order to remain at its target temperature of around 65C. The 4090 Founders Edition ends up splitting the difference: slightly higher temps of 67C but lower fan speed. FurMark is also more favorable toward the 4090 than the 3090 Ti, though it's not representative of very many real-world workloads.

We measure noise levels at 10cm using an SPL (sound pressure level) meter, aimed right at the GPU fans in order to minimize the impact of other noise sources like the fans on the CPU cooler. The noise floor of our test environment and equipment is around 32–33 dB(A). The RTX 4090 while gaming plateaued at 45.0 dB(A) and a fan speed of around 40%. The Asus 3090 Ti in comparison ran at 49.1 dB(A) and a 74% fan speed, while a Sapphire RX 6950 XT only measured 37.3 dB(A).

The fans on the RTX 4090 can get louder if needed, and ramping up fan speed to 75% results in 57.2 dB(A). Hopefully the card won't ever need to run the fans that high — and with the death of GPU mining, that's far more likely to be the case during the lifetime of the card.
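Since decibels are logarithmic, the gaps between these readings are larger than the raw numbers suggest. The sketch below converts a dB difference into a sound pressure ratio (10^(Δ/20)) and a sound power ratio (10^(Δ/10)). The function names are hypothetical, and the ratios describe physical quantities, not perceived loudness.

```python
def pressure_ratio(delta_db: float) -> float:
    """Sound pressure ratio corresponding to a level difference in dB."""
    return 10 ** (delta_db / 20)


def power_ratio(delta_db: float) -> float:
    """Sound power (intensity) ratio corresponding to a level difference in dB."""
    return 10 ** (delta_db / 10)


# Gaming noise readings in dB(A), taken from the measurements above.
readings = {
    "RTX 4090 FE": 45.0,
    "Asus RTX 3090 Ti": 49.1,
    "Sapphire RX 6950 XT": 37.3,
}

baseline = readings["RTX 4090 FE"]
for card, level in readings.items():
    delta = level - baseline
    print(
        f"{card}: {delta:+.1f} dB vs. RTX 4090 FE, "
        f"pressure x{pressure_ratio(delta):.2f}, power x{power_ratio(delta):.2f}"
    )
```

The same functions apply to the 75% fan speed reading: 57.2 dB(A) is 12.2 dB above the 45.0 dB(A) gaming level.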

Overall, the RTX 4090 Founders Edition isn't worse than the RTX 3090 Ti from a pure power consumption point of view, and it offers far superior performance. We're also very interested in doing additional testing with overclocking and third-party cards, which we'll be looking at in the near future.

One thing to note is that, while the RTX 4090's 450W power draw seems like a lot (and it is), for chips it's really more about thermal density. Cooling 450W in a 608mm^2 chip isn't that difficult. It's actually quite a bit easier than cooling 250W in a 215mm^2 chip, which is what the Alder Lake Core i9-12900K has to deal with. Zen 4 is potentially worse still, with a 70mm^2 CCD (core complex die) that could pull well over 140W.
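To put numbers on the thermal density comparison, here is a minimal sketch that divides power by die area using the figures quoted above. The function name is hypothetical, and the Zen 4 entry uses 140W as a lower bound, since the text only says "well over 140W".

```python
def power_density(watts: float, area_mm2: float) -> float:
    """Return average power density in W/mm^2."""
    if area_mm2 <= 0:
        raise ValueError("area_mm2 must be positive")
    return watts / area_mm2


# (power in W, die area in mm^2), as quoted in the paragraph above.
chips = {
    "RTX 4090": (450.0, 608.0),
    "Core i9-12900K": (250.0, 215.0),
    "Zen 4 CCD (lower bound)": (140.0, 70.0),
}

for name, (watts, area) in chips.items():
    print(f"{name}: {power_density(watts, area):.2f} W/mm^2")
```

This is an average over the whole die. Real chips have hot spots, so local densities can be considerably higher than these figures.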

Jarred Walton is a senior editor at Tom's Hardware focusing on everything GPU. He has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.

-Fran-

Shouldn't this be up tomorrow?

EDIT: Nevermind. Looks like it was today! YAY.

Thanks for the review!

Regards.

brandonjclark

colossusrage said: Can finally put 4K 120Hz displays to good use.

I'm still on a 3090, but on my 165hz 1440p display, so it maxes most things just fine. I think I'm going to wait for the 5k series GPU's. I know this is a major bump, but dang it's expensive! I simply can't afford to be making these kind of investments in depreciating assets for FUN.

JarredWaltonGPU

-Fran- said: Shouldn't this be up tomorrow? EDIT: Nevermind. Looks like it was today! YAY. Thanks for the review! Regards.

Yeah, Nvidia almost always does major launches with Founders Edition reviews the day before launch, and partner card reviews the day of launch.

JarredWaltonGPU

brandonjclark said: I'm still on a 3090, but on my 165hz 1440p display, so it maxes most things just fine. I think I'm going to wait for the 5k series GPU's. I know this is a major bump, but dang it's expensive! I simply can't afford to be making these kind of investments in depreciating assets for FUN.

You could still possibly get $800 for the 3090. Then it’s “only” $800 to upgrade! LOL. Of course if you sell on eBay it’s $800 - 15%.

kiniku

A review like this, comparing a 4090 to an expensive sports car we should be in awe and envy of, is a bit misleading. PC Gaming systems don't equate to racing on the track or even the freeway. But the way it's worded in this review if you don't buy this GPU, anything "less" is a compromise. That couldn't be further from the truth. People with "big pockets" aren't fools either, except for maybe the few readers here that have convinced themselves and posted they need one or spend everything they make on their gaming PC's. Most gamers don't want or need a 450 watt sucking, 3 slot, space heater to enjoy an immersive, solid 3D experience.

spongiemaster

kiniku said: Most gamers don't want or need a 450 watt sucking, 3 slot, space heater to enjoy an immersive, solid 3D experience.

Congrats on stating the obvious. Most gamers have no need for a halo GPU that can be CPU limited sometimes even at 4k. A 50% performance improvement while using the same power as a 3090Ti shows outstanding efficiency gains. Early reports are showing excellent undervolting results. 150W decrease with only a 5% loss to performance.

Any chance we could get some 720P benchmarks?

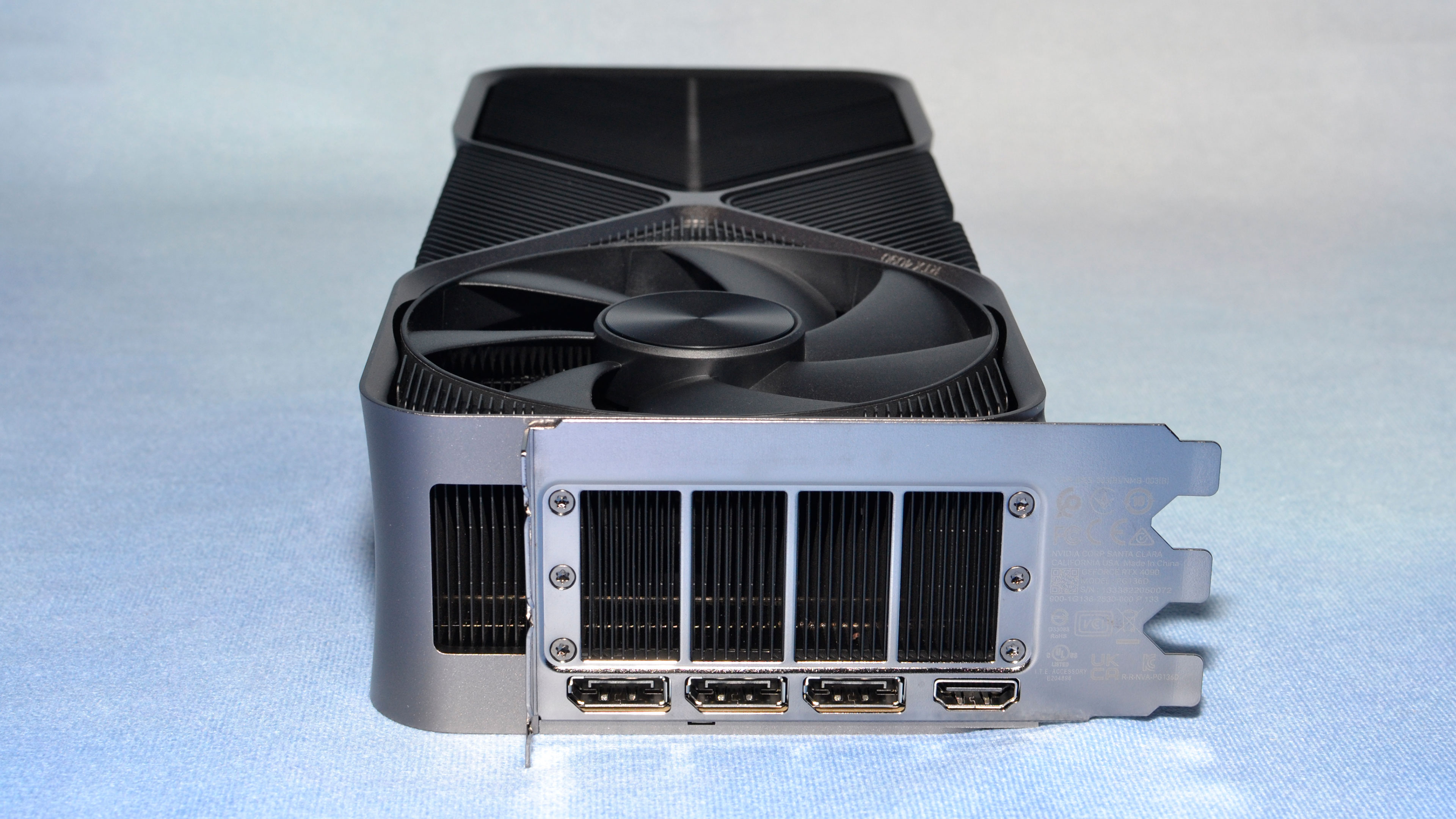

LastStanding

"the RTX 4090 still comes with three DisplayPort 1.4a outputs"

"the PCIe x16 slot sticks with the PCIe 4.0 standard rather than upgrading to PCIe 5.0."

These missing components are selling points now, especially knowing NVIDIA's rival(s?) support the updated ports, so, IMO, this should have been included as a "con" too.

Another thing: why would enthusiasts only value "average metrics" when an "average" barely tells the complete story?! It doesn't show the program's stability, any frame-pacing or hitching issues, etc., so that's a VERY big oversight here, IMO.

What I also find weird is the DLSS benchmarks. Why champion the increase in fps buuuut... never, EVER, mention DLSS's awful included sharpening pass?! 😏 What's the sense of having faster fps if the results show the imagery smeared, ghosting, and/or with artefacts to hades? 🤔