The Nvidia Ada Lovelace and RTX 40-series GPUs feature a lot of new tech, so there are some things we can't even test against on previous generation graphics cards — like DLSS 3. The short recap is that DLSS 3's main new feature is Frame Generation, which uses a more powerful Optical Flow Accelerator fixed function unit that's only found in Ada GPUs. Ampere and even Turing GPUs also have OFAs, but they are much slower and apparently can't handle the computational requirements of Frame Generation — or at least that's Nvidia's claim and we have no way to prove or disprove it.

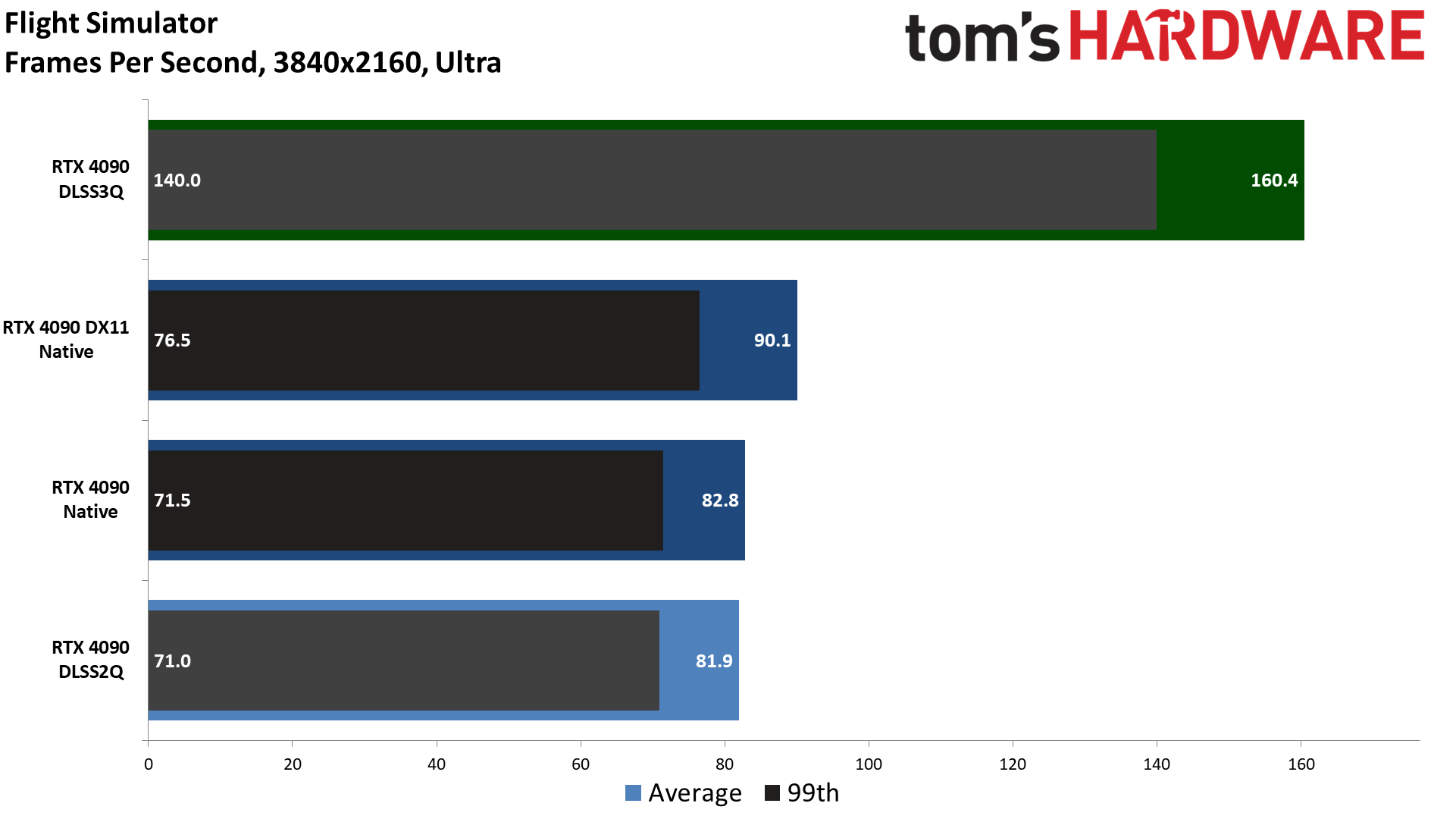

DLSS 3 is otherwise backward compatible with DLSS 2, meaning if you have an RTX 20- or 30-series graphics card, it will just do regular DLSS 2 Super Sampling. All of the preview games that Nvidia provided access to include a toggle for Frame Generation, and sometimes you can even use it without enabling DLSS — which could be useful in fully CPU-limited games like Flight Simulator. As an added bonus (to Nvidia GPU owners at least), DLSS 3 requires that a game also implement Nvidia Reflex, which can substantially lower total system latency in games that support it.

Nvidia has announced that over 35 games and apps are already working to implement the technology, and given the benefits we experienced, we expect many more will join that list in the coming years.

Frame Generation works by taking the previous frame and the current frame and then generating an interstitial frame between them. Every other frame thus ends up being created via Frame Generation, with the drivers balancing frame outputs to keep frames coming at an even pace. Note that this means Frame Generation adds roughly one frame of additional latency, plus some overhead, though Reflex basically overcomes the latency deficit relative to using DLSS 2 without Reflex. In other words, Nvidia is spending the latency gains from Reflex on Frame Generation.

In a best-case scenario, Frame Generation can theoretically double the framerate of a game. However, it does not increase the number of frames generated by the CPU and there's no additional input sampling. Still, games should look smoother and subjectively they also feel smoother. For competitive esports gaming, however, the lower latency of Reflex without Frame Generation may still provide an advantage.
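To make the pacing idea concrete, here's a minimal sketch in Python. It's our own simplified model, not Nvidia's actual driver logic: it assumes the interstitial frame can only be built once the newer rendered frame exists, and that the newer rendered frame is then held back by half a render interval so the output stays evenly paced. The function name `present_schedule` and the 20ms render interval are purely illustrative.

```python
from typing import List, Tuple


def present_schedule(render_done_ms: List[float]) -> List[Tuple[float, str]]:
    """Simplified Frame Generation pacing model (an assumption, not Nvidia's code).

    The generated frame between rendered frames i-1 and i is shown as soon as
    frame i has finished rendering; frame i itself is shown half a render
    interval later to keep the output evenly spaced.
    """
    schedule = []
    for prev, cur in zip(render_done_ms, render_done_ms[1:]):
        half_interval = (cur - prev) / 2
        schedule.append((cur, "generated"))
        schedule.append((cur + half_interval, "rendered"))
    return schedule


# Hypothetical input: the GPU finishes a rendered frame every 20 ms (50 fps).
render_done = [0.0, 20.0, 40.0, 60.0]
schedule = present_schedule(render_done)
for present_ms, kind in schedule:
    print(f"{present_ms:5.1f} ms  {kind}")
```

In this toy model, frames reach the display every 10ms (100 fps) even though only 50 frames per second are rendered, and each rendered frame shows up 10ms later than it otherwise would. Input is still only sampled for the rendered frames.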

With that preface out of the way, here's a preview of what DLSS 3 can do in seven different games and/or demos.

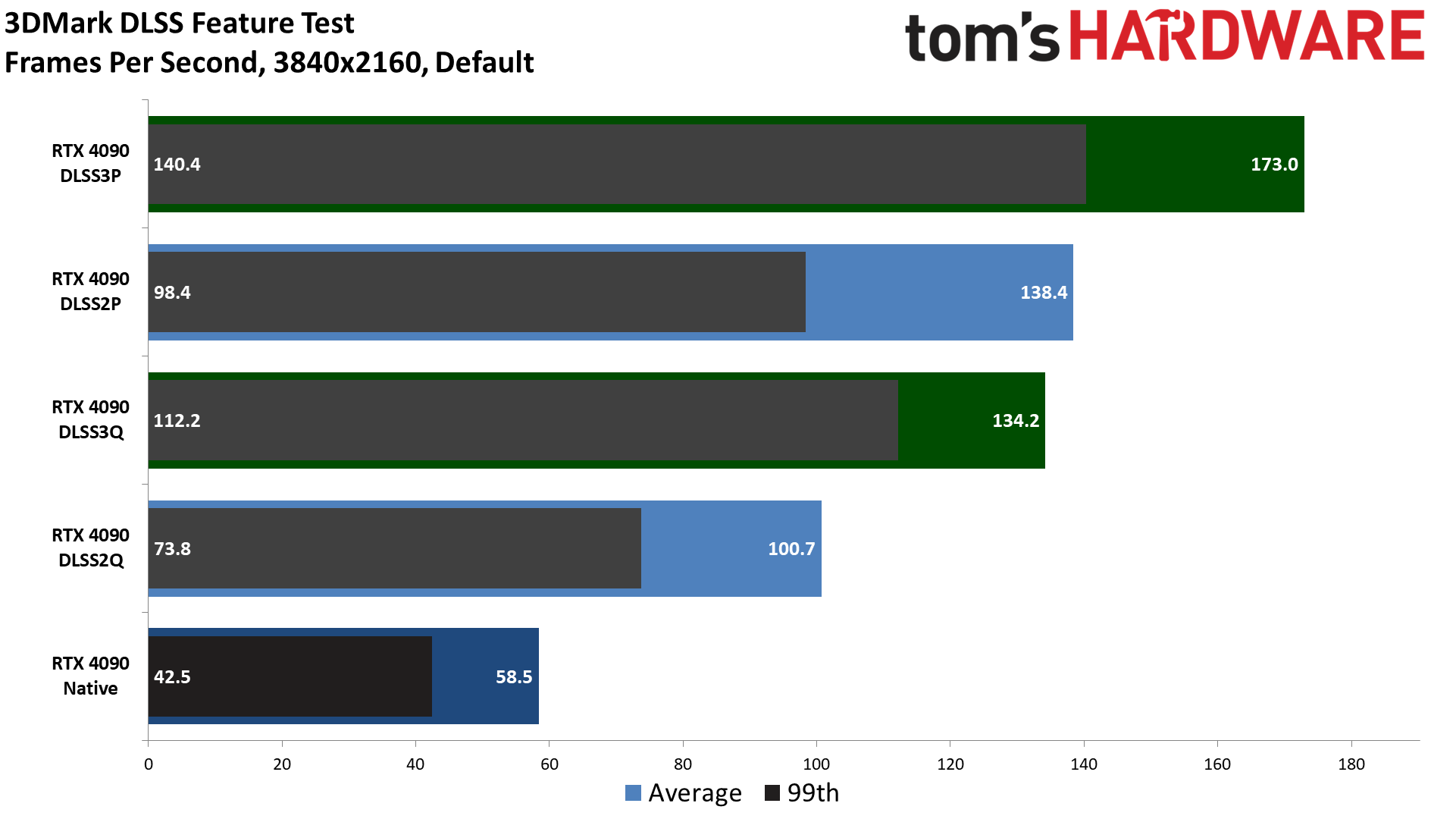

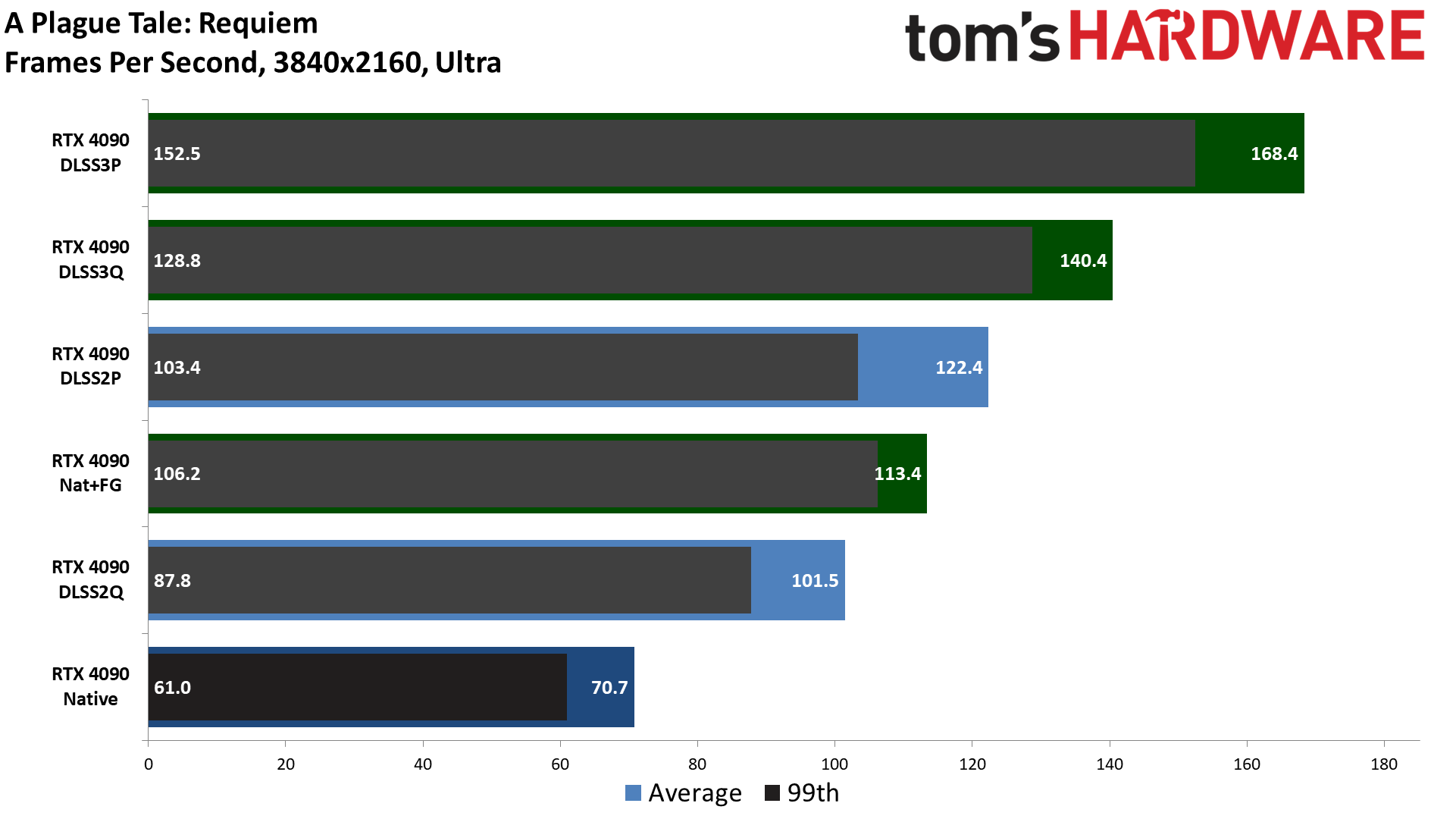

Nvidia claims that with DLSS 3, performance with the RTX 4090 can be anywhere from 2x to 4x faster than on an RTX 3090 Ti — with the latter running DLSS 2. Most of the games and applications we tested don't support cards other than the RTX 4090 right now, and timing constraints limited how much we could test, but generally speaking DLSS 2 Performance upscaling (4x upscale, or rendering 1080p and upscaling to 4K) roughly doubles your FPS, at least in situations where CPU bottlenecks aren't a factor.
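The "4x upscale" figure is just the ratio of output pixels to rendered pixels. As a quick sanity check, here's a small Python sketch; the helper name `upscale_factor` is ours and not part of any Nvidia tool.

```python
from typing import Tuple


def upscale_factor(render_res: Tuple[int, int], output_res: Tuple[int, int]) -> float:
    """Return how many output pixels are produced per rendered pixel."""
    render_w, render_h = render_res
    output_w, output_h = output_res
    return (output_w * output_h) / (render_w * render_h)


# DLSS Performance mode at 4K, as described above: render 1080p, output 4K.
factor = upscale_factor((1920, 1080), (3840, 2160))
print(f"Upscale factor: {factor:.0f}x")
```

Rendering a quarter of the pixels is why the mode can roughly double FPS when the GPU is the bottleneck, with the remaining time going to the upscaling pass and other fixed costs.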

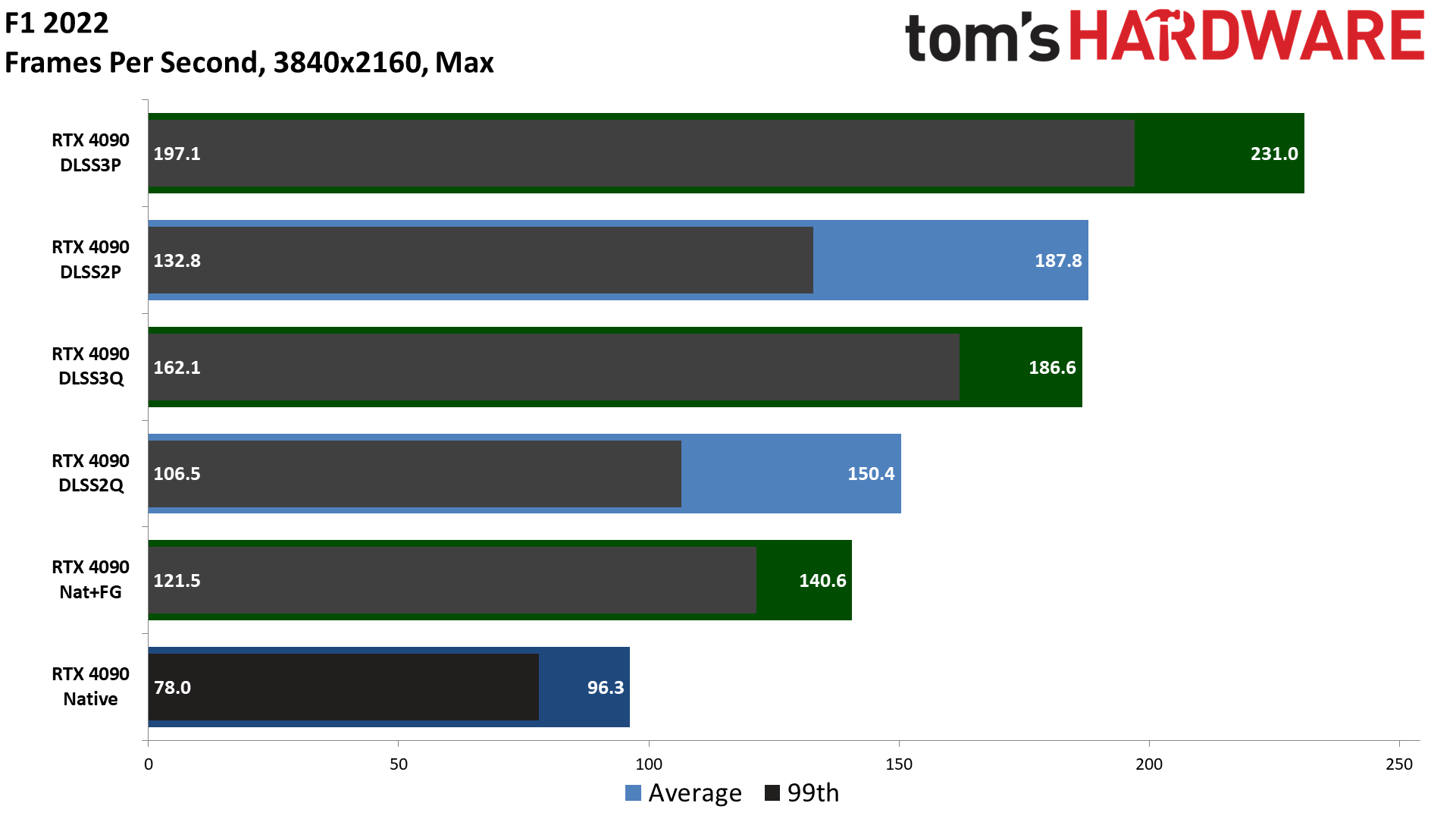

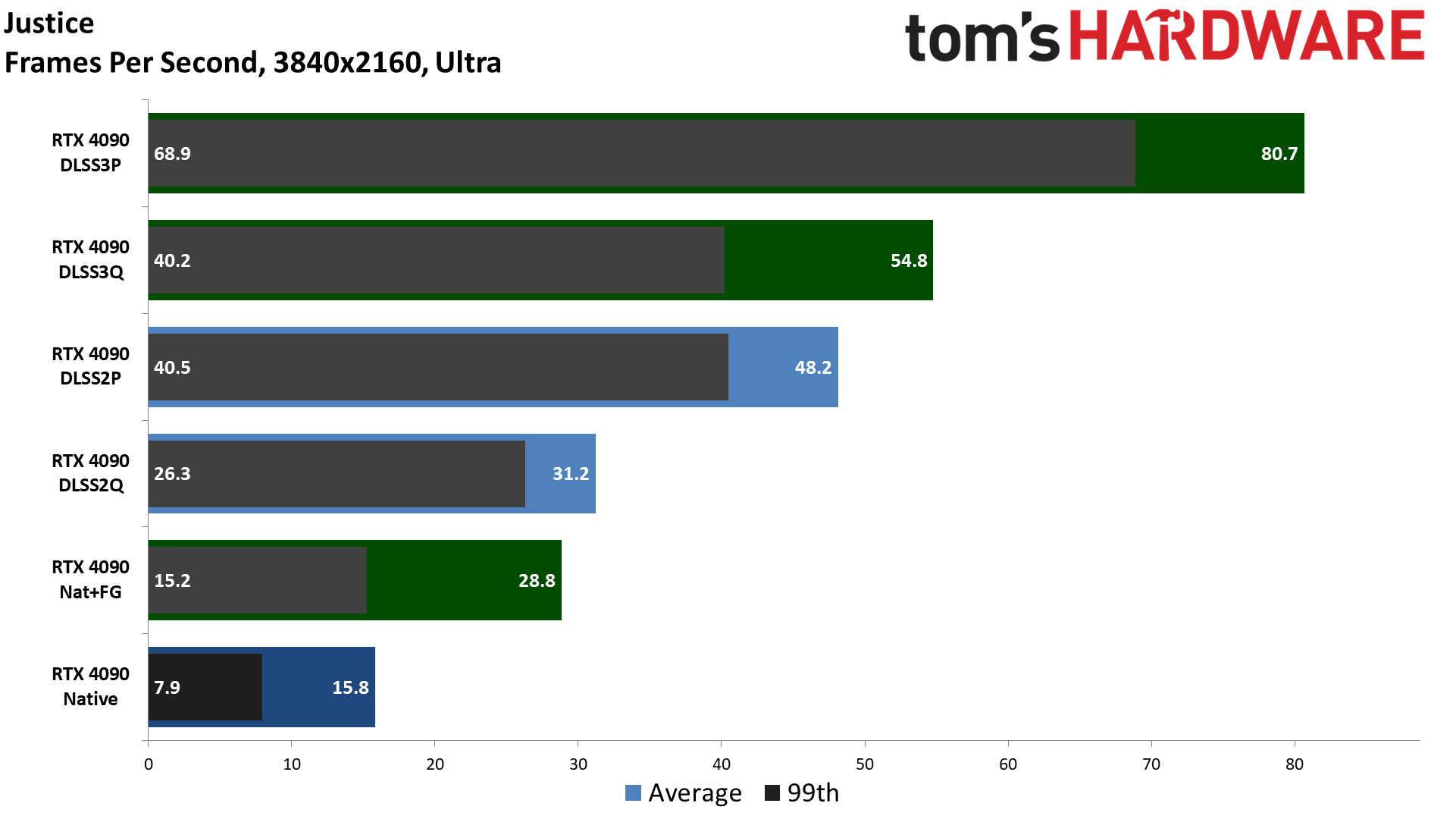

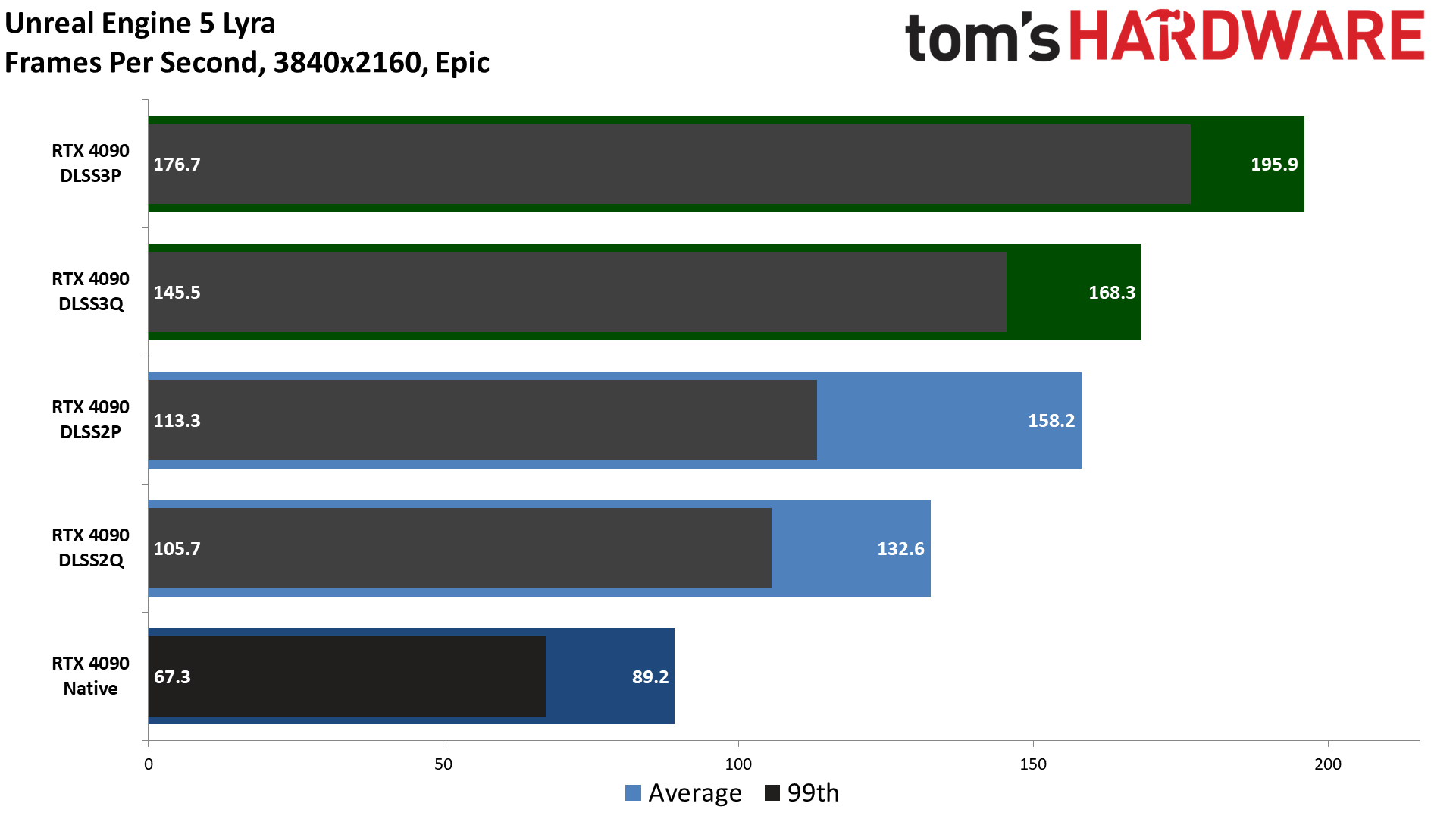

With DLSS 3, there are situations where the RTX 4090 is up to 5x faster than native, like in the Chinese MMO Justice, though every preview we looked at shows different results. 3DMark's DLSS Feature Test shows 3x the performance. A Plague Tale: Requiem and F1 2022 are up to 2.4x faster. Cyberpunk 2077 improves up to 3.6x over native, and the Unreal Engine 5 demo Lyra is up to 2.2x faster. Finally, Flight Simulator only improves by 2x, but it's also a game that's almost entirely CPU limited on the RTX 4090. Doubling framerates in that case represents a nice improvement.

How does DLSS 3 image quality with Frame Generation compare to normal rendering? We're still working on looking at the various games, which requires some changes to our normal testing as we need to capture games at higher than 60 fps rates to get meaningful results. Subjectively, though, we can say that other than looking smoother, we wouldn't be able to immediately spot the difference between the rendered and generated frames while gaming.

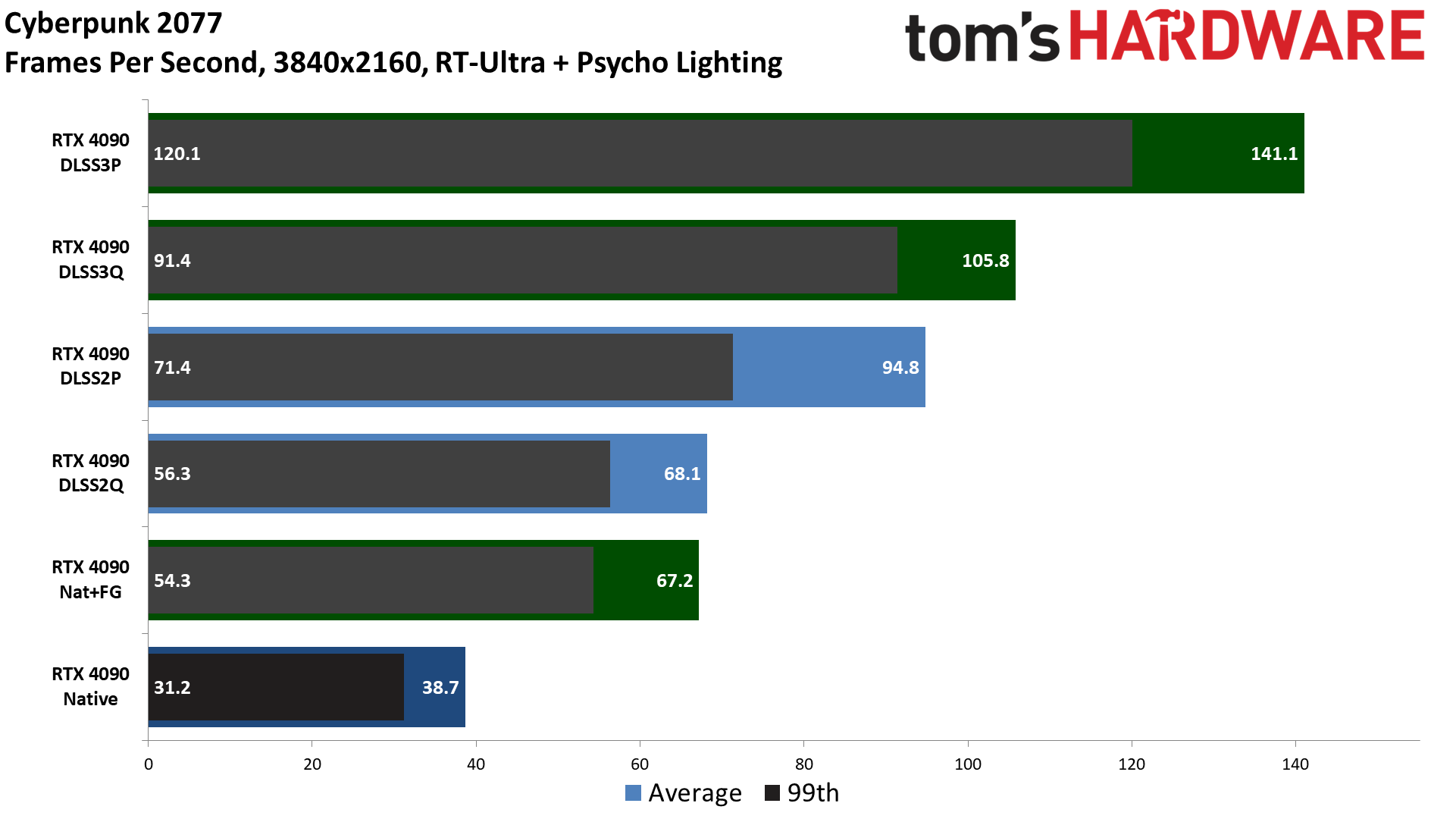

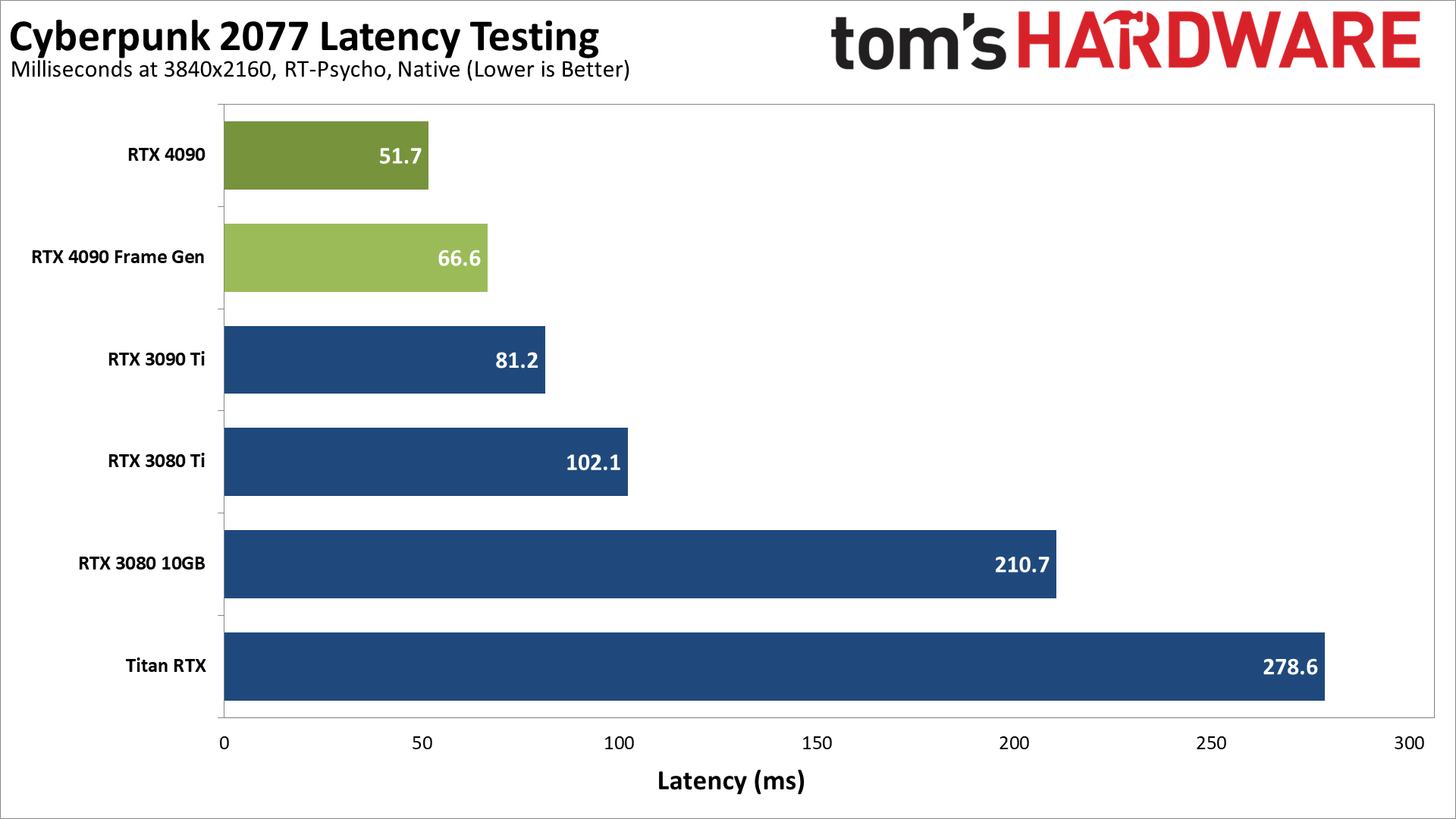

We did want to look at latency with and without Frame Generation, so we turned to the preview version of Cyberpunk 2077. Thanks to Reflex, we could run the game on RTX 30-series GPUs as well, so we captured performance using Nvidia's FrameView utility. We maxed out the settings: 4K with the RT-Ultra preset, and then we turned Ray Traced Lighting up to the Psycho setting. We also tested with Reflex enabled on all the GPUs, because that's how most gamers will play.

Latency correlates directly with frame time, so faster GPUs inherently get lower latency. We see that in the native results, where the RTX 4090 has 52ms of latency compared to 81ms on the 3090 Ti, and it just gets worse from there with the Titan RTX (we picked it as the fastest 20-series GPU, and also because it has 24GB of VRAM) sitting at 279ms. The RTX 4090 with Frame Generation ends up at 67ms of latency, an increase of 15ms. That's basically about one frame's delay if the game were running at 60 fps, which is pretty close, as we'll see in a moment.
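The "one frame's delay" comparison is easy to check with a few lines of Python. This is just arithmetic on the numbers quoted in this article (52ms and 67ms of latency here, and the 67 fps Frame Generation result reported further down); the helper name `frame_time_ms` is our own.

```python
def frame_time_ms(fps: float) -> float:
    """Time between consecutive frames, in milliseconds, at a given frame rate."""
    return 1000.0 / fps


# Latency figures from our native 4K Cyberpunk 2077 testing on the RTX 4090.
native_latency_ms = 52.0
frame_gen_latency_ms = 67.0
added_latency_ms = frame_gen_latency_ms - native_latency_ms

print(f"Added latency:        {added_latency_ms:.0f} ms")
print(f"Frame time at 60 fps: {frame_time_ms(60):.1f} ms")
print(f"Frame time at 67 fps: {frame_time_ms(67):.1f} ms")
```

The 15ms penalty lands between the 60 fps and 67 fps frame times, which is why we describe it as about one frame of delay.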

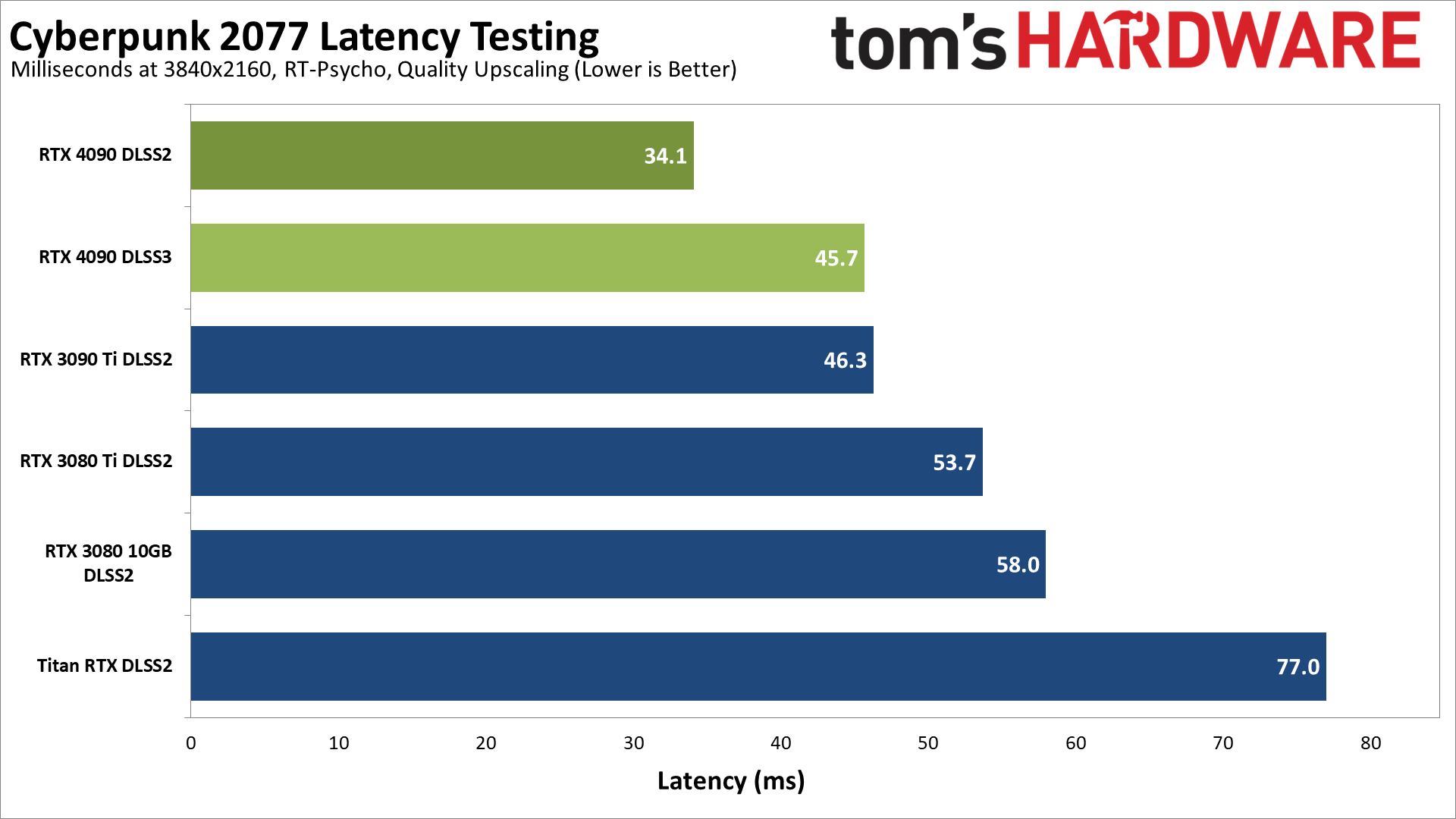

Enabling DLSS Quality upscaling nearly doubles the performance of the RTX cards, and latency drops quite a bit. The 4090 now sits at 34ms, or 46ms with Frame Generation. That's a 12ms difference, with DLSS 3 running at 106 fps. The 4090 with DLSS 3 ties the latency of the 3090 Ti with DLSS 2, with more than double the performance.

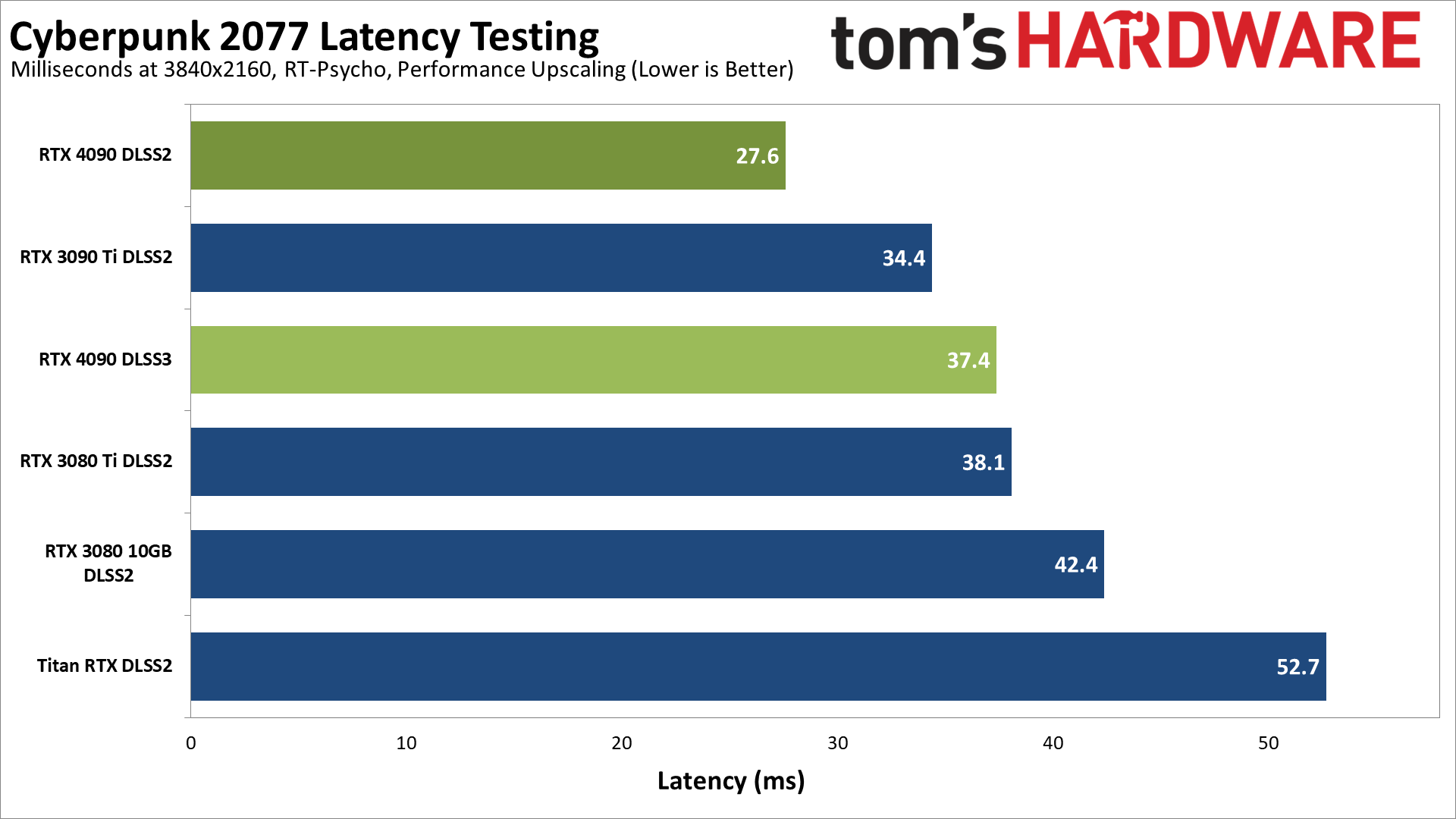

Finally, with Performance mode upscaling, the 4090 with Frame Generation enabled actually ends up with slightly more latency than a 3090 Ti, but still with more than double the frame rate. If you have a 144 Hz 4K display, which is what we used for testing, DLSS 3 looks better and feels a bit smoother. It's not a huge difference, but it's nice to see triple-digit frame rates at 4K in such a demanding game.

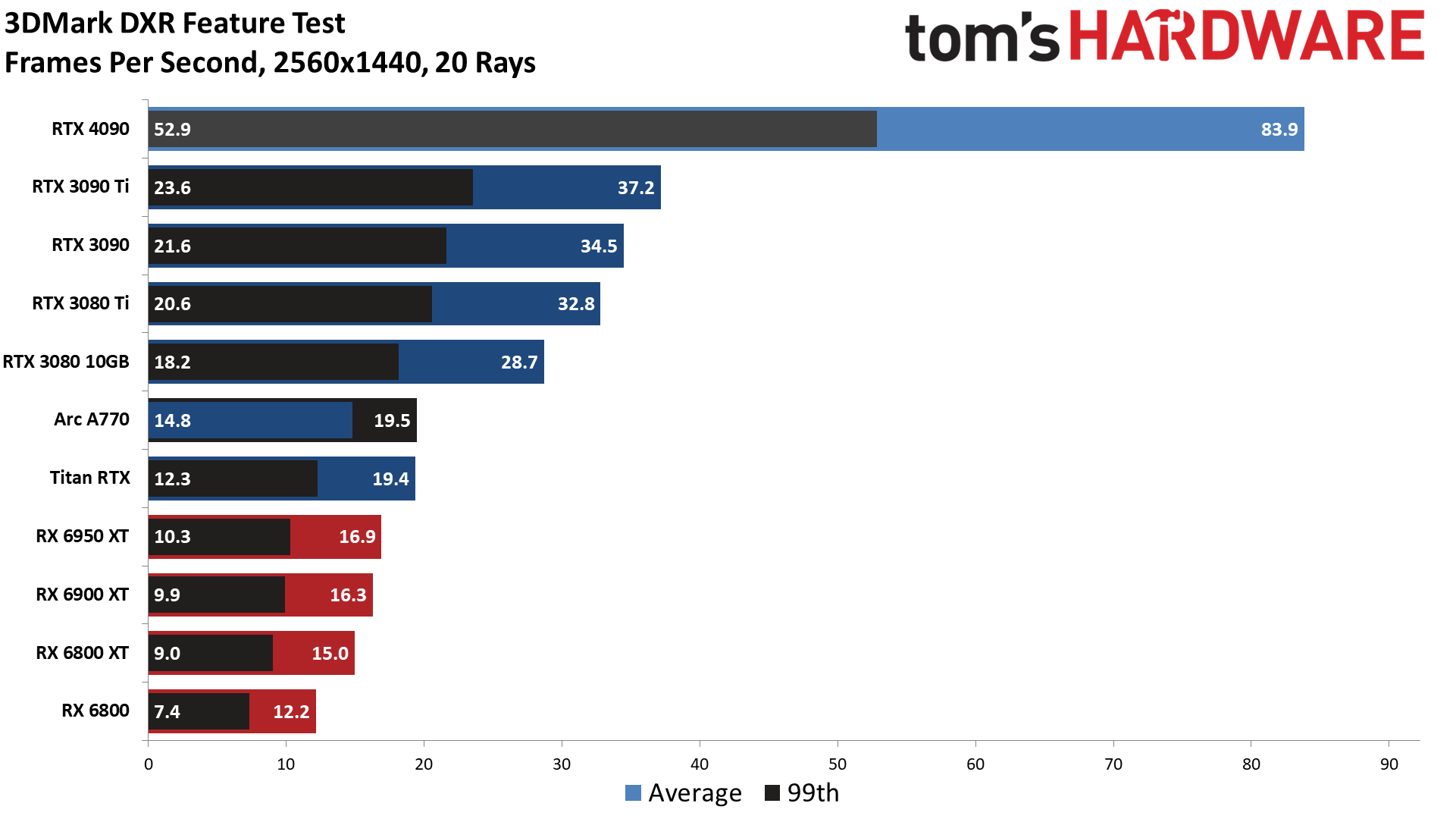

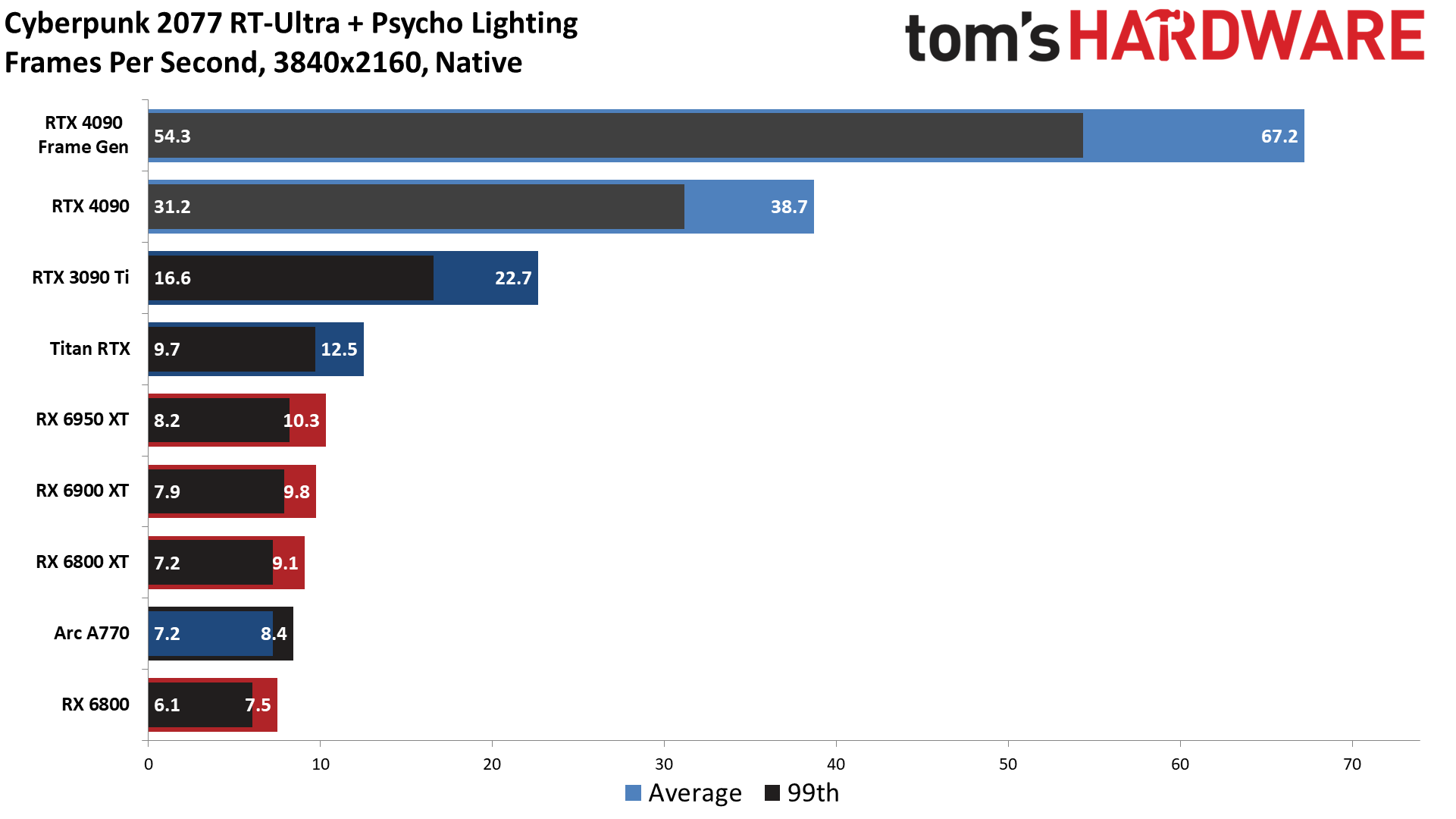

The last thing we want to show is how the various fastest GPUs from Nvidia, AMD, and even Intel stack up in pure ray tracing performance, or at least as close to "pure" RT as we can get. We used the same Cyberpunk 2077 RT-Ultra plus Psycho Lighting settings as our latency testing, but now we have several AMD GPUs along with the Intel Arc A770. We also used the 3DMark DXR Feature Test, set to 20 rays, which apparently does full ray tracing rather than hybrid rendering.

Starting with 3DMark's DXR Feature Test, it's no surprise that the RTX 4090 ranks way above any other GPU. Also note that this is still without the test implementing Ada's SER, OMM, and DMM features, so just the use of faster RT cores, with more of them, accounts for the difference between the 4090 and the 3090 Ti.

Moving down the chart, if you're like us, the placement of Intel's Arc A770 should raise an eyebrow. Yes, with just 32 RTUs running at somewhere around 2.35 GHz, Intel with its first generation of ray tracing hardware manages to match 84 of Nvidia's first generation RT cores. That probably won't happen in every game that supports ray tracing, but you'll also note that all of AMD's fastest RX 6000-series GPUs rank below the Arc A770 and Titan RTX. When people say AMD's first-generation RT hardware is weak, this is why.

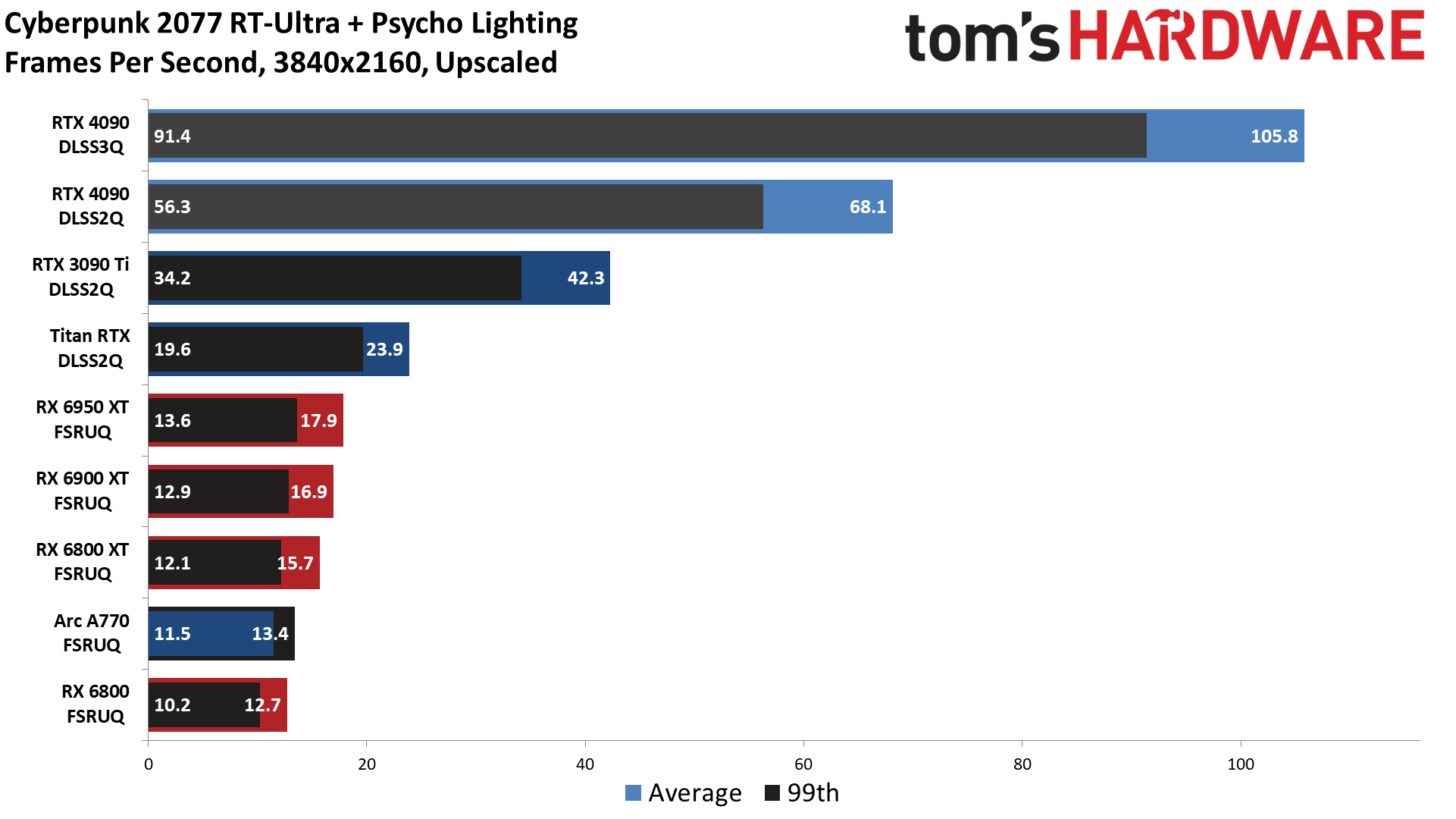

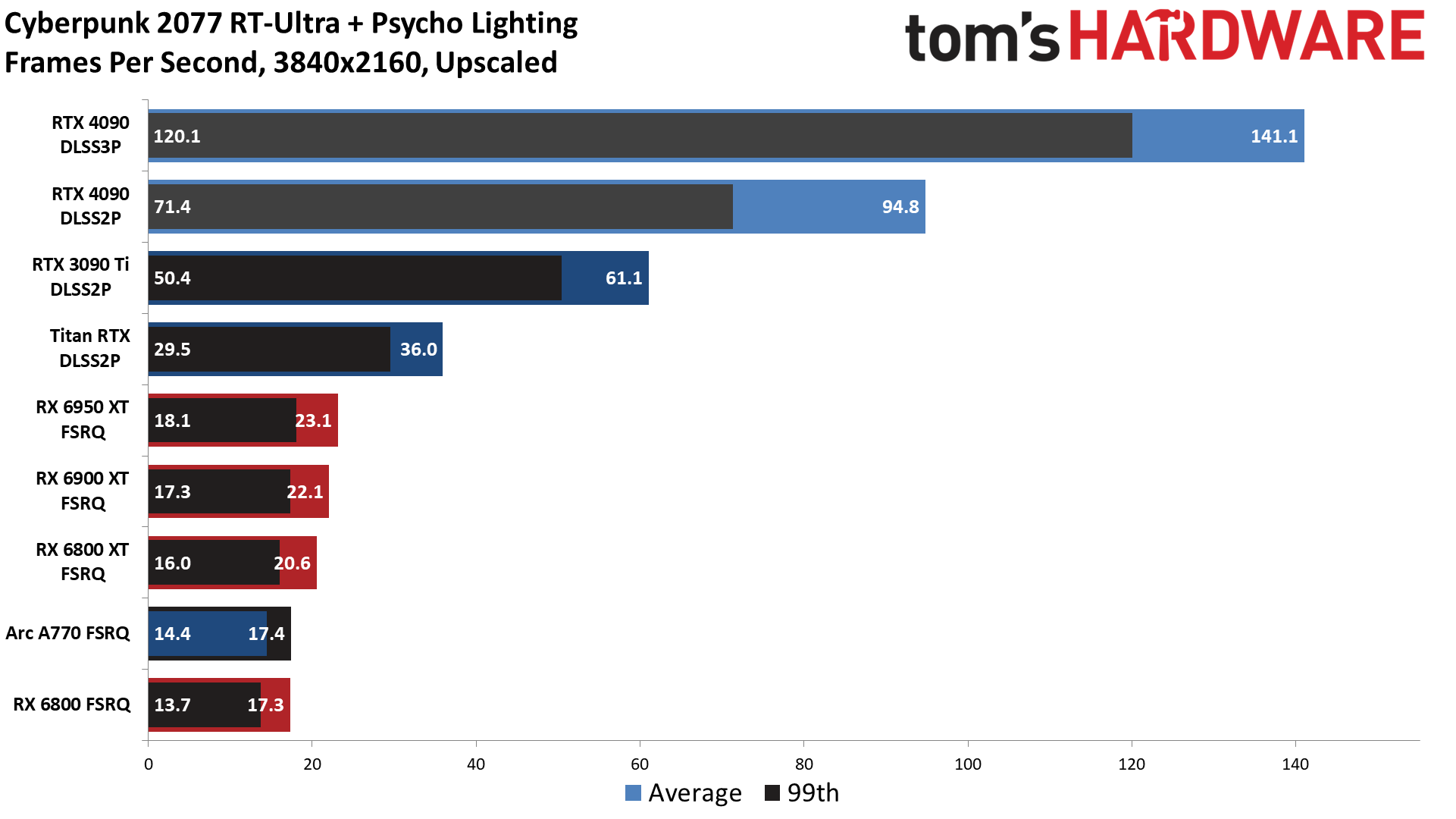

Things don't look quite so dire in Cyberpunk 2077, though nothing below the RTX 3090 Ti even comes remotely close to hitting playable frame rates at maxed out settings and 4K. The RTX 4090 at native gets 39 fps, and Frame Generation nearly doubles that to 67 fps.
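For reference, the actual multiplier behind "nearly doubles" works out as follows. It's a trivial Python calculation on the two frame rates quoted above; the variable names are ours.

```python
# RTX 4090 in Cyberpunk 2077 at 4K, RT-Ultra plus Psycho lighting.
native_fps = 39.0
frame_generation_fps = 67.0

speedup = frame_generation_fps / native_fps
print(f"Frame Generation speedup over native: {speedup:.2f}x")
```

That's short of the theoretical 2x best case, which fits with Frame Generation adding some overhead of its own.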

We also did some apples-and-oranges comparisons of DLSS modes versus FSR modes. We're calling DLSS Quality upscaling more or less equal to FSR Ultra Quality, and DLSS Performance as somewhat equal to FSR Quality mode. Neither of those is entirely accurate, but we also just wanted to see if we could get any of the non-RTX cards up to playable levels of performance. The answer: Nope.

The RX 6950 XT tops out at 23 fps with FSR Quality mode; we'd have to drop to Balanced or Performance mode to break 30 fps, and we can definitely tell the difference in visual fidelity when those are in use. Also, note that the Arc A770 can't keep up with AMD's faster GPUs, but it does rank ahead of the RX 6800 in all three tests.

Bottom line: Nvidia has seriously upped the ante for ray tracing hardware performance with Ada Lovelace. We already knew that from the architectural overview, but this provides some hard evidence showing just how much things have improved.

Current page: GeForce RTX 4090: DLSS 3, Latency, and 'Pure' Ray Tracing Performance

Jarred Walton is a senior editor at Tom's Hardware focusing on everything GPU. He has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.

- -Fran-

Shouldn't this be up tomorrow?

EDIT: Nevermind. Looks like it was today! YAY.

Thanks for the review!

Regards.

- brandonjclark

colossusrage said: Can finally put 4K 120Hz displays to good use.

I'm still on a 3090, but on my 165hz 1440p display, so it maxes most things just fine. I think I'm going to wait for the 5k series GPU's. I know this is a major bump, but dang it's expensive! I simply can't afford to be making these kind of investments in depreciating assets for FUN.

- JarredWaltonGPU

-Fran- said: Shouldn't this be up tomorrow? EDIT: Nevermind. Looks like it was today! YAY. Thanks for the review! Regards.

Yeah, Nvidia almost always does major launches with Founders Edition reviews the day before launch, and partner card reviews the day of launch.

- JarredWaltonGPU

brandonjclark said: I'm still on a 3090, but on my 165hz 1440p display, so it maxes most things just fine. I think I'm going to wait for the 5k series GPU's. I know this is a major bump, but dang it's expensive! I simply can't afford to be making these kind of investments in depreciating assets for FUN.

You could still possibly get $800 for the 3090. Then it’s “only” $800 to upgrade! LOL. Of course if you sell on eBay it’s $800 - 15%.

- kiniku

A review like this, comparing a 4090 to an expensive sports car we should be in awe and envy of, is a bit misleading. PC Gaming systems don't equate to racing on the track or even the freeway. But the way it's worded in this review if you don't buy this GPU, anything "less" is a compromise. That couldn't be further from the truth. People with "big pockets" aren't fools either, except for maybe the few readers here that have convinced themselves and posted they need one or spend everything they make on their gaming PC's. Most gamers don't want or need a 450 watt sucking, 3 slot, space heater to enjoy an immersive, solid 3D experience.

- spongiemaster

kiniku said: Most gamers don't want or need a 450 watt sucking, 3 slot, space heater to enjoy an immersive, solid 3D experience.

Congrats on stating the obvious. Most gamers have no need for a halo GPU that can be CPU limited sometimes even at 4k. A 50% performance improvement while using the same power as a 3090Ti shows outstanding efficiency gains. Early reports are showing excellent undervolting results. 150W decrease with only a 5% loss to performance.

Any chance we could get some 720P benchmarks?

- LastStanding

"the RTX 4090 still comes with three DisplayPort 1.4a outputs"

"the PCIe x16 slot sticks with the PCIe 4.0 standard rather than upgrading to PCIe 5.0."

These missing components are selling points now, especially knowing NVIDIA's rival(s?) supports the updated ports, so, IMO, this should have been included as a "con" too.

Another thing, why would enthusiasts only value "average metrics" when "average" barely tells the complete results?! It doesn't show the programs stability, any frame-pacing/hitches issues, etc., so a VERY miss oversight here, IMO.

I also find weird is, the DLSS benchmarks. Why champion the increase for extra fps buuuut... never, EVER, no mention of the awareness of DLSS included awful sharpening-pass?! 😏 What the sense of having faster fps but the results show the imagery smeared, ghosting, and/or artefacts to hades? 🤔