
GPUs are also used for professional applications, AI training and inferencing, and more. We're looking to expand some of our GPU testing, particularly for extreme GPUs like the RTX 4090, and would love your input on a good machine learning benchmark (one that doesn't take forever to run). For now, we have a few 3D rendering applications that leverage ray tracing hardware, along with the SPECviewperf 2020 v3 test suite.

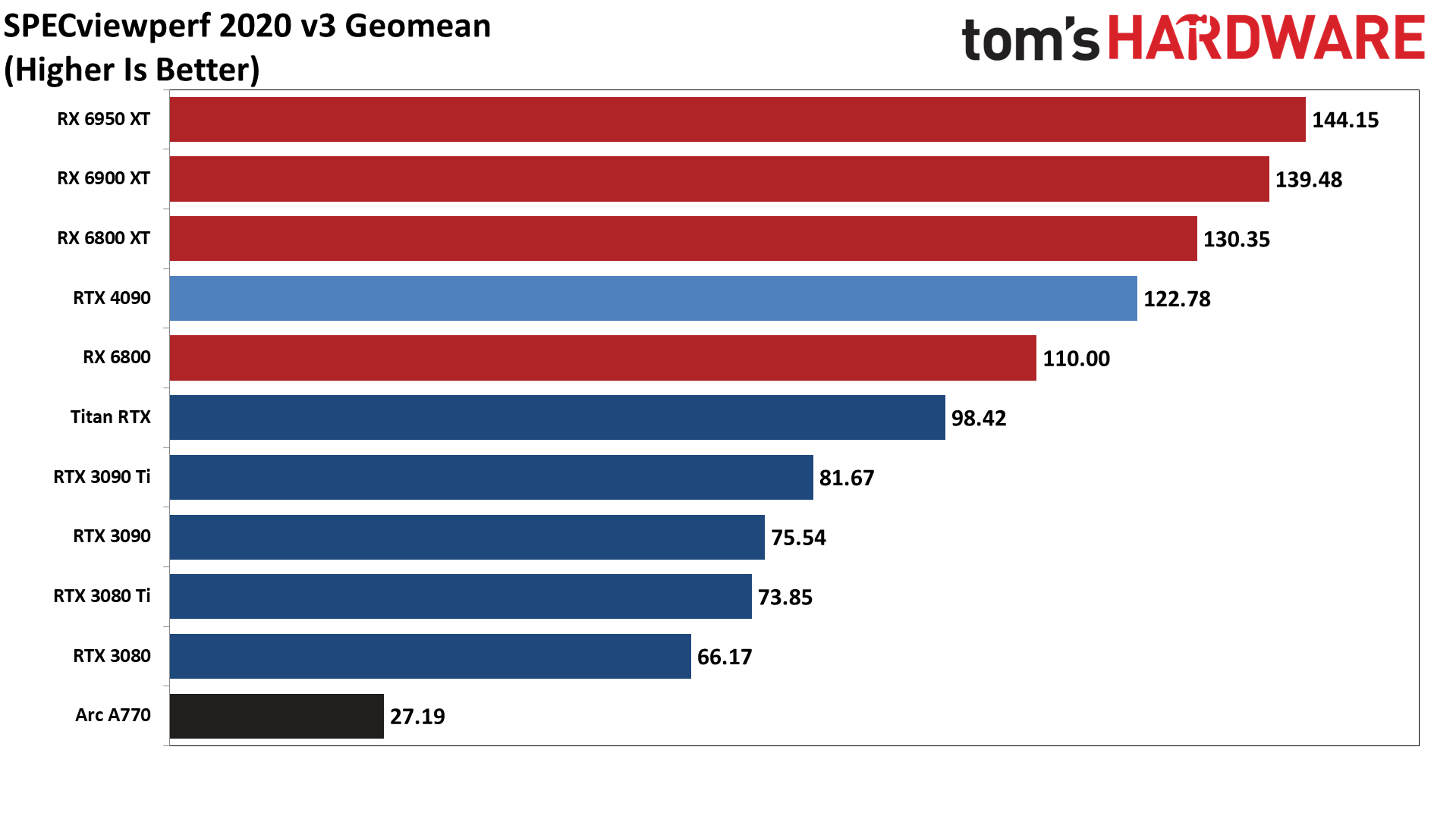

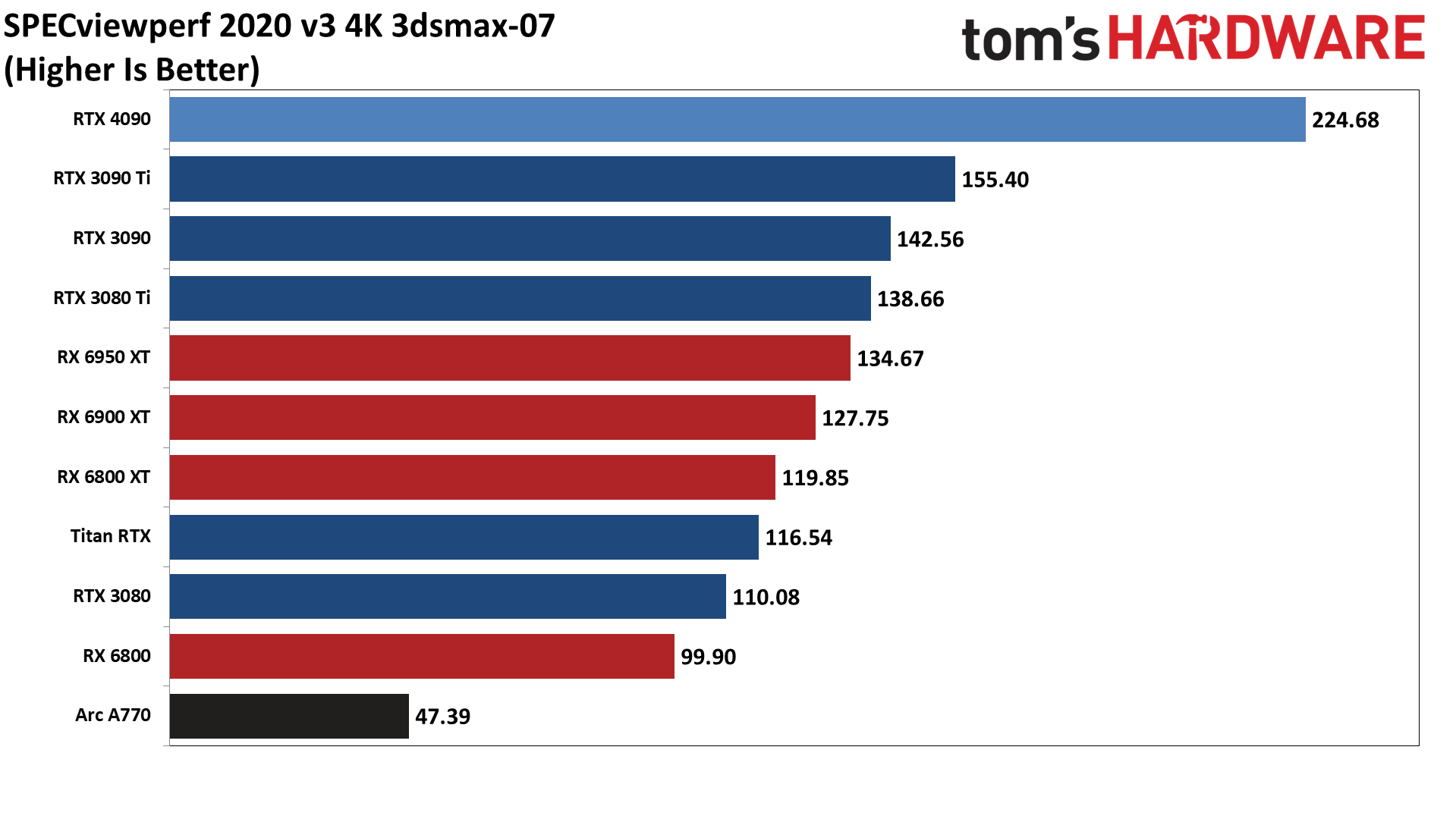

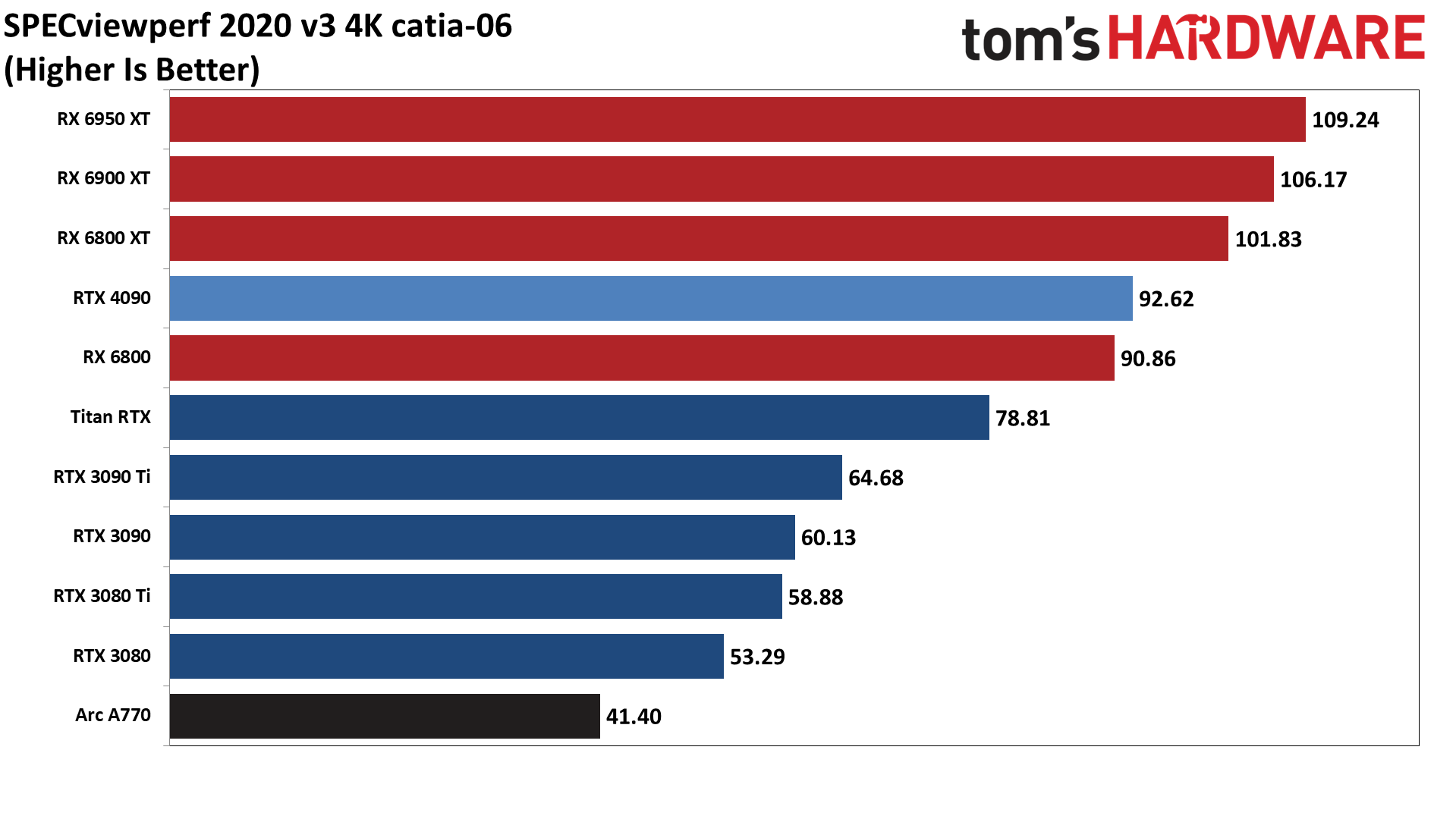

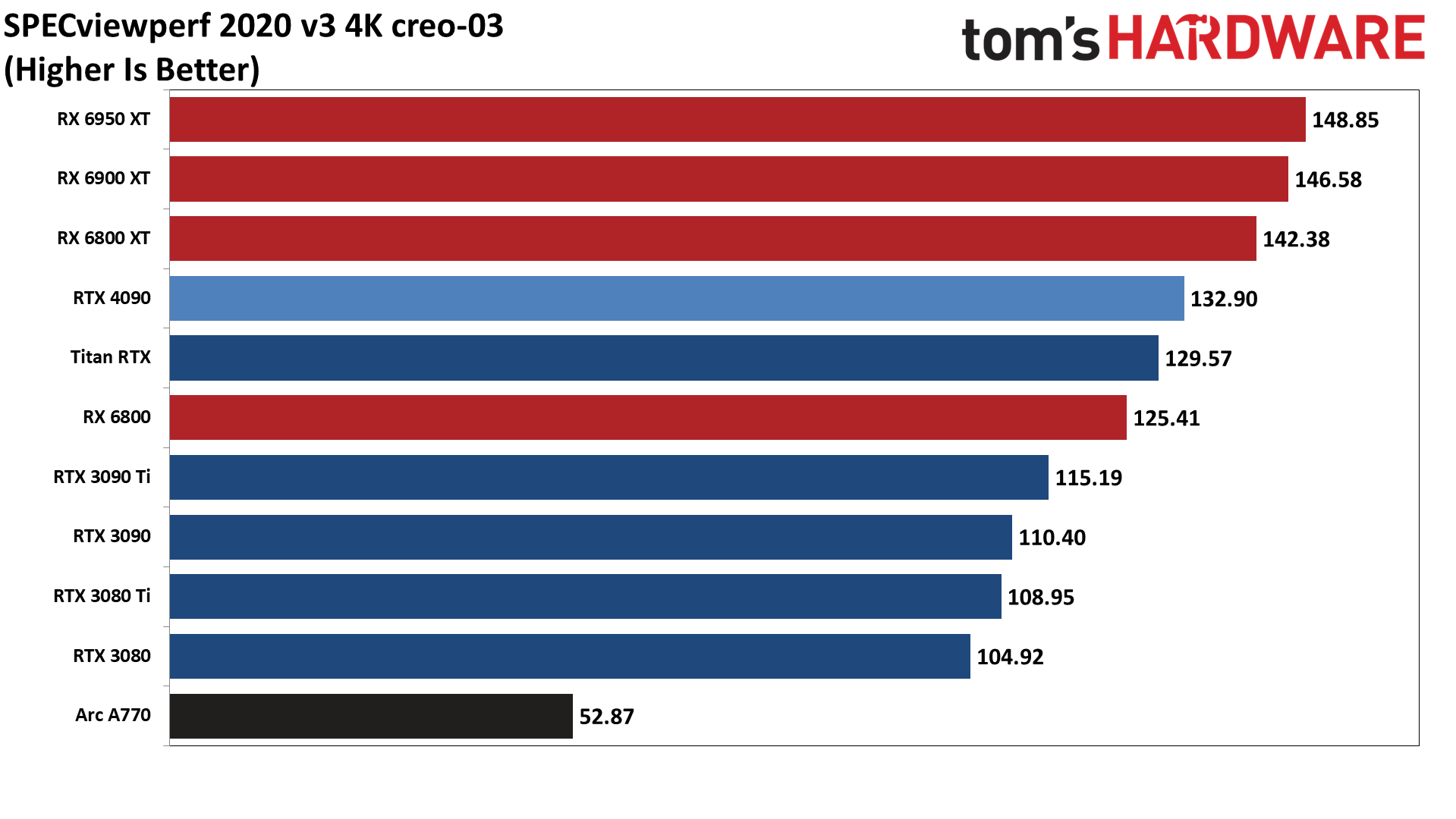

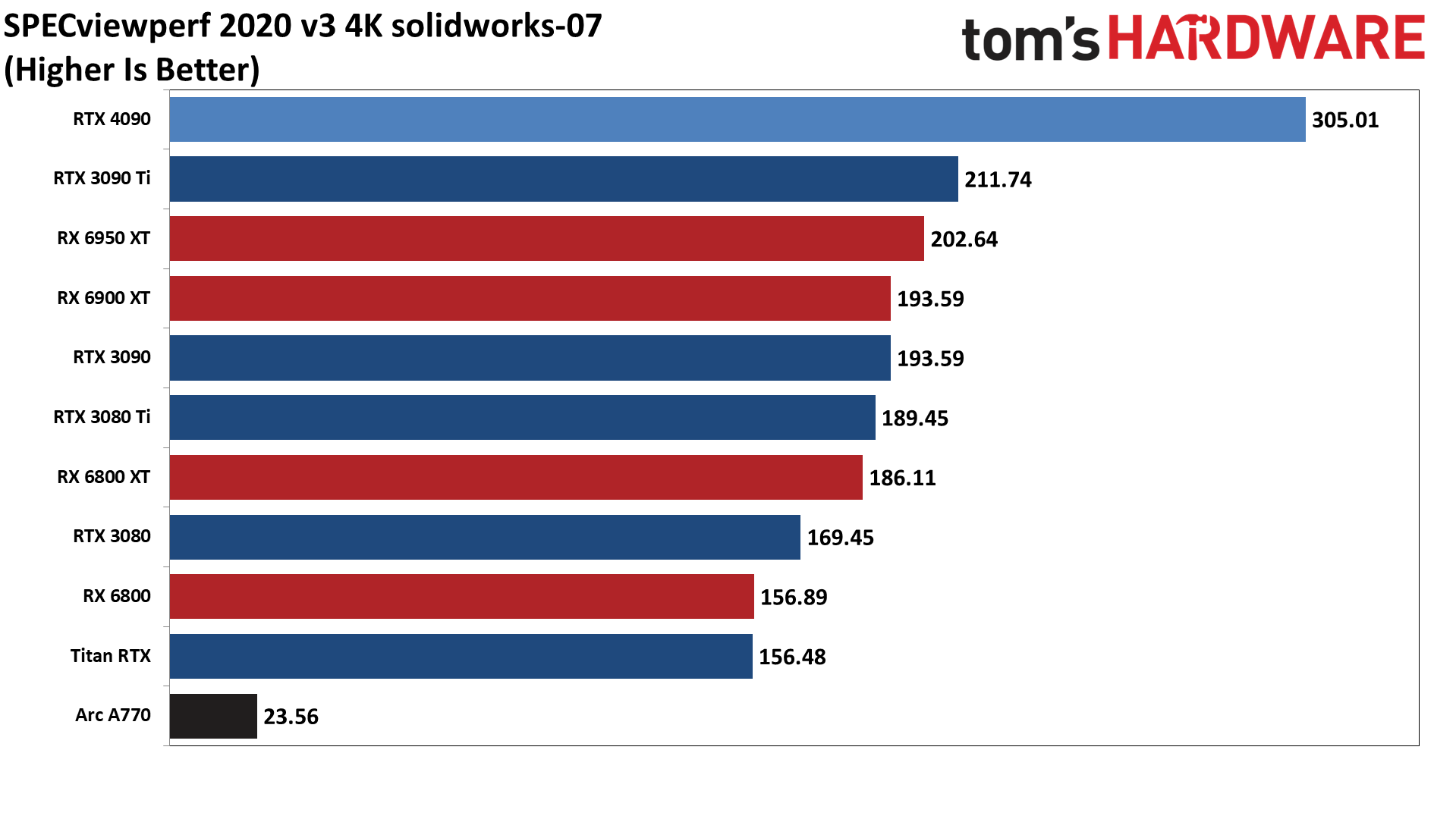

SPECviewperf 2020 consists of eight different benchmarks. We've also included an "overall" chart that uses the geometric mean of the eight results to generate an aggregate score. Note that this is not an official score, but it gives equal weight to the individual tests and provides a high-level overview of performance. Few professionals use all of these programs, however, so it's generally more important to look at the results for the applications you plan to use.
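To make the "equal weight" point concrete, here's a minimal Python sketch of a geometric-mean aggregate. The eight per-test scores are made-up placeholders, not our results, and this illustrates the general technique rather than reproducing our exact charting script.

```python
import math


def geometric_mean(scores):
    """Geometric mean of positive per-test scores (n-th root of their product)."""
    if not scores or any(s <= 0 for s in scores):
        raise ValueError("scores must be a non-empty sequence of positive numbers")
    # Averaging logarithms equals taking the n-th root of the product,
    # but avoids overflow or underflow when many scores are multiplied.
    return math.exp(sum(math.log(s) for s in scores) / len(scores))


# Hypothetical scores for eight viewsets (placeholders, not measured data).
scores = [50.0, 120.0, 30.0, 80.0, 15.0, 200.0, 60.0, 40.0]
overall = geometric_mean(scores)

# Doubling any single test raises the aggregate by the same factor, 2 ** (1/8),
# whether that test's raw score is 15 or 200. An arithmetic mean would instead
# reward gains on whichever tests have the largest raw numbers.
for i in range(len(scores)):
    bumped = list(scores)
    bumped[i] *= 2
    print(f"test {i + 1} doubled: overall x{geometric_mean(bumped) / overall:.4f}")
```

Every line reports a factor of about 1.0905, which is why no single viewset can dominate the overall chart.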

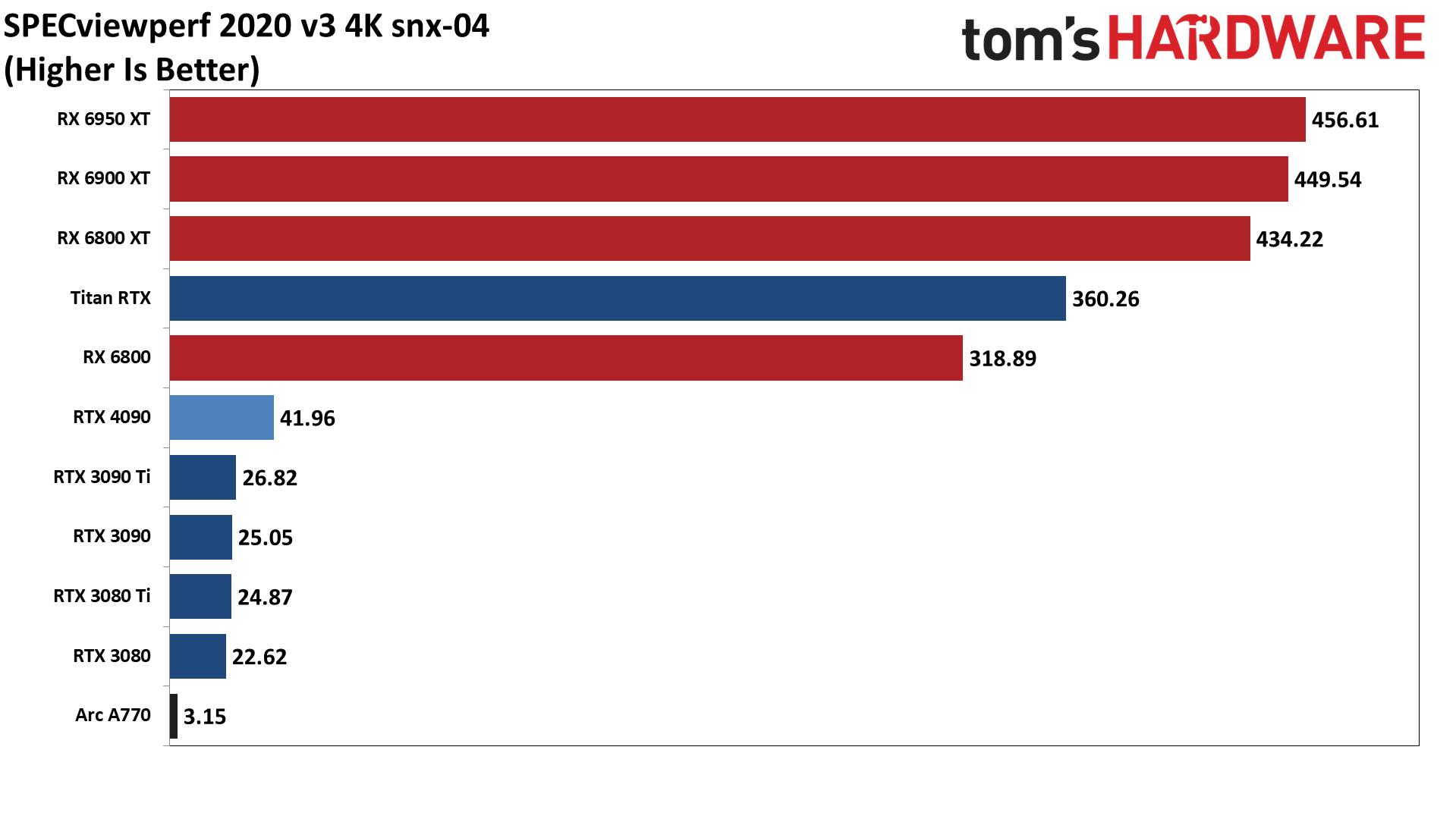

AMD recently discussed improvements to its OpenGL and professional drivers. Some or all of these improvements have been included in the consumer Radeon Adrenalin 22.7.1 and later drivers, but we retested all of the GPUs with the latest available drivers for these charts. AMD's RX 6000-series cards all show an overall 65–75 percent performance increase compared to when we tested for the RX 6950 XT review. The new drivers roughly doubled catia-06 performance and quadrupled the performance in snx-04.
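A rough arithmetic check shows how much of that overall gain the two headline tests could explain on their own. This sketch assumes the overall score is the eight-test geometric mean described earlier and treats "roughly doubled" and "quadrupled" as exact 2x and 4x ratios; the helper names are hypothetical, for illustration only.

```python
import math


def geomean(ratios):
    """Geometric mean of new-driver / old-driver score ratios."""
    return math.prod(ratios) ** (1 / len(ratios))


def required_uplift_elsewhere(overall_ratio, known_ratios, n_tests=8):
    """Geometric-mean ratio the remaining tests would need for the
    n_tests-way geometric mean to equal overall_ratio."""
    n_rest = n_tests - len(known_ratios)
    return (overall_ratio ** n_tests / math.prod(known_ratios)) ** (1 / n_rest)


known = [2.0, 4.0]  # catia-06 (assumed exactly 2x) and snx-04 (assumed exactly 4x)

# If only those two tests had improved and the other six stayed flat:
only_two = geomean(known + [1.0] * 6)
print(f"overall gain from two tests alone: {only_two - 1:.0%}")

# Uplift the other six tests would need (as a geometric mean) for 65-75% overall:
for target in (1.65, 1.75):
    rest = required_uplift_elsewhere(target, known)
    print(f"{target - 1:.0%} overall needs about {rest - 1:.0%} from the other six tests")
```

Under those assumptions, the two big jumps alone account for only about a 30% overall gain, so reaching 65–75% overall implies the other six viewsets improved by roughly 38–49% on average.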

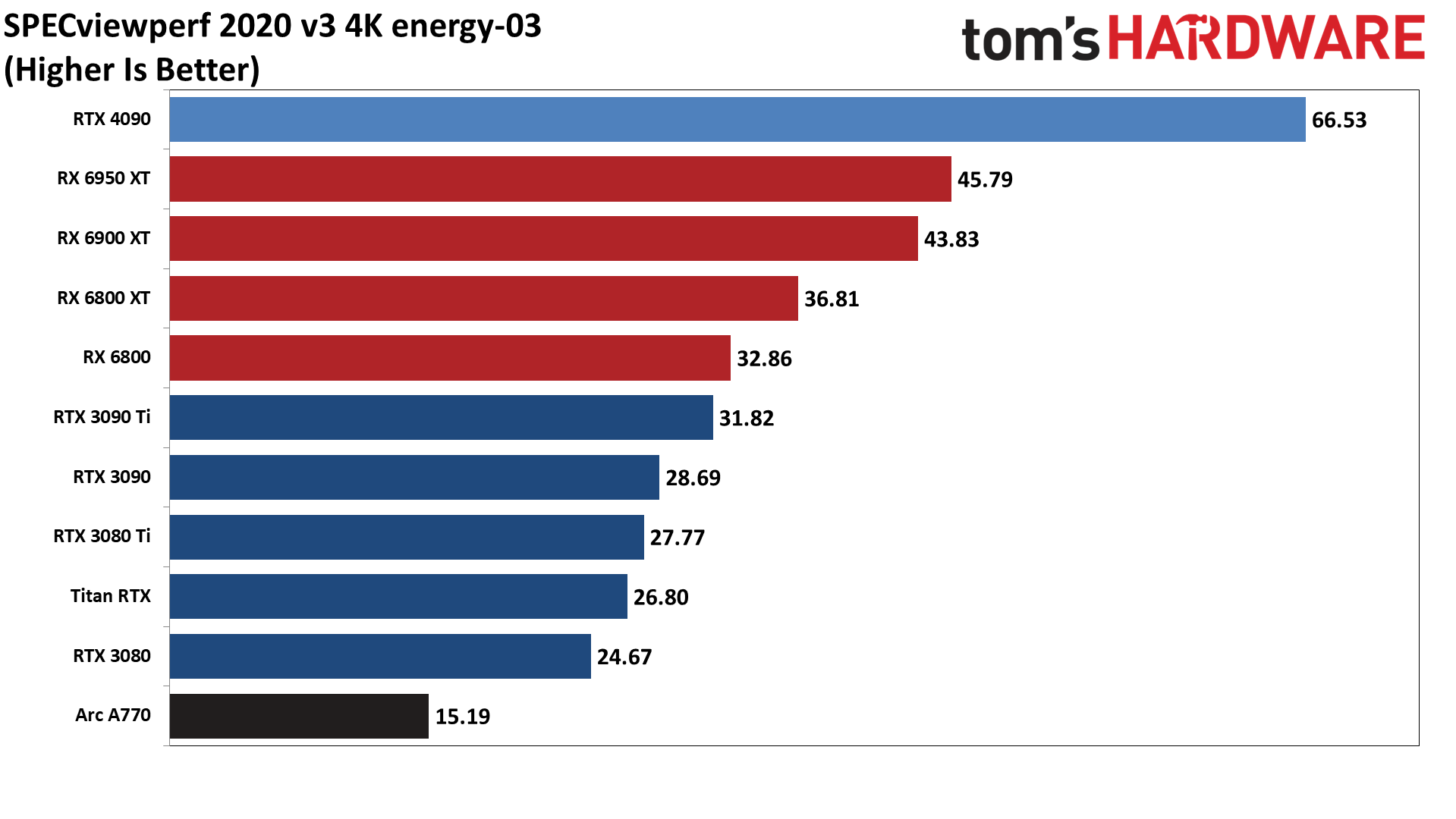

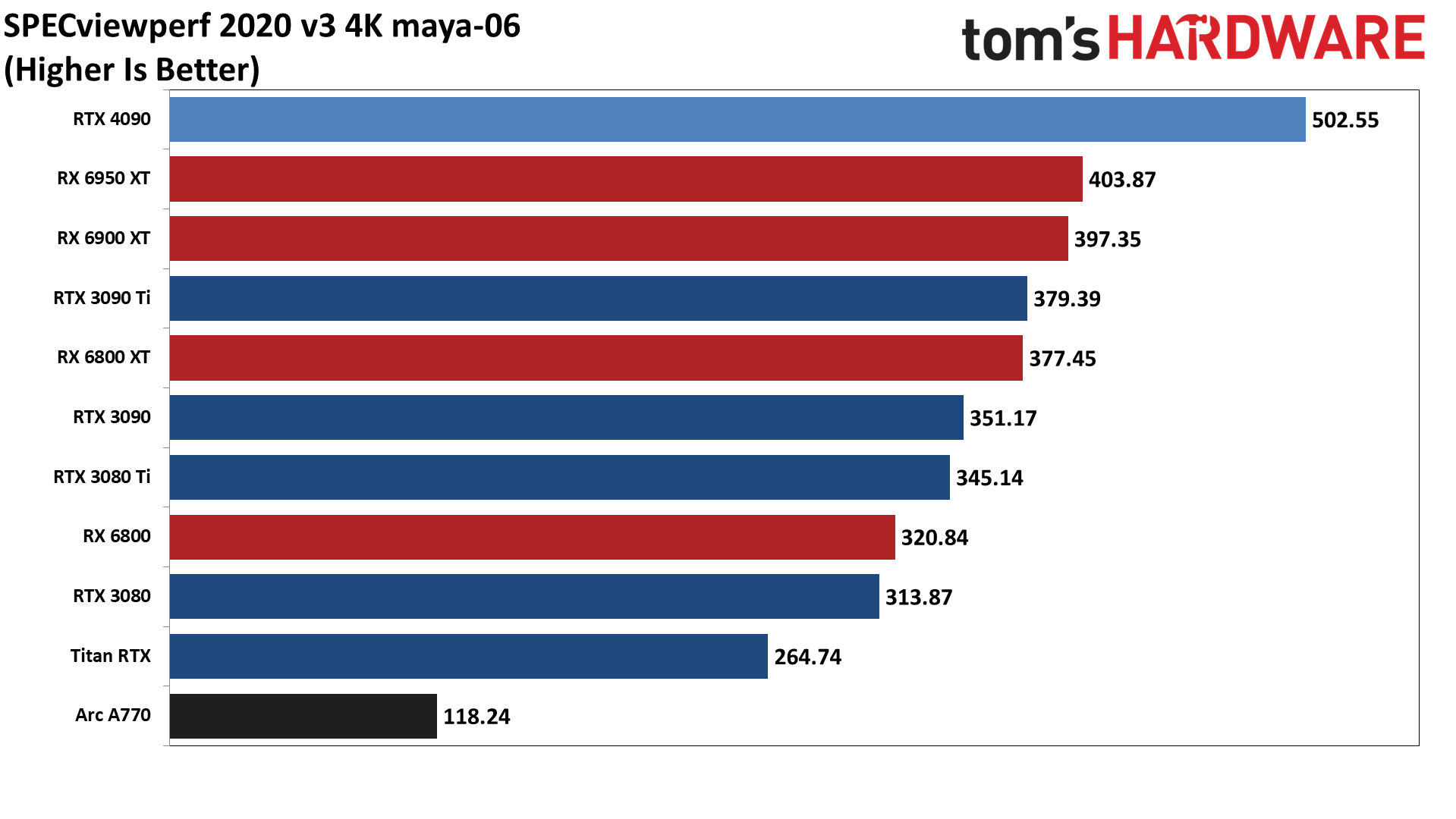

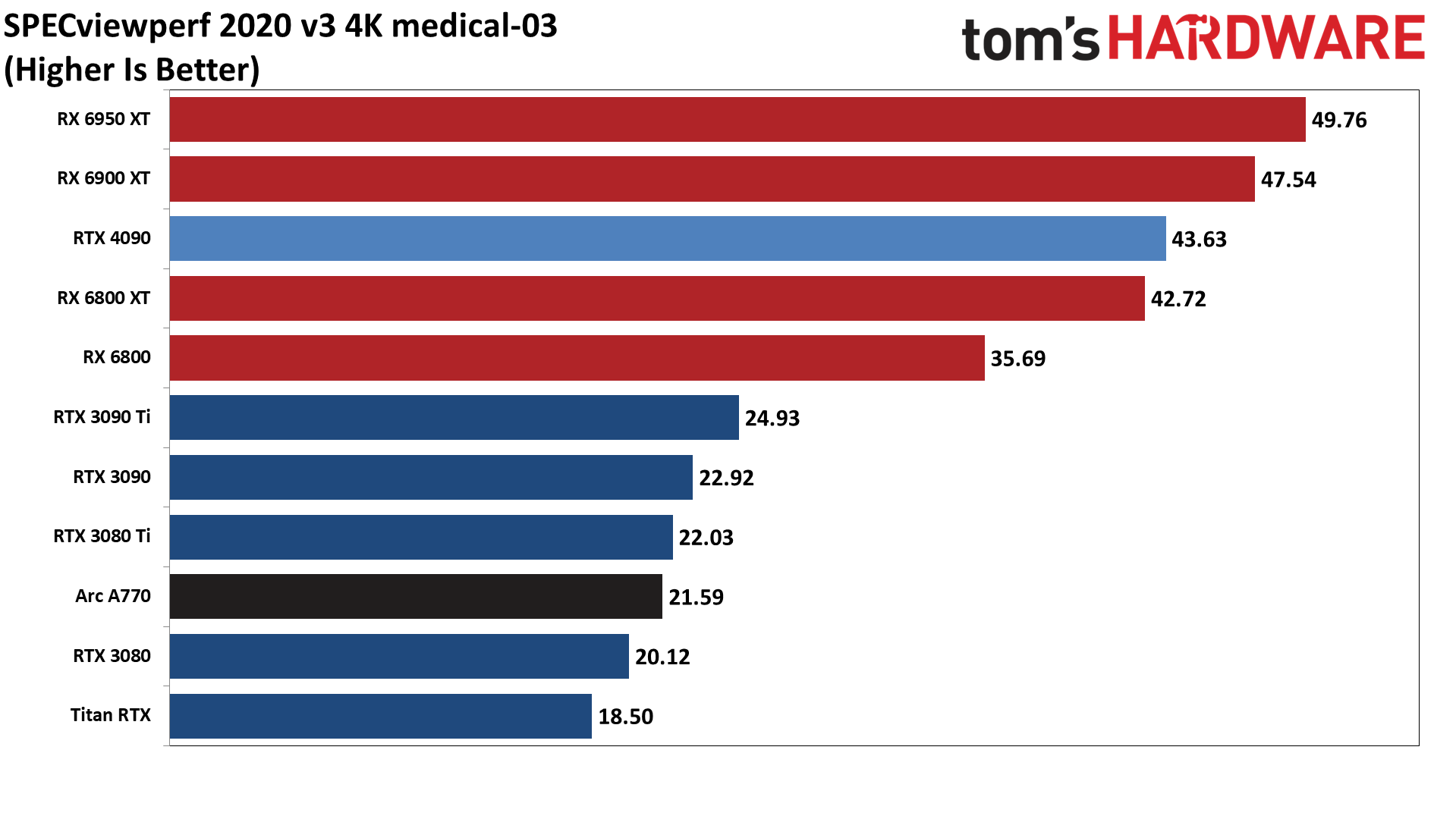

Those new AMD drivers help push most of AMD's GPUs ahead of even the RTX 4090 in our overall SPECviewperf 2020 v3 geomean chart. In the individual charts, 3dsmax-07, energy-03, maya-06, and solidworks-07 still have the RTX 4090 in the top spot, so if you use any of these applications, keep these results in mind. Also note that Nvidia's professional RTX cards, like the RTX 6000 48GB, can offer major improvements thanks to their fully accelerated professional drivers (look at the Titan RTX in snx-04 for some idea of what Nvidia's pro drivers can do).

Intel's SPECviewperf results with the Arc A770 are decidedly mediocre. It places last on most of the charts, sometimes by a wide margin (snx-04 and solidworks-07). Driver updates could significantly improve its scores, though some of the differences may be architectural. Then again, the A770 costs about half as much as cards like the RX 6900 XT and about one-fifth as much as the RTX 4090.

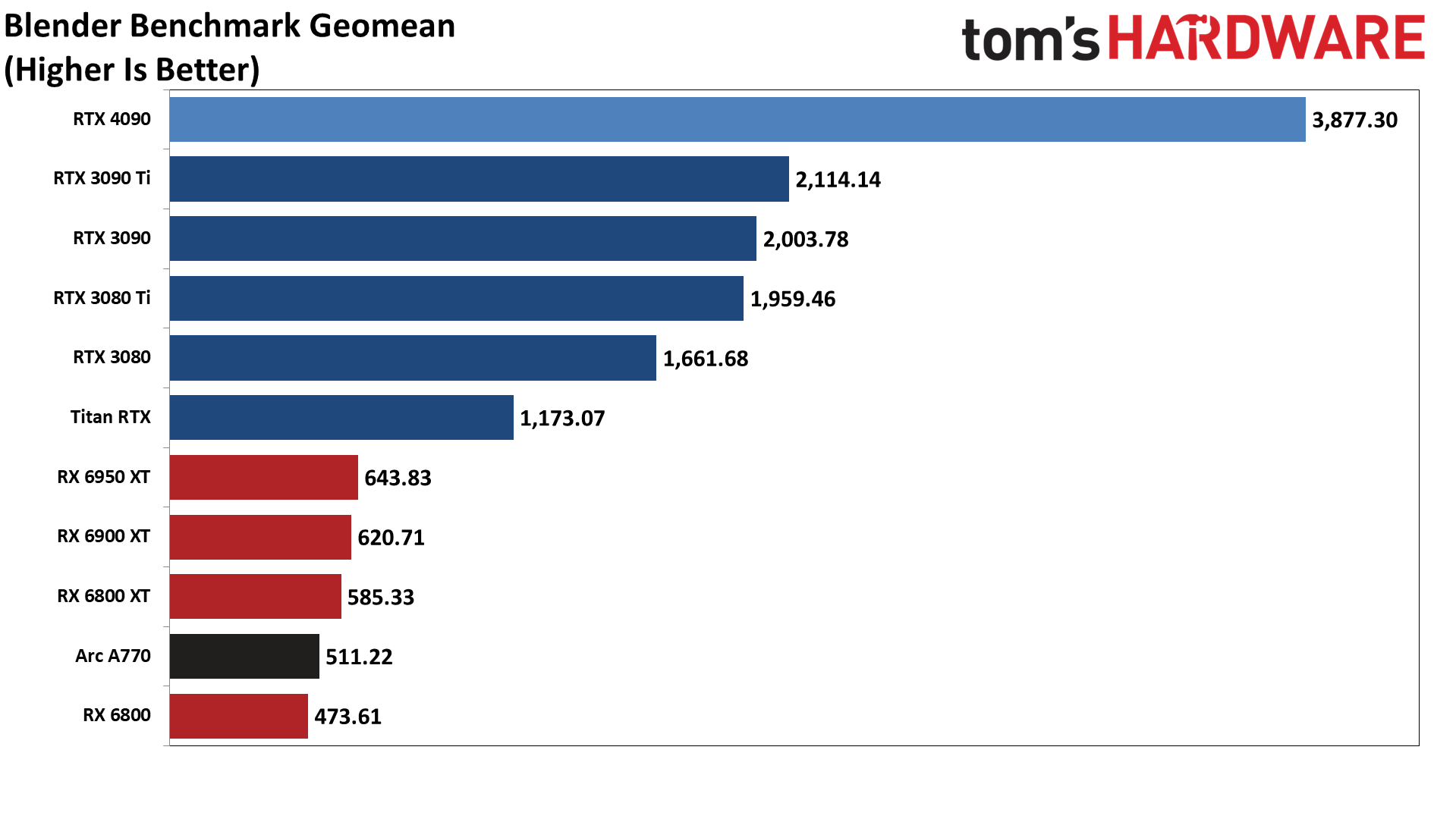

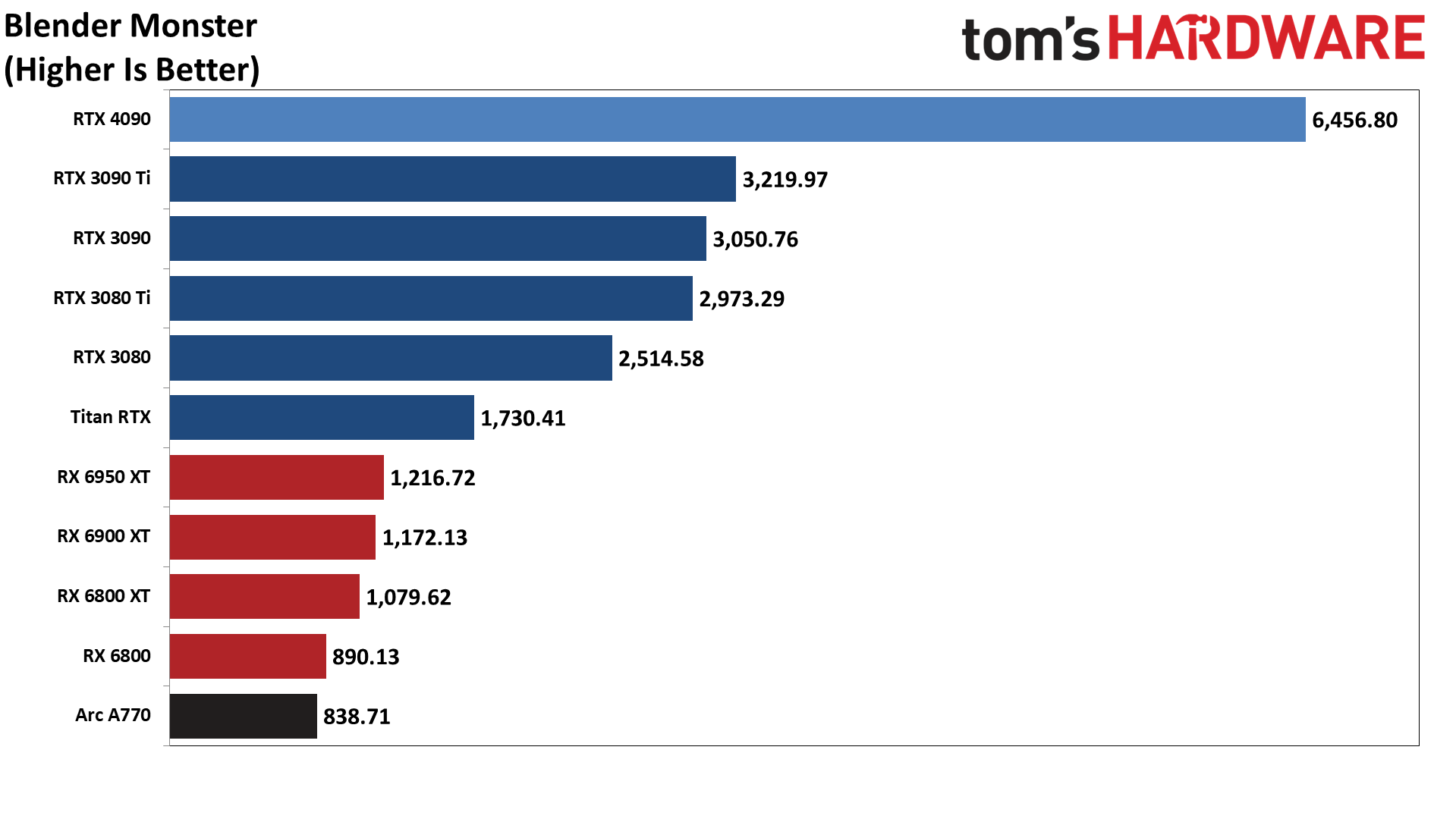

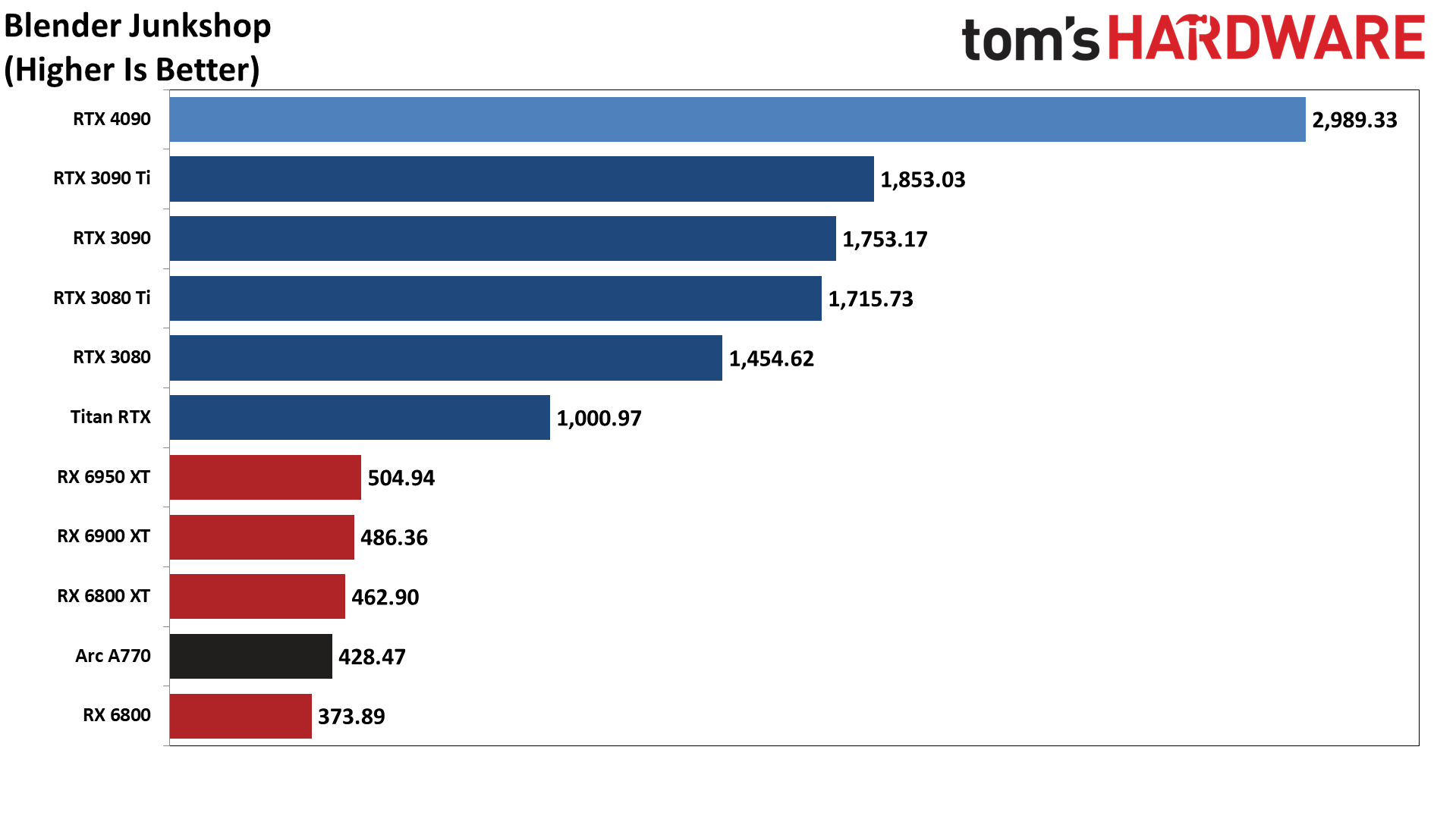

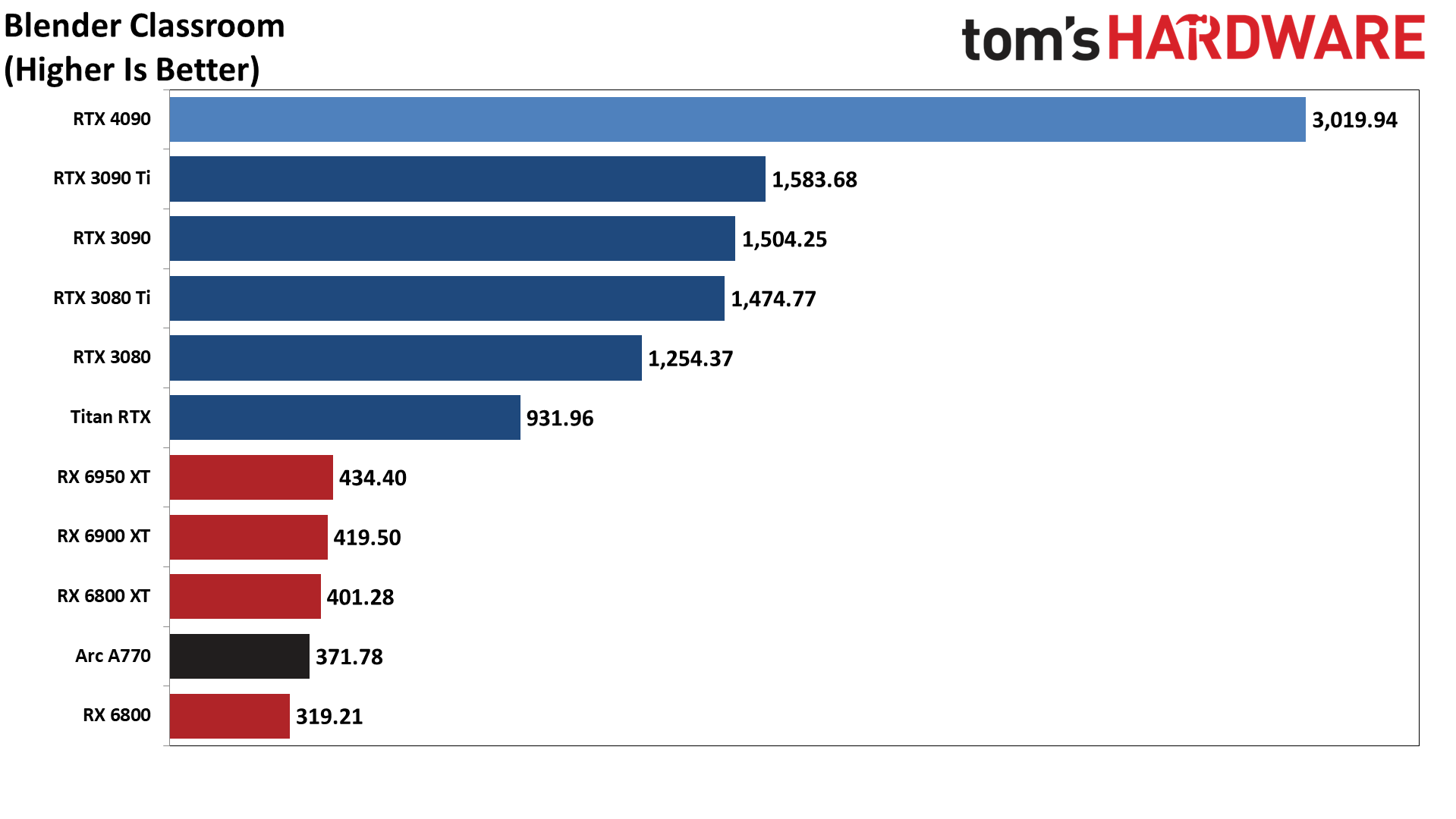

Next up is Blender, a popular open-source rendering application that has been used to make full-length films. We're using the latest Blender Benchmark, which uses Blender 3.30 and three tests. Blender 3.30 also includes the new Cycles X engine that leverages ray tracing hardware on AMD, Nvidia, and even Intel Arc GPUs. It does so via AMD's HIP interface (Heterogeneous-computing Interface for Portability), Nvidia's CUDA or OptiX APIs, and Intel's oneAPI.

Being open-source has one major advantage: the various GPU companies can contribute their own rendering updates. That makes Blender the closest thing we have to an apples-to-apples comparison of professional 3D rendering performance across the various GPUs.

Rendering applications like Blender tend to reflect raw ray tracing hardware performance, and again we see a major generational jump from the RTX 3090 Ti to the RTX 4090: 83% higher in our overall score, to be precise. In the three test scenes, the improvements are 101% in Monster, 61% in Junkshop, and 91% in Classroom.
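As a sanity check, the sketch below turns the quoted per-scene gains back into speedup ratios and combines them. It assumes the overall score works like the geometric mean used for SPECviewperf; the text doesn't spell out how the Blender overall is calculated, and because the per-scene percentages are rounded, agreement within a point or so is the most we should expect.

```python
import math


def percent_gain(new_score, old_score):
    """Relative improvement: a score 2.01x the old one is a 101% gain."""
    return new_score / old_score - 1


# Hypothetical raw scores, just to show the definition.
print(f"{percent_gain(201.0, 100.0):.0%}")

# RTX 4090 vs. RTX 3090 Ti per-scene gains, as quoted above (rounded).
scene_gains = {"Monster": 1.01, "Junkshop": 0.61, "Classroom": 0.91}

ratios = [1 + gain for gain in scene_gains.values()]
combined = math.prod(ratios) ** (1 / len(ratios))
print(f"geometric mean of per-scene speedups: {combined:.3f}x (+{combined - 1:.1%})")
```

The combined speedup comes out at about 1.835x (+83.5%), in line with the 83% overall figure. With gains this close together, a plain arithmetic mean lands nearby too, so this check doesn't by itself reveal which averaging method the chart uses.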

And while Blender 3.30 does work on AMD and Intel GPUs, the performance gap is massive. The fastest AMD card is still less than half as fast as an RTX 3080 10GB and 45% slower than the Titan RTX. Intel's Arc A770 lands just above the RX 6800 this time. Put another way, the five non-Nvidia GPUs combined only manage about 73% of the performance of a single RTX 4090. Ouch.

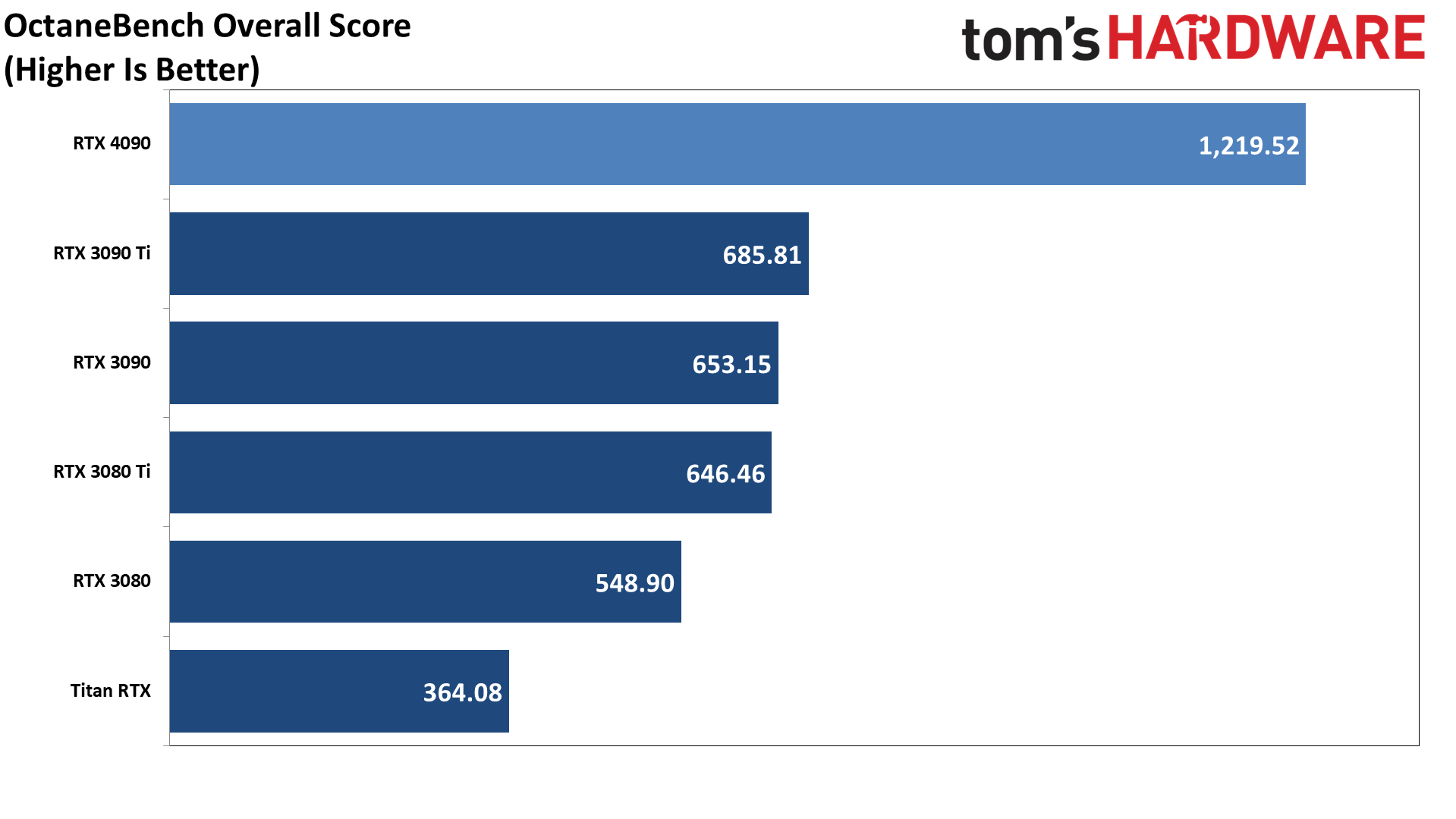

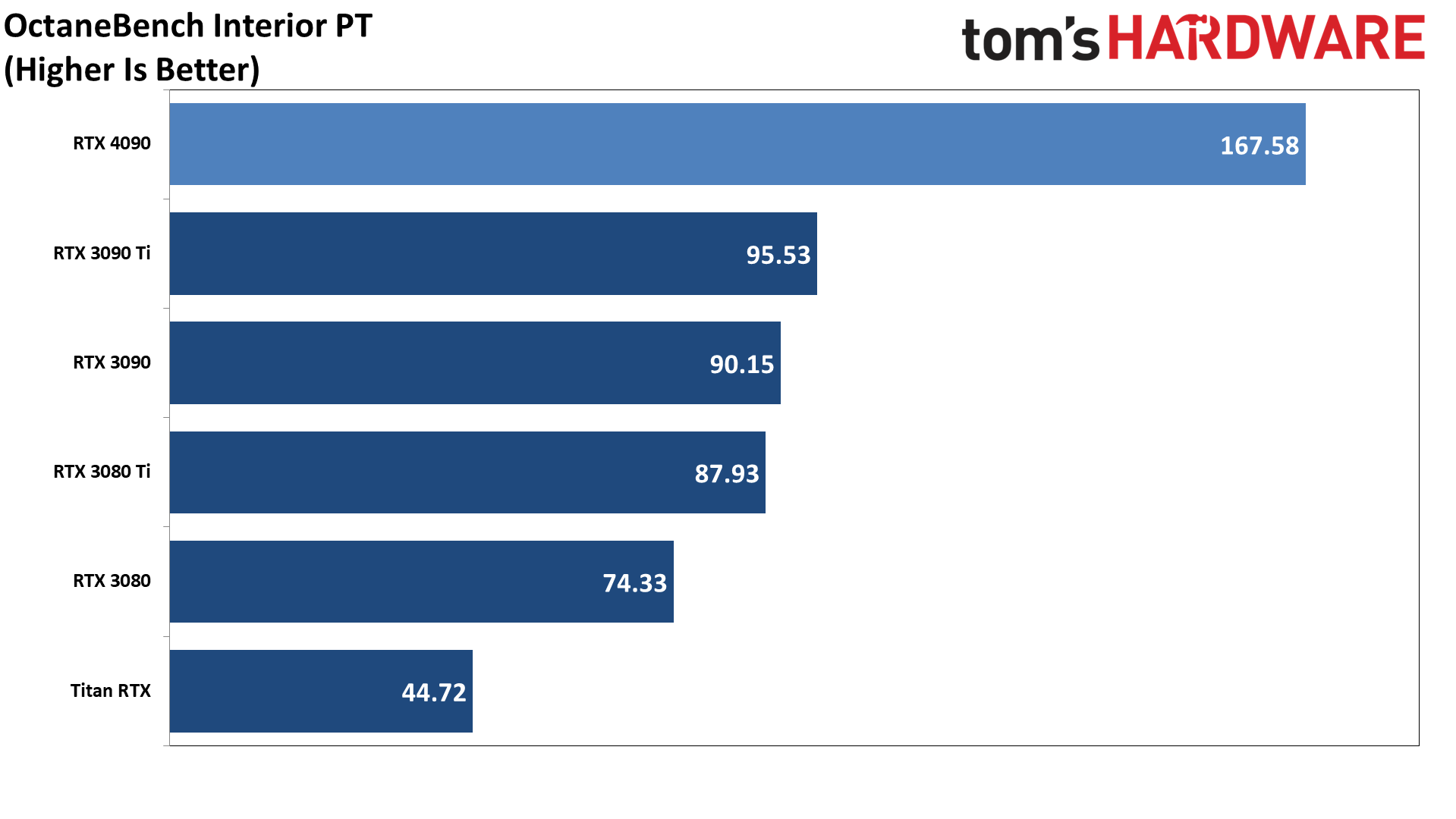

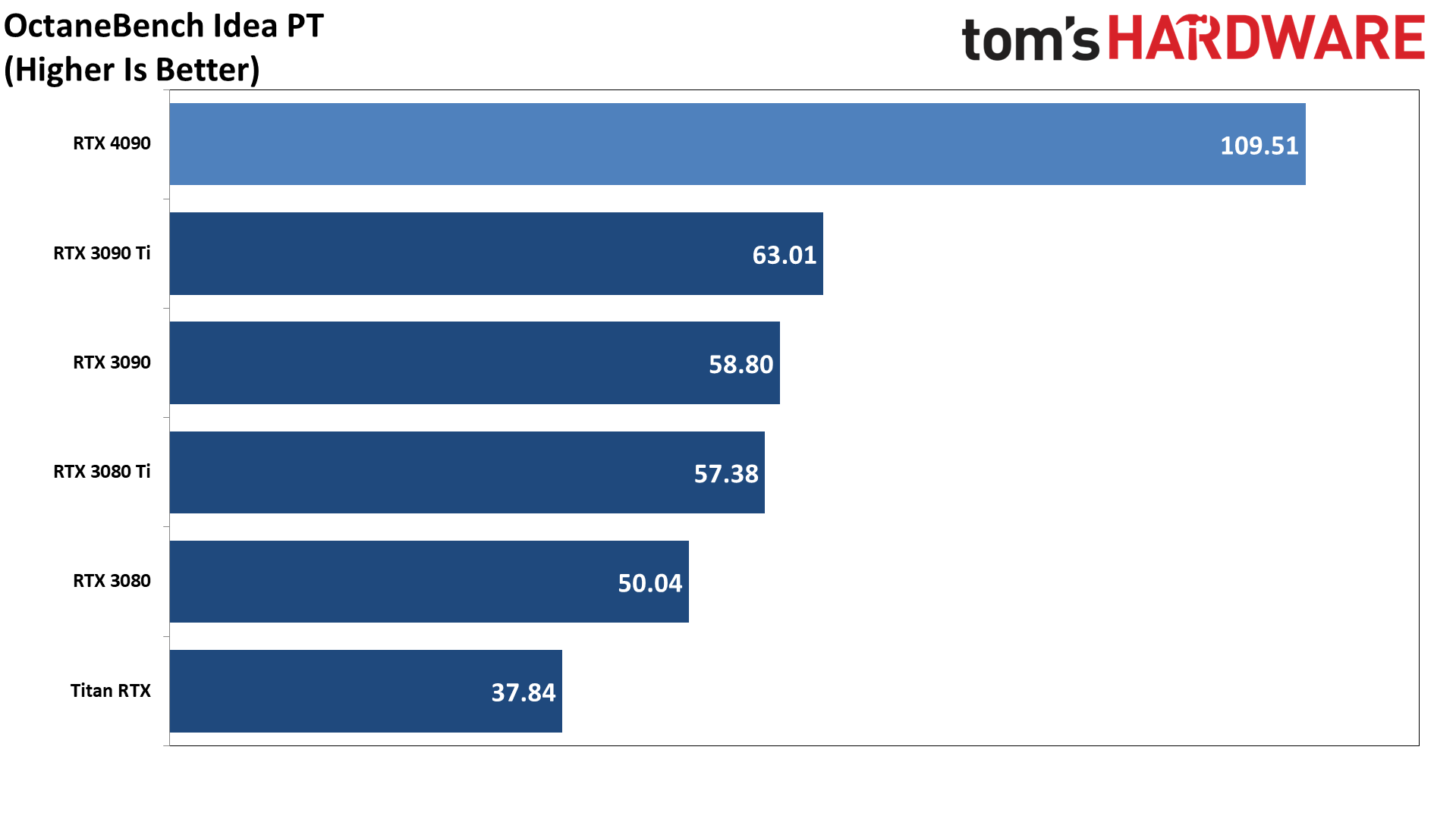

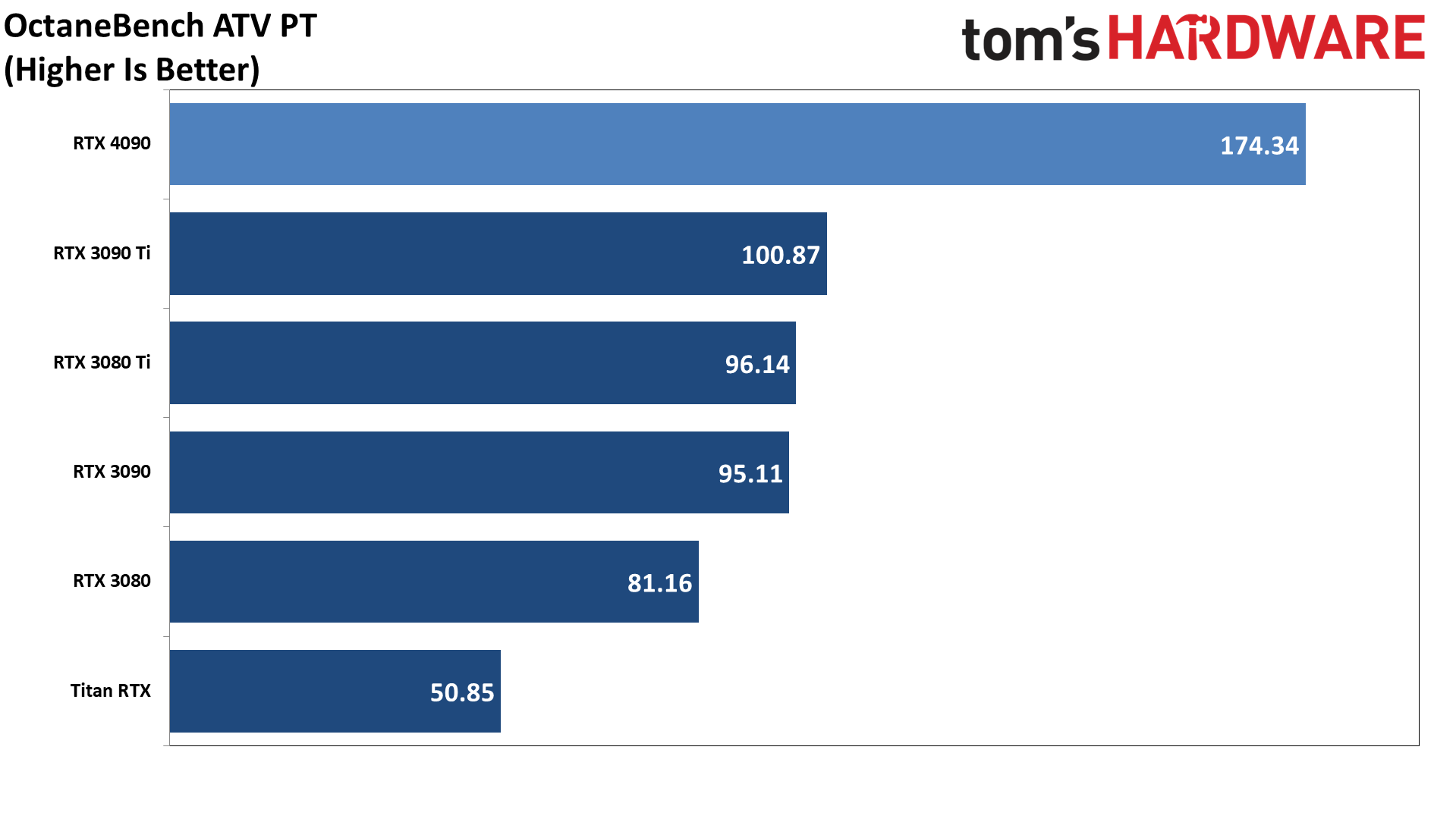

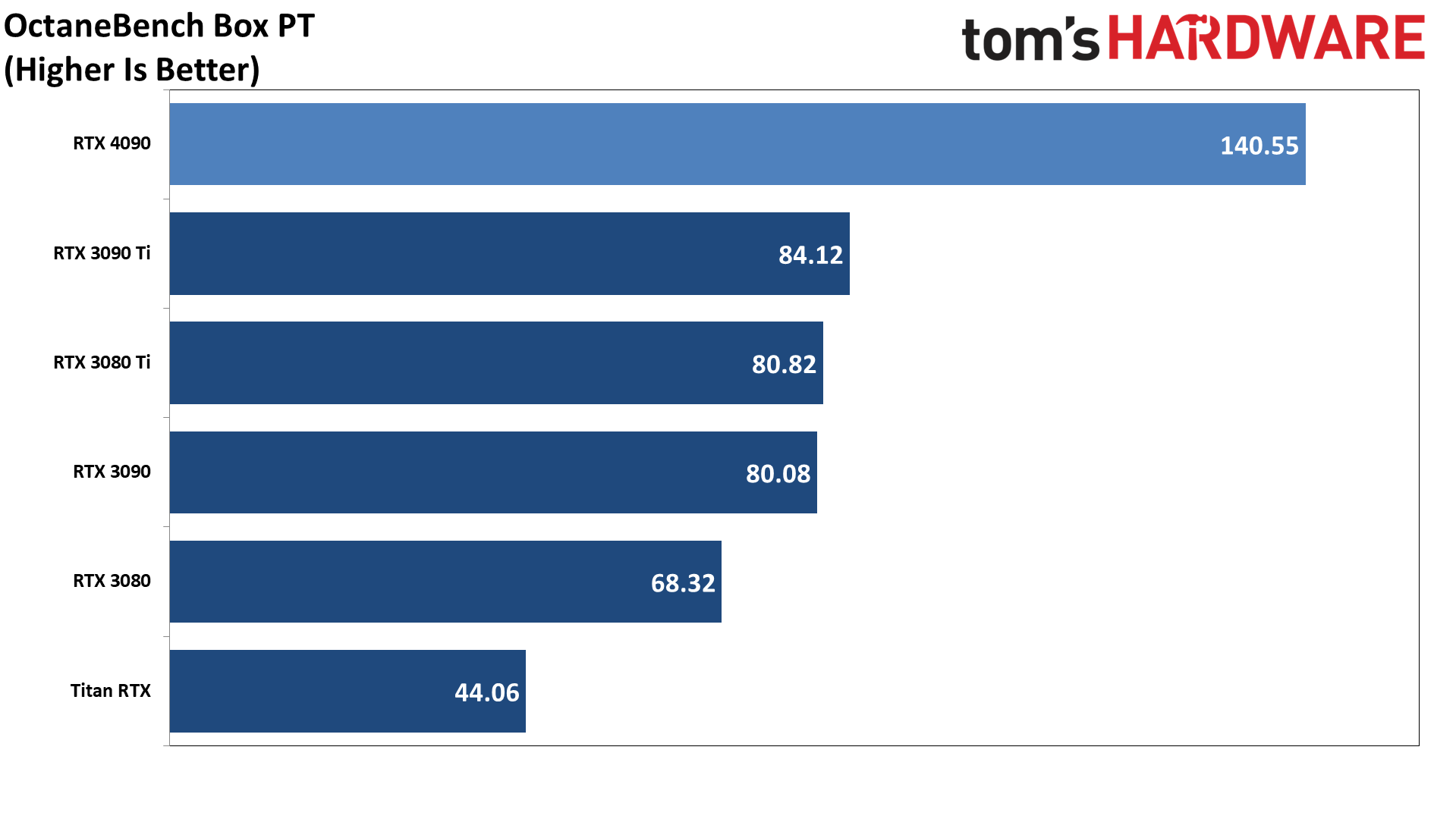

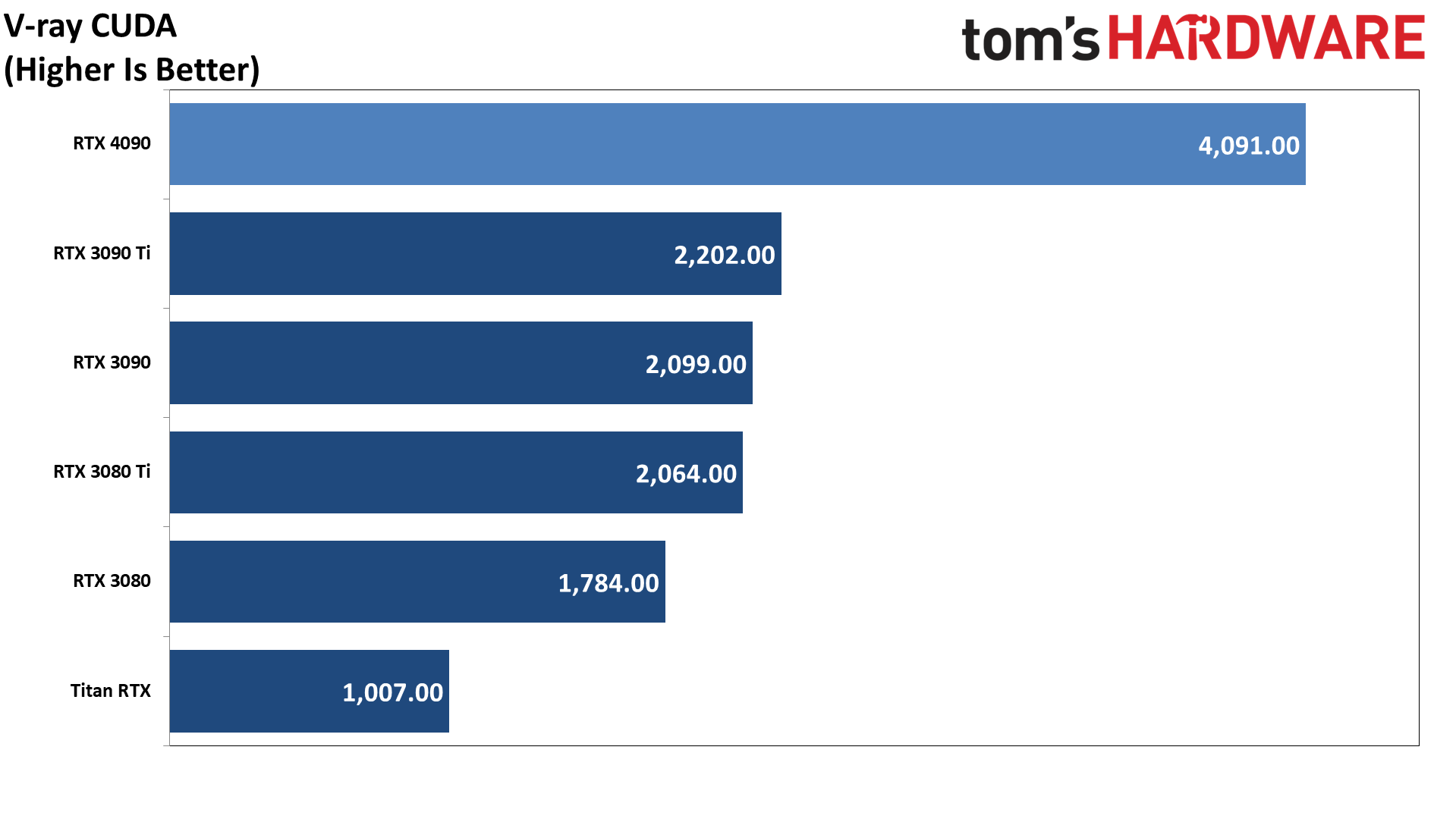

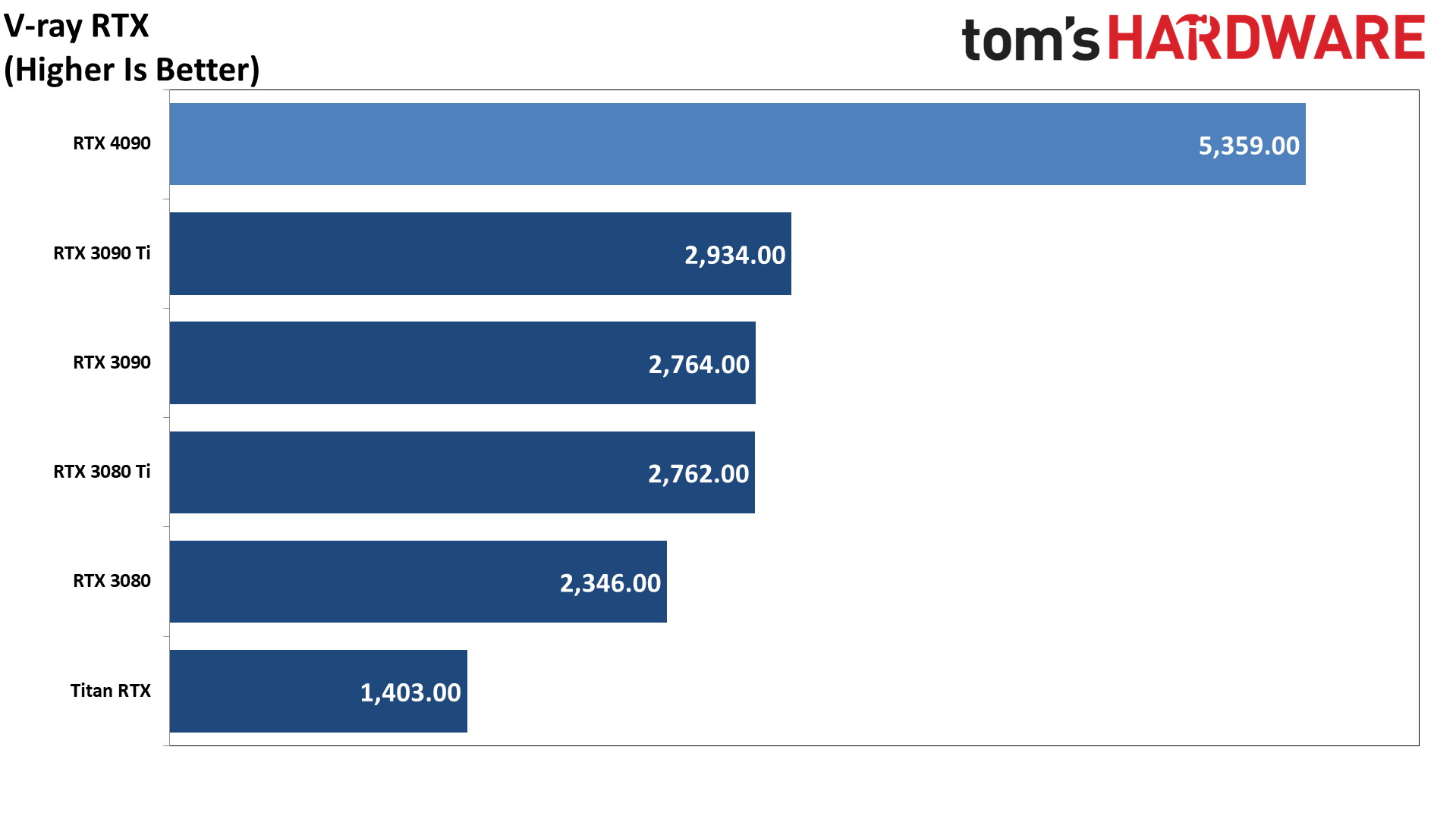

Our final two professional tests only support Nvidia's RTX cards for hardware ray tracing. That's largely because Nvidia's CUDA and OptiX APIs have been around and supported for far longer than any alternative. Octane formerly supported OpenCL, but stability problems and development difficulty, combined with a lack of ongoing development effort, led OTOY to drop support in more recent versions. We've asked both OTOY and Chaos Group whether they intend to add support for AMD and Intel GPUs, but for now these charts are Nvidia-only. Note that OctaneBench hasn't been updated since 2020, while the latest V-Ray Benchmark is from June 13, 2022.

As with Blender, the performance improvement delivered by the RTX 4090 in Octane and V-Ray varies with the rendered scene. The overall OctaneBench result is 78% higher than on the RTX 3090 Ti, while the two V-Ray renders are 83% and 86% faster. Again, if you do this sort of work for a living, the RTX 4090 could make for an excellent upgrade.

[Note: I'm still looking for a good AI / machine learning benchmark, "good" meaning it's easy to run, preferably on Windows systems, and its results are relevant. We don't want something that only works on Nvidia GPUs, or AMD GPUs, or that requires tensor cores. Ideally, it will use tensor cores if available (Nvidia RTX and Intel Arc), or GPU cores if not (GTX GPUs and AMD's current consumer lineup). If you have any suggestions, please contact me: DM me in the forums or send me an email. Thanks!]


Current page: GeForce RTX 4090: Professional and Content Creation Performance


Jarred Walton is a senior editor at Tom's Hardware focusing on everything GPU. He has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.


-Fran-

Shouldn't this be up tomorrow?

EDIT: Never mind. Looks like it was today! YAY.

Thanks for the review!

Regards.

brandonjclark

colossusrage said: Can finally put 4K 120Hz displays to good use.

I'm still on a 3090, but on my 165Hz 1440p display, so it maxes most things just fine. I think I'm going to wait for the 5k-series GPUs. I know this is a major bump, but dang it's expensive! I simply can't afford to be making these kinds of investments in depreciating assets for FUN.

JarredWaltonGPU

-Fran- said: Shouldn't this be up tomorrow?

Yeah, Nvidia almost always does major launches with Founders Edition reviews the day before launch, and partner card reviews the day of launch.

JarredWaltonGPU

brandonjclark said: I know this is a major bump, but dang it's expensive!

You could still possibly get $800 for the 3090. Then it’s “only” $800 to upgrade! LOL. Of course, if you sell on eBay, it's $800 minus 15%.

kiniku

A review like this, comparing a 4090 to an expensive sports car we should be in awe and envy of, is a bit misleading. PC gaming systems don't equate to racing on the track or even the freeway. But the way this review is worded, if you don't buy this GPU, anything "less" is a compromise. That couldn't be further from the truth. People with "big pockets" aren't fools either, except maybe for the few readers here who have convinced themselves (and posted) that they need one, or who spend everything they make on their gaming PCs. Most gamers don't want or need a 450 watt sucking, 3 slot, space heater to enjoy an immersive, solid 3D experience.

spongiemaster

kiniku said: Most gamers don't want or need a 450 watt sucking, 3 slot, space heater to enjoy an immersive, solid 3D experience.

Congrats on stating the obvious. Most gamers have no need for a halo GPU that can sometimes be CPU limited even at 4K. A 50% performance improvement while using the same power as a 3090 Ti shows outstanding efficiency gains. Early reports are showing excellent undervolting results: a 150W decrease with only a 5% loss in performance.

Any chance we could get some 720p benchmarks?

LastStanding

"the RTX 4090 still comes with three DisplayPort 1.4a outputs"

"the PCIe x16 slot sticks with the PCIe 4.0 standard rather than upgrading to PCIe 5.0."

These missing features are selling points now, especially knowing that NVIDIA's rival(s?) support the updated standards, so, IMO, this should have been listed as a "con" too.

Another thing: why would enthusiasts only value "average metrics" when the average barely tells the whole story?! It doesn't show the program's stability, frame-pacing issues, hitches, etc., so that's a VERY big oversight here, IMO.
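To illustrate the point about averages, here's a small Python sketch with two made-up frametime traces that share the same average frame rate but have very different frame pacing. It uses one common definition of a "1% low" (the frame rate implied by the 99th-percentile frametime, nearest-rank method); other tools define it differently, for example by averaging the slowest 1% of frames.

```python
import math
import statistics


def average_fps(frametimes_ms):
    """Frames rendered divided by total time, i.e. 1000 / mean frametime in ms."""
    return 1000.0 / statistics.fmean(frametimes_ms)


def one_percent_low_fps(frametimes_ms):
    """FPS implied by the 99th-percentile frametime (nearest-rank method)."""
    ordered = sorted(frametimes_ms)
    rank = math.ceil(0.99 * len(ordered))  # 1-based nearest rank
    return 1000.0 / ordered[rank - 1]


# Two hypothetical 1,000-frame runs, both totaling 10 seconds.
smooth = [10.0] * 1000                  # every frame takes 10 ms
stuttery = [9.0] * 980 + [59.0] * 20    # mostly fast frames, plus 20 long hitches

for name, trace in (("smooth", smooth), ("stuttery", stuttery)):
    print(f"{name}: average {average_fps(trace):.1f} fps, "
          f"1% low {one_percent_low_fps(trace):.1f} fps")
```

Both runs average 100 fps, but the stuttery one has a 1% low of about 16.9 fps, which is exactly the kind of difference an average-only number hides.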

What I also find weird is the DLSS benchmarks. Why champion the increase in fps buuuut... never, EVER mention DLSS's awful built-in sharpening pass?! 😏 What's the sense of having faster fps if the imagery is smeared, ghosting, and/or full of artefacts to hades? 🤔