
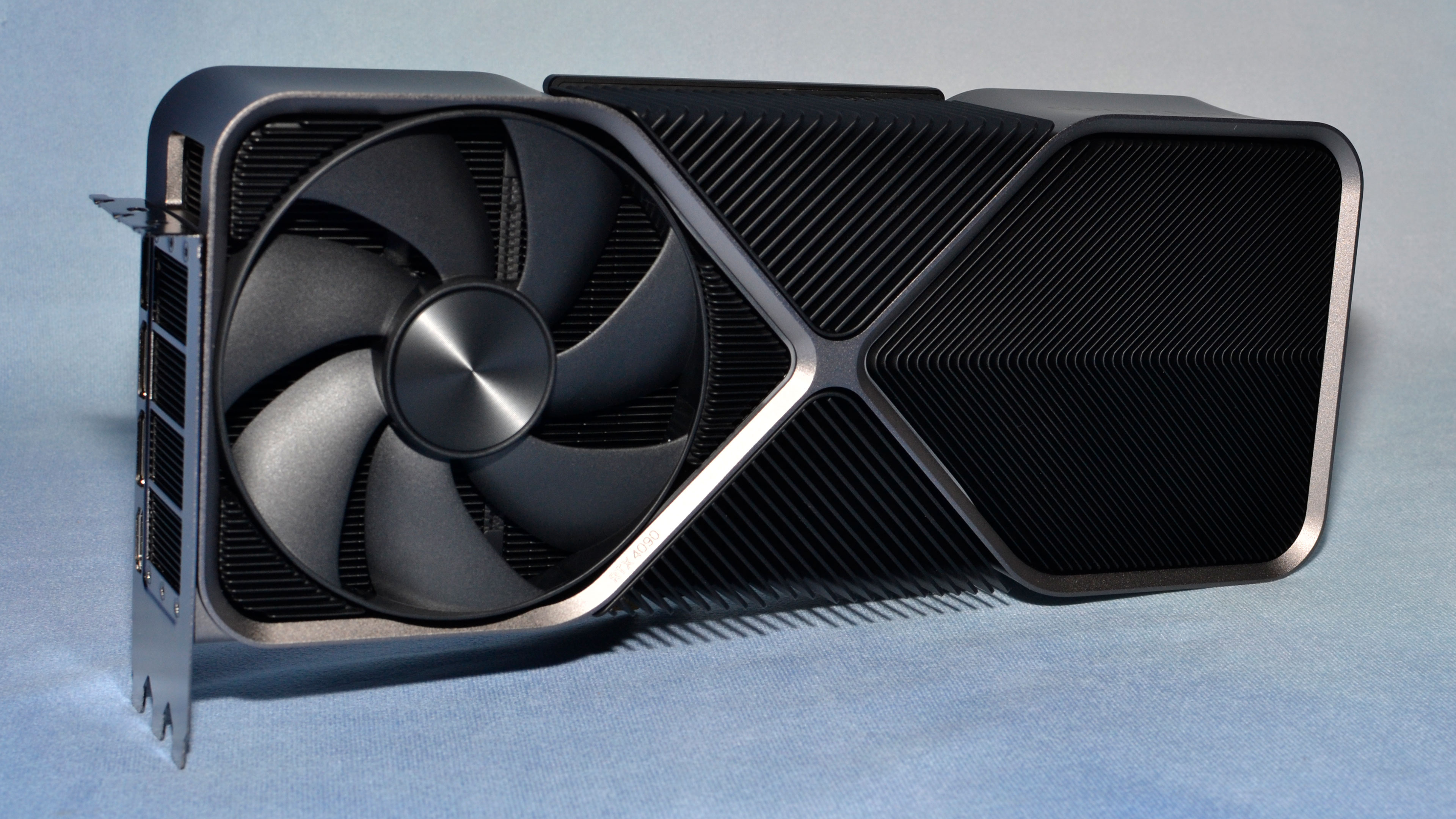

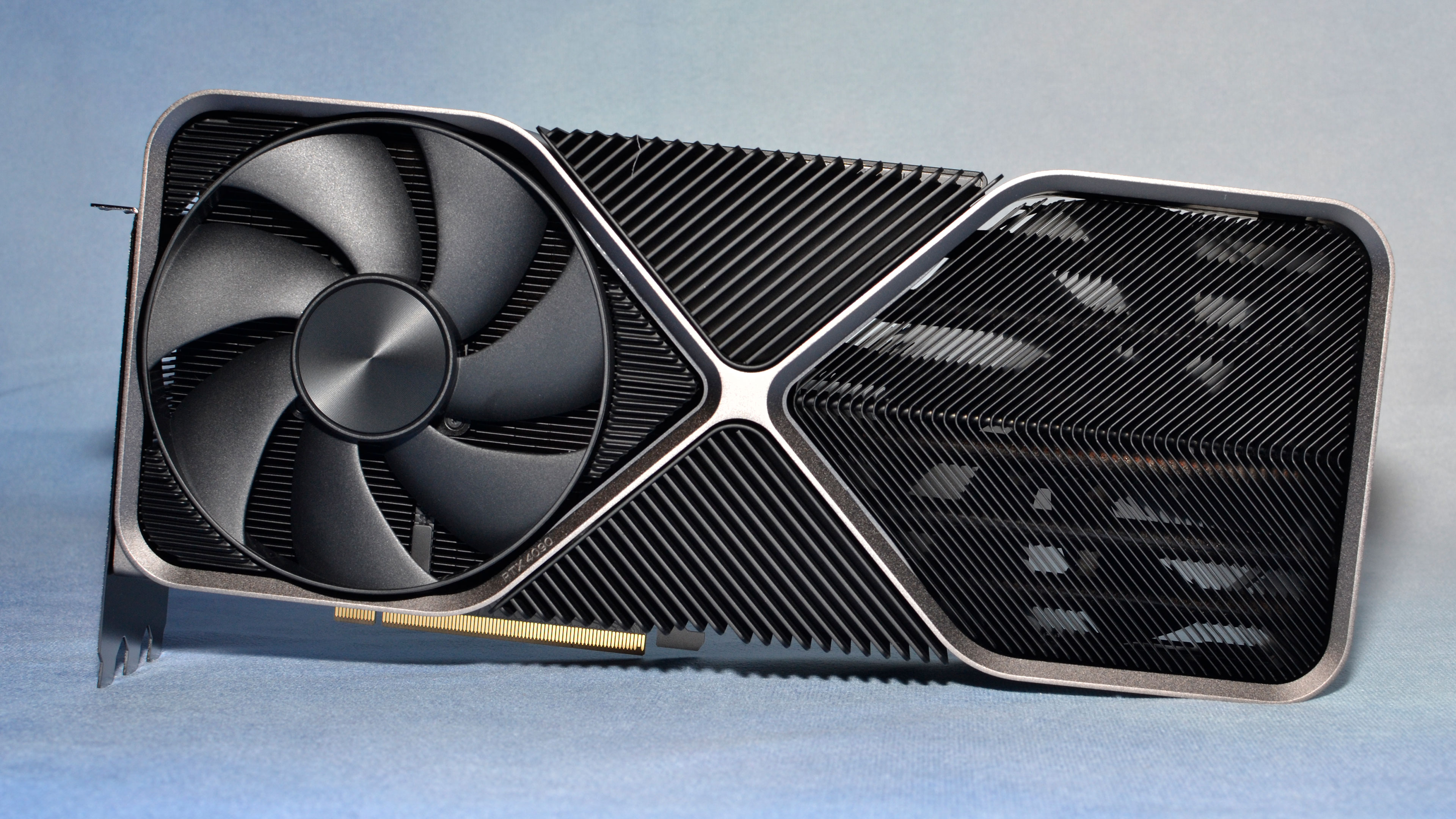

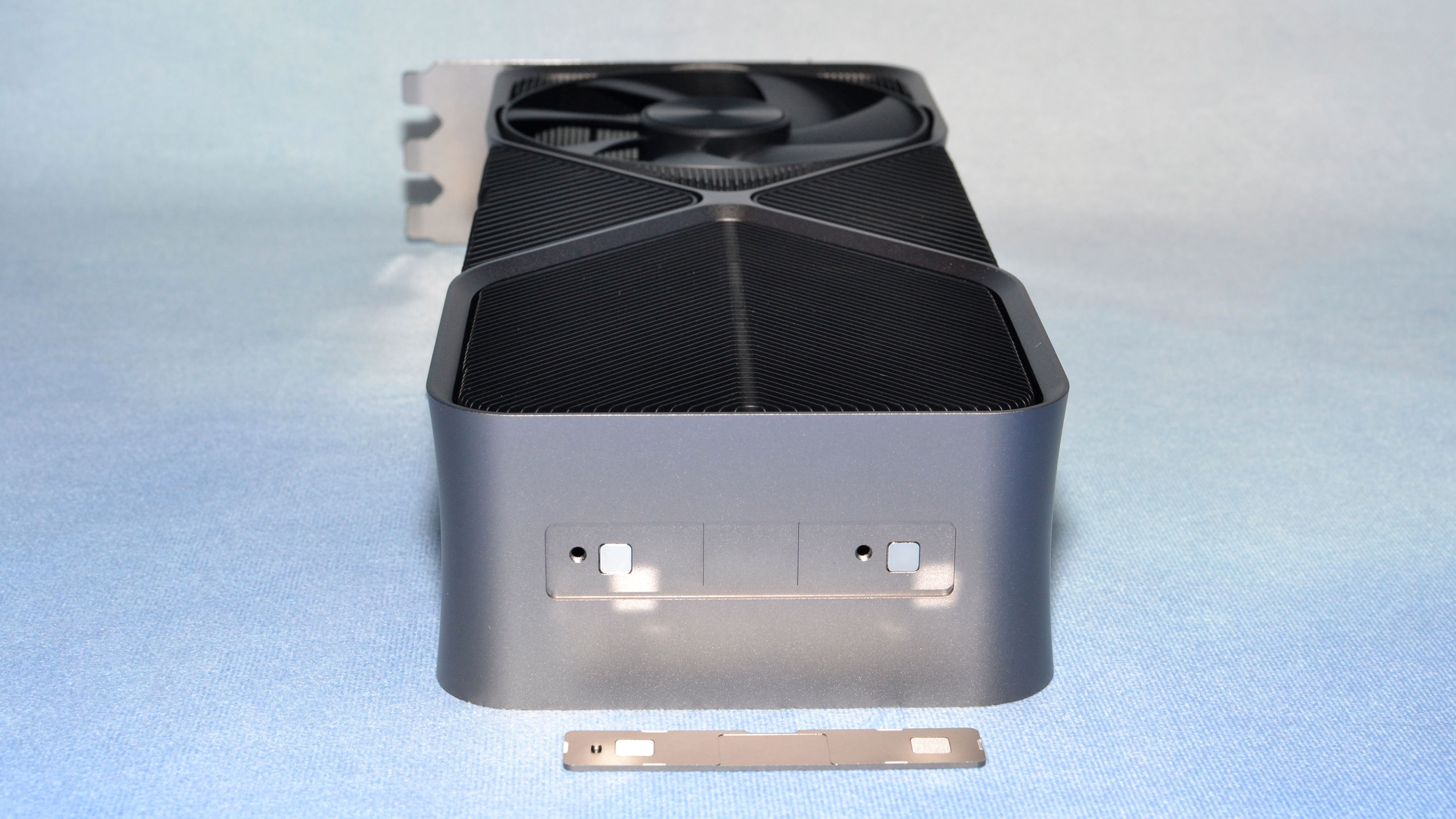

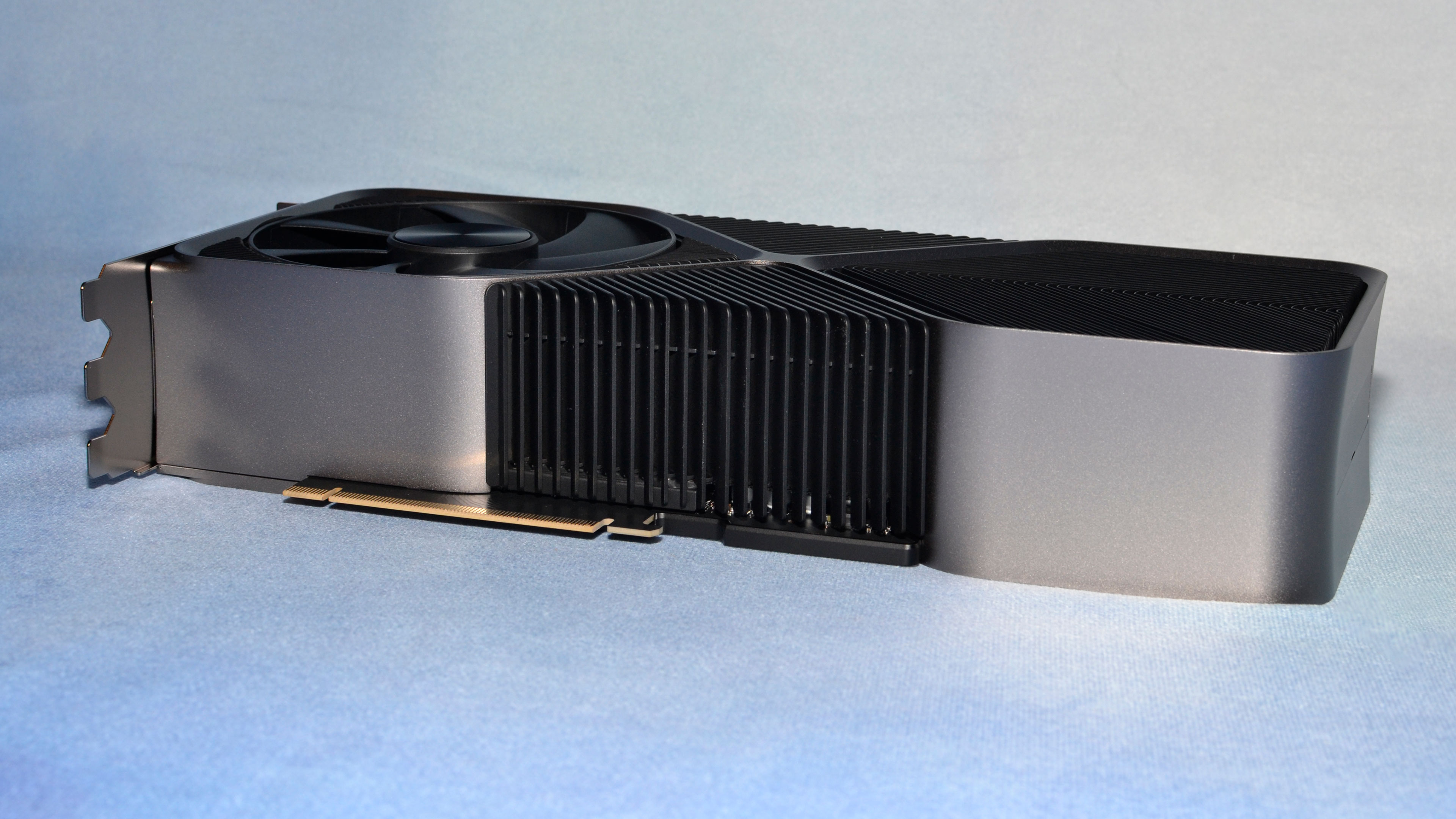

Nvidia's GeForce RTX 4090 Founders Edition superficially looks nearly the same as the previous RTX 3090 Founders Edition. However, there have been a few changes. For one, the new card is slightly thicker and not quite as long — it measures 304x137x61mm compared to the 3090's 313x138x57mm. Weight is virtually unchanged at 2186g, compared to 2189g on the previous generation.

Cosmetically, the new RTX 4090 has a slightly concave shape for the outer chassis frame. You can see the difference in the gallery, where we've put the 3090 and 4090 cards side by side. Otherwise, the changes are more functional than aesthetic.

There are still two fans: one pulls air through the heatsink at the rear of the card, and the other pushes air through the fins and out the exhaust port around the video connectors. The new fans are 115mm in diameter compared to 110mm on the previous generation, and they also have a slightly smaller central hub (38mm versus 42mm). Nvidia says the fans improve airflow by 20%, presumably at the same speed.
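As a rough cross-check on Nvidia's 20% figure, here's a small illustrative Python sketch that treats each fan's usable area as the ring between the hub and the blade tips, using only the diameters quoted above. That's a simplifying assumption of ours: real airflow also depends on blade shape, pitch, and fan speed, none of which are modeled here, and the function name is purely hypothetical.

```python
import math


def annulus_area_mm2(outer_d_mm: float, hub_d_mm: float) -> float:
    """Hypothetical helper: area of the ring between hub and blade tips, in mm^2.

    Assumes the swept area is a simple annulus; ignores blade geometry entirely.
    """
    return math.pi / 4 * (outer_d_mm ** 2 - hub_d_mm ** 2)


# Fan and hub diameters as quoted in the review text
rtx4090 = annulus_area_mm2(115, 38)
rtx3090 = annulus_area_mm2(110, 42)

# Relative increase in swept area from the 3090 fan to the 4090 fan
increase_pct = (rtx4090 / rtx3090 - 1) * 100

print(f"RTX 4090 fan swept area: {rtx4090:.0f} mm^2")
print(f"RTX 3090 fan swept area: {rtx3090:.0f} mm^2")
print(f"Swept-area increase: {increase_pct:.1f}%")
```

By this crude measure, the RTX 4090's fans sweep roughly 14% more area than the 3090's. If Nvidia's 20% airflow claim holds at equal speed, the remainder would presumably come from factors this sketch ignores, such as blade design.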

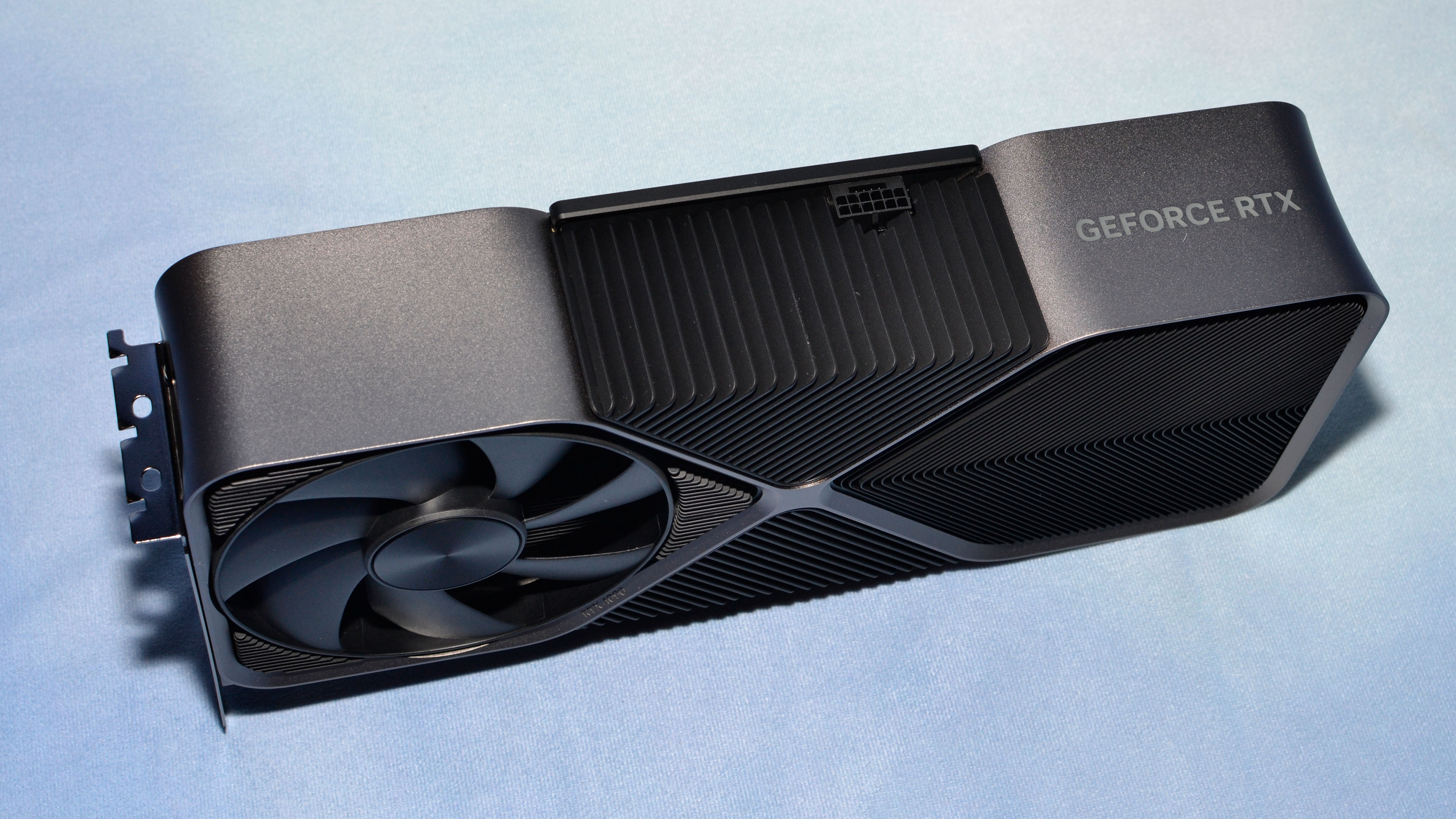

The power situation has also changed over the past two years. Nvidia pioneered the 12-pin power connector with the RTX 30-series, and it's pin-compatible with the new PCIe 5.0 12VHPWR 16-pin connector, just minus the four extra sense pins. The 4090's 16-pin connector also lies parallel to the PCB, whereas the 3090's 12-pin connector jutted out perpendicular to the board.

Finally, where the RTX 3090 had a dual 8-pin-to-12-pin adapter included in the box, the RTX 4090 has a quad 8-pin-to-16-pin adapter — and the same adapter seems to be a standard part of the kit for other RTX 4090 models from Nvidia's partners.

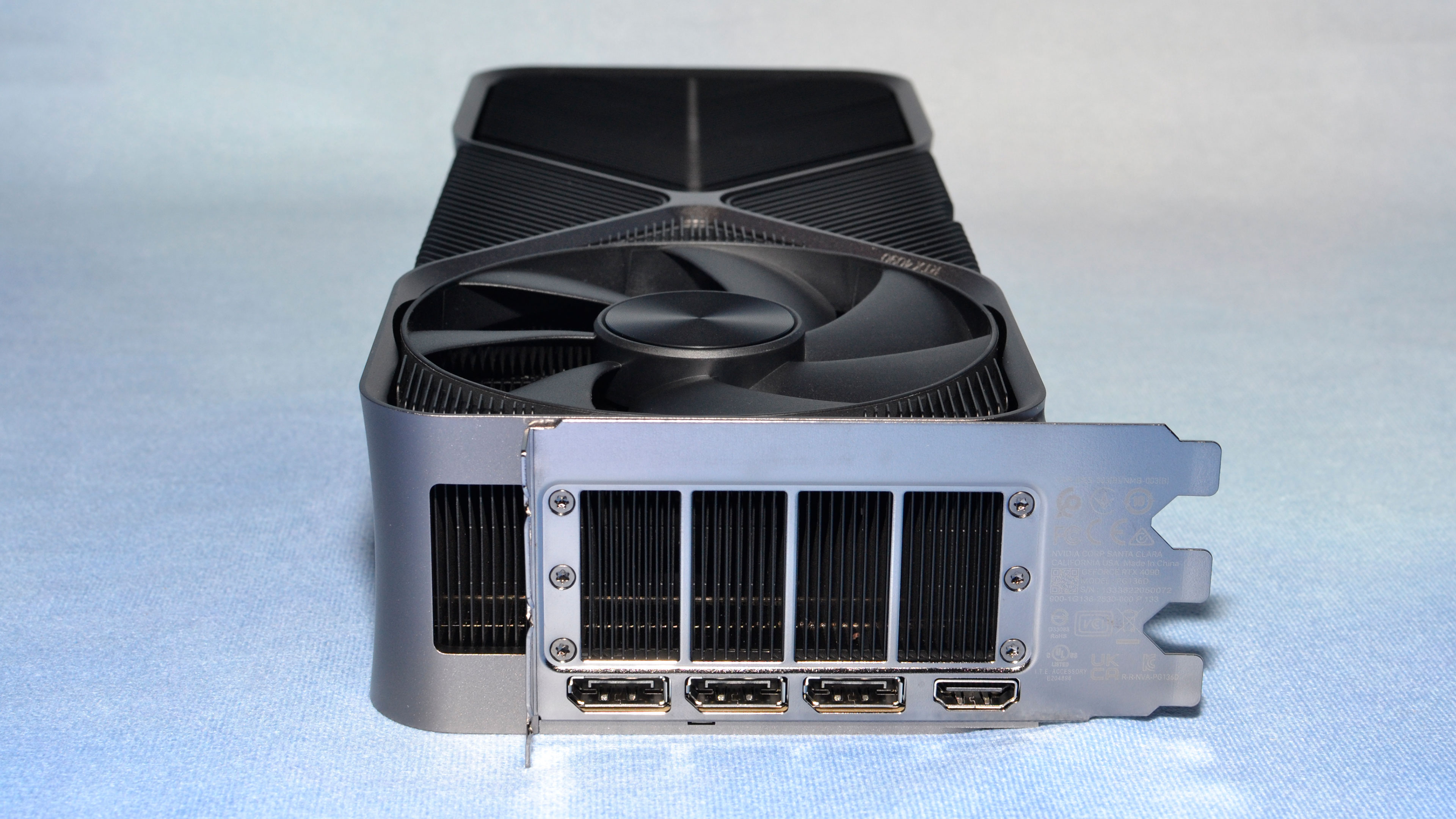

In terms of things that definitely haven't changed, the RTX 4090 still comes with three DisplayPort 1.4a outputs and a single HDMI 2.1 output. We still haven't seen any DisplayPort 2.0 monitors, which might be part of the reason Nvidia didn't upgrade the outputs, but it's still a bit odd given that the DP 2.0 standard was finalized in 2019.

Similarly, the PCIe x16 slot sticks with the PCIe 4.0 standard rather than upgrading to PCIe 5.0. That's probably not a big deal, especially since multi-GPU support in games has all but vanished. We'd argue that DLSS 3 and its Frame Generation feature make multi-GPU basically unnecessary as well. Along those lines, Nvidia has removed NVLink support from the AD102 GPU and the RTX 40-series cards. Yes, we can effectively declare that SLI is now dead, or at the very least lying dormant in a coma.

Test Setup for GeForce RTX 4090

We updated our GPU test PC and gaming suite in early 2022, and we continue to use the same hardware for the time being. AMD's Ryzen 9 7950X might be slightly faster, though we're using XMP for a modest boost to performance and we're not too concerned (yet) about a few percent higher frame rates. But if you're actually going to go out and buy an RTX 4090, you'll definitely want at least a Core i9-12900K like we're using, if not the upcoming Core i9-13900K or the aforementioned 7950X.

Our CPU sits in an MSI Pro Z690-A DDR4 WiFi motherboard, with DDR4-3600 memory — a nod to sensibility rather than outright maximum performance. We have a Crucial P5 Plus 2TB SSD, which ought to feel capacious but with the size of modern games it's beginning to seem just adequate. We also upgraded to Windows 11 and are now running the latest 22H2 version (with VBS and HVCI disabled) to ensure we get the most out of Alder Lake. You can see the rest of the hardware in the boxout.

Our gaming tests consist of a "standard" suite of eight games without ray tracing enabled (even if the game supports it), and a separate "ray tracing" suite of six games that all use multiple RT effects. We've tested the RTX 4090 at all of our normal settings, though the focus here will be squarely on 4K performance, with 1440p as a step down for those who might prefer one of the upcoming 1440p 360 Hz displays over a 4K 144 Hz panel.

We also tested at 1080p, but the results are almost entirely meaningless unless you're running a game that supports ray tracing. Most games were already largely CPU limited at 1080p with the RTX 3090 Ti and RX 6950 XT, and the RTX 4090 moves the needle so far to the right that there's less than a 5% difference in overall performance between 1080p ultra and 1440p ultra. We also enabled and tested the 4090 with DLSS 2 Quality mode in the ten games that support it.

Besides the gaming tests, we also have a collection of professional and content-creation benchmarks that can leverage the GPU. We're using SPECviewperf 2020 v3, Blender 3.3.0, OTOY OctaneBenchmark, and V-Ray Benchmark.

Finally, because the Ada Lovelace and RTX 40-series GPUs provide a lot of new features, we'll be looking at DLSS 3 in preview builds of several games provided by Nvidia. DLSS 3 only runs on the RTX 4090 for now, so we won't have direct comparisons to other GPUs. We'll also look at performance — and latency on Nvidia GPUs — across a collection of high-end graphics cards using the preview build of Cyberpunk 2077. (Nvidia GPUs are required for Reflex support, which in turn is required for Nvidia's FrameView utility to capture latency data.)


Jarred Walton is a senior editor at Tom's Hardware focusing on everything GPU. He has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.


-Fran-

Shouldn't this be up tomorrow?

EDIT: Nevermind. Looks like it was today! YAY.

Thanks for the review!

Regards.

brandonjclark

> colossusrage said: Can finally put 4K 120Hz displays to good use.

I'm still on a 3090, but on my 165hz 1440p display, so it maxes most things just fine. I think I'm going to wait for the 5k series GPU's. I know this is a major bump, but dang it's expensive! I simply can't afford to be making these kind of investments in depreciating assets for FUN.

JarredWaltonGPU

> -Fran- said: Shouldn't this be up tomorrow?
>
> EDIT: Nevermind. Looks like it was today! YAY.
>
> Thanks for the review!
>
> Regards.

Yeah, Nvidia almost always does major launches with Founders Edition reviews the day before launch, and partner card reviews the day of launch.

JarredWaltonGPU

> brandonjclark said: I'm still on a 3090, but on my 165hz 1440p display, so it maxes most things just fine. I think I'm going to wait for the 5k series GPU's. I know this is a major bump, but dang it's expensive! I simply can't afford to be making these kind of investments in depreciating assets for FUN.

You could still possibly get $800 for the 3090. Then it’s “only” $800 to upgrade! LOL. Of course if you sell on eBay it’s $800 - 15%.

kiniku

A review like this, comparing a 4090 to an expensive sports car we should be in awe and envy of, is a bit misleading. PC Gaming systems don't equate to racing on the track or even the freeway. But the way it's worded in this review if you don't buy this GPU, anything "less" is a compromise. That couldn't be further from the truth. People with "big pockets" aren't fools either, except for maybe the few readers here that have convinced themselves and posted they need one or spend everything they make on their gaming PC's. Most gamers don't want or need a 450 watt sucking, 3 slot, space heater to enjoy an immersive, solid 3D experience.

spongiemaster

> kiniku said: Most gamers don't want or need a 450 watt sucking, 3 slot, space heater to enjoy an immersive, solid 3D experience.

Congrats on stating the obvious. Most gamers have no need for a halo GPU that can be CPU limited sometimes even at 4k. A 50% performance improvement while using the same power as a 3090 Ti shows outstanding efficiency gains. Early reports are showing excellent undervolting results. 150W decrease with only a 5% loss to performance.

Any chance we could get some 720P benchmarks?

LastStanding

> the RTX 4090 still comes with three DisplayPort 1.4a outputs

> the PCIe x16 slot sticks with the PCIe 4.0 standard rather than upgrading to PCIe 5.0.

These missing components are selling points now, especially knowing NVIDIA's rival(s?) supports the updated ports, so, IMO, this should have been included as a "con" too.

Another thing, why would enthusiasts only value "average metrics" when "average" barely tells the complete results?! It doesn't show the programs stability, any frame-pacing/hitches issues, etc., so a VERY miss oversight here, IMO.

I also find weird is, the DLSS benchmarks. Why champion the increase for extra fps buuuut... never, EVER, no mention of the awareness of DLSS included awful sharpening-pass?! 😏 What the sense of having faster fps but the results show the imagery smeared, ghosting, and/or artefacts to hades? 🤔