PCIe standards group releases draft specification for PCIe 7.0 — full release expected in 2025

Final specifications to be revealed later in 2025

The Peripheral Component Interconnect Special Interest Group (PCI-SIG) has released version 0.7 of the PCIe 7.0 specification to its members for review, with the goal of releasing the final specification in 2025.

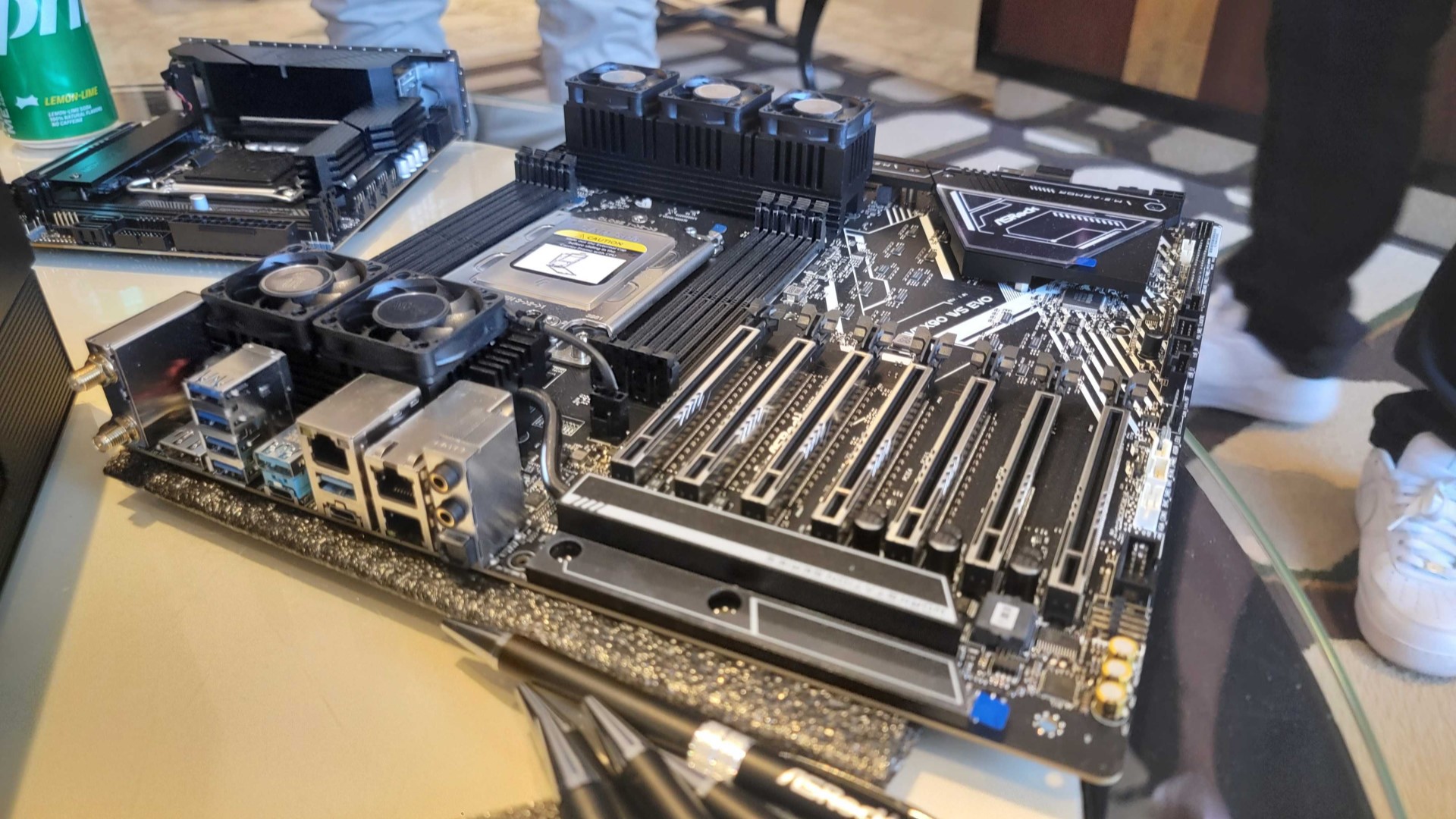

The PCI-SIG is the group that sets the standards for the interface that connects the motherboard to other components like the best GPUs, so it must ensure that these interconnects can keep up with hardware advancements and not serve as bottlenecks to future developments. PCI-SIG aims to finalize the specifications for PCIe 7.0 this year to keep up with its target cadence of new standards every three years.

PCIe 7.0 aims to double the data rate of PCIe 6.0 to a raw bit rate of 128GT/s per lane, which translates to a bidirectional transfer speed of 512GB/s in a 16-lane (x16) configuration. It will also keep the Pulse Amplitude Modulation with 4 levels (PAM4) signaling introduced in PCIe 6.0, which encodes two bits per symbol instead of one, doubling the data rate at a given symbol rate compared with the NRZ (non-return-to-zero) signaling used in PCIe 4.0 and PCIe 5.0.
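The headline figures follow from simple arithmetic. The sketch below uses hypothetical helpers, not anything published by PCI-SIG: it treats each GT/s as one bit per lane per second, ignores encoding and FLIT/protocol overhead, and derives the symbol rate by dividing the bit rate by the bits carried per symbol (one for NRZ, two for PAM4).

```python
# Raw per-lane data rates (GT/s) for recent PCIe generations.
RAW_GT_PER_S = {4: 16.0, 5: 32.0, 6: 64.0, 7: 128.0}

# NRZ carries 1 bit per symbol; PAM4 (PCIe 6.0 onward) carries 2.
BITS_PER_SYMBOL = {"NRZ": 1, "PAM4": 2}


def modulation(gen: int) -> str:
    """PCIe 6.0 introduced PAM4; earlier generations use NRZ."""
    return "PAM4" if gen >= 6 else "NRZ"


def symbol_rate_gbaud(gen: int) -> float:
    """Symbols per second on the wire: bit rate divided by bits per symbol."""
    return RAW_GT_PER_S[gen] / BITS_PER_SYMBOL[modulation(gen)]


def raw_bandwidth_gb_s(gen: int, lanes: int = 16, bidirectional: bool = True) -> float:
    """Raw link bandwidth in GB/s, ignoring encoding and protocol overhead."""
    per_direction = RAW_GT_PER_S[gen] * lanes / 8  # bits -> bytes
    # PCIe is full duplex, so both directions carry traffic at once.
    return per_direction * 2 if bidirectional else per_direction


for gen in (5, 6, 7):
    print(
        f"PCIe {gen}.0 x16: {raw_bandwidth_gb_s(gen):.0f} GB/s bidirectional, "
        f"{symbol_rate_gbaud(gen):.0f} GBaud {modulation(gen)}"
    )
```

This reproduces the 512GB/s x16 figure for PCIe 7.0 and shows why PAM4 matters: PCIe 6.0 doubles the bit rate of 5.0 at the same 32 GBaud symbol rate, and 7.0 then doubles the symbol rate to reach 128GT/s.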

The previous version of the PCIe 7.0 draft specifications, version 0.5, was released last April, and it didn’t seem to have received any major revisions in version 0.7. So, if the PCI-SIG members reach a general consensus with this latest release, the group will finalize and publish the standard this year.

However, even if that happens, don’t expect to see PCIe 7.0 SSDs and GPUs arrive on the market any time soon. The PCIe 5.0 specification was released in 2019, but it wasn’t until 2023 that the first PCIe 5.0 SSDs arrived in retail stores. In fact, PCIe 6.0, finalized in January 2022, was still being tested and verified for interoperability as of December 2023.

Aside from that, manufacturers also need to deal with various roadblocks when deploying these new technologies, especially as higher transfer speeds bring ever-increasing operating temperatures. Intel has run into this while working on a PCIe cooling driver for Linux, which reduces the SSD’s bandwidth if temperatures get too high. Because of this, we will likely see more and more internal components that require massive heat sinks and active cooling just so they can run at their advertised speeds.
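In the Linux thermal framework, a driver can expose a "cooling device" whose numbered states run from 0 (no throttling) upward as more cooling is needed. The sketch below is a hypothetical illustration of how such states could map to progressively slower PCIe link speeds; the 70°C starting point, the one-step-per-5°C rule, and the function names are assumptions, not the behavior of Intel's actual driver.

```python
# Hypothetical step-down policy; thresholds are illustrative assumptions,
# not values taken from Intel's Linux driver.
LINK_SPEEDS_GT_S = [32.0, 16.0, 8.0, 5.0, 2.5]  # PCIe 5.0 rate down to 1.0 rate


def cooling_state(temp_c: float, start_c: float = 70.0, step_c: float = 5.0) -> int:
    """Map a temperature to a cooling state (0 = no throttling).

    Each step_c degrees at or above start_c raises the state by one,
    capped at the slowest supported link speed.
    """
    if temp_c < start_c:
        return 0
    state = int((temp_c - start_c) // step_c) + 1
    return min(state, len(LINK_SPEEDS_GT_S) - 1)


def link_speed_gt_s(temp_c: float) -> float:
    """Link speed the policy would select at a given temperature."""
    return LINK_SPEEDS_GT_S[cooling_state(temp_c)]


for temp in (60.0, 72.0, 78.0, 95.0):
    print(f"{temp:.0f} C -> {link_speed_gt_s(temp)} GT/s")
```

Each lower link speed halves (or further cuts) the bandwidth available to the drive, which is the trade-off the article describes: the device stays within safe temperatures but no longer runs at its advertised speed.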


Jowi Morales is a tech enthusiast with years of experience working in the industry. He has been writing for several tech publications since 2021, with a focus on tech hardware and consumer electronics.

-

tommo1982 Instead of pushing more data faster, they could devise a method to do it smarter. Each PCIe version increases prices, and the benefit for regular users is very little.

das_stig PCIe and general motherboard interconnects are outdated.

We need a new system of multiple big bus routes, many buses, many lanes, all defined in the BIOS.

For a very basic example (I'm sure somebody more technical can flesh it out):

Say you're a server motherboard designed with 3 buses and 128 lanes:

Bus 1: 16 lanes

1. 1x lane for a simple GPU

2. 2x lanes for multiple USB 2.0 ports, keyboard/mouse, UPS connection and the like

3. 4x for 2x 1G LAN NICs + 1G remote NIC

4. 6x lanes for other stuff

5. 3x for motherboard interconnect

Bus 2: 64 lanes

1. 48x lanes for CPU-to-memory

2. 16x for motherboard interconnect

Bus 3: 48 lanes

1. 32x lanes for multiple storage controllers

2. 8x for other stuff

3. 16x for motherboard interconnect
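The proposal is essentially a lane budget, so it can be checked mechanically. The sketch below encodes the allocations as listed (the category names are shortened paraphrases, and the helper name is hypothetical) and compares each bus's items against its stated lane count. As written, Buses 1 and 2 balance and the three buses total 128 lanes, but Bus 3's items add up to 56 lanes against its 48-lane budget.

```python
# Lane allocations as listed in the comment: bus -> (lane budget, {use: lanes}).
PROPOSAL = {
    "Bus 1": (16, {"simple GPU": 1, "USB 2.0/keyboard/mouse/UPS": 2,
                   "LAN + remote NICs": 4, "other": 6, "interconnect": 3}),
    "Bus 2": (64, {"CPU-to-memory": 48, "interconnect": 16}),
    "Bus 3": (48, {"storage controllers": 32, "other": 8, "interconnect": 16}),
}


def budget_mismatches(buses):
    """Return {bus: (budget, allocated)} for every bus whose items don't sum to its budget."""
    mismatches = {}
    for name, (budget, allocations) in buses.items():
        allocated = sum(allocations.values())
        if allocated != budget:
            mismatches[name] = (budget, allocated)
    return mismatches


total_budget = sum(budget for budget, _ in PROPOSAL.values())
print(f"Total lanes budgeted: {total_budget}")
print(f"Mismatched buses: {budget_mismatches(PROPOSAL)}")
```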

thesyndrome I did a double take when I read the title.

AFAIK PCI-e 5 only recently had affordable NVMe drives put up for sale (last year's offerings seemed too pricey for anyone but the enterprise sector), and modern GPUs on PCI-e 5 still don't use anywhere close to the bandwidth it offers.

6 has existed since 2022, but not for consumers in any way at all, so saying that 7 will be ready in 2025 seems like pointless news for the vast majority of people if we won't get devices that can use it for another 3 years. Even then, device manufacturers seem to be struggling to make devices that can fully utilise the full speed; even the upcoming Nvidia 50-series is still on 5 rather than 6.

I'm probably wrong on this, but it feels like PCI-SIG is outpacing manufacturers so fast that the "every three years" plan is too quick for anyone to catch up. Even taking into consideration that they will design future products around the extra bandwidth, I get the impression that they will be "releasing" PCI-e 12 by the time we finally get devices that can take advantage of 7, and the gap is just going to keep getting larger.

I'm waiting for the day they announce they are ready to release PCI-e 50 by the time we get devices that support 9.

edzieba Remember that PCIe is not just "that thing I use for GPUs and sometimes storage drives".

PCIe is the underlying link for multiple other systems. This includes not only interconnect standards like UCIe and CXL, but also internal package and chip interconnects (e.g. AMD's Infinity Fabric, Intel's DMI, and the links between chipset cores and peripheral interfaces like USB and SATA controllers). All of these benefit from advancements in the PCIe bus, even if those advancements never filter down to consumer components.

DougMcC, replying to tommo1982:

I think what you're looking for here is known as 'why don't they use magic instead'. Of course, they are always looking for ways to improve the design; that's why they moved to PAM4.

They don't have to worry about regular users, either. If the newer designs offer no benefit, motherboard manufacturers are completely free to use older and cheaper designs, and if the price difference matters more to users than the modest increase in capability, those older and cheaper designs will sell.

DougMcC, replying to thesyndrome:

I think what you're missing is that downstream adoption times are relatively steady. It takes around 3 years from the release of a version until each stage of the downstream pipeline has adopted the technology. They can't NOT announce when the specification is ready, and they can't change how long it takes to build chips from the day the specification is complete.

For your benefit, I'd recommend tuning out the specification announcement and waiting for the first motherboard announcement. Then you'll never have this 'waiting' problem.

DougMcC, replying to das_stig:

This is what PCI is. You can partition it this way if you want. The fact that no one particularly picks your '3 bus' design is just an artifact of what they find to be the most efficient allocation.

Or to put it another way: since you can already do exactly this with PCI, the fact that no one has means that the cost/benefit of doing so doesn't look appealing. Basically, you are asking for more lanes than consumers currently get, and the manufacturers think you don't need them. If you want more lanes, you can get a workstation/server part, and you would be getting pretty much exactly what you're asking for. It just costs more. Why aren't you (and everyone) buying such parts?

OldAnalogWorld, replying to thesyndrome's remark that "modern GPUs on PCI-e 5 still don't even use close to the bandwidth that it offers":

Until now there have been no consumer dGPU chips for the 5.0 bus. Where did you get this from? Can you provide a link to such a dGPU chip in 2024?

If you want to understand why PCIe 5.0, and especially 6.0+, is pointless in consumer x86 chips (but not in server chips with HBM3+ memory and a 1024-bit memory bus), read the discussion, especially my comments, in this news topic.

In short:

A simple example is Zen4 HX. It is already more than 2 years old, but it is still the fastest mobile processor in the x86 camp (the 7945HX). It has 28 PCIe 5.0 lanes (24 of them free). The video card is most often connected via 4.0 x8, at best 4.0 x16, which is equivalent in bandwidth to only eight 5.0 lanes. M.2 5.0 x4 slots are pointless in laptops: the controllers draw too much power, and the NAND chips overheat because of the poor airflow around M.2 slots. This reflects fundamentally flawed architectural planning of laptop chassis and motherboards, and it is impossible to install effective large heatsinks (or doing so means more noise).

In fact, Zen4 HX uses less than half of its 24 available lanes in 5.0 mode. In addition, 28 lanes of 5.0 add up to more than 100GB/s if all are loaded at the same time (the limiting case), while the real memory bandwidth of this series is 60-65GB/s, about half of what a full load on all 28 lanes of 5.0 requires. Now imagine what happens with 28 lanes of 6.0, or even worse 7.0 (even setting aside the poor process technology and the heating of controllers).

To service such requests, you need a memory bus with a real bandwidth several times higher.
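The arithmetic behind this argument can be checked with a short sketch. It assumes the standard PCIe 5.0 rate of 32GT/s per lane with 128b/130b encoding, counts one direction only, and ignores packet/protocol overhead, so real throughput would be somewhat lower; the 60-65GB/s memory figures are the commenter's, and the helper name is hypothetical.

```python
GEN5_GT_PER_S = 32.0             # PCIe 5.0 per-lane rate
ENCODING_EFFICIENCY = 128 / 130  # 128b/130b line code used by PCIe 3.0-5.0


def gen5_bandwidth_gb_s(lanes: int) -> float:
    """Per-direction PCIe 5.0 bandwidth in GB/s after line-code overhead."""
    return GEN5_GT_PER_S * ENCODING_EFFICIENCY * lanes / 8


pcie_peak = gen5_bandwidth_gb_s(28)
for ram_gb_s in (60.0, 65.0):  # commenter's real-world memory bandwidth range
    ratio = pcie_peak / ram_gb_s
    print(f"28 lanes: {pcie_peak:.1f} GB/s vs RAM {ram_gb_s:.0f} GB/s -> {ratio:.2f}x")
```

This gives about 110GB/s per direction for 28 lanes, roughly 1.7 to 1.8 times the quoted memory bandwidth, which is the shortfall the comment rounds to a factor of two.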

And what do we see? Zen5 Halo is the first consumer x86 chip with a 256-bit memory controller, which really should have been in Zen4 HX 2 years ago. It would allow all 28 lanes of 5.0 to be serviced easily, with a reserve left for the OS and software, which also need to work.

To service 7.0, you need RAM with a bandwidth of 1TB/s, and only HBM3 controllers with a 1024-bit bus provide this.

Forget about 6.0 devices in PCs and laptops until RAM can easily deliver 300GB/s+ in mainstream systems, at least in the "gaming" segment. That is the level of a 512+ bit memory bus at current memory module frequencies.

5.0 is just coming into its own with the 256-bit Zen5 Halo controller. The 128-bit Arrow Lake HX is simply not suitable for this; its throughput is much lower than that of the AMD chip.
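Peak DRAM bandwidth is bus width in bytes times transfer rate, which is why the comment ties bandwidth so closely to bus width. In the hypothetical sketch below, the memory speeds paired with each width (DDR5-5200, LPDDR5X-8000, DDR5-5600) are illustrative assumptions rather than figures from the comment, and the results are theoretical peaks; sustained bandwidth in practice is lower.

```python
def peak_memory_bandwidth_gb_s(bus_width_bits: int, mega_transfers_per_s: int) -> float:
    """Theoretical peak DRAM bandwidth: bytes per transfer times transfers per second."""
    bytes_per_transfer = bus_width_bits / 8
    return bytes_per_transfer * mega_transfers_per_s / 1000  # MB/s -> GB/s


# Illustrative configurations; the transfer rates are assumptions.
CONFIGS = [
    ("128-bit DDR5-5200", 128, 5200),
    ("256-bit LPDDR5X-8000", 256, 8000),
    ("512-bit DDR5-5600", 512, 5600),
]

for label, width, rate in CONFIGS:
    print(f"{label}: {peak_memory_bandwidth_gb_s(width, rate):.1f} GB/s peak")
```

Under these assumptions a 512-bit bus lands above the 300GB/s mark the comment sets for PCIe 6.0 devices, while a 128-bit bus peaks well below 100GB/s.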

--

5.0 SSDs are not needed by anyone in the consumer segment at all, even in gaming: they run too hot and are still too slow, especially in random 4K IOPS (on the Apple M4 Pro, the gap versus RAM when exchanging random 4K blocks is roughly 100 times: 120MB/s for the SSD vs 12000MB/s for RAM).

Until SSD controllers (plus NAND chip consumption) drop below 5-6W in total, which would allow them to be easily installed in laptops without heatsinks or with very small ones, they do not matter.

In desktops, they are only interesting to those doing non-linear editing of RAW video and similar workloads. In games, 5.0 SSDs are practically useless.

For video cards, a wider bus matters if system RAM is roughly (empirically) 3 times faster than this channel. That is, if you want to upload data to a dGPU's VRAM at 128GB/s, i.e. PCIe 6.0 x16 (the 5090 has 1700+ GB/s of VRAM bandwidth), you will need at least a 350GB/s memory bus for general tasks so that everything runs smoothly and efficiently in parallel with the dGPU. For 7.0 (256GB/s at x16), you would need RAM bandwidth in the x86 controller closer to 1TB/s for everything to run smoothly in parallel.

This will not happen in the next 10 years in x86.
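The "3 times" figure in the comment above is the commenter's empirical rule of thumb, not an established specification. Encoded as hypothetical helpers (raw x16 rates, one direction, overhead ignored), it yields roughly 384GB/s for PCIe 6.0 and 768GB/s for PCIe 7.0, in the same range as the commenter's 350GB/s and "closer to 1TB/s" estimates.

```python
HEURISTIC_FACTOR = 3.0  # commenter's empirical RAM-to-link ratio (assumption)


def x16_link_gb_s(gt_per_s: float) -> float:
    """Raw per-direction bandwidth of an x16 link in GB/s."""
    return gt_per_s * 16 / 8


def ram_needed_gb_s(link_gb_s: float, factor: float = HEURISTIC_FACTOR) -> float:
    """System RAM bandwidth the rule of thumb calls for, given a GPU link speed."""
    return link_gb_s * factor


for gen, rate in (("6.0", 64.0), ("7.0", 128.0)):
    link = x16_link_gb_s(rate)
    print(f"PCIe {gen} x16: {link:.0f} GB/s link -> ~{ram_needed_gb_s(link):.0f} GB/s RAM")
```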

hotaru251 AFAIK nothing even saturates 5.0, let alone 6.0, and until they figure out ways to counter the thermals, what's the point of improving it even more?

Seems like a pointless advancement that's just costlier to implement and makes stuff more expensive for the end user.

OldAnalogWorld In servers it pays off. In the mass retail segment, of course not, at least until the coming "silicon dead end" is overcome: the curve of performance growth per 1W of consumption is rapidly flattening into a plateau when you look at it on an exponential scale over the past 60 years. We all want this overcome, but it is already a task for big, fundamental science and the coordinated work of many people. The capacity of the brain and its capacity for insight are finite, while information volumes keep growing and the internal associations that must be kept in mind to produce any new "insights" keep getting more complex (people increasingly wander among the figurative "3 pines" without seeing the forest behind them). Such tasks are increasingly "parallelized" across large teams, but the efficiency of joint work also falls quickly, despite efforts to automate it. That is why such desperate effort is being invested in neural networks, i.e. an attempt to create AI that would surpass these growing biochemical limitations of the brain and of biological civilization as a whole, and deliver new fundamental solutions and discoveries that give us a breakthrough to a new "energy" level of possibilities, including for individuals.

Quantum computers and photonics are examples of such topics, but so far these are mostly laboratory work and extremely narrow practical tasks in semi-laboratory setups: for example, attempts to break current asymmetric encryption using quantum methods (to freely read the correspondence of opponents and competitors), or some tasks involving the modeling of chaotic processes.

And household electronics are unlikely to offer anything amazing in the next 10-15 years. It is simply becoming impossible "in the pocket", given the emerging dead end in performance growth per 1W of consumption. Consumption can no longer be increased further, neither in PCs nor in laptops, and especially not in wearable gadgets. It has even reached the absurd point where fans have appeared in smartphones. Performance may increase by, say, 10 times (or even 20 times) over 10-15 years, but that is nothing compared to the growth of performance per 1W since the 1960s on an exponential scale. And of course there will be no powerful neural networks (really useful in everyday life) running "in your pocket" (locally, i.e. independently of anyone). The same kind of tectonic, large-scale shift in production technologies must occur as happened from the 1960s to today. That is already beyond the 21st century, and that is the best-case scenario for humanity. Maybe today's babies will live to see this new tectonic shift, as we did with the Internet and smartphones...