The Ryze Of EPYC: The AMD Supercomputer Slideshow

The Ryze Of EPYC

AMD's EPYC processors made their first halting steps into the server and supercomputer world with a limited debut at the Supercomputing 2017 conference. What a difference a year makes. We found more AMD EPYC systems at Supercomputing 2018 than we expected, and, most importantly, those systems come from some of the biggest names in the server and supercomputer space. AMD has also continued its rapid ascent in the supercomputing realm with its 2019 announcement that it will power the Frontier supercomputer, which is set to be the fastest on the planet with 1.5 exaflops of computing power. That's faster than the top 160 supercomputers, combined.

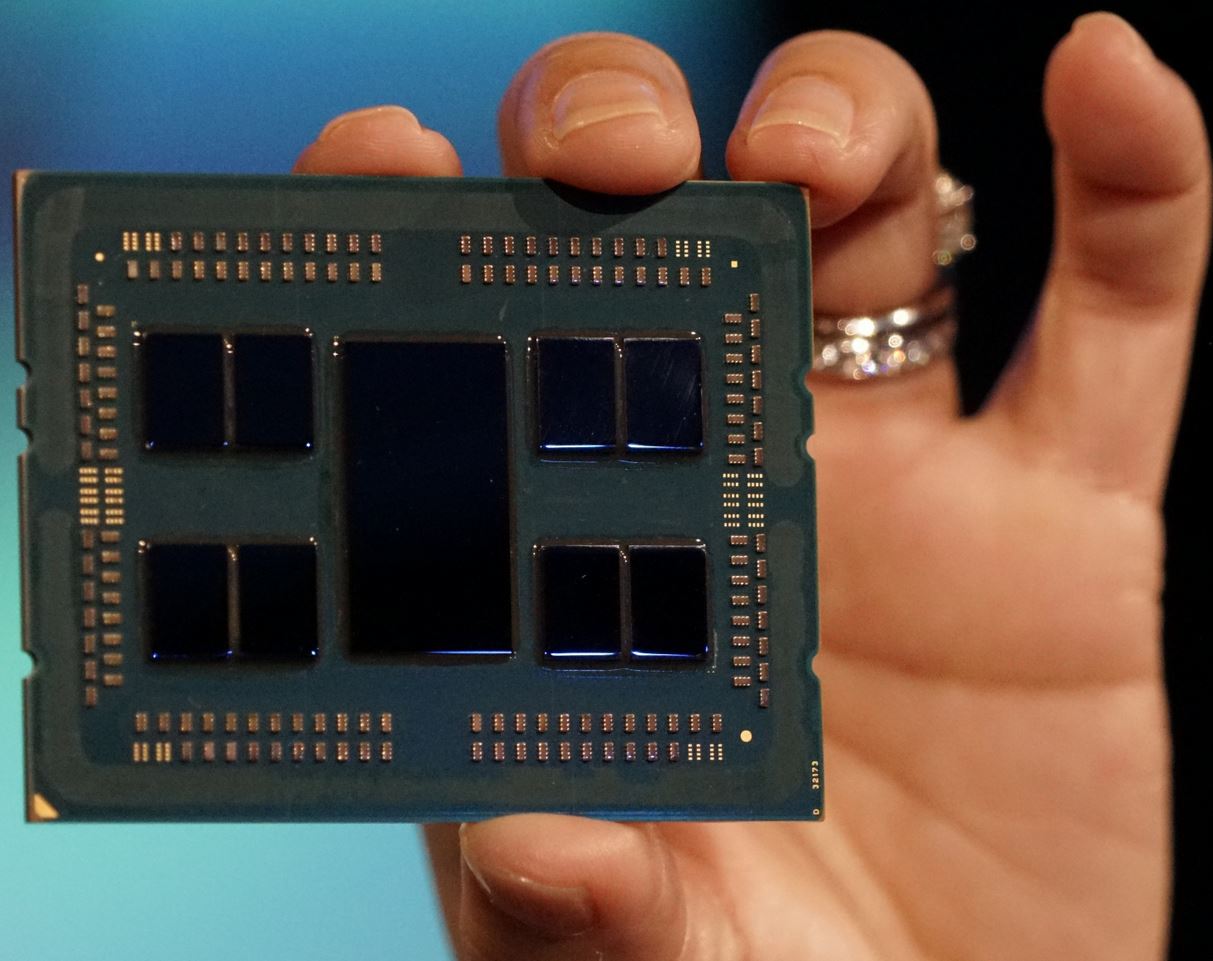

The innovative EPYC design features a multi-chip implementation that keeps costs in check, giving AMD firm footing against Intel in the pricing war. But the x86 instruction set is an even larger draw for potential customers. AMD's customers, both large and small, can execute the same x86 code on EPYC servers as they run on Intel's kit, albeit after the requisite qualification cycles. That matters because x86 applications comprise roughly 95% of the data center, as evidenced by Intel's dominant market share. This compatibility works to AMD's advantage and keeps promising ARM-based competitors on the sidelines.

The battle lines for x86 dominance are drawn, which must be concerning for Intel as it grapples with an ongoing shortage and its perennially delayed 10nm process, especially as AMD readies its 7nm EPYC Rome processors for launch next year. But EPYC's value proposition stretches beyond price and the advantages of the x86 instruction set. Hefty memory capacity, throughput, and a generous slathering of PCIe lanes make these processors well suited for many key segments of both the server and supercomputer world. Let's take a look.

Perlmutter: The Milan-Powered Shasta Supercomputer

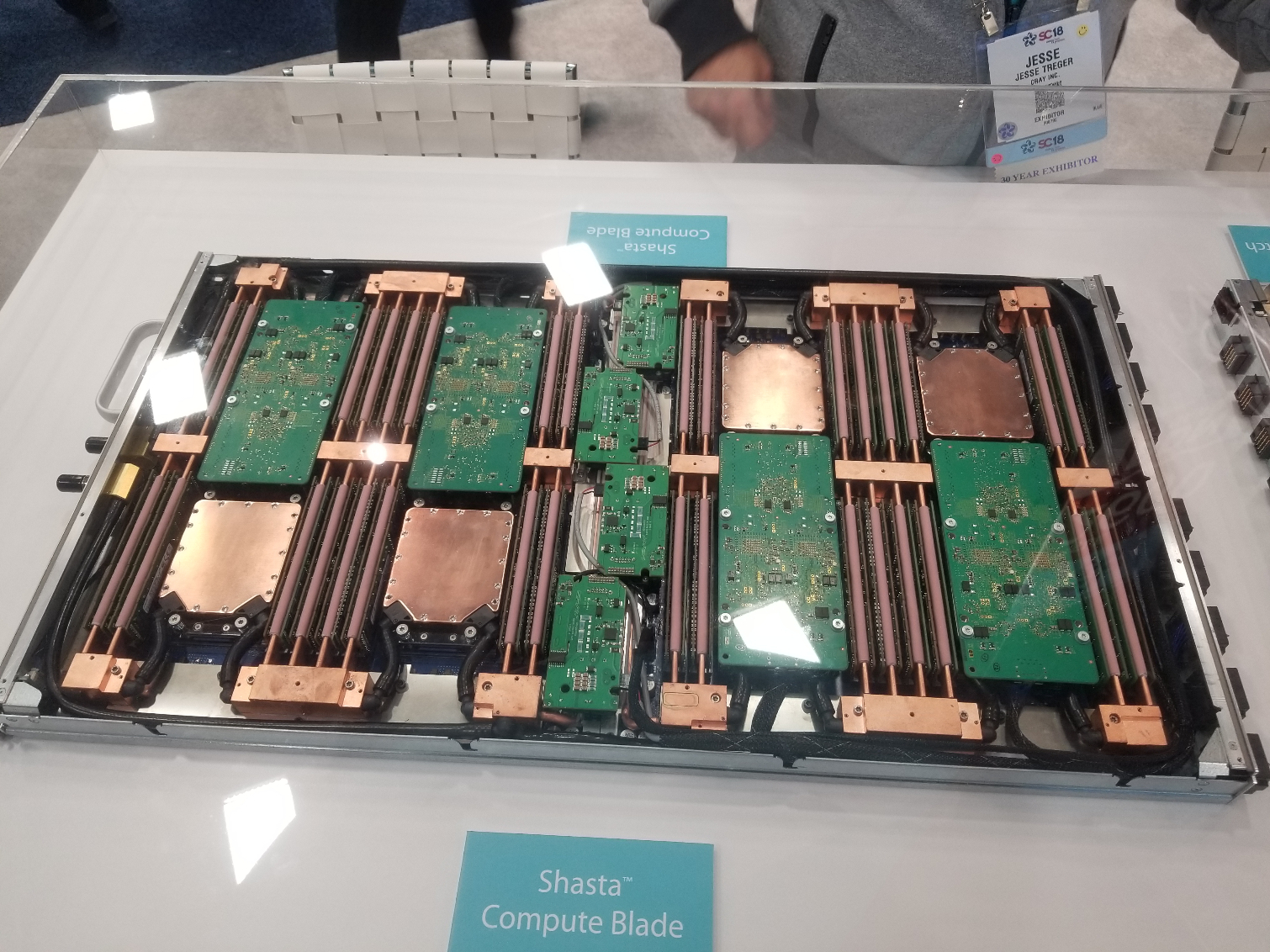

It didn't take long for us to home in on Cray's Shasta supercomputer, which we've covered in a separate deep dive. This new system comes packing AMD's unreleased EPYC Milan processors, the company's next-next-generation data center chips. The new supercomputer will also use Nvidia's "Volta-Next" GPUs, with the two combining to make a pre-exascale machine that will be one of the fastest supercomputers in the world.

The Department of Energy's forthcoming Perlmutter supercomputer will be built with a mixture of CPU and GPU nodes; the CPU node is pictured here. This water-cooled chassis houses eight AMD Milan CPUs. Four copper waterblocks cover four of the Milan processors, while the other four processors are mounted inverted on the PCBs between the DIMM slots. This system is designed for the ultimate in performance density, so even the DIMMs are water-cooled.

But impressive specs aside, mindshare is the real win for AMD here. Data center customers buy into long-term roadmaps rather than a single generation of products. It's a telling sign that both Cray and the Department of Energy have bought into the third generation of EPYC, particularly when the second-gen Rome processors have yet to ship in volume. Before the first-gen Naples processors debuted last year, we would never have expected this type of buy-in from several of the leaders in supercomputing. This achievement is more than just a bullet point in AMD's marketing materials: the EPYC family of processors, especially the next-gen Rome and Milan models, poses a serious threat to Intel's data center dominance.

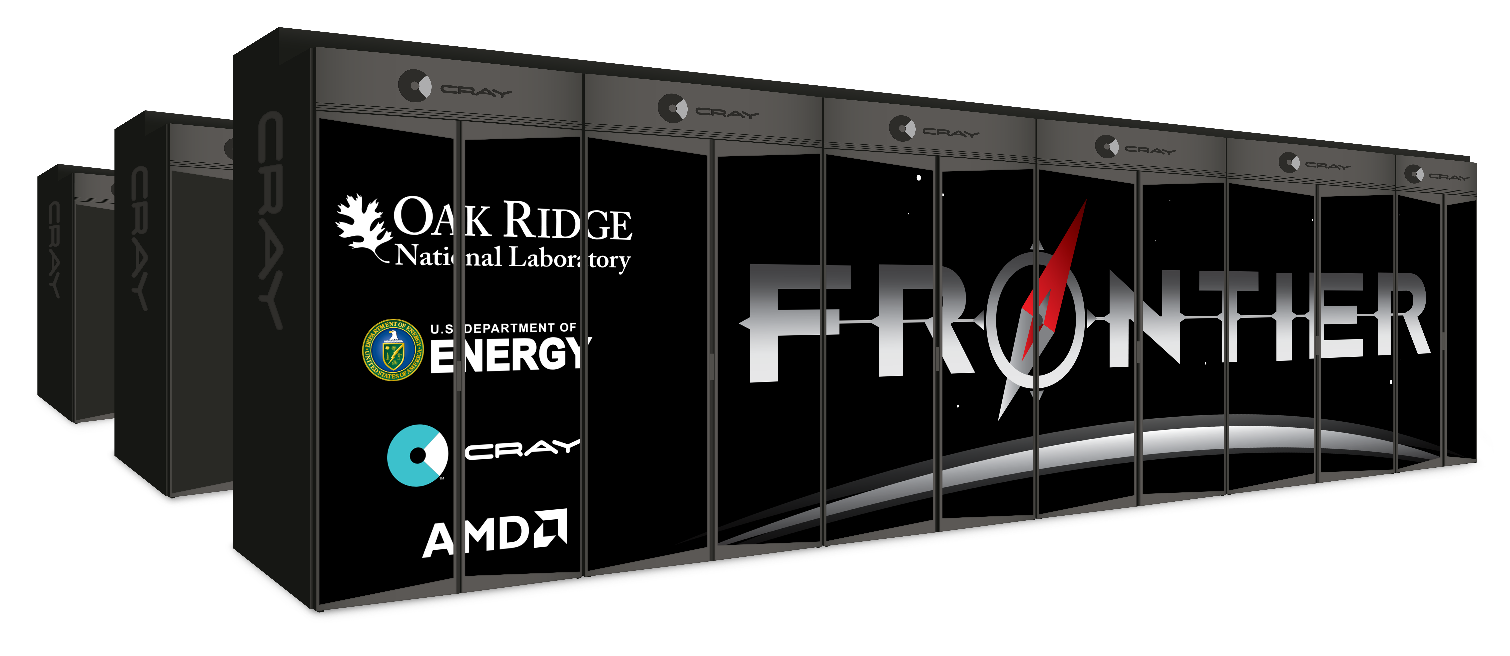

EPYC and Radeon in Frontier, the World's Fastest Supercomputer

Speaking of Shasta, AMD and Cray announced that they had been selected to power Frontier, a Shasta-based system that is slated to become the world's fastest supercomputer when it comes online in 2021. Frontier will crank out 1.5 exaflops of compute power, which is faster than the current top 160 supercomputers, combined. That compute power comes courtesy of next-generation variants of AMD's EPYC processors and Radeon Instinct GPUs.

AMD says it optimized its custom EPYC processor with support for new instructions that boost performance in AI and supercomputing workloads. "It is a future version of our Zen architecture. So think of it as beyond... what we put into Rome," said AMD CEO Lisa Su. That could indicate that AMD will use a custom variant of its next-next-gen EPYC Milan processors for the task, but that remains unconfirmed.

The CPUs will be combined with high-performance Radeon GPU accelerators that have extensive mixed-precision compute capabilities and high bandwidth memory (HBM). AMD specified that the GPU will eventually come to market, but didn't elaborate on whether the custom-designed EPYC processor would follow. AMD will connect each EPYC CPU to four Radeon Instinct GPUs via a custom high-bandwidth, low-latency, coherent Infinity Fabric link. This is an evolution of AMD's foundational Infinity Fabric technology, which the company currently uses to tie together CPU and GPU dies inside its processors, but AMD has now extended it to operate over the PCIe bus.

To put Frontier's performance into perspective, 1.5 exaflops means the supercomputer will be able to crunch up to 1.5 quintillion (1.5 × 10^18) operations per second. Cray also touts its networking solution as offering 24,000,000 times the bandwidth of the fastest home internet connection, which it equates to downloading 100,000 full HD movies in one second.
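For readers who want to check the scale, here's a quick Python sketch of the unit arithmetic. It converts exaflops to operations per second and estimates the aggregate bandwidth implied by the movie comparison. Cray didn't specify a movie size, so the 4GB-per-movie figure below is purely an assumption for illustration; change it and the bandwidth estimate changes proportionally.

```python
# Unit arithmetic behind the Frontier figures quoted above.
# ASSUMPTION: the 4 GB full HD movie size is illustrative only; Cray did not give one.

EXA = 10**18  # 1 exa = 10^18, i.e., one quintillion (short scale)


def exaflops_to_ops_per_second(exaflops: float) -> float:
    """Convert exaflops to floating-point operations per second."""
    return exaflops * EXA


def implied_bandwidth_bits(movies_per_second: int, movie_size_gb: float) -> float:
    """Aggregate bits per second needed to move N movies of a given size each second."""
    bytes_per_second = movies_per_second * movie_size_gb * 10**9  # decimal GB
    return bytes_per_second * 8


ops = exaflops_to_ops_per_second(1.5)
print(f"{ops:.2e} operations per second")  # 1.50e+18 operations per second

# Hypothetical 4 GB per movie (assumption, not from Cray)
bw = implied_bandwidth_bits(100_000, 4.0)
print(f"{bw / 1e15:.1f} Pb/s aggregate")  # 3.2 Pb/s aggregate
```

Because the movie size is a free parameter, treat the bandwidth line as a way to sanity-check the comparison rather than as a measured Cray specification.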

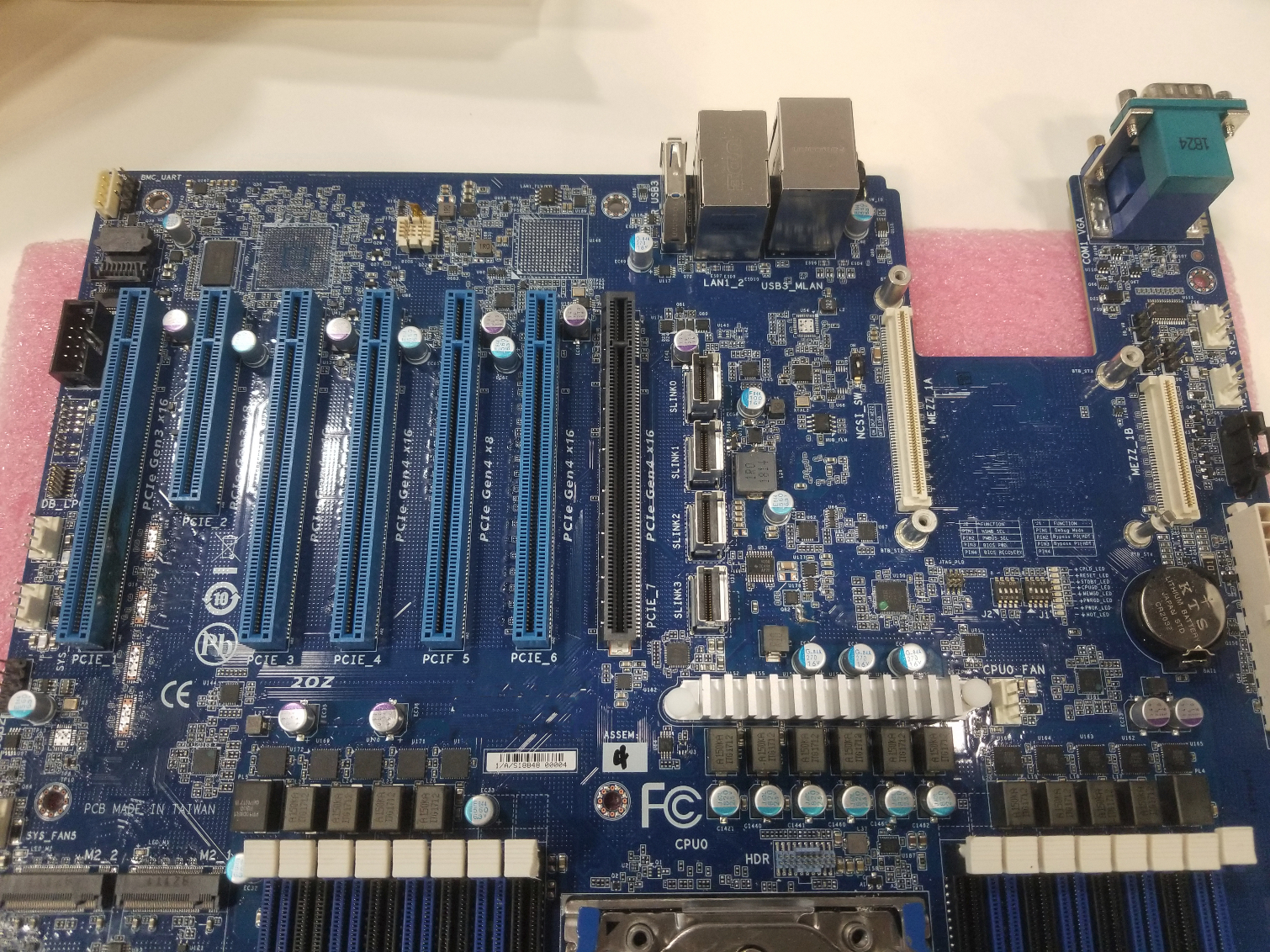

All Roads Lead To Rome

AMD's 7nm Rome processors are ushering in a new wave of multi-chip architectures for data center processors, but AMD has remained coy about exactly when the 64-core, 128-thread processors will come to market, saying only that they will arrive in 2019. According to a vendor that displayed a new motherboard designed to support the Rome processors and PCIe 4.0, the first wave of fully compatible Rome motherboards will arrive in Q3 2019, likely signaling the beginning of shipments for the Rome chips.

The Rome processors expose 128 PCIe lanes. This motherboard distributes the lanes to four x16 slots and two x8 slots. The board also supplies two PCIe 3.0 x16 slots and two M.2 SSD sockets at the lower left. That type of connectivity is the main attraction of AMD's current-gen Naples processors, but adding more cores to the equation, along with the lower power and cost per core that come with the 7nm process, will create an even more competitive platform next year.
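To see how that connectivity adds up, the sketch below tallies the lane budget for the board described above. It's a back-of-the-envelope model, not the vendor's block diagram: it assumes every slot and socket is wired directly to the CPU's 128 lanes and that each M.2 socket uses four lanes, neither of which the vendor confirmed.

```python
# Back-of-the-envelope PCIe lane budget for the Rome board described above.
# ASSUMPTIONS: all slots/sockets draw from the CPU's 128-lane pool, and each
# M.2 socket is wired x4 (the article does not state M.2 widths).

CPU_LANES = 128

# (description, device count, lanes per device)
board_layout = [
    ("x16 slot", 4, 16),
    ("x8 slot", 2, 8),
    ("PCIe 3.0 x16 slot", 2, 16),
    ("M.2 socket (assumed x4)", 2, 4),
]


def lanes_used(layout):
    """Sum the lanes consumed across all device groups."""
    return sum(count * width for _, count, width in layout)


used = lanes_used(board_layout)
print(f"{used} of {CPU_LANES} lanes allocated, {CPU_LANES - used} spare")
# 120 of 128 lanes allocated, 8 spare
```

Under those assumptions, the listed slots and sockets consume 120 of the 128 lanes; the article doesn't say where any remaining lanes go.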

EPYC Reverberations in China

The waves of EPYC have come crashing down on Chinese shores. Chinese chip producer Hygon participates in a joint venture with AMD that allows it to license the Zen microarchitecture to create 'Dhyana' data center processors that are essentially carbon copies of AMD's EPYC Naples chips. Those chips pose a big threat to Intel in the fast-growing Chinese market, especially given China's existing protectionist measures that promote indigenous products. Whip in the boiling trade war, and you have a recipe for explosive growth in the Chinese market, particularly if the joint venture begins to spin out 7nm Rome processors next year.

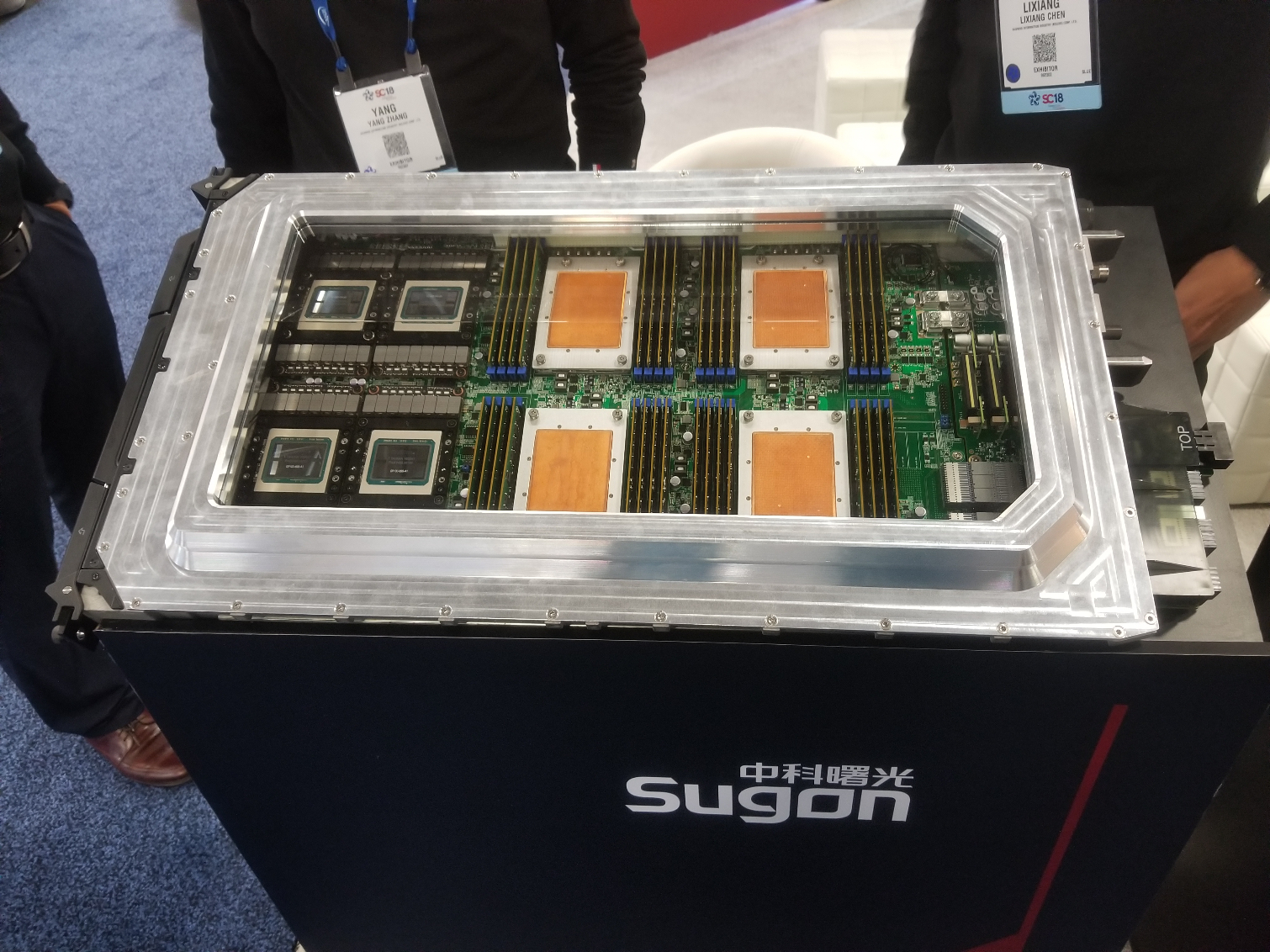

Sugon had a full-scale Nebula server rack on display that immersion-cools individual compute trays, each bristling with four Nvidia Teslas and four Dhyana CPUs. Immersion cooling provides unparalleled performance density, but it requires specialized infrastructure, in this case phase-change cooling equipment. Sugon's solution hosts 42 compute blades and comes with a secondary cabinet that houses the phase-change cooling equipment. As you can see in the video above, the sleds come with a quick-release connection that allows for nearly hot-pluggable nodes without dealing with complicated tubing connections.

Dell/EMC Buys In

Dell and EMC recently joined forces to become the largest seller of enterprise hardware on the planet, making AMD's OEM win with the behemoth all the more important. Several ODMs have also developed EPYC servers, but OEM systems help broaden the platform's appeal to a larger range of enterprise customers. Dell's servers come with all the hallmarks of a reliable enterprise-class system, like extensive support and maintenance contracts that make the jump to an EPYC system a breeze for new customers.

Dell/EMC launched its EPYC server line with three models. The Dell EMC PowerEdge R7425 is the dual-socket 2U workhorse of the group. This server supports up to 4TB of memory and up to 64 cores with the high-end EPYC processors. That's a good fit for virtualized environments and analytics workloads, among others.
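The R7425's ceilings fall straight out of first-gen EPYC's per-socket limits: eight DDR4 channels per socket, two DIMMs per channel, and up to 32 cores. The sketch below multiplies them out; the 128GB DIMM size is our assumption about how the 4TB maximum is reached, not a Dell-published configuration.

```python
# Where a dual-socket Naples server's 4TB / 64-core ceiling comes from.
# Per-socket limits are first-gen EPYC (Naples) platform figures.
# ASSUMPTION: 128 GB LRDIMMs populate every slot to hit the maximum.

CHANNELS_PER_SOCKET = 8     # DDR4 memory channels per Naples socket
DIMMS_PER_CHANNEL = 2       # two DIMMs per channel
DIMM_SIZE_GB = 128          # assumed DIMM size
MAX_CORES_PER_SOCKET = 32   # top-end Naples part


def server_max(sockets: int):
    """Return (maximum memory in TB, total cores) for a given socket count."""
    mem_gb = sockets * CHANNELS_PER_SOCKET * DIMMS_PER_CHANNEL * DIMM_SIZE_GB
    return mem_gb / 1024, sockets * MAX_CORES_PER_SOCKET


print(server_max(2))  # (4.0, 64)
```

The same math explains why single-socket EPYC boards top out at 2TB: halve the socket count and both figures halve.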

HPE's Got Game, Too

When HPE first announced its new ProLiant DL385 Gen10, it revealed that it had set floating-point world records in SPECrate2017_fp_base and SPECfp_rate2006 with the EPYC processors.

HPE now has its Apollo 35 system available. Each 2U chassis houses four dual-socket sleds (pictured above), and each sled carries two processors and 2TB of memory, providing excellent performance density.
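To put a number on that density, here's a rough per-chassis and per-rack tally. The 32-core processors and a 42U rack packed solely with 2U chassis are assumptions for illustration; a real rack also needs room for switches and power distribution, so treat the rack figures as upper bounds.

```python
# Rough density tally for a 2U, four-sled, dual-socket chassis like the Apollo 35.
# ASSUMPTIONS: 32-core EPYC parts in every socket, and a 42U rack filled
# entirely with 2U chassis (no space reserved for switches or PDUs).

SLEDS_PER_CHASSIS = 4
SOCKETS_PER_SLED = 2
MEMORY_PER_SLED_TB = 2
CHASSIS_HEIGHT_U = 2
CORES_PER_SOCKET = 32  # assumption: top-end Naples
RACK_U = 42            # assumption: standard full-height rack


def chassis_totals():
    """Sockets, cores, and memory (TB) in one 2U chassis."""
    sockets = SLEDS_PER_CHASSIS * SOCKETS_PER_SLED
    return {
        "sockets": sockets,
        "cores": sockets * CORES_PER_SOCKET,
        "memory_tb": SLEDS_PER_CHASSIS * MEMORY_PER_SLED_TB,
    }


def rack_totals():
    """Scale the chassis totals to a rack filled with 2U chassis."""
    chassis = RACK_U // CHASSIS_HEIGHT_U
    return {k: v * chassis for k, v in chassis_totals().items()}


print(chassis_totals())  # {'sockets': 8, 'cores': 256, 'memory_tb': 8}
print(rack_totals())     # {'sockets': 168, 'cores': 5376, 'memory_tb': 168}
```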

AMD's return to HPE's ProLiant lineup is significant. AMD hadn't been present in that lineup for several years, so it had been an all-Intel affair. Now that AMD has secured a beachhead with the EPYC Naples processors, we expect to see new models with Rome processors soon.

Don't Forget Supermicro's Servers

Due to extensive collaboration between the two companies, Supermicro is often thought of as an extension of Intel. But the company is also getting into the EPYC act with a range of servers and motherboards that spans both single- and dual-socket systems.

The AS-2123BT-HNC0R (that name just rolls off the tongue) server is a four-node design. Each node features two EPYC 7000-series processors of your choice and supports up to 2TB of ECC LRDIMMs at DDR4-2666. Storage accommodations include six SAS3 ports and four NVMe sockets.

...But It Also Has Motherboards

Unlike big server vendors such as Dell/EMC and HPE, Supermicro also sells motherboards separately. Here we see both dual-socket and single-socket models on display. This SP3-socket motherboard supports two EPYC 7000-series processors and up to 2TB of ECC memory running at DDR4-2666. Two integrated 10GbE LAN ports supply networking, while the ten SATA 6Gb/s ports are complemented by two SATA DOM connectors and an M.2 port.

Gigabyte Weighs In

Gigabyte was one of the early adopters of the EPYC platform. The Gigabyte G291-Z20 is all about GPU compute, a common use case for EPYC servers due to their support for an industry-leading 128 PCIe lanes. This system supports two EPYC 7000-series processors that host up to eight double-slot GPUs. Dual redundant 2200W 80 PLUS Platinum power supplies provide the juice for a wide range of machine learning workloads. Gigabyte supports Nvidia's Tesla V100s, but it has also developed its own cooler to create passively cooled Radeon Instinct MI25 GPUs.

Which brings us to another of AMD's advantages: as the only company that produces both x86 host processors and discrete GPUs, AMD can create tightly coupled custom products. We'll see this initiative mature as the company rolls out support for communication between its CPUs and GPUs across its Infinity Fabric (XGMI) interface. That capability is widely expected to arrive with the Rome processors next year.

ASRock Rack Stacks GPUs

ASRock Rack had its AMD EPYC system on display with four Radeon Instinct GPUs. This compact single-socket solution is an example of how motherboard vendors can use AMD's copious PCIe lane allotments to provide more native connectivity inside a single EPYC server than you would get from a dual-socket Intel platform.

AMD holds the unequivocal lead in PCIe connectivity for a single-socket server with 128 lanes. That far exceeds Purley's single-socket maximum of 48+20 (CPU+chipset) lanes. Intel admits it has a "lane deficiency," but claims this won't impact the average consumer. AMD naturally thinks differently, contending that it has a big advantage for customers with heavy I/O needs.
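Here's a simple way to see what that lane gap means for accelerator-heavy builds like ASRock Rack's. The sketch counts how many x16 devices each single-socket platform could attach to CPU lanes alone, with no PCIe switches. It assumes GPUs hang off CPU lanes rather than the Purley chipset, and it ignores lanes a real server would reserve for networking and storage, so the results are upper bounds.

```python
# How many x16 accelerators each single-socket platform can attach directly.
# ASSUMPTIONS: accelerators use CPU lanes only (not chipset lanes), and no
# lanes are set aside for NICs or storage, so these are upper bounds.

PLATFORMS = {
    # name: (CPU lanes, chipset lanes), figures as quoted in the article
    "AMD EPYC (1P)": (128, 0),      # SoC design, no separate chipset
    "Intel Purley (1P)": (48, 20),
}


def total_lanes(cpu: int, chipset: int) -> int:
    """All PCIe lanes on the platform, CPU plus chipset."""
    return cpu + chipset


def native_x16_devices(cpu_lanes: int) -> int:
    """x16 devices that fit on CPU lanes alone, with no PCIe switch."""
    return cpu_lanes // 16


for name, (cpu, chipset) in PLATFORMS.items():
    print(f"{name}: {total_lanes(cpu, chipset)} lanes total, "
          f"{native_x16_devices(cpu)} x16 devices on CPU lanes")
# AMD EPYC (1P): 128 lanes total, 8 x16 devices on CPU lanes
# Intel Purley (1P): 68 lanes total, 3 x16 devices on CPU lanes
```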

Paul Alcorn is the Editor-in-Chief for Tom's Hardware US. He also writes news and reviews on CPUs, storage, and enterprise hardware.