AMD Goads Nvidia Over Stingy VRAM Ahead of RTX 4070 Launch

Games are using more VRAM, and AMD suggests Nvidia isn't offering enough.

While Nvidia's GeForce RTX 4070 hasn't launched yet, there have been plenty of leaks about it, including the probability that it will boast 12GB of GDDR6X VRAM. But with some of the newest games chewing through memory (especially at 4K), AMD is taking the opportunity ahead of the 4070's release to poke at Nvidia and highlight the more generous amounts of VRAM it offers.

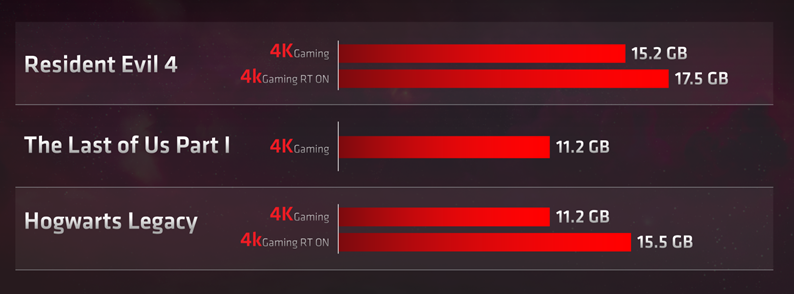

In a blog post titled "Are YOU an Enthusiast?" (caps theirs), AMD product marketing manager Matthew Hummel highlights the extreme amounts of VRAM used at top specs at 4K for games like the Resident Evil 4 remake, the recent (terrible) port of The Last of Us Part I, and Hogwarts Legacy. The short version is that AMD says that to play each of these games at 1080p, it recommends its 6600-series with 8GB of VRAM; at 1440p, its 6700-series with 12GB of VRAM; and at 4K, the 16GB 6800/69xx-series. (That leaves out its RX 7900 XT with 20GB of VRAM and RX 7900 XTX with 24GB.)

The not-so-subtle subtext? You need more than 12GB of VRAM to really game at 4K, especially with ray tracing. Perhaps even less subtle? The number of times AMD mentions that it offers GPUs with 16GB of VRAM starting at $499 (three, if you weren't counting).

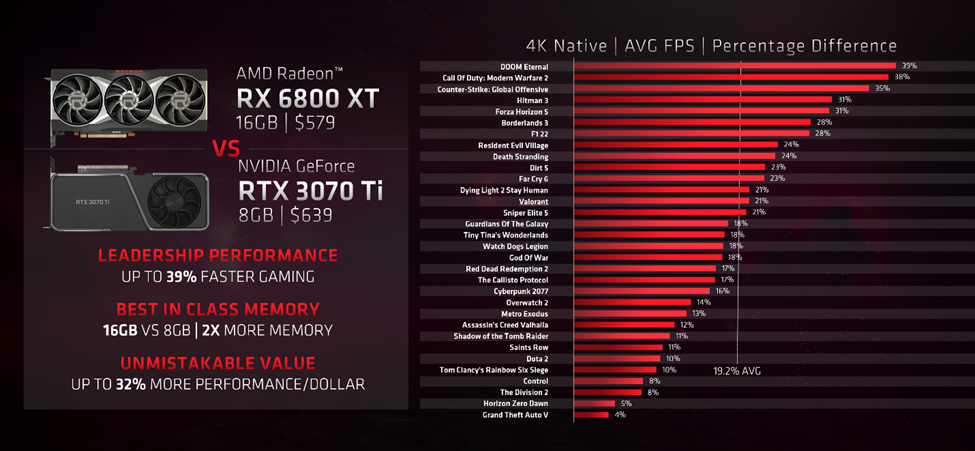

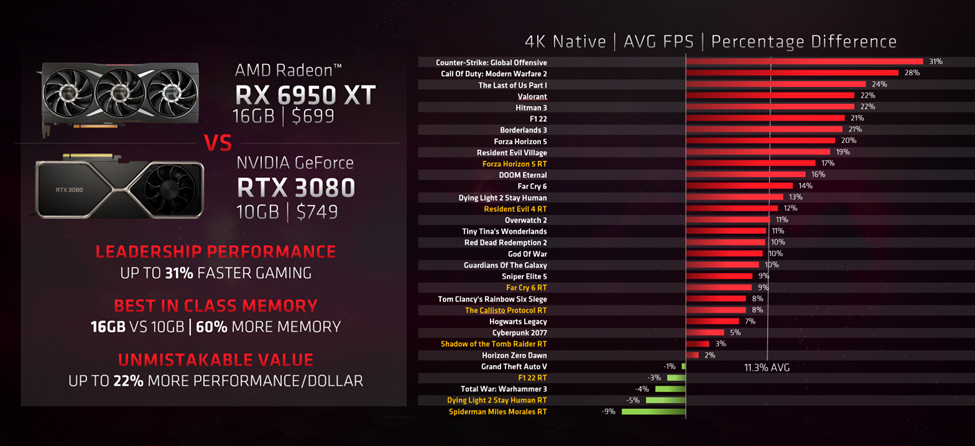

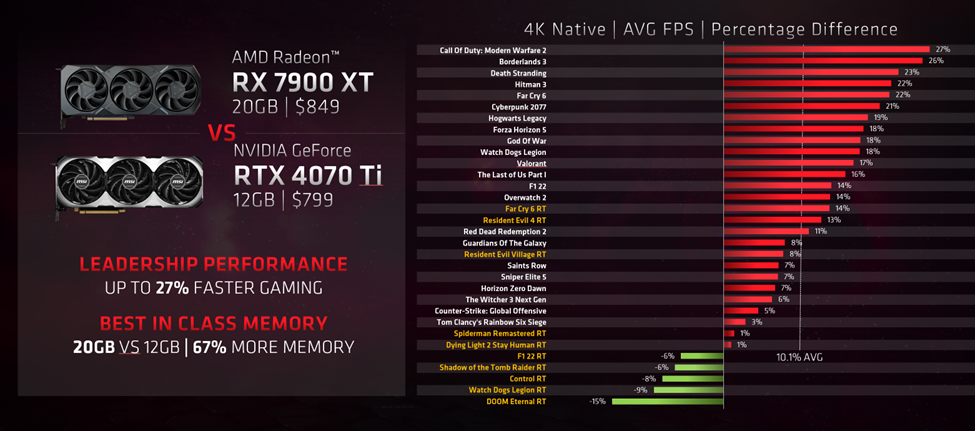

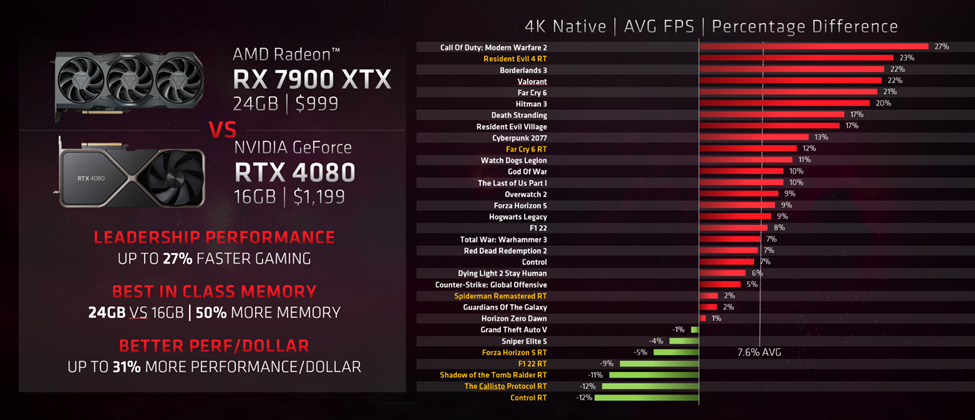

In an attempt to hammer its point home, AMD supplied some of its own benchmark comparisons versus Nvidia chips, pitting its RX 6800 XT against Nvidia's RTX 3070 Ti, the RX 6950 XT with the RTX 3080, the 7900 XT versus the RTX 4070 Ti, and the RX 7900 XTX taking on the RTX 4080. Each had 32 "select games" at 4K resolution. Unsurprisingly for AMD-published benchmarks, the Radeon GPUs won a majority of the time. You can see these in the gallery below:

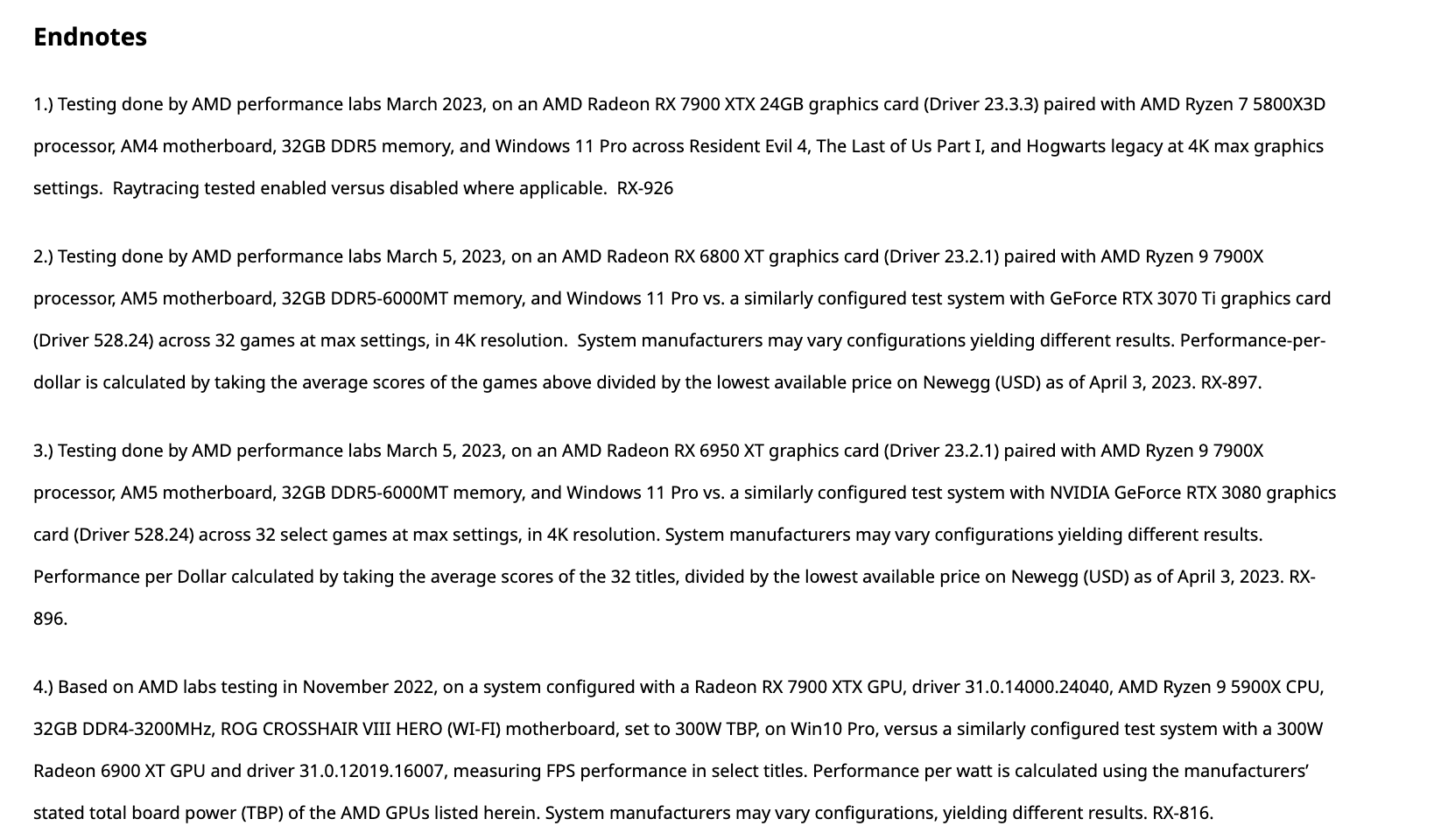

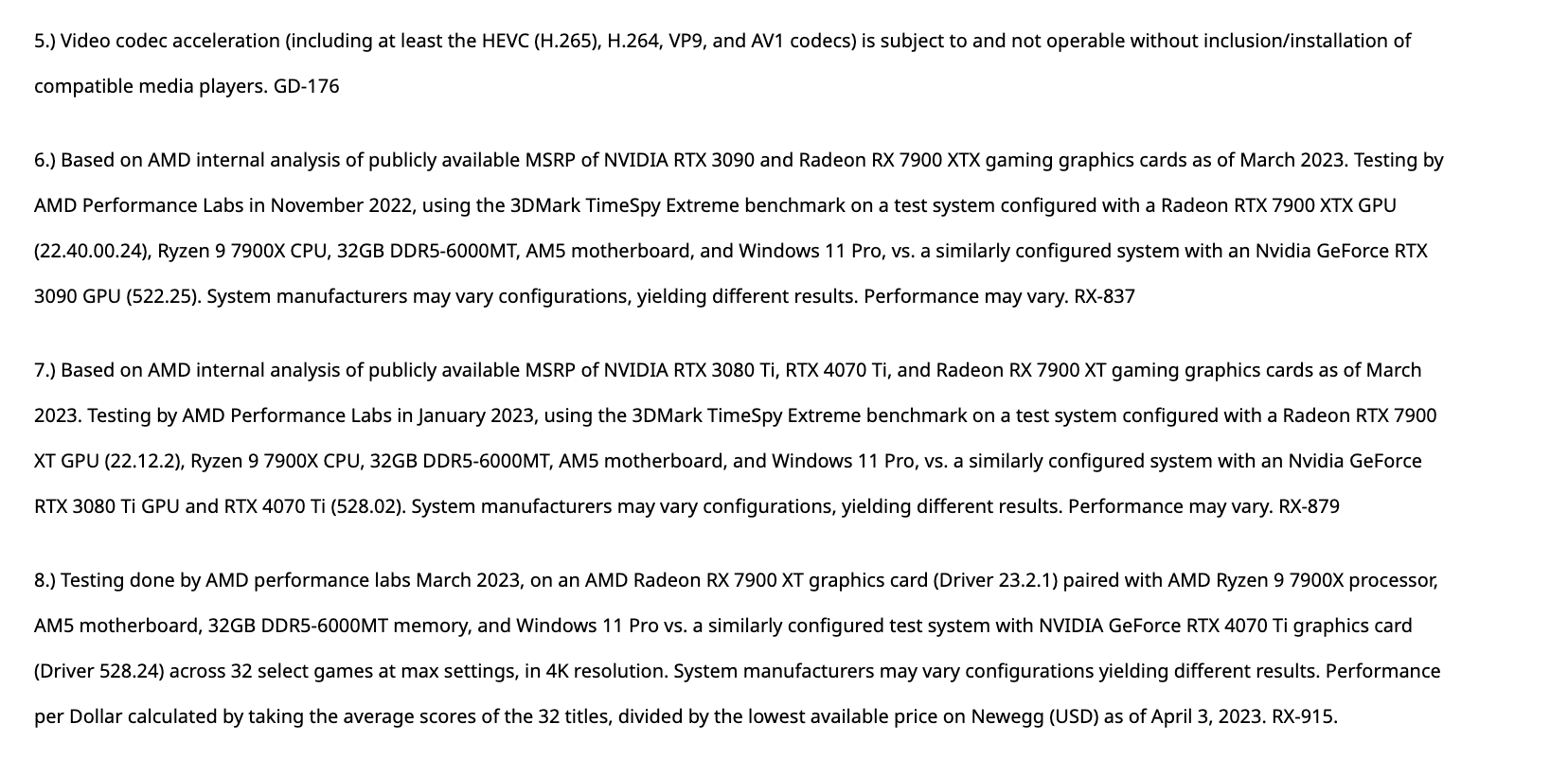

Like any benchmark-heavy piece of marketing, there are a ton of testing notes here. We've reproduced those in the gallery below. Some interesting notes include differing CPUs, motherboards (including some with total board power adjusted), changes in RAM speeds, and more depending on the claim. The majority of the gaming tests use the same specs, but other claims vary and some use older test data. The games are not the same across each GPU showdown, and it also doesn't appear that these tests used FSR or DLSS, for those who are interested in those technologies.

We won't know for sure how Nvidia's RTX 4070 performs against any of these GPUs until reviews go live, but AMD is making sure you know it's more generous with VRAM (though it doesn't seem to differentiate between the GDDR6 it's using and Nvidia's GDDR6X).

It's also worth pointing out that the options for VRAM capacities are directly tied to the size of the memory bus, or the number of channels if you prefer. Much like AMD's Navi 22, which maxes out with a 192-bit, 6-channel interface (see RX 6700 XT and RX 6750 XT), Nvidia's AD104 (RTX 4070 Ti and the upcoming RTX 4070) supports up to a 192-bit bus. With 2GB chips, that means Nvidia can only do up to 12GB. Doubling that to 24GB is also possible, with memory in "clamshell" mode on both sides of the PCB, but that's an expensive approach that's generally only used in halo products (e.g. RTX 3090) and professional GPUs (RTX 6000 Ada Generation).
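To make that arithmetic concrete, here is a minimal Python sketch of the bus-width math. It assumes each memory channel is 32 bits wide, which is what the 192-bit, 6-channel figure above implies, and that clamshell mode simply doubles the number of chips. The function name `vram_capacity_gb` and its parameters are illustrative, not taken from AMD or Nvidia documentation.

```python
CHANNEL_WIDTH_BITS = 32  # assumption: 192-bit bus / 6 channels = 32 bits per channel


def vram_capacity_gb(bus_width_bits: int, chip_gb: int = 2, clamshell: bool = False) -> int:
    """Estimate total VRAM from bus width and per-chip capacity.

    One chip sits on each 32-bit channel; clamshell mode places a second
    chip on the other side of the PCB for every channel.
    """
    if bus_width_bits <= 0 or bus_width_bits % CHANNEL_WIDTH_BITS != 0:
        raise ValueError("bus width must be a positive multiple of 32 bits")
    channels = bus_width_bits // CHANNEL_WIDTH_BITS
    chips = channels * (2 if clamshell else 1)
    return chips * chip_gb


if __name__ == "__main__":
    # 192-bit bus with 2GB chips, as on AD104 and Navi 22
    print(vram_capacity_gb(192))                  # 12
    # Same bus in clamshell mode
    print(vram_capacity_gb(192, clamshell=True))  # 24
```

Under these assumptions, the only ways to get more capacity out of a given bus width are denser chips or clamshell mode, which is why a 192-bit card lands at either 12GB or 24GB with 2GB chips.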

AMD's taunts also raise an important question: What exactly does AMD plan to do with lower-tier RX 7000-series GPUs? It's at 20GB for the $800–$900 RX 7900 XT. 16GB on a hypothetical RX 7800 XT sounds about right... which would then put AMD at 12GB on a hypothetical RX 7700 XT. Of course, we're still worried about where Nvidia might go with the future RTX 4060 and RTX 4050, which may end up with a 128-bit memory bus.

There's also a lot more going on than just memory capacity, like cache sizes and other architectural features. AMD's previous-generation RX 6000-series offered 16GB on the top four SKUs (RX 6950 XT, RX 6900 XT, RX 6800 XT, and RX 6800), true, but those aren't latest-generation GPUs, and Nvidia's competing RTX 30-series offerings still generally managed to keep pace in rasterization games while offering superior ray tracing hardware and enhancements like DLSS, and also boosting AI performance in things like Stable Diffusion.

Ultimately, memory capacity can be important, but it's only one facet of modern graphics cards. For our up-to-date thoughts on GPUs, check out our GPU hierarchy and our list of the best graphics cards for gaming.


Andrew E. Freedman is a senior editor at Tom's Hardware focusing on laptops, desktops and gaming. He also keeps up with the latest news. A lover of all things gaming and tech, his previous work has shown up in Tom's Guide, Laptop Mag, Kotaku, PCMag and Complex, among others. Follow him on Threads @FreedmanAE and BlueSky @andrewfreedman.net. You can send him tips on Signal: andrewfreedman.01


watzupken

I think the lack of VRAM in current game titles is a problem, but I am not sure if the main reason is because we are not given enough VRAM or simply because game developers are not optimising the game as they used to. It is impossible to fit a 8GB VRAM buffer? I don’t think that is the case. Of course we can solve the problem with more VRAM, but the more you have, the less optimisation will be done by the developers. Eventually the problem will catch up with you even with 16GB of VRAM. Game titles in 2022 pretty much run well and with decent image quality with 8GB VRAM. There are few titles that may need more than 8GB, but dropping the texture quality settings from Ultra to the next highest setting typically solves the problem. Moving to 2023, suddenly VRAM requirements jumped 50% or more, while visually, they don’t really look much better. So it just screams poor optimisation to me.

jeremyj_83

PlaneInTheSky said: Nvidia and AMD are asking extremely high prices for GPU, the bare minimum to ask is that they can keep up with consoles that have 16GB VRAM.

You cannot forget that consoles while having 16GB VRAM that VRAM is shared between the CPU and GPU. The Xbox for example reserves 2GB RAM for the OS so only the Series X you have 14GB RAM for GPU & CPU but on the Series S (10GB VRAM) you only have 8GB for the GPU & CPU. Now your PC GPU's VRAM is dedicated to it only. In many ways your 16GB consoles really only have 8-10GB VRAM for the GPU. This is why the console games usually use lower texture settings or resolution.

PlaneInTheSky said: A PC GPU released in 2023 should have at least 16GB VRAM, no ifs or buts.

I don't think every GPU needs to have 16GB VRAM. There is no reason for something like an RX6400 to have that much VRAM because it doesn't have the horsepower to drive a game with enough texture quality and resolution to come close to needing that much VRAM. Heck it cannot even come close to getting to where you need 8GB VRAM.

CelicaGT

watzupken said: I think the lack of VRAM in current game titles is a problem, but I am not sure if the main reason is because we are not given enough VRAM or simply because game developers are not optimising the game as they used to. It is impossible to fit a 8GB VRAM buffer? I don’t think that is the case. Of course we can solve the problem with more VRAM, but the more you have, the less optimisation will be done by the developers. Eventually the problem will catch up with you even with 16GB of VRAM. Game titles in 2022 pretty much run well and with decent image quality with 8GB VRAM. There are few titles that may need more than 8GB, but dropping the texture quality settings from Ultra to the next highest setting typically solves the problem. Moving to 2023, suddenly VRAM requirements jumped 50% or more, while visually, they don’t really look much better. So it just screams poor optimisation to me.

While poor optimization is definitely part of the mix, don't expect that to change. As for the timing? Most developers have begun releasing more current gen exclusive console games this year. Last gen had 8GB, this gen has 16GB. Yes, some of that goes to the OS but it still leaves >8GB on the table for the GPU to utilize. Ultimately the reasons don't matter. For some current and probably most future AAA titles at >1080p 8GB cards are DOA. The writing has been on the wall for a couple years now some of us just did not want to see it.

To add, this is more of an issue for last gen NVIDIA cards, whose GPUs have the processing horsepower to run current and releasing AAA titles just not the VRAM capacity. The HUB video posted by PlaneInTheSky is pretty damning, even after a "fix" the 3070 struggles with low framerates and fuzzy, popping in textures.

-Fran-

AMD marketing getting a jab across to nVidia... I guess now I'll just wait for the usual AMD karma to hit back in some fashion XD!

Not less true what they say, but given their recent history and when mocking nVidia, they've had some hilarious karma payback.

Anyway, I do like more VRAM than not. As they say: "better to have it and not need it than need it and not have it".

Plus, these GPUs are darn expensive already, so they better be good long term.

Regards.

TravisPNW

I think anyone who buys a GPU with 8GB VRAM is making a mistake. 12/16GB is bare minimum IMO.

My 1080 Ti in 2017 had 11GB... not sure why anyone would think 8GB would cut it with today's AAA titles.

jkflipflop98

Probably shouldn't be talking trash to the guy that's already kicking your teeth out.

CelicaGT

jkflipflop98 said: Probably shouldn't be talking trash to the guy that's already kicking your teeth out.

Sometimes, that's exactly what you need to do. Time will tell if AMD gets egg on their face (again).

peachpuff

TravisPNW said: I think anyone who buys a GPU with 8GB VRAM is making a mistake. 12/16GB is bare minimum IMO. My 1080 Ti in 2017 had 11GB... not sure why anyone would think 8GB would cut it with today's AAA titles.

Shareholders think 4gb is enough...

thisisaname

The best reply AMD should have is releasing a card not talking trash about someone else's!