Nvidia RTX 6000 Ada Now Available: 18,176 CUDA Cores at 300W

'Only' $6,800 to $8,600 for professionals

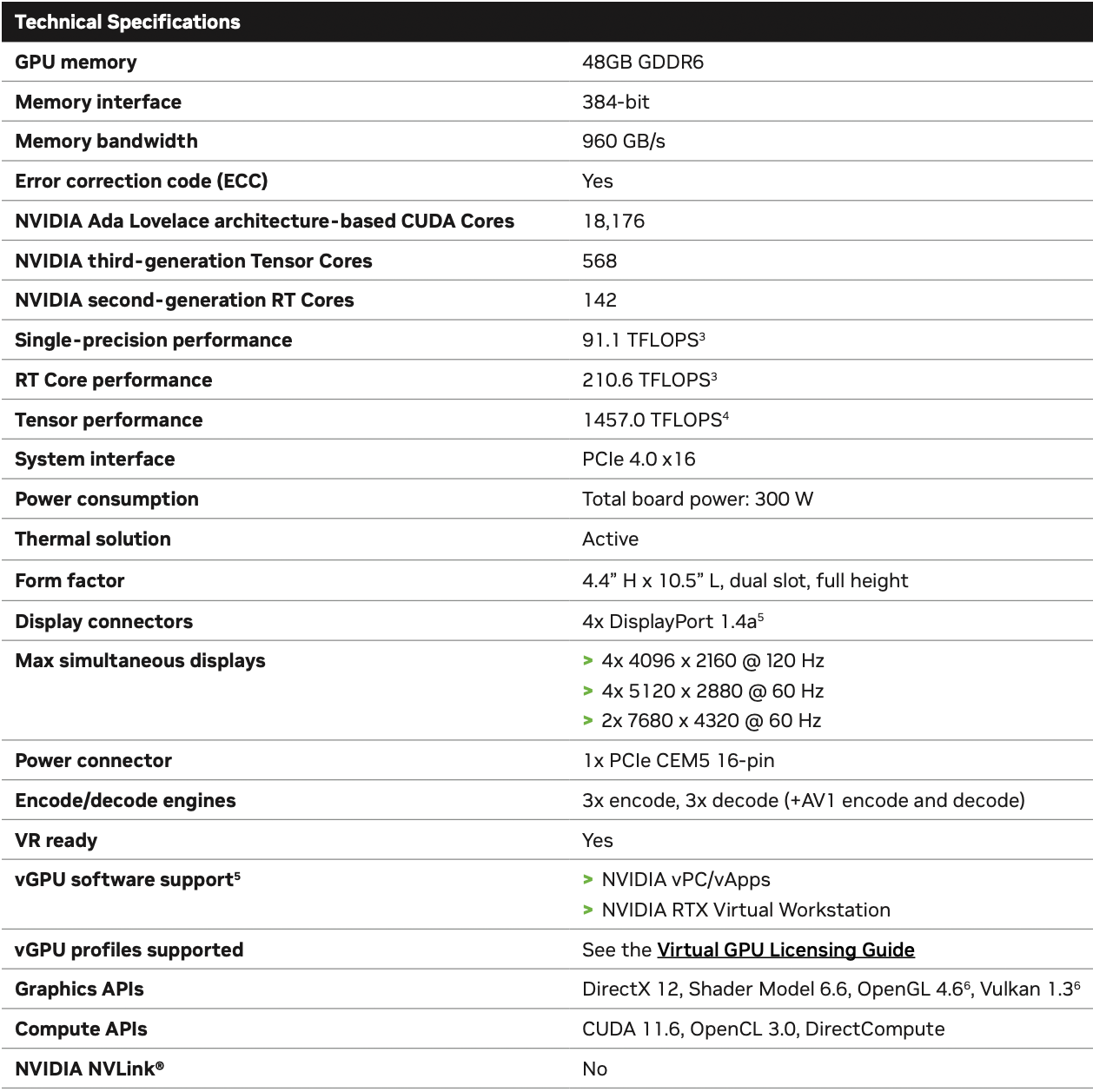

Nvidia has quietly started selling its RTX 6000 Ada Generation graphics card. Based on the AD102 GPU with 18,176 CUDA cores enabled, this is the 'fattest' AD102 configuration available to date, with 142 of the 144 Streaming Multiprocessors enabled. Rated for 300W of power consumption, the board is designed for computer-aided design, digital content creation, virtual desktop infrastructure, and other professional applications.
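The core count follows directly from the SM count. Here is a minimal sketch of that arithmetic, assuming 128 FP32 CUDA cores per Ada SM (a figure from Nvidia's public architecture material, not from this article); the function and variable names are ours.

```python
# Assumption: each Ada Lovelace SM carries 128 FP32 CUDA cores.
CORES_PER_SM = 128


def cuda_cores(enabled_sms: int) -> int:
    """Return the number of CUDA cores for a given count of enabled SMs."""
    return enabled_sms * CORES_PER_SM


rtx_6000_ada_cores = cuda_cores(142)  # configuration described in the article
full_ad102_cores = cuda_cores(144)    # hypothetical fully enabled die

print(f"RTX 6000 Ada: {rtx_6000_ada_cores} cores")
print(f"Full AD102:   {full_ad102_cores} cores")
```

Under that assumption, the two disabled SMs account for 256 CUDA cores.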

Pricing varies pretty widely, ranging from $6,800 to $8,600 depending on the retailer. Nvidia has the card listed at $6,800, with a limit of five per customer — just in case you're trying to put together a bunch of workstations. Other outlets like CDW are marking it up an additional 25% or so, with availability set for 4–6 weeks out.

The RTX 6000 Ada has 18,176 CUDA cores enabled, and while Nvidia doesn't specify clocks anywhere that we can find, its partners like PNY and Leadtek quote up to 91.1 FP32 TFLOPS of compute performance. That equates to a GPU boost clock of roughly 2505 MHz, and the compute figure is about 10% higher than that of the GeForce RTX 4090, which features 16,384 CUDA cores. However, there are clear differences between the RTX 6000 Ada and the consumer-focused RTX 4090.
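For readers who want to check the clock estimate, here is a rough sketch of the math. It assumes each CUDA core retires one fused multiply-add (two FP32 operations) per clock, and it takes the RTX 4090's 2520 MHz boost clock from Nvidia's public spec sheet; neither figure comes from the partner listings, and the function names are ours.

```python
# Assumption: one FMA per core per clock, counted as 2 FP32 operations.
FLOPS_PER_CORE_PER_CLOCK = 2


def implied_boost_mhz(fp32_tflops: float, cuda_cores: int) -> float:
    """Back out the clock (MHz) implied by a quoted FP32 TFLOPS figure."""
    flops_per_clock = cuda_cores * FLOPS_PER_CORE_PER_CLOCK
    return fp32_tflops * 1e12 / flops_per_clock / 1e6


def fp32_tflops(cuda_cores: int, boost_mhz: float) -> float:
    """Peak FP32 TFLOPS for a given core count and boost clock."""
    return cuda_cores * FLOPS_PER_CORE_PER_CLOCK * boost_mhz * 1e6 / 1e12


rtx_6000_ada_mhz = implied_boost_mhz(91.1, 18176)
# Assumption: 2520 MHz is the RTX 4090's official boost clock.
rtx_4090_tflops = fp32_tflops(16384, 2520)
compute_advantage = 91.1 / rtx_4090_tflops

print(f"Implied RTX 6000 Ada boost: {rtx_6000_ada_mhz:.0f} MHz")
print(f"RTX 4090 peak FP32: {rtx_4090_tflops:.1f} TFLOPS")
print(f"Compute advantage: {(compute_advantage - 1) * 100:.1f}%")
```

Because 91.1 TFLOPS is itself a rounded number, the implied clock lands within a megahertz or two of the 2505 MHz figure rather than matching it exactly.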

The RTX 6000 Ada is designed for professional applications, and so it carries 48GB of GDDR6 memory with ECC enabled, featuring a peak memory bandwidth of 960 GB/s, slightly lower than the 1,008 GB/s offered by the GeForce RTX 4090. That suggests Nvidia is using 20 Gbps GDDR6 memory rather than the slightly faster 21 Gbps GDDR6X, but power use may also be slightly lower on the GDDR6 chips.
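The inference about memory speed can be sketched as follows, assuming both cards use AD102's 384-bit memory interface (the bus width is not stated in this article). The function name is ours.

```python
# Assumption: both cards use a 384-bit memory bus.
BUS_WIDTH_BITS = 384


def peak_bandwidth_gbs(data_rate_gbps: float, bus_width_bits: int = BUS_WIDTH_BITS) -> float:
    """Peak memory bandwidth in GB/s from the per-pin data rate in Gbps."""
    return data_rate_gbps * bus_width_bits / 8  # 8 bits per byte


rtx_6000_ada_bw = peak_bandwidth_gbs(20)  # 20 Gbps GDDR6
rtx_4090_bw = peak_bandwidth_gbs(21)      # 21 Gbps GDDR6X

print(f"RTX 6000 Ada: {rtx_6000_ada_bw:.0f} GB/s")
print(f"RTX 4090:     {rtx_4090_bw:.0f} GB/s")
```

With a 384-bit bus, 20 Gbps per pin works out to 960 GB/s and 21 Gbps to 1,008 GB/s, in line with the quoted bandwidth figures.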

The RTX 6000 Ada comes equipped with four DisplayPort 1.4a connectors that can drive four 4K or 5K displays (4K at 240 Hz is supported via DSC), or two 8K displays (120 Hz with DSC).
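To see why 4K at 240 Hz depends on DSC, here is a back-of-the-envelope sketch. It assumes DisplayPort 1.4a's HBR3 link rate (four lanes at 8.1 Gbps with 8b/10b encoding) and 24 bits per pixel, and it ignores blanking overhead, so the real requirement is somewhat higher. All names are ours.

```python
# Assumptions (DisplayPort 1.4a HBR3, not stated in this article):
LANES = 4
HBR3_GBPS_PER_LANE = 8.1
ENCODING_EFFICIENCY = 8 / 10  # 8b/10b line coding


def dp14_payload_gbps() -> float:
    """Usable link payload in Gbps after encoding overhead."""
    return LANES * HBR3_GBPS_PER_LANE * ENCODING_EFFICIENCY


def uncompressed_gbps(width: int, height: int, refresh_hz: int, bits_per_pixel: int = 24) -> float:
    """Active-pixel data rate in Gbps; blanking intervals are ignored."""
    return width * height * refresh_hz * bits_per_pixel / 1e9


link_gbps = dp14_payload_gbps()
need_4k240_gbps = uncompressed_gbps(3840, 2160, 240)
min_compression_ratio = need_4k240_gbps / link_gbps

print(f"DP 1.4a payload:        {link_gbps:.2f} Gbps")
print(f"4K 240 Hz uncompressed: {need_4k240_gbps:.2f} Gbps")
print(f"Compression needed:     {min_compression_ratio:.2f}:1 or better")
```

Even on these optimistic numbers the uncompressed stream is well over what the link can carry, which is why the mode is only available with DSC.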

One of the intriguing things about the RTX 6000 Ada is its power consumption. Despite its higher compute performance and 48GB of memory onboard, the RTX 6000 Ada is rated for just 300W of power, down from the 450W rating on the gamer-oriented GeForce RTX 4090. Both GPUs have similar official boost clocks of around 2.5 GHz, though the minimum guaranteed clocks on the RTX 6000 Ada are lower and we suspect it won't clock quite as high in heavy computational workloads.
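On paper, the gap in rated efficiency is easy to quantify. The sketch below uses the 91.1 TFLOPS partner figure and the two power ratings from this article, and assumes 82.6 FP32 TFLOPS for the RTX 4090 (Nvidia's published figure, not stated here). These are peak ratings, not measured results.

```python
def tflops_per_watt(fp32_tflops: float, board_power_w: float) -> float:
    """Rated peak FP32 throughput per watt of rated board power."""
    return fp32_tflops / board_power_w


rtx_6000_ada_eff = tflops_per_watt(91.1, 300)
# Assumption: 82.6 TFLOPS is the RTX 4090's published peak FP32 rate.
rtx_4090_eff = tflops_per_watt(82.6, 450)
efficiency_ratio = rtx_6000_ada_eff / rtx_4090_eff

print(f"RTX 6000 Ada: {rtx_6000_ada_eff:.3f} TFLOPS/W")
print(f"RTX 4090:     {rtx_4090_eff:.3f} TFLOPS/W")
print(f"Ratio:        {efficiency_ratio:.2f}x")
```

If the card does throttle below its boost clock under heavy compute loads, as we suspect, the real-world advantage would be smaller than this paper ratio suggests.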

Thanks to its relatively limited power consumption, the RTX 6000 Ada Generation graphics card gets by with a dual-slot cooling system and a blower fan, which lets it fit into workstations and servers. It uses one 12VHPWR (16-pin CEM 5.0 PCIe) power connector for power delivery, so it will require an appropriate cable adapter to fit into existing machines that do not have a native 16-pin connector.

Another interesting thing about Nvidia's RTX 6000 Ada professional graphics card is its price. Nvidia sells the board for $6,800, slightly lower than the $6,999 launch price of its RTX A6000 predecessor. But value-added reseller CDW lists the product for $8,615, while a Japanese retailer found by @momomo_us lists the unit for $8,524 without tax. We are not sure why resellers are charging so much more than Nvidia itself, but for now it looks like it makes the most sense to buy the RTX 6000 Ada board directly from Nvidia.
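The size of those markups can be worked out from the listed prices. This is a small sketch using only the figures quoted above; the function and variable names are ours.

```python
NVIDIA_PRICE = 6800  # Nvidia's own listing, in USD


def markup_pct(price: float, base: float = NVIDIA_PRICE) -> float:
    """Percentage difference of `price` relative to `base`."""
    return (price / base - 1) * 100


cdw_markup = markup_pct(8615)
japan_markup = markup_pct(8524)
vs_a6000_launch = markup_pct(NVIDIA_PRICE, base=6999)

print(f"CDW:               {cdw_markup:+.1f}%")
print(f"Japanese retailer: {japan_markup:+.1f}%")
print(f"vs RTX A6000:      {vs_a6000_launch:+.1f}%")
```

Both reseller listings sit roughly a quarter above Nvidia's price, while Nvidia's own price is a few percent below the previous generation's launch price.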


Anton Shilov is a contributing writer at Tom’s Hardware. Over the past couple of decades, he has covered everything from CPUs and GPUs to supercomputers and from modern process technologies and latest fab tools to high-tech industry trends.

-

Kamen Rider Blade Whomever came up with the name just made things needlessly confusing.

Why the eff are they calling it the RTX 6000 when their mainline consumer Video Cards are called RTX #000 series?

We're currently on the RTX 4000 series

In 2x generations, there is going to be a literal name collision with RTX 6000, unless they plan on starting a brand new naming scheme all together.

Granted that has happened before, but still.

I thought they planned on Using <Letter Generation>#### as their new Professional WorkStation card Product Naming Scheme.

After 1 generation of use, they throw it away?

Now they're just calling it RTX 6000 Ada Generation ?

How is that a good naming scheme?

It was better when they used the Quadro name and everybody knew what was their Professional WorkStation line. -

anonymousdude

Kamen Rider Blade said: Whomever came up with the name just made things needlessly confusing.

Why the eff are they calling it the RTX 6000 when their mainline consumer Video Cards are called RTX #000 series?

We're currently on the RTX 4000 series

In 2x generations, there is going to be a literal name collision with RTX 6000, unless they plan on starting a brand new naming scheme all together.

Granted that has happened before, but still.

I thought they planned on Using <Letter Generation>#### as their new Professional WorkStation card Product Naming Scheme.

After 1 generation of use, they throw it away?

Now they're just calling it RTX 6000 Ada Generation ?

How is that a good naming scheme?

It was better when they used the Quadro name and everybody knew what was their Professional WorkStation line.

To make matters worse there's a Quadro RTX 6000 that's fairly recent too. So you've got Quadro RTX 6000, RTX A6000, and now RTX 6000 Ada Generation. Absolute genius here by Nvidia. -

Kamen Rider Blade

anonymousdude said: To make matters worse there's a Quadro RTX 6000 that's fairly recent too. So you've got Quadro RTX 6000, RTX A6000, and now RTX 6000 Ada Generation. Absolute genius here by Nvidia.

To: Jensen Huang, CEO of nVIDIA.

FIRE the idiot on your Marketing Team, whoever was the one who thought this naming scheme was a good idea, they deserve to be FIRED ASAP! -

catavalon21 You can't make this stuff up. Used to be their pro cards ran double precision (DP) at 1/2 the 32-bit rate but the spec sheet for the RTX 6000 doesn't mention it. That, and some error correction and other goodies, justified the cost. -

Ar558 Yeh this is just stupid, although I doubt anyone will actually accidentally buy a $7k GPU without doing the research. -

JDJJ Imagine paying $7000-$8000 and it doesn't have DisplayPort 2.1. Jensen is really being stubborn about this. Sure, there's been a bit of a catch-22 about adoption... DP 2.1 has been out a few years now, but the card makers got complacent with crypto sales, and the monitor makers weren't making the update without supporting cards. But AMD cards now come with DP 2.1 support, and monitors from Samsung and others are rolling out with 2.1 support as we speak.

We've now hit the upper bounds of what DSC compression can do with DP 1.4a's limited bandwidth. This is especially true for creators who have demanding requirements, e.g. a larger color space, etc. But even more problematic is that these newer cards are being held back. We are already seeing the 4090 bump up against the upper limits of 1.4a's bandwidth, and DSC does come at a cost as well ... there's no such thing as a free lunch. While many consumers won't notice the problems and limitations of compression and/or bandwidth limits playing PUBG, professionals should be hesitating about paying a premium over non-Ada versions of pro cards, or upgrading with such a handicap and lack of future-proofing.

I can only think either Jensen and Team Green have gotten so complacent they think as long as you post a bump in processing power, they'll sell out, even if the interface becomes a problem. Or maybe marketing is worried about the optics of updating to 2.1 in the pro card, while assuring the masses that 1.4a is all they need, having misjudged where the market was moving while trying to maximize profits in the wake of the loss of crypto sales. We can only guess at their motives, but at the end of the day, missing the boat on DP 2.1 will probably mean some professionals will see insufficient improvement in this card vs a previous model, especially at the price premium, to justify purchasing now.

I'll be curious to see what happens when AMD fixes their alleged issues with clock speeds on RDNA 3 and puts out a contender to challenge or surpass RTX 4090, such as an RX 7990 XTX rumored to be planned for 2023. If that forces NVIDIA to put out a 4090 Ti, it will certainly need to support DP 2.1 in order to compete, and to show actual gains over the 4090. Where will that put the RTX 6000 Ada? Will they quietly update that as well? -

KyaraM This seriously is confusing. Who even thought that up?

Iirc, AMD already said there will be no refresh aka fix, or at least that's what I read. They seem to concentrate on the successor.

JDJJ said: Imagine paying $7000-$8000 and it doesn't have DisplayPort 2.1. Jensen is really being stubborn about this. Sure, there's been a bit of a catch-22 about adoption... DP 2.1 has been out a few years now, but the card makers got complacent with crypto sales, and the monitor makers weren't making the update without supporting cards. But AMD cards now come with DP 2.1 support, and monitors from Samsung and others are rolling out with 2.1 support as we speak.

We've now hit the upper bounds of what DSC compression can do with DP 1.4a's limited bandwidth. This is especially true for creators who have demanding requirements, e.g. a larger color space, etc. But even more problematic is that these newer cards are being held back. We are already seeing the 4090 bump up against the upper limits of 1.4a's bandwidth, and DSC does come at a cost as well ... there's no such thing as a free lunch. While many consumers won't notice the problems and limitations of compression and/or bandwidth limits playing PUBG, professionals should be hesitating about paying a premium over non-Ada versions of pro cards, or upgrading with such a handicap and lack of future-proofing.

I can only think either Jensen and Team Green have gotten so complacent they think as long as you post a bump in processing power, they'll sell out, even if the interface becomes a problem. Or maybe marketing is worried about the optics of updating to 2.1 in the pro card, while assuring the masses that 1.4a is all they need, having misjudged where the market was moving while trying to maximize profits in the wake of the loss of crypto sales. We can only guess at their motives, but at the end of the day, missing the boat on DP 2.1 will probably mean some professionals will see insufficient improvement in this card vs a previous model, especially at the price premium, to justify purchasing now.

I'll be curious to see what happens when AMD fixes their alleged issues with clock speeds on RDNA 3 and puts out a contender to challenge or surpass RTX 4090, such as an RX 7990 XTX rumored to be planned for 2023. If that forces NVIDIA to put out a 4090 Ti, it will certainly need to support DP 2.1 in order to compete, and to show actual gains over the 4090. Where will that put the RTX 6000 Ada? Will they quietly update that as well? -

Friesiansam

Kamen Rider Blade said: Whomever came up with the name just made things needlessly confusing.

Why the eff are they calling it the RTX 6000 when their mainline consumer Video Cards are called RTX #000 series?

We're currently on the RTX 4000 series

In 2x generations, there is going to be a literal name collision with RTX 6000, unless they plan on starting a brand new naming scheme all together.

Granted that has happened before, but still.

I thought they planned on Using <Letter Generation>#### as their new Professional WorkStation card Product Naming Scheme.

After 1 generation of use, they throw it away?

Now they're just calling it RTX 6000 Ada Generation ?

How is that a good naming scheme?

It was better when they used the Quadro name and everybody knew what was their Professional WorkStation line.

anonymousdude said: To make matters worse there's a Quadro RTX 6000 that's fairly recent too. So you've got Quadro RTX 6000, RTX A6000, and now RTX 6000 Ada Generation. Absolute genius here by Nvidia.

Kamen Rider Blade said: To: Jensen Huang, CEO of nVIDIA.

FIRE the idiot on your Marketing Team, whoever was the one who thought this naming scheme was a good idea, they deserve to be FIRED ASAP!

Anyone actually in the market for this kind of GPU will have done their research and know exactly what they are buying. -

Kamen Rider Blade

Friesiansam said: Anyone actually in the market for this kind of GPU will have done their research and know exactly what they are buying.

Maybe, but it also leads to needless confusion. -

JDJJ

KyaraM said: This seriously is confusing. Who even thought that up?

What are you confused about? Linus Tech Tips did a video about the 4090 saturating the bandwidth available on DP 1.4a with DSC compression, and the downsides to DSC. From this point forward, any card with better performance will need more bandwidth to see those gains realized on the screen and justify a higher cost.