Tom's Hardware Verdict

The AMD RX 6500 XT has all the makings of a new budget GPU, including underwhelming performance. Unfortunately, the price only qualifies as "budget" in today's world of radically inflated GPU prices, and even then, it will likely sell for far more than its official $199 MSRP.

Pros

- Theoretically cheaper than the alternatives
- Decent 1080p medium/high performance
- Very high clocks
- Doesn't need a lot of power

Cons

- Specs and features cut too far
- 64-bit memory and x4 PCIe slot connection
- Likely won't sell for anywhere close to MSRP
- Slower than previous budget GPUs


The AMD Radeon RX 6500 XT represents the first real attempt at a modern "budget" GPU in over two years. With GPU prices still hovering in the stratosphere and the shortages not set to ease until perhaps the end of 2022 (if we're lucky), the best graphics cards are continually overpriced and sold out, and everything in our GPU benchmarks hierarchy feels more like a theoretical upgrade rather than something you should actually consider buying. We wish things were better, and we can hope that the RX 6500 XT will actually manage to land closer to its MSRP than other recent GPU launches… but don't be fooled by that $199 starting price. It will likely end up selling at $300 or more, making this more of a mid-range offering in the traditional sense.

But let's forget about the price for a moment. AMD cut down the core features of the RDNA 2 architecture just about as far as it could go with Navi 24. The result is an incredibly tiny chip, measuring just 107mm^2, but still packing 5.4 billion transistors. It's basically half of the Navi 23 in most areas, plus AMD cut out some of the hardware video codec support for good (bad, actually) measure. Can the resulting graphics card be worth the $200 asking price, never mind the $300+ street prices we're likely to see? That's what we want to find out.

| Graphics Card | RX 6500 XT | RX 6600 | RX 5500 XT | RX 570 |
|---|---|---|---|---|
| Architecture | Navi 24 | Navi 23 | Navi 14 | Polaris 20 |
| Process Technology | TSMC N6 | TSMC N7 | TSMC N7 | GloFo 14N |
| Transistors (Billion) | 5.4 | 11.1 | 6.4 | 5.7 |
| Die size (mm^2) | 107 | 237 | 158 | 232 |
| CUs | 16 | 28 | 22 | 32 |
| GPU Cores | 1024 | 1792 | 1408 | 2048 |
| Ray Accelerators | 16 | 28 | N/A | N/A |
| Boost Clock (MHz) | 2815 | 2491 | 1717 | 1244 |
| VRAM Speed (Gbps) | 18 | 14 | 14 | 7 |
| VRAM (GB) | 4 | 8 | 4 / 8 | 4 / 8 |
| VRAM Bus Width (bits) | 64 | 128 | 128 | 256 |
| ROPs | 32 | 64 | 32 | 32 |
| TMUs | 64 | 112 | 88 | 128 |
| TFLOPS FP32 (Boost) | 5.8 | 8.9 | 4.8 | 5.1 |
| Bandwidth (GBps) | 144 | 224 | 224 | 224 |
| Power (watts) | 107 | 132 | 130 | 150 |
| Launch Date | Jan 2022 | Oct 2021 | Dec 2019 | Apr 2017 |
| Launch Price | $199 | $329 | $179 | $169 |
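The TFLOPS and bandwidth rows in the table follow directly from the core counts, clocks, and memory specs, which makes for a quick sanity check. As a minimal sketch (the function names are our own, and the compute formula assumes one fused multiply-add, or two FLOPs, per GPU core per clock), the theoretical figures can be reproduced like this:

```python
# Spec values copied from the table above.
cards = {
    "RX 6500 XT": {"cores": 1024, "boost_mhz": 2815, "gbps": 18, "bus_bits": 64},
    "RX 6600": {"cores": 1792, "boost_mhz": 2491, "gbps": 14, "bus_bits": 128},
    "RX 5500 XT": {"cores": 1408, "boost_mhz": 1717, "gbps": 14, "bus_bits": 128},
    "RX 570": {"cores": 2048, "boost_mhz": 1244, "gbps": 7, "bus_bits": 256},
}


def fp32_tflops(cores, boost_mhz):
    # Assumes each core retires one fused multiply-add (2 FLOPs) per clock.
    return cores * 2 * boost_mhz * 1e6 / 1e12


def bandwidth_gbytes(gbps_per_pin, bus_bits):
    # Per-pin data rate times bus width, divided by 8 bits per byte.
    return gbps_per_pin * bus_bits / 8


for name, spec in cards.items():
    tflops = fp32_tflops(spec["cores"], spec["boost_mhz"])
    bandwidth = bandwidth_gbytes(spec["gbps"], spec["bus_bits"])
    print(f"{name}: {tflops:.1f} TFLOPS, {bandwidth:.0f} GBps")
```

The 64-bit bus is why the RX 6500 XT lands at 144 GBps despite having the fastest memory in the table: 18Gbps across 64 pins still moves less data than 14Gbps across 128.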

We've included the RX 6600 and RX 570 in the above table as points of reference, the latter being a card that launched in April 2017. More importantly, perhaps, are the official launch prices of $159 for the GTX 1650 Super (not shown in the above table) and $169 for the RX 570 4GB. Raw specs obviously don't tell the whole story, but certainly, a brand-new $199 GPU using TSMC's enhanced N6 process ought to be able to put some distance between itself and a $169 GPU using a 14nm process from nearly five years ago.

Let's be frank for a moment: I think AMD went too far cutting down the features, particularly on the memory configuration. Sure, this is a "budget" GPU, so you could make the argument that 4GB of VRAM is sufficient. However, Nvidia's competing GTX 1650 Super has the same configuration but with a bus width that's twice as wide, and the upcoming RTX 3050 has a 128-bit bus width with 8GB of VRAM. There was an easy middle ground that I wish AMD had used: 96-bit bus width and 6GB of VRAM. It would have made the chip 5–10% larger and increased the memory cost, but even Nvidia — which has a reputation for being greedy — put 8GB on a card that nominally costs just $50 more. 6GB would have really helped in some of our tests at higher quality settings as well.

It's not all bad news, though. Sure, Navi 24 got cut down to a puny 64-bit memory interface, but even a 16MB Infinity Cache will likely help effective memory bandwidth quite a bit. Except the PCIe interface is also just an x4 connection now, half of the Navi 23 and one-fourth of the normal x16 interface, and as mentioned already, video encoding and decoding support are more like what we saw with RX Vega than with even RDNA, never mind RDNA 2. Notice that the GDDR6 memory comes clocked at 18Gbps, however, and the boost clock is 2815MHz — a record for reference specs on graphics cards. There are enough influencing factors that we really can't predict where the RX 6500 XT will land without putting it to the test. This is why we run benchmarks.

XFX Radeon RX 6500 XT QICK 210 Overview

For our launch review, AMD provided us with an XFX RX 6500 XT QICK 210. As you'd expect from a budget-oriented GPU, the card lacks extras. There's no RGB lighting, and it only includes two video ports: one HDMI 2.1 and one DisplayPort 1.4a. If you like running multiple monitors, this definitely isn't an ideal card for such purposes. It's a decent step up from integrated graphics solutions, hopefully at a more affordable price than other GPUs, but we'll have to wait and see where it lands in the coming weeks.

The card measures 237x130x39 mm, which isn't particularly large, but neither is it very compact. The card also weighs 594g, making it a relative lightweight. Cooling consists of two 95mm fans, arguably a lot more than the GPU requires for cooling purposes, but it should keep noise levels down. About the only real 'extra' worth mentioning here is that XFX includes a metal backplate on the card. It won't make the card run faster or even cooler, but it does protect the back of the PCB from potential damage — something I appreciate as I have accidentally killed at least one GPU in the past when a screw landed on the PCB while the PC was running and shorted out the card. #Experience

AMD officially lists the TGP / board power for the RX 6500 XT at 107W for the reference specs, but with factory overclocked models running at 120W. Either way, you'll need at least a 6-pin PEG power connector on the card to supplement the x16 PCIe slot power, and that's exactly what the XFX RX 6500 XT QICK 210 provides. The XFX card has a factory overclock with the Game Clock set at 2684MHz, representing a slightly higher-than-baseline level of performance.

Test Setup for Radeon RX 6500 XT

After talking about upgrading our GPU test hardware for the past several months, it's finally time to make the switch. Gone is the Core i9-9900K and associated equipment, and in its place is a shiny new Core i9-12900K, which currently ranks second on our list of the best CPUs for gaming. We've elected to stick with a 2x16GB kit of DDR4-3600 memory, mostly because DDR5 is nearly impossible to come by and a good kit of DDR4 performs comparably, particularly in games. (This kit is actually half of a 4x16GB kit from Corsair with 16-18-18 timings.) Of course, we needed a new motherboard, which comes courtesy of MSI. Crucial provided its speedy P5 Plus 2TB PCIe Gen4 SSD for storage, and Cooler Master provided the power supply, cooler, and its new HAF500 case.

It's not just about new hardware, naturally. We've upgraded to Windows 11 Pro since it's basically required to get the most out of Alder Lake, and we've also overhauled our gaming test suite. We'll be updating the GPU hierarchy with new test results once those are available (after a few weeks of retesting everything on the new PC, in case you're wondering). For now, we've selected seven games and will test at four settings: 1080p "medium" (or thereabouts) and 1080p/1440p/4K "ultra" (basically maxed out settings except for SSAA).

For the coming year, we're using Borderlands 3, Far Cry 6, Flight Simulator, Forza Horizon 5, Horizon Zero Dawn, Red Dead Redemption 2, and Watch Dogs Legion. Six of the seven games use DirectX 12 for the API, with RDR2 being the sole Vulkan representative. We didn't include any DX11 testing because, frankly, most modern games opt for DX12 instead. We'll revisit and revamp the testing regimen over the coming year as needed, but at least for the RX 6500 XT launch review, this is what we're going with.

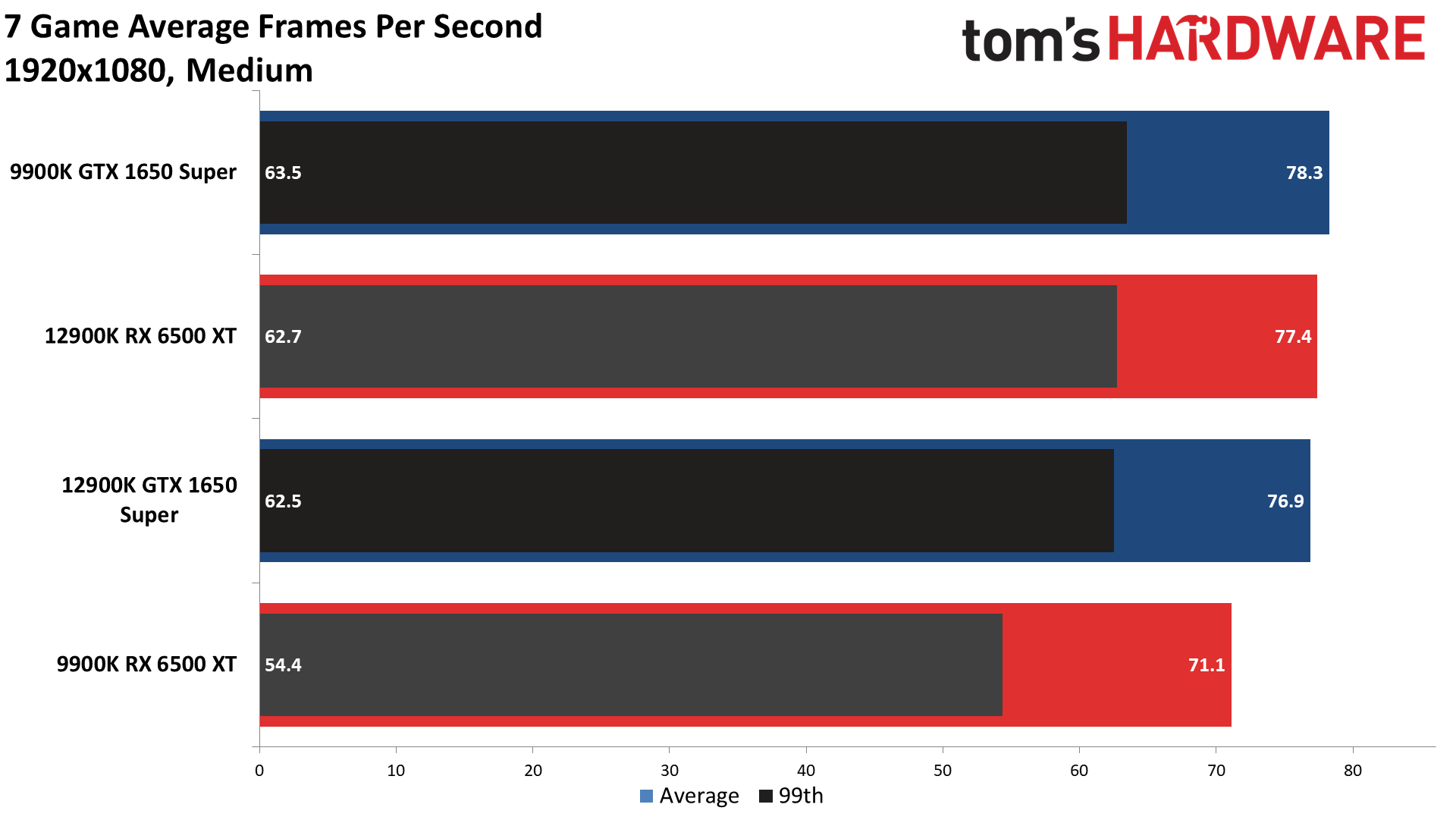

Radeon RX 6500 XT 1080p Gaming Performance

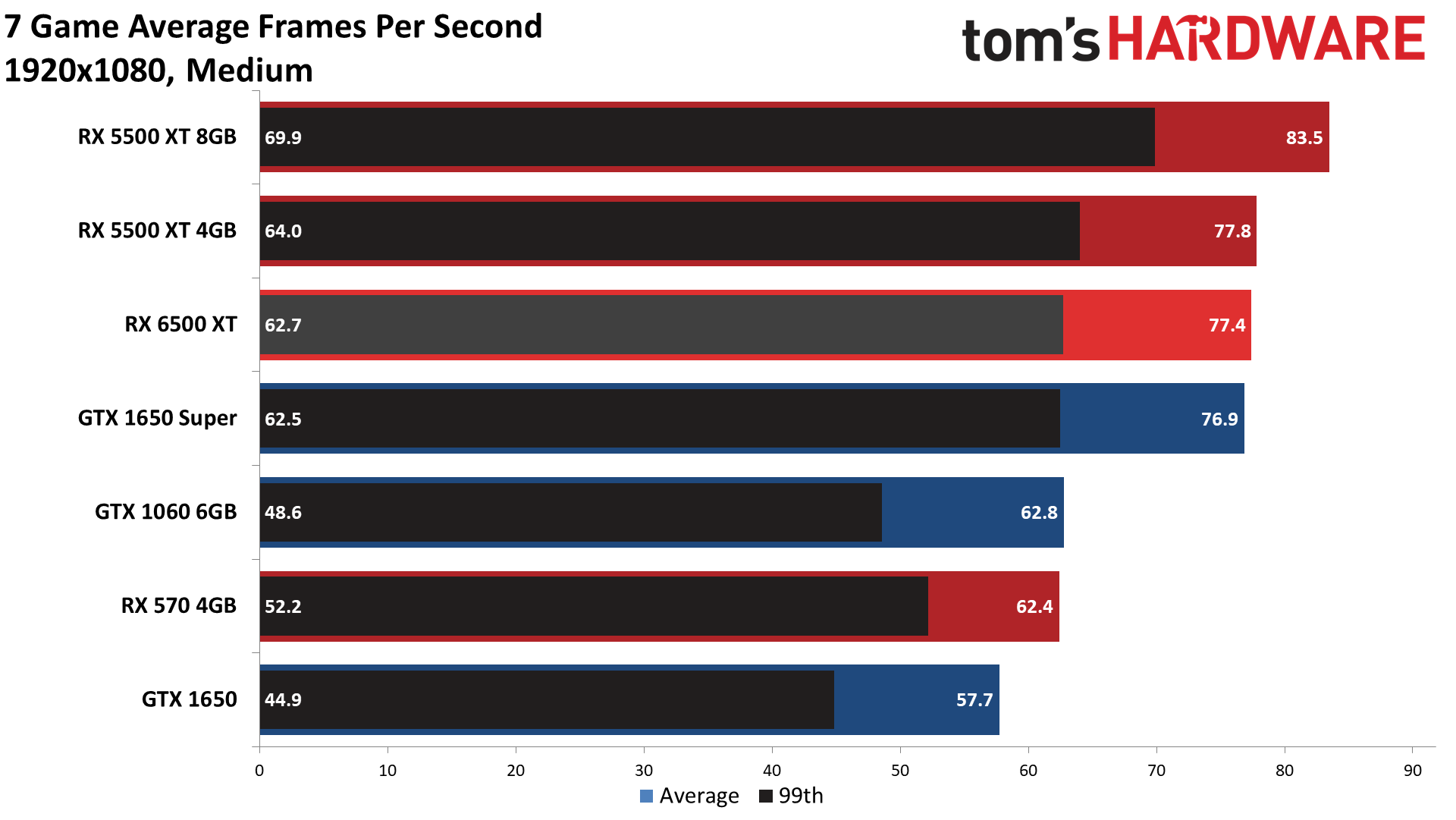

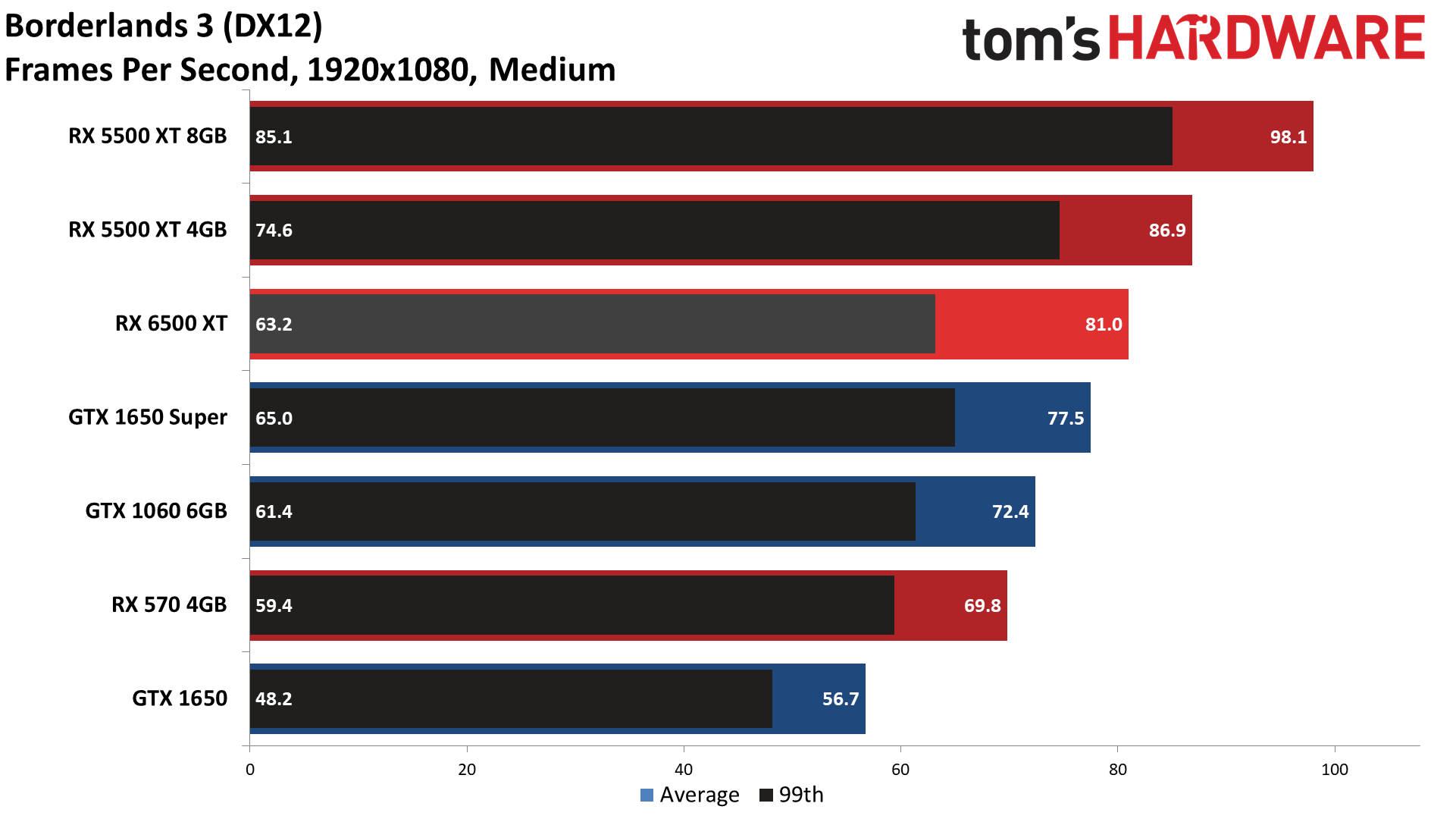

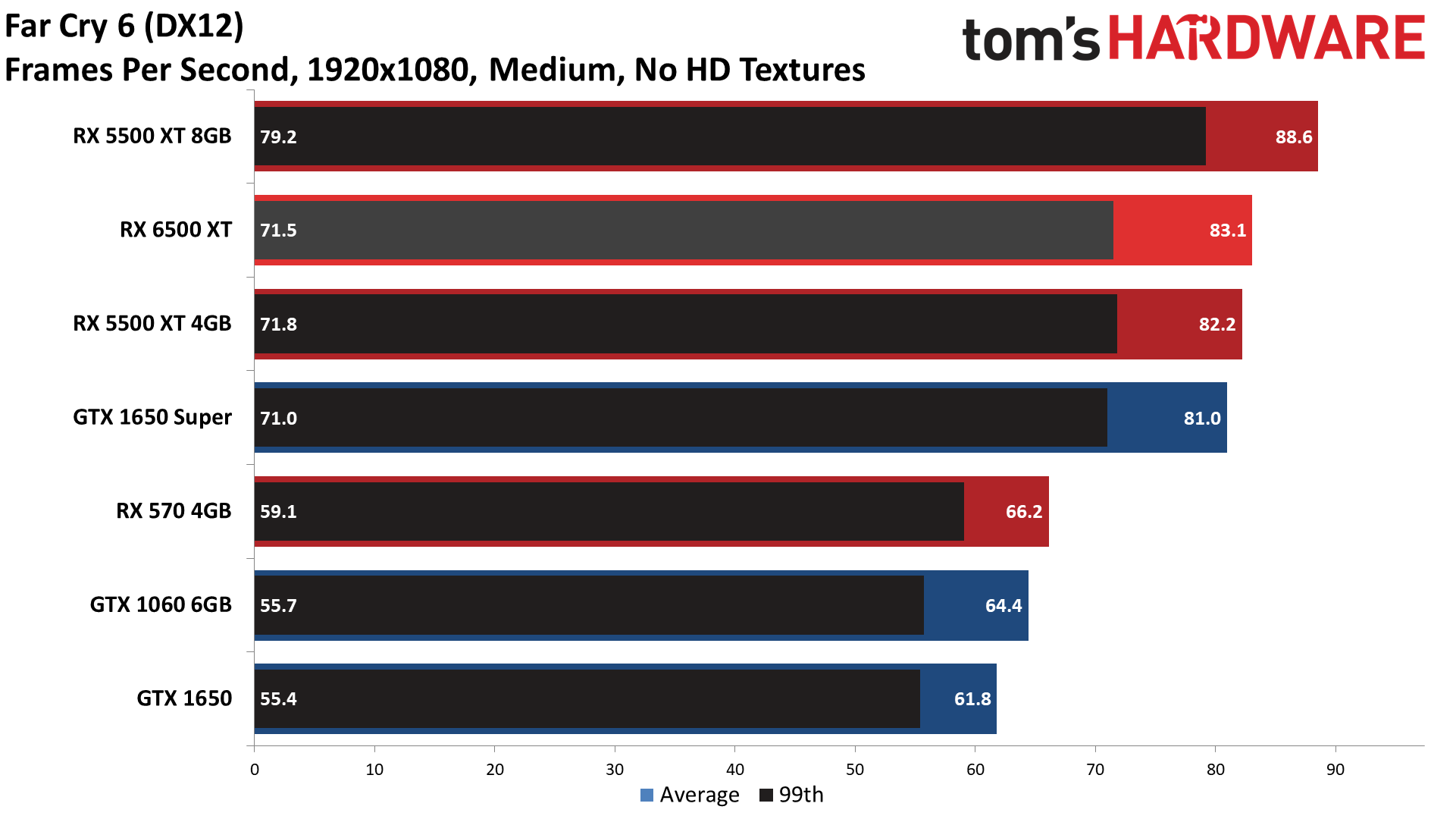

Unlike most GPUs launched in the past year, the Radeon RX 6500 XT is primarily intended for 1080p medium to high gaming. Of course, older games and lighter esports fare can run at higher settings and resolutions and still break 60 fps, but maxed-out 1080p settings will often be too much for the card with our demanding updated test suite. And that's fine, because even games running at 1080p and medium settings look quite good, and the card should deliver smooth performance at that target.

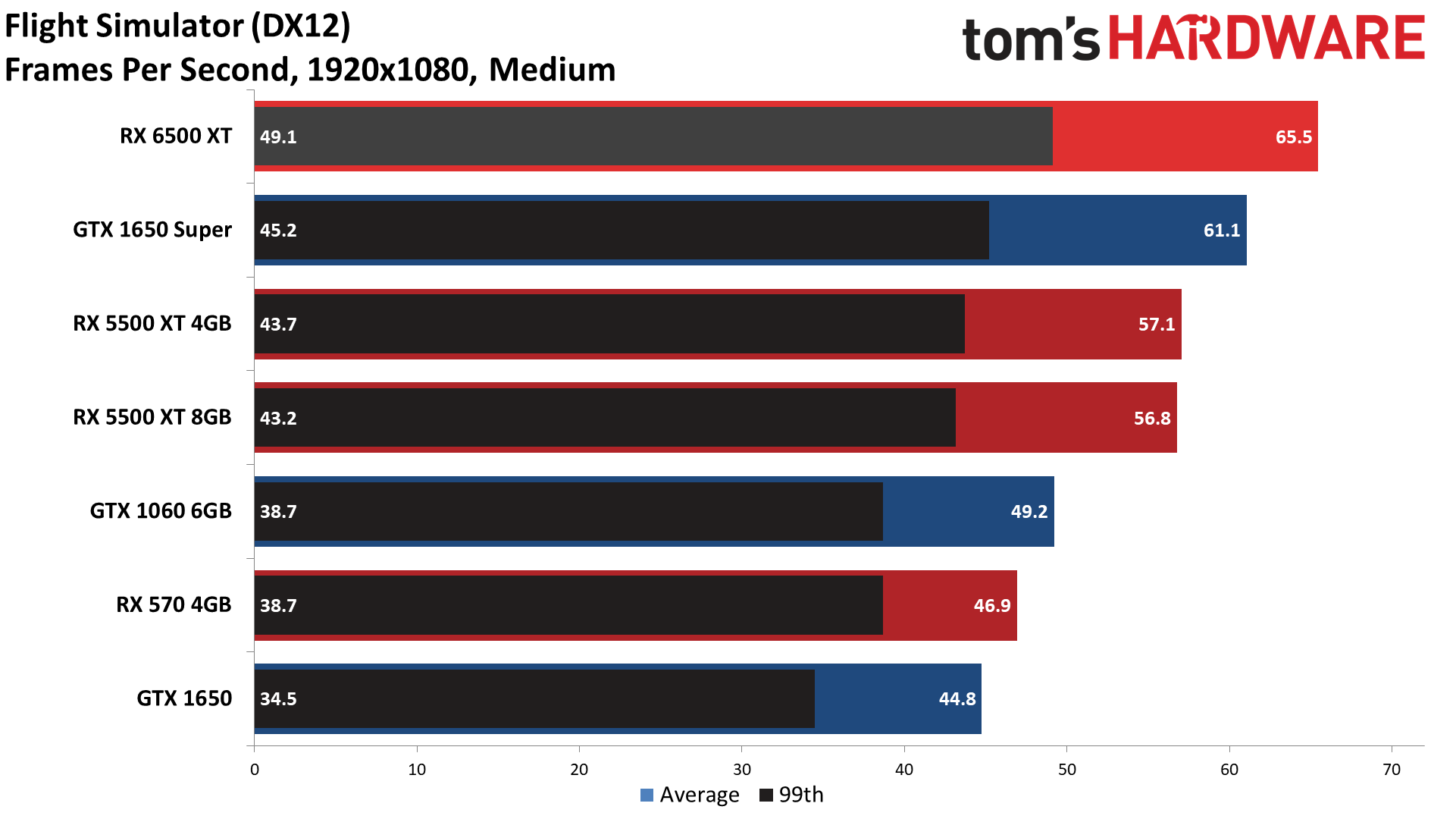

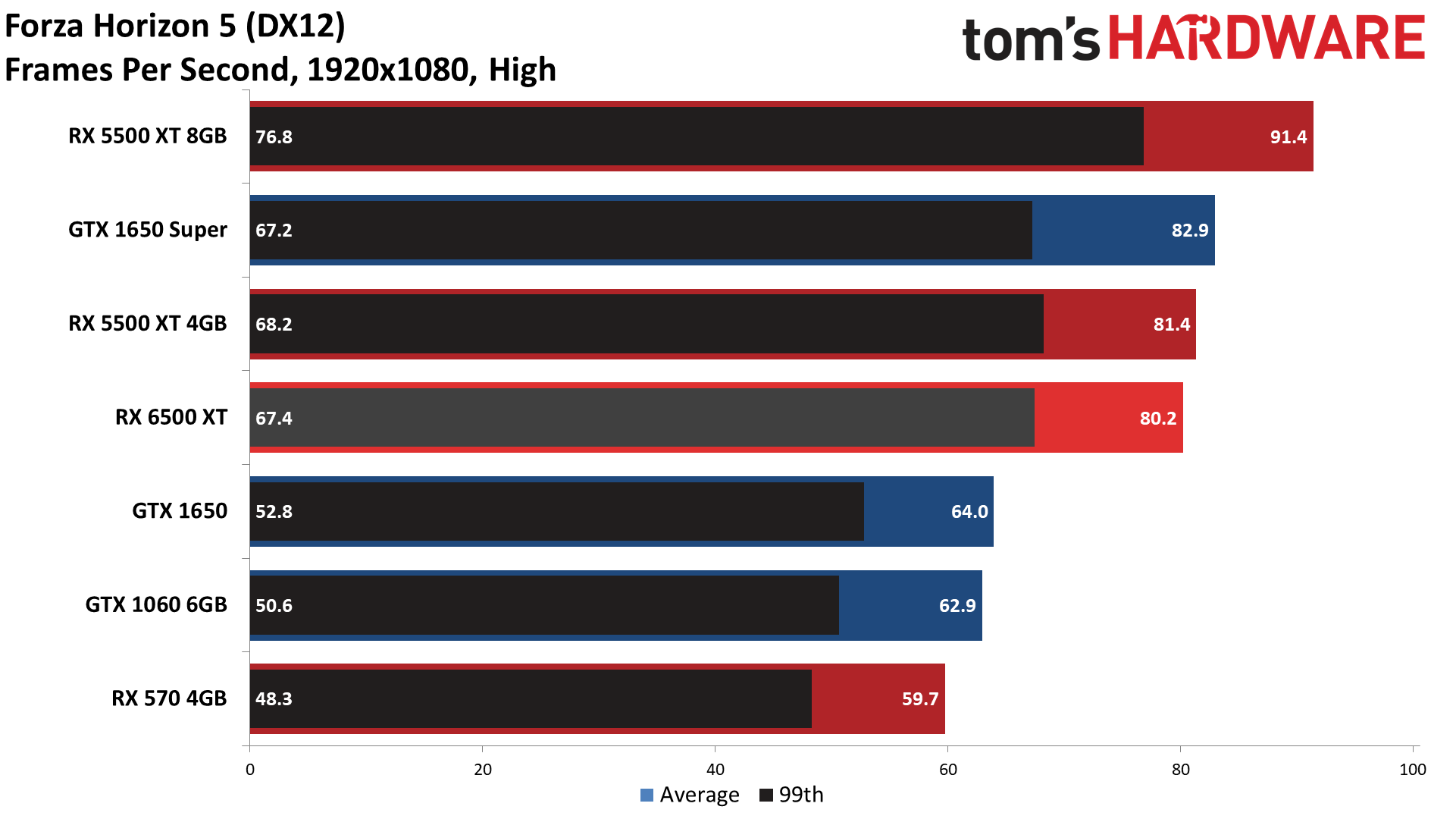

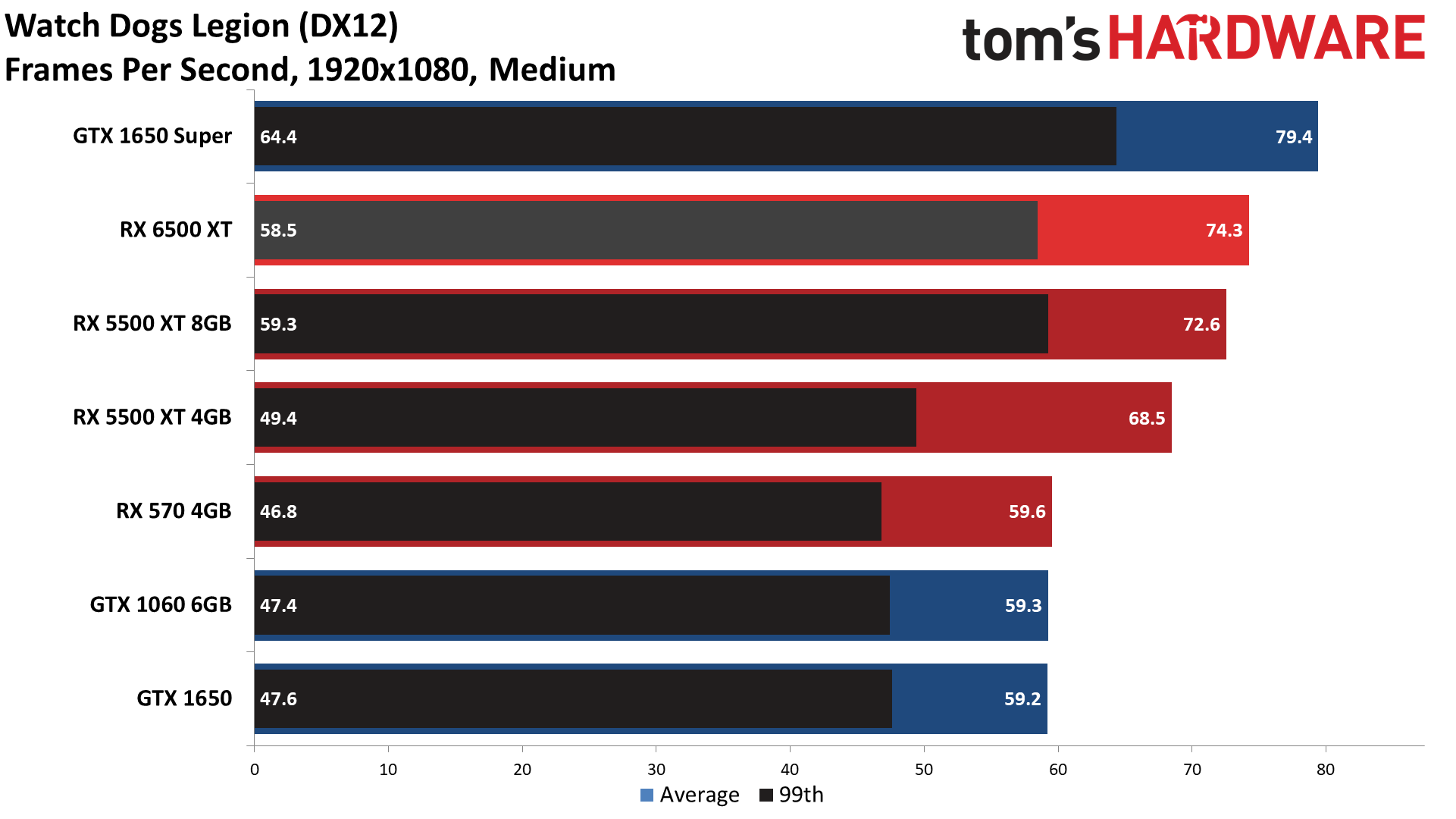

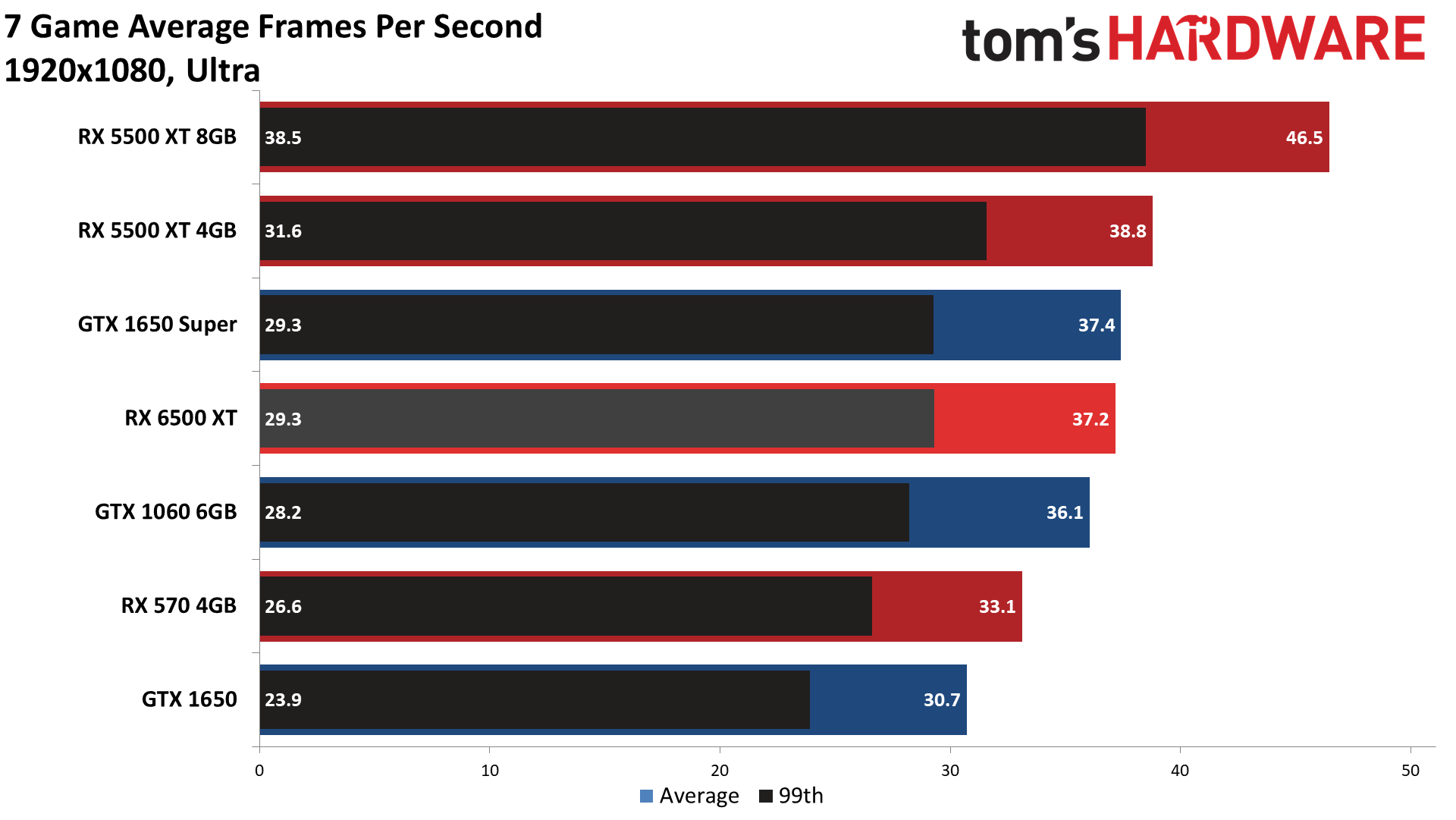

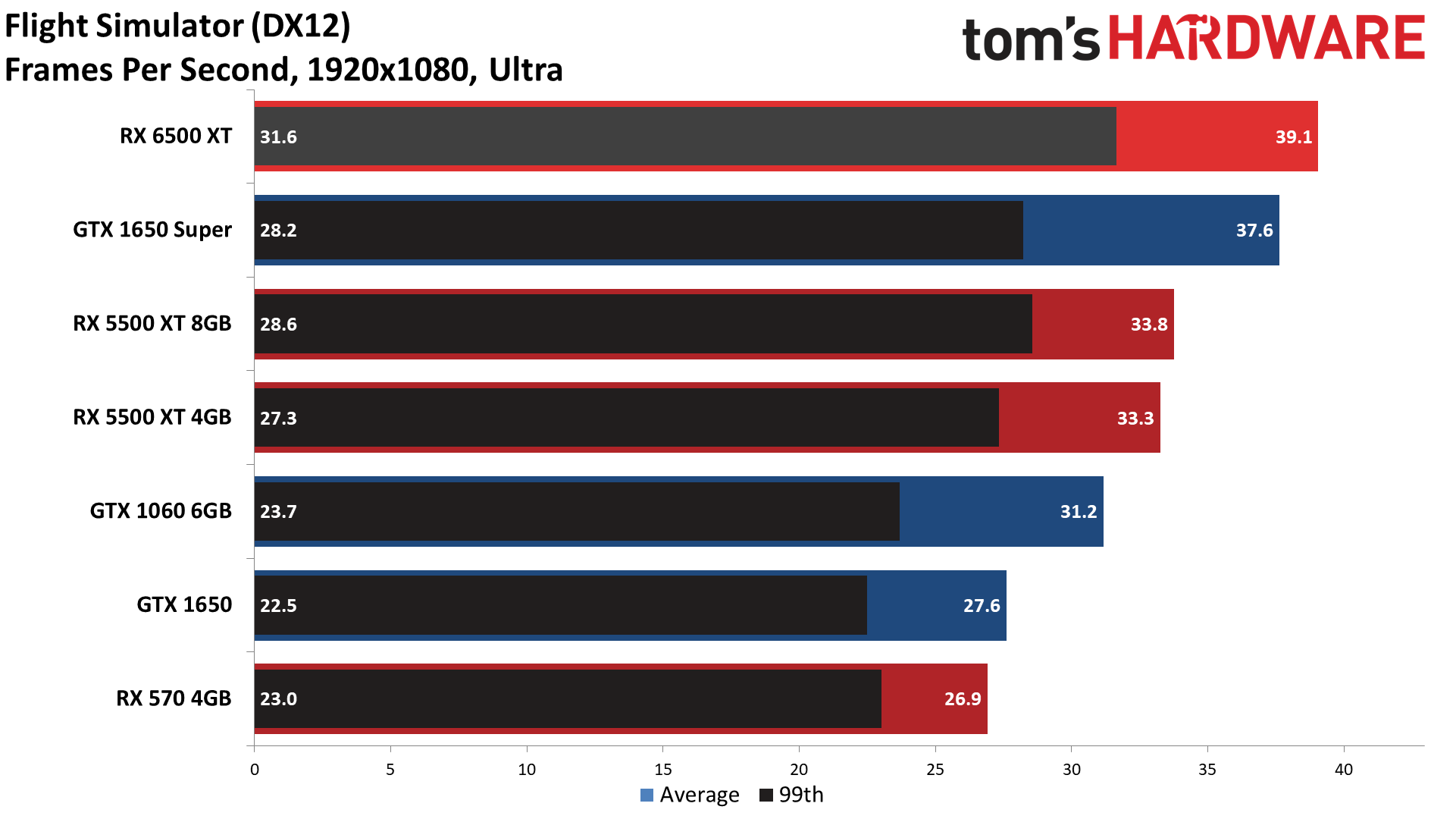

Considering the relatively puny 64-bit memory interface — something we normally only see on ultra-budget cards like the GT 1030 or Radeon 530 — the RX 6500 XT actually turns in a respectable showing. Overall, the RX 6500 XT averaged 77 fps across our new test suite. Except, it effectively tied the GTX 1650 Super, which is probably why AMD's own comparisons pointed to the significantly slower GTX 1650.

AMD provided an extensive list of its own testing that showed the RX 6500 XT outperforming the RX 570 4GB by an average of 24%. We overlapped on five of the seven games in our test suite (Flight Simulator and Forza Horizon 5 being the two extras), but we tested with a different CPU, RAM, and motherboard, and our settings didn't match up in every case, either. Just looking at those five games, AMD showed the 6500 XT leading by 25%, while our results showed the RX 6500 XT leading by 20%, which is close enough given the other differences in testing procedures and hardware.

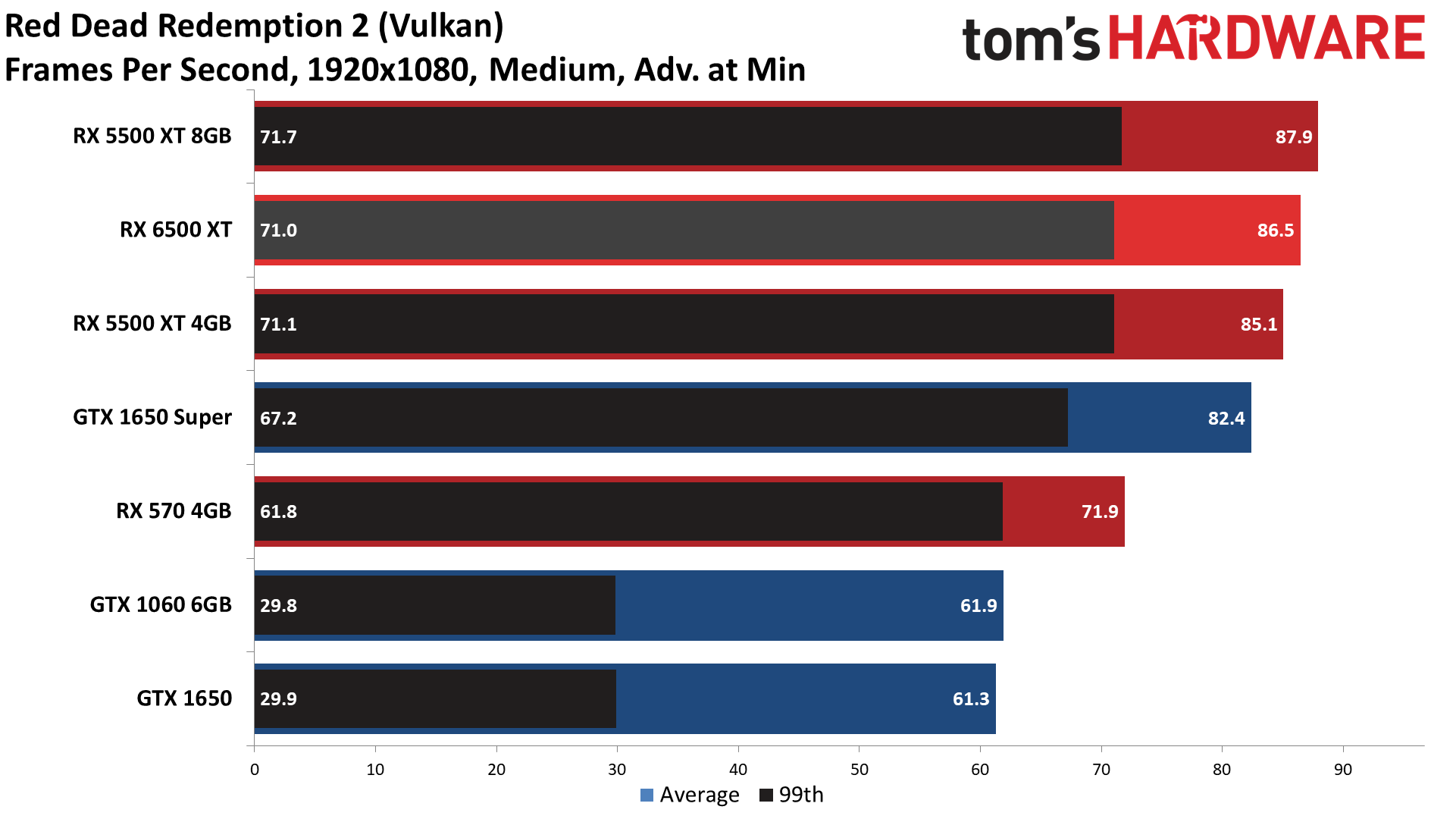

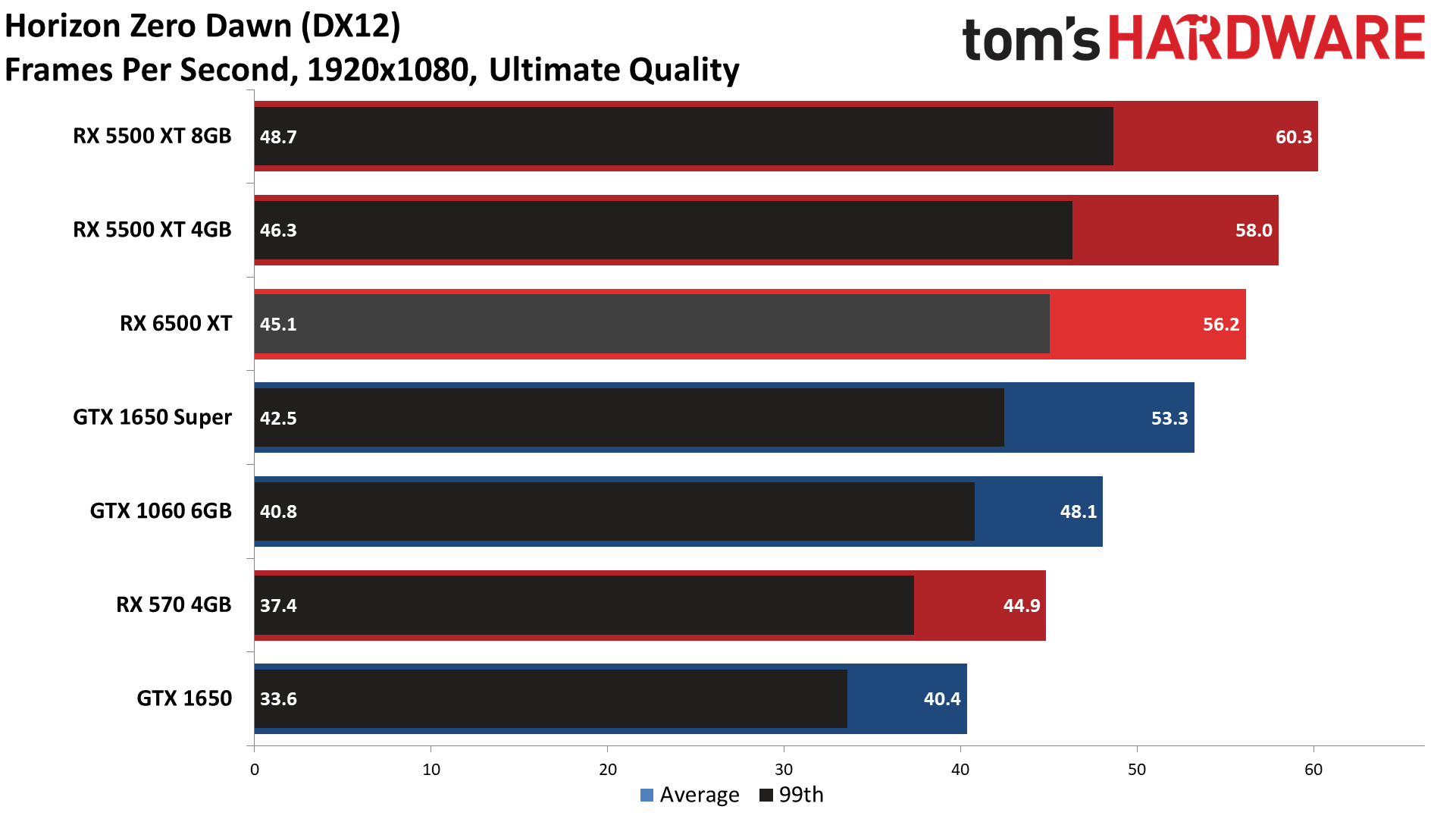

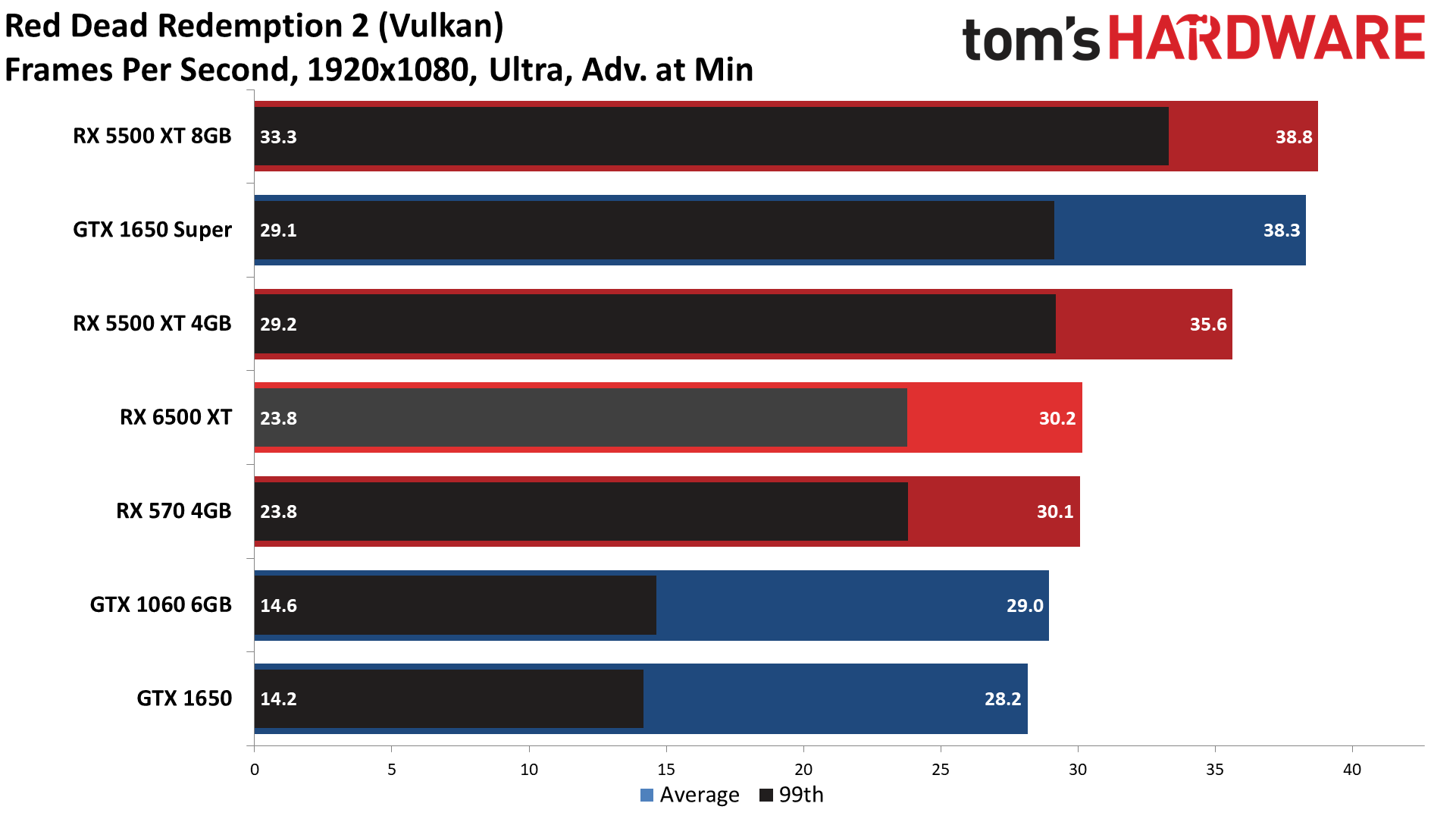

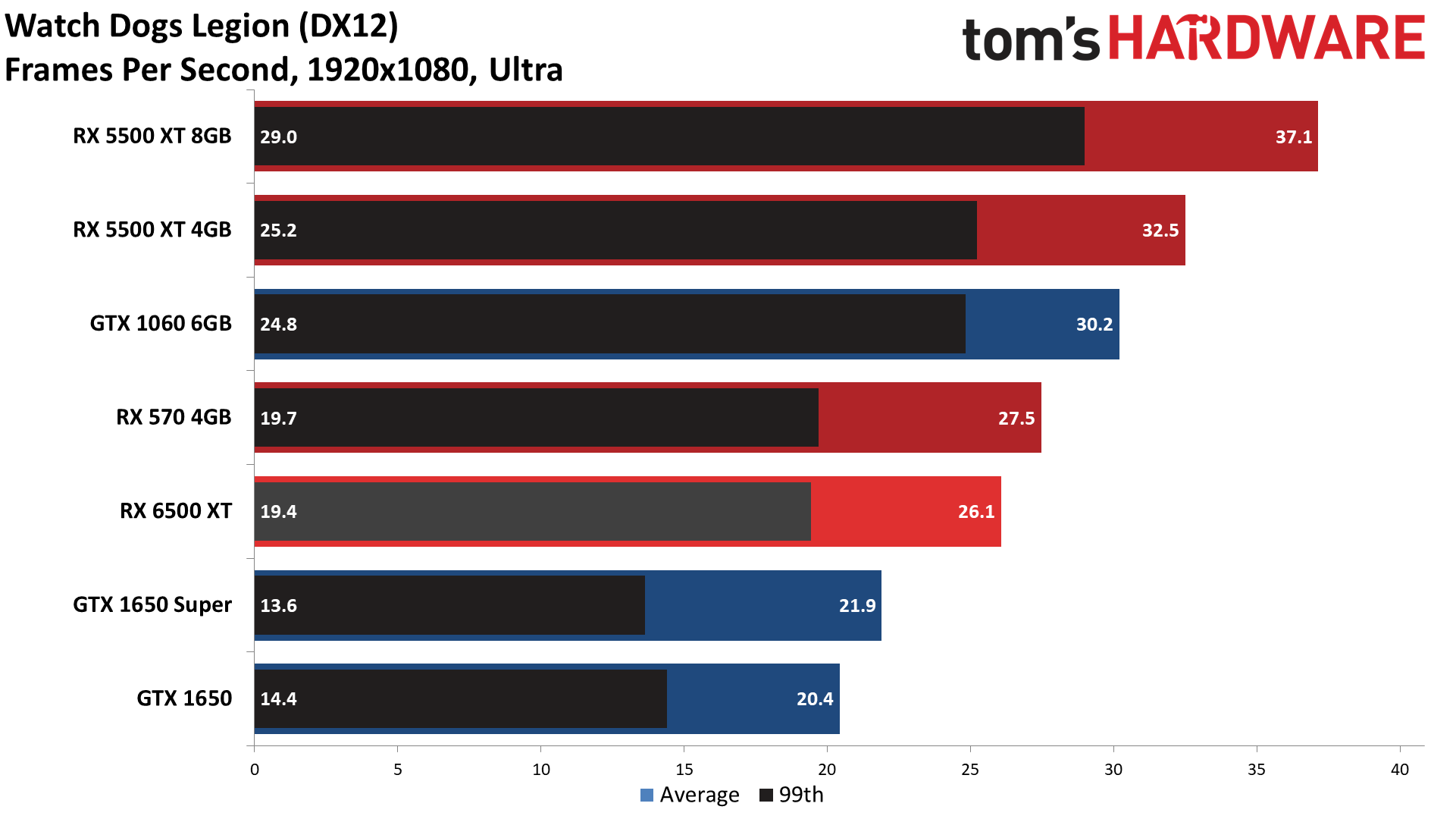

Looking at other potential matchups starts to show the weaker aspects of the RX 6500 XT, however. As noted, it's basically tied with the GTX 1650 Super at 1080p medium/high gaming performance. We tested with a few other cards, and it's actually slower than the previous generation AMD RX 5500 XT 8GB and tied with the 5500 XT 4GB that it's supposed to replace. Oops.

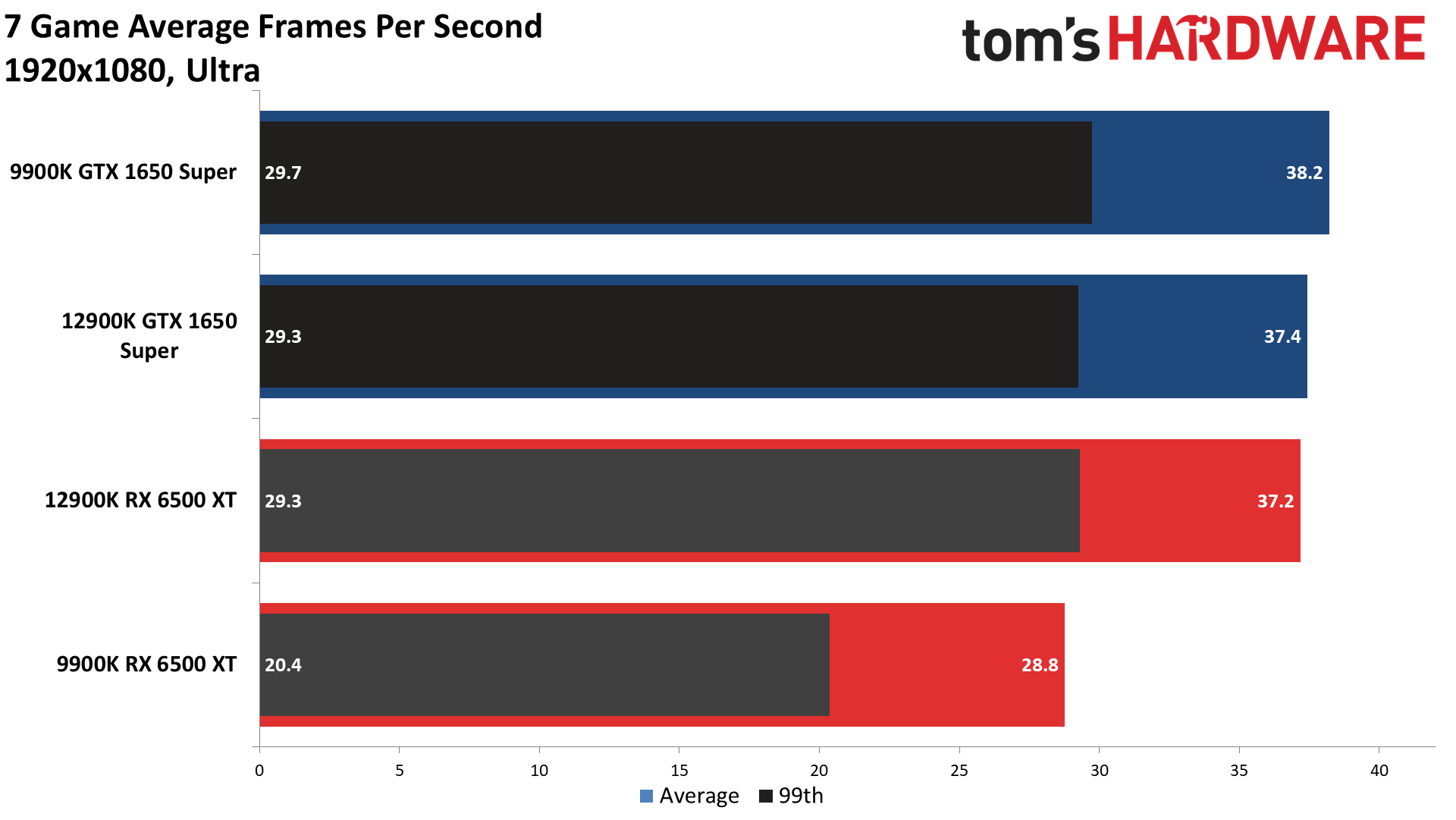

But this is all testing at 1080p medium/high settings, intentionally selected to avoid going beyond the 4GB VRAM found on several of the GPUs in our test lineup. So what happens at 1080p "ultra" settings? Brace yourself, because this isn't going to be pretty.

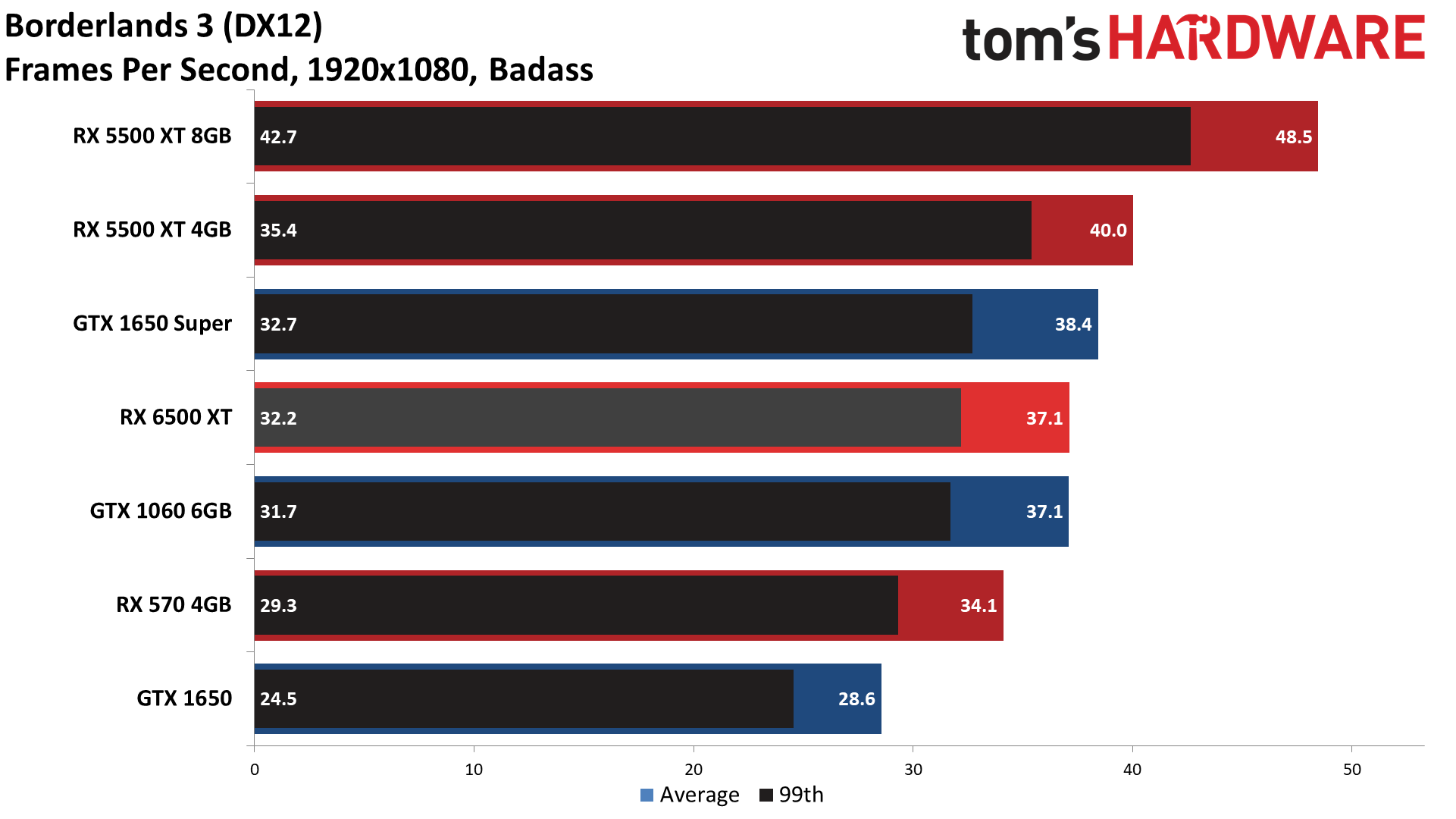

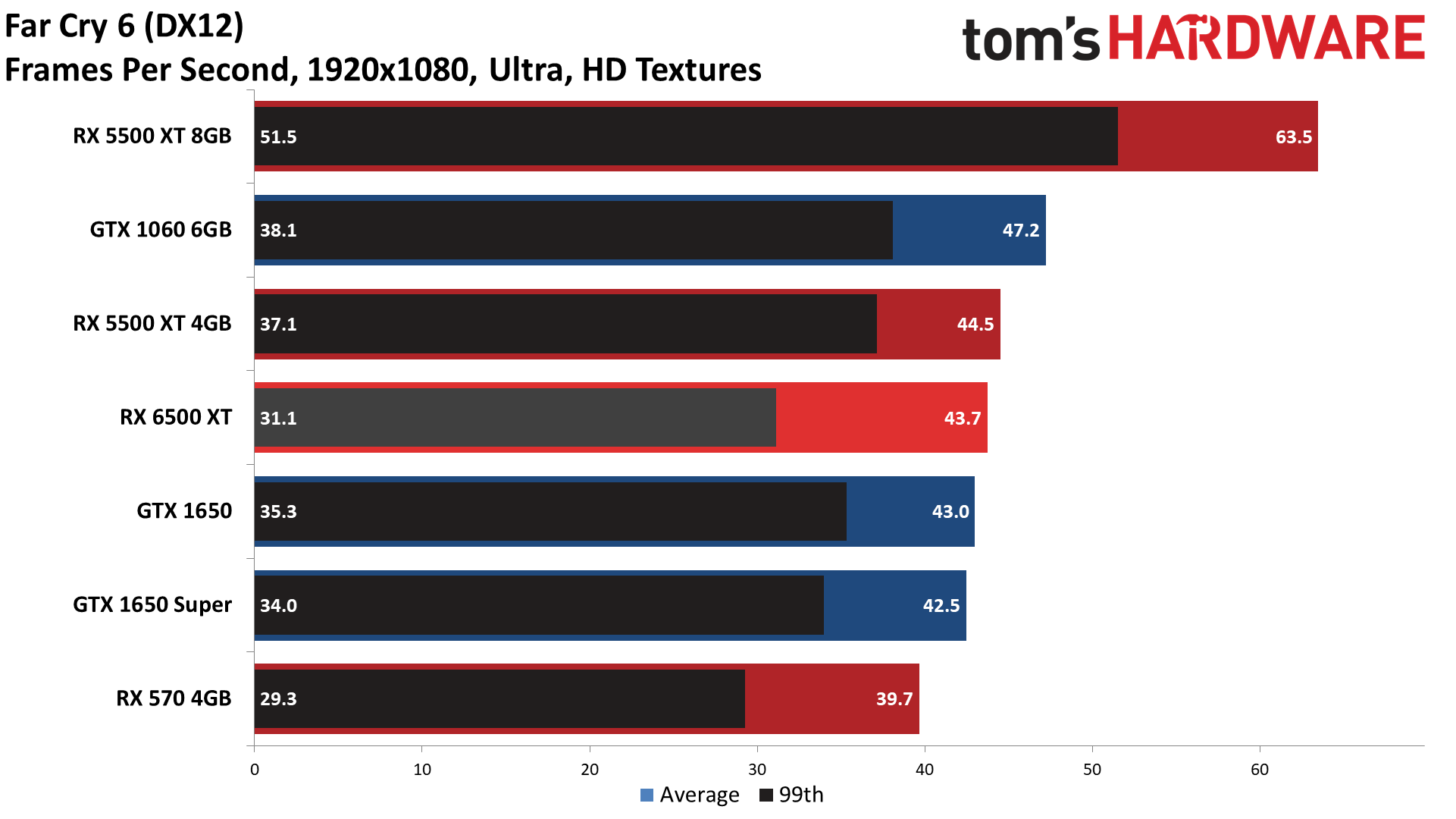

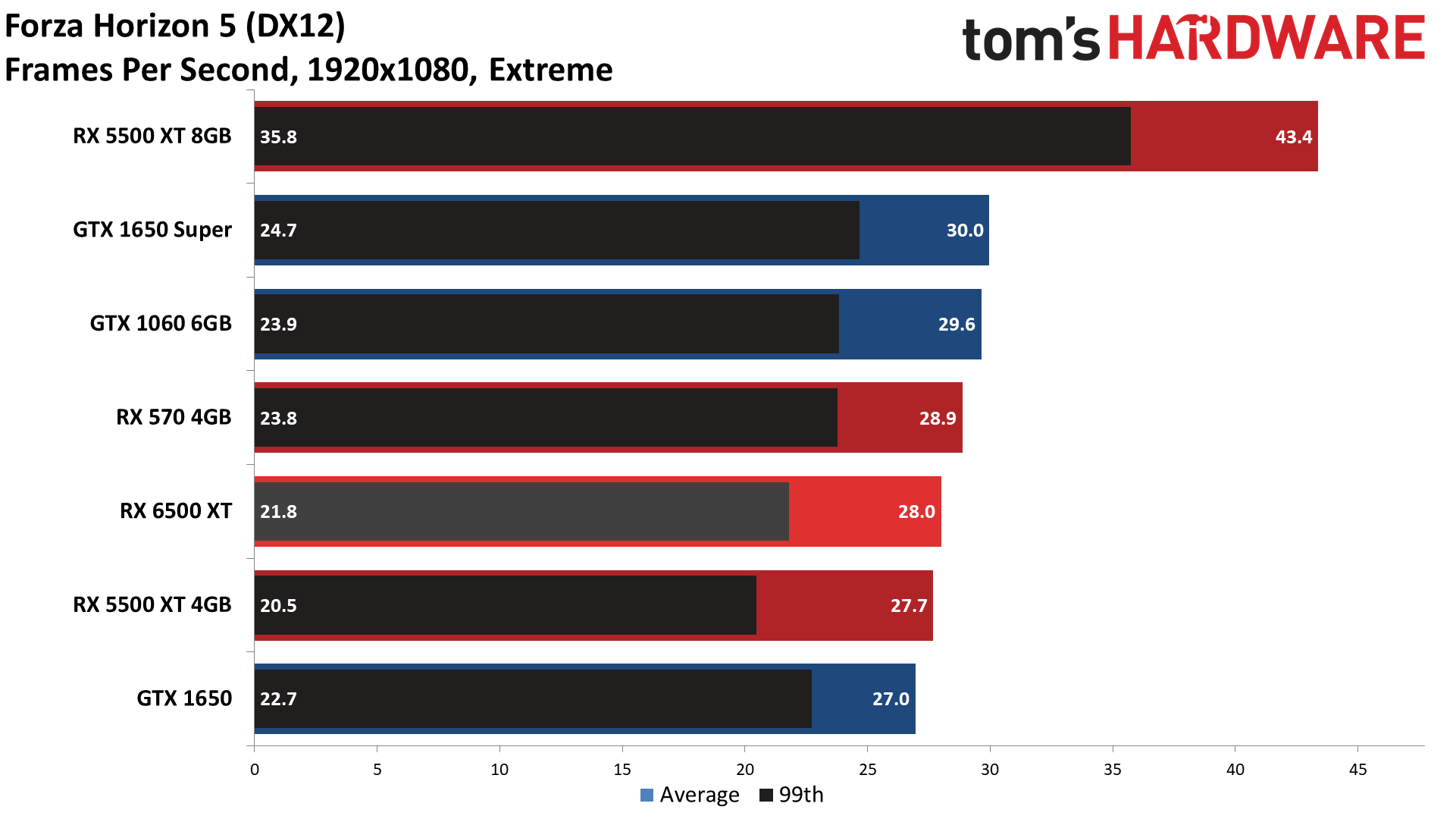

Exceeding the memory capacity of a graphics card can really tank performance, and that's precisely what happens with our 1080p ultra test suite, at least in some of the games. Both the RX 6500 XT and GTX 1650 Super averaged just 37 fps this time, less than half of their 1080p medium results. The good news is that the 4GB cards are generally penalized equally, but the bad news is that cards with 6GB or 8GB VRAM now look much better.

The RX 5500 XT 8GB card, which originally launched at the same $199 price point as today's RX 6500 XT, was 25% faster than the Navi 24 card. Perhaps even more telling is that the GTX 1060 6GB, which came out in mid-2016 as a $250 card, ended up just barely behind AMD's newcomer, and there are a few games where it came out ahead.

The charts don't even tell the whole story, as we ran each benchmark multiple times. Not shown are the numerous results where a game would perform very poorly on some runs — this was particularly common with Far Cry 6, but we had some unusually low runs on Flight Simulator, Forza Horizon 5, Horizon Zero Dawn, and Watch Dogs Legion as well. The solution was usually to restart the game and hope things ran better, and we used these "better" results for our 1080p ultra charts. Basically, 4GB cards are absolutely the bare minimum for running many games acceptably these days.

To be fair, you're not going to want to play with maxed-out settings in more demanding games on these GPUs, but that's mostly because it's simply not a viable option. If you had a different GPU, or if the RX 6500 XT had included a 96-bit interface and 6GB of VRAM, you'd be able to improve the image quality without hurting performance quite so much.


What About RX 6500 XT Ray Tracing?

With the overhaul of our gaming test suite and hardware, we're also in the midst of retesting all ray tracing capable GPUs on a new test suite. That's not ready yet, because collecting performance results from a larger selection of GPUs simply takes a lot of time, but we did want to show the performance of just the RX 6500 XT. With only 16 ray accelerators and only 4GB of VRAM, the 6500 XT really doesn't have a lot of headroom left for ray tracing.

Sure, simpler forms of RT, like those AMD has promoted in games like Dirt 5, Far Cry 6, Riftbreaker, and World of Warcraft, might run okay, but by the same token, the RT effects in those games don't really make the visuals look much better. We've elected to use "medium" settings with ray tracing enabled for this preview. We're testing with Bright Memory Infinite, Control, Cyberpunk 2077, Fortnite, Metro Exodus Enhanced, and Minecraft.

| Game | Settings | Avg fps | Min fps |
|---|---|---|---|
| Bright Memory Infinite | Normal | 7.5 | 2.4 |
| Control | Medium + DXR medium | Fail | Fail |
| Cyberpunk 2077 | Medium + DXR medium | 7.5 | 6.0 |
| Fortnite | Medium + DXR low | 41.4 | 23.3 |
| Metro Exodus Enhanced | High + medium shaders | 15.9 | 10.0 |
| Minecraft | RT render distance 8 chunks | 13.5 | 11.7 |

Much like the 1080p ultra settings caused the RX 6500 XT to struggle in quite a few games, enabling ray tracing generally isn't advisable. Of course, it's not the end of the world to leave RT off, as most games don't look so much better with RT enabled that you'd feel like you're missing out, but it does go back to some of the design decisions. Still...

Of the six games with ray tracing that we tested — and admittedly, we used more demanding RT games, as otherwise the effects are largely wasted — only one qualified as even remotely playable: Fortnite. And even it wasn't without trouble. We initially tried running with "medium" RT reflections, but doing so caused the game to crash with a driver failure message from Radeon Settings. Meanwhile, using "low" RT reflections mostly seems to have broken the rendering of reflections, or else things just don't look good at such a low setting.

Control informed us that we needed a DXR-capable graphics card. Metro Exodus Enhanced Edition ran okay, though it didn't look as nice since we opted for the "high" preset in the benchmark utility and then turned the shaders down to medium. Both it and Minecraft only managed average framerates in the teens. And finally, Bright Memory Infinite and Cyberpunk 2077 both ran at single-digit fps.

AMD was brutal with its surgical cutting of the Navi 24 GPU, and yet it still left those 16 ray accelerators that are mostly just a checkbox feature. There's almost no point in putting RT hardware on a GPU this slow, and again, we'd rather have more memory, for example. There are almost certainly some edge use cases where the RT hardware can prove somewhat useful (e.g., in the professional space), but the Radeon brand would have benefitted from something a bit more balanced.

In a similar vein to the poor RT performance, we're not going to show 1440p ultra or 4K ultra test results, even though both will be factored into the overall score on our GPU benchmarks hierarchy (eventually). Not surprisingly, 1440p and especially 4K punish any GPU with only 4GB of VRAM. Some of the games in our test suite (Red Dead Redemption 2 and Horizon Zero Dawn) had rendering errors or refused to even run if we attempted to use such high settings. It wasn't unusual to see much higher variability between runs at 1440p ultra, and 4K ultra typically delivered frame rates in the single digits. Put simply, no one should be looking at the RX 6500 XT as a 1440p or 4K gaming solution.

PCIe Gen3 vs. Gen4 Tested

One area of potential interest is how the RX 6500 XT performs when it's not paired with the latest and greatest hardware. Now granted, we're not looking at an old and slow test bed, but we did run both the RX 6500 XT and the GTX 1650 Super through our new test suite on both our old (Core i9-9900K) and new (Core i9-12900K) test PCs. The results are... interesting.

First, let's talk about the RX 6500 XT. Performance on average dropped around 8% at 1080p medium. Looking at the individual charts (not included in the above gallery), we saw everything from a 26% drop (Borderlands 3) to an 8% increase (Horizon Zero Dawn). We were a bit time constrained and the differences in test bed hardware can potentially skew results, but at least on the surface it appears the slower PCIe speed had a noticeable impact.

That impact was felt a lot more at 1080p ultra settings, which exceeded the 4GB VRAM. That's to be expected, because going beyond your card's VRAM means pulling data over the PCIe bus, and a slower link there becomes much more painful. This time, the 9900K was on average 23% slower, with individual game results ranging from 4% slower (Horizon Zero Dawn) to as much as 35% slower (Borderlands 3 and Forza Horizon 5).

Flipping over to the GTX 1650 Super, which doesn't support PCIe Gen4 but which does have a full x16 interface, things are far less exciting. The 9900K actually outperformed the 12900K just slightly overall, with individual gaming results showing a +/-6% spread.

In other words, as a budget GPU the Radeon RX 6500 XT is far more likely to get paired with an existing budget system, and if that system doesn't support PCIe Gen4, performance will suffer, especially if you happen to exceed the 4GB VRAM.
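To put rough numbers on the link speeds involved, here is a back-of-the-envelope sketch. The per-lane rates (8 GT/s for Gen3 and 16 GT/s for Gen4, both with 128b/130b encoding) come from the PCIe specifications rather than from our testing, the function name is made up for illustration, and real-world throughput will be lower once protocol overhead is included:

```python
# Per-lane transfer rates in GT/s, taken from the PCIe specifications.
GT_PER_S = {3: 8.0, 4: 16.0}
# Gen3 and Gen4 both carry 128 payload bits in every 130 bits on the wire.
ENCODING_EFFICIENCY = 128 / 130


def pcie_gbytes_per_s(gen, lanes):
    # Theoretical one-direction throughput in GB/s, ignoring protocol overhead.
    return GT_PER_S[gen] * ENCODING_EFFICIENCY * lanes / 8


links = {
    "RX 6500 XT on Gen4 (x4)": pcie_gbytes_per_s(4, 4),
    "RX 6500 XT on Gen3 (x4)": pcie_gbytes_per_s(3, 4),
    "GTX 1650 Super on Gen3 (x16)": pcie_gbytes_per_s(3, 16),
}

for label, gbytes in links.items():
    print(f"{label}: {gbytes:.1f} GB/s")

# On-card memory bandwidth of the RX 6500 XT, from the spec table.
VRAM_GBYTES_PER_S = 144
ratio = VRAM_GBYTES_PER_S / links["RX 6500 XT on Gen3 (x4)"]
print(f"VRAM is about {ratio:.0f}x faster than the Gen3 x4 link")
```

That works out to roughly 7.9 GB/s for the RX 6500 XT on a Gen4 platform and 3.9 GB/s on Gen3, against about 15.8 GB/s for an x16 Gen3 card like the GTX 1650 Super. Anything that spills out of the 4GB of VRAM has to squeeze through that much narrower pipe.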

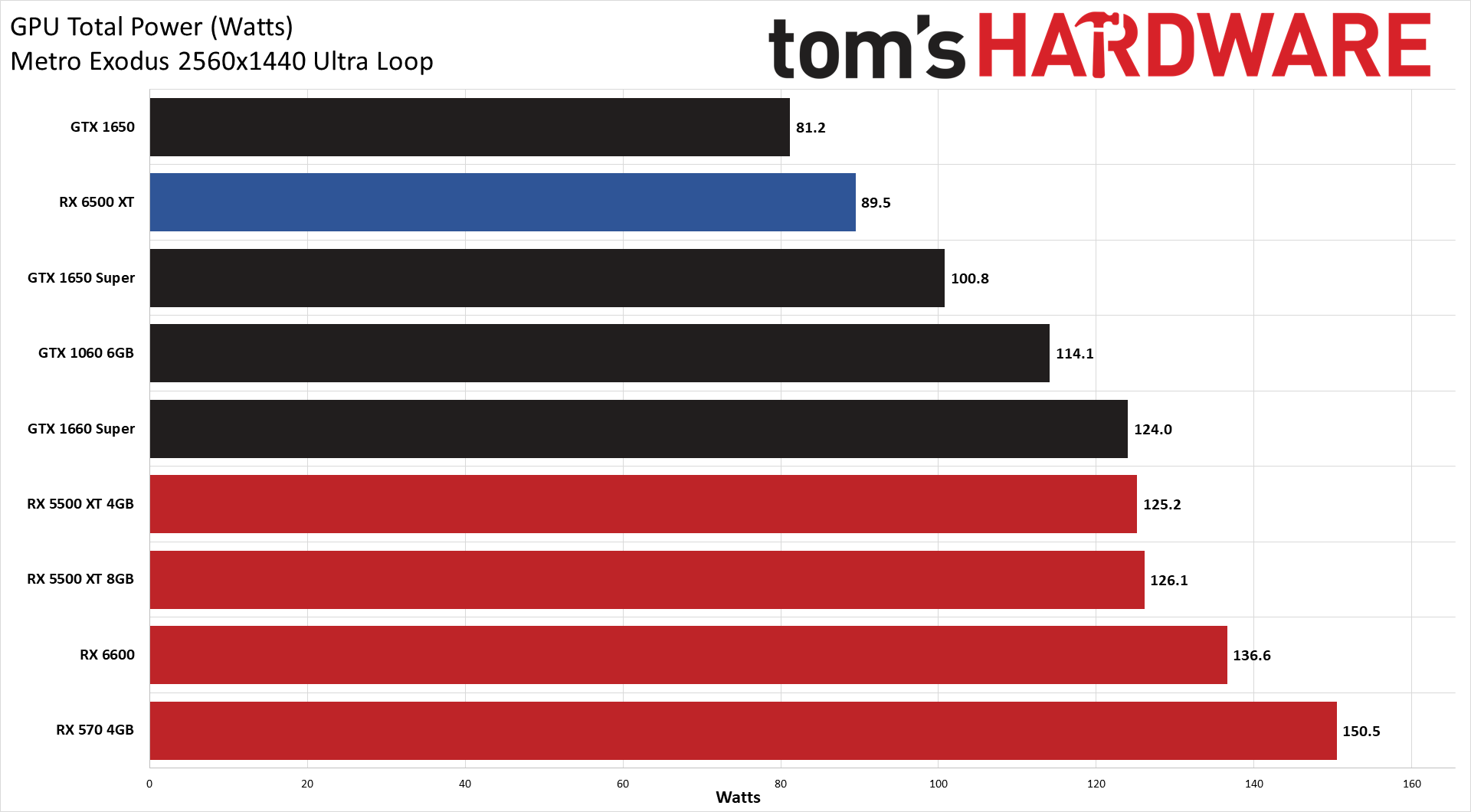

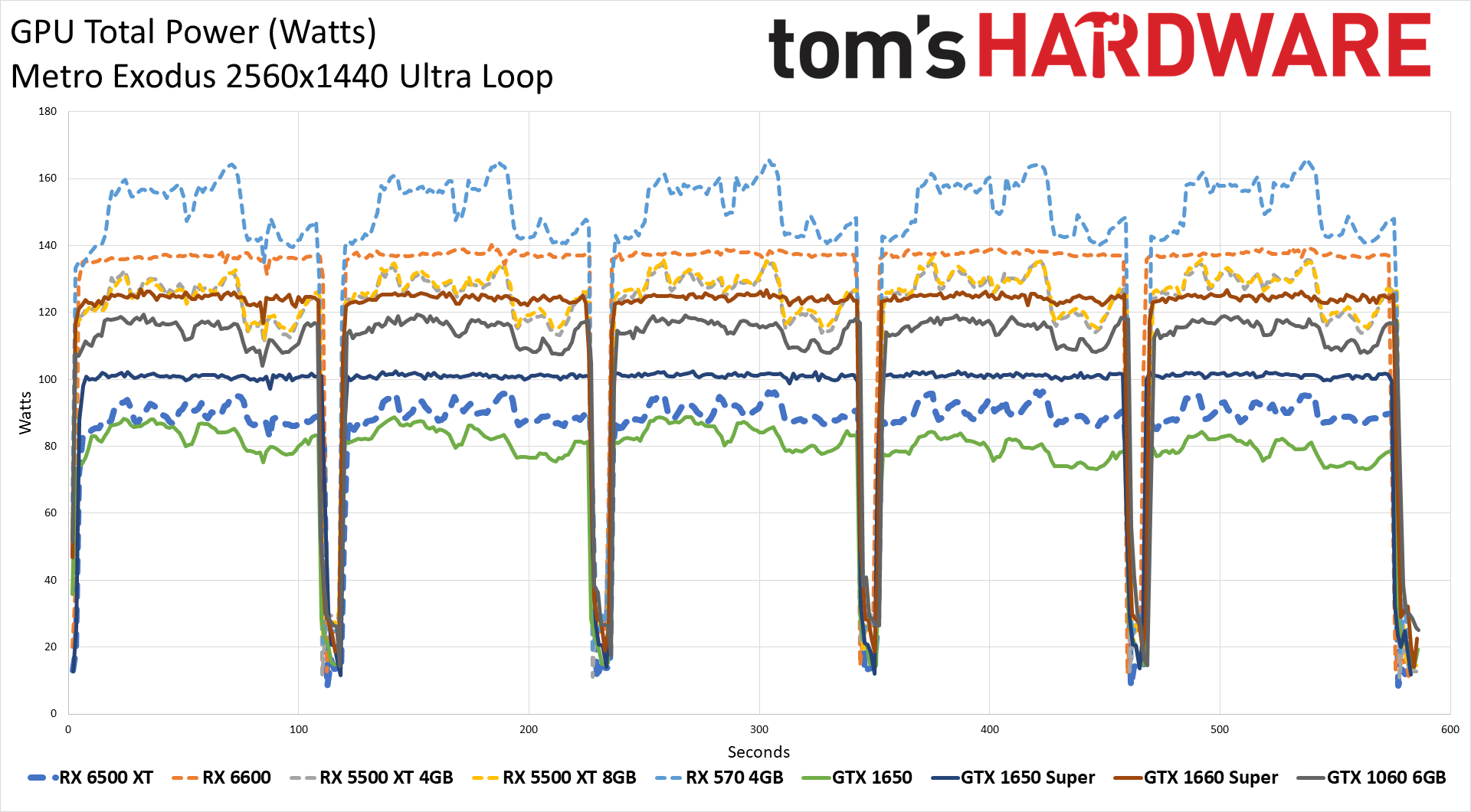

Radeon RX 6500 XT Power, Temps, Noise, Etc.

One aspect of the RX 6500 XT that doesn't seem to make much sense is the power requirements. For example, the Radeon RX 6600 only needs 132W of power and performs quite a bit better than the RX 6500 XT, but the 6500 XT still has a reference board power rating of 107W, and factory overclocked cards like the XFX model we're using apparently bump that up to 120W. That means, at least in theory, AMD only shaved 12W from the power use of the RX 6600 while cutting performance roughly in half. Yeah, that's some nasty diminishing returns.
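The diminishing returns are easy to quantify on paper. The sketch below is only illustrative: it uses the board power ratings quoted above and treats "roughly in half" as exactly 0.5, which is our rounding and not a measured result.

```python
# Board power ratings quoted in the review. Relative performance is the
# rough "cut in half" estimate from the text, not a benchmark result.
cards = {
    "RX 6600": {"board_power_w": 132, "relative_perf": 1.0},
    "RX 6500 XT (factory OC)": {"board_power_w": 120, "relative_perf": 0.5},
}


def perf_per_watt(card):
    return card["relative_perf"] / card["board_power_w"]


baseline = perf_per_watt(cards["RX 6600"])
for name, card in cards.items():
    relative = perf_per_watt(card) / baseline
    print(f"{name}: {relative:.0%} of the RX 6600's performance per watt")
```

Under those assumptions, the factory overclocked RX 6500 XT would deliver a little over half the performance per watt of the RX 6600, at least going by the rated numbers.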

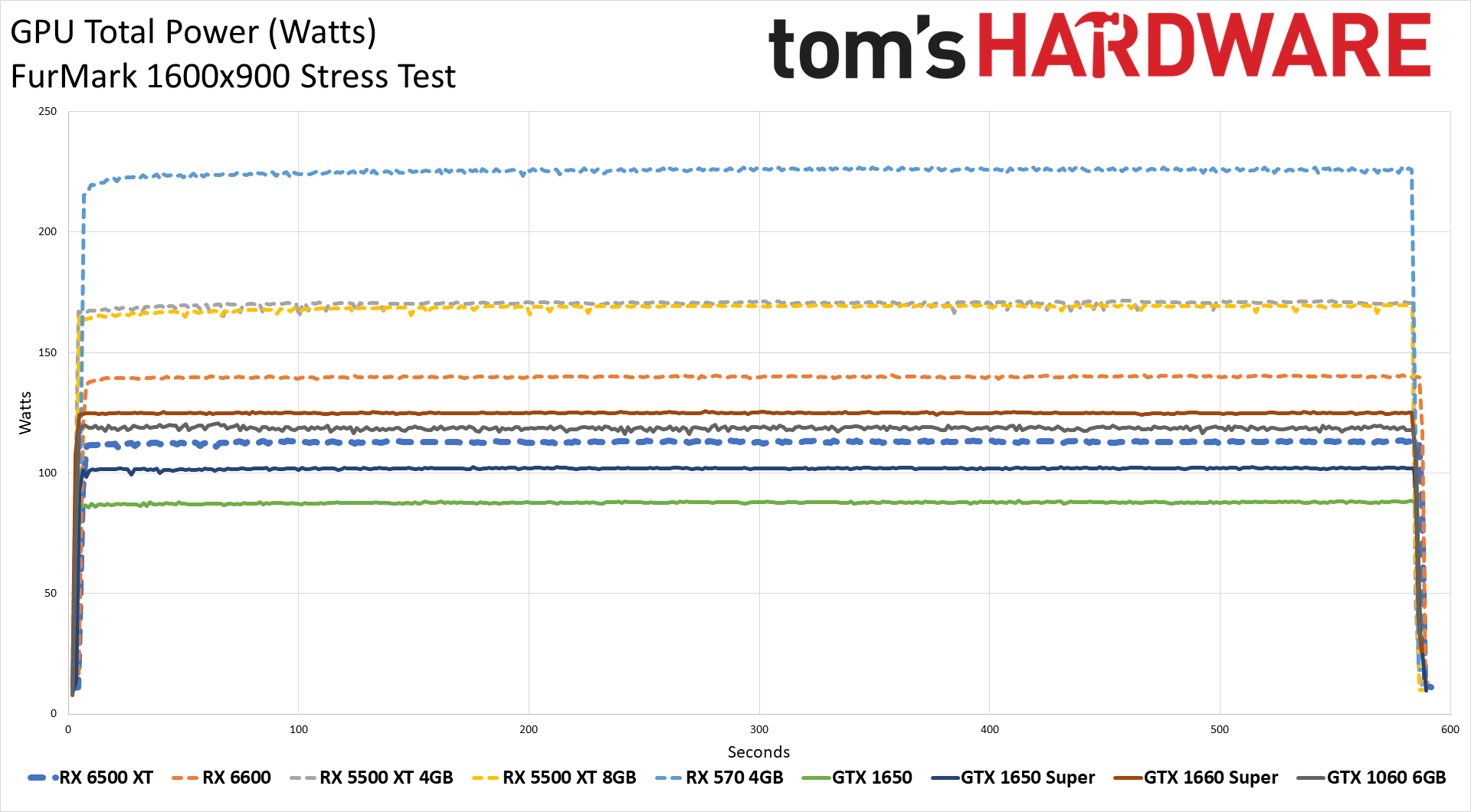

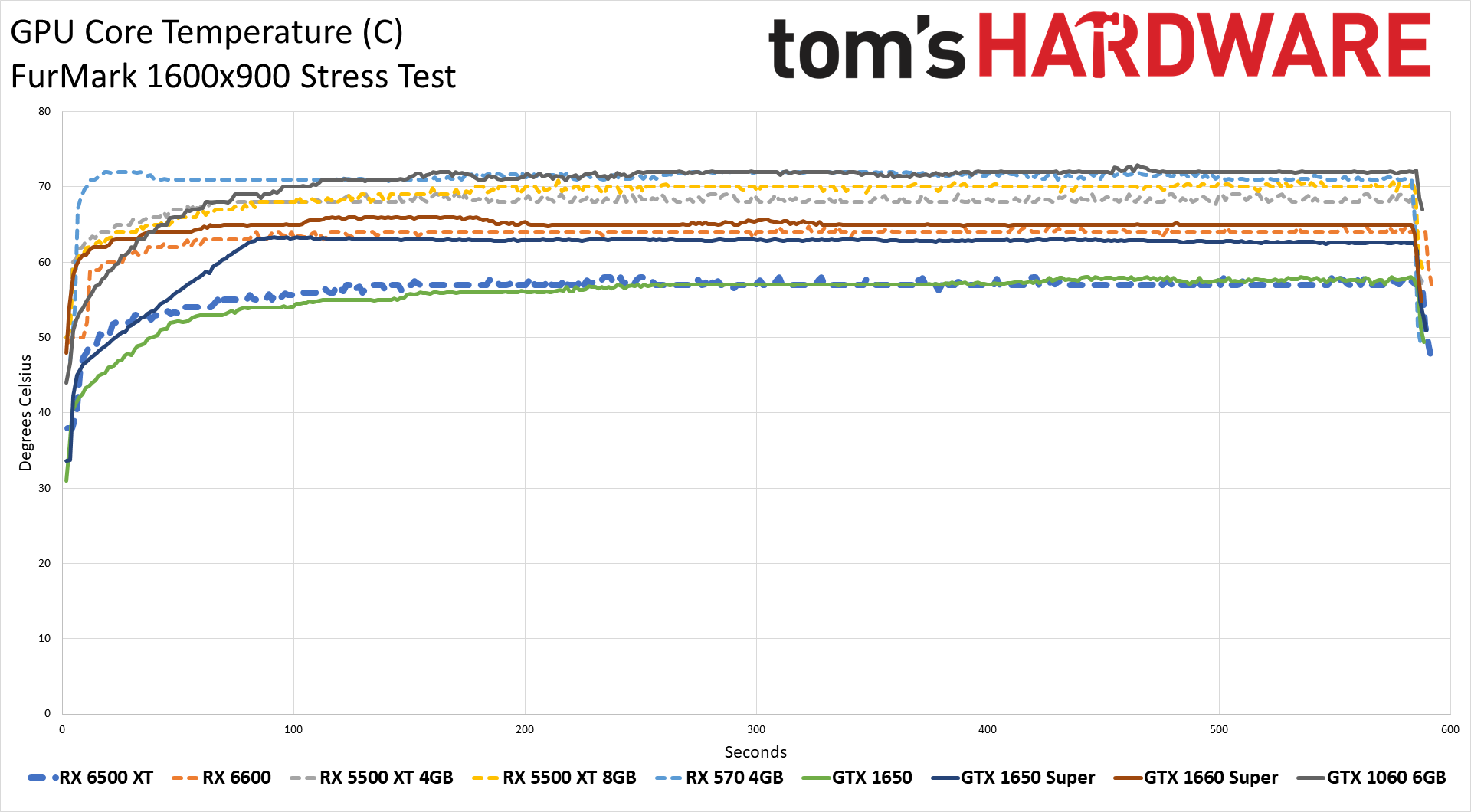

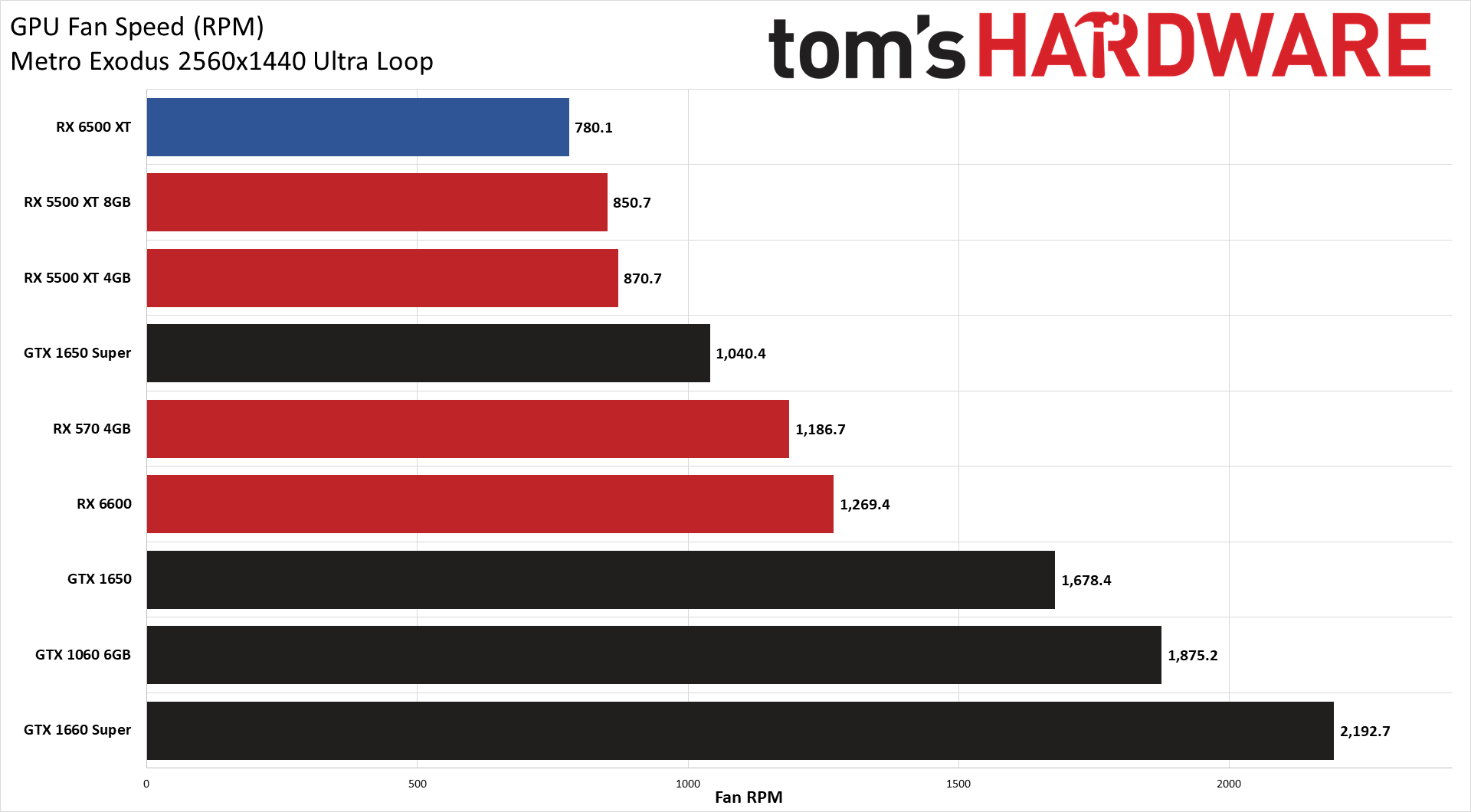

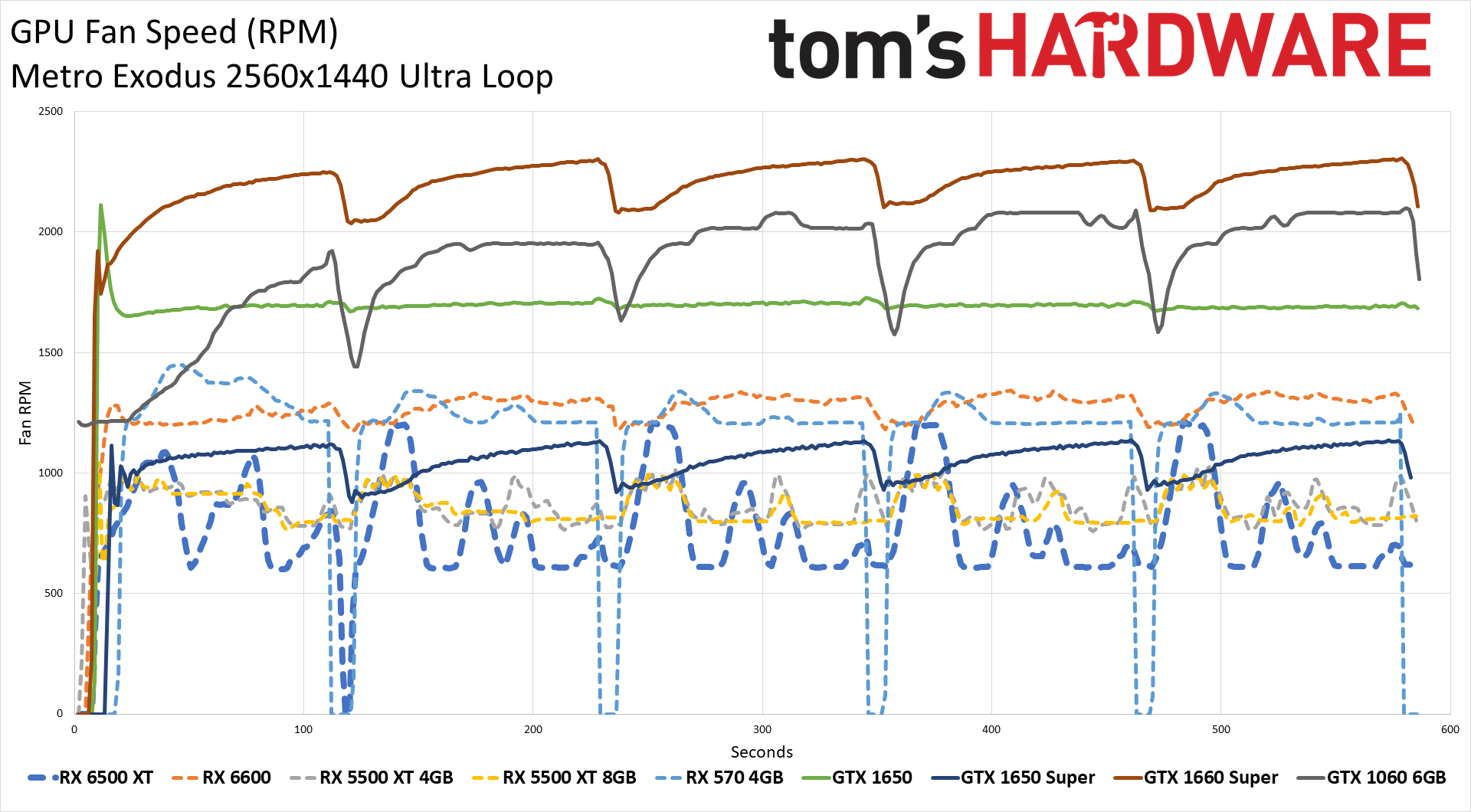

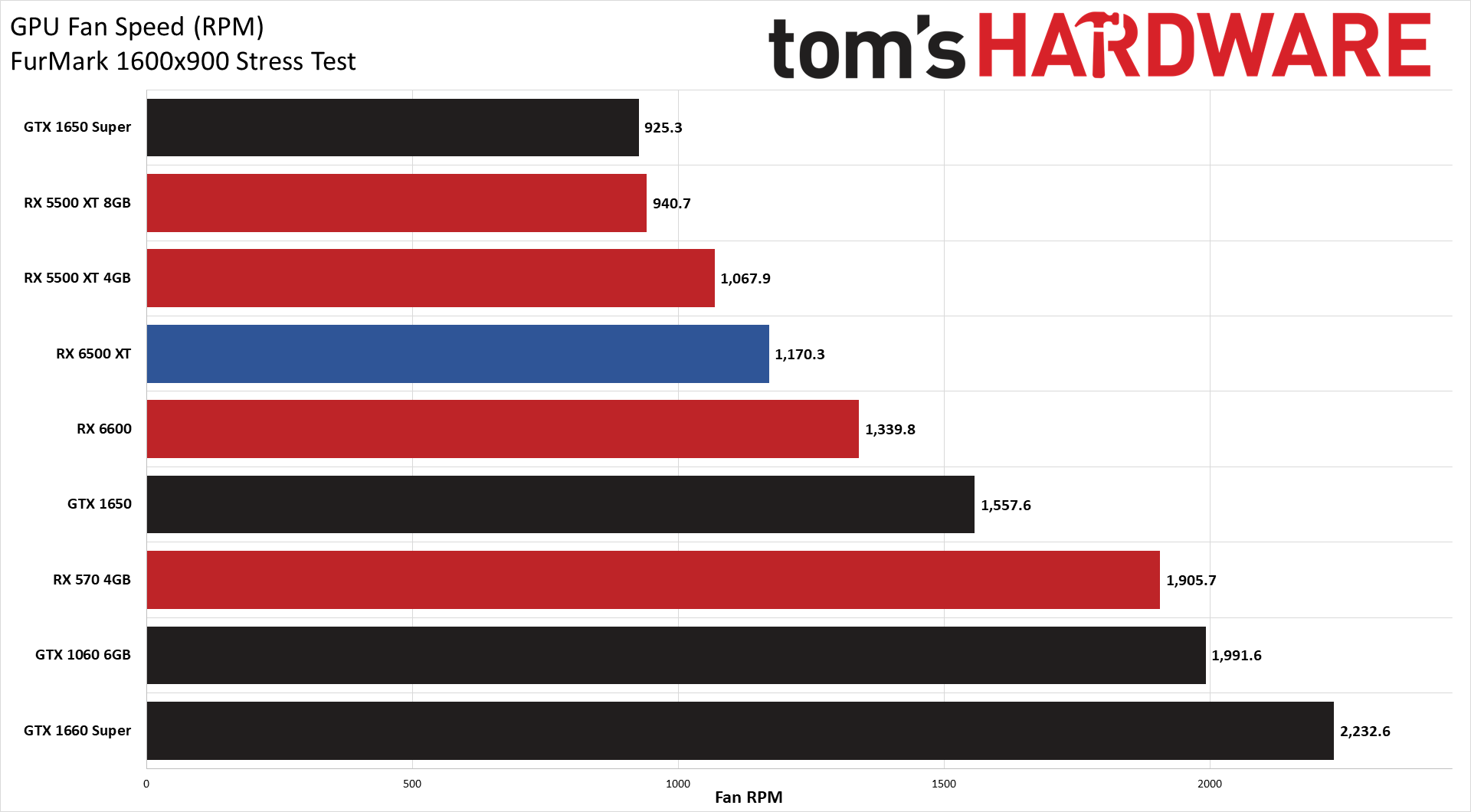

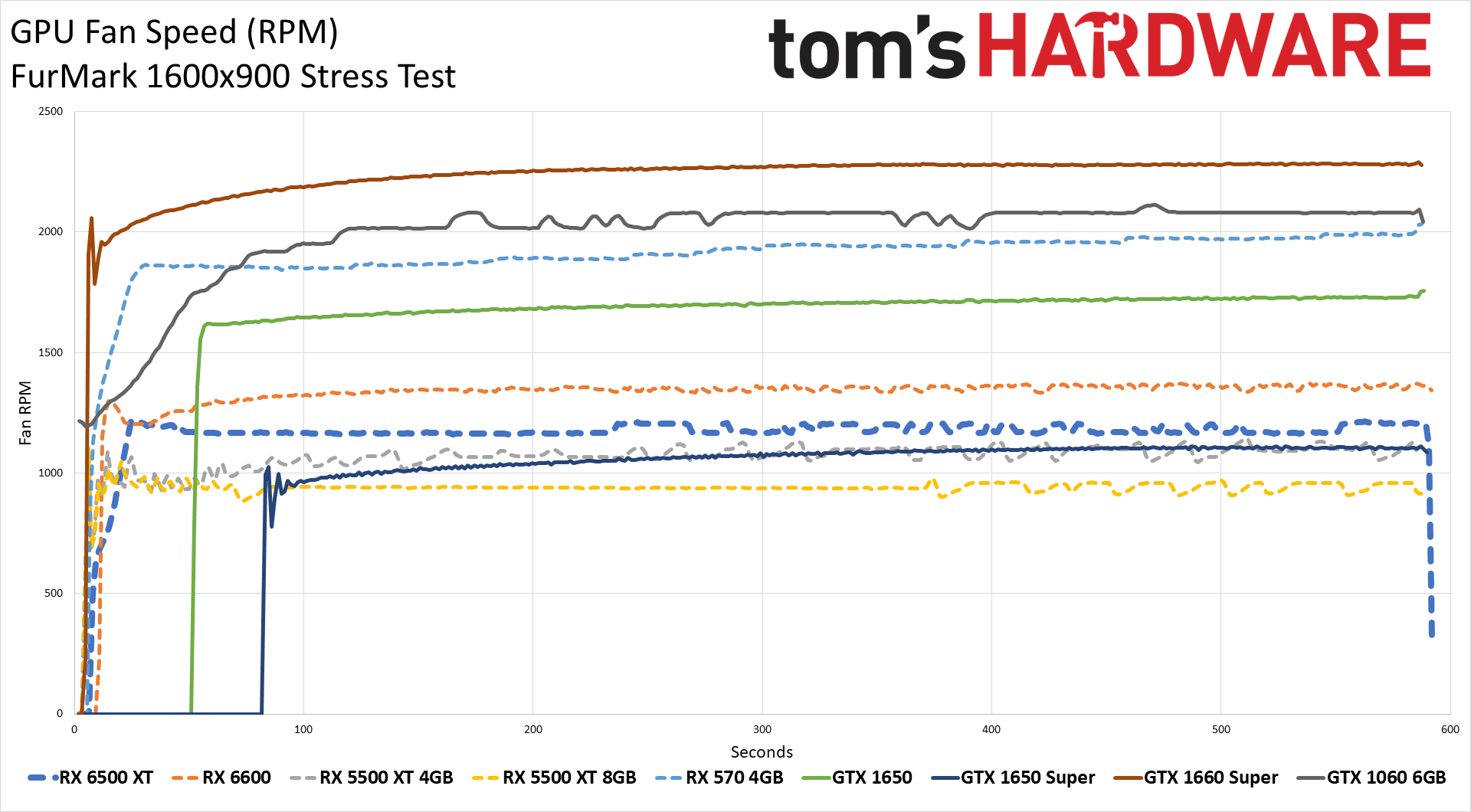

That's only in theory, though, so let's see how things look with our Powenetics testing equipment to measure in-line GPU power consumption and other aspects of the cards. We collect data while running Metro Exodus at 1080p/1440p ultra (whichever uses more power) and run the FurMark stress test at 1600x900. Our test PC remains the same old Core i9-9900K as we've used previously, as it's not a power bottleneck at all.

Power use during the Metro Exodus benchmark loop was only 90W on average, peaking at 96W. That's quite a bit less than the official board power, but it's probably due to the use of Metro Exodus at settings that basically go beyond what the card can really handle. FurMark comes in at 113W average, with a peak of 114W, which looks to coincide with the power rating a bit better.

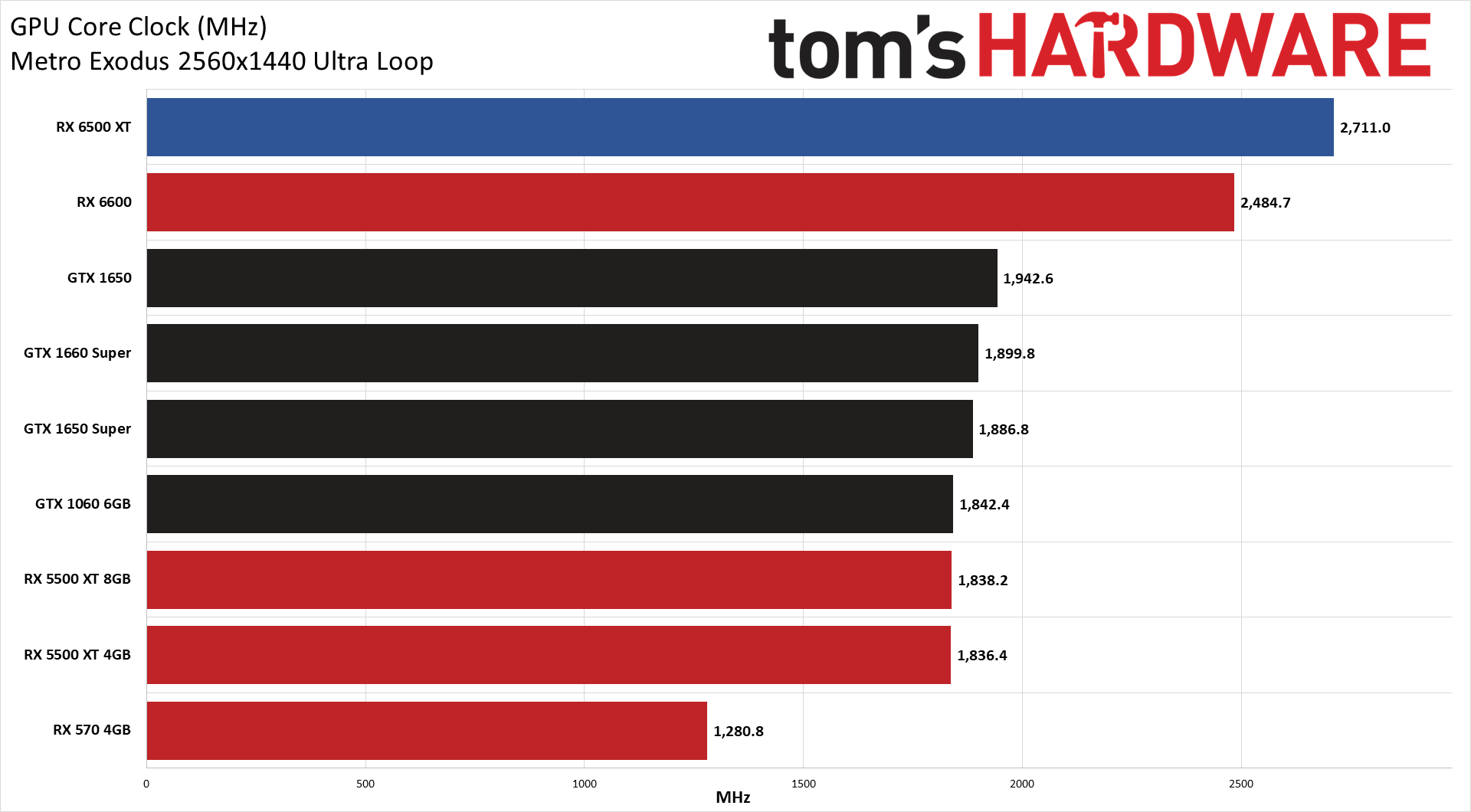

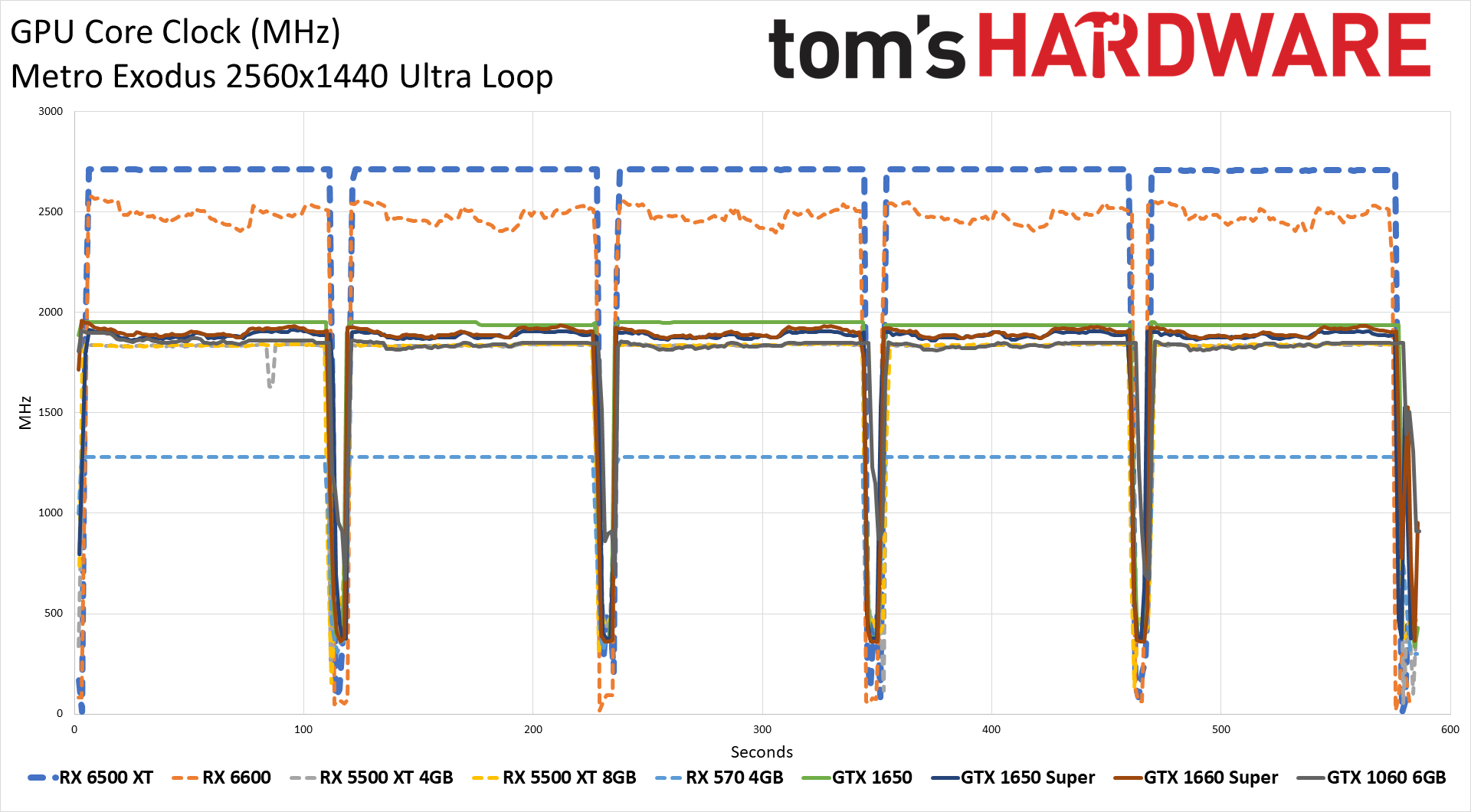

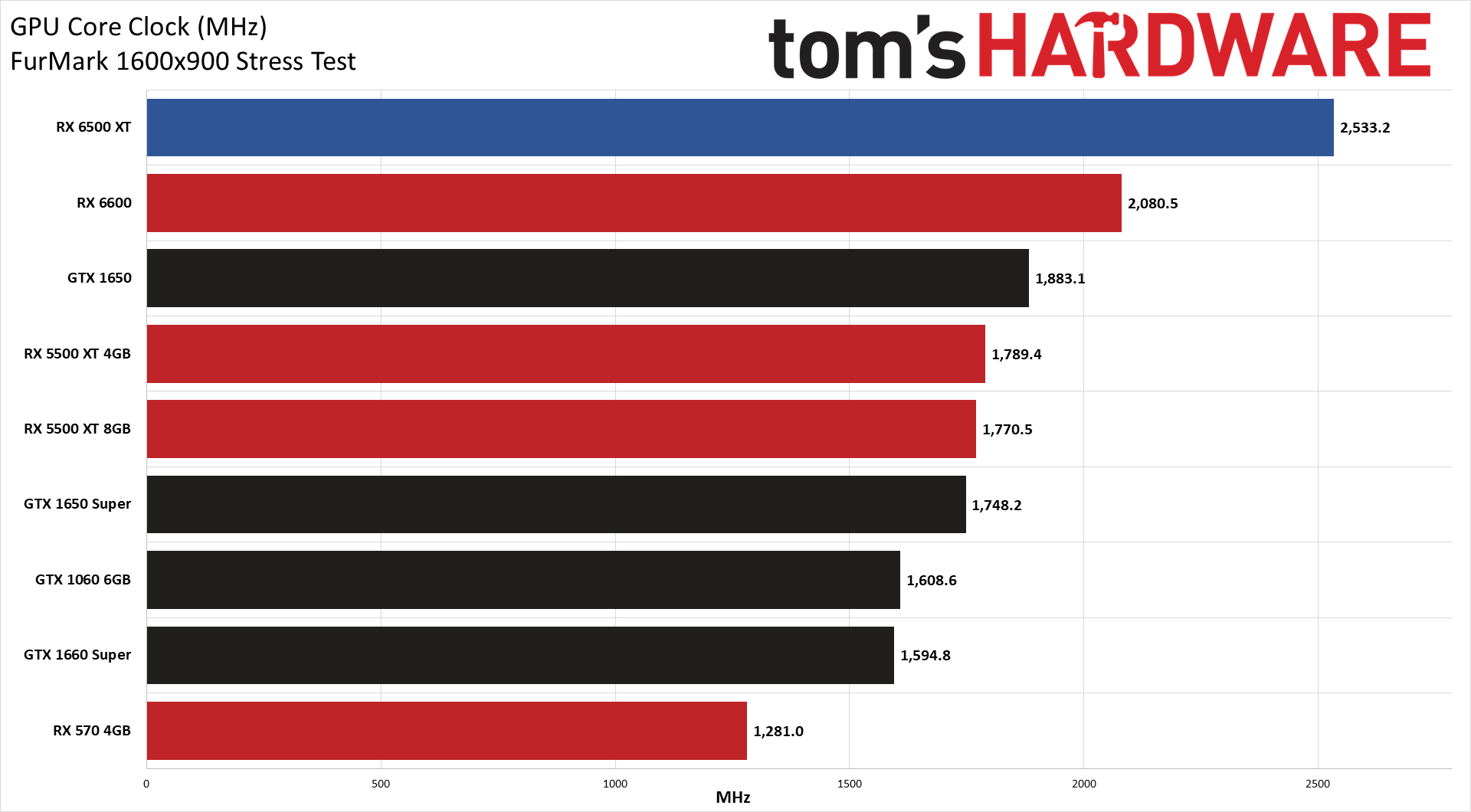

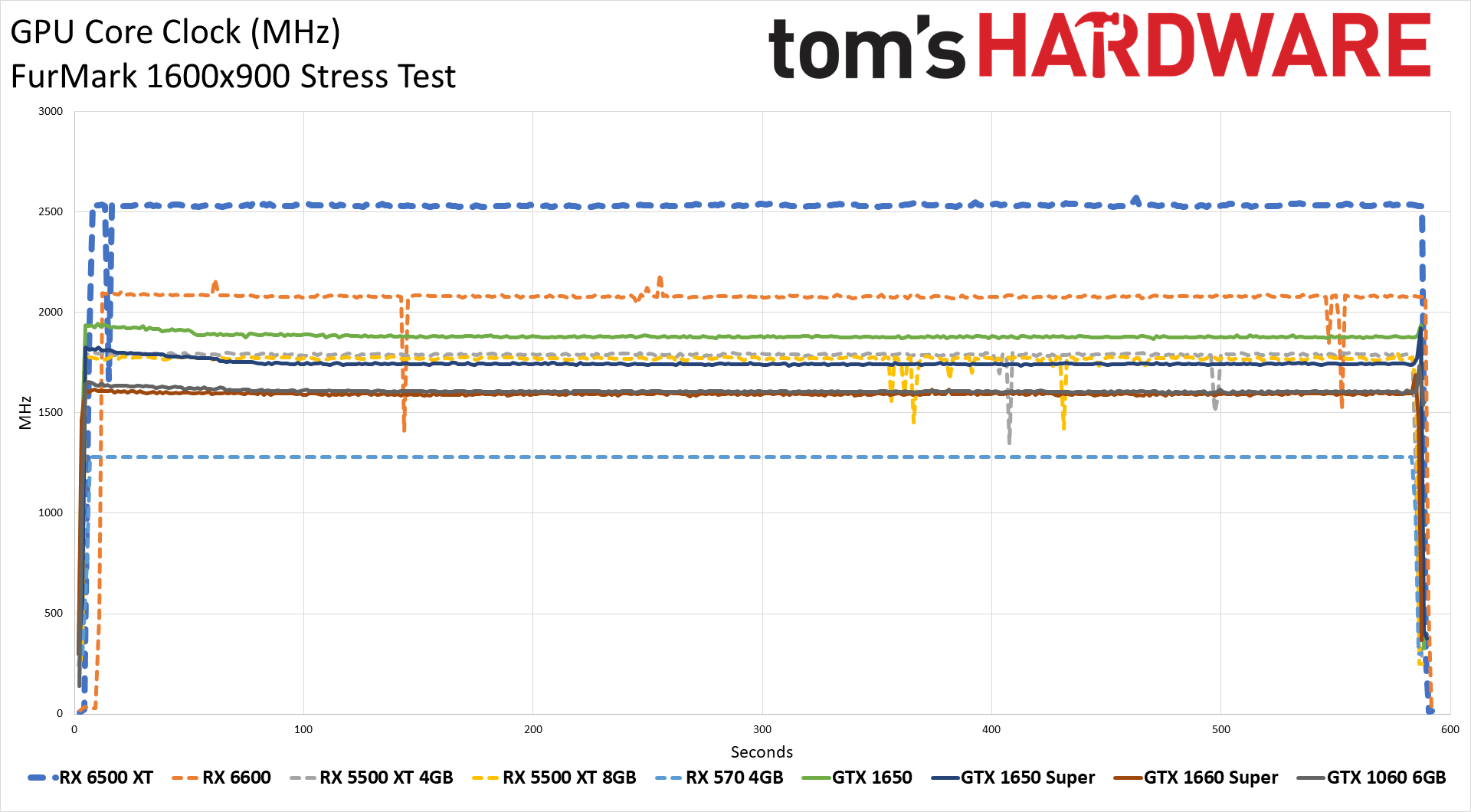

Clock speeds live up to expectations, with average clocks in Metro Exodus of 2711MHz, and even in FurMark, the RX 6500 XT clocked in at 2533MHz. AMD's RDNA2 GPUs can definitely hit high clocks, and those go a long way in boosting performance over the previous generation GPUs — it's running at basically double the clocks of the old RX 570.

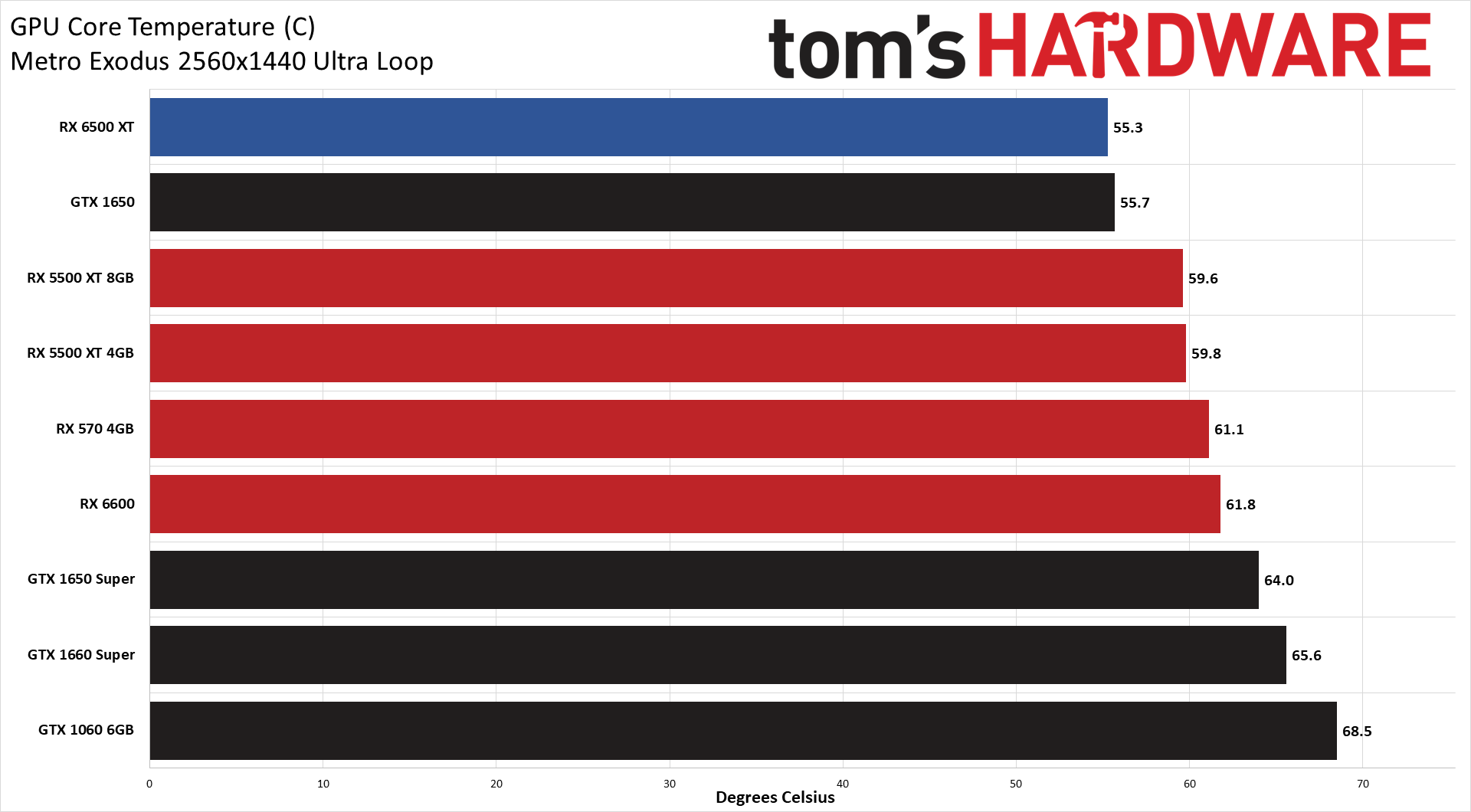

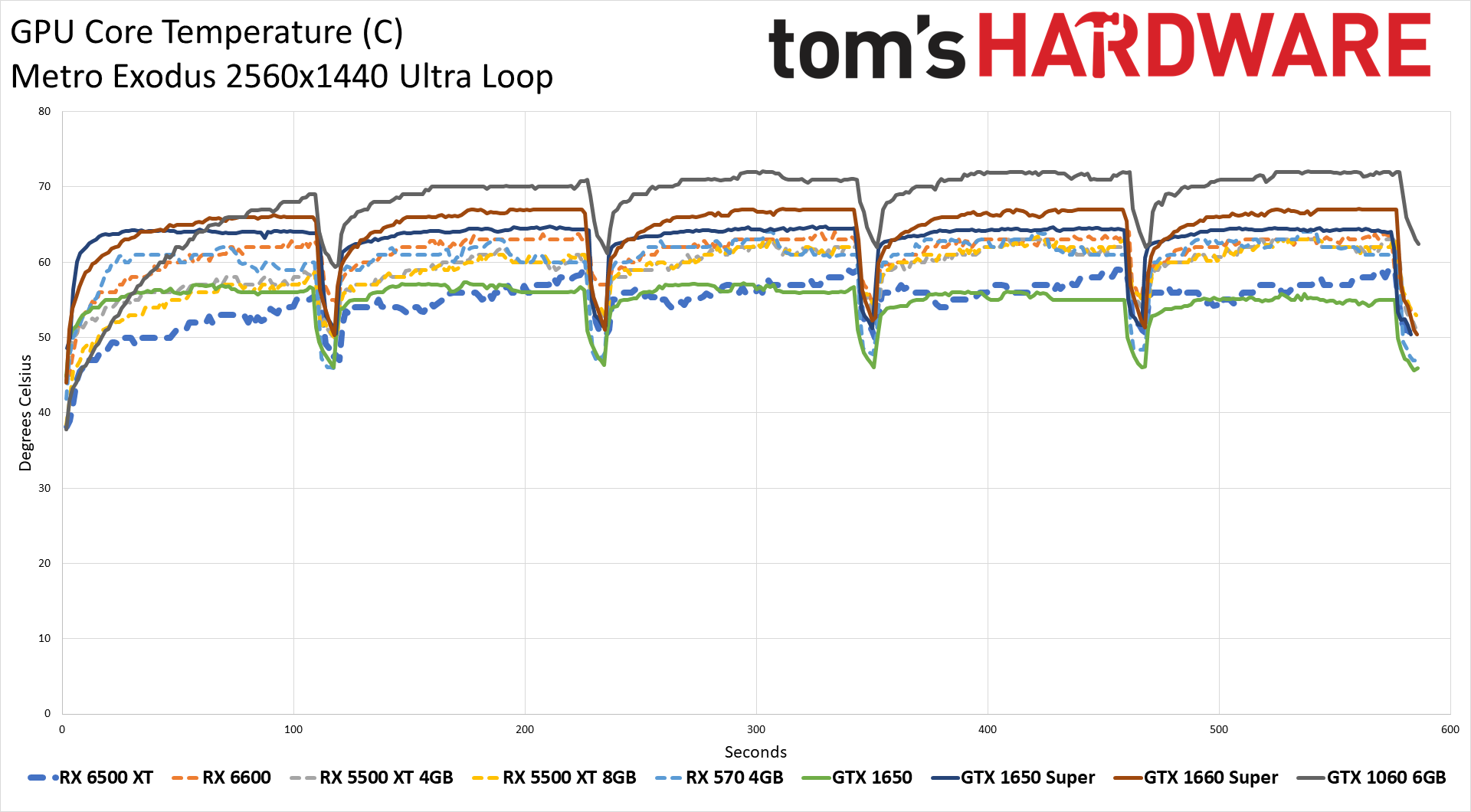

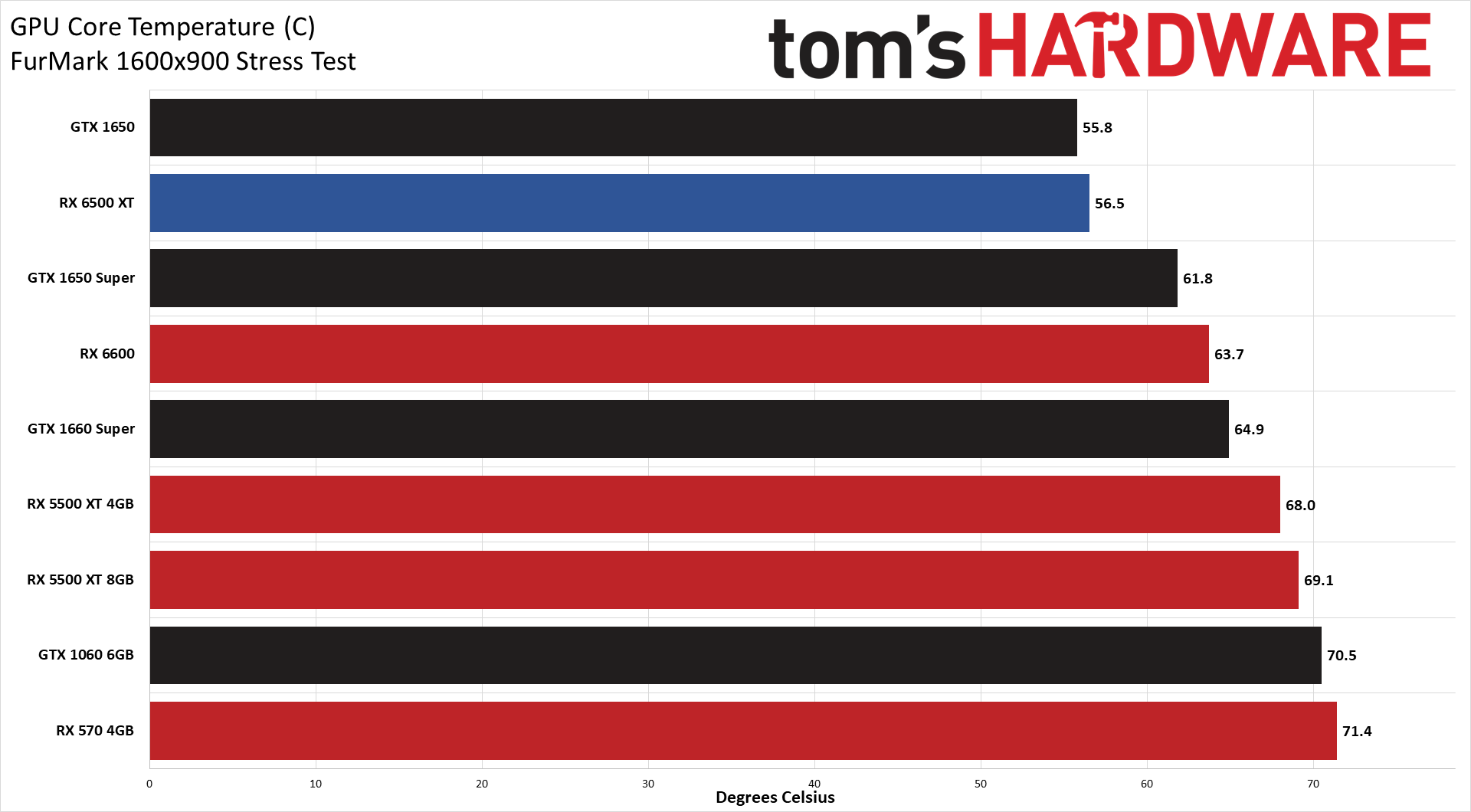

Given the large fans, it's little surprise that the XFX RX 6500 XT stays cool, even with low fan speeds. During the Metro test, the fans seemed to be trying to decide whether or not they should even bother spinning up, while the constant load of FurMark only required relatively modest RPMs. Temperatures in both tests were only 55–56C.

Along with the Powenetics data, we also measure noise levels at 10cm using an SPL (sound pressure level) meter. We aim it right at the GPU fans in order to minimize the impact of other fans like those on the CPU cooler. The noise floor of our test environment and equipment measures 33 dB(A), and the XFX RX 6500 XT reached a peak noise level of 38.4 dB during testing, but hovered in the 34–35 dB range for much of the test. It's unlikely the fan will ever go above 50% during normal use, but we also tested with a static fan speed of 75%, at which point the card generated 61.8 dB of noise and was quite loud, but that's mostly a theoretical noise level.

Radeon RX 6500 XT: Not Really a Budget Card

The Radeon RX 6500 XT really shows the world everything that's wrong with the current state of the GPU market. The chip itself is very small, which should help reduce prices, and it cuts out several potentially useful features, all in the name of cutting costs. And then it launches with an official MSRP equal to the previous generation RX 5500 XT 8GB card — a card that's often quite a bit faster than the RX 6500 XT.

As bad as all that might be, GPUs continue to sell out at heavily inflated prices, and TSMC has reportedly increased prices on its latest nodes by 10–20%. Plus, most of the cards competing with the RX 6500 XT on performance already cost more than AMD's newcomer. The RX 5500 XT 8GB average price on eBay was $412 for the past two weeks, while the 4GB model sold for $302, for example. Nvidia's GTX 1650 Super meanwhile sold for $325 on average.

There's some slim hope that miners won't be interested in picking up the cards because of the 64-bit memory interface and 4GB of VRAM, and thus prices will be close to MSRP. That would be lovely, though considering the GTX 1650 and GTX 1650 Super also aren't great mining cards (they both have 4GB VRAM) and still sell at over double their launch prices, you probably shouldn't get your hopes up.

Assuming you can actually purchase the Radeon RX 6500 XT for $199, or at least somewhat close to it, it's not a bad card. It's not better than a GTX 1650 Super, and the Nvidia GPU tends to pull ahead at higher quality settings, but it will suffice for games that don't need more than 4GB VRAM. If you don't already have a similar graphics card, which would mean everything from the GTX 1060 6GB and RX 580 8GB and above, or if you're building a new gaming PC and just need any decent graphics card for now while we wait for prices to come down, the RX 6500 XT warrants a look.

At least buying an RX 6500 XT guarantees you're getting a brand-new card, which can't be said for the various used GPUs being sold on eBay and other second-hand markets. Hopefully it also avoids putting money into the hands of scalpers and other profiteers. Unfortunately, there's a good chance the same people driving up the prices on existing GPUs will try to do the same with the RX 6500 XT, and the only way that doesn't happen is if AMD and its partners can produce enough cards so that everyone who wants one is able to purchase a card.

The real competition for the RX 6500 XT won't arrive until next week, in the form of Nvidia's GeForce RTX 3050. The specs look almost universally superior on the 3050, but AMD seems to hope that will be the card's downfall. It has an official launch price of $249, but with 8GB of memory on a 128-bit interface, there's a real chance miners will end up snapping up those cards and driving prices up. Except, looking at the current cryptocurrency market, prices have been slumping of late, and the latest mining boom may finally be drawing to a close. We can only hope. Check back next week and we'll see how the RTX 3050 compares.

For now, the Radeon RX 6500 XT represents the lowest cost ray tracing capable GPU available — though we use that description very loosely. GPUs of this level really don't have the muscle for higher levels of ray tracing, and minor amounts of ray tracing generally don't matter enough to make them worth the performance hit. Maybe (probably) RTX 3050 will do better, but until the supply of all tiers of graphics cards improves to the point where we can find them waiting for purchase on store shelves at reasonable prices, building a budget to midrange gaming PC that can match the latest consoles will continue to be a difficult proposition.

Like so many budget GPUs before it, the Radeon RX 6500 XT ends up being a lesson in compromise. There's nothing new here, insofar as performance goes. Instead, there are new features and improved performance at a low price point... except the price point isn't actually all that low. If you're still holding onto a GTX 1050 or RX 560 or slower graphics card and want something better for around $200, the RX 6500 XT should suffice. Just don't be surprised when it can't handle running newer games with all the bells and whistles, and you may not want to pair it with a PCIe Gen3 platform.


Jarred Walton is a senior editor at Tom's Hardware focusing on everything GPU. He has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.

Roland Of Gilead

Tut, Tut! AMD are taking the michael here. What an awful card for the price. There simply is no buttering it up to sound good, despite the writer's best efforts.

InvalidError

You threw out the highly inconsistent runs? Those may have been a side-effect of AMD only giving it an x4 interface on top of 4GB 64b memory and possibly be the most important detail people need to be aware of. AMD has cut far too many corners on this thing to be worth more than $150 were it not for current market conditions.

PEBKAC6

Been reading the content on this site for a decade and finally had to register to comment on the amount of nonsense in this 'review.'

It's obvious this was not released with AAA-titles in mind. It was obviously released for esports games such as CS:GO / OW / DotA. Hence you excluding resolutions from the review for games that run excellently even with much less VRAM makes absolutely no sense.

Were you aware you can run the most popular esports titles listed above on 2k resolution paired with a 165 Hz monitor comfortably with a decade old 2GB GPU such as GTX770? Apparently not.

The price and x4 performance on PCIe 3.0 are major negative sides worth noting but given how excessively you advertise yourself as a GPU guru with nearly 20 years of experience it's shocking how ignorant a review can be. This card is literally what the community needed among the GPU shortage and it serves anyone playing some of the most popular games at 1k/2k at high refresh rates with low power draw and noise.

Entry level cards are crucial since old hardware dies over time and the vast majority of games run exceptionally even with these specs. The target audience is far off in this review.

InvalidError

PEBKAC6 said: Were you aware you can run the most popular esports titles listed above on 2k resolution paired with a 165 Hz monitor comfortably with a decade old 2GB GPU such as GTX770? Apparently not.

People generally don't buy new GPUs to run titles that already do 200fps on 10 years old cards. They buy new GPUs when their current one cannot keep up with the resolutions, details and frame rates they want to play more GPU-intensive stuff at and most of those games will quite easily pass the 4GB mark. That is where the RX6500 drops behind every other 4GB GPU from the last four years that has 3.0x16 or 4.0x8 PCIe.

JarredWaltonGPU

InvalidError said: You threw out the highly inconsistent runs? Those may have been a side-effect of AMD only giving it an x4 interface on top of 4GB 64b memory and possibly be the most important detail people need to be aware of. AMD has cut far too many corners on this thing to be worth more than $150 were it not for current market conditions.

The inconsistent runs, mostly in Far Cry 6, occurred on all 4GB cards. At one point I ran the GTX 1650 Super through the 1080p Ultra + HD test sequence maybe a couple dozen times; I even restarted the game and restarted the PC a couple times just to try and get the results to stabilize at one value. Performance ranged from as low as ~7 fps average (once) to as high as ~55 fps (once). Most of the results clumped into the ~44 fps range and the ~15 fps range. At times it seemed like every other run would be "bad." It's almost certainly a game bug/issue, where the game in DX12 mode isn't managing VRAM effectively and sometimes ends up in a bad state. I could have tested in DX11 mode and hoped the drivers would "fix" things, but then that opens the door to having to test both APIs on every GPU, and then do the same for every other game that supports multiple APIs.

For those wondering about the test suite, I need to test all GPUs on the same set of games, for the GPU benchmarks hierarchy as well as other guides. I specifically focused on the 1080p medium performance as that's what this card is designed to handle. However, even at those settings it's a questionable card. This is profiteering from AMD and the GPU market in general. The RTX 3050 will at least potentially warrant a $200-$250 MSRP (in "normal" times). The RX 6500 XT would have been a $100-$120 GPU normally. It probably would have been called the RX 6400 actually, leaving the RX 6500 name for a card with better specs and performance.

If I were to only test lighter fare to show how this card can handle games that even cards from eight years ago run just fine, what good does that do anyone? "Hey everyone, this is PEBKAC6 Marketing, letting you know that games that don't demand much from your GPU don't demand much from your GPU! Isn't that amazing? Aren't you happy to know that CS:GO, which can run at reasonable performance even on Intel's integrated graphics solutions from five years ago, can run on a $200 graphics card? You should all just go buy it! This is the card we all need! Never mind that it's slower than the card it replaces and has worse specs (RX 5500 XT 8GB). 5-stars, would buy again!"

King_V

I was afraid of this on a few points.

Even back in the Polaris days, while my memory is a bit hazy, I seem to recall that there was greater benefit in increasing memory bandwidth than in GPU clocks. Yes, they went with fast GDDR6, but the 4GB limit is an issue.

Cutting down the PCIe lanes on the 5500 was a problem. Cutting it even more for the 6500 was a mistake.

I mean, even look at the difference between the GTX 1650 GDDR5 vs GDDR6; they were able to reduce the clock speeds of the GPU, and it still performed notably better, while still keeping things in the same TDP envelope.

Similarly, the performance/watt of RDNA with the 5000 series seemed to suffer going into the budget level. The 5500 had a notably lower performance/watt than the 5600. The 6500 XT does have lower power, but the cut-down GPU needs higher clocks... so the TDP is not reasonable for the performance it's delivering, especially given the RDNA2 architecture.

On the other hand, I guess they'd need, say, a 6600-sized chip, or at least something not quite cut down THIS small... and run the clocks lower, to get decent performance with less power consumption. I'd say that they may be trying to make the most of limited supply, but this is on N6, so I would guess there are no supply constraints on that.

The particular cuts they made seem to be the wrong kind. Keeping in mind that I am NOT an electronics engineer of any sort.

Call me unrealistic, but I think this is a card that should have been designed to at least equal the 5500 XT in performance, and to do so without the need for a PCIe power connector.

InvalidError

JarredWaltonGPU said: The inconsistent runs, mostly in Far Cry 6, occurred on all 4GB cards.

If repeatability is so problematic on 4GB cards overall, it may be a sign that graphs need to get variance bars to represent how (un)repeatable results are.

VforV

"Assuming you can actually purchase the Radeon RX 6500 XT for $199, or at least somewhat close to it, it's not a bad card."

Sorry, as much as I like AMD, this is a bad GPU! And unless someone is "forced", no one should buy it.

We should not accept this kind of garbage GPU, any more than we should accept Nvidia's scumminess with their new policy of NO reviews before launch and NO more MSRP again!

Whatever the haters want to say about them, again HUB and GN are the voice of impartiality and say it exactly how it is: in this case a bad GPU from AMD.

https://www.youtube.com/watch?v=M5_oM3Ow_CI

https://www.youtube.com/watch?v=ZFpuJqx9Qmw

JarredWaltonGPU

InvalidError said: If repeatability is so problematic on 4GB cards overall, it may be a sign that graphs need to get variance bars to represent how (un)repeatable results are.

Usually it's only a problem if you change settings and don't exit and restart the game (in a game that lets you do that). I've done lots of testing over the years, and generally speaking cards with 8GB and more VRAM can go from 1080p to 1440p to 4K in testing without exiting and restarting. With a 4GB card, you sometimes end up in a severely degraded performance state and need to exit and relaunch. But with low-level DX12/Vulkan APIs, sometimes it's just a periodic glitch that causes performance to suffer. I've seen issues with 4GB and even 6GB cards in Watch Dogs Legion at times. Usually, exiting and restarting the game will clear the problem. Far Cry 6 was unusual in that it had high run to run variance seemingly whenever it exceeded the card's VRAM. The game also showed changes in the amount of VRAM it thought it needed, which was certainly odd.

Anyway, the problem with charts is that there are lots of people who can only really manage to grok relatively simple bar charts. Start adding variance and all that other stuff and only stock investors and traders, and stats majors, are likely to get what we're showing. Plus, how many runs should I do for each setting? I try to stay with three, but do more if there's clear variance happening, and sometimes a lot more if I'm just unable to figure out what's happening (e.g. with FC6). If I had to do 10 runs of each setting, then run the results through some stats to get variance bars and such, it would dramatically decrease my throughput and workflow, and likely the potential gains (people understanding a bit better what's happening) would not even be there.
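For anyone curious what the "stats" step would look like, here is a minimal sketch, assuming each run produces one average fps number and that a variance bar is simply the sample standard deviation across runs. The function name and the fps values are hypothetical, not measured results from this review.

```python
from statistics import mean, stdev

def summarize_runs(fps_runs):
    """Return (mean, sample standard deviation) for per-run average fps."""
    if len(fps_runs) < 2:
        # A spread estimate needs at least two runs.
        raise ValueError("need at least two runs to estimate spread")
    return mean(fps_runs), stdev(fps_runs)

# Hypothetical numbers for illustration only.
steady = [60.0, 61.0, 59.0]
erratic = [60.0, 45.0, 75.0]

for label, runs in (("steady", steady), ("erratic", erratic)):
    avg, spread = summarize_runs(runs)
    print(f"{label}: {avg:.1f} fps +/- {spread:.1f}")
```

Both hypothetical sets average 60 fps, so a plain bar chart would draw them identically; only the spread (1 fps versus 15 fps) tells them apart. With just three runs that spread estimate is rough, which is part of why doing it properly would mean more runs per setting.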

Fundamentally, I want to provide a relatively "modern" look at how graphics cards perform in a variety of situations. I have a couple of very recent games in my updated test suite, along with some slightly older games that are still useful (and relatively easy to use). I don't want to show a bunch of games that specifically won't tax 4GB cards, but neither do I only want to look at games that basically require 8GB or more. And then when I create the aggregate scores for the GPU hierarchy, I definitely don't want a bunch of random "bad" results that penalize slower GPUs. So, I figure most people will use settings that run at closer to acceptable levels of performance, and running a few extra tests to see where the high water mark is helps keep the charts more sensible. For people who care, there's the text that will call out anomalies. 🙃

As an aside, RDR2 strictly prevents you from selecting settings that exceed a card's VRAM, but you can edit the config file to try and get around that. I was able to force 1080p "ultra" and 1440p "ultra" to run, though 1440p resulted in periodic graphical corruption. 4K ultra is too much, however, and just crashes to desktop. Anyway, I need some meaningful number to avoid skewing the overall results — not having a low score present can increase the calculated average, but putting in a "0" result can go too far the other direction.
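The skew is easy to see with a small sketch. This assumes a plain arithmetic mean over per-game fps, which is an illustration only and not necessarily how the hierarchy's aggregate score is actually computed. The `aggregate` helper and all the scores are hypothetical.

```python
from statistics import mean

def aggregate(scores, fill=None):
    """Average per-game fps, where None marks a game that failed to run.

    fill=None drops failed games from the average;
    any other value is substituted for each failed game.
    """
    if fill is None:
        usable = [s for s in scores if s is not None]
    else:
        usable = [fill if s is None else s for s in scores]
    return mean(usable)

# Hypothetical per-game results; the last game crashed to desktop.
scores = [80.0, 60.0, 40.0, None]

print(aggregate(scores))             # failed game dropped
print(aggregate(scores, fill=0.0))   # failed game counted as 0 fps
print(aggregate(scores, fill=10.0))  # failed game given a low placeholder
```

Dropping the failed game gives 60 fps, scoring it as zero gives 45 fps, and a low placeholder lands in between at 47.5 fps. If the aggregate were a geometric mean instead, a single zero would pull the whole score to zero, which is an even stronger argument against entering "0".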