EVGA GeForce GTX 1080 Ti FTW3 Gaming Review


Clock Rates, Overclocking & Heat

Clock Rates

Manufacturers can claim whatever they want in their marketing material, but actually achievable clock rates are subject to a number of hard-to-control variables. GPU quality, for instance, plays a big role, and there's no way to pre-screen what you get on that front. So it's entirely possible that a nominally slower card from one board partner ends up faster than a more aggressively tuned model from another. As a result, comparisons between products have to be approached with an understanding of some inherent uncertainty.

Board vendors can, however, control the settings and environmental conditions that GPU Boost takes into account when it picks an operating frequency for a given situation. Beyond specifications like the power target or clock offset, temperature under load is perhaps the most influential factor in determining sustainable performance.

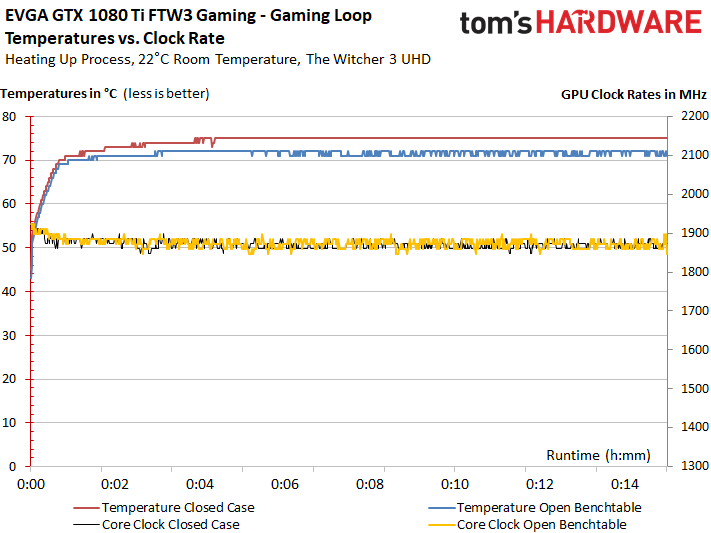

For the EVGA GeForce GTX 1080 Ti FTW3 Gaming, we measured an initial GPU Boost frequency as high as 1974 MHz during our gaming loop. As the card warmed up, it maintained an average of ~1860 MHz during our 30-minute measurement.

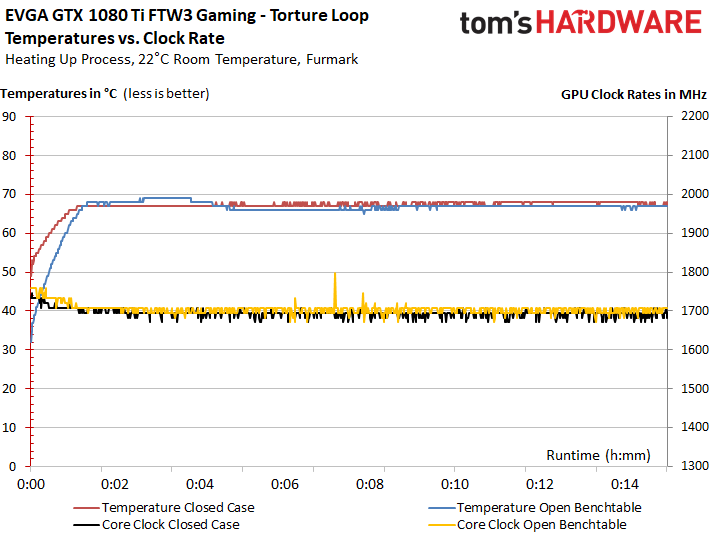

During our stress test, the power limit's constraints are more palpable, manifesting as lower clock rates and, consequently, lower temperatures.

Overclocking

Of course, the card does tolerate some additional overclocking. In our case, we achieved a stable 1974 MHz under air cooling, with peaks as high as 2037 MHz. To go beyond 2 GHz, we had to set EVGA's fan control to 100%, which made the card much noisier than in its stock configuration.

If you plan to overclock, consider increasing the power target to its maximum, or at least to 120%.

The table below shows our results after configuring the card in MSI Afterburner and completing a long test run in The Witcher 3.


| Clock Offset | Power Target (Afterburner) | Voltage (Afterburner) | Avg. Boost Clock | Avg. Voltage | Power Consumption |
|---|---|---|---|---|---|
| None | 100% | Standard | 1860 MHz | 1.030 V | 286.2 W |
| None | 100% | Maximum | 1885 MHz | 1.043 V | 289.4 W |
| None | 120% | Standard | 1974 MHz | 1.050 V | 320.7 W |
| +25 MHz | 127% | Maximum, fans @ 100% | 2037 MHz | 1.062 V | 331.1 W |
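To put the overclocking table's numbers in proportion, the short Python sketch below (our own illustration, not part of the test methodology) expresses each configuration's average boost clock and power consumption as a percentage change from the stock row, plus how many extra megahertz each extra watt buys. The values are copied from the table; `ROWS` and `gains_vs_stock` are hypothetical names chosen for this example.

```python
# Rows copied from the Afterburner / Witcher 3 table:
# (label, average boost clock in MHz, average voltage in V, power consumption in W)
ROWS = [
    ("Stock (100%, standard voltage)", 1860, 1.030, 286.2),
    ("100%, maximum voltage", 1885, 1.043, 289.4),
    ("120%, standard voltage", 1974, 1.050, 320.7),
    ("+25 MHz, 127%, maximum voltage, fans @ 100%", 2037, 1.062, 331.1),
]


def gains_vs_stock(rows):
    """Compare every row against the first (stock) row.

    Returns a list of (label, clock gain %, power gain %, extra MHz per extra W).
    """
    _, base_clk, _, base_pwr = rows[0]
    out = []
    for label, clk, _, pwr in rows[1:]:
        clk_pct = 100.0 * (clk - base_clk) / base_clk
        pwr_pct = 100.0 * (pwr - base_pwr) / base_pwr
        mhz_per_w = (clk - base_clk) / (pwr - base_pwr)
        out.append((label, clk_pct, pwr_pct, mhz_per_w))
    return out


if __name__ == "__main__":
    for label, clk_pct, pwr_pct, mhz_per_w in gains_vs_stock(ROWS):
        print(f"{label}: clock {clk_pct:+.1f}%, power {pwr_pct:+.1f}%, {mhz_per_w:.1f} MHz/W")
```

Run as-is, it shows that raising the power target to 120% bought roughly 6.1% more clock rate for roughly 12.1% more power, so the last few percent of frequency come at a steep power cost. Keep in mind that these are averages from a single sample in a single game.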

Getting a good overclock from your memory requires perseverance and a bit of luck. Seemingly stable settings might work short-term and then prove dicey after a few hours of gaming. With our sample, an extra 350 to 400 MT/s was feasible. Beyond that, performance started sliding in the other direction.
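For a rough sense of what that memory overclock is worth, theoretical peak bandwidth is the data rate multiplied by the bus width in bytes. The sketch below assumes the reference GeForce GTX 1080 Ti's 352-bit bus and 11,000 MT/s (11 Gbps) GDDR5X data rate; neither figure was measured in this review, and EVGA's factory memory clock may differ slightly. The constant and function names are hypothetical.

```python
# Assumption: reference GTX 1080 Ti memory configuration, not measured here.
BUS_WIDTH_BITS = 352      # memory bus width in bits
BASE_RATE_MTS = 11_000    # GDDR5X data rate in MT/s (11 Gbps per pin)


def bandwidth_gbs(rate_mts, bus_bits=BUS_WIDTH_BITS):
    """Theoretical peak bandwidth in GB/s: transfers per second x bytes per transfer."""
    return rate_mts * 1e6 * (bus_bits / 8) / 1e9


if __name__ == "__main__":
    base = bandwidth_gbs(BASE_RATE_MTS)
    for extra in (350, 400):
        oc = bandwidth_gbs(BASE_RATE_MTS + extra)
        print(f"+{extra} MT/s: {base:.1f} -> {oc:.1f} GB/s ({100 * (oc - base) / base:+.1f}%)")
```

Under those assumptions, an extra 350 to 400 MT/s raises theoretical peak bandwidth from 484 GB/s to roughly 499 to 502 GB/s, a gain of about 3 to 4%. Real-world gains in games may be smaller, since not every title is bandwidth-bound.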

Heat

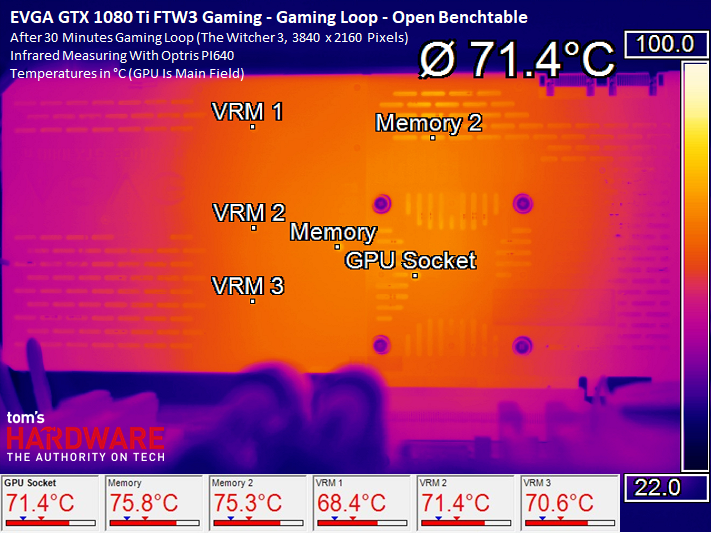

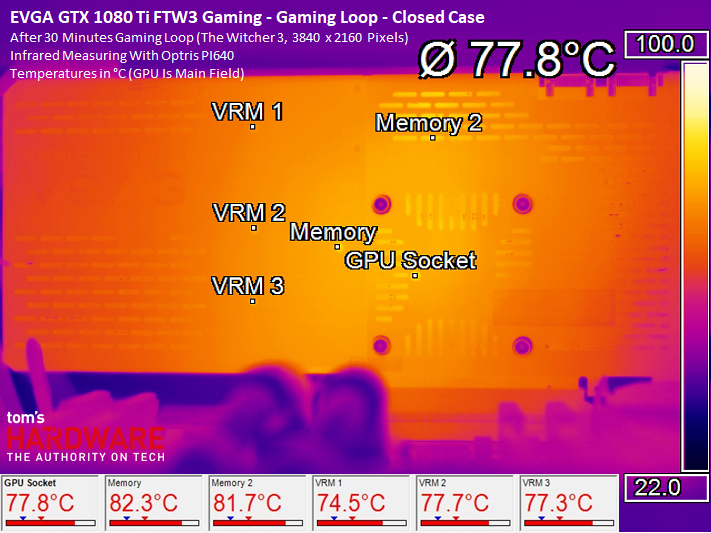

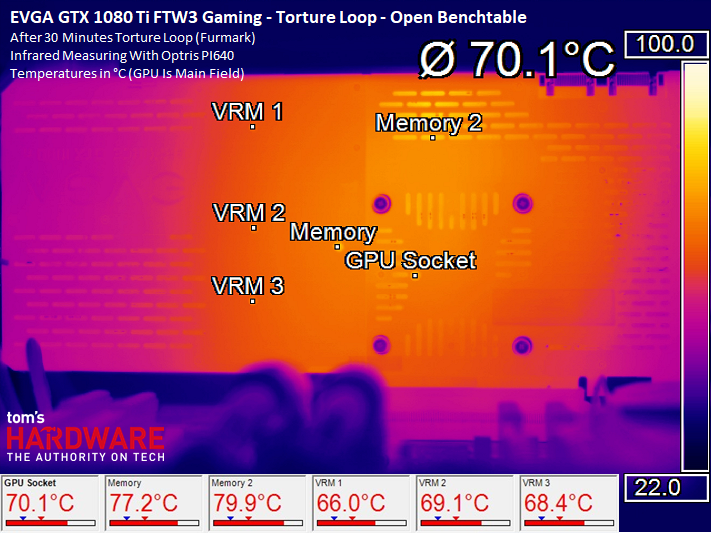

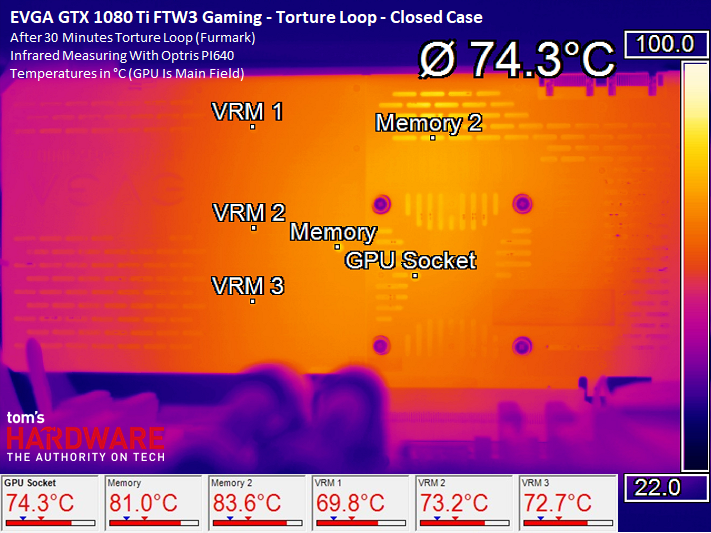

Because the backplate plays an active role in cooling, it has to stay on for our measurements. We did lay some painstaking groundwork, though. Before taking our readings, we removed the backplate and identified the card's hot spots. This gave us the information we needed to drill holes into the plate directly above those areas, allowing us to take precise measurements even with the plate attached. Just bear in mind that our numbers don't correspond to the readings from EVGA's own thermal sensors. Because we're measuring thermal load directly beneath the relevant components, some deviation is unavoidable.

Reading from the GPU diode, we observe 71 to 72°C on an open test bench, while the PCB underneath remains right between those two values at 71.4°C. The other temperatures are wholly acceptable at this point.

In a closed case, the GPU's temperature rises to 75°C, while the GPU package hits just under 78°C. That three-Kelvin gap indicates the backplate is nearing the limit of its cooling capacity. Nevertheless, EVGA's voltage regulation circuitry and memory modules continue operating at comfortable temperatures. The GDDR5X is rated for up to 95°C, after all.

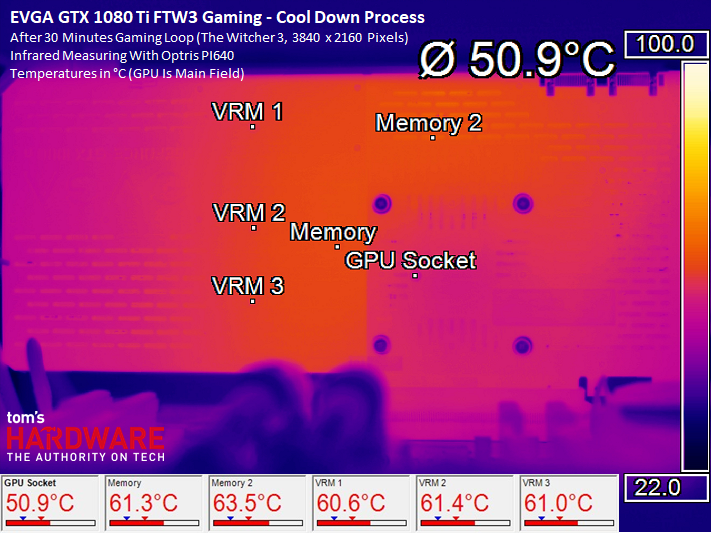

During our stress test, the card runs even cooler since the GPU's power target limits clock rates, driving voltage down and limiting thermal output. Only the memory heats up a bit more.
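Why does a lower voltage cut heat so effectively? A common first-order approximation for dynamic power in CMOS chips is P ∝ f·V², so voltage counts twice. The sketch below applies that approximation (our assumption, not a figure EVGA or Nvidia publishes for this card) to the stock and 120% power-target rows from the overclocking table, then compares the result with the measured change in board power. The model ignores leakage, memory, fans and voltage-regulator losses, so treat the comparison as a sanity check only; `scaling_ratio` is a hypothetical helper.

```python
# Rows from the overclocking table: (avg boost clock MHz, avg voltage V, measured power W)
STOCK = (1860, 1.030, 286.2)
PT120 = (1974, 1.050, 320.7)


def scaling_ratio(a, b):
    """First-order dynamic-power estimate P ~ f * V^2, returned as the ratio of b to a."""
    f_a, v_a, _ = a
    f_b, v_b, _ = b
    return (f_b / f_a) * (v_b / v_a) ** 2


if __name__ == "__main__":
    predicted = scaling_ratio(STOCK, PT120)
    measured = PT120[2] / STOCK[2]
    print(f"f*V^2 model: x{predicted:.3f}, measured board power: x{measured:.3f}")
```

The f·V² estimate predicts roughly a 10% increase, while measured board power rose roughly 12%. The difference is plausibly down to what the model leaves out, such as leakage current, which tends to grow with temperature.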

In a closed case, however, the stress test pushes the difference between the GP102's diode reading and its package temperature to four Kelvin.

As the card cools down, the infrared image reverses and it's easy to see where the cooler draws heat away most effectively (namely, below the GPU package).

All in all, the GeForce GTX 1080 Ti FTW3 Gaming's thermal solution is sufficient, and if we keep in mind that this is a dual-slot card, you might even say it's pretty darned good. It's hard to imagine a more effective implementation, given the physical constraints EVGA's team was under in designing a heat sink able to go up against the 2.5-slot competition.


Igor Wallossek wrote a wide variety of hardware articles for Tom's Hardware, with a strong focus on technical analysis and in-depth reviews. His contributions have spanned a broad spectrum of PC components, including GPUs, CPUs, workstations, and PC builds. His insightful articles provide readers with detailed knowledge to make informed decisions in the ever-evolving tech landscape.


AgentLozen I'm glad that there's an option for an effective two-slot version of the 1080 Ti on the market. I'm indifferent toward the design, but I'm sure people who are looking for it will appreciate it, just like the article says.

gio2vanni86 I have two of these, and I'm still disappointed in the SLI performance compared to my 980s. What can I do but complain? Nvidia needs to overhaul its game drivers; these puppies should scream together. They do the opposite, which makes me turn SLI off, and boom, I get better performance from one. It's pathetic. Since Nvidia got rid of triple SLI, they might as well get rid of SLI altogether.

ahnilated I have one of these, and the noise at full load is very annoying. I am going to install one of Arctic Cooling's heatsinks. I would have thought that with a three-fan setup, this card would cool better and not have a noise issue like this. I was quite disappointed with the noise levels on this card.

Jeff Fx (replying to gio2vanni86) SLI has always had issues. Fortunately, one of these cards will run games very well, even in VR, so there's no need for SLI.

dstarr3 (replying to gio2vanni86) It needs support from Nvidia, but it also needs support from every developer making games. Unfortunately, the number of users sporting dual GPUs is a pretty tiny sliver of the total PC user base, so devs aren't too eager to pour much support into it if it doesn't work out of the box.

FormatC Dual-GPU is always a problem and not easy for programmers and driver developers (profiles) to realize. AFR is totally limited, and I hope that in the future we will see more Windows/DirectX-based solutions. If....

Sam Hain For those praising the two-slot design as "better-than" for SLI: true, it does make for a better fit, physically.

However, SLI is and has been fading for both NV and DVs. Second, the heat signature and fan-profile requirements of just one of these cards in a closed case should be warning enough to steer away from running 2-way SLI with stock, and sometimes third-party, air cooling.

SBMfromLA I recall reading an article somewhere that said Nvidia is trying to discourage SLI and purposely makes cards underperform in SLI mode.

Sam Hain Great article!

photonboy NVidia does not "purposely make them underperform in SLI mode". And to be clear, SLI has different versions. It's AFR that is disappearing. In the short term, I wouldn't use multi-GPU at all. In the LONG term, we'll be switching to Split Frame Rendering.

http://hexus.net/tech/reviews/graphics/916-nvidias-sli-an-introduction/?page=2

SFR really needs native support at the GAME ENGINE level to minimize the work required to support multi-GPU. That can and will happen, but I wouldn't expect to see much support for it for about TWO YEARS or more. Remember, games usually take 3+ years to build, so anything complex generally needs to be part of the game engine when you START making the game.
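The difference between the two approaches photonboy describes can be sketched in a few lines. This is a toy Python model, not how Nvidia's driver or any game engine actually schedules work: AFR hands whole frames to GPUs in turn, while SFR splits a single frame into horizontal bands. Equal-height bands are a simplifying assumption (real implementations may rebalance the split), and both function names are hypothetical.

```python
def afr_assignment(num_frames, num_gpus):
    """Alternate Frame Rendering (toy model): whole frames go to GPUs round-robin.

    Returns {frame_index: gpu_index}.
    """
    return {frame: frame % num_gpus for frame in range(num_frames)}


def sfr_assignment(frame_height, num_gpus):
    """Split Frame Rendering (toy model): each GPU renders one horizontal band
    of the same frame. Bands are as equal as possible (simplifying assumption).

    Returns a list of (gpu_index, first_row, end_row_exclusive).
    """
    bands = []
    base, extra = divmod(frame_height, num_gpus)
    start = 0
    for gpu in range(num_gpus):
        rows = base + (1 if gpu < extra else 0)  # spread any leftover rows over the first GPUs
        bands.append((gpu, start, start + rows))
        start += rows
    return bands


if __name__ == "__main__":
    print("AFR:", afr_assignment(6, 2))
    print("SFR:", sfr_assignment(1080, 2))
```

The AFR mapping shows why smooth output depends on two GPUs finishing alternate frames at even intervals, whereas SFR requires the renderer to divide the work inside each frame, which is why photonboy argues it needs game-engine support.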