EVGA GeForce GTX 1080 Ti FTW3 Gaming Review


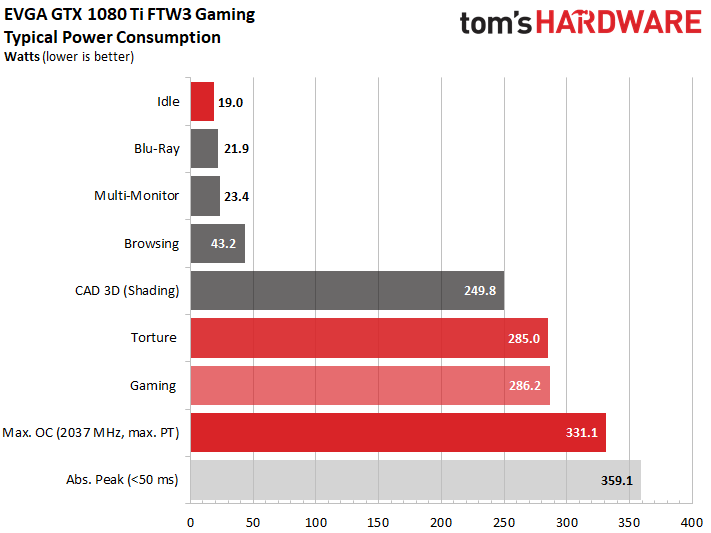

Power Consumption

By default, this card's power target is set to a moderate 280W. It can be raised manually to a little over 350W, which is more than enough to let you extract the design's maximum performance. Not surprisingly, the stock target is hit during our gaming and torture tests. However, even if the power target is set as high as possible using Afterburner, the actual value peaks just above 330W. The cooler is simply overwhelmed before getting to 350W.
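The relationship between these figures can be put in percentage terms, which is how a tool such as Afterburner typically presents a power-limit slider. This is only a rough sketch: the wattages are rounded from the text ("a little over 350W", "just above 330W"), and the names below are illustrative rather than anything reported by the card or the software.

```python
# Rounded figures from the paragraph above; names are illustrative only.
STOCK_TARGET_W = 280.0   # default power target
MAX_TARGET_W = 350.0     # "a little over 350W", rounded down
OBSERVED_PEAK_W = 330.0  # "just above 330W", rounded down


def percent_of_stock(watts, stock=STOCK_TARGET_W):
    """Express a power figure as a percentage of the stock target."""
    return 100.0 * watts / stock


max_pct = percent_of_stock(MAX_TARGET_W)
peak_pct = percent_of_stock(OBSERVED_PEAK_W)
unused_w = MAX_TARGET_W - OBSERVED_PEAK_W

print(f"Maximum target: {max_pct:.0f}% of stock")
print(f"Observed peak:  {peak_pct:.0f}% of stock")
print(f"Unused budget:  {unused_w:.0f} W")
```

In other words, roughly 20W of the raised power budget goes unused because the cooler becomes the limit first.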

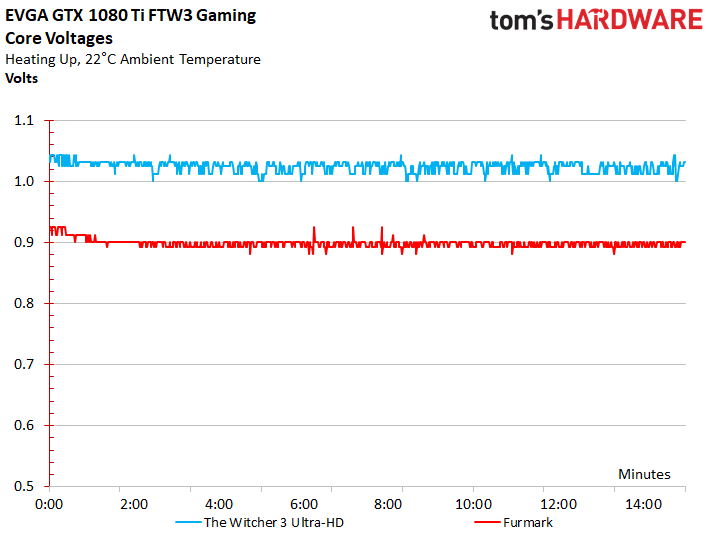

The GP102 processor on our test sample is good enough to reach ~1949 MHz at 1.062V, so long as you keep the chip under 50°C. Beyond that, the rising temperature forces the voltage down to 1.012V, with brief dips as low as 1.0V.

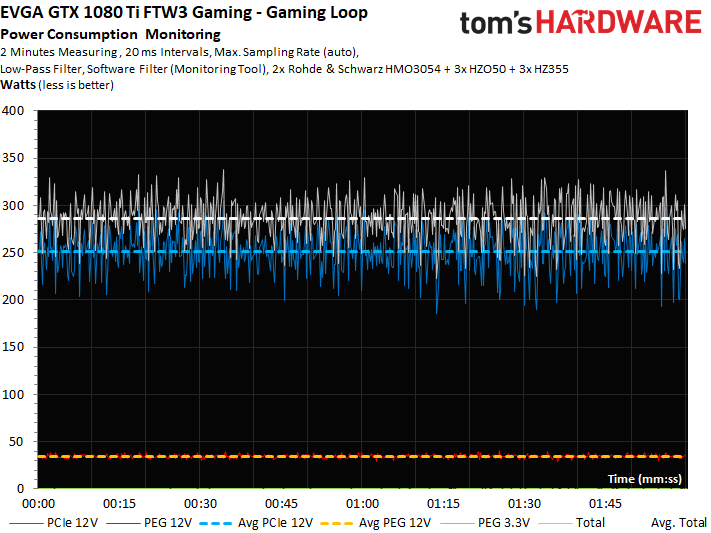

Gaming Loop

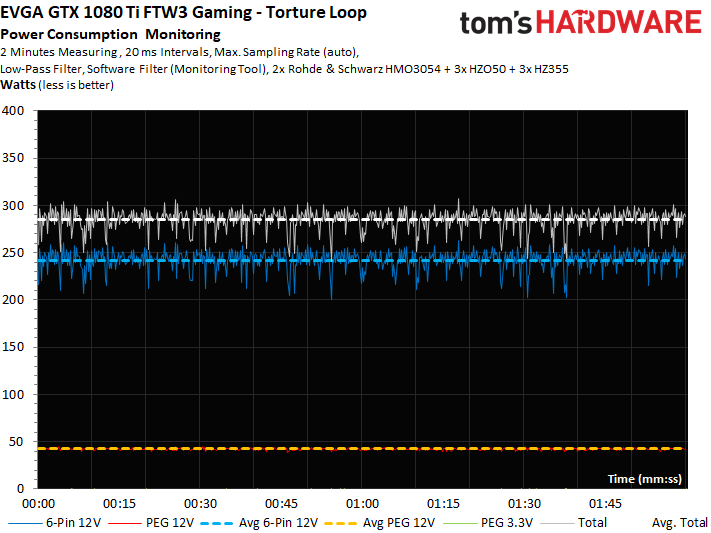

Let's break the power consumption measurement into separate, higher-resolution lines for each supply rail over a two-minute interval. In spite of our intelligent low-pass filter, occasional spikes remain visible. In places, they reach up to 343W. On average, however, this card stays around its 280W power target.
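To illustrate why spikes can survive filtering while the average stays near the power target, here is a minimal sketch. It assumes a plain moving average as a stand-in for the low-pass filter (the article does not describe the actual filter), and the rail names and sample values are made up for illustration; they are not measurements from this card.

```python
from statistics import mean


def total_power(rails):
    """Sum per-rail power readings (watts) sample by sample."""
    return [sum(sample) for sample in zip(*rails.values())]


def moving_average(samples, window):
    """Simple trailing moving average; a stand-in for a real low-pass filter."""
    smoothed = []
    for i in range(len(samples)):
        start = max(0, i - window + 1)
        smoothed.append(mean(samples[start:i + 1]))
    return smoothed


# Hypothetical per-rail samples in watts (NOT measured data).
rails = {
    "slot_12v": [35.0, 36.0, 34.0, 36.0, 35.0, 36.0],
    "aux_connector_a": [122.0, 150.0, 120.0, 124.0, 123.0, 121.0],
    "aux_connector_b": [123.0, 157.0, 121.0, 120.0, 122.0, 123.0],
}

total = total_power(rails)
smoothed = moving_average(total, window=3)

print("raw peak:     ", max(total))
print("smoothed peak:", round(max(smoothed), 1))
print("average:      ", round(mean(total), 1))
```

A short spike is attenuated by the averaging window but not removed, so the smoothed trace still shows a bump above the long-run average.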

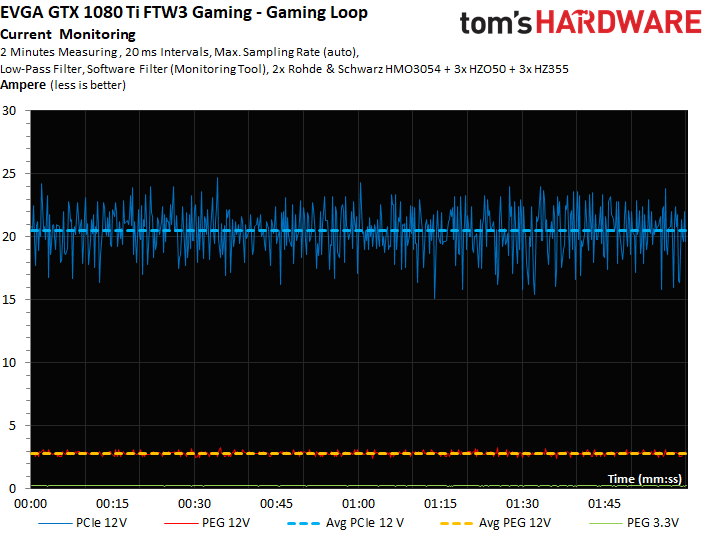

The graph corresponding to our current measurement looks just as hectic.

Torture Test

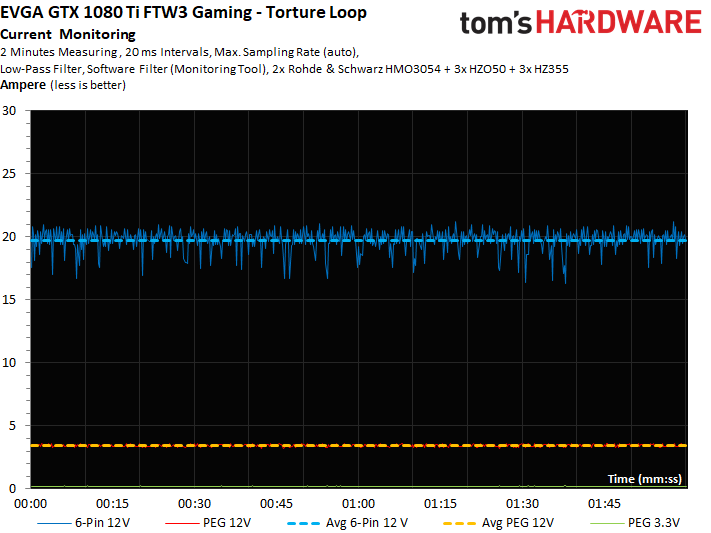

Faced with a more consistent load, power consumption does rise a little. However, the peaks are almost completely eliminated. Instead, we see where GPU Boost kicks in to start limiting power use.

The isolated current readings behave similarly.

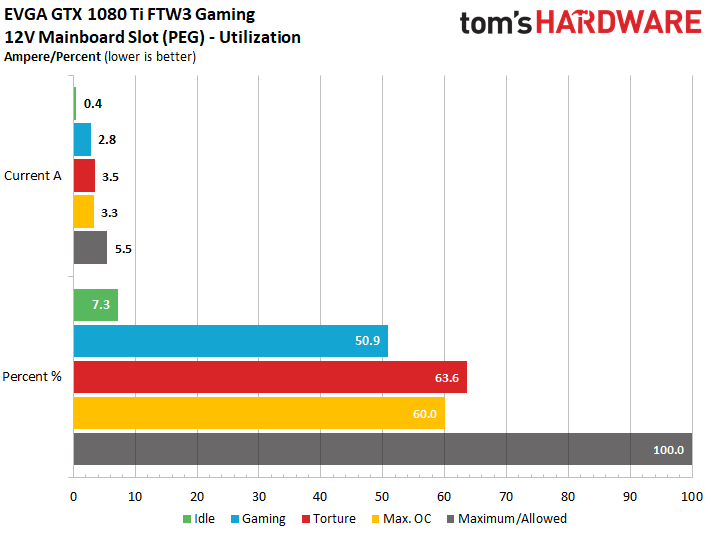

Ever since the launch of AMD's Radeon RX 480, we've been asked to include the load on the motherboard slot in our reviews. EVGA's GeForce GTX 1080 Ti FTW3 Gaming gives us no reason to be concerned about the motherboard's 16-lane PCIe 3.0 slot, though. In fact, our highest reading is just over 3A, leaving plenty of headroom under the PCI-SIG's 5.5A ceiling.
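The headroom can be worked out with simple arithmetic. The sketch below uses the two current figures from the text and assumes the nominal 12V rail voltage to convert amps into watts; the function and constant names are illustrative.

```python
# Current figures come from the text; 12 V is the nominal rail voltage (assumed).
SLOT_LIMIT_A = 5.5      # PCI-SIG ceiling cited above
MEASURED_PEAK_A = 3.0   # "just over 3A", rounded down
RAIL_VOLTAGE_V = 12.0


def slot_headroom(measured_a, limit_a=SLOT_LIMIT_A, voltage_v=RAIL_VOLTAGE_V):
    """Return (spare amps, spare watts, fraction of the limit in use)."""
    spare_a = limit_a - measured_a
    return spare_a, spare_a * voltage_v, measured_a / limit_a


spare_a, spare_w, utilisation = slot_headroom(MEASURED_PEAK_A)
print(f"{spare_a:.1f} A ({spare_w:.0f} W) spare, "
      f"{utilisation:.0%} of the limit in use")
```

With these rounded inputs, the slot is loaded to a little over half of its permitted current.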


Igor Wallossek wrote a wide variety of hardware articles for Tom's Hardware, with a strong focus on technical analysis and in-depth reviews. His contributions have spanned a broad spectrum of PC components, including GPUs, CPUs, workstations, and PC builds. His insightful articles provide readers with detailed knowledge to make informed decisions in the ever-evolving tech landscape.


AgentLozen I'm glad that there's an option for an effective two-slot version of the 1080Ti on the market. I'm indifferent toward the design but I'm sure people who are looking for it will appreciate it just like the article says.

gio2vanni86 I have two of these, i'm still disappointed in the sli performance compared to my 980's. What i can do but complain. Nvidia needs to do a driver game overhaul these puppies should scream together. They do the opposite which makes me turn sli off and boom i get better performance from 1. Its pathetic. Nvidia should just kill Sli all together since they got rid of triple sli they mind as well get rid of sli as well.

ahnilated I have one of these and the noise at full load on these is very annoying. I am going to install one of Arctic Cooling's heatsinks. I would think with a 3 fan setup this system would cool better and not have a noise issue like this. I was quite disappointed with the noise levels on this card.

Jeff Fx 19811038 said: I have two of these, i'm still disappointed in the sli performance compared to my 980's. What i can do but complain. Nvidia needs to do a driver game overhaul these puppies should scream together. They do the opposite which makes me turn sli off and boom i get better performance from 1. Its pathetic. Nvidia should just kill Sli all together since they got rid of triple sli they mind as well get rid of sli as well.

SLI has always had issues. Fortunately, one of these cards will run games very well, even in VR, so there's no need for SLI.

dstarr3 19811038 said: I have two of these, i'm still disappointed in the sli performance compared to my 980's. What i can do but complain. Nvidia needs to do a driver game overhaul these puppies should scream together. They do the opposite which makes me turn sli off and boom i get better performance from 1. Its pathetic. Nvidia should just kill Sli all together since they got rid of triple sli they mind as well get rid of sli as well.

It needs support from nVidia, but it also needs support from every developer making games. And unfortunately, the number of users sporting dual GPUs is a pretty tiny sliver of the total PC user base. So devs aren't too eager to pour that much support into it if it doesn't work out of the box.

FormatC Dual-GPU is always a problem and not so easy to realize for programmers and driver developers (profiles). AFR is totally limited and I hope that we will see in the future more Windows/DirectX-based solutions. If....

Sam Hain For those praising the 2-slot design for it's "better-than" for SLI... True, it does make for a better fit, physically.

However, SLI is and has been fading for both NV and DV's. Two, that heat-sig and fan profile requirements in a closed case for just one of these cards should be warning enough to veer away from running in a 2-way SLI using stock and sometimes 3rd party air cooling solutions.

SBMfromLA I recall reading an article somewhere that said NVidia is trying to discourage SLi and purposely makes them underperform in SLi mode.

Sam Hain 19810871 said: Unlike Asus & Gigabyte, which slap 2.5-slot coolers on their GTX 1080 Tis, EVGA remains faithful to a smaller form factor with its GTX 1080 Ti FTW3 Gaming.

EVGA GeForce GTX 1080 Ti FTW3 Gaming Review : Read more

Great article!

photonboy NVidia does not "purposely make them underperform in SLI mode". And to be clear, SLI has different versions. It's AFR that is disappearing. In the short term I wouldn't use multi-GPU at all. In the LONG term we'll be switching to Split Frame Rendering.

http://hexus.net/tech/reviews/graphics/916-nvidias-sli-an-introduction/?page=2

SFR really needs native support at the GAME ENGINE level to minimize the work required to support multi-GPU. That can and will happen, but I wouldn't expect to see it have much support for about TWO YEARS or more. Remember, games usually have 3+ years of building so anything complex needs to usually be part of the game engine when you START making the game.