Tom's Hardware Verdict

GeForce RTX 2080 Ti is the fastest gaming graphics card available, and Gigabyte’s highest-end air-cooled implementation is big, bold, a bit gaudy, but undeniably fast. Extra display outputs, four years of warranty coverage, an overclocked GPU, and copious RGB LED lighting set this model apart from Nvidia’s Founders Edition version.

Pros

- Exceptional performance
- Extensive use of RGB LEDs complements illuminated PCs
- Four-year warranty coverage
- More display output options than competing cards

Cons

- Axial fans exhaust waste heat into your case
- Cooling solution is louder under load than other Turing-based cards we’ve tested
- Triple-slot form factor takes up lots of space


Gigabyte Aorus GeForce RTX 2080 Ti Xtreme 11G Review

Debate the value of GeForce RTX 2070 versus GTX 1080 all you want. Up where the GeForce RTX 2080 Ti lives, there is no competition. There is no point in arguing whether $1,200/£1,100 (or more) is worth paying. If you want smooth performance at 3840 x 2160 with detail settings cranked up, GeForce RTX 2080 Ti is the only game in town. Really, the one decision you face is whether to spend big money on Nvidia’s Founders Edition version or spring for an even more expensive third-party model with a larger cooler, fancy lighting, and a longer warranty.

Gigabyte’s Aorus GeForce RTX 2080 Ti Xtreme 11G epitomizes the top end of an already flagship-class graphics card. It takes Nvidia’s specifications and kicks them up a notch. Perhaps it’s fitting, then, that such an exclusive piece of hardware is also quite rare. Months after its official introduction, the Aorus GeForce RTX 2080 Ti Xtreme 11G remains hard to find. But that’s not going to stop us from running the board through our gauntlet of performance, power, and thermal benchmarks.

Meet the Aorus GeForce RTX 2080 Ti Xtreme 11G

Gigabyte doesn’t publish a specification for the Xtreme 11G’s power consumption. However, our sensors indicate that this GeForce RTX 2080 Ti uses up to ~300W between the PCIe slot and a pair of eight-pin auxiliary connectors. That’s a full 40W more than Nvidia’s Founders Edition card, and roughly the same as a Radeon RX Vega 64.
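To put that ~300W figure in context, the arithmetic below is a rough sketch of the nominal power budget for a card fed by the PCIe slot and two eight-pin connectors. The 75W and 150W limits are the commonly cited PCI Express allowances rather than numbers from our measurements, and the function and variable names are purely illustrative.

```python
# Nominal per-source limits (watts) commonly cited for PCI Express cards.
# These are assumptions for illustration, not measured values.
PCIE_SLOT_W = 75
EIGHT_PIN_W = 150


def board_power_budget(eight_pin_count: int) -> int:
    """Nominal power budget for a card with the given number of eight-pin connectors."""
    return PCIE_SLOT_W + eight_pin_count * EIGHT_PIN_W


budget_w = board_power_budget(2)   # slot + two eight-pin connectors
measured_peak_w = 300              # approximate peak reported in this review
founders_edition_w = 260           # Founders Edition figure cited in this review

headroom_w = budget_w - measured_peak_w
delta_vs_fe_w = measured_peak_w - founders_edition_w

print(f"Nominal budget: {budget_w}W")
print(f"Headroom at measured peak: {headroom_w}W")
print(f"Increase over Founders Edition: {delta_vs_fe_w}W")
```

Under those assumptions, the card's measured peak still sits comfortably inside what its connectors are nominally rated to deliver.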

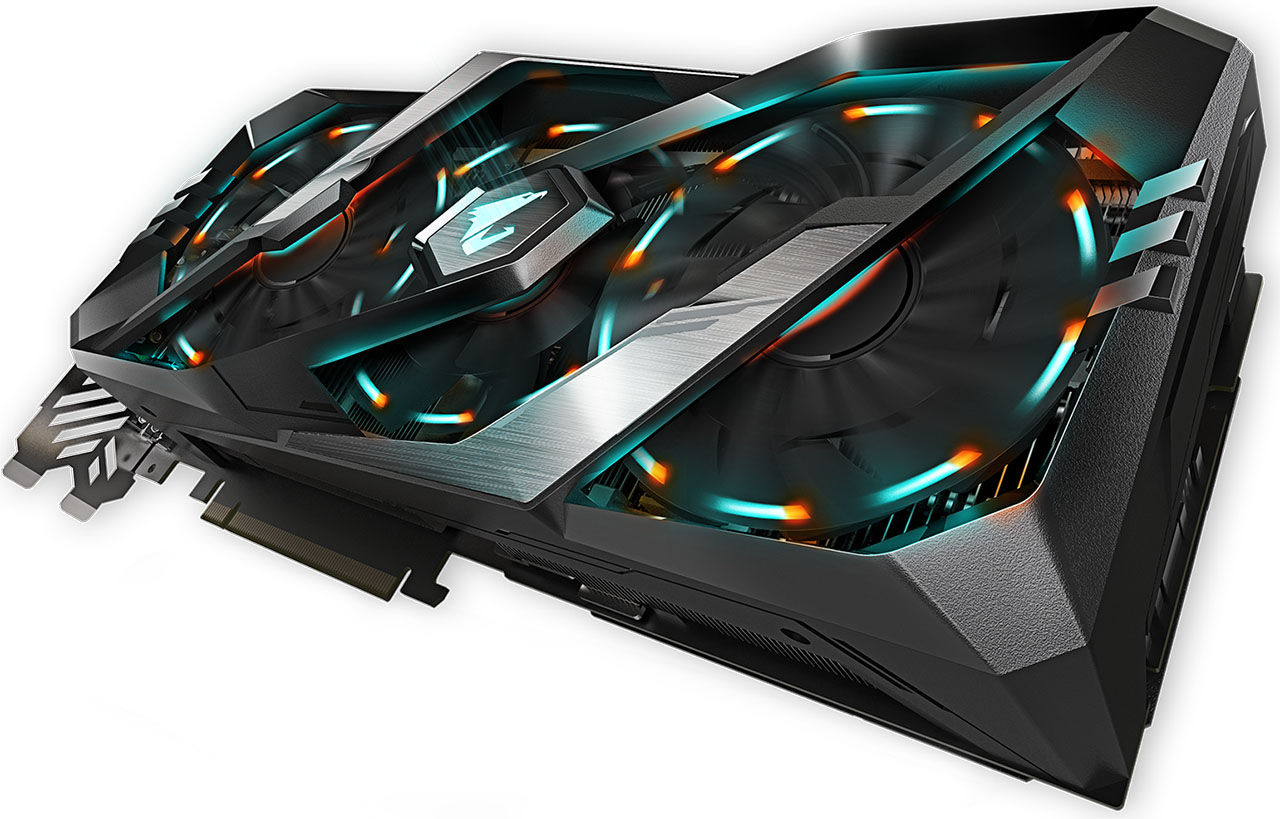

Coping with big power consumption requires a capable thermal solution. Gigabyte tops the TU102 processor with a substantial heat sink and three 100mm fans to keep Nvidia’s GPU cool. As a result, the GeForce RTX 2080 Ti Xtreme 11G is both large and heavy. According to our scale, it weighs about 3 lbs. (1,351g), making this the beefiest GeForce RTX 20-series card we’ve tested.

It isn’t the longest, though. Because Gigabyte’s fan shroud does not overhang the PCB, the card’s 11 ¼” (28.6cm) length fits better than cards like Asus’ ROG Strix GeForce RTX 2070 O8G Gaming when case clearance is an issue. Height is another matter entirely. To accommodate those fans, Gigabyte needed an especially tall shroud. So, from the bottom of its PCIe connector to the card’s top edge, you’re looking at roughly 5 ¼” (13.4cm). Depth might be an issue if you’re short on expansion slots. There are no 2.5-slot pretenses: this is a full three-slot card occupying 2 ⅜” (60mm) of space from the backplate to the widest part of its shroud.

The card’s thickness is pretty evenly split between its heat sink and fan shroud. Predominantly matte black plastic is accented by silver swoops and glossy black edges. Underneath the decorative cover, three 100mm fans in a stacked configuration overlap each other across the cooler’s length. Because the fans overlap and spin in opposite directions, Gigabyte claims its Windforce Stack 3X system yields complementary airflow from each of them, rather than inefficient turbulence. Interestingly, though, based on Gigabyte’s own diagrams and experiments, the middle and right fans blow down onto your motherboard, while the left and middle fans push air out the card’s top, right up against an overhang in the shroud.

The heat sink is split into two similarly-sized sections, each shaped differently to accommodate surface-mounted components below them. In the center, fins are cut to the same height, maximizing surface area. But the outer two-thirds of both sinks employ an angular fin design, with every other fin sitting lower. Gigabyte says this helps channel air through the fins while reducing noise.


The heat sink half closest to the display outputs sits on top of Nvidia’s TU102 processor. Seven heat pipes make direct contact with the GPU die. Two of them bend back into the sink’s top and bottom edges, ensuring thermal energy is dissipated evenly. Meanwhile, five pipes pass into the other sink, running all the way through to its far edge. A spacer under the GPU sink sandwiches thermal pads between itself and the GDDR6 memory modules surrounding TU102. Both sides also contact voltage regulation circuitry on the board to alleviate hot spots.

Rather than building its thermal solution onto a metal frame close to the GeForce RTX 2080 Ti Xtreme 11G’s PCB, Gigabyte’s backplate bolts directly to the heat sink in 10 places. A metal frame on top of the sink adds rigidity by keeping the thermal solution from flexing. Even then, this is an extremely heavy card to hang from a PCIe slot, so Gigabyte bundles a stand meant to support the card by pushing up from your bottom-mounted PSU or the floor of your chassis.

Beyond souped-up cooling, lighting is also a major component of this card’s Aorus Xtreme branding. RGB LEDs above each fan shine down onto a single pipe that takes the light out onto one fan blade. Gigabyte’s RGB Fusion 2.0 software allows you to control the effects those LEDs create, from smooth pulses and gradients to more jarring strobes and flashes. Backlit Aorus logos on the board’s top and back are synchronized to the fans, ensuring your scheme of choice is visible from any angle.

Gigabyte does enable a semi-passive mode called 3D Active Fan, which keeps the fans from spinning during idle periods. Just be aware that turning this feature on turns off the fan LEDs, leaving you with illuminated logos and a Fan Stop indicator along the top edge.

Just above the Fan Stop indicator is a pair of eight-pin power connectors rotated 180 degrees to avoid hanging up on the heat sink underneath them. LEDs just above each connector help diagnose power supply issues. When they’re off, everything’s good. If you forget to run a cable from your PSU, they light up. Or, if power is intermittent, they blink to indicate an abnormality. At the other end of the top edge, an NVLink interface is protected by an orange plastic piece. The integrated cover on Nvidia’s Founders Edition card is definitely classier-looking.

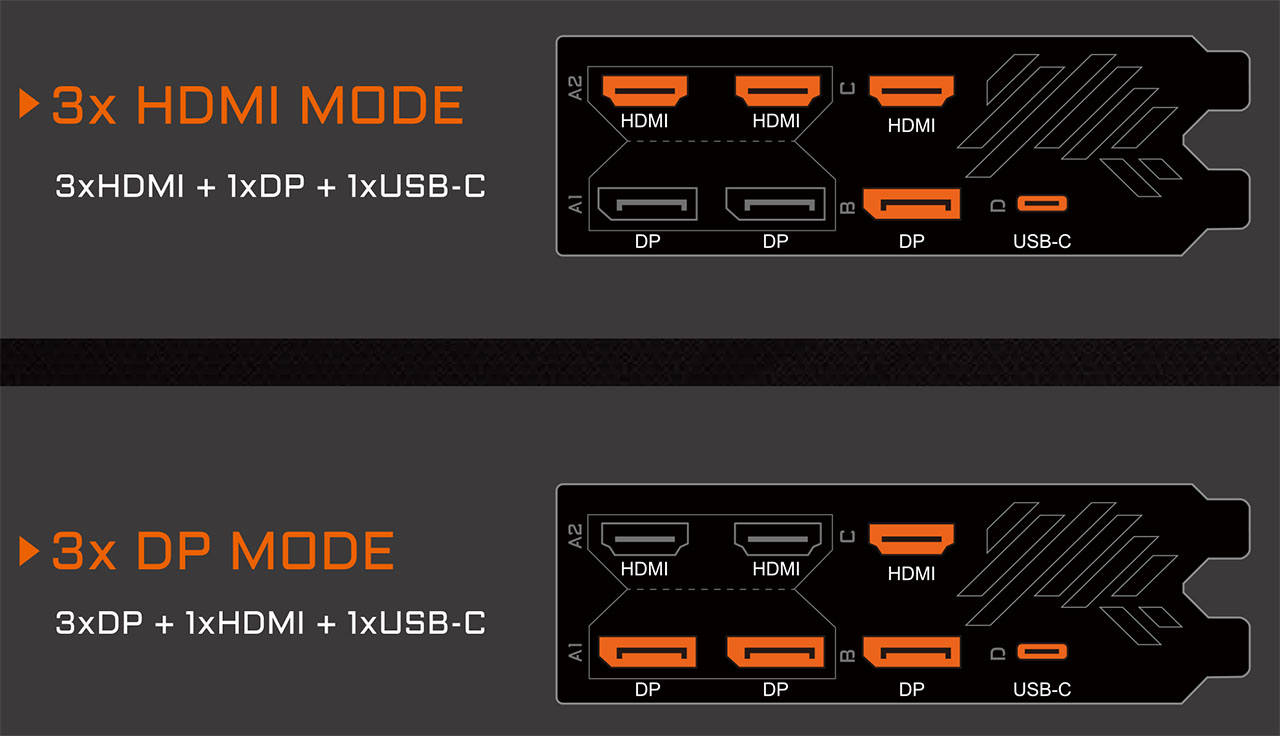

Because the heat sink’s fins move air up and down, there is no need for ventilation on the expansion bracket. Gigabyte instead fills its two-slot cover with seven display outputs: three DisplayPort connectors, three HDMI ports, and one USB Type-C interface. Of course, TU102 can only drive four displays simultaneously, so the seven connectors must be utilized via two distinct modes. At any given time, either two HDMI ports or two DP interfaces are turned off. Kudos to Gigabyte, though, for giving gamers gobs of connectivity and saving them from annoying adapters.

A metal backplate gives the two heat sink pieces something solid to screw into. It also hosts the Aorus logo, which is backlit and controllable through Gigabyte’s RGB Fusion 2.0 software. The plate is solid, covering the PCB from end to end. There are no ventilation holes for trapped heat to escape from. However, pads behind the GPU do draw thermal energy away from TU102.

| | Aorus GeForce RTX 2080 Ti Xtreme 11G | GeForce RTX 2080 Ti FE | GeForce RTX 2080 FE | GeForce GTX 1080 Ti FE |
| --- | --- | --- | --- | --- |
| Architecture (GPU) | Turing (TU102) | Turing (TU102) | Turing (TU104) | Pascal (GP102) |
| CUDA Cores | 4352 | 4352 | 2944 | 3584 |
| Peak FP32 Compute | 15.4 TFLOPS | 14.2 TFLOPS | 10.6 TFLOPS | 11.3 TFLOPS |
| Tensor Cores | 544 | 544 | 368 | N/A |
| RT Cores | 68 | 68 | 46 | N/A |
| Texture Units | 272 | 272 | 184 | 224 |
| Base Clock Rate | 1350 MHz | 1350 MHz | 1515 MHz | 1480 MHz |
| GPU Boost Rate | 1770 MHz | 1635 MHz | 1800 MHz | 1582 MHz |
| Memory Capacity | 11GB GDDR6 | 11GB GDDR6 | 8GB GDDR6 | 11GB GDDR5X |
| Memory Bus | 352-bit | 352-bit | 256-bit | 352-bit |
| Memory Bandwidth | 616 GB/s | 616 GB/s | 448 GB/s | 484 GB/s |
| ROPs | 88 | 88 | 64 | 88 |
| L2 Cache | 5.5MB | 5.5MB | 4MB | 2.75MB |
| TDP | 300W | 260W | 225W | 250W |
| Transistor Count | 18.6 billion | 18.6 billion | 13.6 billion | 12 billion |
| Die Size | 754 mm² | 754 mm² | 545 mm² | 471 mm² |
| SLI Support | Yes (x8 NVLink, x2) | Yes (x8 NVLink, x2) | Yes (x8 NVLink) | Yes (MIO) |

What lives under the Aorus GeForce RTX 2080 Ti Xtreme 11G’s hood is already well-known. We dug deep into the TU102 graphics processor and its underlying architecture in Nvidia’s Turing Architecture Explored: Inside the GeForce RTX 2080. Gigabyte takes the same graphics processor with 4,352 of its CUDA cores enabled and bumps the typical GPU Boost rating up to 1,770 MHz (versus the Founders Edition card’s 1,635 MHz and the reference 1,545 MHz).
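The peak FP32 compute figures in the table follow directly from core count and GPU Boost clock. As a quick sketch, assuming each CUDA core retires one fused multiply-add (two floating-point operations) per clock, the numbers can be reproduced like this. The function name is ours, and the result is a theoretical ceiling rather than measured throughput.

```python
def peak_fp32_tflops(cuda_cores: int, boost_mhz: float) -> float:
    """Theoretical peak FP32 rate, assuming 2 FLOPs (one FMA) per core per clock."""
    flops = 2 * cuda_cores * boost_mhz * 1e6
    return flops / 1e12


# (CUDA cores, GPU Boost clock in MHz), taken from the spec table and text above.
cards = {
    "Aorus RTX 2080 Ti Xtreme 11G": (4352, 1770),
    "RTX 2080 Ti FE": (4352, 1635),
    "RTX 2080 Ti reference": (4352, 1545),
    "RTX 2080 FE": (2944, 1800),
    "GTX 1080 Ti FE": (3584, 1582),
}

for name, (cores, mhz) in cards.items():
    print(f"{name}: {peak_fp32_tflops(cores, mhz):.1f} TFLOPS")
```

Gigabyte's 135 MHz bump over the Founders Edition's rated boost works out to roughly 8% more theoretical throughput from the same silicon.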

The Gigabyte card's 11GB of GDDR6 memory moves data at 14 Gb/s, matching Nvidia’s reference design. Despite their similarities, though, this overclocked model is consistently faster than the smaller, cheaper Founders Edition model.
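The 616 GB/s memory bandwidth figure in the table is simply the bus width multiplied by the per-pin data rate. Here is a minimal sketch of that calculation. The helper name is ours, and the data rates for the two comparison cards are not stated in this review, so treat them as assumptions drawn from those cards' public specifications.

```python
def memory_bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak memory bandwidth in GB/s: bits per transfer x transfers per second / 8."""
    return bus_width_bits * data_rate_gbps / 8


# RTX 2080 Ti: 352-bit bus at 14 Gb/s per pin (both figures from this review).
print(memory_bandwidth_gbs(352, 14))   # 616.0

# Assumed data rates (not stated in this review): 14 Gb/s GDDR6, 11 Gb/s GDDR5X.
print(memory_bandwidth_gbs(256, 14))   # RTX 2080 FE
print(memory_bandwidth_gbs(352, 11))   # GTX 1080 Ti FE
```

Because Gigabyte leaves the memory at Nvidia's reference data rate, the Xtreme 11G's advantage over the Founders Edition comes from its higher GPU clock, not from extra bandwidth.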

Most of Gigabyte’s graphics cards include three years of warranty coverage. Certain SKUs, however, come with an optional four-year guarantee after online registration in the first 30 days of ownership. The Aorus GeForce RTX 2080 Ti Xtreme 11G is one such product, giving it a definite advantage over competing cards without that extra bit of protection.

How We Tested Gigabyte’s Aorus GeForce RTX 2080 Ti Xtreme 11G

Gigabyte’s latest will no doubt be found in one of the many high-end CPU/motherboard platforms now available from AMD and Intel. Our graphics station still employs an MSI Z170 Gaming M7 motherboard with an Intel Core i7-7700K CPU at 4.2 GHz, though. The processor is complemented by G.Skill’s F4-3000C15Q-16GRR memory kit. Crucial’s MX200 SSD remains, joined by a 1.4TB Intel DC P3700 loaded down with games. Special thanks go to Noctua for sending over a batch of NH-D15S coolers. These top our graphics testing platform, our special power consumption measurement rig, and the system we use for testing temperature/fan speed in a closed case.

As far as competition goes, the Aorus GeForce RTX 2080 Ti Xtreme 11G is really in a league of its own, competing against other GeForce RTX 2080 Ti cards, Titan RTX, and Titan V. We include all of those in our performance benchmarks, along with GeForce RTX 2080, GeForce RTX 2070, GeForce GTX 1080 Ti, Titan X, GeForce GTX 1070 Ti, and GeForce GTX 1070 from Nvidia. AMD is represented by the Radeon RX Vega 64 and Radeon RX Vega 56. All of the comparison cards are either Founders Edition or reference models.

Our list of benchmarks received some attention ahead of today’s review. It now includes Ashes of the Singularity: Escalation, Battlefield V, Destiny 2, Far Cry 5, Forza Motorsport 7, Grand Theft Auto V, Metro: Last Light Redux, Rise of the Tomb Raider, Tom Clancy’s The Division, Tom Clancy’s Ghost Recon Wildlands, The Witcher 3, and Wolfenstein II: The New Colossus.

The testing methodology we're using comes from PresentMon: Performance In DirectX, OpenGL and Vulkan. In short, these games are evaluated using a combination of OCAT and our own in-house GUI for PresentMon, with logging via GPU-Z.
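For readers who want to crunch their own logs, here is a hedged sketch of how per-frame intervals (for example, values from the MsBetweenPresents column that PresentMon has written to its CSV output) might be reduced to an average frame rate and a 99th-percentile frame time. This is not our in-house tool. The function name, the nearest-rank percentile method, and the sample data are all assumptions for illustration.

```python
def summarize_frame_times(frame_times_ms: list[float]) -> dict[str, float]:
    """Reduce per-frame intervals (milliseconds) to a few summary metrics."""
    if not frame_times_ms:
        raise ValueError("need at least one frame time")

    # Average FPS is frames rendered divided by total elapsed time.
    total_seconds = sum(frame_times_ms) / 1000.0
    avg_fps = len(frame_times_ms) / total_seconds

    # Nearest-rank 99th percentile: the frame time that 99% of frames do not exceed.
    ordered = sorted(frame_times_ms)
    rank = (99 * len(ordered) + 99) // 100   # integer ceil(0.99 * n)
    p99_ms = ordered[rank - 1]

    return {
        "avg_fps": avg_fps,
        "p99_frame_time_ms": p99_ms,
        "p99_fps": 1000.0 / p99_ms,
    }


# Hypothetical run: 98 smooth frames at 10 ms plus two 50 ms hitches.
sample = [10.0] * 98 + [50.0] * 2
summary = summarize_frame_times(sample)
print(summary)
```

Averaging over elapsed time, rather than averaging instantaneous frame rates, keeps a handful of very fast frames from inflating the result, while the percentile figure exposes the hitches an average hides.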

As we generate new data, we’re using new drivers. For Nvidia, that means testing the two new games with build 417.22 (all of the Gigabyte card’s numbers are generated with that driver, too). The Founders Edition cards are tested with 416.33 (2070) and 411.51 (2080 and 2080 Ti). Older Pascal-based boards are tested with build 398.82. Titan V’s results were spot-checked with 411.51 to ensure performance didn’t change. AMD’s cards utilize Crimson Adrenalin Edition 18.8.1 (except for the Battlefield V and Wolfenstein tests, which are tested with Adrenalin Edition 18.11.2).


NinjaNerd56: I’ll keep the GTX 1060 machine I have... that cost IN TOTAL 1/2 of what this card goes for... and ray trace around all the money I have left.

tomspown: The prices stated for the Vega 56 and 64 are there to make the price of the 2080 Ti less wow, but the real price of the Vega 56 is 370 euro and the Vega 64 449 euro. I really hate it when Tom's H. uses overblown prices on AMD products just to make the competition a good buy.

jimmysmitty (replying to tomspown): I am not sure where they get their pricing, but they did the same with the RX 590 review with an overpriced 1060. I think it's a site algorithm that pulls prices and not a person, as any normal person can easily find better deals.

delaro: I would think Nvidia would have learned a lesson on overpricing cards with gimmicky tags... well, I guess not, and no wonder their stock tanked. I wouldn't think it's all that hard to understand: selling 20,000 cards @ $1300+ or 250,000 @ $200? Over the last 15 years, what range of cards has sold the most, and which one gets released last in line? Splatting a big RTX label and charging a premium on a GPU when software is still 12 months behind was just stupid, and we can add it to the many other gimmicks Nvidia has come up with over the years that failed to draw sales: "PhysX, early stages of VR, Hairworks, and now RTX." These cards are indeed nice, but adding that extra premium just for "RTX" is just bad marketing.

redgarl: How could a card with such a bad value proposition score that high?

It is insane that you guys are now accepting Nvidia's price-gouging behavior as something normal.... IT IS NOT!

This card offers the worst value ever.

ingtar33: Obviously a paid ad in the form of a review. For shame, THG.

About ready to retire this site for good at this point; first the "just buy it because there is a price to NOT be an early adopter" tripe, then a 4.5/5.0 for any NVIDIA RTX product is just a slap in the face of the site's readers.

Clearly this is about the ad bucks; not sure how your reviewers can look themselves in the mirror anymore.

cosmin.matei86: Any1 with a 1070 Ti or above is absolutely fine at 1440p resolution. My card scores about 60 fps in the last Tomb Raider and 52 in the AC Odyssey benchmark. In game, with dips to just under 40. Coupled with a high-refresh G-Sync monitor, I don't even notice low framerate.

I'll say pass to shitty RTX. I cannot believe how people could pay 1300 for a gimmick like rays. A gimmick that you will find in 0.0001% of the time played in the 2 existing games. It's like getting the gold frame. I would have preferred they invested in better, more advanced physics and marketed that.

Phaaze88 (replying to cosmin.matei86):

Not to mention, the current gen of RTX is only optimized for 1080p!

RTX Off: too powerful for 1080p. Ideal for 1440 and 4k

RTX On: Works best at 1080p. But for 1440 and 4k? NOPE.

The core feature of these cards(flagship) is useless to folks with higher res monitors, while being too powerful without said feature for those on lower res ones. This is why I'm passing on this gen's flagship.

It's just a bad investment all around.

And the 2060 won't fix this either:

RTX Off: great for 1080p.

RTX On: Not going to be able to run max settings like the 2070(?) and up, but hey, the overall build will be better balanced at least?

If they can keep the RTX train running, I'll hop aboard when they can do RTX On: 1440p, 100+hz.

delaro (replying to Phaaze88):

It's not that the hardware isn't powerful enough. It's that the APIs are a year behind being able to render it without massive performance issues. So yes, it makes these premium cards now seem gimmicky and overinflated in price.

In a way they are listening by releasing Turing cards without the RTX badge at a closer-to-normal price. The issue here is the market is flooded, and Nvidia is going to rush out Turing with 2X the badge numbers they normally release. Glad I moved all my Nvidia stock to AMD when Ryzen launched.


lorfa: "this is an extremely heavy card to hang from a PCIe slot, so Gigabyte bundles a stand meant to support the card by pushing up from your bottom-mounted PSU or the floor of your chassis."

This made me lol, what a time we are in.