Hot Vega: Gigabyte Radeon RX Vega 56 Gaming OC 8G Review


Board & Power Supply

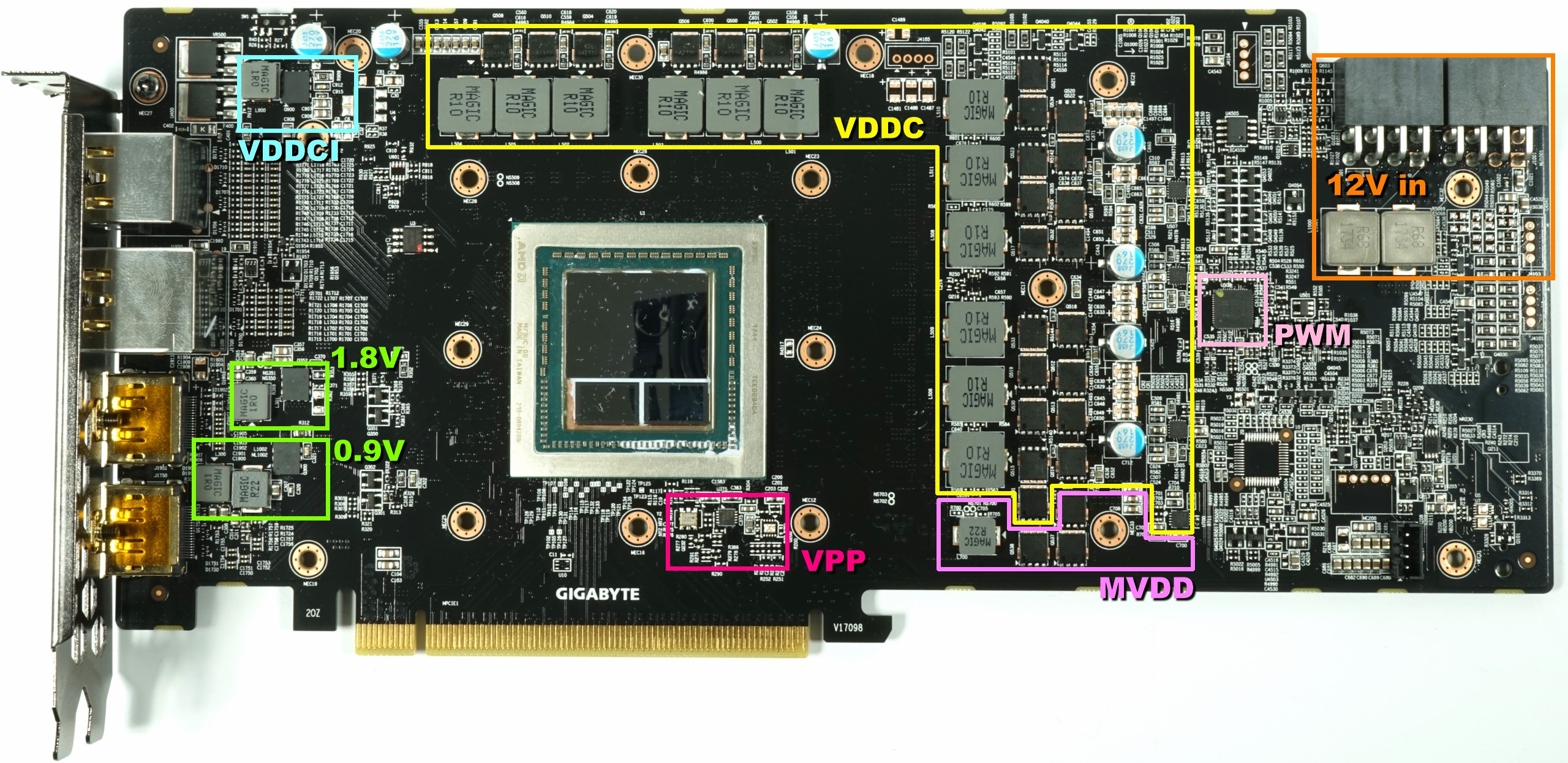

Board Layout

Gigabyte deviates significantly from AMD's reference board. Unfortunately, this also means that the design is incompatible with most existing full-cover water blocks, and even Raijintek's popular Morpheus air cooler is not an option. Like AMD, Gigabyte implements six doubled power phases for the VDDC (12 voltage converters in total) and one phase for the memory (MVDD). However, the way those components are oriented is bound to cause problems for third-party coolers.

The sources of other auxiliary voltages are also visible in our layout diagram.

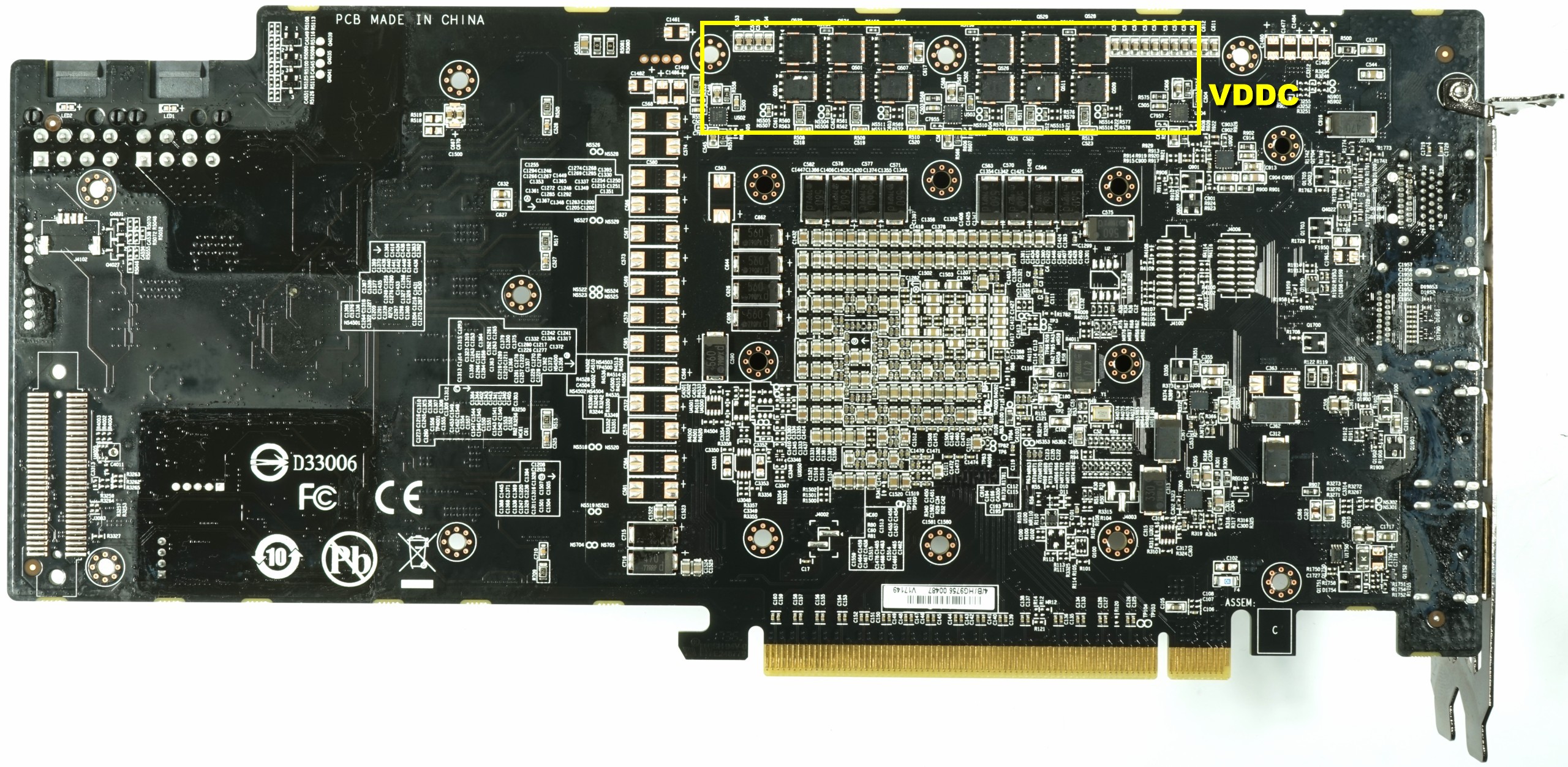

A look at the board's back side reveals that half of the most heavily loaded low-side MOSFETs have been relocated there. So, it's safe to assume that ~30% of all voltage converter losses (and the associated heat) occur on the back of the card. Naturally, then, it isn't enough to dissipate heat from the board's front alone; cooling is required on both sides.
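The ~30% estimate follows from simple proportion. The sketch below is our reading of it, not the review's stated method: it assumes that back-side losses come only from the relocated low-side MOSFETs (ignoring the three IR3598 drivers that also sit on the back) and that those MOSFETs share load evenly. Under those assumptions, the estimate implies that the low-side MOSFETs account for roughly 60% of total converter losses. The function names are hypothetical.

```python
def back_side_share(low_side_loss_share: float, fraction_on_back: float = 0.5) -> float:
    """Fraction of total VRM loss dissipated on the back of the PCB.

    Assumption (hypothetical model): only the relocated low-side MOSFETs
    contribute back-side losses, and they all carry equal load.
    """
    return low_side_loss_share * fraction_on_back


def implied_low_side_share(back_share: float, fraction_on_back: float = 0.5) -> float:
    """Invert back_side_share(): the low-side loss share a back-side estimate implies."""
    return back_share / fraction_on_back


# Apply the review's ~30% back-side estimate with half the low-side FETs on the back.
print(round(implied_low_side_share(0.30), 2))
```

Any driver or high-side losses on the back would lower the low-side share needed to reach the same ~30%, so the 60% figure is an upper bound under this simple model.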

Gigabyte relies on two eight-pin auxiliary power connectors to complement the 16-lane PCIe slot. According to our measurements, the slot delivers a maximum of ~25W, so those two connectors supply the rest of the card's power.
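For context, here is a rough power-budget sketch. The limits come from the PCI Express CEM specification (75W for a x16 graphics slot, 150W per eight-pin auxiliary connector). The 250W total board power in the example is a hypothetical input, not a measurement from this review, and the even split between the two connectors is an assumption.

```python
PCIE_X16_SLOT_LIMIT_W = 75.0  # PCIe CEM limit for a x16 graphics slot
EIGHT_PIN_LIMIT_W = 150.0     # PCIe CEM rating per eight-pin auxiliary connector


def aux_connector_load(total_board_power_w: float,
                       slot_power_w: float = 25.0,
                       n_eight_pin: int = 2) -> dict:
    """Split board power between the PCIe slot and the eight-pin connectors.

    slot_power_w defaults to the ~25W maximum measured at the slot.
    Assumption: the connectors share the remaining load evenly.
    """
    aux_total_w = total_board_power_w - slot_power_w
    return {
        "aux_total_w": aux_total_w,
        "per_connector_w": aux_total_w / n_eight_pin,
        "aux_headroom_w": n_eight_pin * EIGHT_PIN_LIMIT_W - aux_total_w,
        "spec_ceiling_w": PCIE_X16_SLOT_LIMIT_W + n_eight_pin * EIGHT_PIN_LIMIT_W,
    }


# Hypothetical 250W total board power.
print(aux_connector_load(250.0))
```

Because the slot carries so little, nearly all of the card's power, and therefore most of the headroom question, rests on the two eight-pin connectors.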

A once-over of the PCB suggests that Gigabyte omitted many components found on its pre-production sample. The list of missing features begins with a Holtek microcontroller for RGB control and ends with a second BIOS IC, which we miss a lot more. In the end, what remains is a cut-down version of what started as a much more ambitious project.

GPU Power Supply (VDDC)

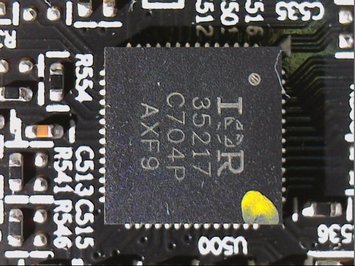

As with AMD's reference design, the GPU supply is built around International Rectifier's IR35217, a dual-output multi-phase controller that provides six phases for the GPU and an additional phase for the memory. But again, there are 12 regulator circuits, not just six. This is the result of doubling, which distributes the load from each phase between two regulator circuits.

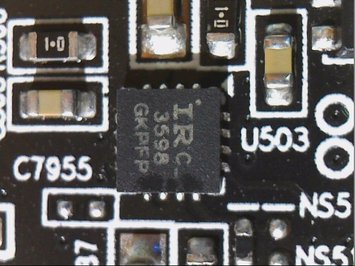

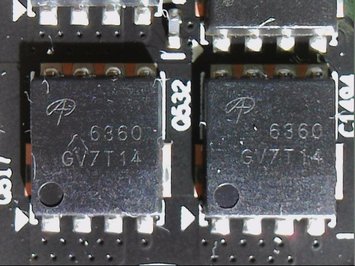

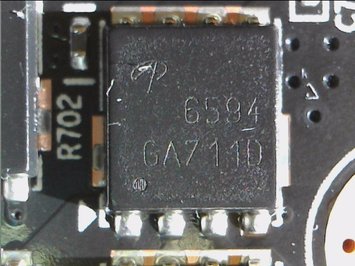

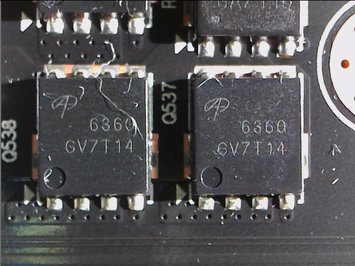

Six IR3598 interleaved MOSFET drivers, three on the front and three on the back, are responsible for this doubling. The actual voltage conversion for each of the 12 regulator circuits is handled by one Alpha & Omega Semiconductor AON6594 on the high side and two AON6360s in parallel on the low side. This is an inexpensive but acceptable choice of components, especially since the parallel arrangement also means that thermal hot-spots are distributed more evenly.
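To illustrate what doubling and paralleling do to the load on each component, here is a minimal sketch. It assumes ideal, perfectly even current sharing, and the 180A GPU current is a hypothetical figure chosen for round numbers; the review does not state one. Conduction loss is modeled as I²·R per MOSFET, which ignores switching losses.

```python
def vrm_current_split(total_current_a: float, phases: int = 6,
                      doubling: int = 2, low_side_parallel: int = 2) -> dict:
    """Per-circuit and per-MOSFET current for a doubled VRM (idealized even sharing)."""
    circuits = phases * doubling  # 6 controller phases x 2 = 12 regulator circuits
    per_circuit_a = total_current_a / circuits
    # Parallel low-side MOSFETs split one circuit's current between them.
    per_low_side_a = per_circuit_a / low_side_parallel
    return {"circuits": circuits, "per_circuit_a": per_circuit_a, "per_low_side_a": per_low_side_a}


def relative_conduction_loss(n_parallel: int) -> float:
    """Total I^2*R loss of n identical FETs in parallel vs. one FET carrying the full current.

    Each FET carries I/n and dissipates (I/n)^2 * R, so together the n FETs
    dissipate I^2 * R / n, spread across n packages.
    """
    return n_parallel * (1.0 / n_parallel) ** 2


split = vrm_current_split(180.0)  # hypothetical 180A GPU current
print(split)
print(relative_conduction_loss(2))
```

With two AON6360s sharing each circuit's low-side current, each package dissipates a quarter of what a single FET would, and the pair together dissipates half. That is why the parallel arrangement spreads thermal hotspots more evenly.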


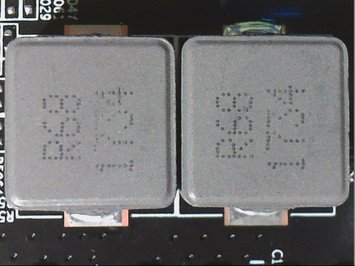

Gigabyte uses Magic chokes for both the VDDC and MVDD. At only 10nH for the VDDC, however, these encapsulated ferrite-core coils are rather small.

The single MVDD phase uses more typical coils with an inductance of 22nH, although we've seen 33nH chokes on other cards.

Memory Power Supply (MVDD)

As mentioned, the memory's power is also controlled by International Rectifier's IR35217. One phase is fully sufficient for this card, since its HBM2 draws comparatively little power. As with the VDDC, one AON6594 on the high side and two AON6360s in parallel on the low side are used.

Additional Voltage Converters

Generating the VDDCI isn't a very difficult task, but it's an important one, since this voltage regulates the transition between the GPU's internal signal levels and the memory's. It's essentially the I/O bus voltage between the GPU and memory. In addition, two constant sources of 1.8V and 0.9V are supplied.

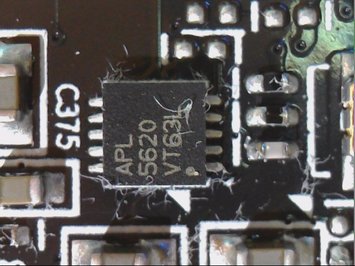

Underneath the GPU, there's an Anpec APL5620 low-dropout linear regulator, which provides the very low voltage for the phase-locked loop (PLL) area.

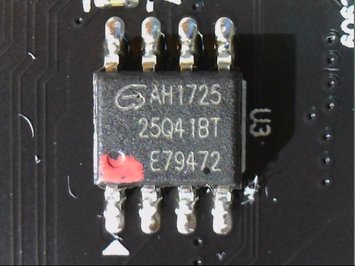

Everything else is standard fare. Only the single BIOS chip stands out. Gigabyte naturally leaves off the switch you'd use to toggle between BIOSes on a dual-firmware board, although there is a designated space for it on the PCB.

A 68nH ferrite-core coil helps to suppress spikes and other potential by-products of the power supply.


Igor Wallossek wrote a wide variety of hardware articles for Tom's Hardware, with a strong focus on technical analysis and in-depth reviews. His contributions have spanned a broad spectrum of PC components, including GPUs, CPUs, workstations, and PC builds. His insightful articles provide readers with detailed knowledge to make informed decisions in the ever-evolving tech landscape.


marcelo_vidal: With the prices of those GPUs :) I'll get a 2400G and play at 720p. Maybe with a little tweaking I can boost to 1920x1080.

Sakkura: This thing about board partners only getting a few thousand Vega 10 GPUs goes back many months now. Has AMD just not been making any more? What the heck is going on?

Seems like Gigabyte did a really nice job making an affordable yet effective cooling solution for Vega 56. It's really a shame it goes to waste because there just aren't any chips available.

CaptainTom: To those complaining about the low supply (and resulting high prices) of AIB cards:

It's because the reference cards are still selling very well (at least for their supply). If vendors can sell the $500 Vega 64 for $600 and sell out, why would they bother wasting time on any other model?

g-unit1111, quoting CaptainTom: "To those complaining about the low supply (and resulting high prices) of AIB cards: It's because the reference cards are still selling very well (at least for their supply). If vendors can sell the $500 Vega 64 for $600 and sell out, why would they bother wasting time on any other model?"

That's because miners are the ones buying the cards as fast as they come in stock. It's us gamers and enthusiasts who are waiting for the high-performance models. The bad thing is, we don't matter to the bottom line. All they see and want is our precious money, and they don't care what model they sell to us.

aelazadne: Because vendors making money doesn't equal AMD making money. AMD is losing market share in the GPU scene. With Vega unable to keep up with demand, AMD is losing customers who would have bought Radeons but instead go with Nvidia due to availability. The lack of availability dating back to August, and the fact that even now in early 2018 the Vegas are overpriced and hard to find, ruins customer confidence. In fact, this situation is so bad that the only people benefiting are the ones gouging on both Nvidia cards and Radeon cards, because at this point there is NO COMPETITION.

Also, just because you are gouging doesn't mean you are making money. AMD has to make money, and it needs to sell these things in a certain volume. In their contracts with vendors, they will require their vendors to sell a certain number of Vegas in order to order more. Due to scarcity, the only companies making money are retailers. AMD is going to have to address this issue; otherwise, its investors will begin to come after it for bungling so badly that its market share dropped. Literally, the Intel screw-up plus Ryzen being good has been a godsend for AMD. They do not need a declining GPU market share sparking a debate with investors over whether AMD should get out and play the Intel game.

bit_user: I really appreciate the thorough review.

The superimposed heatpipes vs. GPU picture was a very nice touch. For any of you who missed it, check out page 6 (Cooling & Noise), about a third to half of the way down.

bit_user, quoting CaptainTom: "It's because the reference cards are still selling very well (at least for their supply). If vendors can sell the $500 Vega 64 for $600 and sell out, why would they bother wasting time on any other model?"

I think you're too cynical. It's an ASIC supply problem. The AIB partners would probably spend the time if they could get enough GPUs to sell custom boards in enough volume to offset the overhead of doing the extra design work.

The only real way out of this is for AMD to design a more cost-effective chip with the graphics units removed. That would divert miners' interest away from its graphics products.

bit_user: Almost as surprising to me as how much more oomph they got out of Vega 56 is how well the stock Vega 64 is holding up against the stock GTX 1080. Is it just me, or did AMD really gain some ground since launch?