How To Find Large Files on Any Linux Machine

Locate the files that are consuming your disk space on your Linux machine

We’ve all reached that point on a system where we start to run out of storage space. Do we buy more storage, perhaps one of the best SSDs, or do we quickly find the largest files and deal with them? In this how-to we will look at a few simple approaches to help us maintain and manage our filesystems.

All the commands in this article will work on most Linux machines. We’ve used an Ubuntu LTS install, but you could also follow this how-to on a Raspberry Pi. Everything is done in the terminal. If you’re not already at the command line, you can open a terminal window on most Linux machines by pressing Ctrl+Alt+T or by searching for the terminal app in your applications menu.

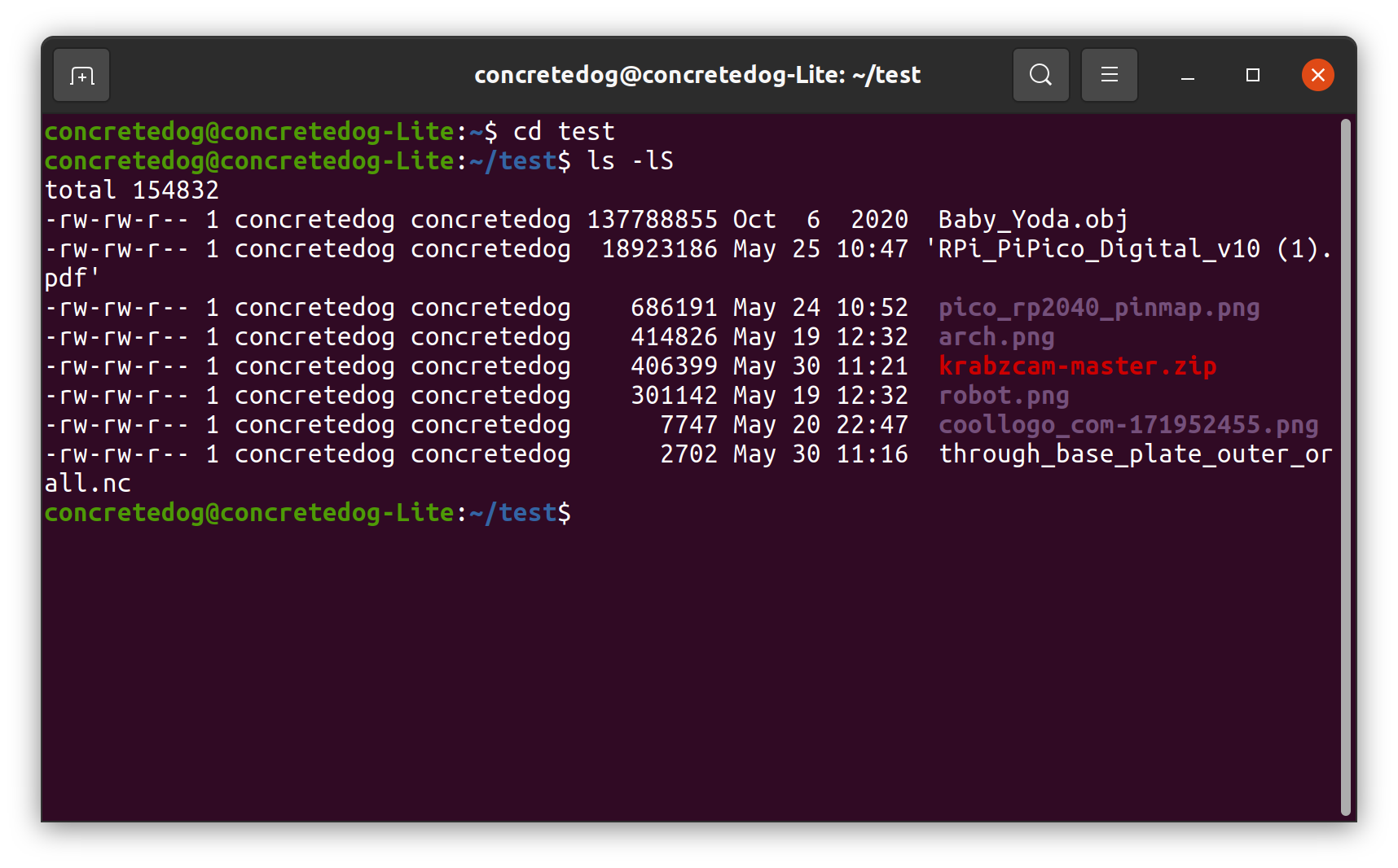

Listing Files In Size Order Using the ls Command in Linux

The ls command is used to list the contents of a directory in Linux. By adding the -lS arguments we get a long listing (-l) with the results ordered by file size (-S). We have copied a collection of files into a test directory to show this command, but it can be run in any directory you choose.

To list the directory contents in descending file size order, use the ls command with the -lS arguments. You will see the largest files at the top of the list, descending to the smallest files at the bottom.

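ls -lS

While this command is useful for a quick look, it only covers a single directory and, by default, shows sizes in bytes (adding -h, as in ls -lSh, prints human-readable sizes such as 150M). So how can we search more widely for the largest files in Linux and display their size?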
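For a quick side-by-side, here is a minimal sketch that builds a scratch directory containing three files of known size (the file names are made up for the example) and lists them with ls -lSh. It assumes GNU coreutils, which provides truncate for creating the test files.

```shell
#!/usr/bin/env bash
# Sketch: list files largest-first with human-readable sizes.
# big.bin, medium.bin and small.txt are hypothetical test files.
tmpdir=$(mktemp -d)
trap 'rm -rf "$tmpdir"' EXIT   # remove the scratch directory when the script exits

# truncate creates sparse files; ls reports their apparent size, which is all we need here
truncate -s 150M "$tmpdir/big.bin"
truncate -s 20M  "$tmpdir/medium.bin"
truncate -s 1K   "$tmpdir/small.txt"

cd "$tmpdir" || exit 1

# -l long listing, -S sort by size (largest first), -h human-readable sizes
ls -lSh

# Names only, still in size order (one per line when not writing to a terminal)
order=$(ls -S)
printf '%s\n' "$order"
```

Dropping -h gives the same order with sizes in plain bytes, which is what ls -lS shows.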

Identifying Files Larger Than a Specified Size in Linux

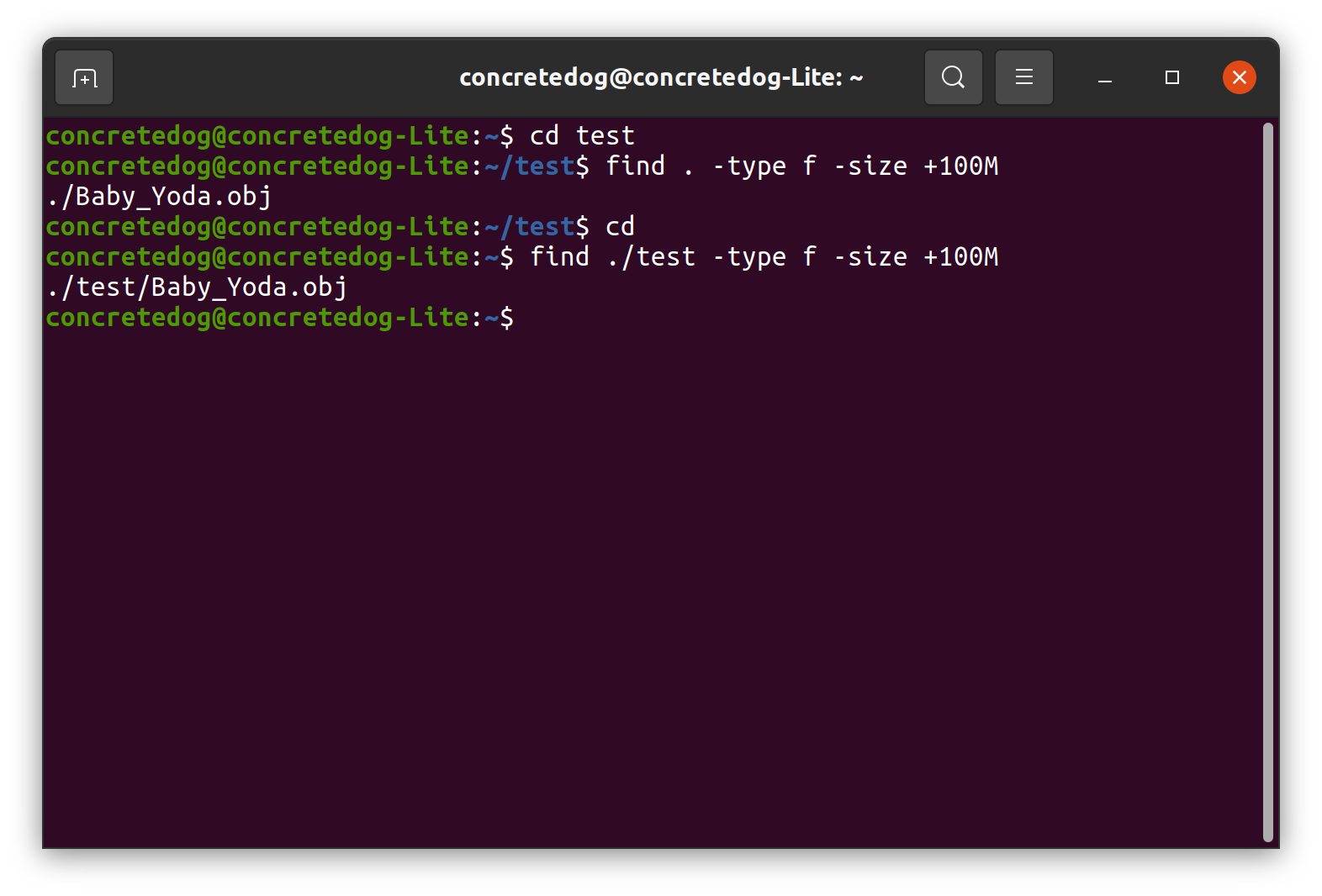

In another article, we explained how to find files in Linux using the find command to search based on a filename or part of a filename. We can also use find with the -size argument to set a size threshold, so that any file larger than the specified size is returned.
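A small self-contained sketch of the -size test, assuming GNU find and coreutils: it creates hypothetical test files with truncate (find measures their apparent size, so sparse files work) and searches for anything over 100M. The file sitting at exactly 100 MiB is deliberately left out of the results, because find rounds sizes up to whole units and +100M means strictly more than 100 of them.

```shell
#!/usr/bin/env bash
# Sketch: find files above a size threshold in a throwaway directory.
tmpdir=$(mktemp -d)
trap 'rm -rf "$tmpdir"' EXIT

mkdir -p "$tmpdir/test/models"
# Hypothetical test files of known size
truncate -s 150M "$tmpdir/test/models/large.obj"
truncate -s 100M "$tmpdir/test/exactly100.obj"
truncate -s 50M  "$tmpdir/test/small.obj"

# Regular files strictly larger than 100 MiB (1 MiB = 1,048,576 bytes)
large=$(find "$tmpdir/test" -type f -size +100M)
printf '%s\n' "$large"

# Same search, but hand the matches to ls so each one is shown with its size
find "$tmpdir/test" -type f -size +100M -exec ls -lh {} +
```

The -exec ls -lh {} + form passes all matches to a single ls call, which is one way to display the size of each result next to its path.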

1. Use find to search for any file larger than 100MB in the current directory. We are working inside our test directory, and the “.” tells find to search the current directory. The -type f argument restricts the results to regular files, and -size +100M returns only files larger than 100MB (find’s M suffix means mebibytes, units of 1,048,576 bytes). Only one file in our test folder, Baby_Yoda.obj, is larger than 100MB.

find . -type f -size +100M

2. Use the same command, but this time specify a path to search by replacing the “.” with that path. Here we first return to our home directory with cd, then search the test directory from there.

cd

find ./test -type f -size +100M

Searching the Whole Linux Filesystem For Large Files

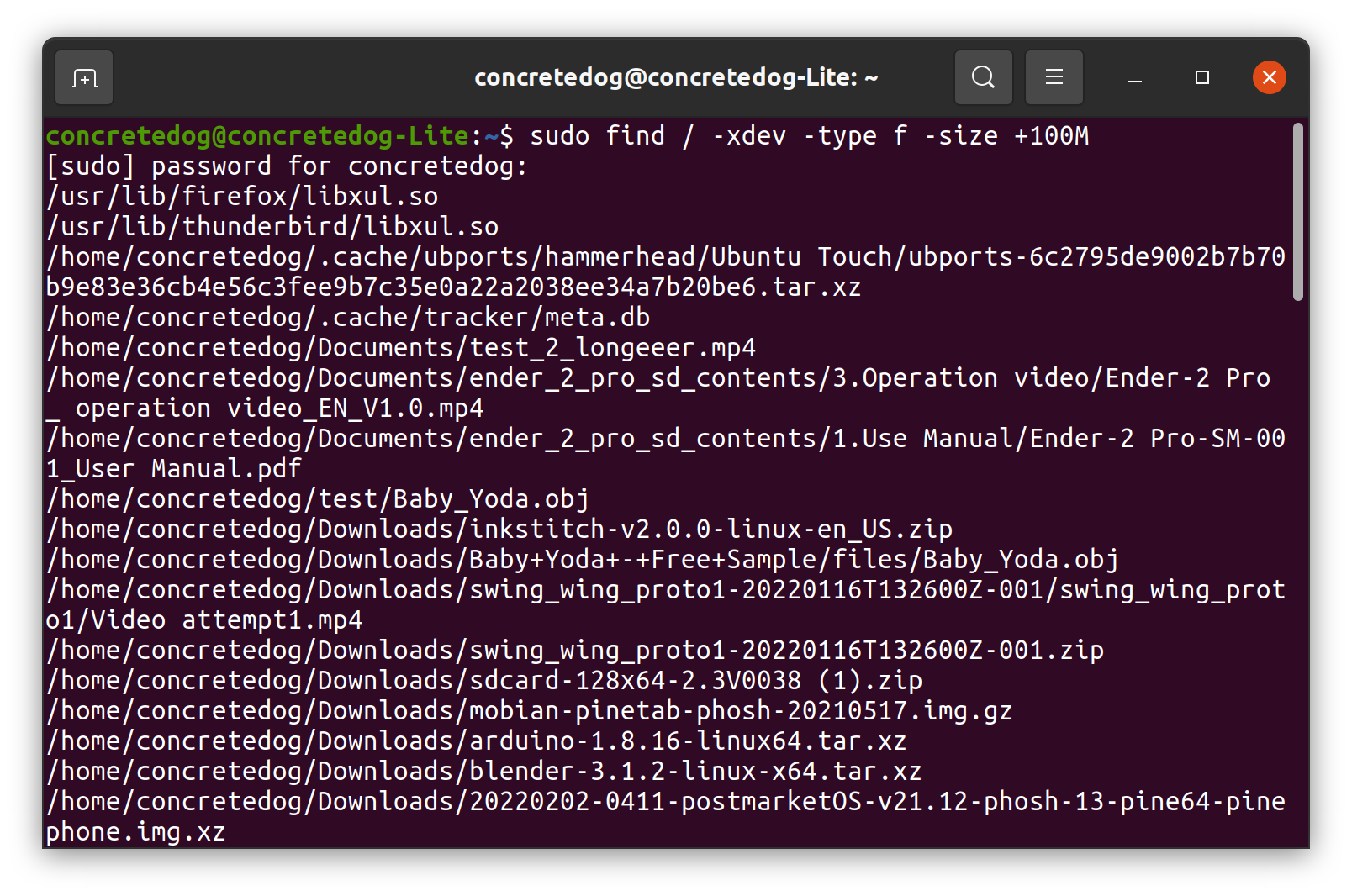

It’s sometimes useful to search the whole Linux filesystem for large files, as we may have forgotten files hidden away in our home directory that need removing. To search the entire filesystem we need to run the command with sudo, because many directories can only be read by root. We can limit the search to the current filesystem with the -xdev argument, for example when we suspect the files we seek are on our main filesystem. Leaving out -xdev also includes results from other mounted filesystems, such as an attached USB drive.
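Building on the -xdev idea, the sketch below wraps find in a small shell function, list_big_files (a hypothetical name, not a standard command), that prints each match as its size in bytes followed by its path, largest first. It relies on GNU find’s -printf, which may be missing from minimal implementations such as BusyBox, and it runs against a scratch directory so it can be tried without root. The commented line at the end shows the equivalent whole-filesystem command.

```shell
#!/usr/bin/env bash
# list_big_files ROOT THRESHOLD (hypothetical helper, not a standard tool):
# prints "size-in-bytes<TAB>path" for regular files that exceed THRESHOLD,
# staying on ROOT's filesystem (-xdev) and sorted largest first.
# Error messages (for example "Permission denied") are discarded.
list_big_files() {
  find "$1" -xdev -type f -size "+$2" -printf '%s\t%p\n' 2>/dev/null | sort -nr
}

tmpdir=$(mktemp -d)
trap 'rm -rf "$tmpdir"' EXIT

# Hypothetical test files
truncate -s 300M "$tmpdir/backup.img"
truncate -s 120M "$tmpdir/video.mp4"
truncate -s 10M  "$tmpdir/notes.pdf"

result=$(list_big_files "$tmpdir" 100M)
printf '%s\n' "$result"

# On a real machine (root is needed to read every directory):
#   sudo find / -xdev -type f -size +100M -printf '%s\t%p\n' 2>/dev/null | sort -nr | head -n 20
```

Printing raw byte counts keeps the numeric sort exact; drop -xdev from the real command to include other mounted filesystems.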

1. Open a terminal.

2. Search the current filesystem for files larger than 100MB. As we are invoking root privileges using sudo, we will need to enter our password. Note that we use / so that the search starts from the root of the filesystem, while -xdev keeps it on the filesystem mounted there (if /home is on a separate partition, it will be skipped).

sudo find / -xdev -type f -size +100M

3. Search all filesystems for files larger than 100MB. For this example, connect a USB drive containing a collection of files, including some over 100MB in size. Scroll through the returned results and you should see that the larger files on the USB drive are included.

sudo find / -type f -size +100M

Finding the 10 Largest Linux Files on Your Drive

What are the ten largest files or directories on our machine? How large are they and where are they located? With a little Linux command line magic we can find them with a single line of commands.

1. Open a terminal.

2. Use the du command to search all files and then use two pipes to format the returned data.

du -aBM reports the disk usage of every file (-a) as well as every directory, with sizes given in megabytes (-BM, units of 1,048,576 bytes).

/ is the root directory, the starting point for the search.

2>/dev/null will send any errors to /dev/null ensuring that no errors are printed to the screen.

| sort -nr is a pipe that sends the output of the du command to sort, which orders it numerically (-n) and in reverse (-r), so the largest entries come first.

| head -n 10 will list the top ten files/directories returned from the search.

sudo du -aBM / 2>/dev/null | sort -nr | head -n 10

3. Press Enter to run the command. It will take a little time, as it needs to check every directory on the filesystem. Once complete, it will return the ten largest files and directories along with their sizes and locations. Because each directory’s total includes everything inside it, expect directories such as / and /usr near the top of the list.
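Two variations on this pipeline may also help. The sketch below, run against hypothetical scratch files, swaps -BM for -h together with sort -rh (GNU sort’s human-numeric sort) so that sizes read as 3.0M or 2.0G, and then ranks individual files only, since du -a mixes directories and their cumulative totals into the list. The files-only ranking uses GNU find’s -printf and reports apparent size in bytes rather than disk usage.

```shell
#!/usr/bin/env bash
# Sketch: two variations on the du | sort | head pipeline, run on scratch data.
tmpdir=$(mktemp -d)
trap 'rm -rf "$tmpdir"' EXIT

mkdir -p "$tmpdir/photos" "$tmpdir/docs"
# Hypothetical files filled with random bytes, so du (which measures
# allocated disk space) sees real data rather than sparse holes
head -c $((3 * 1024 * 1024)) /dev/urandom > "$tmpdir/photos/a.raw"
head -c $((2 * 1024 * 1024)) /dev/urandom > "$tmpdir/photos/b.raw"
head -c $((1 * 1024 * 1024)) /dev/urandom > "$tmpdir/docs/c.pdf"

# 1. Human-readable: du -h prints sizes like 3.0M; sort -h understands
#    those suffixes, and -r puts the largest entries first.
du -ah "$tmpdir" | sort -rh | head -n 10

# 2. Files only: rank regular files by apparent size in bytes.
top_files=$(find "$tmpdir" -type f -printf '%s\t%p\n' | sort -nr | head -n 10)
printf '%s\n' "$top_files"
```

For a whole-system scan, the same pattern would be run from / with sudo, as in the du command above.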

With this collection of commands, you have several ways to identify and locate large files in Linux. It’s extremely useful when you need to quickly pick out big files for deletion to free up precious disk space. As always, take care when poking around your filesystem to ensure you aren’t deleting something critical!

Jo Hinchliffe is a UK-based freelance writer for Tom's Hardware US. His writing is focused on tutorials for the Linux command line.