Graphics Card Makers Rolling Out Radeon RX Vega 56 Graphics Cards

Now that AMD has officially launched the Radeon RX Vega 56, it should come as no surprise that add-in board makers around the globe are releasing cards based on this new GPU. If you haven’t done so already, we highly recommend reading our Radeon RX Vega 56 review for a complete rundown on features, specifications, and performance.

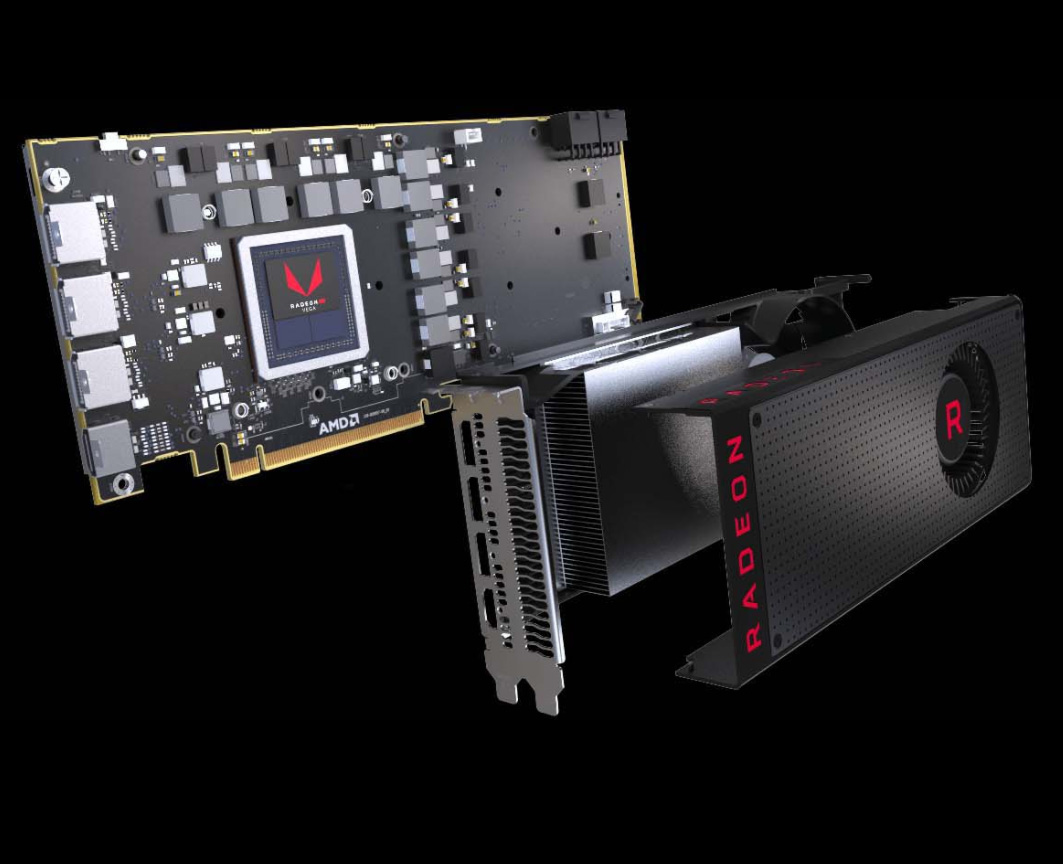

First of all, let’s talk about what these cards from Asus, XFX, Sapphire, and Gigabyte have in common. All Radeon RX Vega 56 graphics cards feature the Vega 10 GPU, which is manufactured on a 14nm FinFET LPP process and contains close to 12.5 billion transistors. The GPU is equipped with four Asynchronous Compute Engines, four next-gen geometry units, 56 next-gen compute units, and 3,584 stream processors. It also sports 224 texture units and 4MB of L2 cache, and it is paired with 8GB of HBM2 memory.

All the Radeon RX Vega 56 graphics cards mentioned here have a core clock speed of 1,156MHz and a boost clock speed of 1,471MHz. We will no doubt see factory-overclocked versions of this graphics card from various vendors in the future.

Radeon RX Vega 56 series cards also support bridgeless CrossFire for running more than one GPU simultaneously. They support AMD FreeSync technology, which eliminates screen tearing and stutter, as well as AMD Eyefinity for a panoramic multi-screen gaming experience on up to four monitors.

The RX Vega 56 cards from Sapphire, XFX, and Gigabyte are reference designs that use a blower-style fan, which exhausts hot air out the rear of the card, under a red and black plastic shroud. The heatsink features a large direct-contact copper vapor chamber with aluminum fins bonded to its surface; the fins absorb the heat and are in turn cooled by the blower fan.

Asus has distinguished its RX Vega 56 by offering a non-reference cooler. This card features a large custom-designed heatpipe heatsink that, according to the company, provides 40% more surface area than its previous dual-slot design. Asus also included three of its patented “wing-blade” fans and RGB lighting to boot.

As of this writing, pricing and availability have not been announced. Depending on demand and supply, we expect most of the reference-design cards listed above to carry an MSRP close to $400, while graphics cards with custom cooling solutions will no doubt command a higher price. It’s too early to tell what effect Ethereum mining will have on pricing and availability.


We reached out to each of the companies listed above for more details.

| Specification | Radeon RX Vega 56 |
|---|---|
| Clock Speed | Core: 1,156MHz; Boost: 1,471MHz |
| Memory Clock | 1.6 Gb/s |
| Memory Bus | 2,048-bit |
| Memory Type | HBM2 |
| DirectX | 12 |
| OpenGL | 4.5 |
| PCB Form | ATX |
| I/O | 1x HDMI 2.0b, 3x DisplayPort 1.4 |
| Multi-view | 4 |
| Power Requirement | 650W |

Steven Lynch is a contributor for Tom’s Hardware, primarily covering case reviews and news.


Lazovski It would be interesting to see how many of these cards could be upgraded to Vega 64 via firmware.

RomeoReject I'm also curious about that. I know some people found themselves with 8GB RX 480s after reflashing their 4GB ones.

Sam Hain All I know is that ads on Tom's pages with high (or any) volume are really annoying. Especially when one loads after another and another, etc. Advertising is important, but spare us the volumetric delights of it, please.

Agente Silva AMD totally crapped on its "fanbase". I never had an nVidia card until last year... I bought one 1080 for 4K, and after 5 months I bought another 1080 for SLI. It runs games like butter. No microstutter, 100% compatibility in the games I play, and none of the so-called problems reported by the "naysayers" xD

The main factor that made me go nVidia was actually power consumption. After choosing Pascal, I knew I would run SLI in the future, and my research showed me that even with an 850W PSU (80+) I could come up short on power.

For some years I had an R9 280X CrossFire setup for Eyefinity (3 screens). Initially I had a 750W (80+) PSU that simply couldn't deliver the necessary power draw, so I swapped it for the 850W mentioned above... One good thing was that I could literally turn off the room heater during long gaming weekend sessions in winter.

Agente Silva *Edit: I knew I needed 2 cards for smooth high/ultra 4K, and with AMD an 850W (80+) PSU wouldn't be enough. With nVidia it's more than enough :)

RomeoReject Either you're full of it, or your PSU sucked. I used an OCZ 750W for years with my CrossFire XFX R9 280X setup, with zero issues. Hell, the only reason I ended up changing PSUs is that I gave that PSU to my brother for his build and got an 850W for the same cost.

Agente Silva It's rather impolite of you to frame your opinion in those terms. I have no reason to lie. I should clarify that it was a 7970 GHz Edition paired with a PowerColor R9 280X. In terms of performance, an R9 280X was essentially a rebranded 7970, but it could draw less power, which put a 750W PSU on the verge of being OK. But these are just crumbs next to the main subject. AMD is power hungry, always was, and the FreeSync argument isn't reason enough to buy a Vega 64. Of course it's an enthusiast card, and an enthusiast rig probably already has a >750W PSU. If not, don't be surprised by shutdowns...