Redfall GPU Benchmarks: AMD, Intel and Nvidia Cards Tested

Unreal Engine meets vampires, DLSS 3, and open world gameplay

Redfall arrives on PC and consoles starting May 2 (or May 1, depending on your time zone). We received early access to the game for testing and review purposes, and since we're Tom's Hardware, that primarily means testing. We've benchmarked the game on many of the best graphics cards to see how it performs and what settings are best for various GPUs.

Redfall uses Unreal Engine 4, just like Dead Island 2, so this makes for a contrasting view of sorts to our Dead Island 2 PC benchmarks. Redfall also deals with the undead, though here it's vampires rather than zombies. But that's about where the similarities end, at least as far as the games are concerned. Redfall is being promoted by Nvidia and it features DLSS 3. It also supports FSR 2.1 and XeSS, however, so non-Nvidia users aren't left out.

It's an open world game (with two different maps, though once you leave the first map there's no going back). The game comes from Arkane Austin, part of Arkane Studios, the developer behind the Dishonored series as well as Prey, Deathloop, and Wolfenstein: Youngblood. Hopefully the Arkane heritage comes through, though we'll have to see as we haven't played through much of the story.

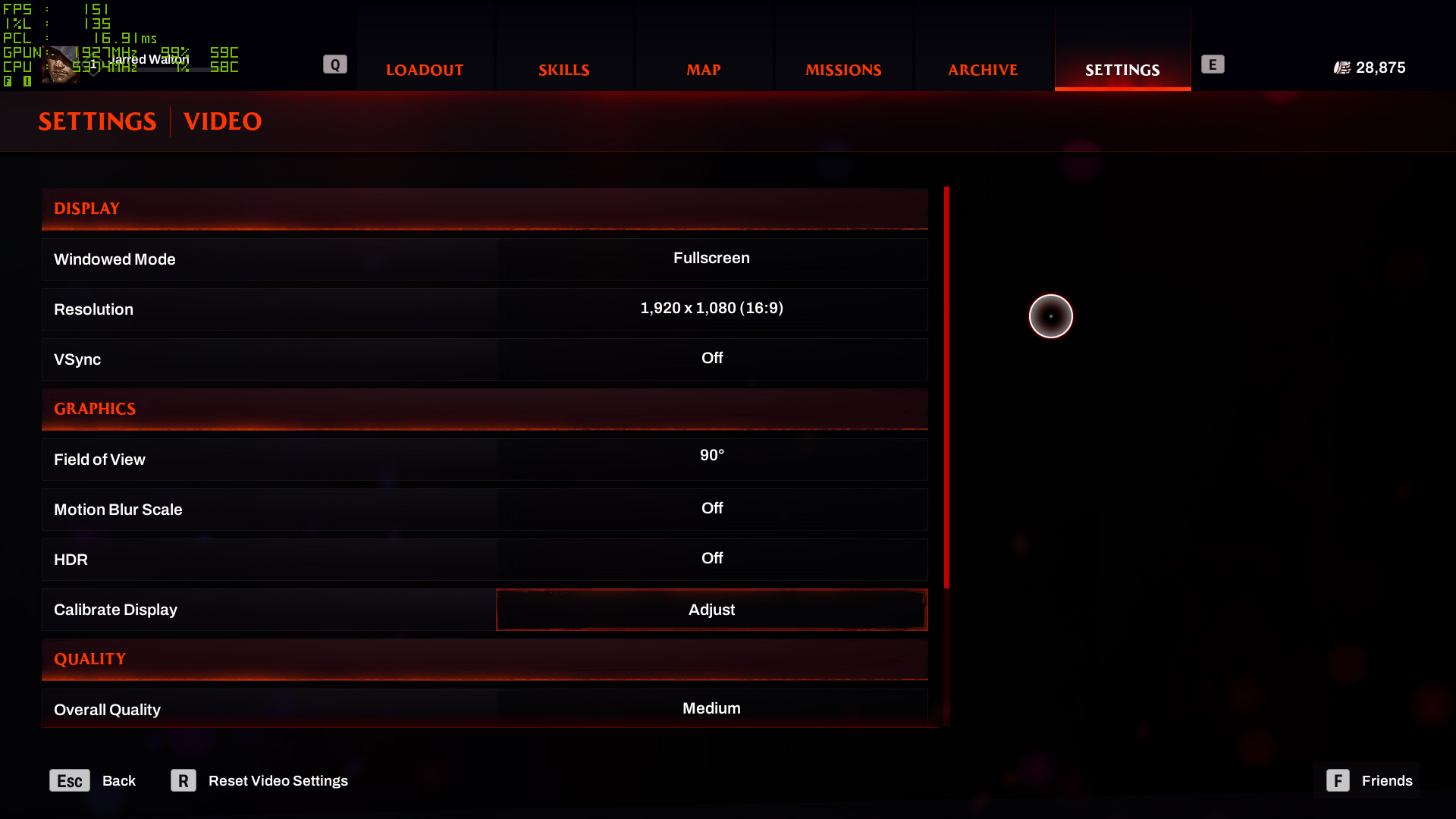

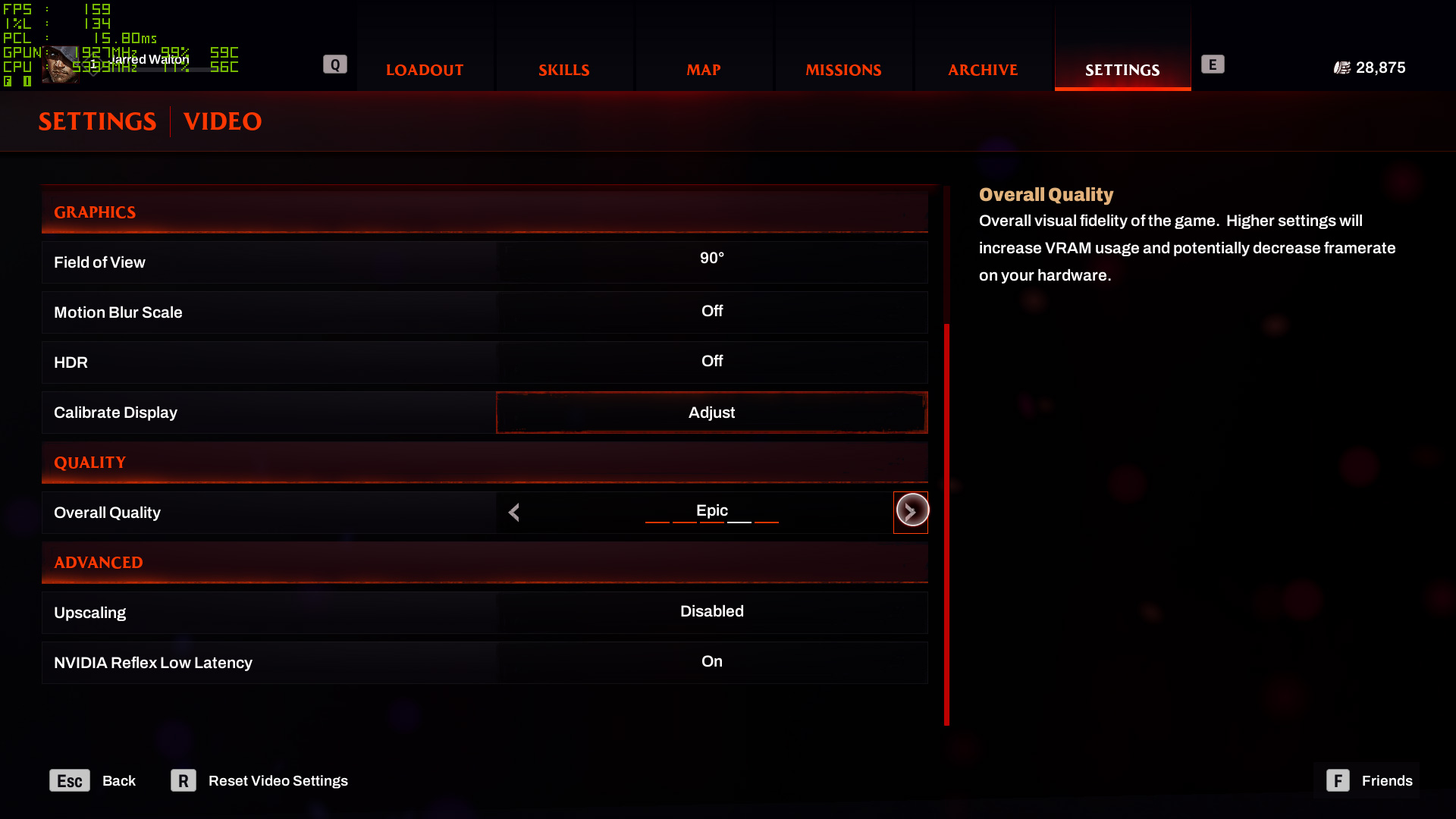

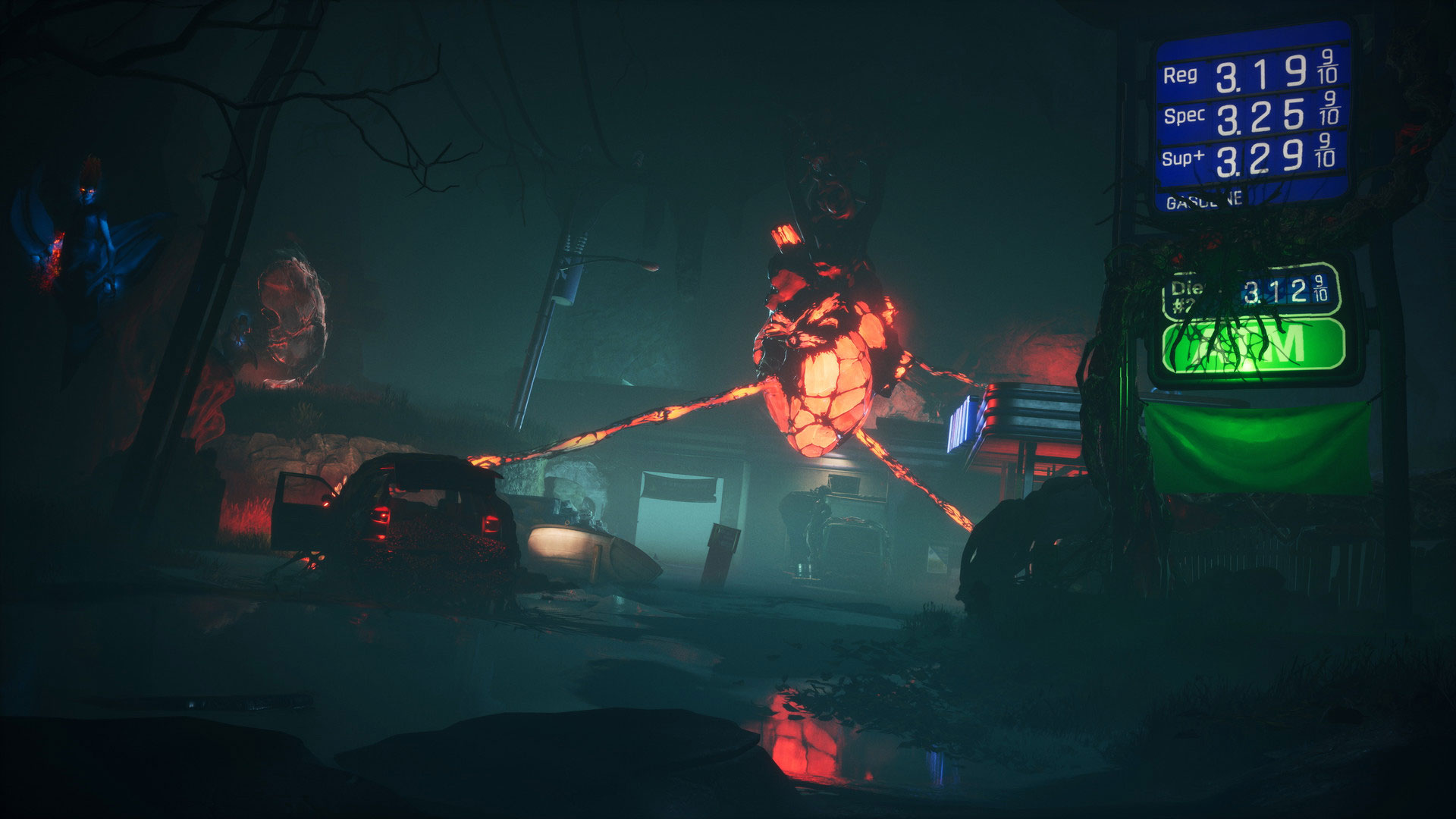

Redfall Settings

Redfall uses Unreal Engine, with four graphics presets plus custom: Low, Medium, High, and Epic. Unlike Dead Island 2, there's a more noticeable difference between the various settings, and Epic in particular provides a pretty substantial upgrade to the visuals. Real-time shadows move across the environment, and the lighting in general just looks a lot better.

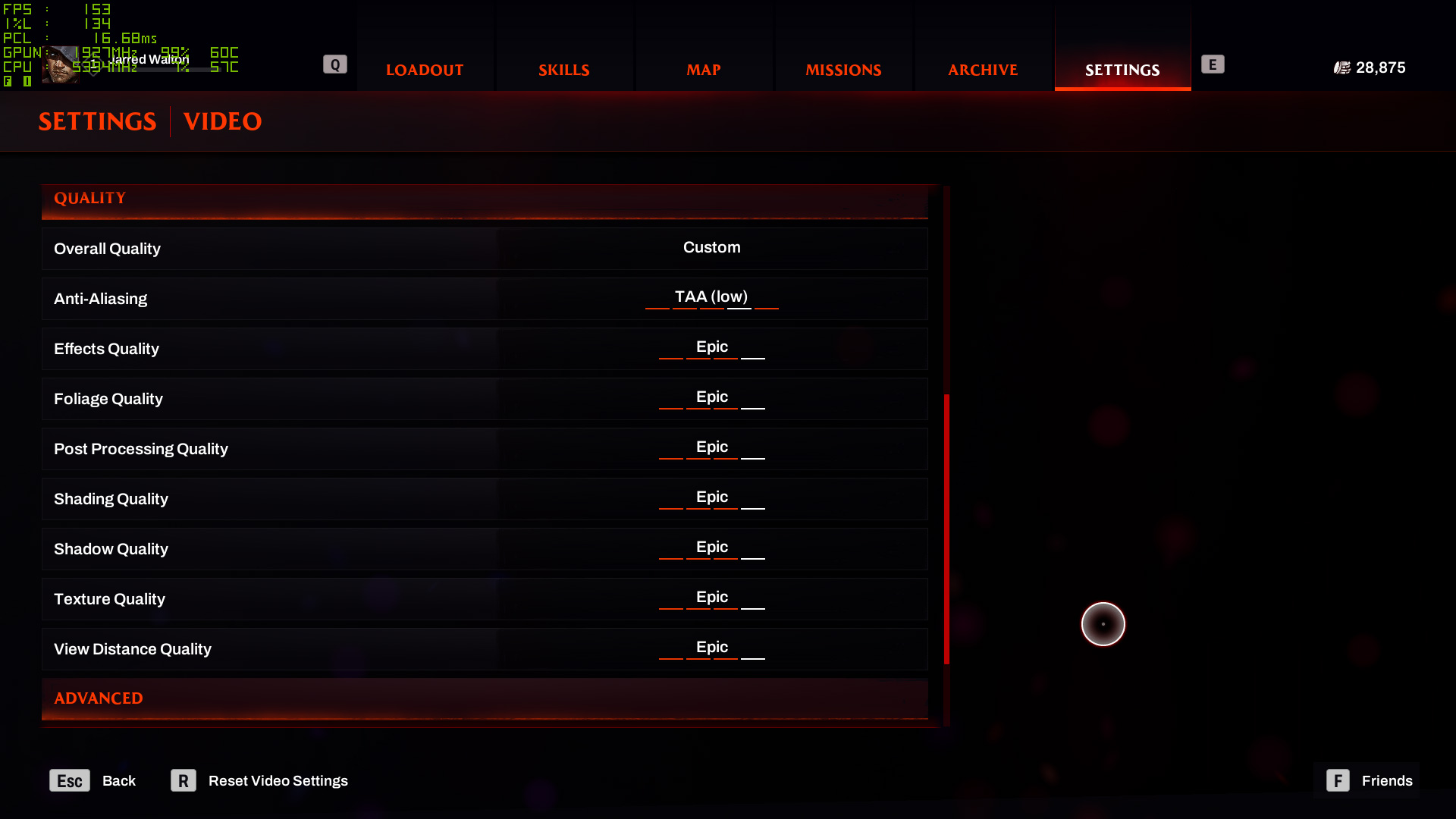

One of the noticeable changes between the presets is anti-aliasing (AA). Low and Medium have weak AA: it's disabled on Low, and I think Medium uses FXAA Low. You can't confirm the exact setting, as all the custom options are hidden when a preset is selected, but there are lots of jaggies either way. High and Epic appear to use "TAA Low" rather than "TAA High," though there's not a huge difference between the two.

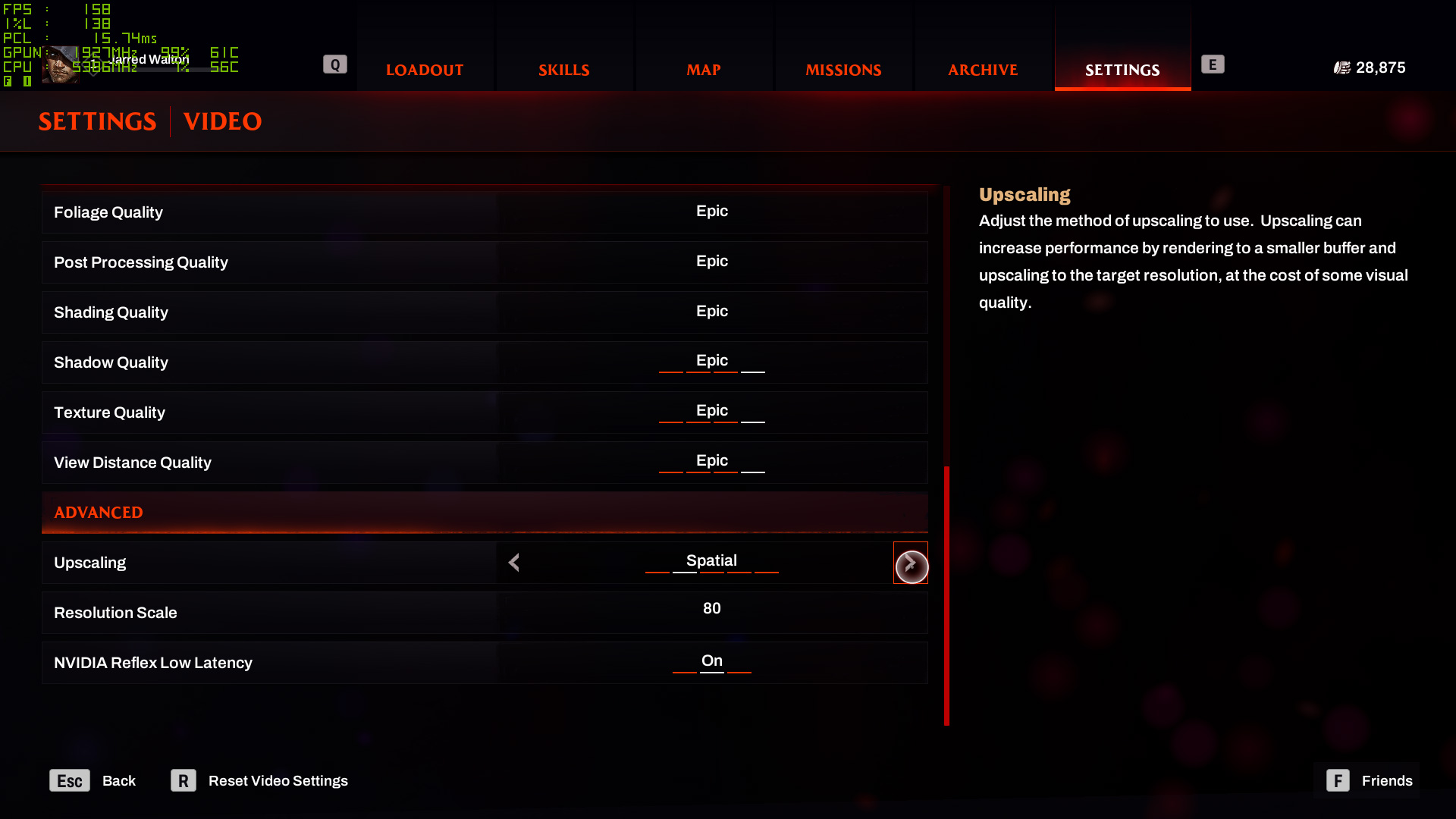

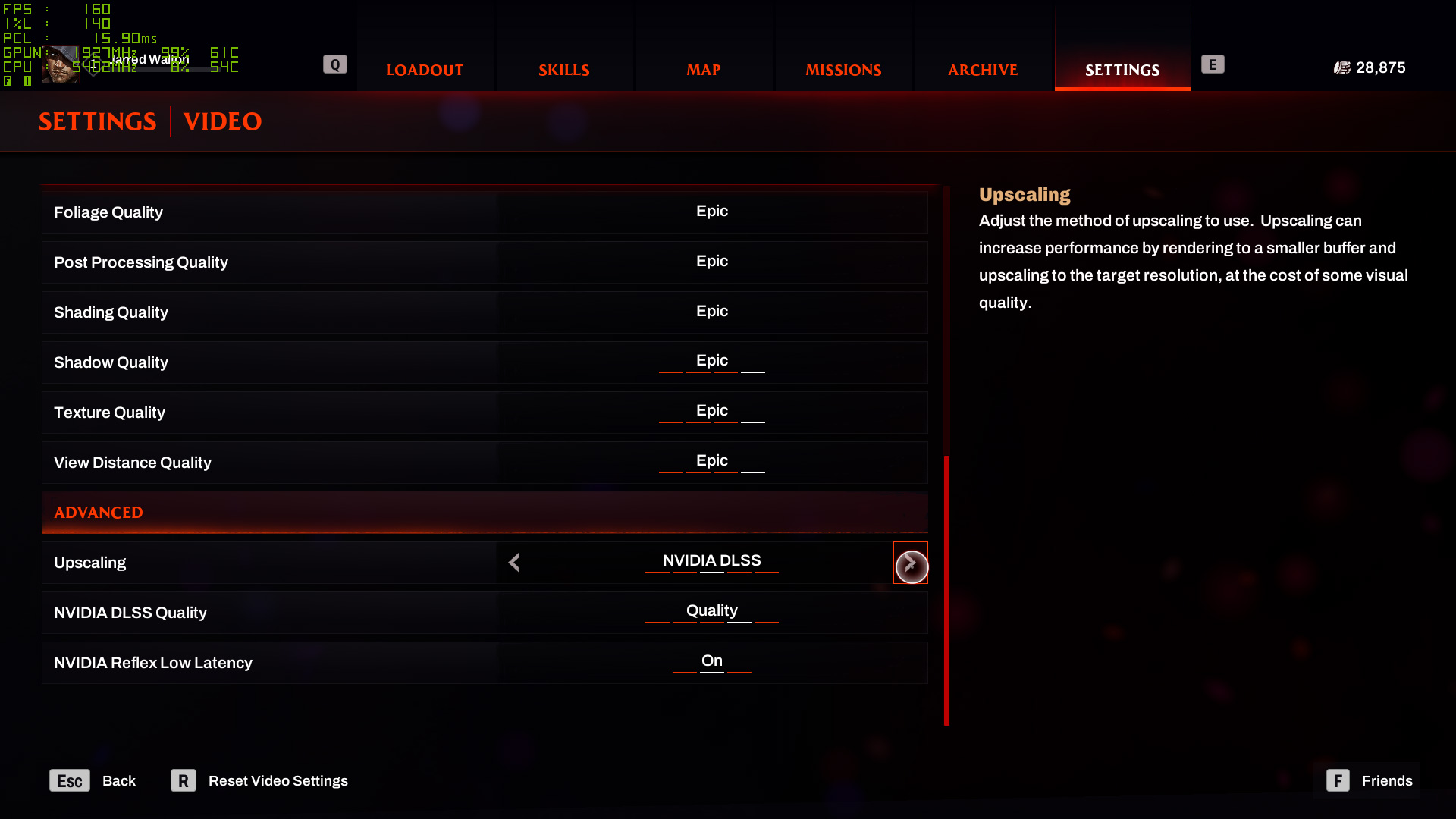

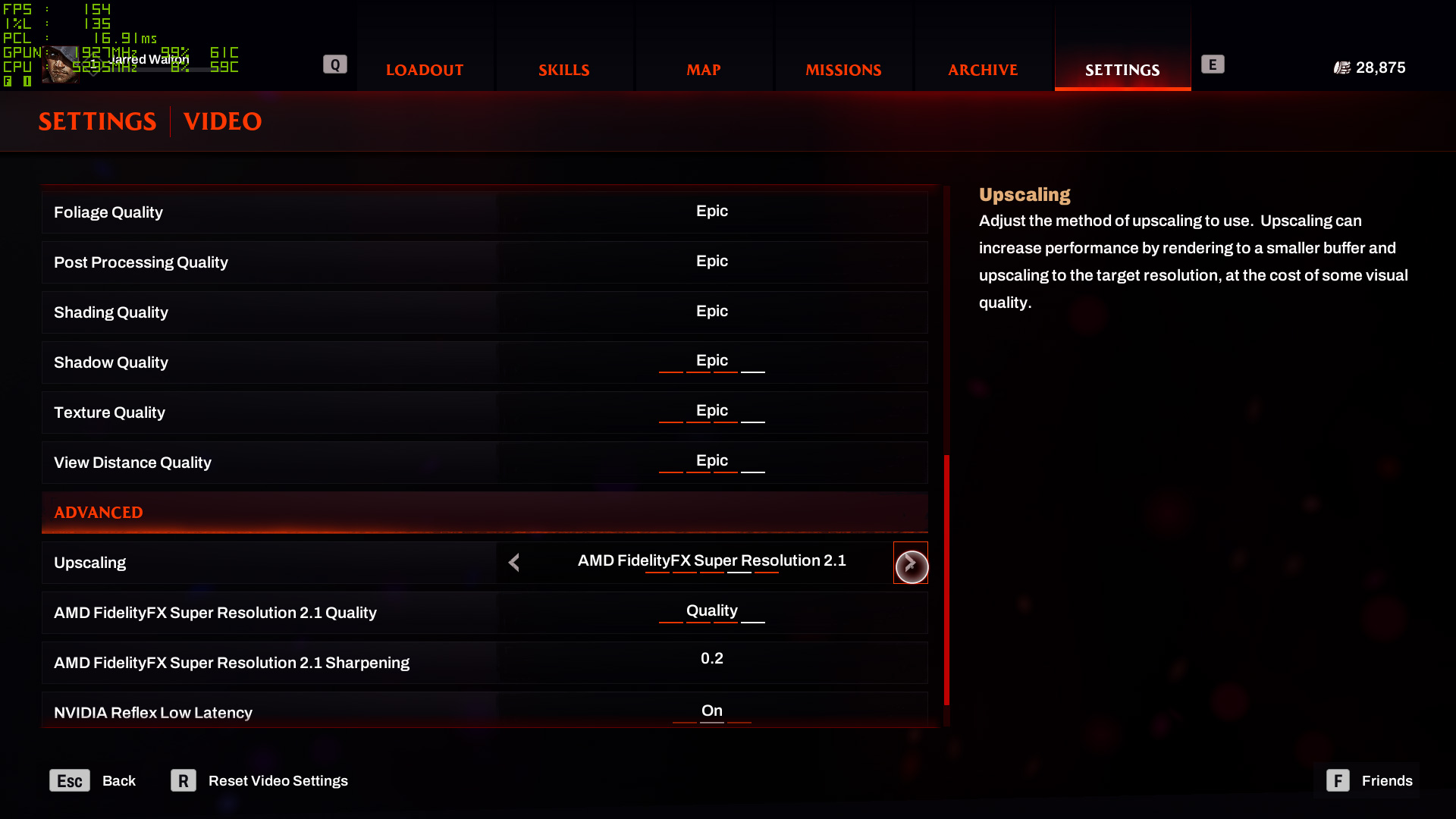

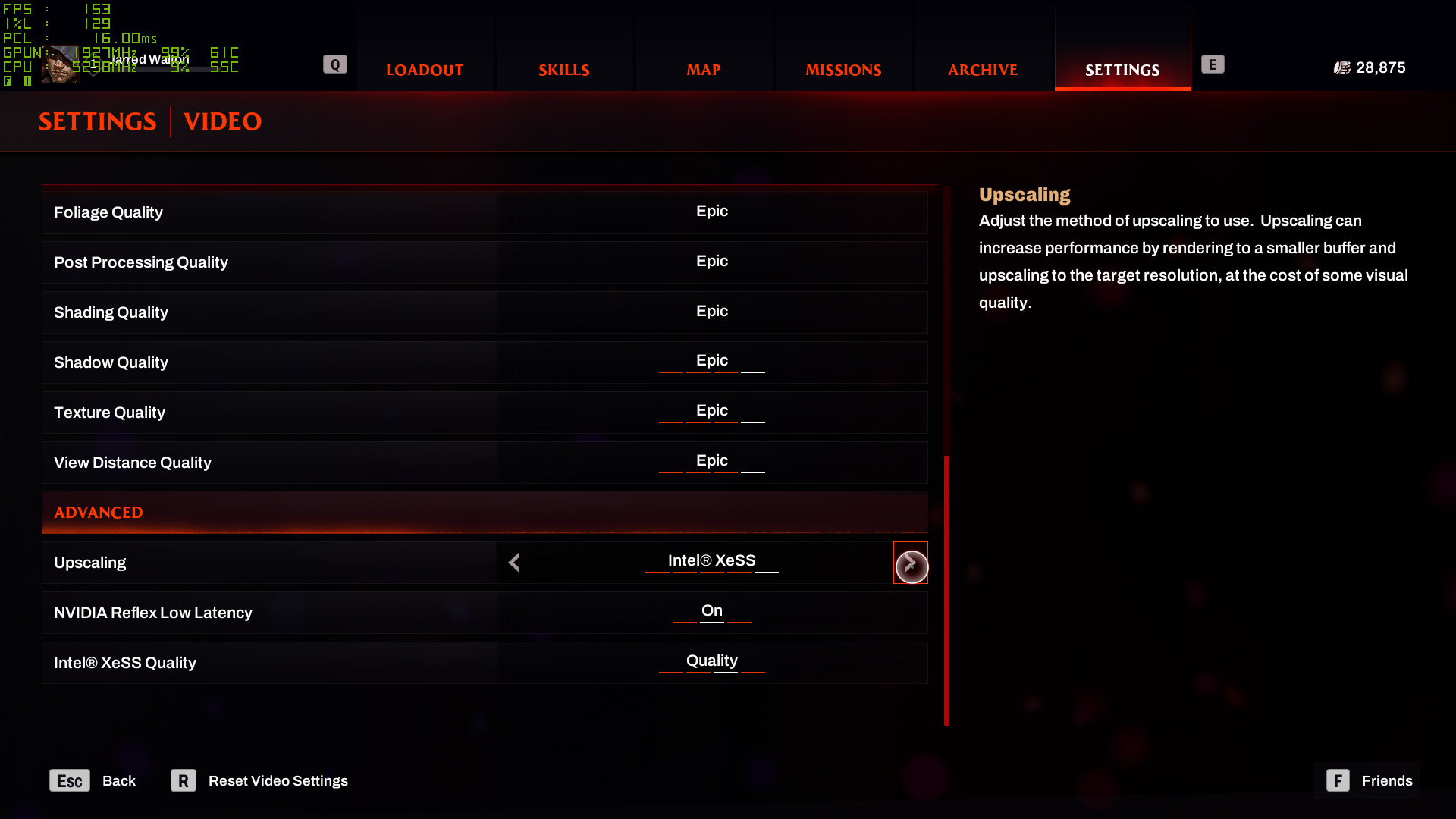

Separate from the presets are the upscaling and (if you have an Nvidia GPU) Reflex options. The game supports FSR 2.1 and XeSS on all GPUs, and Nvidia DLSS on RTX cards. It also supports DLSS 3 Frame Generation if you have an Nvidia Ada Lovelace graphics card (RTX 40-series).

You can flip through the above gallery to see three different sets of image quality comparisons, using the four presets. Shadows, lighting, and anti-aliasing are all clearly improved as you go from Low to Epic, though the final step between High and Epic is less pronounced in some areas of the game. The lack of good anti-aliasing is particularly noticeable in the second set of images.

(Note also that the framerate counter in the first set of images isn't particularly useful, since I was busy taking screenshots. We'll get to performance in a moment.)

If you have a higher end graphics card, there's enough of a visual upgrade going from High to Epic settings to warrant the change. You can also tune your settings to balance out the demands against the visual upgrade provided.

Running quickly through the individual settings, the above images were all captured on an RTX 3050 running at 1080p with maxed out (Epic plus TAA High) settings. We then turned each setting down to minimum, let framerates stabilize, and grabbed a screenshot. The baseline performance was 67 fps, for reference.

Anti-Aliasing: Turning this off barely changed performance (68 fps) but the jaggies are very bad, especially in motion, which the screenshot doesn't properly convey.

Effects Quality: Setting this to low improved performance by 6% (71 fps), with little difference in image fidelity, at least for the chosen scene.

Foliage Quality: Turning this to low removes a lot of the extra foliage (and debris), which is very visible. It also improved performance by 12% (75 fps).

Post-Processing Quality: This did not affect performance at all, so you can just leave it on Epic.

Shading Quality: This also didn't affect performance.

Shadow Quality: Downgrades the shadow map resolution and doesn't soften the shadow edges as much, but improves performance 12% (75 fps).

Texture Quality: Noticeably reduces the texture resolution and only improves performance by a small amount (68 fps), assuming you're not running out of VRAM.

View Distance Quality: Changes the cutoff distance for rendering certain objects, with a moderate impact on performance (71 fps).

Upscaling: Quality mode upscaling improved performance by around 20% (80 fps) in this particular case.
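For reference, the percentages quoted above can be recomputed from the fps figures. The Python sketch below is illustrative only: it includes just the settings where an fps number was given, and the names (`fps_after_change`, `percent_gain`, `ranked_gains`) are our own.

```python
# Baseline from the text: RTX 3050, 1080p, Epic plus TAA High.
BASELINE_FPS = 67

# fps after changing only the named setting, as quoted in the list above.
# Settings described as having no effect are left out because no fps
# figure was given for them.
fps_after_change = {
    "Anti-Aliasing": 68,
    "Effects Quality": 71,
    "Foliage Quality": 75,
    "Shadow Quality": 75,
    "Texture Quality": 68,
    "View Distance Quality": 71,
    "Upscaling (Quality)": 80,
}


def percent_gain(fps: float, baseline: float = BASELINE_FPS) -> float:
    """Return the improvement over the baseline, in percent."""
    return (fps / baseline - 1.0) * 100.0


def ranked_gains(results: dict[str, int]) -> list[tuple[str, float]]:
    """Sort settings from the largest gain to the smallest."""
    gains = [(name, percent_gain(fps)) for name, fps in results.items()]
    return sorted(gains, key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    for name, gain in ranked_gains(fps_after_change):
        print(f"{name:<24} {gain:5.1f}%")
```

Ranking the results this way makes it easy to see which settings are worth turning down first on a slower card.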

Redfall Test Setup

TOM'S HARDWARE TEST PC

Intel Core i9-13900K

MSI MEG Z790 Ace DDR5

G.Skill Trident Z5 2x16GB DDR5-6600 CL34

Sabrent Rocket 4 Plus-G 4TB

be quiet! 1500W Dark Power Pro 12

Cooler Master PL360 Flux

Windows 11 Pro 64-bit

Samsung Neo G8 32

GRAPHICS CARDS

AMD RX 7900 XTX

AMD RX 7900 XT

AMD RX 6000-Series

Intel Arc A770 16GB

Intel Arc A750

Intel Arc A380

Nvidia RTX 4090

Nvidia RTX 4080

Nvidia RTX 4070 Ti

Nvidia RTX 4070

Nvidia RTX 30-Series

We're using our standard 2023 GPU test PC, with a Core i9-13900K and all the other bells and whistles. We've tested 20 different GPUs from the past two generations of architectures in Redfall, using the medium and epic presets.

We're testing at 1920x1080, 2560x1440, and 3840x2160, and we've also tested most of the cards at 4K with Quality upscaling enabled. We used FSR2 for AMD GPUs, XeSS for Intel GPUs, and DLSS for Nvidia RTX cards. Only the RX 6500 XT and Arc A380 weren't tested at 4K, due to a lack of performance. We also tested with Frame Generation enabled on RTX 40-series cards, which produced some interesting results, but more on that later.

We run the same path each time, but due to the day/night cycle (well, black sun/night cycle), the environment changes a bit. We ran the same tests in the same order on each GPU, however, which kept performance consistent. Medium was always first ("daytime"), and then we'd wait a minute for the game to transition to night for the epic testing.

Each GPU gets tested a minimum of two times at each setting, and if the results aren't consistent, we'll run additional tests. We also run around the map for a couple of minutes before testing starts, clearing out any enemies (so that they don't kill us while we're benchmarking).
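The "run it again if the results aren't consistent" rule can be sketched as a simple tolerance check. This is a minimal illustration, not our actual tooling: the function name and the 3% threshold are assumptions chosen for the example.

```python
from statistics import mean


def runs_are_consistent(fps_runs: list[float], tolerance: float = 0.03) -> bool:
    """Return True when every run is within `tolerance` of the mean.

    `tolerance` is a fraction of the mean. The 3% default is a
    hypothetical value, not a threshold stated in the article.
    """
    if len(fps_runs) < 2:
        # At least two runs per setting are required before comparing.
        return False
    avg = mean(fps_runs)
    return all(abs(run - avg) / avg <= tolerance for run in fps_runs)


if __name__ == "__main__":
    print(runs_are_consistent([100.0, 101.0]))  # close together
    print(runs_are_consistent([100.0, 120.0]))  # far apart, so retest
```

If the check fails, the natural next step is to add another run and test the set again.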

And with that out of the way, let's get to the benchmark results.

Redfall GPU Performance

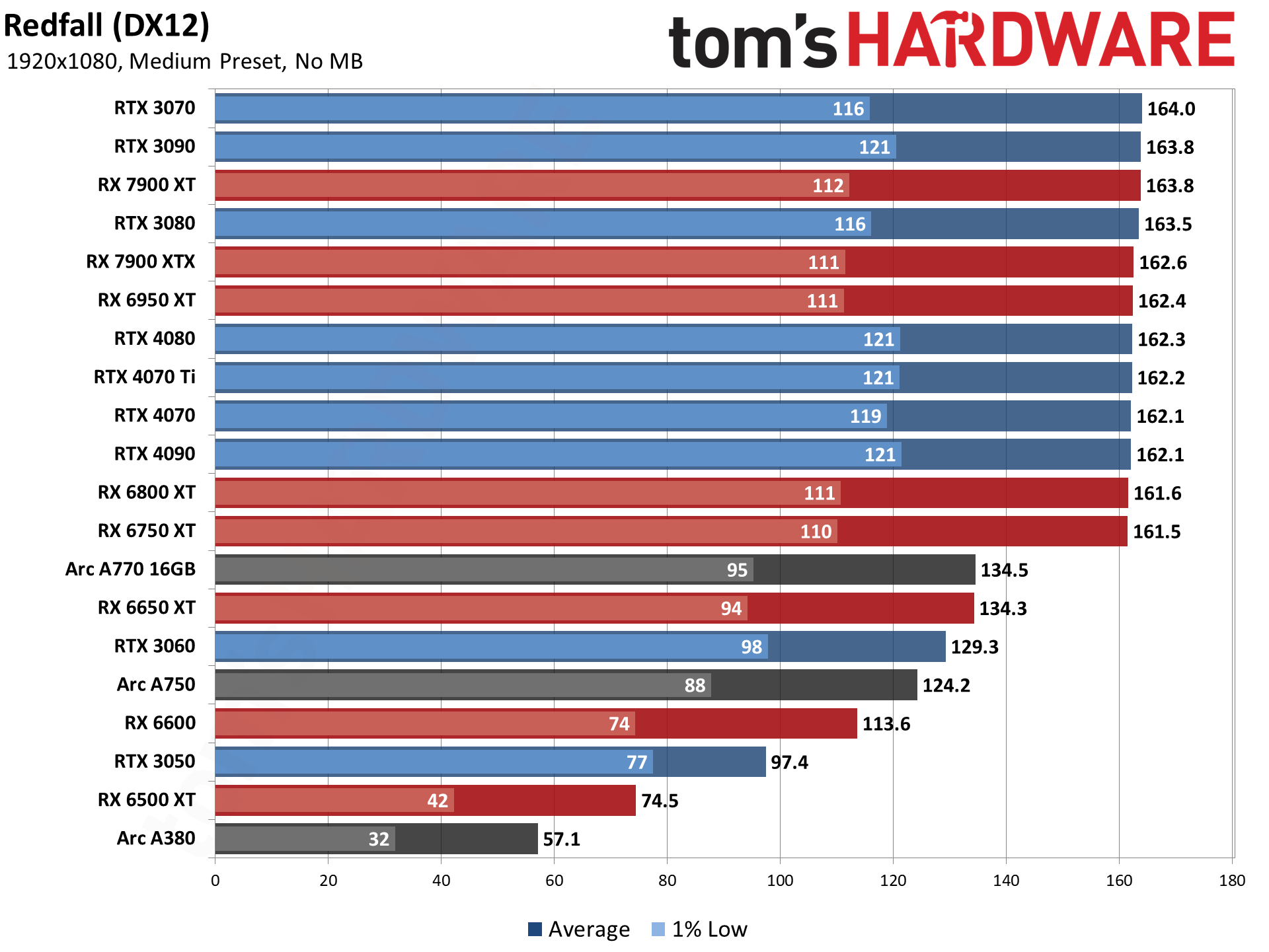

Nearly every GPU in our test suite breaks 60 fps at 1080p medium, with the Arc A380 being the sole exception. Intel's A770 and A750 do better, but the A380 just tends to be underpowered. For 1080p medium, everything from the RX 6750 XT and RTX 3070 up also ends up being CPU limited. There are minor fluctuations, but otherwise we're consistently at 161–164 fps on average, while the 1% lows (the average of the bottom 1% of frames) range from 110–112 fps on AMD, and 116–121 fps on Nvidia.
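To make the two metrics concrete, here is a small Python sketch of how average fps and 1% lows can be derived from a list of frame times. It assumes the frame times are in milliseconds and that the 1% low is the average over the slowest 1% of frames; the function names are our own, and capture tools may differ in the details.

```python
import math


def average_fps(frame_times_ms: list[float]) -> float:
    """Frames rendered divided by total elapsed time, in fps."""
    return len(frame_times_ms) / (sum(frame_times_ms) / 1000.0)


def one_percent_low_fps(frame_times_ms: list[float]) -> float:
    """Average fps over the slowest 1% of frames (at least one frame)."""
    count = max(1, math.ceil(len(frame_times_ms) / 100))
    slowest = sorted(frame_times_ms, reverse=True)[:count]
    return average_fps(slowest)


if __name__ == "__main__":
    # Hypothetical capture: 99 frames at 10 ms plus one 20 ms hitch.
    frames = [10.0] * 99 + [20.0]
    print(f"average: {average_fps(frames):.1f} fps")
    print(f"1% low:  {one_percent_low_fps(frames):.1f} fps")
```

A single slow frame barely moves the average but dominates the 1% low, which is why the two numbers can diverge so much on the same card.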

The game feels pretty smooth as well, even at lower fps, so you could play at 30-40 fps in a pinch. You might get a bit of stuttering on lower-end GPUs as you explore, however, so if you're bothered by such things, try to choose settings that keep your minimum fps above 60.

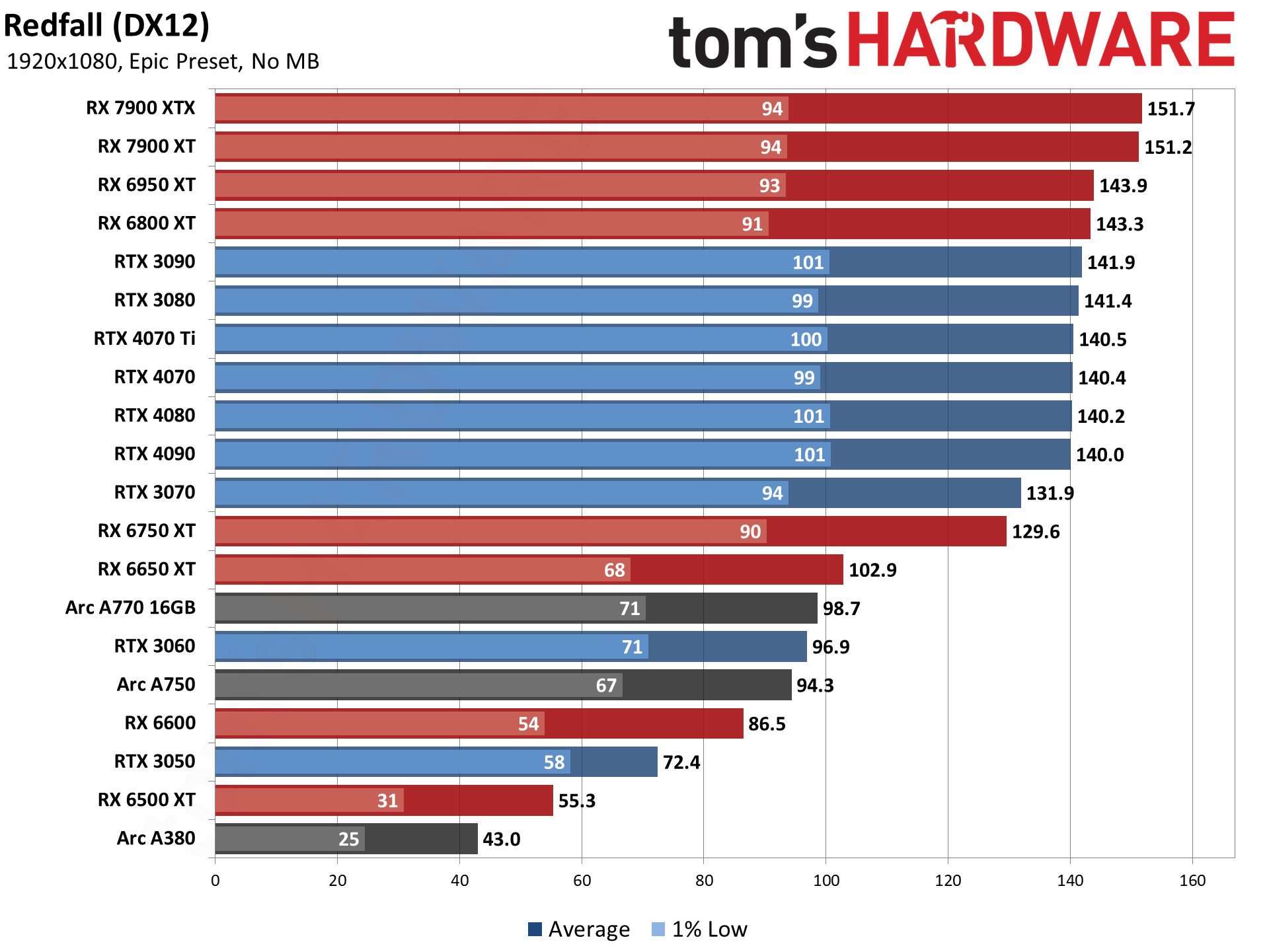

Moving to the epic preset drops performance on the fastest Nvidia cards to around 140–142 fps, while AMD's cards hit a slightly higher limit of ~144 fps on the RX 6000-series and ~152 fps on the RX 7900 cards. While average fps is higher on AMD's top GPUs, minimum fps ends up being lower, but the RX 6750 XT and above average 120 fps or more, and the RTX 3050 and above break 60 fps.

At the bottom of the charts, the RX 6500 XT and Arc A380 remain playable, though the A380 does occasionally dip below 30 fps. There's a catch, however: The RX 6500 XT only has 4GB of VRAM, and Redfall seems to auto-adjust texture quality in order to stay within VRAM constraints. 6GB is enough for 1080p epic settings, but a 4GB card can exhibit texture popping.

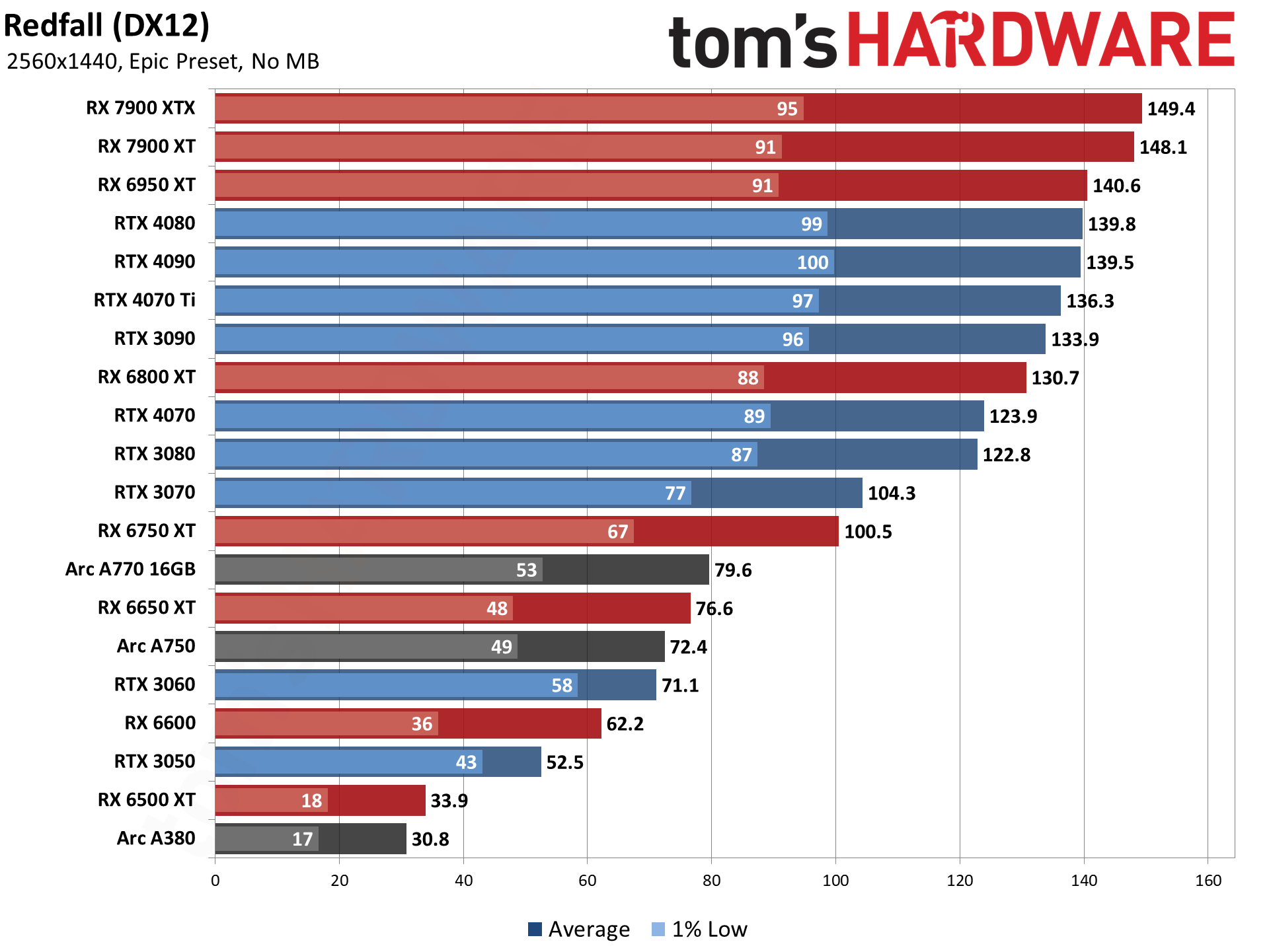

Outside of the bottom two GPUs, everything is still quite playable at 1440p epic settings. Sure, we're not running a bunch of GTX 10-series or RX 500-series parts, but an RX 570 as an example should deliver a similar result to the A380.

We're still hitting CPU limits on most of the faster cards, and minimum fps hasn't dropped much either. But the cards in general are starting to fall into their "normal" positions at least, even if the RTX 4090 and 4080 remain tied.

Looking at the RTX 3070/3050 versus the RTX 3060, it's also clear that Redfall doesn't require more than 8GB for 1440p. Performance still scales with GPU compute, and the minimums don't show a sharp drop-off like we'd see if there were texture thrashing going on.

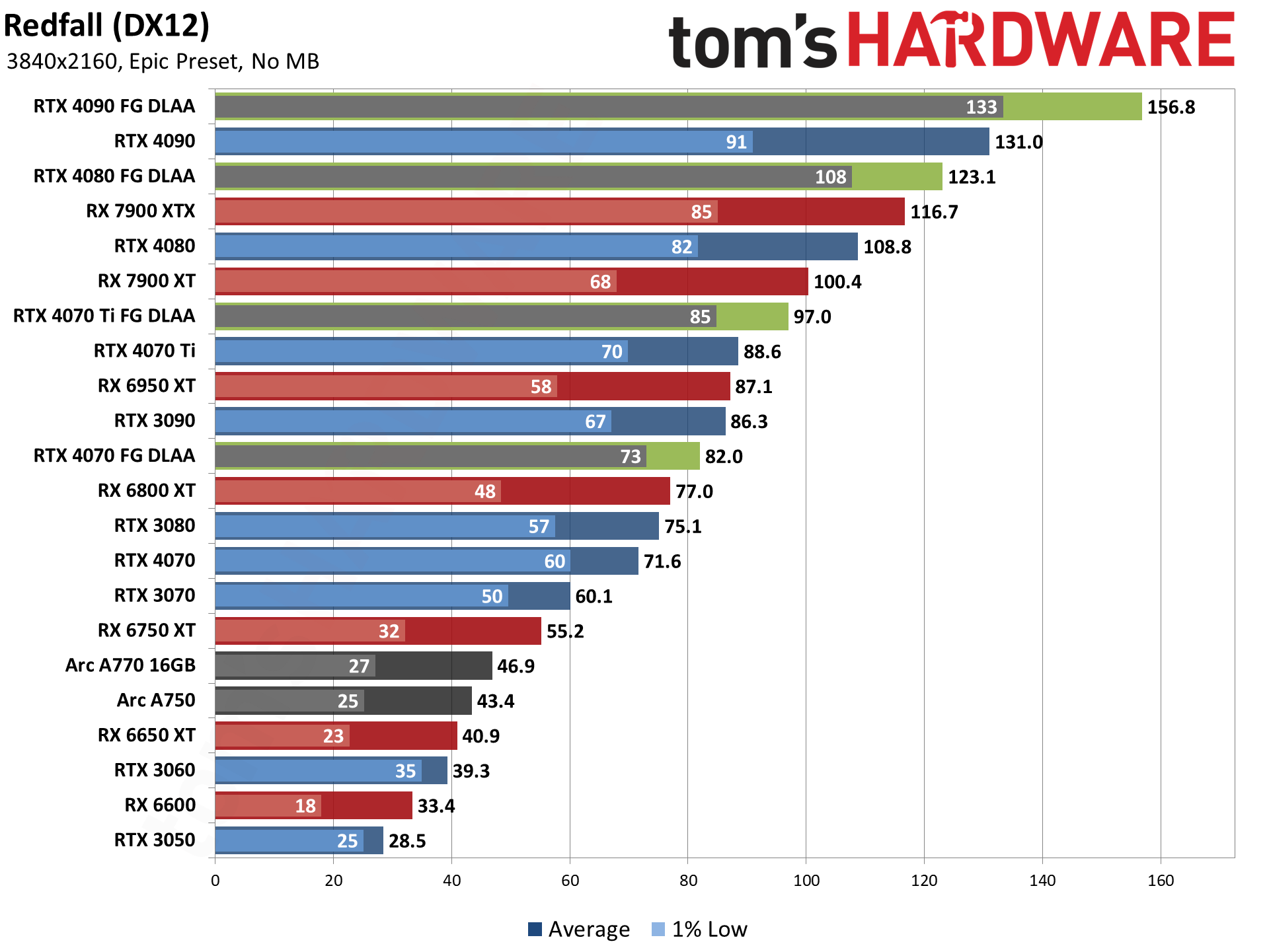

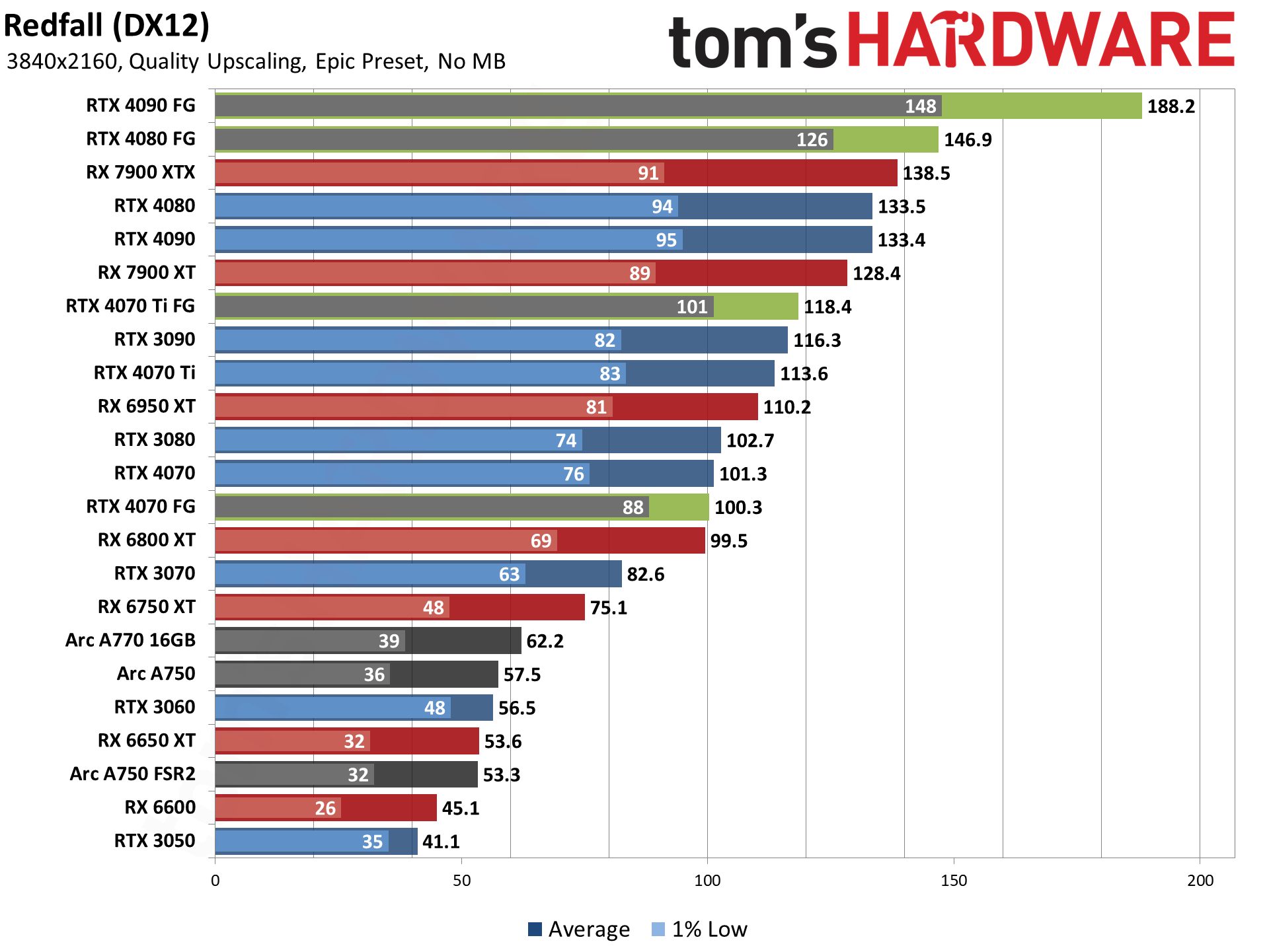

With 4K, we've added a few additional tests, thanks to Nvidia's DLSS 3 Frame Generation. However, even though we're trying to do native resolution comparisons here, Redfall doesn't let you turn on just Frame Generation. You have to use DLSS or DLAA, and so we opted for DLAA here. That would drop performance relative to non-DLAA, but then Frame Generation helps make up the difference and so there's a net gain.

There's not a big net gain, however. The RTX 4090 improves by 20%, and the RTX 4080 sees a 13% increase in frames to monitor. The RTX 4070 Ti meanwhile only gets 9% better "fps" and the RTX 4070 gets 15% higher "fps" — we put fps in quotes because Frame Generation has some other things going on that can actually make it a worse experience overall.

Given what we've seen already, it's not too surprising to see a lot of GPUs still above 60 fps, even at native 4K with maxed out settings. The RTX 3070 and above average at least 60 fps, but you'll need an RTX 3090 or RX 7900 XT if you want to keep minimum fps above 60... at least if you're only doing 4K native.

Redfall With Quality Upscaling

Our final chart is at 4K, but this time we've enabled Quality mode upscaling — using FSR2, DLSS, or XeSS as appropriate. There's a short caveat about XeSS, however: shadows and some other aspects of the game seem to render improperly with XeSS (shadows turn basically black rather than just being dark). We tested the A750 with FSR2 as well just to show how performance differs.

This is the best upscaling mode if you're after image quality. In most cases, whether you use DLSS or FSR2 (or XeSS, assuming the black shadows bug gets addressed), the game basically looks as good as native 4K. If you want more performance, you could also enable higher upscaling factors, but we didn't do that testing.
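As a rough guide to what the upscaling modes mean in pixels, the sketch below converts an output resolution to the internal render resolution. It assumes the per-axis scale factors AMD publishes for FSR 2; DLSS and XeSS use similar but not necessarily identical ratios, and the function names are our own.

```python
# Per-axis scale factors published for FSR 2's quality modes (assumed
# here as a stand-in for all three upscalers, which may differ slightly).
FSR2_SCALE = {
    "Quality": 1.5,
    "Balanced": 1.7,
    "Performance": 2.0,
    "Ultra Performance": 3.0,
}


def render_resolution(width: int, height: int, mode: str) -> tuple[int, int]:
    """Return the internal render resolution for an output resolution."""
    scale = FSR2_SCALE[mode]
    return round(width / scale), round(height / scale)


def pixel_reduction(width: int, height: int, mode: str) -> float:
    """Return how many times fewer pixels are rendered than displayed."""
    render_w, render_h = render_resolution(width, height, mode)
    return (width * height) / (render_w * render_h)


if __name__ == "__main__":
    for mode in FSR2_SCALE:
        w, h = render_resolution(3840, 2160, mode)
        print(f"{mode:<18} {w}x{h}  ({pixel_reduction(3840, 2160, mode):.2f}x fewer pixels)")
```

Under these assumptions, 4K Quality mode renders at 2560x1440, or 2.25 times fewer pixels. Measured gains are typically smaller than that ratio, presumably because the upscaling pass has its own cost and not all of the work scales with resolution.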

In general, DLSS upscaling improves performance by around 30–40 percent, but the fastest cards start to hit CPU limits again. FSR2 upscaling improves performance by a similar 25–35 percent, again with cards like the 7900-series bumping into CPU bottlenecks. Assuming XeSS isn't getting higher performance thanks to the rendering issues noted already, fps is 33% higher on the two Arc cards we tested.

The boost from upscaling ends up being enough to take marginal cards like the RTX 3060 and make them quite capable at 4K. Even the bottom card in our chart, the RTX 3050, delivers a playable result.

But let's pause to discuss Frame Generation again. This time, we get some real oddities. The RTX 4090 gets a decent 41% boost over pure upscaling, but the RTX 4080 only gets a 10% improvement. Then things get even worse, as the RTX 4070 Ti is only 4% faster, while the RTX 4070 is actually 1% slower. Not shown in the charts is that latency is also higher with Frame Generation, which is something we've mentioned before with DLSS 3.

It looks like there's a bug with the RTX 4070 and 4070 Ti cards and Frame Generation. Either that or the lower memory bandwidth is somehow a factor. But right now you wouldn't want to enable FG on the 4070-series parts.

Redfall Closing Thoughts

Redfall isn't the most demanding game around, and that's probably nice to see. After all the hubbub over Star Wars Jedi: Survivor and its massive VRAM issues, having a game that can deliver consistently playable performance on a wide range of GPUs is nice. Just about everything in our GPU benchmarks hierarchy should handle the game at 1080p medium.

We haven't had a chance to really dive into the game itself, but what we've experienced so far seems... fine. Well, maybe not so fine: PC Gamer calls it "lifeless" in its Redfall review-in-progress. Uh oh. The town of Redfall and the surrounding environs are darker than other games, thanks to the black sun, and there's support for multiplayer as well. Actually, it's up to 4-player cooperative, so if you're looking for player versus player, this isn't the right game for you. And we're not sure if the multiplayer is any good, either, as that wasn't the focus of our performance testing.

We're early in the campaign, so we'll leave out deeper judgments of the game quality for now. If you liked Deathloop or the Dishonored games, though, hopefully you'll find something to enjoy in Redfall.

Graphically, the game looks good, even if it doesn't include all the latest fancy pants ray tracing effects. No, you're not going to get fully path traced lighting, shadows, and reflections like in Cyberpunk 2077 with RT Overdrive, but you're also able to play the game on GPUs from several years back without too much trouble.

As for the Nvidia promotional aspect, other than somewhat spotty support for DLSS 3 (we're still not sure why the 4070 and 4070 Ti didn't benefit), Redfall doesn't appear to be heavily tied to team green hardware. You can play it just fine on AMD and Intel cards as well. So if you've been waiting for a chance to hunt vampires and their thralls, give Redfall a shot. Or read other reviews of the game, but all we can really say is that it generally seemed to run fine in our testing.

- MORE: Best Graphics Cards

- MORE: GPU Benchmarks and Hierarchy

- MORE: All Graphics Content

Jarred Walton is a senior editor at Tom's Hardware focusing on everything GPU. He has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.

Elusive Ruse

https://cdn.mos.cms.futurecdn.net/suwoyAvEbNq3cyao8JJCAA-970-80.png

I knew Radeon had great raster performance but didn't see this coming :oops:

@JarredWaltonGPU thank you for the benchmarks, I have been hearing this game won't have the easiest time running on the Xbox X, did you get any such sense from the PC performance? Those 1% lows on both 4K and 1080p are a bit rough, innit?

KyaraM

So in essence, the AMD sponsored title only features FSR and prefers AMD cards, while the Nvidia sponsored title features all upscalers and does not prefer Nvidia cards. Amazing job with that performance skewing, AMD.

@Elusive Ruse it's a shooter. I'm not really surprised, seems like the same mechanism as in other shooters, which has been going for a while. Besides, it has been noted before that Nvidia cards drop less with higher resolutions this generation. Situation changes at 4K. Will many people play at that resolution? Probably not, but it illustrates my point.

The game actually got a new patch/update few hours ago. The size of the update is actually 65GB which is a ridiculous size for a patch. Maybe you can retest some of the game areas/levels once again.

Unfortunately, as observed by other gamers (I don't have the game with me), even after applying the patch Redfall still suffers from some graphical issues, like pop-in issues, ridiculous T-poses, square-ish/pixely shadows, and traversal stutters.

JarredWaltonGPU

This tends to be the way of open world games where you truly can go to just about anywhere, any time. You get lower fps dips when things that were out of range have to load in. It's probably also just a factor of using Unreal Engine at settings that push the hardware reasonably hard. Anyway, for the 6750 XT and above, minimums are above 60 fps, which isn't bad at all IMO.

Elusive Ruse said:

https://cdn.mos.cms.futurecdn.net/suwoyAvEbNq3cyao8JJCAA-970-80.png

I knew Radeon had great raster performance but didn't see this coming :oops:

@JarredWaltonGPU thank you for the benchmarks, I have been hearing this game won't have the easiest time running on the Xbox X, did you get any such sense from the PC performance? Those 1% lows on both 4K and 1080p are a bit rough, innit?

Avro Arrow

It's not quite what it appears. This chart is CPU-limited and, since Radeon drivers have less CPU-overhead than GeForce drivers, the CPU is less limited and allows for a higher framerate.

Elusive Ruse said:

https://cdn.mos.cms.futurecdn.net/suwoyAvEbNq3cyao8JJCAA-970-80.png

I knew Radeon had great raster performance but didn't see this coming :oops:

Radeons are good, but they're not that good! :giggle:

Ogotai

how is this any different then the " how its meant to be played " campaign by nvidia ? seems, from what i could find this is exactly what that hole thing was about. direct help from nvidia by way of optimizing the games code for nvidia hardware, and nvidia optimizing its drivers for that game.

KyaraM said:

So in essence, the AMD sponsored title only features FSR and prefers AMD cards, while the Nvidia sponsored title features all upscalers and does not prefer Nvidia cards. Amazing job with that performance skewing, AMD.

this is NO different, if that is the case.

Avro Arrow

Yeah, do you remember what hairworks used to do (or GameWorks in general, for that matter)?

Ogotai said:

how is this any different then the " how its meant to be played " campaign by nvidia ?

Never mind them. They either work for nVidia or have some vested interest in them because their constant defending of everything that nVidia does and weird attacks on anything that AMD does is clearly driven by emotion, not logic.

Ogotai said:

seems, from what i could find this is exactly what that hole thing was about. direct help from nvidia by way of optimizing the games code for nvidia hardware, and nvidia optimizing its drivers for that game.

this is NO different, if that is the case.

Sure, AMD has pulled some crap and I've ripped them a new one each time, but their worst anti-consumer actions have always paled in comparison to the crap that nVidia has pulled.

Never forget about the "GeForce Partner Program"

Meanwhile, in some places where nVidia has anti-consumer practices, AMD has pro-consumer practices. This is even true if the consumer in question owns a GeForce card.

AMD created Mantle, an API designed to lessen the gaming load on a CPU so that gamers' CPUs would be viable for longer. This wasn't in AMD's best interest because they sell CPUs but they made it anyway and released it for free as an open-source API. Microsoft used elements from it in DirectX12. Then the Khronos Group took it and made Vulkan with it so that they could finally retire OpenGL.

Then of course, there's the fact that people who own GeForce RTX 30-series cards are denied DLSS3 and people who own ANY GeForce card beginning with GT or GTX can't use DLSS in any form at all. Meanwhile, those hapless GeForce owners can use AMD FSR without issue because, as was demonstrated with Mantle, AMD has always preferred open standards to locked proprietary solutions. It's also why their Linux drivers are so good and why nVidia's are so bad. Remember what Linus Torvalds, one of the greatest tech minds in history, had to say to nVidia.

We also owe the fact that we're no longer sandbagged with quad-core CPUs to AMD because Intel clearly felt that 8-core CPUs should cost over $1,000USD and that 10-core CPUs should cost over $1,700USD.

Let them ramble on about how "wonderful" Intel and nVidia are. Who knows, maybe they secretly own LoserBenchmark! ;) :LOL:

KyaraM

What does that even matter? There is still no excuse not to include other upscalers. Especially since all usually work best on their respective hardware and with there now existing a pipeline that makes implementation of every upscaler easier. Which, btw, was developed by Nvidia. And Nvidia sponsored titles, last I checked, didn't block other upscalers. We are, in fact, commenting on one of them right now.

Elusive Ruse said:

FSR is open source and works on all GPUs, can you say the same about DLSS?

When a company restricts developers in which upscalers they can include, then none of this matters. Especially AMD is absolutely notorious for bragging about the dumbest stuff and FSR being more widespread than DLSS is one of them. They are that desperate to gain marketshare. However, if you simultaneously block other upscalers in sponsored titles, well, than that supposed dominance means jack. It's bought and nothing else. Anyone else would get massive backlash for this, but it's AMD doing it, so who cares, right.

And about AvroArrow I can only say. I have yet to see them criticize anything AMD did. Ever.