Manor Lords is here and we benchmarked it — how much GPU horsepower do you need to play the indie hit?

Manor Lords also supports DLSS, FSR, and XeSS upscaling.

Manor Lords Overview

Manor Lords just entered open early access after seven years of development by indie studio Slavic Magic. If you haven't heard of it before, it's the most wishlisted game on Steam, with over three million people expressing interest. That means we're also interested — interested in seeing how it runs on all the best graphics cards, that is.

The game was built using Unreal Engine and includes most of the latest upscaling technologies: DLSS 3.7.0, XeSS 1.3, and FSR ... something. We're not sure which version of FSR is included, because it's open source and developers can integrate the code directly into their projects rather than shipping a separate DLL. Our best guess, based on the versions of the other upscaling algorithms, is FSR 2.2, so we'll treat it as FSR 2.x for now and talk about the upscaling in more detail below.

Manor Lords System Requirements

The official system requirements, according to the Steam page, are pretty tame. You'll need at least an Intel Core i5-4670 or AMD FX-4350, both of which are quad-core CPUs. You'll also need 8GB of RAM, and at least a GTX 1050 2GB, RX 460 4GB, or Arc A380. The game needs Windows 10 or 11 64-bit, uses DirectX 12, and requires 15GB of storage space.

How will a minimum-spec PC run the game? We're not sure, as we don't have all of that hardware. We may see about testing it on a Steam Deck, though the controls and interface certainly don't seem ideal for such a system. It's probably a safe bet that the minimum specs are intended for 1280x720 at low settings, though, and even then, you might only get a bit more than 30 fps.

The recommended specs bump the CPU to an Intel Core i5-7600 or Ryzen 3 2200G, which are both still quad-core processors. You'll also want 12GB of RAM, and the GPU recommendations consist of the GTX 1060 6GB, RX 580 8GB, or Arc A580. We did at least test how the game runs on the Arc A580, and basically, you can get over 30 fps at ultra settings — without upscaling.

Manor Lords Test Setup

We're testing the initial early access release of Manor Lords, which the game shows as version 0.7.955. It's a safe bet that a lot of things will change in the coming months, including performance, but since you can now at least get access to the game for $29.99, we figured it would be okay to run some benchmarks and see where things stand.

TOM'S HARDWARE TEST PC

Intel Core i9-13900K

MSI MEG Z790 Ace DDR5

G.Skill Trident Z5 2x16GB DDR5-6600 CL34

Crucial T700 4TB

be quiet! 1500W Dark Power Pro 12

Cooler Master PL360 Flux

Windows 11 Pro 64-bit

Samsung Neo G8 32

GRAPHICS CARDS

Nvidia RTX 4090

Nvidia RTX 4080 Super

Nvidia RTX 4070 Ti Super

Nvidia RTX 4070 Ti

Nvidia RTX 4070 Super

Nvidia RTX 4070

Nvidia RTX 4060 Ti 16GB

Nvidia RTX 4060 Ti

Nvidia RTX 4060

Nvidia RTX 3060 12GB

Nvidia RTX 3050 8GB

Nvidia RTX 2060 6GB

Nvidia GTX 1660 Super

AMD RX 7900 XTX

AMD RX 7900 XT

AMD RX 7900 GRE

AMD RX 7800 XT

AMD RX 7700 XT

AMD RX 7600 XT

AMD RX 7600

AMD RX 6900 XT

Intel Arc A770 16GB

Intel Arc A750

Intel Arc A580

Intel Arc A380

Manor Lords doesn't have any DirectX Raytracing support, though it does have an embree.2.14.0.dll file in the game folders. Embree is an Intel ray tracing library (recent versions support both CPUs and GPUs, while the 2.x releases run on CPUs only), but it's not clear what exactly it's being used for in this particular game right now. There's also an OpenImageDenoise.dll file, presumably for future support of ray tracing and denoising (maybe).

There are no presets right now, just eleven graphics options that mostly have low/off, medium, high, and ultra settings available. We started our testing with an RTX 4070, and based on its performance, we decided to focus on testing with all the settings maxed out. That... turned out to perhaps be a mistake, as the game currently runs better on Nvidia GPUs. Whether that's due to drivers or a focus by the devs on Nvidia hardware isn't clear.

We also tested a few GPUs at "medium" settings (volumetric clouds were turned off) to see how lesser cards might fare. Since the game is early access, we didn't want to get too crazy about what GPUs we tested, so we focused on all the current-generation AMD, Intel, and Nvidia GPUs, plus a few previous-generation parts. Depending on interest, we may revisit the game in the coming months and/or add more GPUs.

Manor Lords belongs to the city-builder genre and offers some real-time combat options. Some of the screenshots remind me of the Total War series, though at least in its current state, it doesn't play like those games. There's no built-in benchmark, so we needed to figure out a reasonable way to test performance.

We ended up getting to the level of a relatively minor village, with some fields and buildings, and then created a test sequence where we moved the camera along the same path. We repeated this at least three times per GPU, per setting — the first run or two can encounter more stuttering as the game assets get loaded into RAM/VRAM. Subsequent runs tend to be more consistent... at least on Nvidia and Intel GPUs; AMD GPUs were more prone to the occasional stutter, even after multiple passes.
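The article doesn't name the capture tool behind its average and minimum figures, so the following is only a sketch of one common approach, assuming per-frame times in milliseconds are available for each camera pass: drop the warm-up pass(es) where assets are still streaming into RAM/VRAM, then report average fps and a "1% low" for the rest. The function names and the 1%-low definition (average fps across the slowest 1% of frames) are assumptions for illustration, not Tom's Hardware's actual pipeline.

```python
from statistics import mean


def summarize_pass(frame_times_ms):
    """Return (average fps, 1% low fps) for one camera pass.

    Average fps is frames divided by total elapsed time, so long frames
    count in proportion to how long they were on screen.
    The 1% low used here (an assumed definition) is the average fps
    across the slowest 1% of frames, with at least one frame included.
    """
    if not frame_times_ms:
        raise ValueError("pass contains no frames")
    avg_fps = len(frame_times_ms) * 1000.0 / sum(frame_times_ms)
    slowest = sorted(frame_times_ms, reverse=True)
    count = max(1, len(slowest) // 100)
    low_fps = count * 1000.0 / sum(slowest[:count])
    return avg_fps, low_fps


def summarize_runs(runs, warmup=1):
    """Skip the first `warmup` passes (asset-loading stutter), average the rest."""
    kept = runs[warmup:]
    if not kept:
        raise ValueError("need at least one pass after the warm-up passes")
    results = [summarize_pass(r) for r in kept]
    return mean(a for a, _ in results), mean(low for _, low in results)


# Synthetic data: a warm-up pass with one 50 ms hitch, then two steady passes.
steady = [10.0] * 100
stutter = [10.0] * 99 + [50.0]
print(summarize_runs([stutter, steady, steady]))  # (100.0, 100.0)
```

With `warmup=0`, the single hitch drops that pass's 1% low to 20 fps and pulls the averaged 1% low from 100 fps down to about 73 fps, which is why counting or discarding early passes is a deliberate methodology choice.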

Besides testing at "ultra" settings at 1080p, 1440p, and 4K, we also tested at 4K ultra with Quality mode upscaling enabled. Mostly, this consisted of using the vendor-specific API for each GPU, so Nvidia GPUs were tested with DLSS, Intel used XeSS, and AMD used FSR. However, you can also use FSR and XeSS on any (reasonably recent) GPU, so there are a few additional tests with such configurations.

Thankfully, all three GPU vendors have "game ready" drivers, or at least drivers that specifically make mention of Manor Lords. We tested with AMD 24.4.1, Intel 5445, and Nvidia 552.22 drivers. Again, based on our initial test results, it's a reasonably safe bet that AMD will eventually deliver improved performance with driver optimizations.

With the preamble out of the way, let's hit the benchmarks. We also have some image quality and upscaling discussions after the charts.

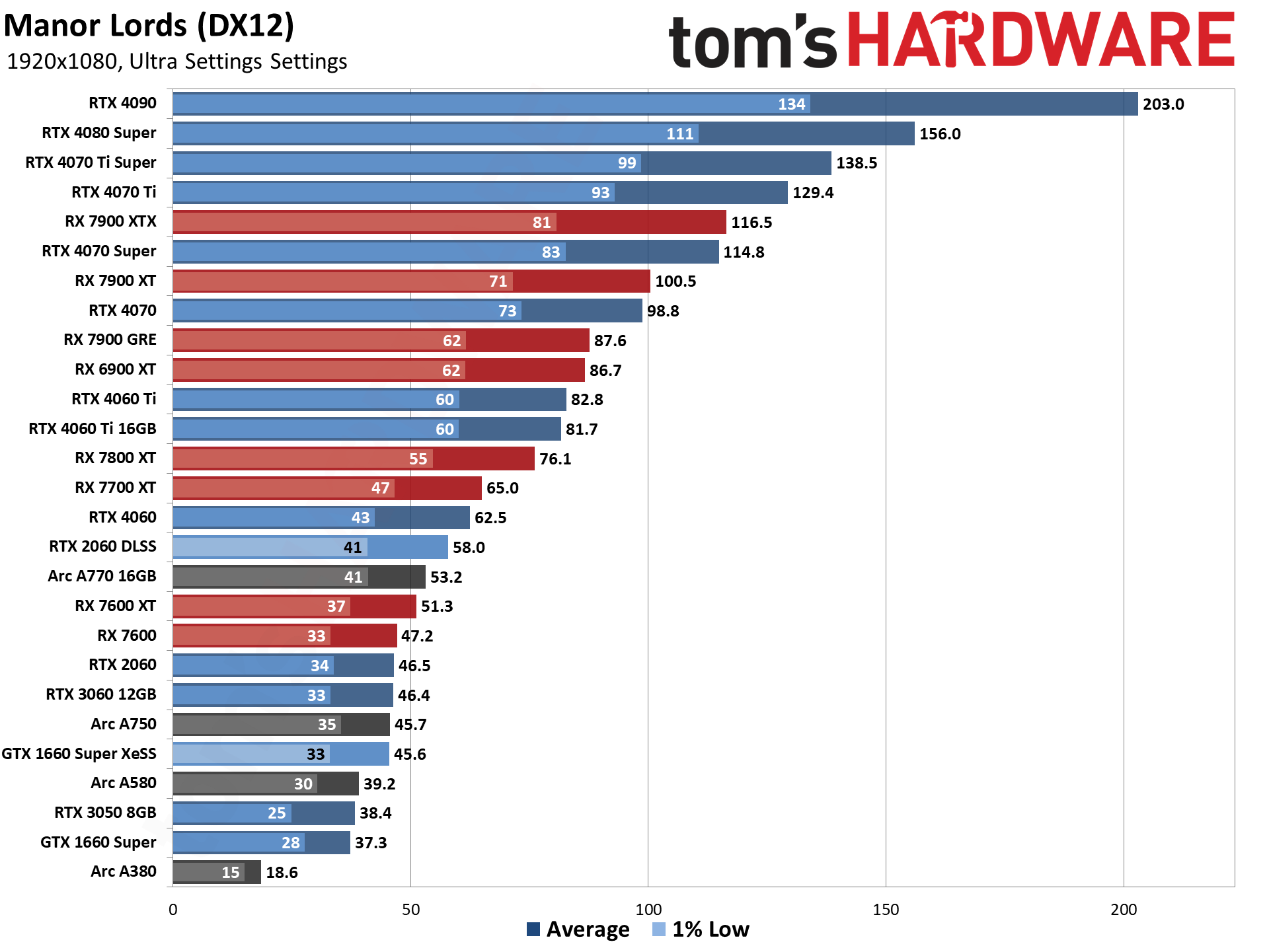

Manor Lords 1080p GPU Performance

We'll start with the medium and ultra benchmarks at 1080p. We only tested a few GPUs at medium settings, but we also tested with various upscaling modes enabled. Mostly, we wanted to see if we could get more than 60 fps on the GTX 1660 Super, RTX 2060, and Arc A380. Spoiler: Yes, yes, and no. But let's kick things off with 1080p ultra settings.

If you thought just about any recent GPU should be able to handle Manor Lords at maxed-out settings and 1080p, think again. For average framerates, the RTX 4060 and RX 7700 XT and above do manage to break 60 fps, but plenty of other GPUs fall well short of that mark.

Also, as hinted at earlier, Nvidia GPUs rule the medieval times right now, with the RTX 4070 Ti and above outperforming AMD's fastest RX 7900 XTX. Is that due to AMD's drivers or the game itself favoring Nvidia cards? It might be more of an AMD problem, as Intel's Arc GPUs land about where expected — the A750 comes in roughly at the same level as the RTX 3060.

Except, the RTX 2060 also manages to match the RTX 3060. Based on our GPU benchmarks hierarchy, we'd normally expect the RTX 3060 to outperform the RTX 2060 by about 30%. The current code appears to simply run better on Turing for the time being, though the 40-series GPU results at least seem to mostly make sense. The RTX 4060 beats the 2060 by 34%, compared to a 53% lead in our hierarchy, but Manor Lords doesn't seem to need a lot of VRAM, so the 6GB doesn't hurt the 2060 as much.

Clearly, there's some wonkiness going on, and there are lots of good reasons Manor Lords has disclaimers about the early access nature. Every time you launch the game right now, you get a message from developer Greg saying things will change over time. So, we're not going to say Nvidia will remain faster, as this is a rasterization game (at least for now?), but we had a far better experience using Nvidia GPUs for the time being.

Something you can't see in the charts is that we had quite a few crash to desktop events on AMD GPUs as well. Mostly, it was just a matter of getting the game running. After the first ten seconds in a map, if we hadn't crashed yet, it didn't usually crash at all. The game wasn't 100% stable, but it was certainly playable.

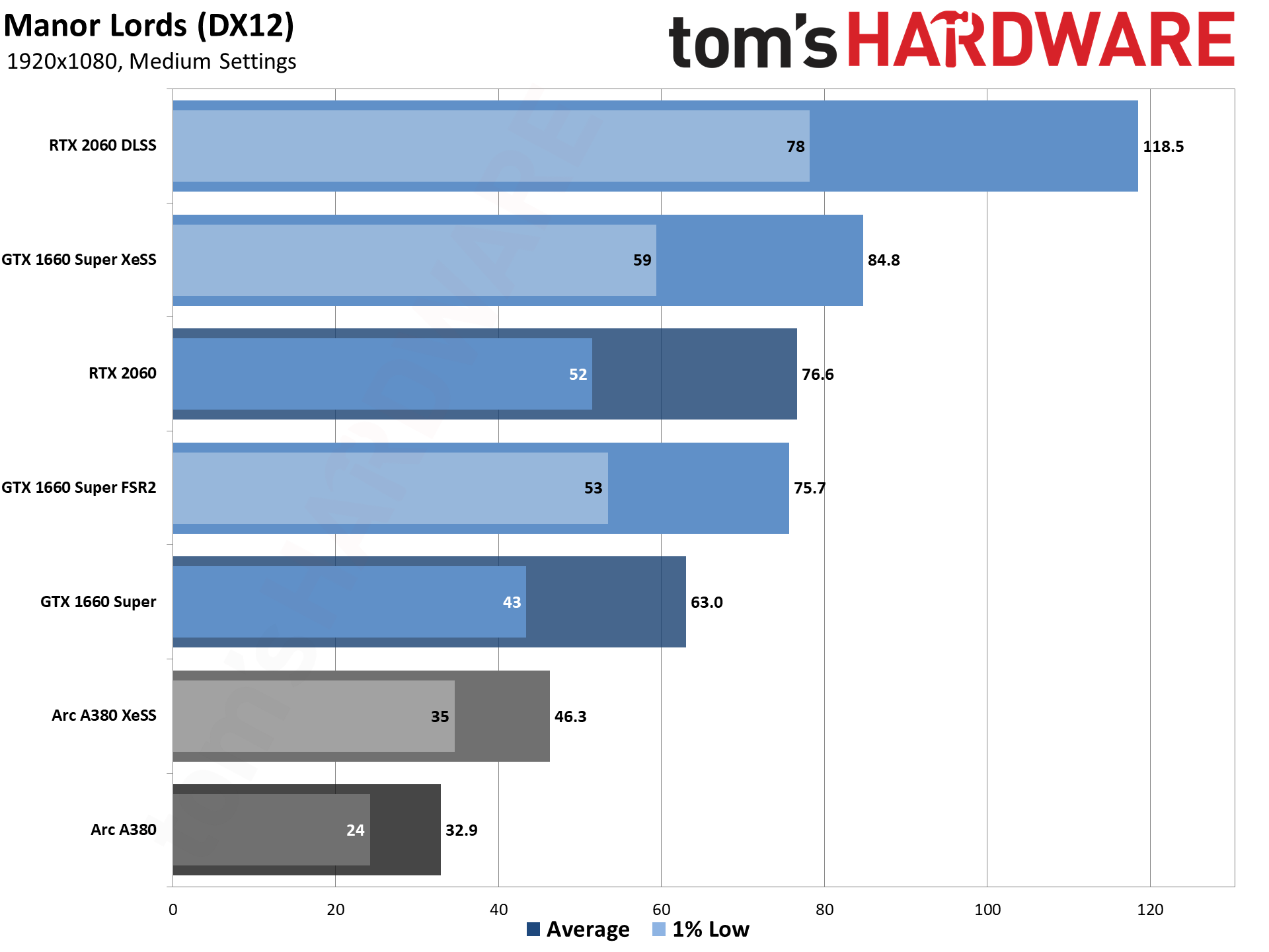

Let's also look at some of the lesser GPUs running medium settings and with upscaling. We only tested the RTX 2060, GTX 1660 Super, and Arc A380 at 1080p medium. We could add an AMD GPU as well, but given the above results, we figure any AMD results are, at best, preliminary right now and will very likely change a lot in the coming days.

The A380 wasn't even remotely playable at ultra settings. Okay, maybe that's too harsh, as this isn't a fast-paced shooter. You probably could still play Manor Lords at less than 20 fps, but that would definitely be less enjoyable. Dropping to medium settings bumps performance up to 33 fps, with lows in the mid-20s. Still not awesome, but at least it's viable. Turning on XeSS, meanwhile, gets performance into the mid-40s, with lows in the mid-30s, and at that point, the game is definitely playable.

On the RTX 2060, even with DLSS Quality mode upscaling, we couldn't quite hit 60 fps at ultra settings. Dropping to medium kicks performance well above that mark, to 77 fps, and adding DLSS on top of that yields minimums of 78 fps and nearly 120 fps on average.

Finally, just for kicks, we pulled out the old GTX 1660 Super, a trimmed-down take on the Turing architecture from the original RTX cards, without the RT and tensor cores. It already managed to break 60 fps at medium settings, but of course, the GTX cards don't support DLSS. That's where AMD FSR2 and Intel XeSS come into play. FSR2 gets performance up to 76 fps, a 20% increase, while XeSS boosts framerates up to 85 fps, a 35% improvement.
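The quoted gains for the GTX 1660 Super are relative to its medium-settings result, which isn't stated directly. A short sketch of the arithmetic (the function names are illustrative) recovers that baseline from either data point; both land around 63 fps, consistent with the card already breaking 60 fps. Because the published percentages are rounded, treat the baseline as approximate.

```python
def uplift_pct(new_fps, base_fps):
    """Percentage gain of new_fps over base_fps."""
    return (new_fps / base_fps - 1.0) * 100.0


def implied_baseline(new_fps, gain_pct):
    """Work backward from a result and its quoted gain to the starting fps."""
    return new_fps / (1.0 + gain_pct / 100.0)


# GTX 1660 Super, 1080p medium: upscaled results and their quoted gains.
for name, fps, gain in [("FSR2", 76, 20), ("XeSS", 85, 35)]:
    print(name, round(implied_baseline(fps, gain), 1))
```

The same `uplift_pct` formula underlies comparisons such as the RTX 4060's 34% lead over the RTX 2060: divide the faster card's fps by the slower card's and subtract one.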

So, whatever GPU you happen to be running, the combination of lower settings and upscaling will likely get Manor Lords up to playable framerates. Just don't expect it to look quite as nice if you have to run the lowest settings (see below).

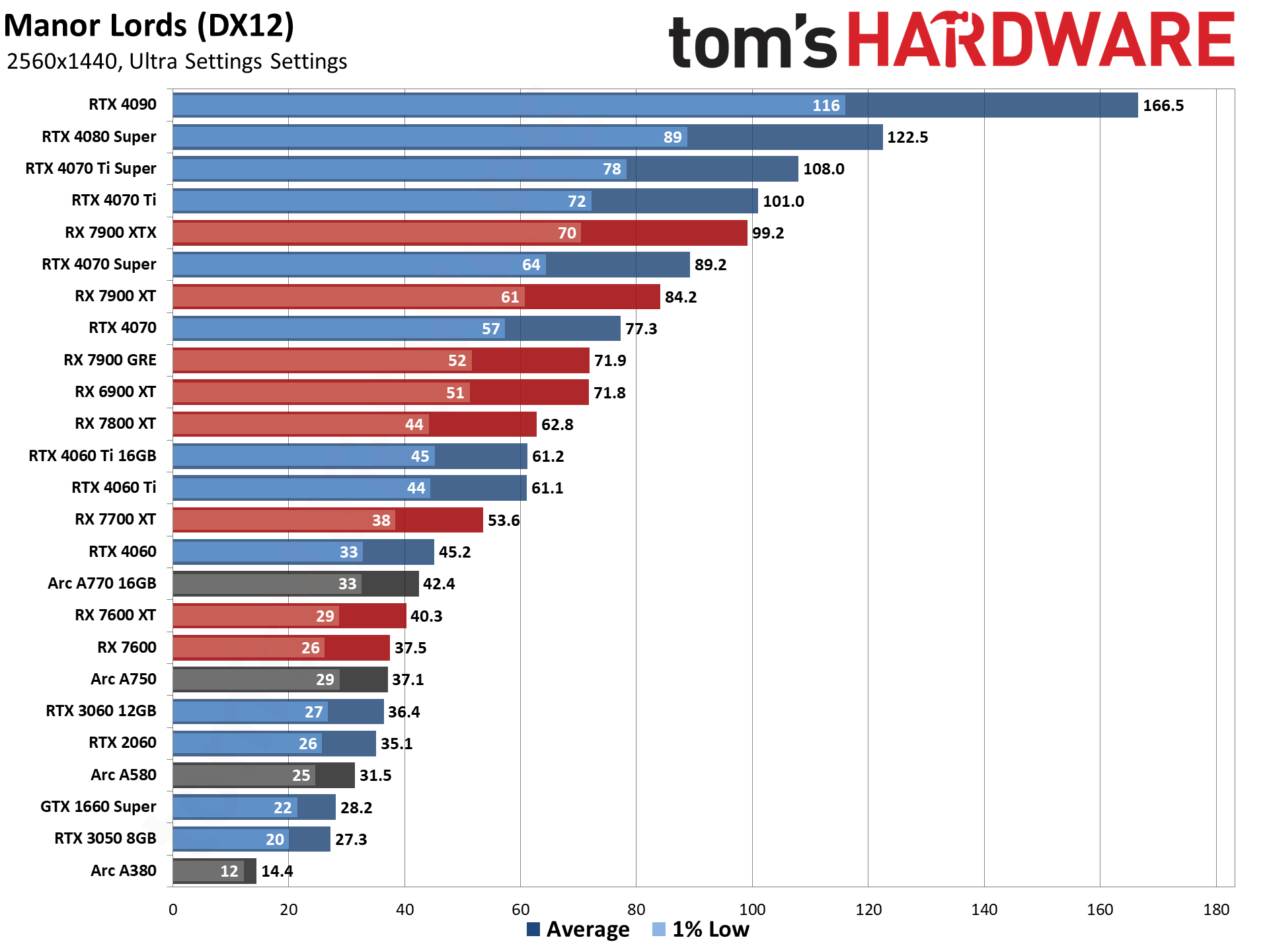

Manor Lords 1440p GPU Performance

The Nvidia advantage continues at 1440p ultra, though now the 7900 XTX at least comes very close to matching the RTX 4070 Ti. That still isn't great, and lower down the list we see similar deficiencies in AMD's performance. The RX 7700 XT falls behind the RTX 4060 Ti (both the 8GB and 16GB models, and the extra memory appears to matter very little in this particular game), while the 7800 XT is just a hair ahead of Nvidia's mainstream card.

The charts are basically what we'd expect if Manor Lords were a heavy ray-tracing game like Cyberpunk 2077 or Minecraft RTX, except it's not, as evidenced by the GTX 1660 Super running the game faster than an RTX 3050.

If you're only looking for playable performance of 30 fps, the Arc A580, RTX 2060, and anything faster than those should suffice. Again, the 3060 barely beats the 2060, so something is clearly off right now. Upscaling would, of course, help improve the situation, and we'd strongly suggest using DLSS, XeSS, or FSR (in that order) if you want a smoother gaming experience with Manor Lords.
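The recommended order (DLSS, then XeSS, then FSR) reduces to a tiny decision rule, since the only gating factor described here is that DLSS needs an Nvidia RTX card while FSR and XeSS run on any reasonably recent GPU. This hypothetical helper encodes that recommendation; it is not the vendor-matched setup used for the charts, where AMD cards were tested with FSR by default.

```python
PREFERENCE = ("DLSS", "XeSS", "FSR")  # the order recommended in the text


def pick_upscaler(vendor, is_rtx=False):
    """Return the first upscaler in PREFERENCE that the GPU can run.

    DLSS requires an Nvidia RTX card; XeSS and FSR run on any reasonably
    recent GPU from any vendor, so they are always treated as available.
    """
    available = {"XeSS", "FSR"}
    if vendor.lower() == "nvidia" and is_rtx:
        available.add("DLSS")
    return next(name for name in PREFERENCE if name in available)


print(pick_upscaler("Nvidia", is_rtx=True))   # DLSS (e.g. RTX 2060)
print(pick_upscaler("Nvidia", is_rtx=False))  # XeSS (e.g. GTX 1660 Super)
print(pick_upscaler("AMD"))                   # XeSS
```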

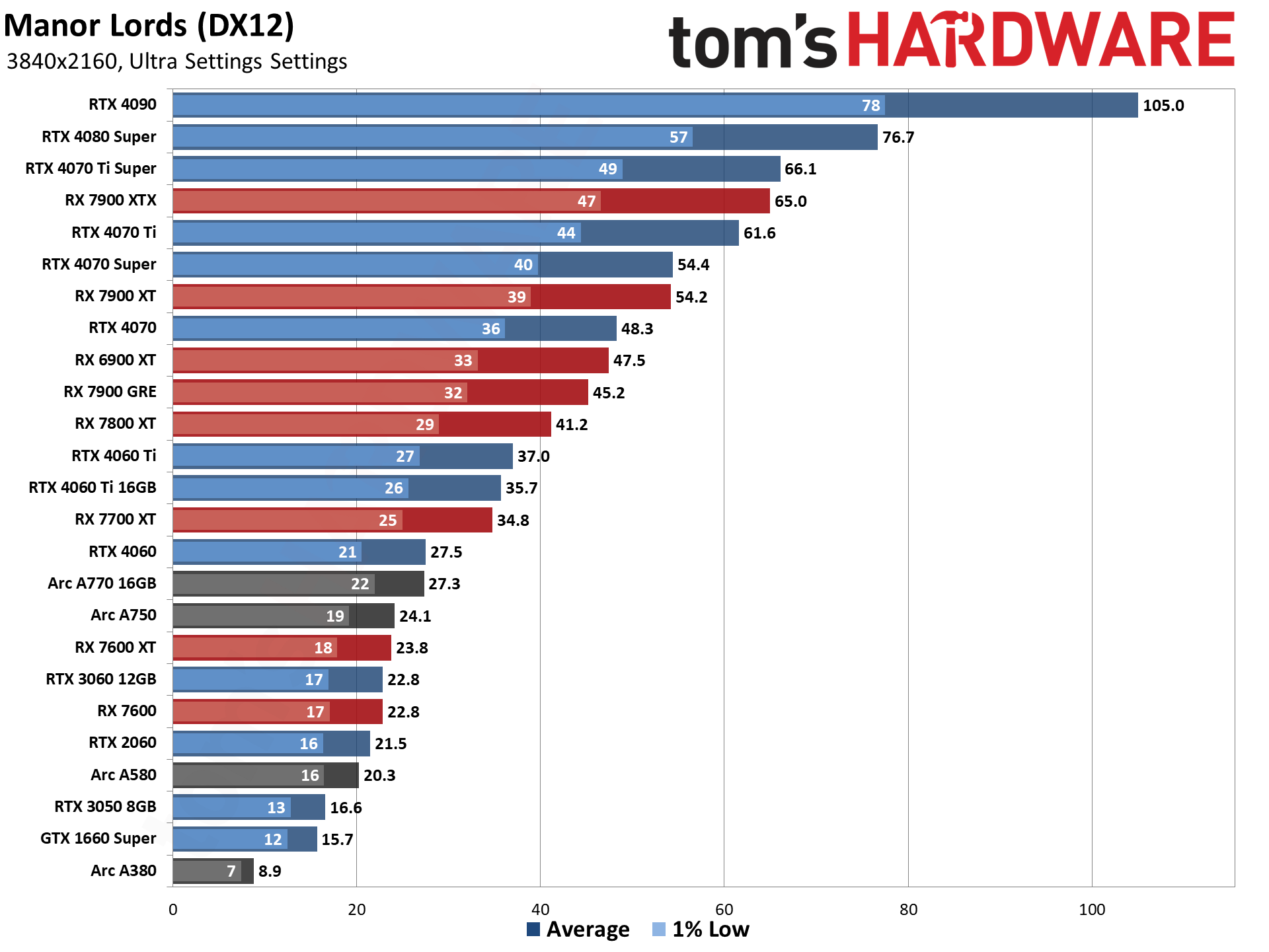

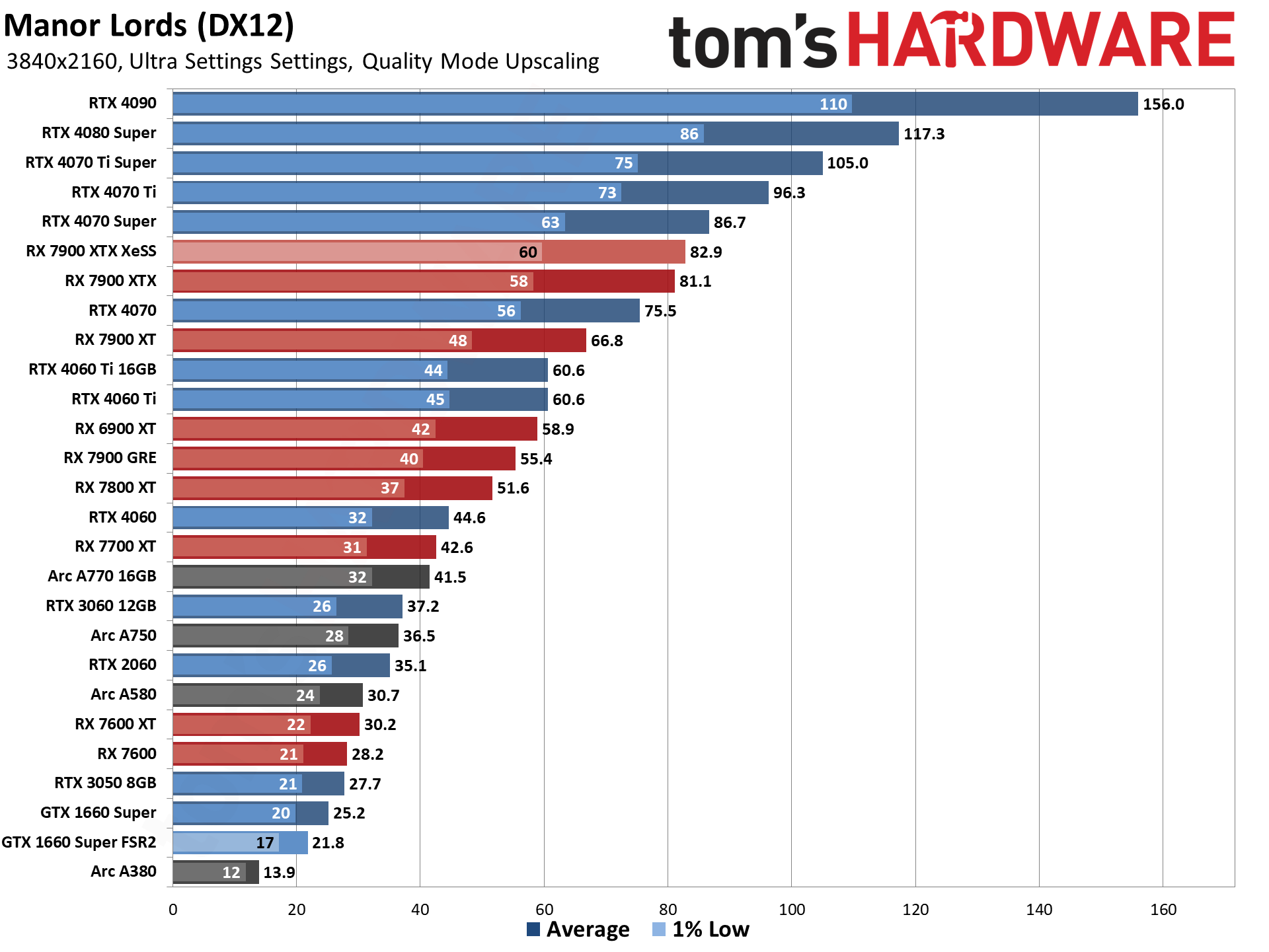

Manor Lords 4K GPU Performance

What about 4K ultra? Not surprisingly, you'll need a pretty hefty GPU to get to 60 fps. The RTX 4070 Ti technically makes it, though minimums are in the mid-40s. The RX 7900 XTX also gets there, this time edging out the 4070 Ti by 6%. The RTX 4080 Super nearly gets minimum fps above 60 as well, while the RTX 4090 plugs along happily at 105 fps with 78 fps lows.

If you're adamant about not using upscaling, you need a lot of GPU to run the current early access version of Manor Lords at 60 fps. Only a handful of GPUs will suffice right now. But if you're okay with 40-ish fps, the RX 7800 XT and above will also work. Or you can do the smart thing and enable upscaling...

We tested all of the GPUs with Quality mode upscaling at 4K, just to see how they stack up. Now, even the RTX 4060 Ti manages to break 60 fps (barely), while the RX 7900 XT and above do even better.

We have two results for the 7900 XTX, one with FSR2 upscaling (the default for our benchmarks) and a second using XeSS upscaling. In our opinion, the XeSS 1.3 upscaling generally looks better than FSR2 and also performs slightly better. There are reasons for that, which we'll discuss more below.

Near the bottom of the chart, we also have the GTX 1660 Super, with FSR2 upscaling and XeSS as well. Again, XeSS performs better and would be our recommendation for any non-RTX GPU.

Most of the GPUs we tested managed to reach 30 fps or more at 4K with upscaling. Only the RX 7600 and below came up short, though if you want minimum fps above 30 as well, you'll need at least an Arc A770 16GB, RX 7700 XT, or RTX 4060. Alternatively, you could use a higher level of upscaling, like the Balanced or Performance modes.

Manor Lords Image Fidelity Comparisons

Given that Manor Lords is an indie game just hitting early access, there's still plenty of time for improvements in the graphics quality. Right now, things generally look quite nice, but there are occasional rendering artifacts.

One of the biggest issues is with TAA (temporal anti-aliasing). People often talk about ghosting artifacts with upscaling solutions, but TAA can also cause ghosting... and it definitely does in Manor Lords. That could probably get ironed out with future updates, but right now we prefer the look of the game with DLSS, XeSS, or FSR over native TAA rendering.

Videos show the ghosting and other differences a lot better, especially "live" rather than in heavily processed YouTube uploads. We've added a video below to show at least one area (moving through the forest) where ghosting can be very distracting if you're using the default TAA mode. We also have some screenshots showing the general image quality using the ultra, high, medium, and low settings.

Ultra settings

High settings

Medium settings

Low settings

We can hopefully all agree that the ultra settings look best, though the difference between ultra and high isn't massive. Distant foliage loses some detail, the snow, lighting, and shadows change, and there's a bit of a shift in the geometry and LOD (level of detail) on the high settings. As for performance, high only runs about 15% faster than ultra, which may or may not be worth the drop in visuals.

The biggest visual change with our medium settings comes from turning off volumetric clouds: there's a haze everywhere on high that goes away on medium. There's also less geometric detail (the fences don't have as many boards, for example), and foliage quality drops a bit. Medium offers about a 15% performance increase over the high settings.

Then we get to the low settings. The difference between low and medium is quite massive, both in image fidelity and performance. Framerates are over 50% higher on the low settings, but the reduction in lighting quality, textures, and other aspects makes the game look far worse.
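Each of the quoted performance gains is relative to the next-higher preset, so they compound. Assuming they multiply cleanly (the text doesn't say which GPU or resolution they were measured on, so this is only a rough estimate), medium comes out about 1.32x as fast as ultra and low just under 2x, with the low figure a lower bound because its gain was quoted as "over 50%". The names below are illustrative.

```python
from math import prod

ORDER = ["ultra", "high", "medium", "low"]
# Approximate gain of each preset over the one above it, as quoted in the text.
STEP_GAIN = {"high": 0.15, "medium": 0.15, "low": 0.50}  # low is "over 50%"


def speedup_vs_ultra(preset):
    """Multiply the per-step gains from ultra down to `preset`."""
    steps = ORDER[1:ORDER.index(preset) + 1]
    return prod((1.0 + STEP_GAIN[s] for s in steps), start=1.0)


for preset in ORDER:
    print(preset, round(speedup_vs_ultra(preset), 2))
```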

In our screenshots, we were in March — the start of spring when the snow begins melting. All of the higher settings will slowly blend between winter and spring in terms of visuals, but on the low settings, the "partially melted snow" aesthetic is simply gone. If you have an older GPU, like the minimum spec GTX 1050 or RX 460 4GB, perhaps you'll need to use the low settings just to get decent performance. For anyone with a more modern GPU, medium should be the bare minimum you want to run.

Manor Lords Resolution Upscaling

We've shown some upscaling performance results above, and we've talked a bit about the rendering quality and upscaling already, but let's dig a little deeper. There's no frame generation support, not that we really miss it, and it could always get added later if desired.

As far as the supported algorithms, we know based on the DLLs that Manor Lords uses DLSS 3.7 and XeSS 1.3, but FSR is a bit of a wild card. Games often bake FSR into the binary directly rather than using a DLL, so we can't just search for a file and check the version. The lack of framegen support implies it's not FSR 3.0 or 3.1, and it could even be FSR 1.x spatial upscaling, but that would be odd considering the inclusion of DLSS and XeSS temporal upscaling algorithms. Our assumption, based on performance and image fidelity, is that the game uses FSR 2.2 or similar. If you happen to know which version specifically, let us know and we'll update the text as appropriate.
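The DLSS and XeSS versions were identified from their DLLs, and that's also why FSR is harder to pin down: a library compiled into the game binary leaves no separate file behind. The sketch below walks an install folder and reports which known upscaling and ray tracing libraries ship as standalone DLLs. The file-name patterns are assumptions based on common naming (nvngx_dlss.dll for DLSS, libxess.dll for XeSS, and so on), not an official list, and the script only detects files; the exact version still has to be read from each DLL's file properties.

```python
from fnmatch import fnmatchcase
from pathlib import Path

# Assumed file-name patterns (lowercase); not an exhaustive or official list.
KNOWN_LIBS = {
    "DLSS": ["nvngx_dlss.dll"],
    "XeSS": ["libxess.dll"],
    "FSR (standalone DLL)": ["ffx_fsr2_api_*.dll", "amd_fidelityfx_*.dll"],
    "Embree": ["embree*.dll"],
    "Open Image Denoise": ["openimagedenoise*.dll"],
}


def find_libraries(game_dir):
    """Map each library name to the sorted DLL paths found under game_dir."""
    found = {}
    for path in Path(game_dir).rglob("*"):
        if not path.is_file():
            continue
        name = path.name.lower()  # match case-insensitively on any filesystem
        for lib, patterns in KNOWN_LIBS.items():
            if any(fnmatchcase(name, p) for p in patterns):
                found.setdefault(lib, []).append(path)
    return {lib: sorted(paths) for lib, paths in found.items()}
```

Pointed at the game's install folder, a missing FSR entry is consistent with FSR being compiled into the executable, but it doesn't prove FSR is absent.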

Above are image sets from three different GPUs: RTX 4070, RX 7900 GRE, and Arc A770 16GB. All of the images are at maximum settings, with TAA at native 4K followed by the various supported upscaling algorithms using the Quality settings.

One thing to note is that AMD and Nvidia use a 1.5X upscale factor for Quality mode. Prior to XeSS 1.3, Intel used the same 1.5X factor, but with version 1.3 it changed to a higher 1.7X factor. That means XeSS Quality mode is more like DLSS and FSR2 Balanced mode now, at least in terms of how many pixels are rendered prior to upscaling.
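To put the 1.5X versus 1.7X difference in concrete terms, the factor applies per axis, so the rendered pixel count scales with its square. This sketch assumes simple rounding of the divided resolution; real engines may round or align render targets differently, so the exact dimensions are approximate.

```python
def render_resolution(out_w, out_h, scale):
    """Internal render size for a per-axis upscale factor (1.5 = 1.5X)."""
    return round(out_w / scale), round(out_h / scale)


def pixel_fraction(scale):
    """Share of output pixels actually rendered before upscaling."""
    return 1.0 / scale ** 2


for label, scale in [("DLSS/FSR2 Quality", 1.5), ("XeSS 1.3 Quality", 1.7)]:
    w, h = render_resolution(3840, 2160, scale)
    print(f"{label}: {w}x{h}, {pixel_fraction(scale):.0%} of 4K pixels")
```

At 4K, the 1.5X factor renders 2560x1440 (about 44% of the output pixels), while XeSS 1.3's 1.7X factor renders roughly 2259x1271 (about 35%), so XeSS Quality works from around 22% fewer pixels.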

Here's the interesting bit: XeSS 1.3 still looks better than FSR2, at least in our opinion. Still images don't do it justice, either. Both TAA and FSR2 modes have quite a lot of visual artifacts, including shimmering on surfaces with lots of fine lines. XeSS does better with temporal stability, though it's still not perfect, while DLSS overall looks the best.

Nvidia RTX Native TAA

Nvidia RTX DLSS Quality

Nvidia RTX XeSS Quality

Nvidia RTX FSR2 Quality

Intel Arc Native TAA

Intel Arc XeSS Quality

Intel Arc FSR2 Quality

AMD RX Native TAA

AMD RX XeSS Quality

AMD RX FSR2 Quality

There are nuances to the upscaling, of course, and while you can use all three options on an RTX card, AMD and Intel GPUs will need to choose between FSR and XeSS. But looking at the results, and especially in light of the fact that XeSS is using a higher upscaling factor, we think it's pretty obvious that machine learning upscaling algorithms do a better job than hand-coded solutions like FSR/FSR2. That's probably why there are rumblings about AMD looking into AI-based upscaling for a future version of FSR.

One side effect of upscaling is that it can change the way shadows get rendered. That's because the math behind certain things like ambient occlusion can give different results at 1080p, 1440p, and 4K. So if you render at ~1440p and upscale to 4K, it won't look quite the same as native 4K rendering.

You can see this in the garden with more shadows in FSR2 mode with the Arc and Radeon GPUs, as well as from a different angle on the RTX card. FSR2 seems to emphasize the shadows more than the other two algorithms. It's a subtle distinction, but it's definitely present.

We've also added a video showing DLSS, FSR2, XeSS, and TAA modes to highlight the shimmering and moiré pattern effects that are more pronounced with FSR and TAA as well. There's a slight shimmer with XeSS that's still visible, while DLSS looks the best. There are differences elsewhere, however, like in how the spinning windmill blades look.

The FSR quality even makes us wonder if Manor Lords is only using FSR 1 upscaling, but hopefully that's not the case, as the other two algorithms are the latest available releases. Why would a developer use FSR spatial upscaling when the temporal upscaling inputs are already available for DLSS and XeSS?

On a related tangent, FSR 1.x spatial upscaling tends to be much lighter weight than FSR 2, XeSS, and DLSS. Given that we didn't see a noticeable performance advantage from the FSR setting, we're reasonably confident it's FSR 2/3 temporal upscaling — we just don't know precisely which version is being used.

Manor Lords Closing Thoughts

We don't want to make any definitive conclusions right now, given that Manor Lords just went into early access. There are bugs and plenty of other work that still needs to be done, and there is probably a lot of fine-tuning to make the game run better on a wider variety of hardware.

Still, with the initial release, Nvidia GPUs run the game best, often punching a couple of rungs above their normal weight class. But there are oddities even within Nvidia, with the Ampere RTX 30-series cards that we tested seemingly underperforming. If we had to venture a guess, the developers might be using RTX 40-series cards, and that's why it works best on those GPUs... but we might be making assumptions, and you know what they say...

Manor Lords is also an interesting look at a long-in-development Unreal Engine game. We're pretty sure it's still using Unreal Engine 4 rather than the newer UE5, and Unreal Engine 5.4 just came out, promising even more features. Will the developers eventually switch to UE5, or is there too much customization to make such a move practical? Only time will tell.

With three million people having put the game on their wishlist prior to the early access release, we hope the game will have enough commercial success to warrant further development. Manor Lords strikes me as a blend between Settlers and Total War right now, while attempting to carve out its own niche. We'll have to wait and see how things develop in the coming days now that anyone who wants can give the game a try in its current state.

Jarred Walton is a senior editor at Tom's Hardware focusing on everything GPU. He has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.

Comments

Paul Basso: something must be broken really badly to AMD to be outperformed this way. Radeon 7000 XTX outperformed for a 4700super at 4K nonsense!

Notton: AMD GPUs have had an issue with Unreal Engine for a while now. It's no surprise they perform worse in a game that uses UE4/5.

"Why does everyone hate Nvidia but still use them anyways?" It's because of stuff like this.

AMD, please stop dropping the ball at drivers if you are going to charge a premium for the hardware that doesn't even perform all that well to begin with.

RX7900XTX still offers a playable experience at 4K ultra, so there is at least that.

russell_john: Absolutely no CPU performance specs and using a CPU that has known problems right now.

Seriously you call this professional?

tamalero (replying to Notton): "AMD Dropping the ball". I call bull.

Nvidia sometimes pay hefty sums to have tailored optimizations. AMD has had their way a few times too (like that racing game).

Let's not forget funky stuff like Nvidia forcing Batman's developer to disable certain things on AMD's chips even if it works fine on them.

mac_angel (replying to Paul Basso): umm, what GPUs are those?

mac_angel (replying to russell_john): I think they did a lot of benchmarks as it is. And it shows their test system was a 13900K.

Also, as many people/media have reported, and Intel made a statement on, it's the settings of the motherboard, not Intel. If you put Intel's limits on the CPU, they run fine.

Notton (replying to tamalero): Yeah, exactly. There is no law or rule against doing that, and AMD's finances are doing fine.

There's nothing stopping AMD from doing the same thing.

Yet they don't, and it shows up in a ton of games.

Why wouldn't you spend time, money, and manpower working with a game dev? If the game runs better, everyone is happier. It's literally win-win-win.

rustigsmed: yeah interesting - I was getting about 200-250fps in the early game on a 5950x and 7900xtx at 1440p ultra / native res. late game more like 140-150fps. but I'm on Arch Linux so perhaps there is plenty of room for the AMD proprietry windows drivers to improve yet.

tamalero (replying to Notton): it's called Nvidia's monopolistic "The way its mean to be played" XD