SuperComputing 2017 Slideshow

SuperComputing 2017

The annual SuperComputing 2017 conference finds the brightest minds in HPC (High Performance Computing) descending from all around the globe to share the latest in bleeding-edge supercomputing technology. The six-day show is packed with technical breakout technical sessions, research paper presentations, tutorials, panels, workshops, and the annual student cluster competition.

Of course, the conference also features an expansive show floor brimming with the latest in high-performance computing technology. We traveled to Denver, Colorado to take in the sights and sounds of the show and found an overwhelming number of exciting products and demonstrations. Some of them are simply mind-blowing. Let's take a look.

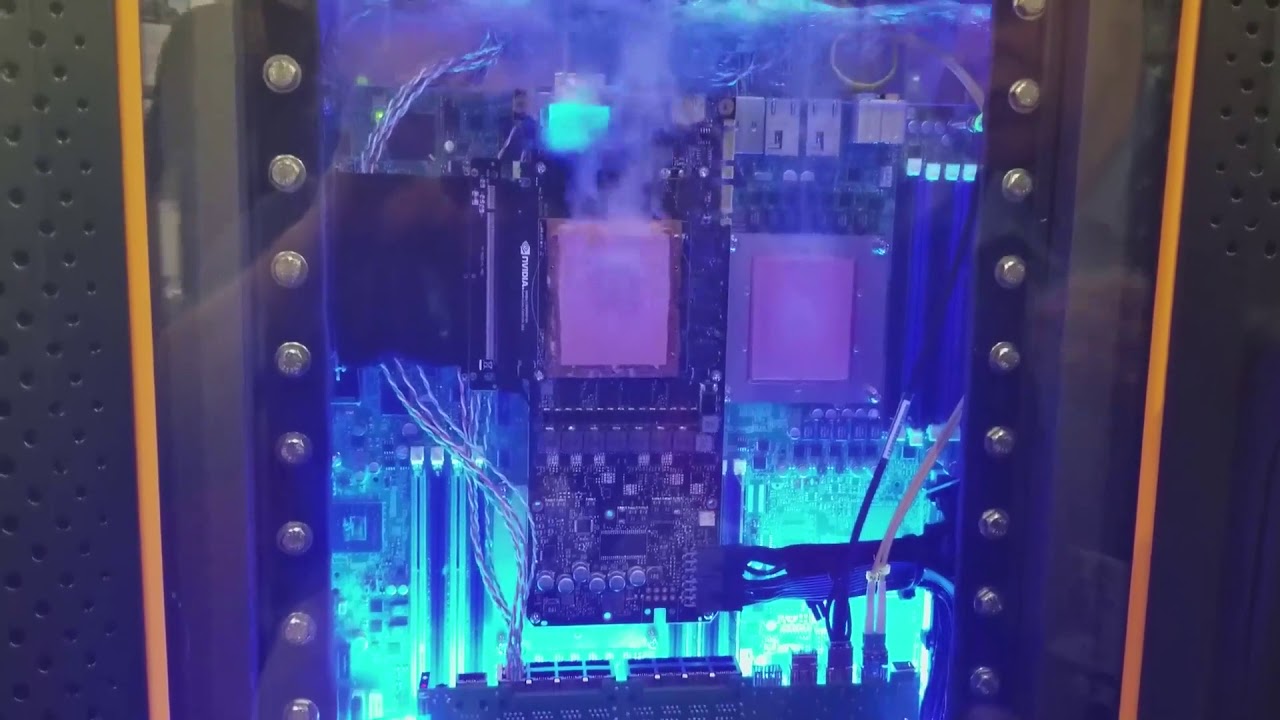

LiquidMIPS Immersion Cooling Nvidia GTX Titan X

HPC workloads are rapidly transitioning to AI-centric workloads that are a natural fit for expensive purpose-built GPUs, but it's common to find standard desktop GPUs used in high-performance systems. LiquidMIPS designs custom two-phase Flourinert immersion cooling systems for HPC systems. The company displayed an Nvidia GeForce GTX Titan X overclocked to 2,000 MHz (120% of TDP) running at a steady 57C.

Supercomputer cooling is a challenge that vendors tackle in a number of ways. Immersion cooling affords several advantages, primarily in thermal dissipation and system density. Simply put, using various forms of immersion cooling can increase cooling capacity and allow engineers to pack more power-hungry components into a smaller space. Better yet, it often provides significant cost savings over air-cooled designs.

LiquidMIPS' system is far too large to sit on your desk, but we found some other immersion cooling systems that might be more suitable for that task.

The Nvidia Volta Traveling Roadshow

The rise of AI is revolutionizing the HPC industry. Nvidia's GPUs are one of the preferred solutions for supercomputers, largely due to the company's decade-long investment in CUDA. The widely-supported parallel computing and programming model enjoys broad industry uptake.

The company had a series of "Volta Tour" green placards peppered across the show floor at each Nvidia-powered demo. The Tesla Volta GV100 GPUs were displayed in so many booths, and with so many companies, that we lost count. We've picked a few of the most interesting systems to share, but there were far too many Volta demos to share them all.

Get Tom's Hardware's best news and in-depth reviews, straight to your inbox.

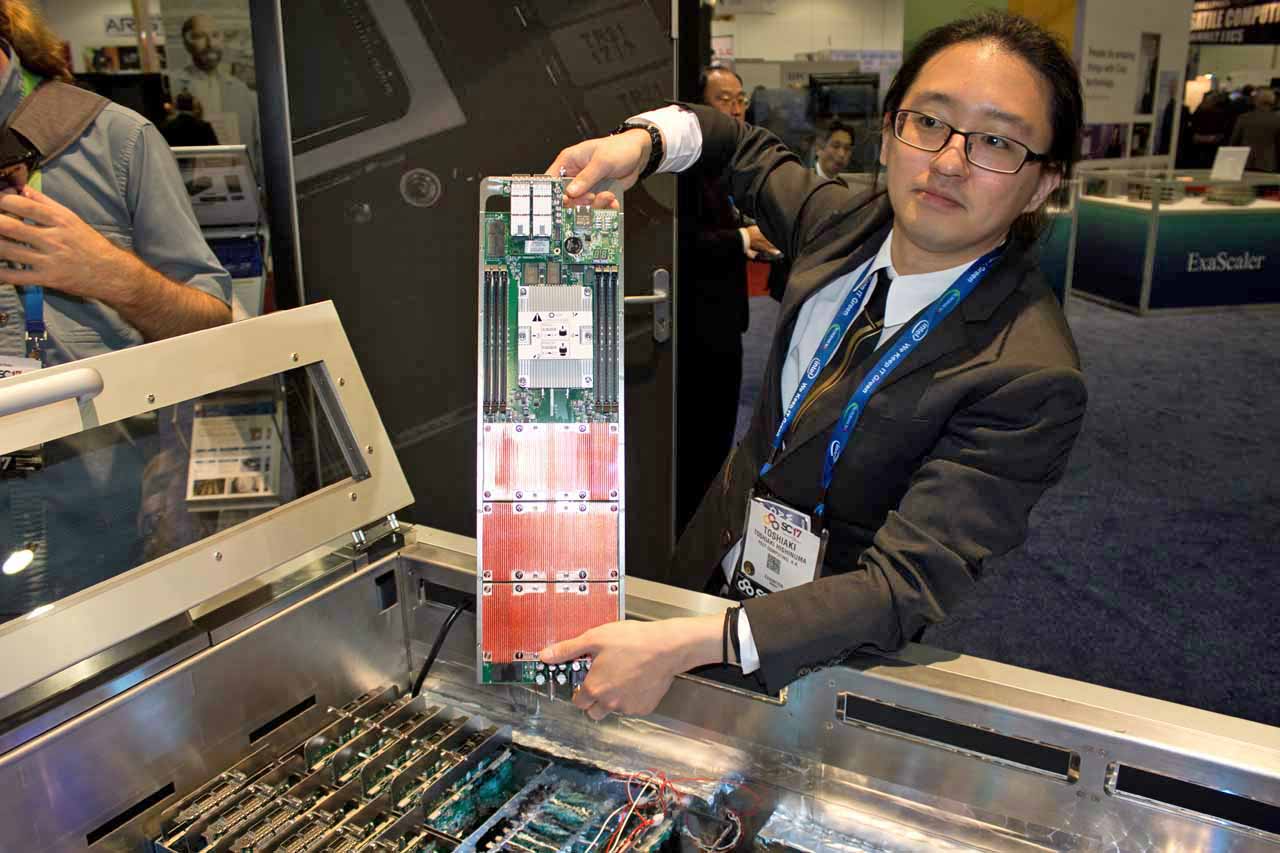

Easy PEZY Triple-Volta GV100 Blade

PEZY Supercomputing may not be a household name, but the Japanese company's immersion-cooled systems are a disruptive force in the industry. Its ZettaScaler-2.2 HPC system (Xeon-D) is the fourth fastest in the world, with 19 PetaFLOPS of computing power.

But being the absolute fastest isn't really PEZY's goal. The company holds the top three spots on the Green500, which is a list of the most power-efficient supercomputers in the world. The company's Xeon-D powered models offer up to 17.009 GigaFLOPS per Watt, which is an efficiency largely made possible by immersion cooling with 3M's Flourinert Electronic Liquid.

PEXY had its new ZettaScaler-2.4 solution on display at the show. The system begins with a single "sub-brick" with three Nvidia Tesla V100-SXM2 GPUs paired with an Intel Skylake-F Xeon processor. The GPUs communicate over the speedy NVLink 2.0 interface, which offers up to 150GB/s of throughput, and the system communicates via Intel's 100Gb/s Omni-Path networking.

Now, let's see how they cool them.

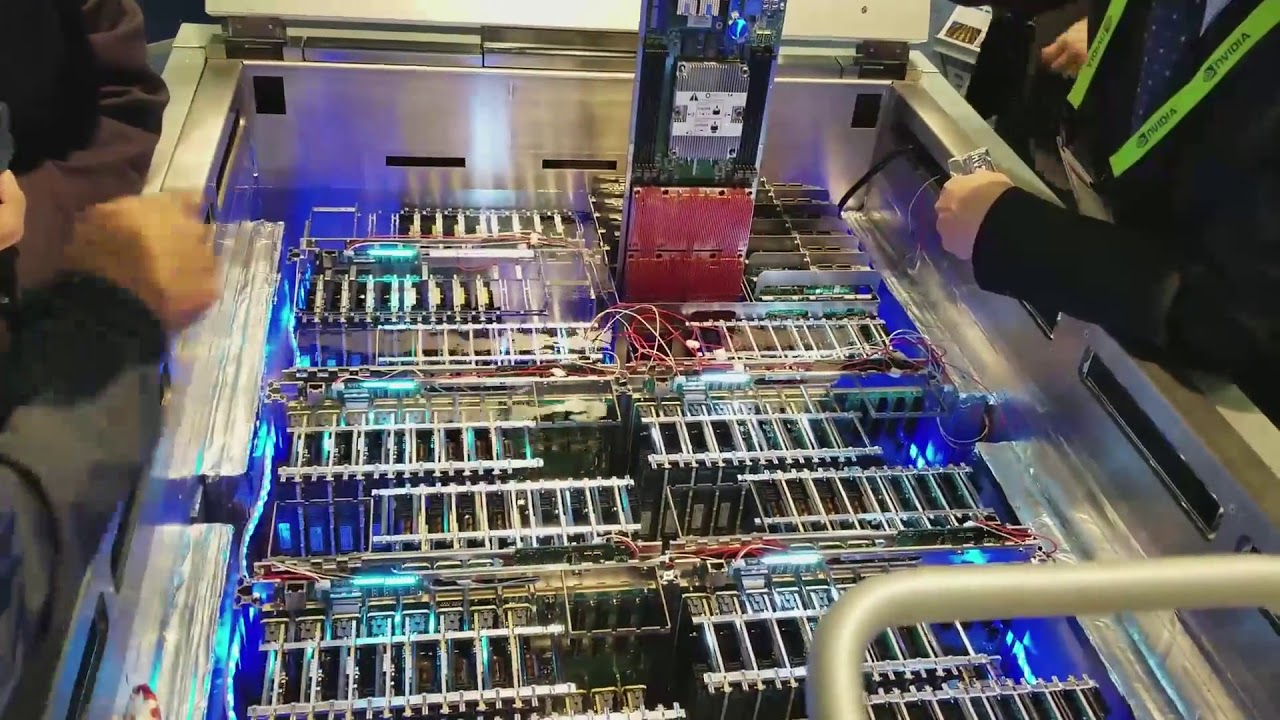

PEZY Immersion Cooling Nvidia Volta GV100s

PEZY outfits the GPUs and processor with standard heatsinks and submerges them in an immersion cooling tank. The company inserts six "sub-bricks" into slotted canisters to create a full "brick." Sixteen bricks, wielding 288 Tesla V100s, can fit into a single tank. The bricks communicate with each other over four Omni-Path 48 port switches. A single tank includes 4.6TB of memory.

A single tank generates a peak of 2.16 double-precision PetaFLOPS and 34.56 half-precision PetaFLOPS. The hot-swappable modules can easily be removed for service while the rest of the system chugs along. Some PEZY-powered supercomputers use up to 26 tanks in a single room. That means the company can pack in 7,488 Tesla V100s and 2,496 Intel Xeons into a comparatively small space.

We'll revisit one of PEZY's more exotic solutions (yeah, they've got more) a bit later.

AMD's EPYC Breaks Cover

AMD began discussing its revolutionary EPYC processors at Supercomputing 2016, but this year it finally arrived to challenge Intel's dominance in the data center. Several other contenders, such as Qualcomm's Centriq and Cavium's ThunderX2 ARM processors, are also coming to the forefront, but AMD holds the advantage of the x86 instruction set architecture (ISA). That means its gear is plug-and-play with most common applications and operating systems, which should speed adoption.

AMD's EPYC also has other advantages, such as copious core counts at a lower per-core pricing than Intel's Xeons. It also offers 128 PCIe 3.0 lanes on every model. The strong connectivity options play particularly well to the single-socket server space. In AMD's busy booth, we found a perfect example of the types of solutions EPYC enables.

This single-socket server wields 24 PCIe 3.0 x4 connectors for 2.5" NVMe SSDs. These SSDs are connected directly to the processor, which eliminates the need for HBAs, thereby reducing cost. Even though the NVMe SSDs consume 96 PCIe 3.0 lanes, the system still has an additional 32 lanes available for other additives, such as high-performance networking adapters. That's simply an advantage that Intel cannot match in a single-socket solution.

We saw EPYC servers throughout the show floor, including from the likes of Tyan and Gigabyte. Things are looking very positive for AMD's EPYC, and we expect to see many more systems next year, such as HPE's recently announced ProLiant DL385 Gen 10 systems. Dell EMC is also bringing EPYC PowerEdge servers to market this year.

Trust Your Radeon Instincts

AMD recently began shipping the three-pronged Instinct lineup for HPC applications. Nvidia's Volta GPUs may have stolen Supercomputing show, but they have also been on the market much longer. We found AMD's Radeon Instinct MI25 in Mellanox's booth. The Vega-powered MI25 slots in for heavy compute-intensive training workloads, while the Polaris MI6 and Fiji MI8 slot in for less-intense workloads, such as inference.

AMD also announced the release of a new version of ROCm, an open-source set of programming tools, at the show. Version 1.7 supports multi-GPU deployments and adds support for the popular TensorFlow and Caffe machine learning frameworks.

AMD Radeon Instincts In A BOXX

Stacking up MI25s in a single chassis just became easier with AMD's release of ROCm 1.7, and here we see a solution from BOXX that incorporates eight Radeon Instinct MI25s into a dual-socket server. It's not a coincidence that AMD's EPYC processors expose 128 PCIe 3.0 lanes to the host. AMD is the only vendor that manufactures both discrete GPUs and x86 data processors, which the company feels will provide it an advantage in tightly-integrated solutions.

BOXX also recently added an Apexx 4 6301 model with an EPYC 16-core/32-thread processor to its workstation lineup.

Here Comes The ThunderX2

Red Hat Linux recently announced that it has finally released Red Hat Enterprise Linux (RHEL) for ARM after seven long years of development. The new flavor of Linux supports 64-bit ARMv8-A sever-class SoCs, which paves the way for broader industry adoption. As such, it wasn't surprising to find HPE's new Apollo 70 system in Red Hat's booth.

Cavium's 14nm FinFET ThunderX2 processor offers up to 54 cores running at 3.0GHz, so a single dual-socket node can wield 108 cores of compute power. Recent benchmarks of the 32C ThunderX2 SoCs came from the team building the "Isambard" supercomputer at the University of Bristol in the UK. The team used its 8-node cluster to run performance comparisons to Intel's Broadwell and Skylake processors. The single-socket ThunderX2 system proved to be faster in OpenFOAM and NEMO tests, among several others.

Gigabyte had its newly-anointed ThunderX2 R181 servers on display, while Bull/Atos and Penguin also displayed their latest ThunderX2 wares. Cray also demoed its XC50 supercomputer, which will be available later this year. We can expect to hear of more systems from leading-edge OEMs in the near future.

IBM's Power9 To The Summit Supercomputer Rescue

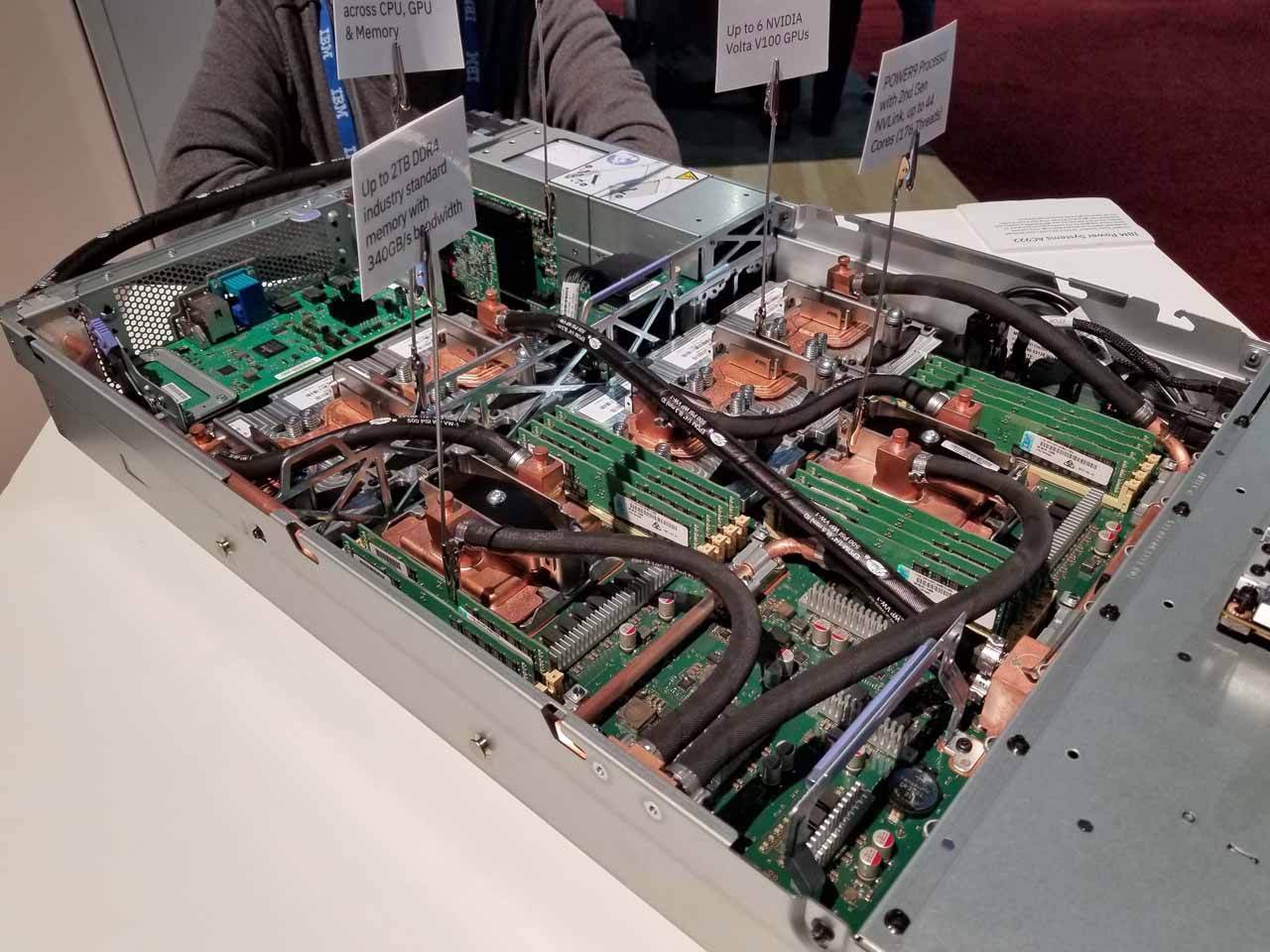

The battle for the leading position in the Top500 list of supercomputers is pitched, and Oak Ridge National Laboratory's new Summit supercomputer, projected to be the fastest in the world, should rocket the U.S. back into the lead over China. IBM's Power Systems AC922 nodes serve as the backbone of Summit's bleeding-edge architecture. We took a close look inside the Summit server node and found two Power9 processors paired with six Nvidia GV100s. These high-performance components communicate over both the PCIe 3.0 interface and NVLink 2.0, which provides up to 100GB/s of throughput between the devices.

Summit will offer ~200 PetaFLOPS, which should easily displace China's (current) chart-topping 93-PetaFLOPS Sunway TaihuLight system. We took a deep look inside the chassis in a recent article, so head there for more detail.

Qualcomm's Centriq 2400 Makes An Entrance

Qualcomm's Centriq 2400 lays claim to the title of the industry's first 10nm server processor, though Qualcomm's 10nm process is similar to Intel's density on the 14nm node. Like the Cavium ThunderX2, the Centriq processors significantly benefit from Red Hat's recently added support for the ARM architectures. For perspective, every single supercomputer on the Top500 list now runs on Linux.

The high-end Centriq 2460 comes bearing 48 "Falkor" 64-bit ARM v8-compliant cores garnished with 60MB of L3 cache. The processor die measures 398mm2 and wields 18 billion transistors.

The chip operates at a base frequency of 2.2 GHz and boosts up to 2.6 GHz. Surprisingly, the 120W processor retails for a mere $1,995. Qualcomm also has 46 and 40 core models on offer for $1,373 and $888, respectively. A quick glance at Intel's recommended pricing for Xeon processors drives home the value. Prepare to spend $10,000 or more for comparable processors.

Performance-per-watt and performance-per-dollar are key selling points of ARM-based processors. Qualcomm has lined up a bevy of partners to push the platform out to market, and considering the recent Cloudflare benchmark results, the processors are exceptionally competitive against Intel's finest.

Paul Alcorn is the Editor-in-Chief for Tom's Hardware US. He also writes news and reviews on CPUs, storage, and enterprise hardware.

-

germz1986 A few of these slides displayed ads instead of the content, or displayed a blank slide. Your website is becoming hard to read on due to all of the ads....Reply -

berezini the whole thing is advertisement, soon toms hardware will be giving out recommended products based on commission they get from sales.Reply -

FritzEiv Due to some technical issues, our normal company video player has some implementation issues when we use videos (instead of still images) in slideshows. We went in and replaced all of those with YouTube versions of the videos on the slides in question, and it looks to be working correctly now. I apologize for the technical difficulty.Reply

(I'd rather not turn the comments on this slideshow into an ad referendum. Please know that I make sure concerns about ads/ad behavior/ad density (including concerns I see mentioned in article comments) reach the right ears. You can feel free to message me privately at any time.) -

COLGeek Some amazing tech showcased here. Just imagine the possibilities. It will be interesting to ponder on the influences to the prosumer/consumer markets.Reply -

bit_user ReplyThe single-socket ThunderX2 system proved to be faster in OpenFOAM and NEMO tests, among several others.

Qualcomm's CPU has also posted some impressive numbers and, while I've no doubt these are on benchmarks that scale well to many cores, this achievement shouldn't be underestimated. I think 2017 will go down in the history of computing as an important milestone in ARM's potential domination of the cloud.

-

bit_user Reply

"bleeding edge" at least used to have the connotation of something that wasn't quite ready for prime-time - like beta quality - or even alpha. However, I suspect that's not what you mean to say, here.20403668 said:IBM's Power Systems AC922 nodes serve as the backbone of Summit's bleeding-edge architecture.

-

bit_user ReplyNEC is the last company in the world to manufacture Vector processors.

This is a rather funny claim.

I think the point might be that it's the last proprietary SIMD CPU to be used exclusively in HPC? Because SIMD is practically everywhere you look. It graced x86 under names like MMX, SSE, and now the AVX family of extensions. Even ARM has had it for more than a decade, and it's a mandatory part of ARMv8-A. Modern GPUs are deeply SIMD, hybridized with massive SMT (according to https://devblogs.nvidia.com/parallelforall/inside-volta/ a single V100 can concurrently execute up to 164k threads).

Anyway, there are some more details on it, here:

http://www.nec.com/en/global/solutions/hpc/sx/architecture.html

I don't see anything remarkable about it, other than how primitive it is by comparison with modern GPUs and CPUs. Rather than award it a badge of honor, I think it's more of a sign of the degree of government subsidies in this sector.

Both this thing and the PEZY-SC2 are eyebrow-raising in the degree to which they differ from GPUs. Whenever you find yourself building something for HPC that doesn't look like a modern GPU, you have to ask why. There are few cases I can see that could possibly justify using either of these instead of a V100. And for those, Xeon Phi stands as a pretty good answer for HPC workloads that demand something more closely resembling a conventional CPU. -

bit_user ReplyThe network infrastructure required to run a massive tradeshow may not seem that exciting, but Supercomputing 17's SCinet network is an exception.

Thanks for this - I didn't expect it to be so extensive, but then I didn't expect they'd have access to "3.63 Tb/s of WAN bandwidth" (still, OMG).

What I was actually wondering about was the size of friggin' power bill for this conference! Did they have to make any special arrangements to power all the dozens-kW display models mentioned? I'd imagine the time of year should've helped with the air conditioning bill.

-

cpsalias For more details on picture 27 - MicroDataCenter visit http://www.zurich.ibm.com/microserver/Reply -

Saga Lout Reply20420018 said:For more details on picture 27 - MicroDataCenter visit http://www.zurich.ibm.com/microserver/

Welcome to Tom's cpsalias. Your IP address points to you being an IBM employee and also got your Spammish looking post undeleted. :D

If you'd like to show up as an Official IBM rep., drop an e-mail to community@tomshardware.com.