AMD Unveils Vega Radeon Instinct Details, Shipping Soon

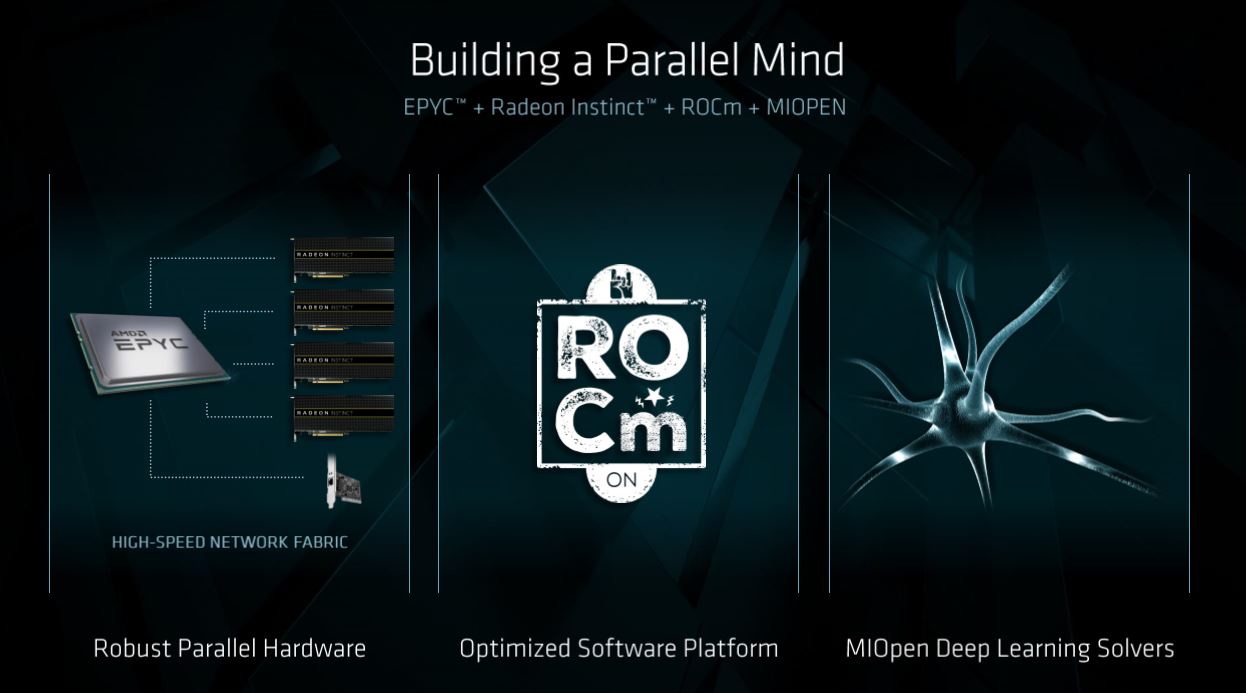

AMD's re-entrance into the data center begins with its EPYC lineup, which pits the company against the dominant Intel. Not enough pressure? Part of AMD's strategy also includes attacking the burgeoning AI segment. That lines the company up against Nvidia, which by all rights has a dominating position in the machine learning segment.

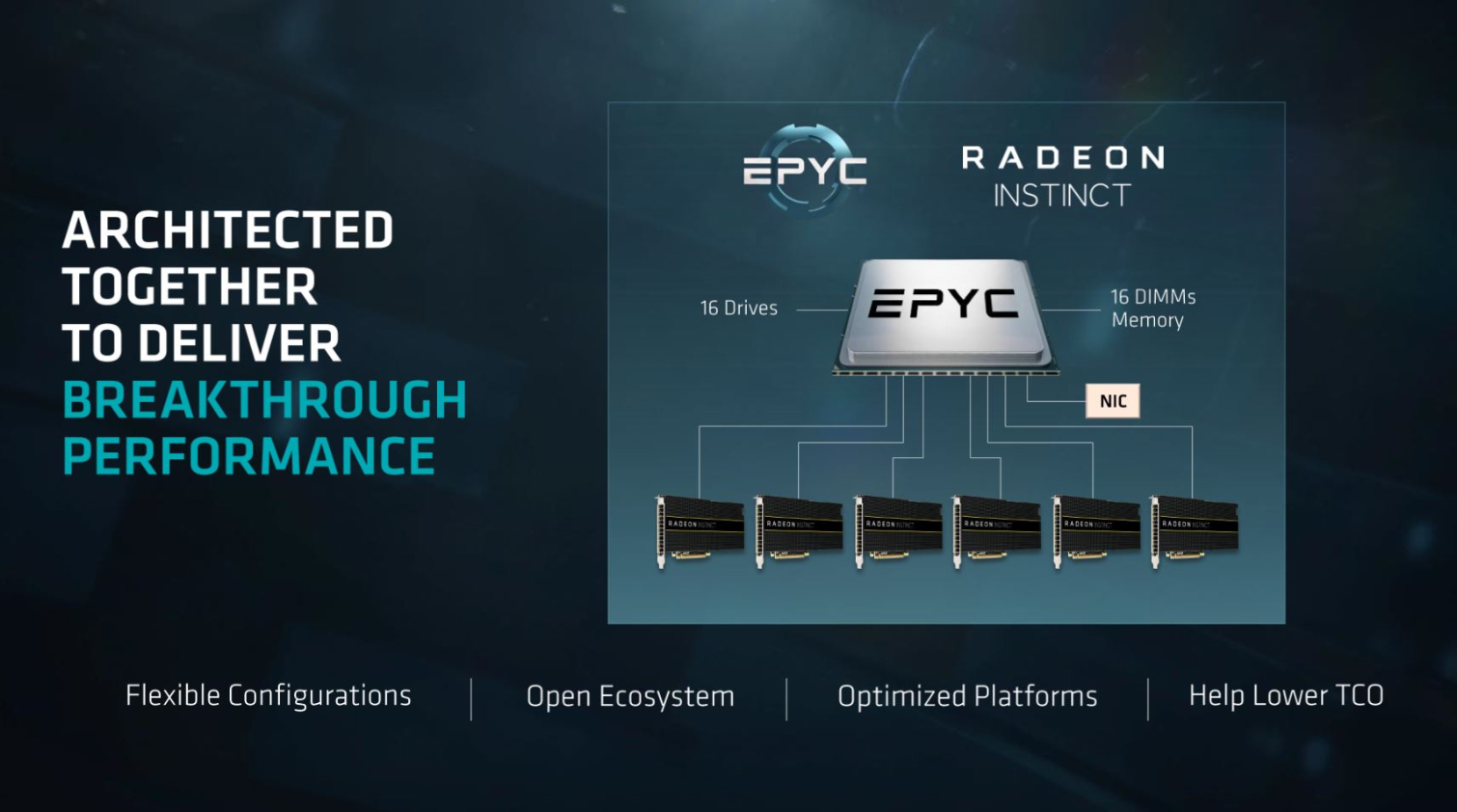

However, AMD is the only company with both GPUs and CPUs under the same roof, which it feels hands it an advantage when it comes to complementary designs.

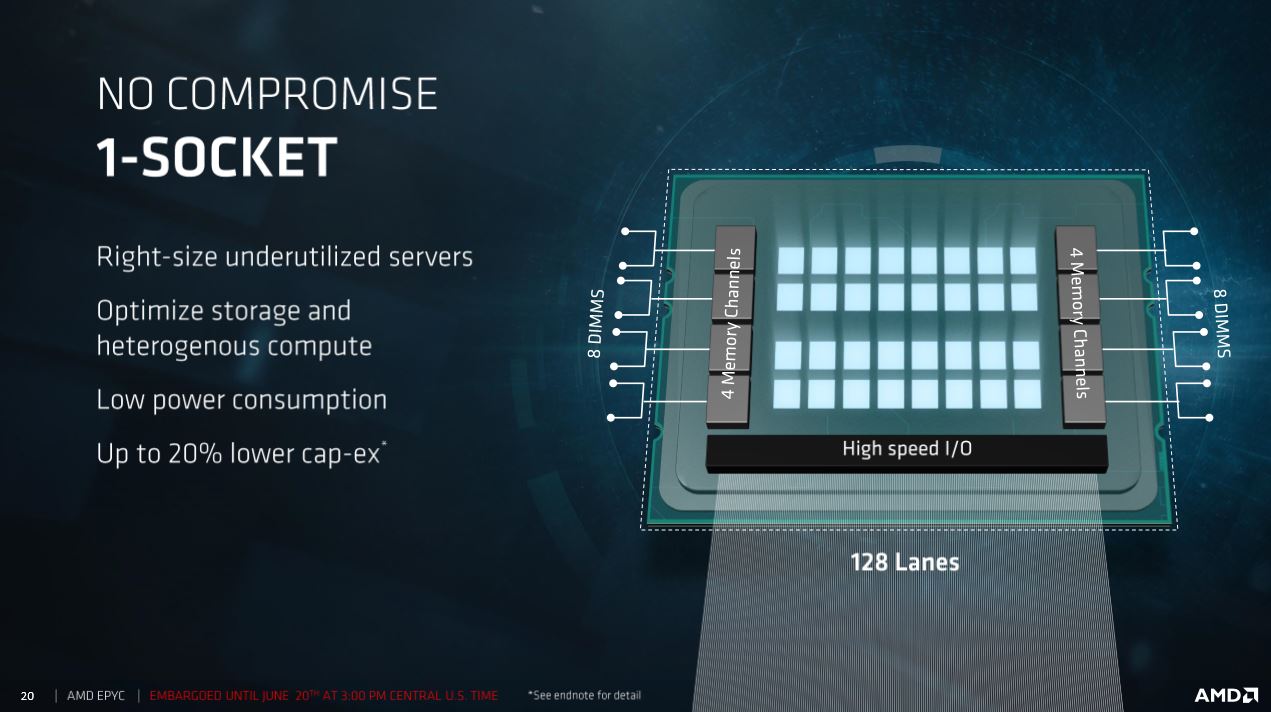

That's where EPYC comes in. It's no coincidence that EPYC, AMD's new line of data center processors, has a copious allotment of 128 PCIe lanes. AMD believes that makes the EPYC platform a great fit for incredibly dense AI platforms in single-socket servers. We broke down AMD's latest EPYC processors in our "AMD Unveils EPYC Server Processor Models And Pricing Guidelines" article, but the single-socket server is one of the most important aspects of AMD's two-pronged AI strategy.

The Single Sockets

Roughly 25% of today's server platforms, which are almost entirely powered by Intel, ship with only one socket populated. That means they have only one processor, so there are a number of redundant components on the motherboard and in the chassis that aren't needed. Eliminating these redundancies reduces costs on multiple axes, possibly making a dedicated single-socket server a data center architect's best friend.

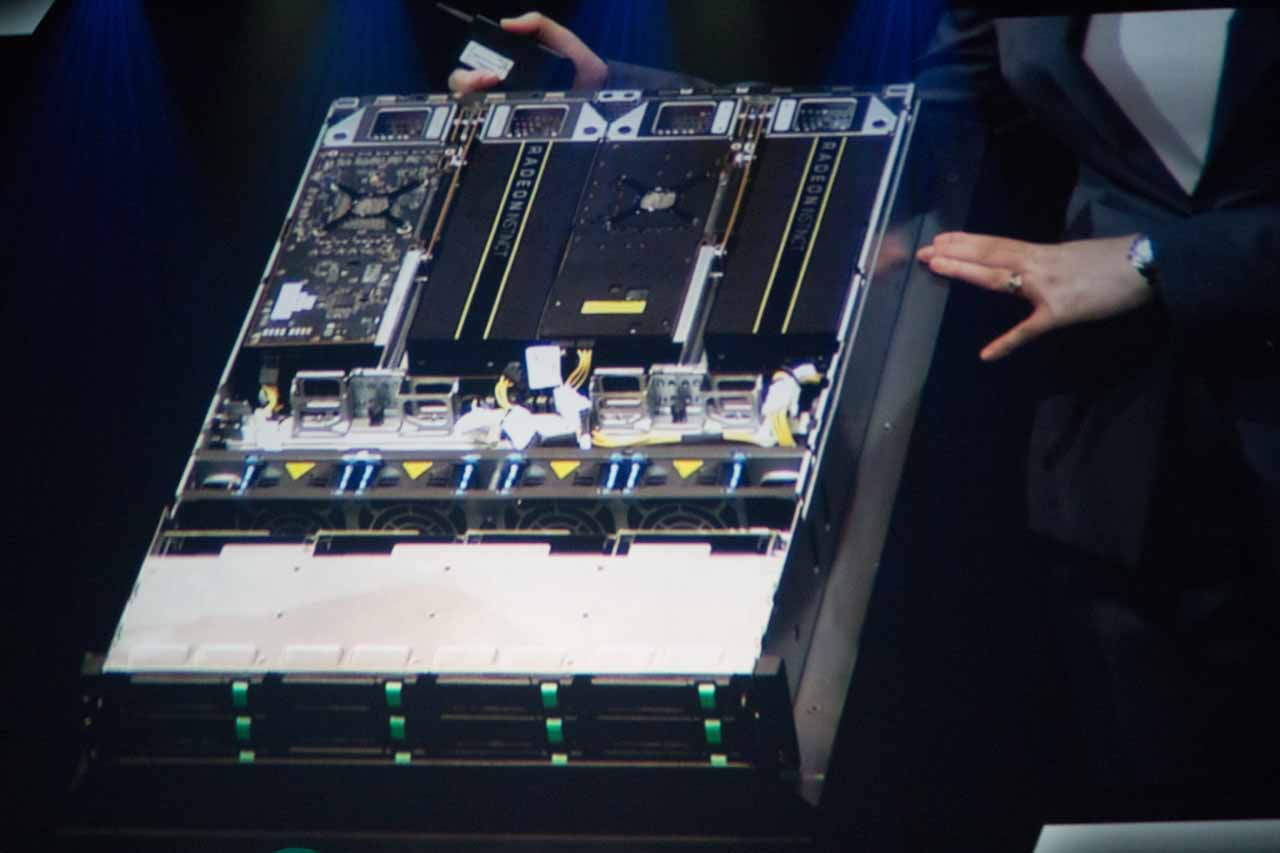

The data center is shifting en masse to AI-centric architectures, but these designs require beefy data throughput to feed the hungry GPUs. AMD's single-socket server leverages the platform's 2TB of memory capacity and 128 PCIe lanes to provide copious connectivity. As seen above in Inventec's system, cramming six GPUs into a single-socket chassis is easy if you have enough connectivity and cores to push the workload. EPYC's 64 threads should suit those purposes nicely. The end result? Up to 100 TFLOPS in a single chassis. That's performance density at its finest.
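To put the 128-lane figure in perspective, here is a back-of-the-envelope lane budget. It is a sketch under a hypothetical configuration: every GPU gets a dedicated x16 link with no PCIe switches, which real boards may not do, and the helper names are ours, not AMD's or Inventec's.

```python
# Back-of-the-envelope PCIe lane budget for a single-socket EPYC GPU server.
# Assumption (hypothetical): each GPU gets a dedicated x16 link, no PCIe switches.

EPYC_LANES = 128  # PCIe lanes on a single-socket EPYC platform (per the article)


def spare_lanes(total_lanes: int, gpus: int, lanes_per_gpu: int = 16) -> int:
    """Return the lanes left over after wiring each GPU at full width."""
    needed = gpus * lanes_per_gpu
    if needed > total_lanes:
        raise ValueError(
            f"{gpus} GPUs at x{lanes_per_gpu} need {needed} lanes; "
            f"only {total_lanes} available"
        )
    return total_lanes - needed


def max_full_width_gpus(total_lanes: int, lanes_per_gpu: int = 16) -> int:
    """Largest GPU count that still gets full-width links."""
    return total_lanes // lanes_per_gpu


left = spare_lanes(EPYC_LANES, gpus=6)
print(f"Six x16 GPUs use {6 * 16} lanes, leaving {left} for NVMe, NICs, and the like")
print(f"Full-width GPU ceiling: {max_full_width_gpus(EPYC_LANES)}")
```

Under those assumptions, six GPUs consume 96 lanes and still leave 32 for storage and networking, which is the kind of headroom AMD is pitching for dense single-socket boxes.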

AMD Trusts Its Instincts

AMD's got quite a bit of graphics IP lying around, so it employs a range of its architectures, including Vega, Polaris, and Fiji, to target various segments of the AI space. The Vega-powered MI25 slots in as the workhorse for heavy, compute-intensive training workloads, while the Polaris-based MI6 is more of an all-rounder that can handle a variety of training and inference workloads. The Fiji-based MI8 handles the low-power tasks, such as lightweight inference, at a lower price point.

Our resident GPU expert, Chris Angelini, did the heavy lifting when AMD announced these cards in December 2016. Head over to his article for more coverage of the high-level view. AMD also has the Vega Frontier Edition in the hopper and recently teased us with performance details.


AMD's new Instinct solutions are headed to market in Q3, and the company has released more details ahead of the launch. Let's take a quick trip through AMD's stable of Instinct cards.

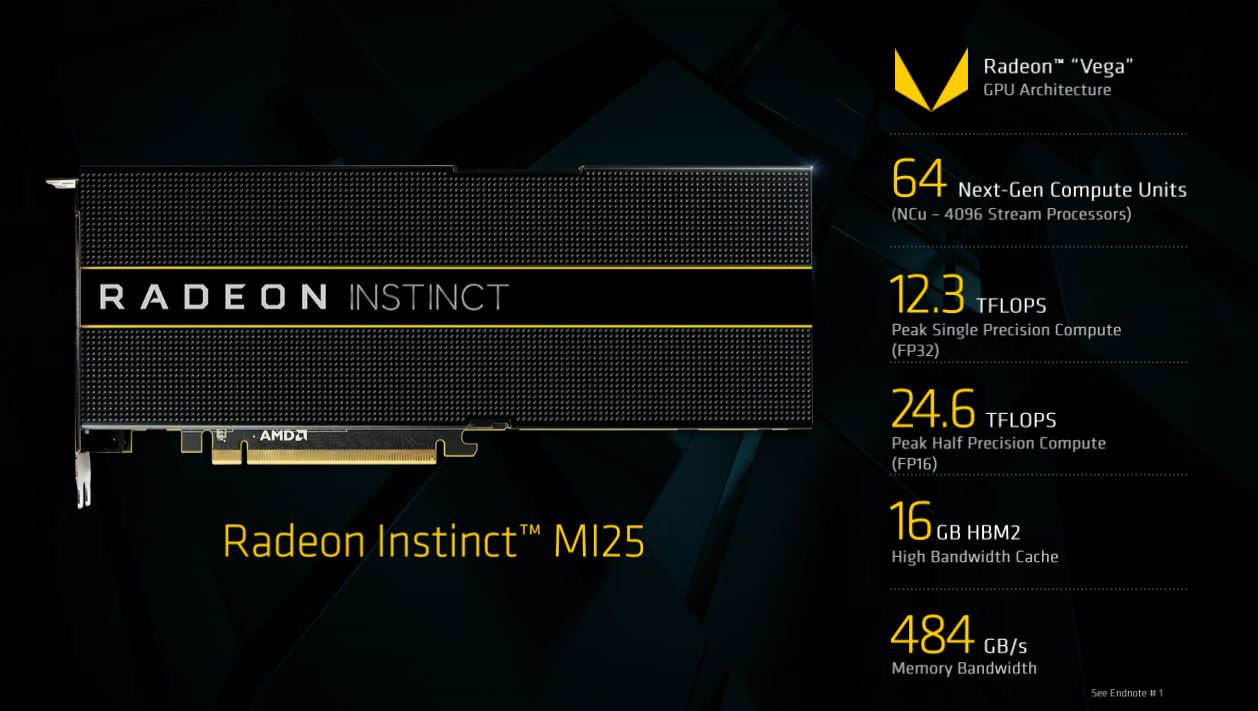

Vega - Radeon Instinct MI25

Vega powers onto the scene with GlobalFoundries' 14nm FinFETs in tow. Well, arguably, it's Samsung's process, which GlobalFoundries licensed. In either case, the MI25 bears down with a peak 24.6 TFLOPS of FP16 and 12.3 TFLOPS of FP32, delivered by its 64 compute units, which equate to 4,096 stream processors. A complementary 16GB of ECC HBM2 provides up to 484 GB/s of memory bandwidth. The MI25 will eventually square up with Nvidia's beastly Voltas.
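The headline numbers follow from a simple formula: peak FLOPS = stream processors × FLOPs per clock × clock speed. The sketch below assumes each stream processor retires one fused multiply-add (two FLOPs) per clock and that FP16 runs at twice the FP32 rate via packed math. AMD's figures above don't include a clock speed, so rather than quote one, the code backs the implied clock out of the 12.3 TFLOPS spec.

```python
# Peak-throughput arithmetic for the MI25, using the article's 64 CUs / 4,096 SPs.
# Assumptions: 2 FLOPs per SP per clock (one FMA), and FP16 at 2x the FP32 rate.

COMPUTE_UNITS = 64
SPS_PER_CU = 64
STREAM_PROCESSORS = COMPUTE_UNITS * SPS_PER_CU  # 4,096
FLOPS_PER_SP_PER_CLOCK = 2  # one fused multiply-add counts as two FLOPs


def peak_tflops(stream_processors: int, clock_ghz: float,
                flops_per_clock: int = FLOPS_PER_SP_PER_CLOCK) -> float:
    """SPs x FLOPs/clock x clock (GHz) gives GFLOPS; divide by 1,000 for TFLOPS."""
    return stream_processors * flops_per_clock * clock_ghz / 1000.0


def implied_clock_ghz(tflops: float, stream_processors: int,
                      flops_per_clock: int = FLOPS_PER_SP_PER_CLOCK) -> float:
    """Back out the clock speed that a quoted peak TFLOPS figure implies."""
    return tflops * 1000.0 / (stream_processors * flops_per_clock)


clock = implied_clock_ghz(12.3, STREAM_PROCESSORS)
fp32 = peak_tflops(STREAM_PROCESSORS, clock)
fp16 = 2 * fp32  # packed FP16 doubles throughput (assumption stated above)
print(f"{STREAM_PROCESSORS} SPs, implied clock ~{clock:.2f} GHz")
print(f"FP32 ~{fp32:.1f} TFLOPS, FP16 ~{fp16:.1f} TFLOPS")
```

The implied clock lands at roughly 1.5 GHz, and doubling the FP32 figure reproduces the 24.6 TFLOPS FP16 number, so the two specs are consistent with each other under these assumptions.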

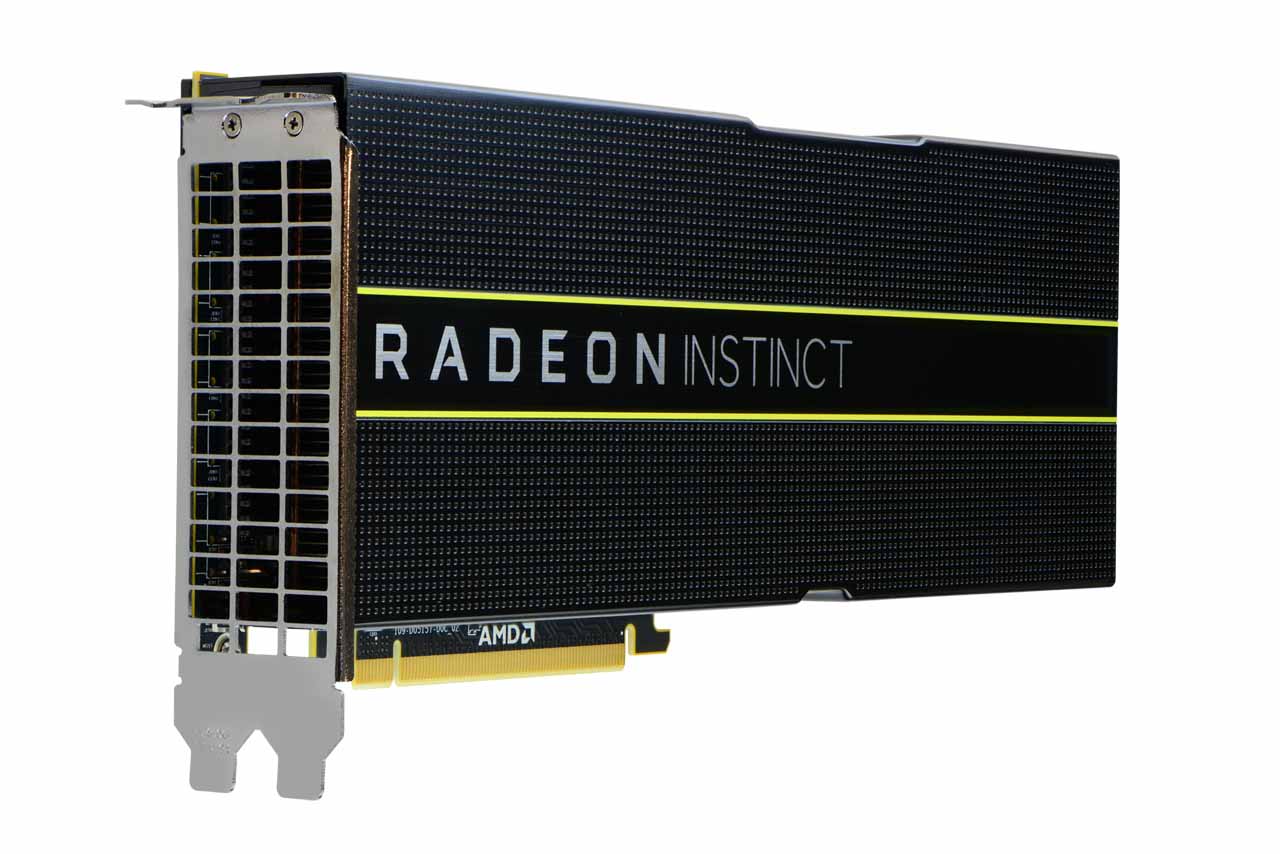

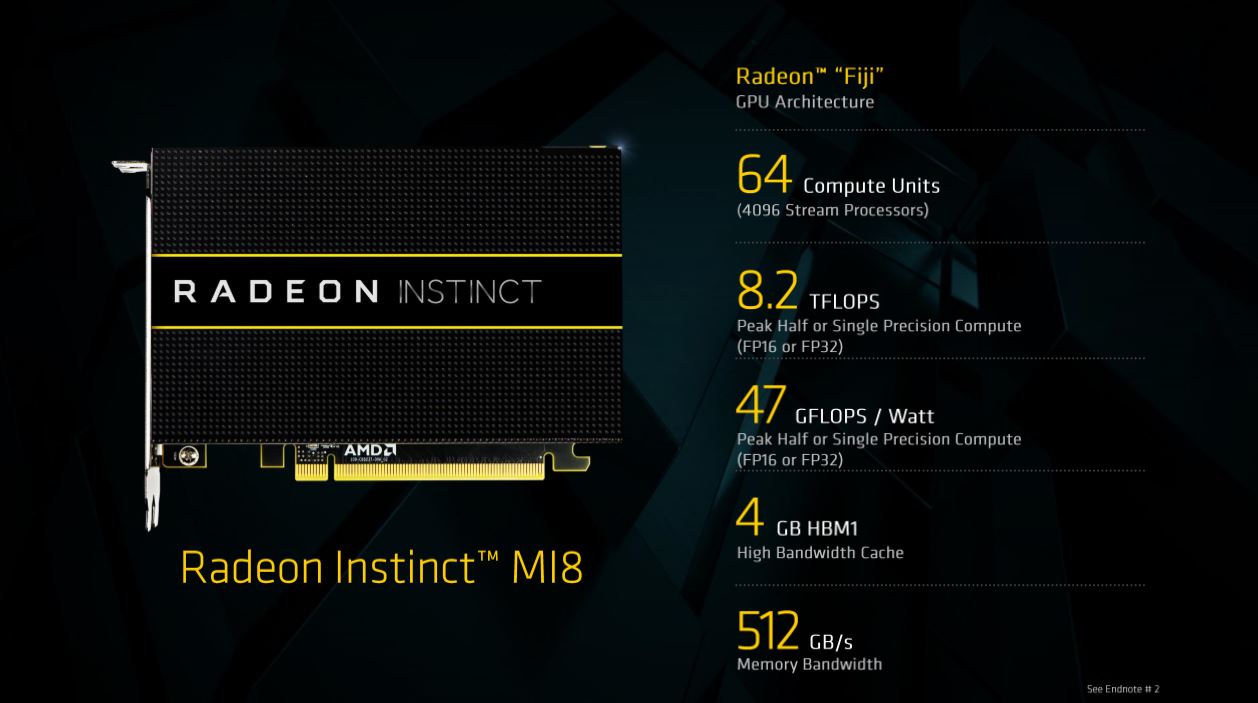

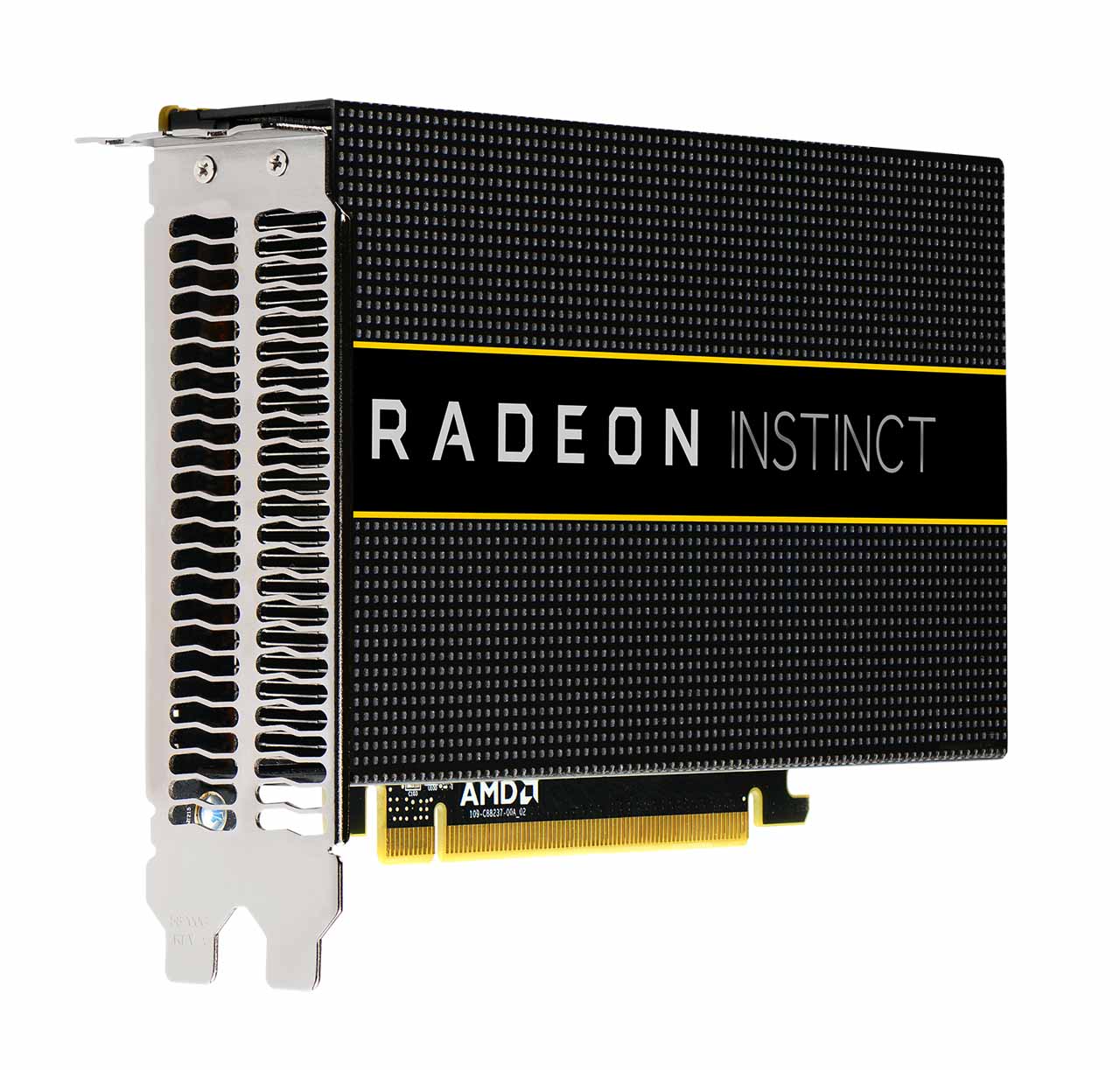

Fiji - Radeon Instinct MI8

AMD geared the Fiji-powered card to address HPC and inference workloads in a small form factor. It wields a peak 8.2 TFLOPS of FP16/FP32, draws 175W, and features 4GB of HBM. Power efficiency is the goal here; the MI8 provides 47 GFLOPS per watt.

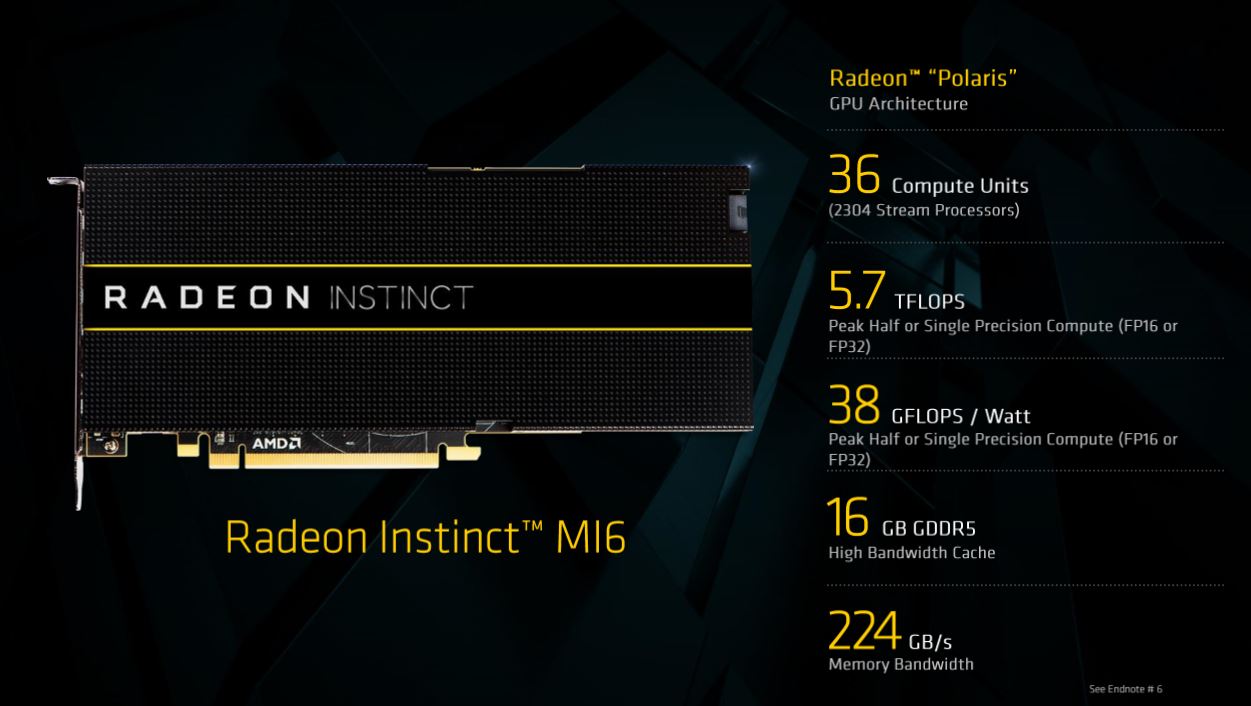

Polaris - Radeon Instinct MI6

The MI6 leverages the Polaris architecture and sips a mere 150W to provide a peak 5.7 TFLOPS of FP16/FP32. It steps down a notch from HBM to 16GB of GDDR5.
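AMD only quotes an efficiency figure for the MI8, but the same division works for the MI6. This sketch uses the peak TFLOPS and board power listed in the two sections above. Peak numbers overstate what real workloads sustain, so treat the results as upper bounds rather than measured efficiency.

```python
# Peak efficiency (GFLOPS per watt) from the article's spec figures.
# Peak throughput, not sustained, so these are upper bounds.

cards = {
    # name: (peak FP16/FP32 TFLOPS, board power in watts)
    "MI8 (Fiji)": (8.2, 175),
    "MI6 (Polaris)": (5.7, 150),
}


def gflops_per_watt(tflops: float, watts: float) -> float:
    """Convert TFLOPS to GFLOPS, then divide by board power."""
    return tflops * 1000.0 / watts


for name, (tflops, watts) in cards.items():
    print(f"{name}: {gflops_per_watt(tflops, watts):.1f} GFLOPS/W")
```

That works out to about 46.9 GFLOPS/W for the MI8, matching AMD's rounded 47, and 38.0 GFLOPS/W for the MI6.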

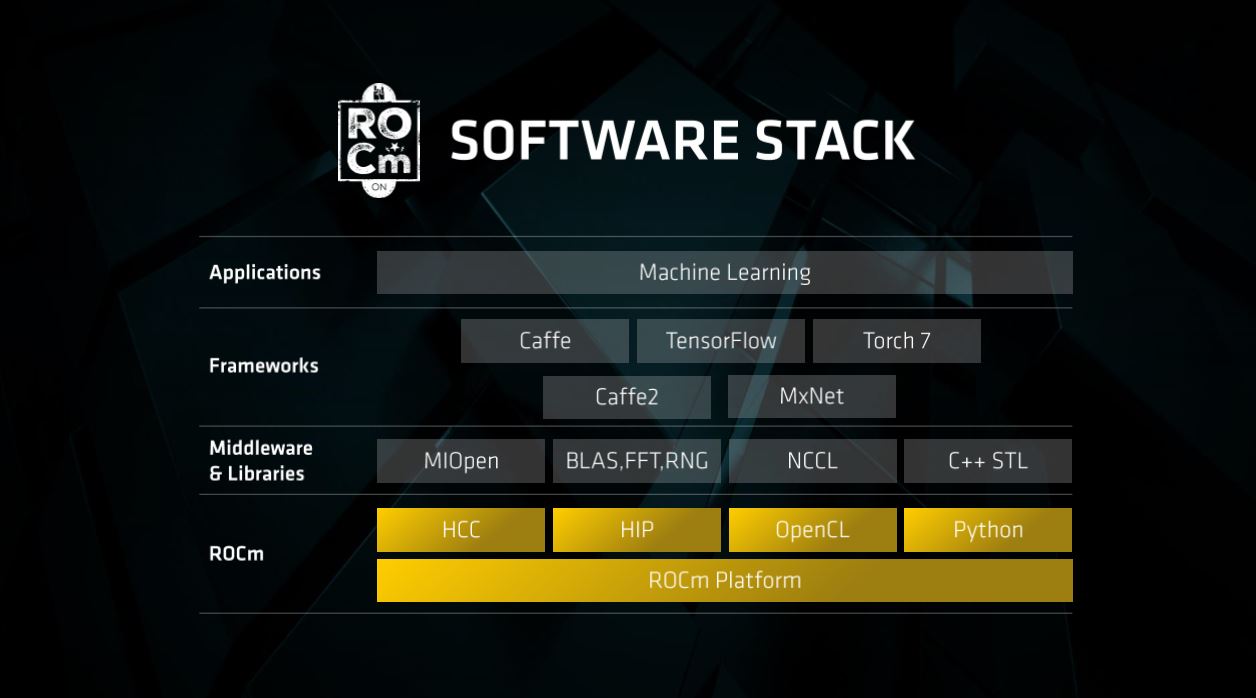

ROCm Sock 'Em

The entire lineup is worthless without tools, so AMD has designed a set of open source software tools. Here's the breakdown, courtesy of AMD:

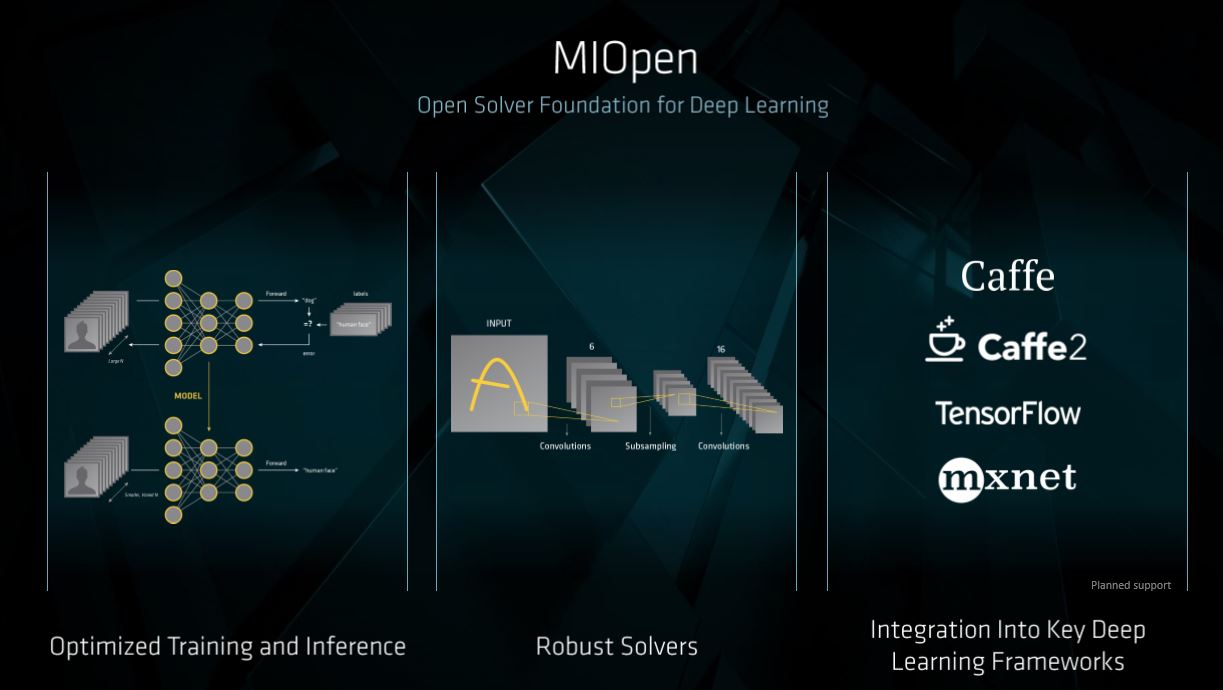

Planned for June 29th rollout, the ROCm 1.6 software platform with performance improvements and now support for MIOpen 1.0 is scalable and fully open source providing a flexible, powerful heterogeneous compute solution for a new class of hybrid Hyperscale and HPC-class system workloads. Comprised of an open-source Linux® driver optimized for scalable multi-GPU computing, the ROCm software platform provides multiple programming models, the HIP CUDA conversion tool, and support for GPU acceleration using the Heterogeneous Computing Compiler (HCC). The open-source MIOpen GPU-accelerated library is now available with the ROCm platform and supports machine intelligence frameworks including planned support for Caffe, TensorFlow and Torch.

Open The Things

The open-source component is key. The industry is weary of proprietary solutions and vendor lock-in. AMD is investing heavily in developing open tools for both the EPYC and Instinct lineups, which is encouraging. AMD even has budding initiatives in more far-reaching climes, such as the Gen-Z, OpenCAPI, and CCIX standards.

The industry awaits a wave of competitive x86 alternatives based on open architectures. AMD's EPYC has already landed, and the Instinct lineup isn't far behind. It ships in Q3 to AMD's partners, which include Boxx, Colfax, Exxact Corporation, Gigabyte, Inventec and Supermicro, among others.

Paul Alcorn is the Editor-in-Chief for Tom's Hardware US. He also writes news and reviews on CPUs, storage, and enterprise hardware.


Snipergod87

> I wonder if these, too, will be gobbled up by cryptocurrency miners?

At what they will likely cost, it would take years, if not more, to get back your investment.

redgarl

It's interesting that AMD can focus its silicon strategy on accomplishing a single goal, while Nvidia or Intel need to form partnerships. I am not even sure whether Nvidia was looking at AMD's CPU architecture to develop its products.

AMD believes in the multi-GPU environment, while Nvidia is dropping multi-GPU architectures more and more.

MASOUTH

Completely OT, and I know it's correct, but... I'm not sure I will ever be comfortable with the plural of axis.

withoutfences

I doubt that cryptocurrency miners will pick these up. For any real return on investment, CC mining is entirely an ASIC market now, given the current difficulty.

Even for speculative CC mining, ASICs are still the ticket, since you need to mine hard for the first hour, and beyond that, who cares.

I see these in the low-cost server market, be it mobile big-data computational systems, filling the gap between the low-power single-board server market and institutional systems (which these will find a home in).

Now I just need to work for a company that is interested in upgrading its hardware.

Our Dell PowerEdge 2850s and 2950s are getting long in the tooth.

HideOut

It won't take years to get it back, because most of us can't get them. The crypto purchases are made by huge companies, mostly in China. They buy pallets of these at a time :(

Josh_killaknott27

People keep telling me to sell one of the MSI Gaming RX 480s I have in a CrossFire build; apparently they are going for 350 nowadays.

I just really want Vega to be a competitive performer, and I have been holding out.

jimmysmitty

> I wonder if these, too, will be gobbled up by cryptocurrency miners?

GPUs have been worthless for cryptomining since ASICs hit the market and blew GPUs out of the water. It was after the R9 390 went. After the ASICs hit, the old R9 390/390Xs flooded the market, causing prices to drop and making them nearly worthless.

> It's interesting that AMD can focus their silicon strategy to accomplish a single goal while Nvidia or Intel needs to do partnership. I am not even sure if Nvidia was looking at AMD CPU architecture to develop their products.
>
> AMD believe in the multi-GPU environment while Nvidia is dropping the Multi-GPU architecture more and more.

What partnerships do Intel and Nvidia need to have, and with whom?

Last I checked, Intel works on its process tech and uArchs itself. In fact, Intel currently has five consumer uArchs in the works that should be out in the next year, while AMD has Ryzen and plans Zen 2, just an enhanced Zen, in a year or so.

As well, I have not seen anything Intel has done in the server market space with a "partnership." They have their own CPU and their own accelerator (Knights Landing) that they develop with their own technologies.

bit_user

> It's interesting that AMD can focus their silicon strategy to accomplish a single goal while Nvidia or Intel needs to do partnership. I am not even sure if Nvidia was looking at AMD CPU architecture to develop their products.

Nvidia has now designed two generations of custom ARM cores. So far, they've targeted mobile. In the future...? It's true that they recently partnered with IBM.

Intel has Xeon Phi and the FPGA accelerator blocks in some of their other Xeon CPUs. I think they're trying to tackle parts of the HPC and AI market in their own way. They don't exactly preclude customers from using their CPUs with other GPUs, but I haven't heard of any partnerships...

> AMD believe in the multi-GPU environment while Nvidia is dropping the Multi-GPU architecture more and more.

Have you heard of NVLink? What's that all about, then?

You might be interested in checking out their DGX line of multi-GPU boxes.