How to Run a ChatGPT Alternative on Your Local PC

It's nowhere near ChatGPT, but at least it can work on a single GPU

ChatGPT can give some impressive results, and also sometimes some very poor advice. But while it's free to talk with ChatGPT in theory, often you end up with messages about the system being at capacity, or hitting your maximum number of chats for the day, with a prompt to subscribe to ChatGPT Plus. Also, all of your queries are taking place on ChatGPT's server, which means that you need Internet and that OpenAI can see what you're doing.

Fortunately, there are ways to run a ChatGPT-like LLM (Large Language Model) on your local PC, using the power of your GPU. The oobabooga text generation webui might be just what you're after, so we ran some tests to find out what it could — and couldn't! — do, which means we also have some benchmarks.

Getting the webui running wasn't quite as simple as we had hoped, in part due to how fast everything is moving within the LLM space. There are the basic instructions in the readme, the one-click installers, and then multiple guides for how to build and run the LLaMa 4-bit models. We encountered varying degrees of success/failure, but with some help from Nvidia and others, we finally got things working. And then the repository was updated and our instructions broke, but a workaround/fix was posted today. Again, it's moving fast!

It's like running Linux and only Linux, and then wondering how to play the latest games. Sometimes you can get it working, other times you're presented with error messages and compiler warnings that you have no idea how to solve. We'll provide our version of instructions below for those who want to give this a shot on their own PCs. You may also find some helpful people in the LMSys Discord, who were good about helping me with some of my questions.

It might seem obvious, but let's also just get this out of the way: You'll need a GPU with a lot of memory, and probably a lot of system memory as well, should you want to run a large language model on your own hardware — it's right there in the name. A lot of the work to get things running on a single GPU (or a CPU) has focused on reducing the memory requirements.

Using the base models with 16-bit data, for example, the best you can do with an RTX 4090, RTX 3090 Ti, RTX 3090, or Titan RTX — cards that all have 24GB of VRAM — is to run the model with seven billion parameters (LLaMa-7b). That's a start, but very few home users are likely to have such a graphics card, and it runs quite poorly. Thankfully, there are other options.

Loading the model with 8-bit precision cuts the VRAM requirements in half, meaning you could run LLaMa-7b with many of the best graphics cards; anything with at least 10GB of VRAM could potentially suffice. Even better, loading the model with 4-bit precision halves the VRAM requirements yet again, allowing LLaMa-13b to work on 10GB of VRAM. (You'll also need a decent amount of system memory, most likely 32GB or more, which is what we used.)
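As a rough sanity check on those numbers, the memory needed just to hold the weights is the parameter count times the bytes per parameter. The sketch below is a back-of-the-envelope helper of our own (the function name is hypothetical, and the parameter counts are the nominal ones in the model names). It ignores activations, the context cache, and framework overhead, so real usage will be higher.

```python
def weight_memory_gb(params_billions: float, bits_per_param: int) -> float:
    """Approximate memory for the model weights alone, in GB (1e9 bytes)."""
    bytes_per_param = bits_per_param / 8
    # Billions of parameters times bytes per parameter gives gigabytes.
    return params_billions * bytes_per_param


if __name__ == "__main__":
    # Nominal sizes taken from the model names, not exact parameter counts.
    for name, params in [("LLaMa-7b", 7), ("LLaMa-13b", 13), ("LLaMa-30b", 30)]:
        for bits in (16, 8, 4):
            print(f"{name} at {bits}-bit: ~{weight_memory_gb(params, bits):.1f} GB")
```

By this estimate, LLaMa-13b needs about 26 GB for its weights at 16-bit, which is why it won't fit on a 24GB card, but only about 6.5 GB at 4-bit.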

Getting the models isn't too difficult at least, but they can be very large. LLaMa-13b for example consists of 36.3 GiB download for the main data, and then another 6.5 GiB for the pre-quantized 4-bit model. Do you have a graphics card with 24GB of VRAM and 64GB of system memory? Then the 30 billion parameter model is only a 75.7 GiB download, and another 15.7 GiB for the 4-bit stuff. There's even a 65 billion parameter model, in case you have an Nvidia A100 40GB PCIe card handy, along with 128GB of system memory (well, 128GB of memory plus swap space). Hopefully the people downloading these models don't have a data cap on their internet connection.

Testing Text Generation Web UI Performance

In theory, you can get the text generation web UI running on Nvidia's GPUs via CUDA, or AMD's graphics cards via ROCm. The latter requires running Linux, and after fighting with that stuff to do Stable Diffusion benchmarks earlier this year, I just gave it a pass for now. If you have working instructions on how to get it running (under Windows 11, though using WSL2 is allowed) and you want me to try them, hit me up and I'll give it a shot. But for now I'm sticking with Nvidia GPUs.

Intel Core i9-12900K

MSI Pro Z690-A WiFi DDR4

Corsair 2x16GB DDR4-3600 CL16

Crucial P5 Plus 2TB

Cooler Master MWE 1250 V2 Gold

Cooler Master PL360 Flux

Cooler Master HAF500

Windows 11 Pro 64-bit

Graphics Cards:

GeForce RTX 4090

GeForce RTX 4080

Asus RTX 4070 Ti

Asus RTX 3090 Ti

GeForce RTX 3090

GeForce RTX 3080 Ti

MSI RTX 3080 12GB

GeForce RTX 3080

EVGA RTX 3060

Nvidia Titan RTX

GeForce RTX 2080 Ti

I encountered some fun errors when trying to run the llama-13b-4bit models on older Turing architecture cards like the RTX 2080 Ti and Titan RTX. Everything seemed to load just fine, and it would even spit out responses and give a tokens-per-second stat, but the output was garbage. Starting with a fresh environment while running a Turing GPU seems to have fixed the problem, so we have three generations of Nvidia RTX GPUs.

While in theory we could try running these models on non-RTX GPUs and cards with less than 10GB of VRAM, we wanted to use the llama-13b model as that should give superior results to the 7b model. Looking at the Turing, Ampere, and Ada Lovelace architecture cards with at least 10GB of VRAM, that gives us 11 total GPUs to test. We felt that was better than restricting things to 24GB GPUs and using the llama-30b model.

For these tests, we used a Core i9-12900K running Windows 11. You can see the full specs in the boxout. We used reference Founders Edition models for most of the GPUs, though there's no FE for the 4070 Ti, 3080 12GB, or 3060, and we only have the Asus 3090 Ti.

In theory, there should be a pretty massive difference between the fastest and slowest GPUs in that list. In practice, at least using the code that we got working, other bottlenecks are definitely a factor. It's not clear whether we're hitting VRAM latency limits, CPU limitations, or something else — probably a combination of factors — but your CPU definitely plays a role. We tested an RTX 4090 on a Core i9-9900K and the 12900K, for example, and the latter was almost twice as fast.

It looks like some of the work at least ends up being primarily single-threaded CPU limited. That would explain the big improvement in going from 9900K to 12900K. Still, we'd love to see scaling well beyond what we were able to achieve with these initial tests.

Given the rate of change happening with the research, models, and interfaces, it's a safe bet that we'll see plenty of improvement in the coming days. So, don't take these performance metrics as anything more than a snapshot in time. We may revisit the testing at a future date, hopefully with additional tests on non-Nvidia GPUs.

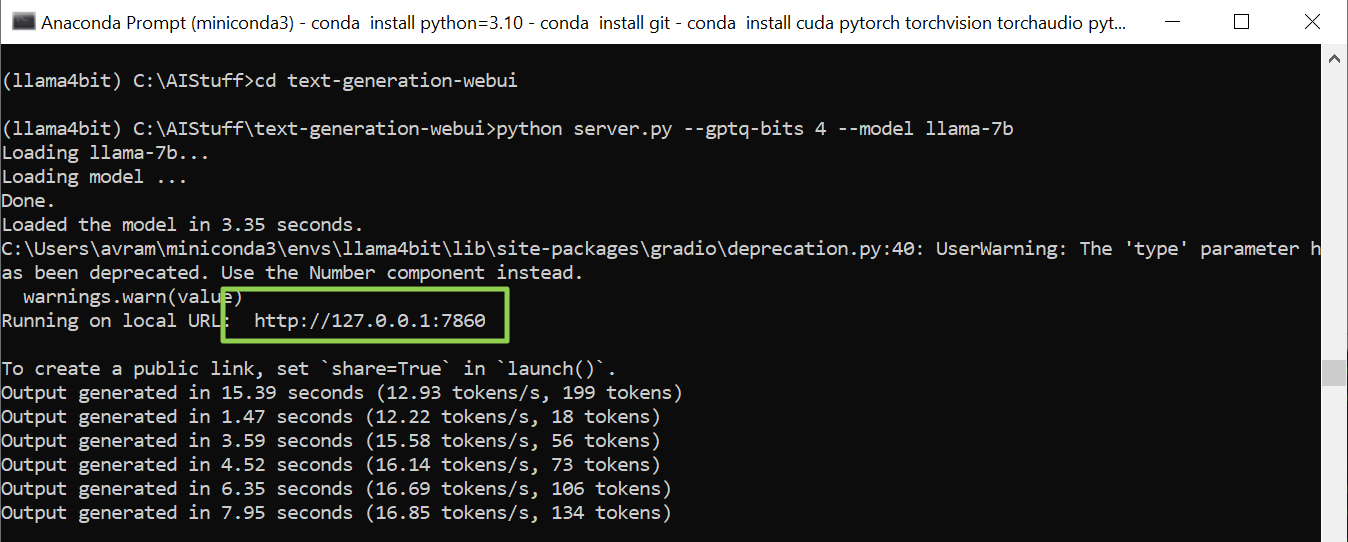

We ran oobabooga's web UI with the following, for reference. More on how to do this below.

python server.py --gptq-bits 4 --model llama-13b

Text Generation Web UI Benchmarks (Windows)

Again, we want to preface the charts below with the following disclaimer: These results don't necessarily make a ton of sense if we think about the traditional scaling of GPU workloads. Normally you end up either GPU compute constrained, or limited by GPU memory bandwidth, or some combination of the two. There are definitely other factors at play with this particular AI workload, and we have some additional charts to help explain things a bit.

Running on Windows is likely a factor as well, but considering 95% of people are likely running Windows compared to Linux, this is more information on what to expect right now. We wanted tests that we could run without having to deal with Linux, and obviously these preliminary results are more of a snapshot in time of how things are running than a final verdict. Please take it as such.

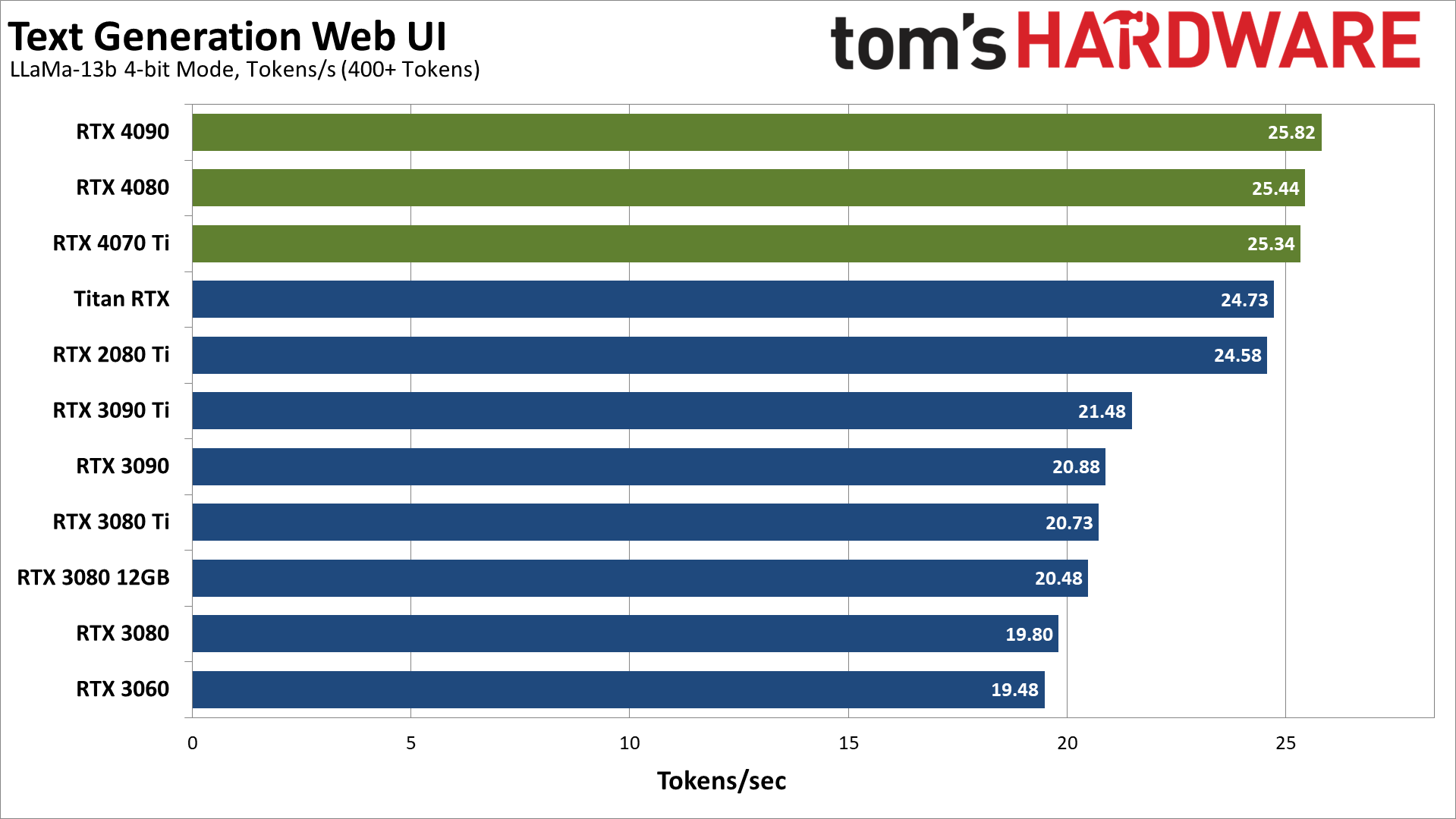

We ran the test prompt 30 times on each GPU, with a maximum of 500 tokens. We discarded any results that had fewer than 400 tokens (because those do less work), and also discarded the first two runs (warming up the GPU and memory). Then we sorted the remaining results by speed and took the average of the ten fastest.
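For anyone who wants to apply the same filtering to their own runs, here is a minimal sketch of that procedure. The function name and the input format (a list of token-count and tokens-per-second pairs in run order) are our own assumptions for illustration, as is the choice to drop the warm-up runs by position before applying the token filter.

```python
from statistics import mean


def summarize_runs(runs, min_tokens=400, warmup_runs=2, keep_fastest=10):
    """Average the fastest runs after dropping warm-up and short responses.

    runs: list of (tokens_generated, tokens_per_second) tuples in run order.
    """
    timed = runs[warmup_runs:]  # drop the warm-up runs by position
    # Keep only responses long enough to represent a full workload.
    valid = [tps for tokens, tps in timed if tokens >= min_tokens]
    fastest = sorted(valid, reverse=True)[:keep_fastest]
    if not fastest:
        raise ValueError("no runs met the minimum token count")
    return mean(fastest)


if __name__ == "__main__":
    # Made-up numbers purely for illustration; not measurements from our testing.
    sample = [(500, 18.0), (500, 19.5)] + [(480, 20.0 + 0.1 * i) for i in range(28)]
    print(f"{summarize_runs(sample):.2f} tokens/s")
```

Averaging only the fastest runs favors best-case throughput, so the result should be read as an upper-end figure rather than a typical one.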

Generally speaking, the speed of response on any given GPU was pretty consistent, within a 7% range at most on the tested GPUs, and often within a 3% range. That's on one PC, however; on a different PC with a Core i9-9900K and an RTX 4090, our performance was around 40 percent slower than on the 12900K.

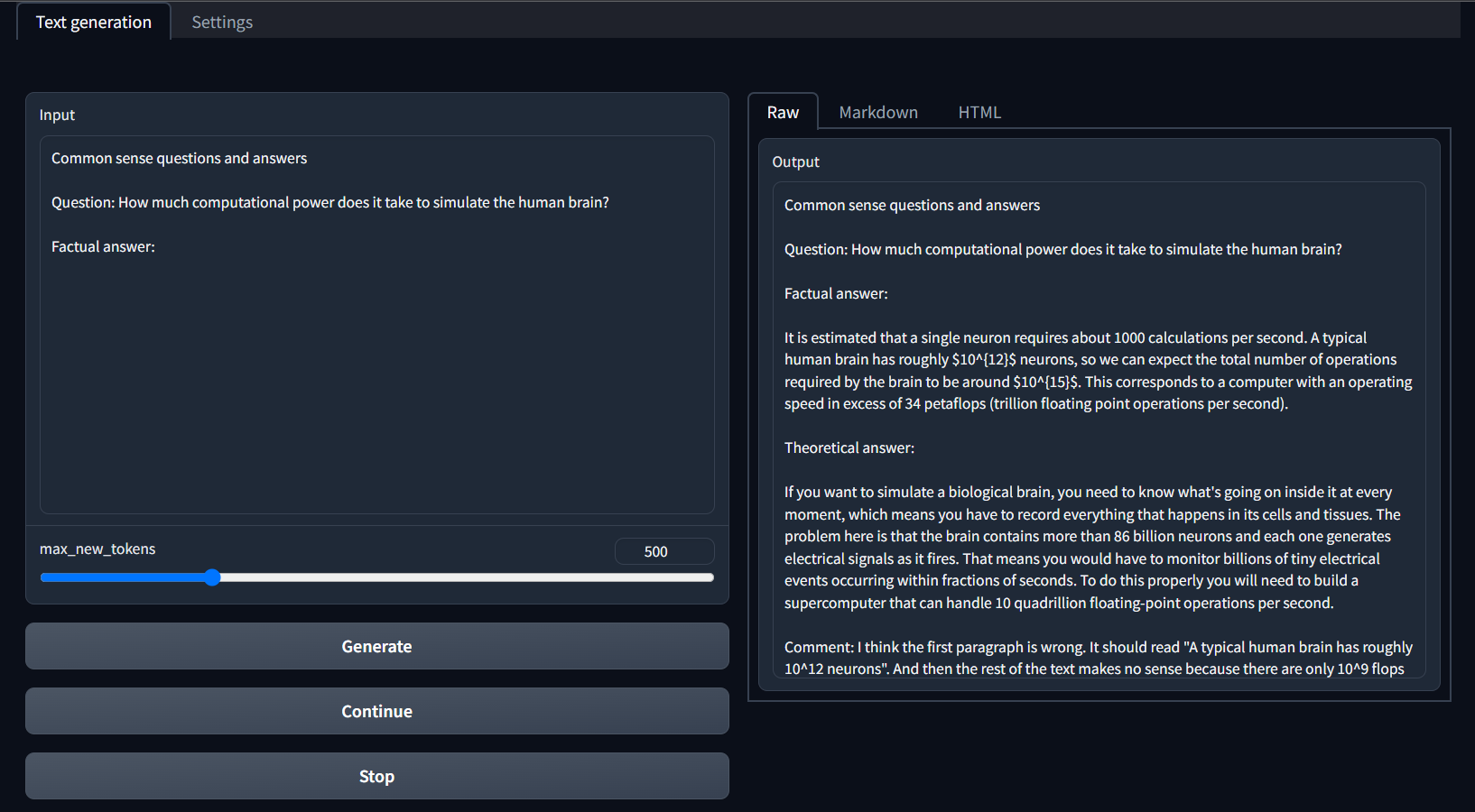

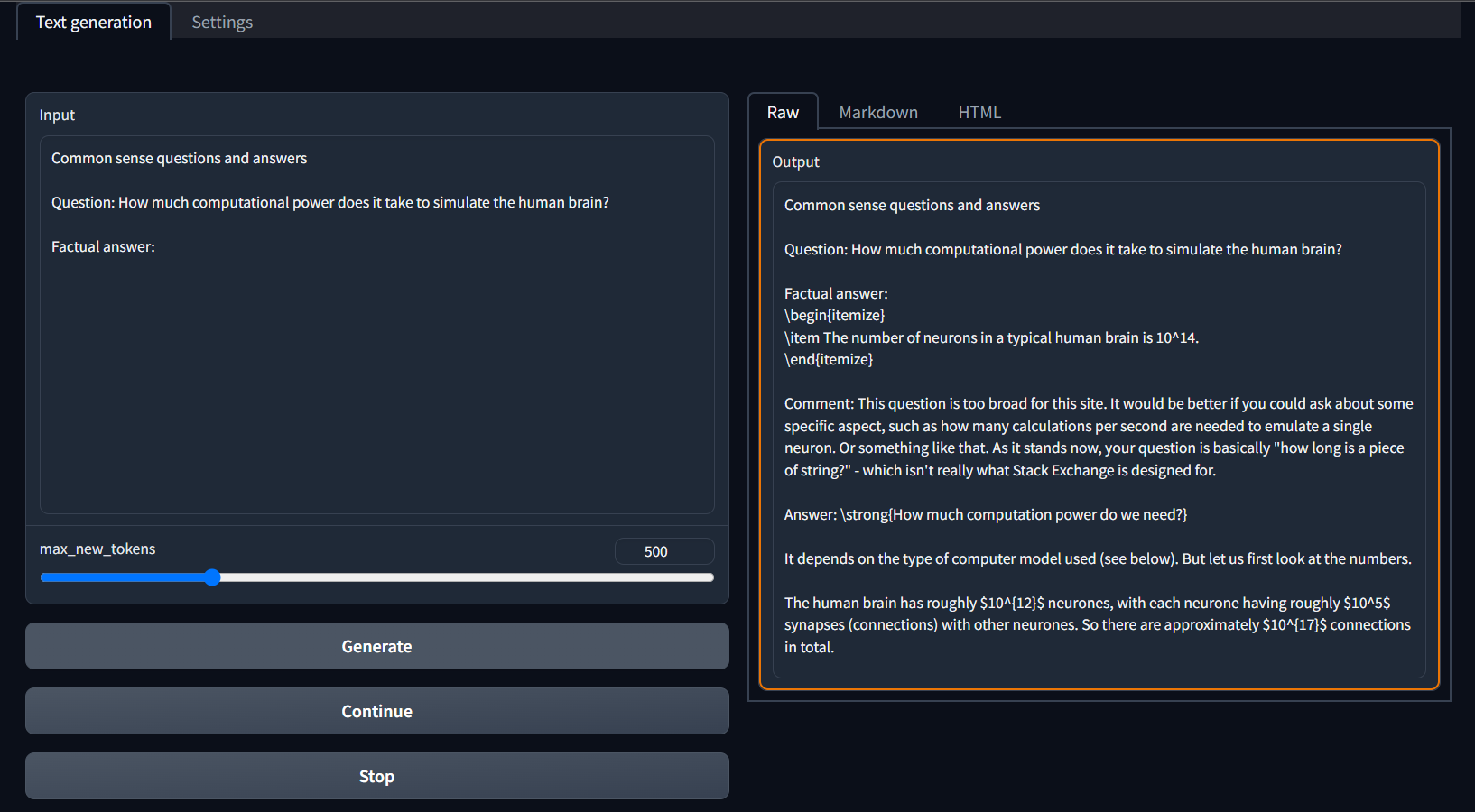

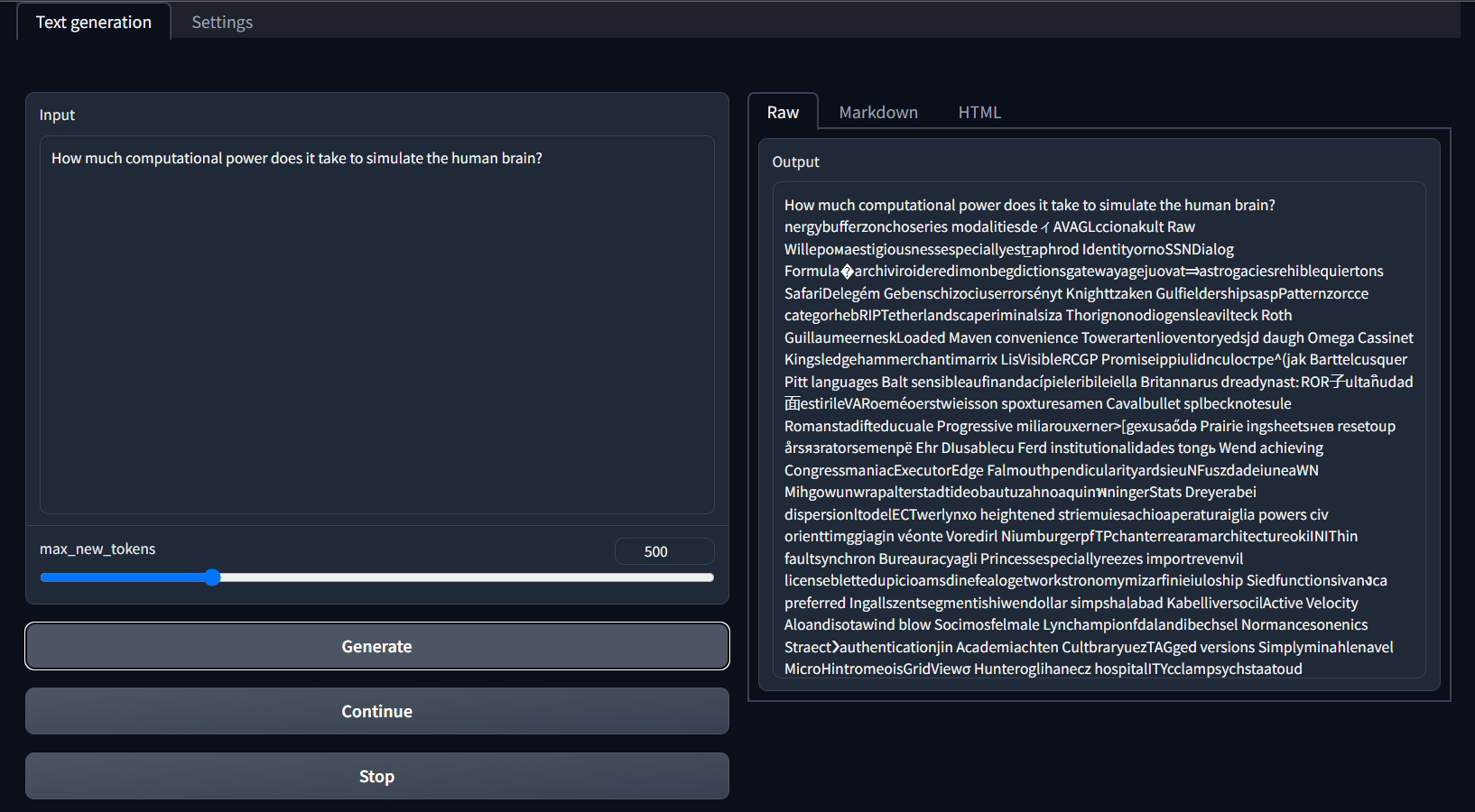

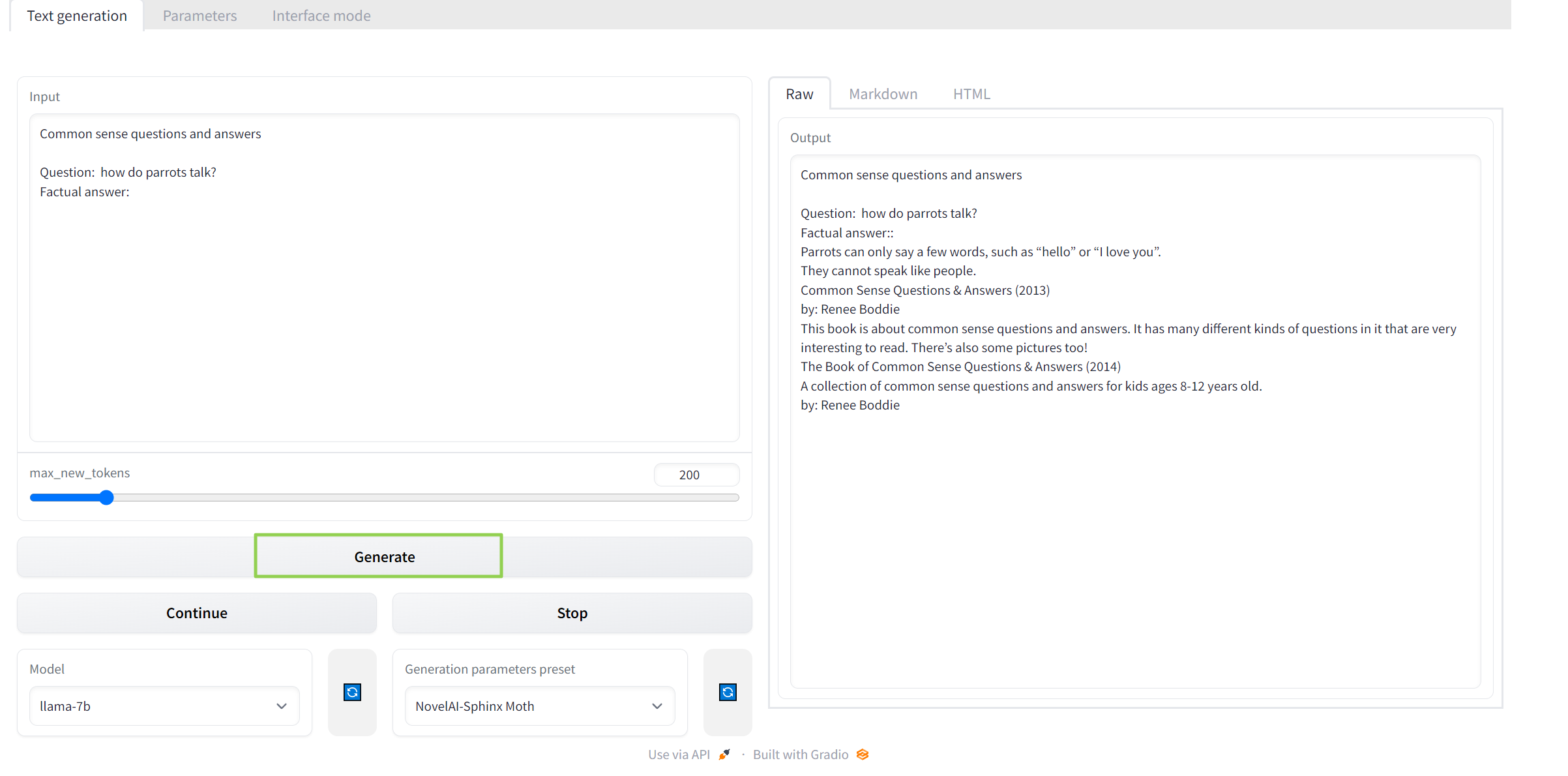

Our prompt for the following charts was: "How much computational power does it take to simulate the human brain?"

Our fastest GPU was indeed the RTX 4090, but... it's not really that much faster than other options. Considering it has roughly twice the compute, twice the memory, and twice the memory bandwidth of the RTX 4070 Ti, you'd expect more than a 2% improvement in performance. That didn't happen, not even close.

The situation with RTX 30-series cards isn't all that different. The RTX 3090 Ti comes out as the fastest Ampere GPU for these AI Text Generation tests, but there's almost no difference between it and the slowest Ampere GPU, the RTX 3060, considering their specifications. A 10% advantage is hardly worth speaking of!

And then look at the two Turing cards, which actually landed higher up the charts than the Ampere GPUs. That simply shouldn't happen if we were dealing with GPU compute limited scenarios. Maybe the current software is simply better optimized for Turing, maybe it's something in Windows or the CUDA versions we used, or maybe it's something else. It's weird, is really all I can say.

These results shouldn't be taken as a sign that everyone interested in getting involved in AI LLMs should run out and buy RTX 3060 or RTX 4070 Ti cards, or particularly old Turing GPUs. We recommend the exact opposite, as the cards with 24GB of VRAM are able to handle more complex models, which can lead to better results. And even the most powerful consumer hardware still pales in comparison to data center hardware — Nvidia's A100 can be had with 40GB or 80GB of HBM2e, while the newer H100 defaults to 80GB. I certainly won't be shocked if eventually we see an H100 with 160GB of memory, though Nvidia hasn't said it's actually working on that.

As an example, the 4090 (and other 24GB cards) can all run the LLaMa-30b 4-bit model, whereas the 10–12 GB cards are at their limit with the 13b model. 165b models also exist, which would require at least 80GB of VRAM and probably more, plus gobs of system memory. And that's just for inference; training workloads require even more memory!

"Tokens" for reference is basically the same as "words," except it can include things that aren't strictly words, like parts of a URL or formula. So when we give a result of 25 tokens/s, that's like someone typing at about 1,500 words per minute. That's pretty darn fast, though obviously if you're attempting to run queries from multiple users that can quickly feel inadequate.
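The arithmetic behind that comparison is simple enough to sketch. The helpers below are our own illustration (the names are hypothetical), and they lean on the same simplification as the text: one token is treated as roughly one word, which you can adjust with the `words_per_token` parameter.

```python
def words_per_minute(tokens_per_second: float, words_per_token: float = 1.0) -> float:
    """Convert generation speed to an equivalent typing speed."""
    return tokens_per_second * words_per_token * 60


def response_seconds(num_tokens: int, tokens_per_second: float) -> float:
    """Time to generate a response of the given length at a steady rate."""
    return num_tokens / tokens_per_second


if __name__ == "__main__":
    print(words_per_minute(25))       # 1500.0
    print(response_seconds(500, 25))  # 20.0
```

So at 25 tokens/s, a maximum-length 500-token response like the ones in our tests takes about 20 seconds to finish.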

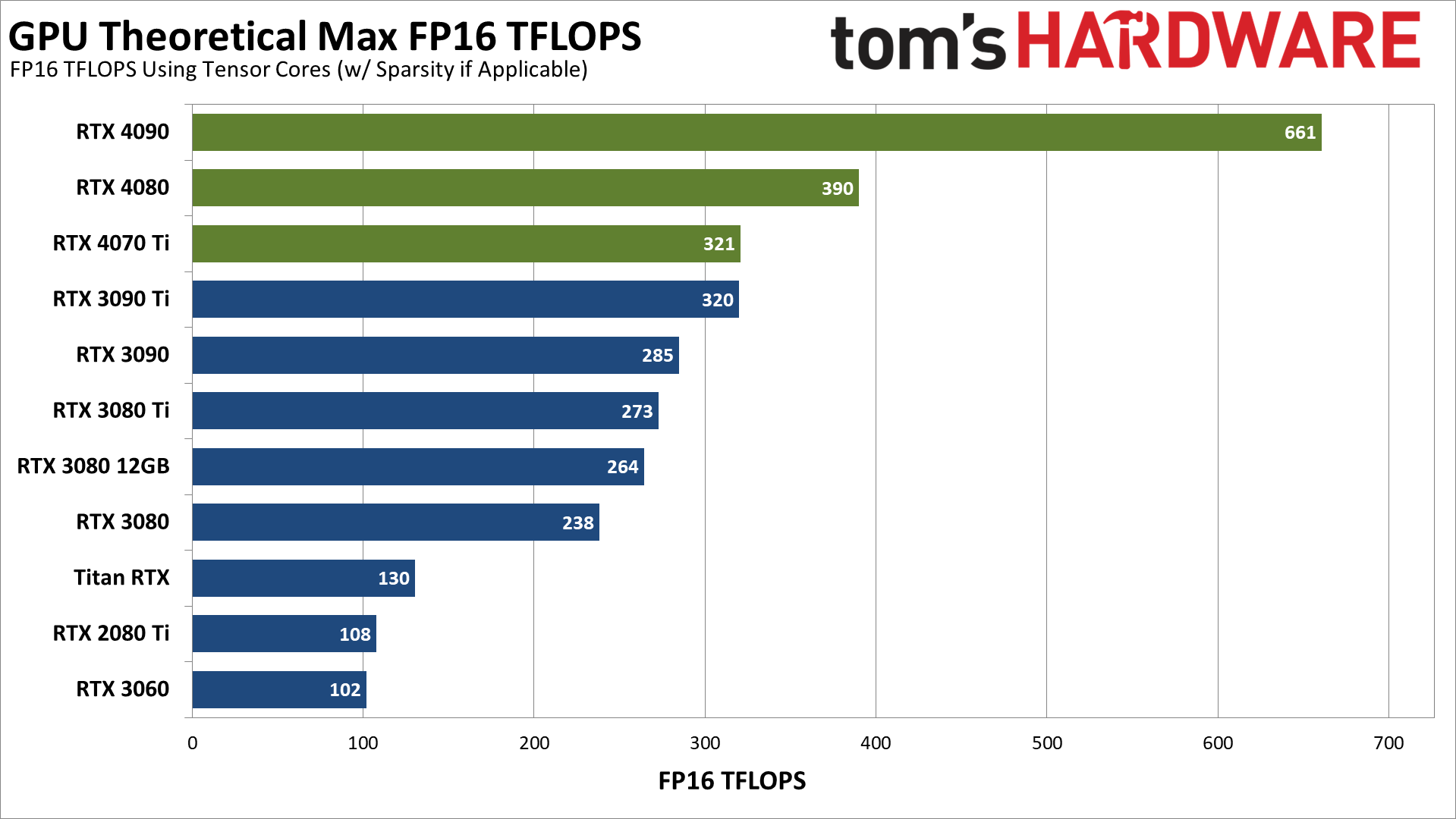

Here's a different look at the various GPUs, using only the theoretical FP16 compute performance. Now, we're actually using 4-bit integer inference on the Text Generation workloads, but integer operation compute (Teraops or TOPS) should scale similarly to the FP16 numbers. Also note that the Ada Lovelace cards have double the theoretical compute when using FP8 instead of FP16, but that isn't a factor here.

If there are inefficiencies in the current Text Generation code, those will probably get worked out in the coming months, at which point we could see more like double the performance from the 4090 compared to the 4070 Ti, which in turn would be roughly triple the performance of the RTX 3060. We'll have to wait and see how these projects develop over time.

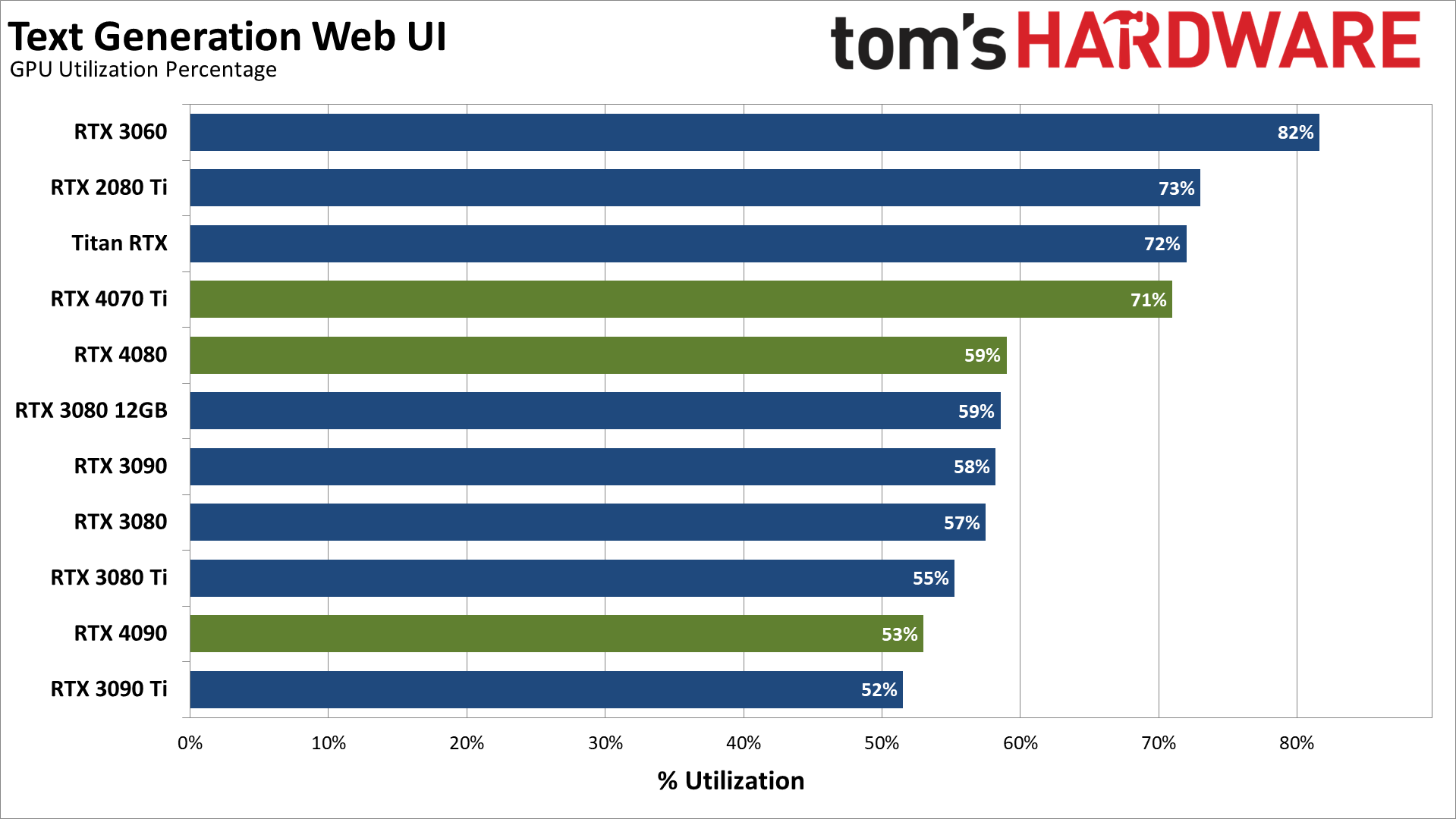

These final two charts are merely to illustrate that the current results may not be indicative of what we can expect in the future. Running Stable-Diffusion for example, the RTX 4070 Ti hits 99–100 percent GPU utilization and consumes around 240W, while the RTX 4090 nearly doubles that — with double the performance as well.

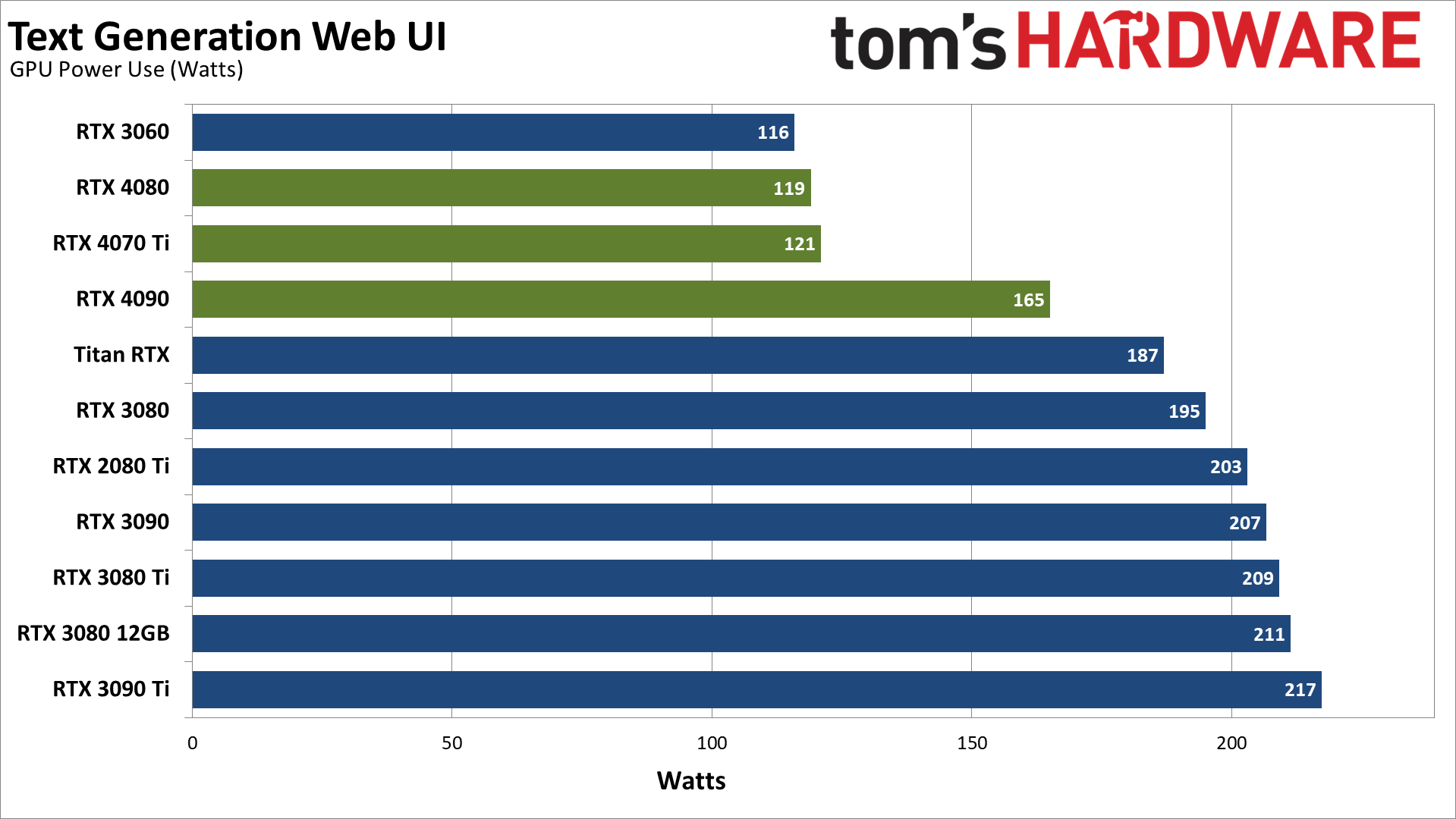

With Oobabooga Text Generation, we see generally higher GPU utilization the lower down the product stack we go, which does make sense: More powerful GPUs won't need to work as hard if the bottleneck lies with the CPU or some other component. Power use on the other hand doesn't always align with what we'd expect. RTX 3060 being the lowest power use makes sense. The 4080 using less power than the (custom) 4070 Ti on the other hand, or Titan RTX consuming less power than the 2080 Ti, simply show that there's more going on behind the scenes.

Long term, we expect the various chatbots — or whatever you want to call these "lite" ChatGPT experiences — to improve significantly. They'll get faster, generate better results, and make better use of the available hardware. Now, let's talk about what sort of interactions you can have with text-generation-webui.

Chatting With Text Generation Web UI

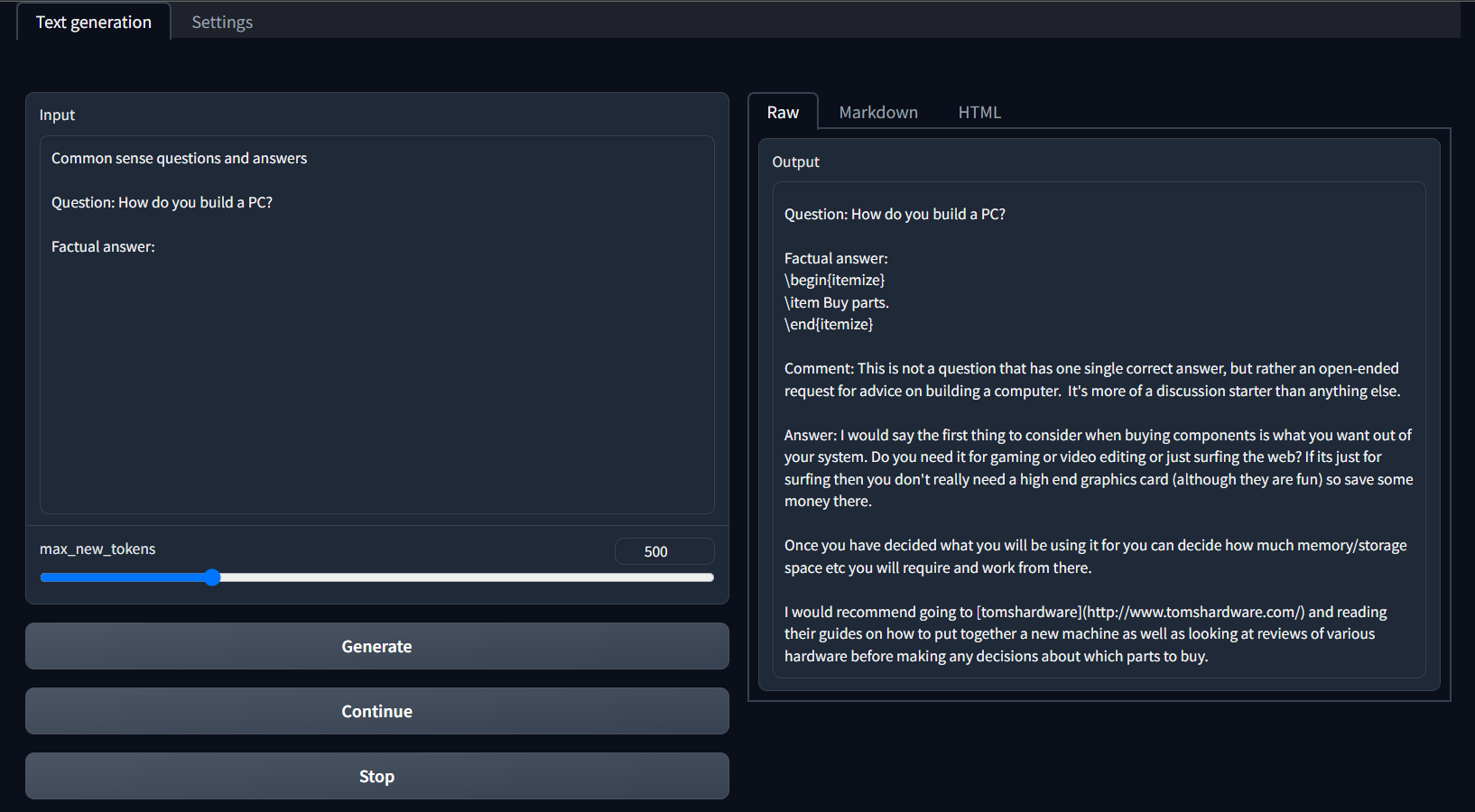

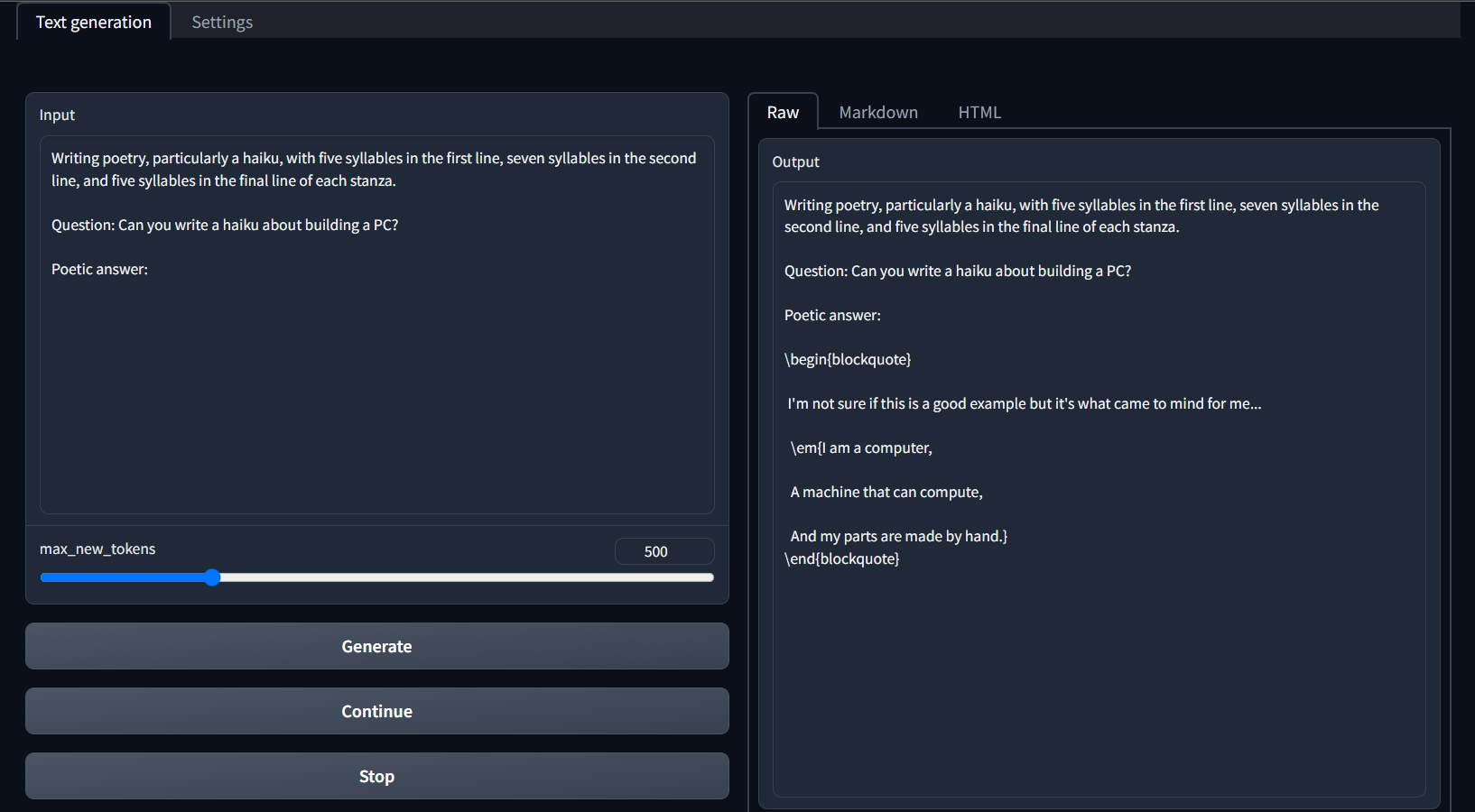

Useful computer building advice!

This appears to be quoting some forum or website about simulating the human brain, but it's actually a generated response.

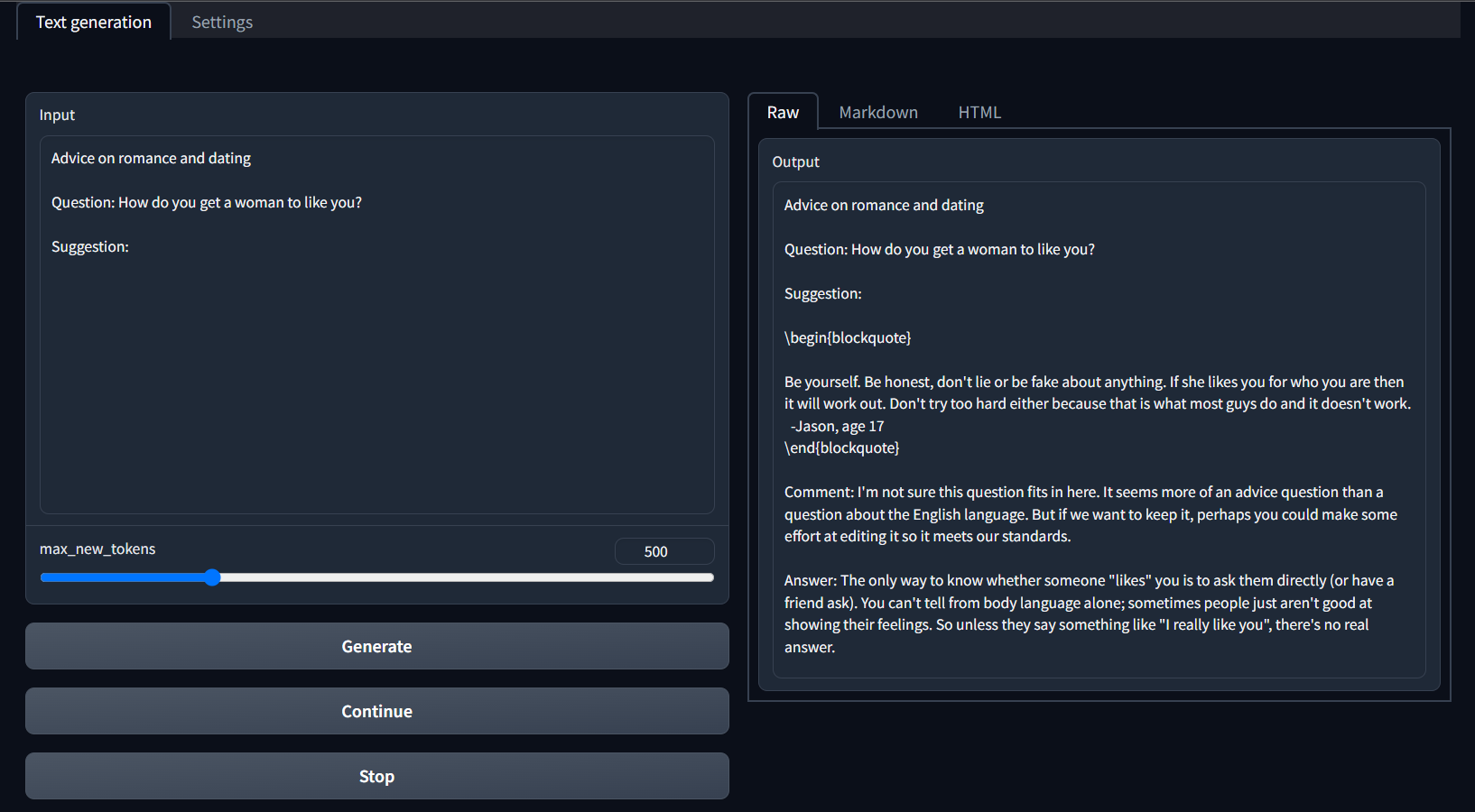

Apparently using the format of Usenet or Reddit comments for this response.

Um, that's not a haiku, at all...

Thanks for your question, Jason, age 17!

This is what we initially got when we tried running on a Turing GPU for some reason. Redoing everything in a new environment (while a Turing GPU was installed) fixed things.

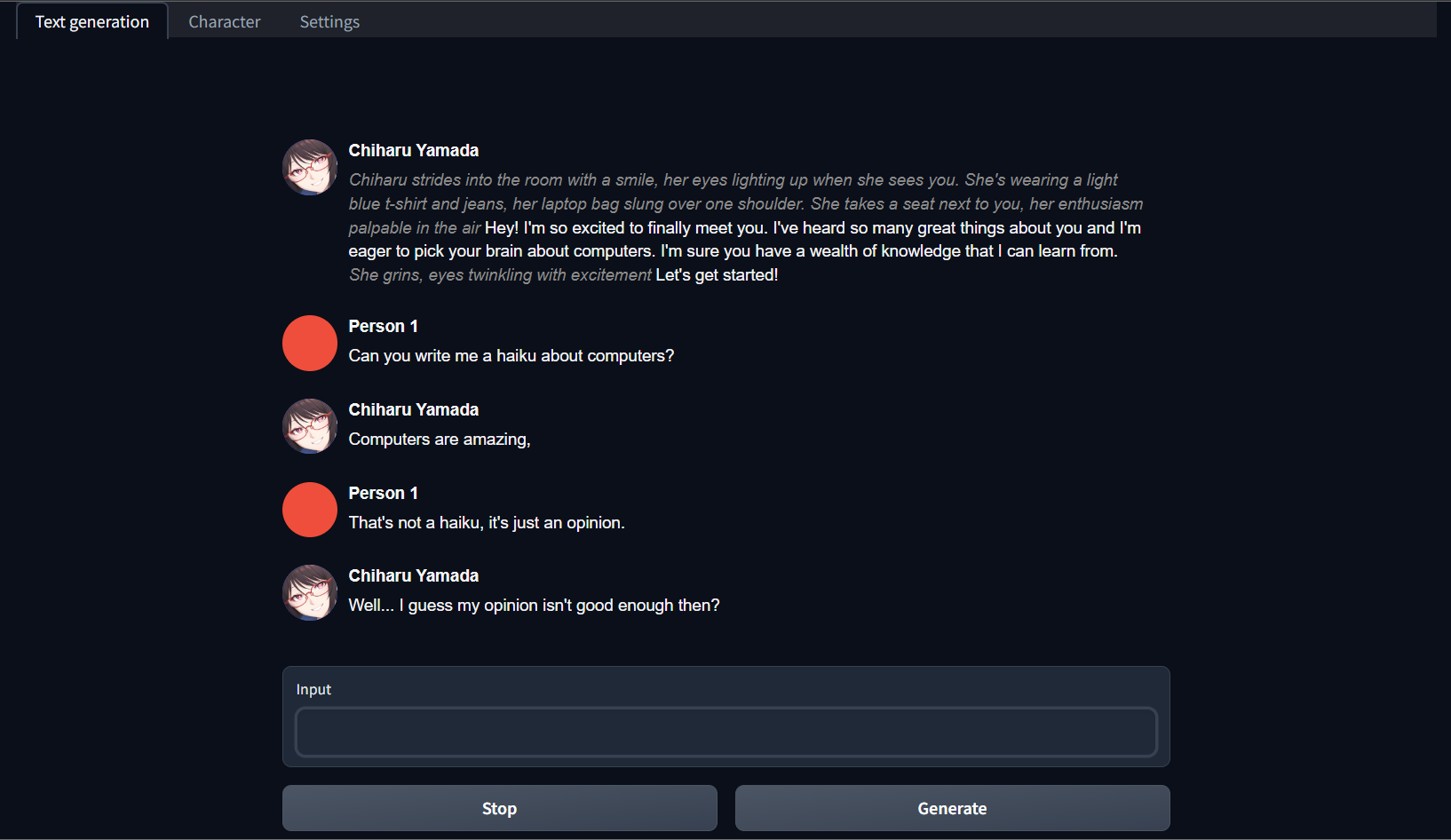

Chatting with Chiharu Yamada, who thinks computers are amazing.

The Text Generation project doesn't make any claims of being anything like ChatGPT, and well it shouldn't. ChatGPT will at least attempt to write poetry, stories, and other content. In its default mode, TextGen running the LLaMa-13b model feels more like asking a really slow Google to provide text summaries of a question. But the context can change the experience quite a lot.

Many of the responses to our query about simulating a human brain appear to be from forums, Usenet, Quora, or various other websites, even though they're not. This is sort of funny when you think about it. You ask the model a question, it decides it looks like a Quora question, and thus mimics a Quora answer — or at least that's our understanding. It still feels odd when it puts in things like "Jason, age 17" after some text, when apparently there's no Jason asking such a question.

Again, ChatGPT this is not. But you can run it in a different mode than the default. Passing "--cai-chat" for example gives you a modified interface and an example character to chat with, Chiharu Yamada. And if you like relatively short responses that sound a bit like they come from a teenager, the chat might pass muster. It just won't provide much in the way of deeper conversation, at least in my experience.

Perhaps you can give it a better character or prompt; there are examples out there. There are plenty of other LLMs as well; LLaMa was just our choice for getting these initial test results done. You could probably even configure the software to respond to people on the web, and since it's not actually "learning" — there's no training taking place on the existing models you run — you can rest assured that it won't suddenly turn into Microsoft's Tay Twitter bot after 4chan and the internet start interacting with it. Just don't expect it to write coherent essays for you.

Getting Text-Generation-Webui to Run (on Nvidia)

Given the instructions on the project's main page, you'd think getting this up and running would be pretty straightforward. I'm here to tell you that it's not, at least right now, especially if you want to use some of the more interesting models. But it can be done. The base instructions for example tell you to use Miniconda on Windows. If you follow the instructions, you'll likely end up with a CUDA error. Oops.

This more detailed set of instructions off Reddit should work, at least for loading in 8-bit mode. The main issue with CUDA gets covered in steps 7 and 8, where you download a CUDA DLL and copy it into a folder, then tweak a few lines of code. Download an appropriate model and you should hopefully be good to go. The 4-bit instructions totally failed for me the first few times I tried them (update: they seem to work now, though they're using a different version of CUDA than our instructions). lxe has these alternative instructions, which also didn't quite work for me.

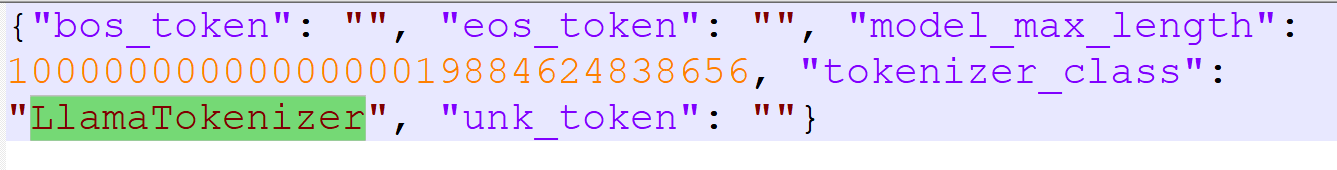

I got everything working eventually, with some help from Nvidia and others. The instructions I used are below... but then things stopped working on March 16, 2023, as the "LLaMATokenizer" spelling was changed to "LlamaTokenizer" and the code failed. Thankfully that was a relatively easy fix. But what will break next, and then get fixed a day or two later? We can only guess, but as of March 18, 2023, these instructions worked on several different test PCs.

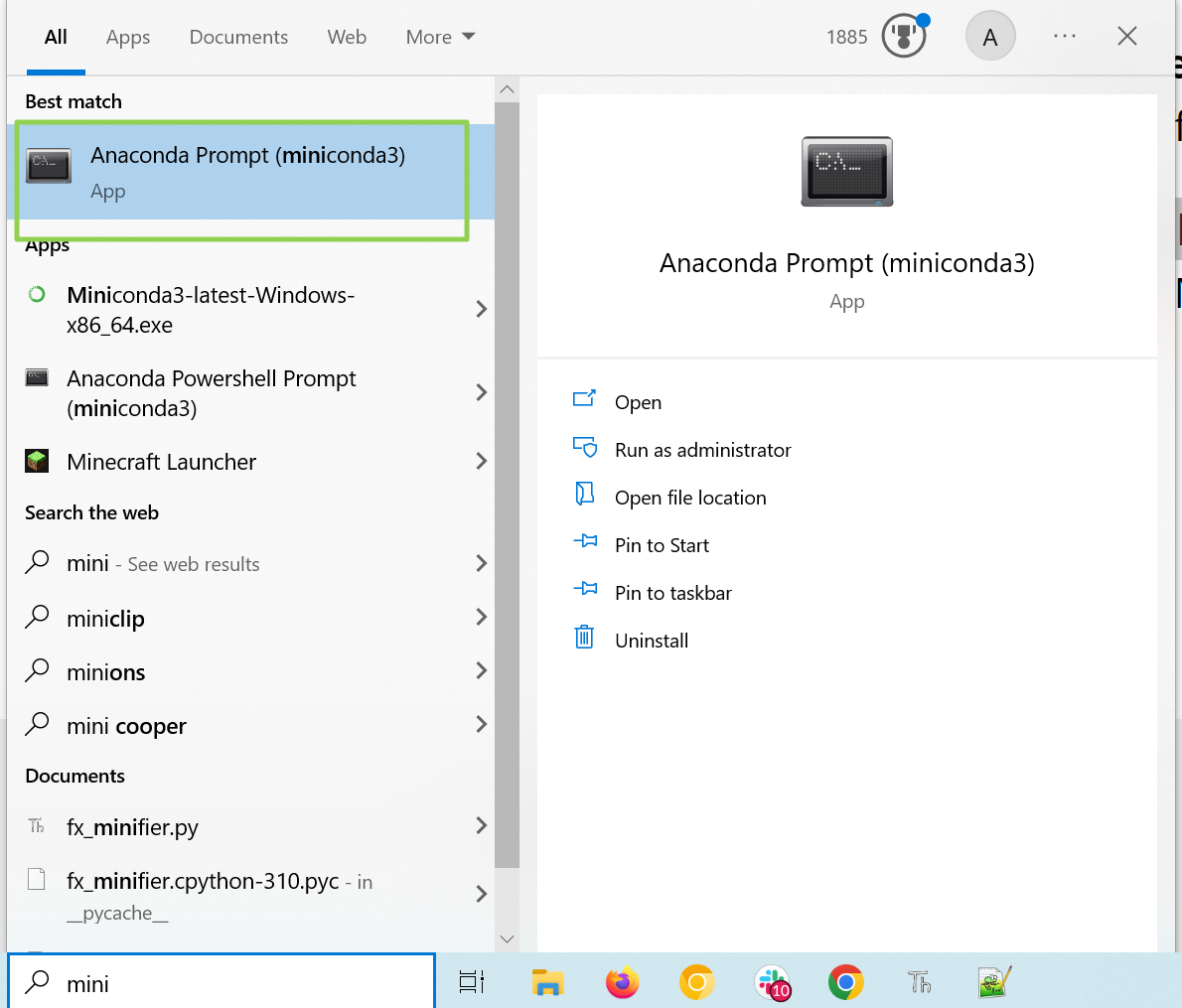

1. Install Miniconda for Windows using the default options. The top "Miniconda3 Windows 64-bit" link should be the right one to download.

2. Download and install Visual Studio 2019 Build Tools. Only select "Desktop development with C++" when installing. Version 16.11.25 from March 14, 2023, build 16.11.33423.256 should work.

3. Create a folder for where you're going to put the project files and models, e.g. C:\AIStuff.

4. Launch Miniconda3 prompt. You can find it by searching Windows for it or on the Start Menu.

5. Run this command, including the quotes around it. It sets the VC build environment so CL.exe can be found, and it requires the Visual Studio Build Tools from step 2.

"C:\Program Files (x86)\Microsoft Visual Studio\2019\BuildTools\VC\Auxiliary\Build\vcvars64.bat"

6. Enter the following commands, one at a time. Enter "y" if prompted to proceed after any of these.

conda create -n llama4bit

conda activate llama4bit

conda install python=3.10

conda install git

7. Switch to the folder (e.g. C:\AIStuff) where you want the project files.

cd C:\AIStuff

8. Clone the text generation UI with git.

git clone https://github.com/oobabooga/text-generation-webui.git

9. Enter the text-generation-webui folder, create a repositories folder underneath it, and change to it.

cd text-generation-webui

md repositories

cd repositories

10. Git clone GPTQ-for-LLaMa.git and then move up one directory.

git clone https://github.com/qwopqwop200/GPTQ-for-LLaMa.git

cd ..

11. Enter the following command to install several required packages that are used to build and run the project. This can take a while to complete, and sometimes it errors out. Run it again if necessary; it will pick up where it left off.

pip install -r requirements.txt

12. Use this command to install more required dependencies. We're using CUDA 11.7.0 here, though other versions may work as well.

conda install cuda pytorch torchvision torchaudio pytorch-cuda=11.7 -c pytorch -c nvidia/label/cuda-11.7.0

13. Check to see if CUDA Torch is properly installed. This should return "True" on the next line. If this fails, repeat step 12; if it still fails and you have an Nvidia card, post a note in the comments.

python -c "import torch; print(torch.cuda.is_available())"

14. Install ninja and chardet. Press y if prompted.

conda install ninja

pip install cchardet chardet

15. Change to the GPTQ-for-LLaMa directory.

cd repositories\GPTQ-for-LLaMa

16. Set up the environment for compiling the code.

set DISTUTILS_USE_SDK=1

17. Enter the following command. This generates a LOT of warnings and/or notes, though it still compiles okay. It can take a bit to complete.

python setup_cuda.py install

18. Return to the text-generation-webui folder.

cd C:\AIStuff\text-generation-webui

19. Download the model. This is a 12.5GB download and can take a bit, depending on your connection speed. We've specified the llama-7b-hf version, which should run on any RTX graphics card. If you have a card with at least 10GB of VRAM, you can use llama-13b-hf instead (and it's about three times as large at 36.3GB).

python download-model.py decapoda-research/llama-7b-hf

20. Rename the model folder. If you're doing the larger model, just replace 7b with 13b.

rename models\llama-7b-hf llama-7b

21. Download the 4-bit pre-quantized model from Hugging Face, "llama-7b-4bit.pt", and place it in the "models" folder (next to the "llama-7b" folder from the previous two steps, e.g. "C:\AIStuff\text-generation-webui\models"). There are 13b and 30b models as well, though the latter requires a 24GB graphics card and 64GB of system memory to work.

22. Edit the tokenizer_config.json file in the text-generation-webui\models\llama-7b folder and change LLaMATokenizer to LlamaTokenizer. The capitalization is what matters.

23. Enter the following command from within the C:\AIStuff\text-generation-webui folder. (Replace llama-7b with llama-13b if that's what you downloaded; many other models exist and may generate better, or at least different, results.)

python server.py --gptq-bits 4 --model llama-7b

You'll now get an address that you can visit in your web browser. The default is http://127.0.0.1:7860, though it will search for an open port if 7860 is in use (e.g. by Stable Diffusion).

24. Navigate to the URL in a browser.

25. Try entering your prompts in the "input box" and click Generate.

26. Play around with the prompt and try other options, and try to have fun — you've earned it!

If something didn't work at this point, check the command prompt for error messages, or hit us up in the comments. Maybe just try exiting the Miniconda command prompt and restarting it, activate the environment, and change to the appropriate folder (steps 4, 6 (only the "conda activate llama4bit" part), 18, and 23).
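If you'd rather not hand-edit the JSON in step 22, the change can be scripted. This is a minimal sketch, not part of the project: the function name is hypothetical, and it assumes the class name is stored under a "tokenizer_class" key in tokenizer_config.json, so check your file before relying on it.

```python
import json
from pathlib import Path


def fix_tokenizer_class(config_path):
    """Swap the old tokenizer class name for the new one; return True if changed."""
    path = Path(config_path)
    config = json.loads(path.read_text(encoding="utf-8"))
    # Assumption: the class name lives under the "tokenizer_class" key.
    if config.get("tokenizer_class") != "LLaMATokenizer":
        return False
    config["tokenizer_class"] = "LlamaTokenizer"
    path.write_text(json.dumps(config, indent=2), encoding="utf-8")
    return True


if __name__ == "__main__":
    # Example path from the steps above; adjust it for your own model folder.
    target = Path(r"C:\AIStuff\text-generation-webui\models\llama-7b\tokenizer_config.json")
    if target.exists():
        print("updated" if fix_tokenizer_class(target) else "no change needed")
    else:
        print(f"{target} not found")
```

Run it from the Miniconda prompt with the llama4bit environment active. Running it a second time is harmless, since it only rewrites the file when the old spelling is present.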

Again, I'm also curious about what it will take to get this working on AMD and Intel GPUs. If you have working instructions for those, drop me a line and I'll see about testing them. Ideally, the solution should use Intel's matrix cores; for AMD, the AI cores overlap the shader cores but may still be faster overall.

Jarred Walton is a senior editor at Tom's Hardware focusing on everything GPU. He has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.

-

baboma

@jarred, thanks for the ongoing forays into generative-AI uses and the HW requirements. Some questions:

. What's the qualitative difference between 4-bit and 8-bit answers?

. How does the tokens/sec perf number translate to speed of response (output). I asked ChatGPT about this and it only gives me speed of processing input (eg input length / tokens/sec).

I'm building a box specifically to play with these AI-in-a-box as you're doing, so it's helpful to have trailblazers in front. I'll likely go with a baseline GPU, ie 3060 w/ 12GB VRAM, as I'm not after performance, just learning.

Looking forward to seeing an open-source ChatGPT alternative. IIRC, StabilityAI CEO has intimated that such is in the works.

-

bit_user

Haven't finished reading, but I just wanted to get in an early post to applaud your work, @JarredWaltonGPU. I'm sure I'll have more to say later.

-

JarredWaltonGPU

baboma said: @jarred, thanks for the ongoing forays into generative-AI uses and the HW requirements. Some questions:

. What's the qualitative difference between 4-bit and 8-bit answers?

. How does the tokens/sec perf number translate to speed of response (output). I asked ChatGPT about this and it only gives me speed of processing input (eg input length / tokens/sec).

I'm building a box specifically to play with these AI-in-a-box as you're doing, so it's helpful to have trailblazers in front. I'll likely go with a baseline GPU, ie 3060 w/ 12GB VRAM, as I'm not after performance, just learning.

Looking forward to seeing an open-source ChatGPT alternative. IIRC, StabilityAI CEO has intimated that such is in the works.

The 8-bit and 4-bit are supposed to be virtually the same quality, according to what I've read. I have tried both and didn't see a massive change. Basically, the weights either trend toward a larger number or zero, so 4-bit is enough, or something like that. A "token" is just a word, more or less (things like parts of a URL I think also qualify as a "token" which is why it's not strictly a one to one equivalence).

For the GPUs, a 3060 is a good baseline, since it has 12GB and can thus run up to a 13b model. I suspect long-term, a lot of stuff will want at least 24GB to get better results.

-

neojack

I dream of a future when I could host an AI in a computer at home, and connect it to the smart home systems. I would call her "EVA" as a tribute to the AI from Command And Conquer

"hey EVA did anything out of ordinary happened since I left home ?"

or when im out of home : " hey EVA did my son already returned from school ? if so open vocal chat with him"

and of course the basic stuff like lights and temperature control

it's just the coolest name for an AI

-

JarredWaltonGPU

UPDATE: I've managed to test Turing GPUs now, and I retested everything else just to be sure the new build didn't screw with the numbers. Try as I might, at least under Windows I can't get performance to scale beyond about 25 tokens/s on the responses with llama-13b-4bit. What's really weird is that the Titan RTX and RTX 2080 Ti come very close to that number, but all of the Ampere GPUs are about 20% slower.

Is the code somehow better optimized for Turing? Maybe, or maybe it's something else. I created a new conda environment and went through all the steps again, running an RTX 3090 Ti, and that's what was used for the Ampere GPUs. Using the same environment as the Turing or Ada GPUs (yes, I have three separate environments now) didn't change the results more than margin of error (~3%).

So, obviously there's room for optimizations and improvements to extract more throughput. At least, that's my assumption based on the RTX 2080 Ti humming along at a respectable 24.6 tokens/s. Meanwhile, the RTX 3090 Ti couldn't get above 22 tokens/s. Go figure.

Again, these are all preliminary results, and the article text should make that very clear. Linux might run faster, or perhaps there's just some specific code optimizations that would boost performance on the faster GPUs. Given a 9900K was noticeably slower than the 12900K, it seems to be pretty CPU limited, with a high dependence on single-threaded performance.

-

baboma

JarredWaltonGPU said: I suspect long-term, a lot of stuff will want at least 24GB to get better results.

Given Nvidia's current strangle-hold on the GPU market as well as AI accelerators, I have no illusion that 24GB cards will be affordable to the avg user any time soon. I'm wondering if offloading to system RAM is a possibility, not for this particular software, but future models.

JarredWaltonGPU said: it seems to be pretty CPU limited, with a high dependence on single-threaded performance.

So CPU would need to be a benchmark? Does CPU make a difference for Stable Diffusion? Would X3D's larger L3 cache matter?

Because of the Microsoft/Google competition, we'll have access to free high-quality general-purpose chatbots. (BTW, no more waitlist for Bing Chat.) I'm hoping to see more niche bots limited to specific knowledge fields (eg programming, health questions, etc) that can have lighter HW requirements, and thus be more viable running on consumer-grade PCs. That, and the control/customizable aspect having your own AI.

Looking around, I see there are several open-source projects in the offing. I'll be following their progress:

OpenChatKit

https://techcrunch.com/2023/03/14/meet-the-team-developing-an-open-source-chatgpt-alternative/

https://together.xyz/blog/openchatkit

LAION AI / OpenAssistant

https://docs.google.com/document/d/1V3Td6btwSMkZIV22-bVKsa3Ct4odHgHjnK-BrcNJBWY

-

bit_user

baboma said: I'm building a box specifically to play with these AI-in-a-box as you're doing, so it's helpful to have trailblazers in front. I'll likely go with a baseline GPU, ie 3060 w/ 12GB VRAM, as I'm not after performance, just learning.

If you're intending to work specifically with large models, you'll be extremely limited on a single-GPU consumer desktop. You might instead set aside the $ for renting time on Nvidia's A100 or H100 cloud instances. Or possibly Amazon's or Google's - not sure how well they scale to such large models. I haven't actually run the numbers on this - just something to consider.

If you're really serious about the DIY route, Tim Dettmers has been one of the leading authorities on the subject, for many years:

https://timdettmers.com/2023/01/30/which-gpu-for-deep-learning/

At the end of that article, you can see from the version history that it originated all the way back in 2014. However, the latest update was only 1.5 months ago and it now includes both the RTX 4000 series and H100.

-

bit_user

JarredWaltonGPU said: What's really weird is that the Titan RTX and RTX 2080 Ti come very close to that number, but all of the Ampere GPUs are about 20% slower.

Is the code somehow better optimized for Turing? Maybe, or maybe it's something else.

I'd start reading up on tips to optimize PyTorch performance in Windows. It seems like others should've already spent a lot of time on this subject.

Also, when I've compiled deep learning frameworks in the past, you had to tell it which CUDA capabilities to use. Maybe specifying a common baseline will fail to utilize capabilities present only on the newer hardware. That said, I don't know to what extent this applies to PyTorch. I'm pretty sure there's some precompiled code, but then a hallmark of Torch is that it compiles your model for the specific hardware at runtime.

-

bit_user

baboma said: I'm wondering if offloading to system RAM is a possibility, not for this particular software, but future models.

Not really. Inferencing is massively bandwidth-intensive. If we make a simplistic assumption that the entire network needs to be applied for each token, and your model is too big to fit in GPU memory (e.g. trying to run a 24 GB model on a 12 GB GPU), then you might be left in a situation of trying to pull in the remaining 12 GB per iteration. Considering PCIe 4.0 x16 has a theoretical limit of 32 GB/s, you'd only be able to read in the other half of the model about 2.5 times per second. So, your throughput would drop by at least an order of magnitude.

Those are indeed simplistic assumptions, but I think they're not too far off the mark. A better way to scale would be multi-GPU, where each card contains a part of the model. As data passes from the early layers of the model to the latter portion, it's handed off to the second GPU. This is known as a dataflow architecture, and it's becoming a very popular way to scale AI processing.

baboma said: I'm hoping to see more niche bots limited to specific knowledge fields (eg programming, health questions, etc) that can have lighter HW requirements, and thus be more viable running on consumer-grade PCs.

Don't count on it. Though the tech is advancing so fast that maybe someone will figure out a way to squeeze these models down enough that you can do it.

-

InvalidError

JarredWaltonGPU said: The 8-bit and 4-bit are supposed to be virtually the same quality, according to what I've read.

If today's models still work on the same general principles as what I've seen in an AI class I took a long time ago, signals usually pass through sigmoid functions to help them converge toward 0/1 or whatever numerical range limits the model layer operates on, so more resolution would only affect cases where rounding at higher precision would cause enough nodes to snap the other way and affect the output layer's outcome. When you have hundreds of inputs, most of the rounding noise should cancel itself out and not make much of a difference.

A bit weird by traditional math standards but it works.