Star Wars Jedi: Survivor Patched Performance — AMD and Nvidia GPUs Tested

The latest patch helps to address excessive VRAM use and seems to run better.

Star Wars Jedi: Survivor officially released on April 27, and complaints about poor performance, stuttering, and excessive VRAM use appeared virtually seconds later. As an AMD-promoted game, and one that AMD has used to suggest cards with less than 16GB of VRAM aren't sufficient (which would include its own RX 6700- and 6600-series parts), we probably shouldn't be surprised. But patches and driver updates have landed that are supposed to fix some of the most egregious problems. To see how the game now runs, we've tested the PC patch 3.5 version on some of the best graphics cards.

First, let us rant for a moment about DRM. Like so many other EA games, Star Wars Jedi: Survivor has some draconian limitations on what you can do with the game. We purchased the game on Steam... and then still had to install the EA launcher app to play it. This sucks, and the same goes for Ubi games or products from anyone else. If you're going to release the game on Steam, Epic, or other launchers, do the work to make it function fully within that environment!

Also fun: We borrowed a friend's account (Aaron's, if you're wondering), which was not the Steam version but was instead purchased on the EA launcher. We couldn't even install the game there because "another version of the game is already installed." So, back up the Steam version, uninstall, and then download the 133GB of data again. Nice. But that's still only part of the issue.

Our biggest complaint (about the DRM, not performance) is one that literally only affects people like us who benchmark games: It's tied to your PC hardware. Oh, you can run it on up to four "different" PCs, but after that you get locked out for 24 hours. Changing a graphics card counts as a different PC, and the same goes for the CPU. The CPU is slightly more understandable, though really we'd prefer the DRM to link to the motherboard, as that's the true indicator of whether a different PC is being used. Regardless, even with two different accounts, we're only able to test on eight GPUs/CPUs/PCs per day. Well, except the DRM screwed up somehow and let us test on a ninth GPU at one point. Go figure.

Anyway, this is a look at how the 3.5 patch variant of the game runs on a high-end PC, using higher performance GPUs plus a few others, which we'll augment with additional testing as requested once we're able to get back into the game. #DRMSucks. All testing was done on May 2–3, and EA promises future patches that could further improve performance.

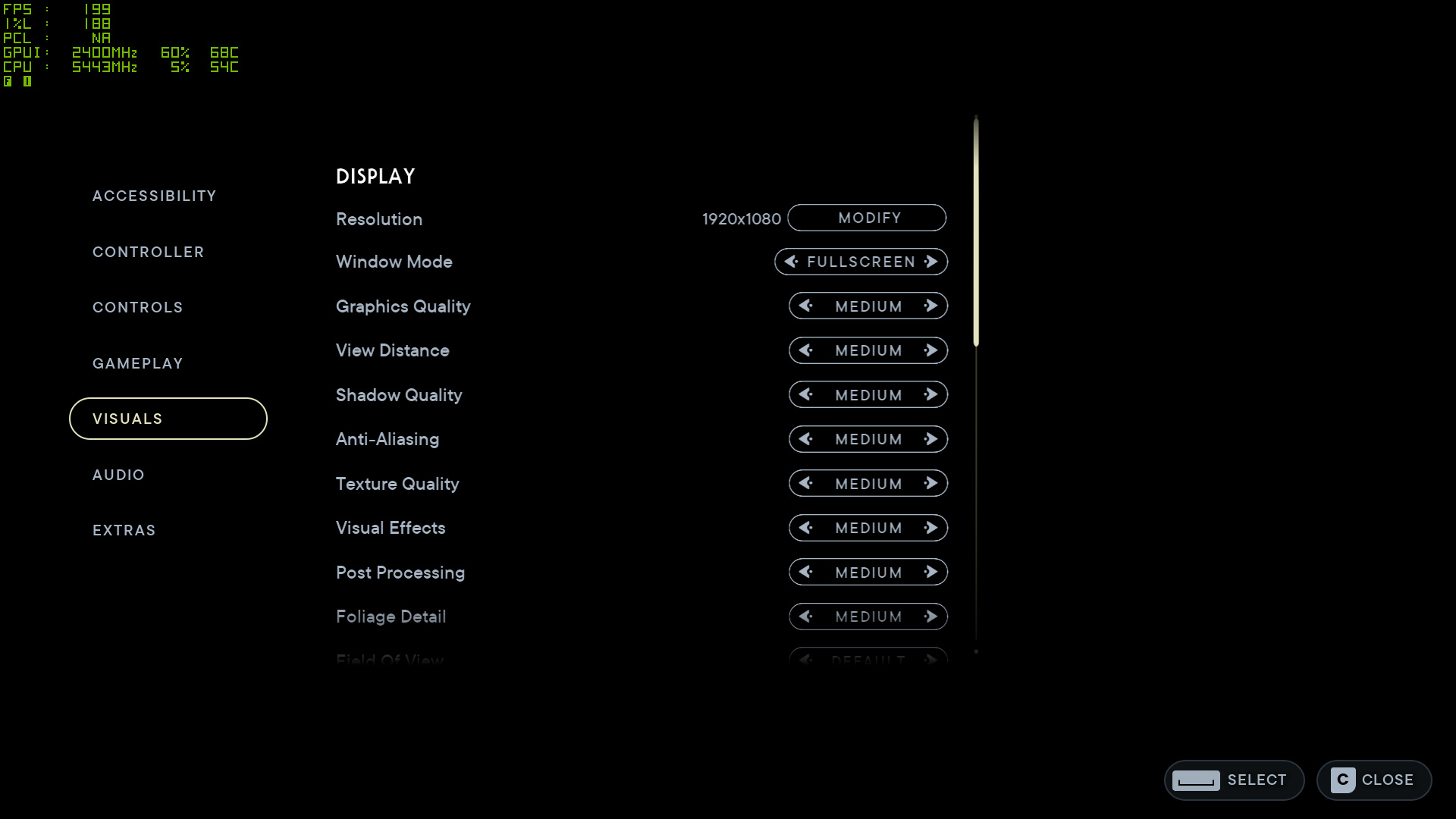

Star Wars Jedi: Survivor Settings

There aren't a ton of settings in Star Wars Jedi: Survivor, but you get the usual four presets: Low, Medium, High, and Epic. Wait, Epic and not Ultra for the highest setting? Yup, this is yet another Unreal Engine 4 game, just like Dead Island 2 and Redfall. Except this time, the game also supports ray tracing as an extra option to improve image quality.

You get the usual increase in performance and drop in image quality as you go from Epic to High to Medium to Low presets. You can also customize the various settings, and note that none of the settings default to having ray tracing enabled. They also don't default to turning on AMD FidelityFX Super Resolution 2 (FSR2) upscaling, leaving those decisions to the user.

The ray tracing in Jedi: Survivor would at first seem to be pretty comprehensive. There's apparently RTAO (ambient occlusion), RTGI (global illumination), and RT reflections. Except, the reflections and GI effects are used quite sparingly, and the RTAO rendering distance is somewhat short. So at times, you'll see the higher-quality shadows popping into view with RT enabled and that can be somewhat distracting. (It was far worse in the launch version of the game.)

That was probably done in order to reduce the impact of having RT enabled, particularly on AMD-promoted GPUs, because as usual, they don't handle the additional RT workload as well. Or maybe it was just a tacked-on feature that no one thought too deeply about.

Whatever the case, we're still of the opinion that you need to go big with RT or just leave it out — even if "going big" means making the settings useless for anything below the top Nvidia GPUs, like with Cyberpunk 2077 RT Overdrive. For Star Wars Jedi: Survivor, our take is that the ray tracing effects aren't particularly striking or important to your enjoyment of the game... though there are a few exceptions to this.

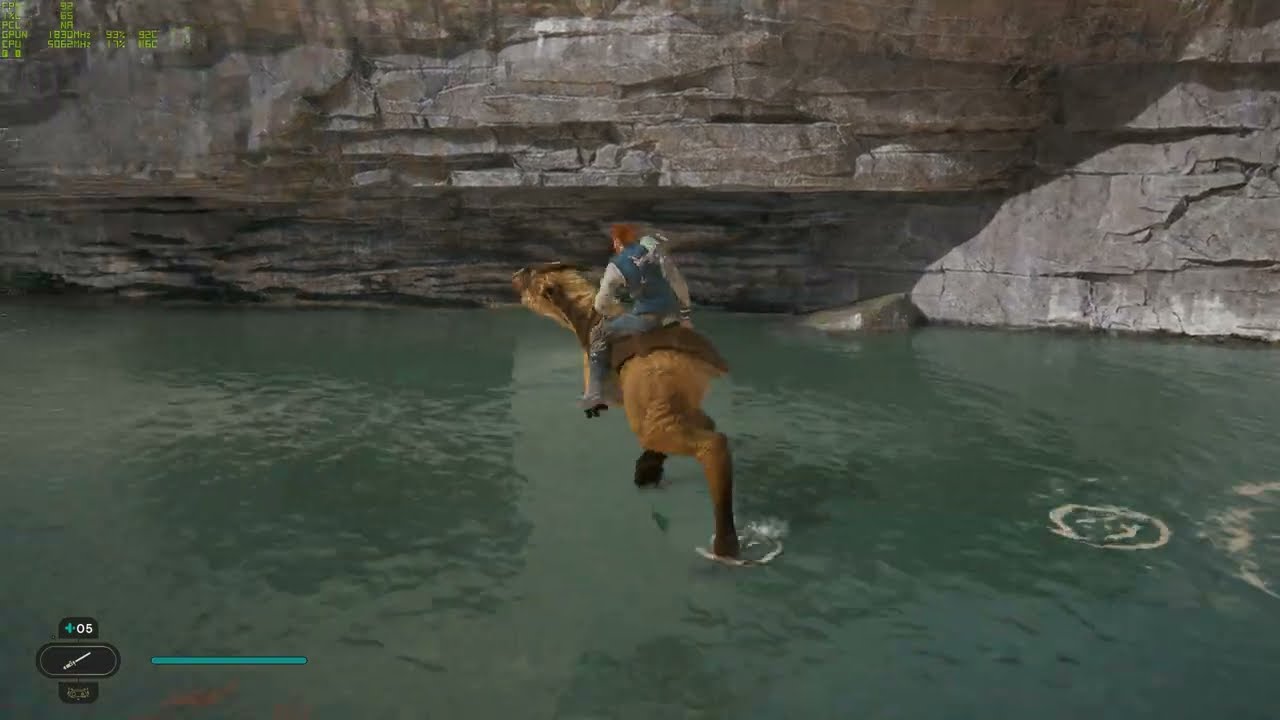

While a lot of the RT effects don't really make a big difference, here's one example where the non-RT rendering looks really off. The screen space reflections basically don't work around your character (or other characters) from the third person viewpoint. You can see the brighter "unreflected" portion of the water below the Nekko (the mount) as well as below BD-1. While you might think it doesn't look too bad, it's actually worse in motion.

The ray tracing option at least somewhat fixes this issue. It doesn't make everything else that's reflected look tons better, but you can also tell that it's not just the cliffside in the water reflections, and you also get illumination under the overhang that the non-RT version doesn't handle. If you have a beefy GPU, then, you will find areas of the game where you'll appreciate what RT brings to the table. Well, maybe. It's a bit of a "damned if you do (RT), damned if you don't (RT)" with Star Wars Jedi: Survivor.

The above gallery contains a bunch of screenshots of a few different areas, with the settings used listed below the images. We have Low, Medium, High, Epic, and Epic+RT for comparison, all captured at 1440p on an RX 6750 XT. (That was what was in the PC at the time when we grabbed the images — except for one set of images that had an RX 6650 XT installed.) The FPS in the corner isn't a full benchmark, but it does indicate relative performance with the various settings, and as you can see, performance can drop quite a bit as you go up the quality stack.

Depending on the area of the game, the change in settings can produce some pretty substantial differences in the way the game looks. Outdoors (i.e. not in the starting area on Coruscant), the amount of foliage present can drop a lot at lower settings. One thing you won't generally find are gratuitous amounts of highly reflective materials to show off the ray tracing effects.

The higher presets can hit VRAM pretty hard, even with the 3.5 patch, especially with the Epic quality textures and shadows. 12GB of VRAM is basically the minimum for a decent experience at Epic settings and 4K. But the difference between Epic and High quality textures and shadows probably isn't worth the VRAM tax, so if you have a 12GB or even 8GB card, don't feel bad about dropping Shadows and Textures down a notch.

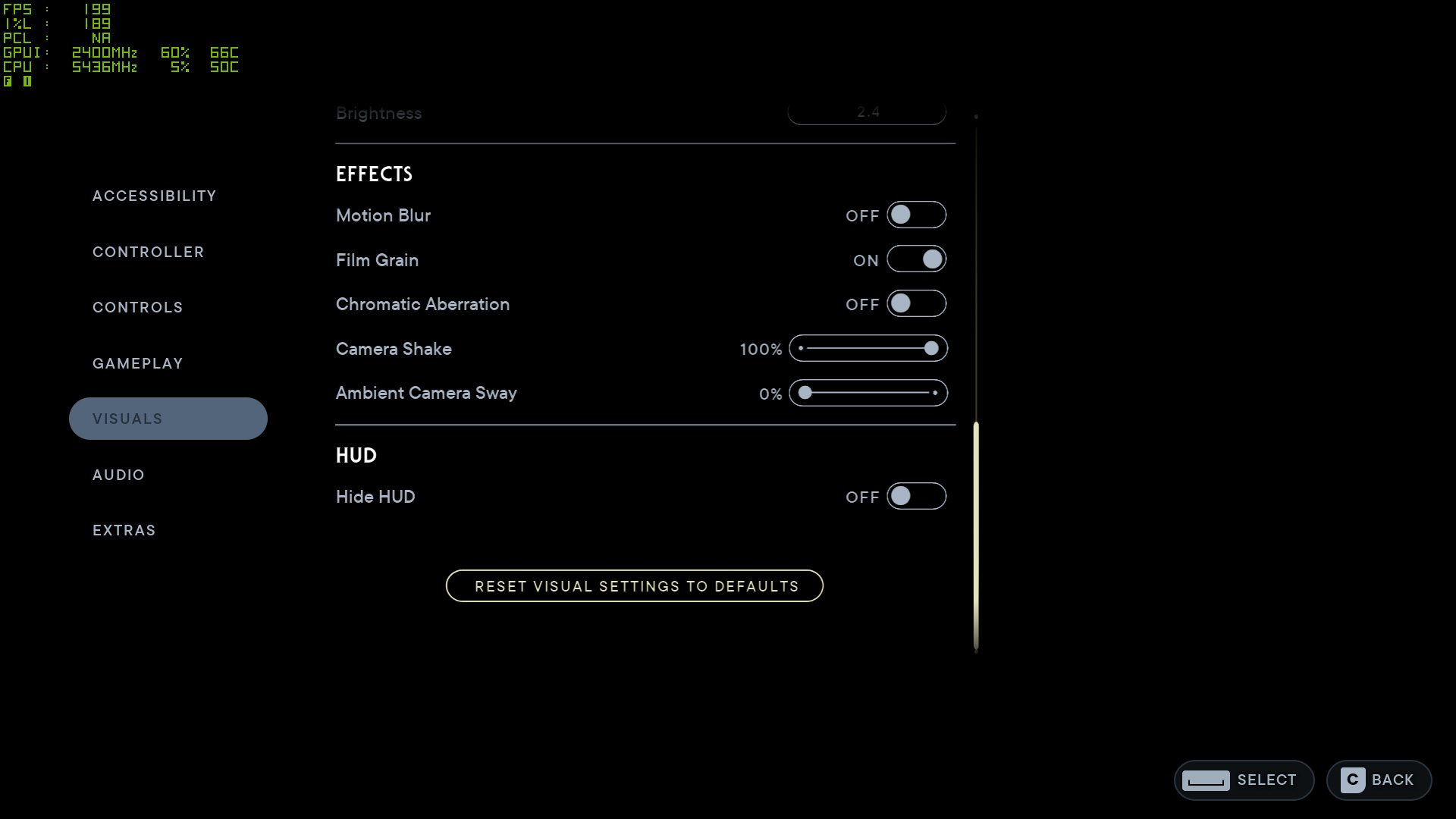

Here's another set of images, this time showing image quality at Epic other than the specified setting. There are eight adjustable settings that can impact image quality (along with the usual stuff like resolution, FSR2, field of view, etc.), which is fewer than some games, but at least most of the settings make a more noticeable difference in the resulting image quality. Also, we disabled motion blur and chromatic aberration, as we're not particularly keen on either effect.

In terms of performance, Shadow Quality, Visual Effects, Post Processing, and Foliage Detail tend to have the biggest impact — and in some areas, dropping View Distance can also help. Anti-Aliasing does very little (even the low setting looks fine), but it doesn't seem like Texture Quality and perhaps a few other settings will properly update without changing the preset or restarting the game. This makes direct comparisons of the individual settings more difficult, so don't take the above images as gospel truth — refer to the preset images instead.

Further testing indicates that if you customize any of the "advanced" settings, the results are not what you'd expect — further details are in this forum post. Setting Texture Quality to Low for example and then restarting the game — with everything else on Epic — gave performance that was similar to the Medium preset. The same goes for adjusting View Distance while having everything else on Epic. But looking at the two images, having just View Distance at Low looks like lower resolution textures are also being used, and probably other changes as well. In other words, the individual settings (outside of the global Graphics Quality preset) appear to affect far more than just the changed setting.

Star Wars Jedi: Survivor PC System Requirements

Minimum PC System Requirements

- CPU: Ryzen 5 1400 or Core i7-7700, 4-core/8-thread

- GPU: Radeon RX 580 or GeForce GTX 1070, 8GB VRAM

- RAM: 8GB

- Storage: 155GB

- Windows 10/11 64-bit

Recommended PC System Requirements


- CPU: Ryzen 5 5600X or Core i5-11600K, 4-core/8-thread

- GPU: Radeon RX 6700 XT or GeForce RTX 2070, 8GB VRAM

- RAM: 16GB

- Storage: 155GB

- Windows 10/11 64-bit

The base and recommended PC specs for running Star Wars Jedi: Survivor are relatively tame by modern standards, at least in most respects. Officially, EA requires at least a 4-core/8-thread CPU, pointing to the Ryzen 5 1400 and Core i7-7700 as examples — and let's just be clear that those are not "equivalent," as the i7-7700 tends to be substantially faster. Realistically, you can probably get by with an i7-4770 or similar, though there's of course no guarantee of 60+ fps.

The recommended CPUs are much newer, with the Ryzen 5 5600X and Core i5-11600K, though EA still lists "4-core/8-thread" as the basic CPU feature requirement even though both of those are 6-core/12-thread processors. It's almost like the system requirements were phoned in by someone that doesn't really know PC hardware.

For the GPUs, the RX 580 8GB and GTX 1070 are listed — again, those are different classes of hardware, with the RX 580 more or less matching the GTX 1060 6GB. Meanwhile, the recommended GPUs are substantially more potent, with the RX 6700 XT and RTX 2070. This time, the 6700 XT ends up being way faster in general. You can check our GPU benchmarks hierarchy for the details, but the 1070 is typically 25% faster than an RX 580, while the RX 6700 XT is generally 45% faster than the 2070. And this is why we run actual benchmarks.

Memory requirements are given as 8GB minimum and 16GB recommended, while storage requires 155GB. Thankfully, capacious and inexpensive SSDs are all the rage right now, with even higher performance 2TB PCIe 4.0 TLC drives like the Acer GM7000 starting at just $129. Or if you don't care about sustained performance and just want the cheapest SSD possible, check out the Silicon Power UD85 2TB for just $77 — that's 3.9 cents per GB.
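The per-gigabyte figure is simple arithmetic, and a quick sketch makes it easy to compare other drives. The helper name is ours, and we assume decimal capacities (1TB = 1,000GB), as drive makers use:

```python
def cents_per_gb(price_usd, capacity_tb):
    """Price per gigabyte in cents, assuming decimal units (1 TB = 1000 GB)."""
    return price_usd * 100.0 / (capacity_tb * 1000.0)


# Prices as quoted in the text; both drives are 2TB models.
drives = {"Acer GM7000 2TB": 129.0, "Silicon Power UD85 2TB": 77.0}
for name, price in drives.items():
    print(f"{name}: {cents_per_gb(price, 2):.2f} cents per GB")
```

The UD85 works out to 3.85 cents per GB, which rounds to the 3.9 cents quoted above.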

Star Wars Jedi: Survivor Test Setup

TOM'S HARDWARE TEST PC

Intel Core i9-13900K

MSI MEG Z790 Ace DDR5

G.Skill Trident Z5 2x16GB DDR5-6600 CL34

Sabrent Rocket 4 Plus-G 4TB

be quiet! 1500W Dark Power Pro 12

Cooler Master PL360 Flux

Windows 11 Pro 64-bit

Samsung Neo G8 32

GRAPHICS CARDS

AMD RX 7900 XTX

AMD RX 7900 XT

AMD RX 6000-Series

Intel Arc A770 16GB

Nvidia RTX 4090

Nvidia RTX 4080

Nvidia RTX 4070

Nvidia RTX 30-Series

We're using our standard 2023 GPU test PC, with a Core i9-13900K and all the other bells and whistles. We've tested 13 different GPUs from the past two generations of architectures in Star Wars Jedi: Survivor, using the medium and epic presets. (Note that it already took two days to run the tests, due to the DRM issues, and that was using two different accounts!)
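The two-day figure follows from the activation limit described earlier. Here's a rough back-of-the-envelope check; the function name is ours, and it assumes every GPU swap uses up one of an account's four hardware slots and that the slots reset every 24 hours:

```python
import math


def days_to_test(num_gpus, slots_per_account=4, accounts=2):
    """Minimum number of 24-hour windows needed to test num_gpus cards.

    Assumes each GPU swap consumes one hardware slot and slots reset daily.
    """
    gpus_per_day = slots_per_account * accounts
    return math.ceil(num_gpus / gpus_per_day)


print(days_to_test(13))  # 13 GPUs at eight swaps per day
```

With 13 GPUs and eight swaps per day across two accounts, that comes to two days.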

We're also testing at 1920x1080, 2560x1440, and 3840x2160 with ray tracing enabled, and finally we've tested most of the cards at 4K with ray tracing and FSR2 Quality upscaling enabled. Cards that didn't hit reasonable framerates at 4K generally weren't tested there.

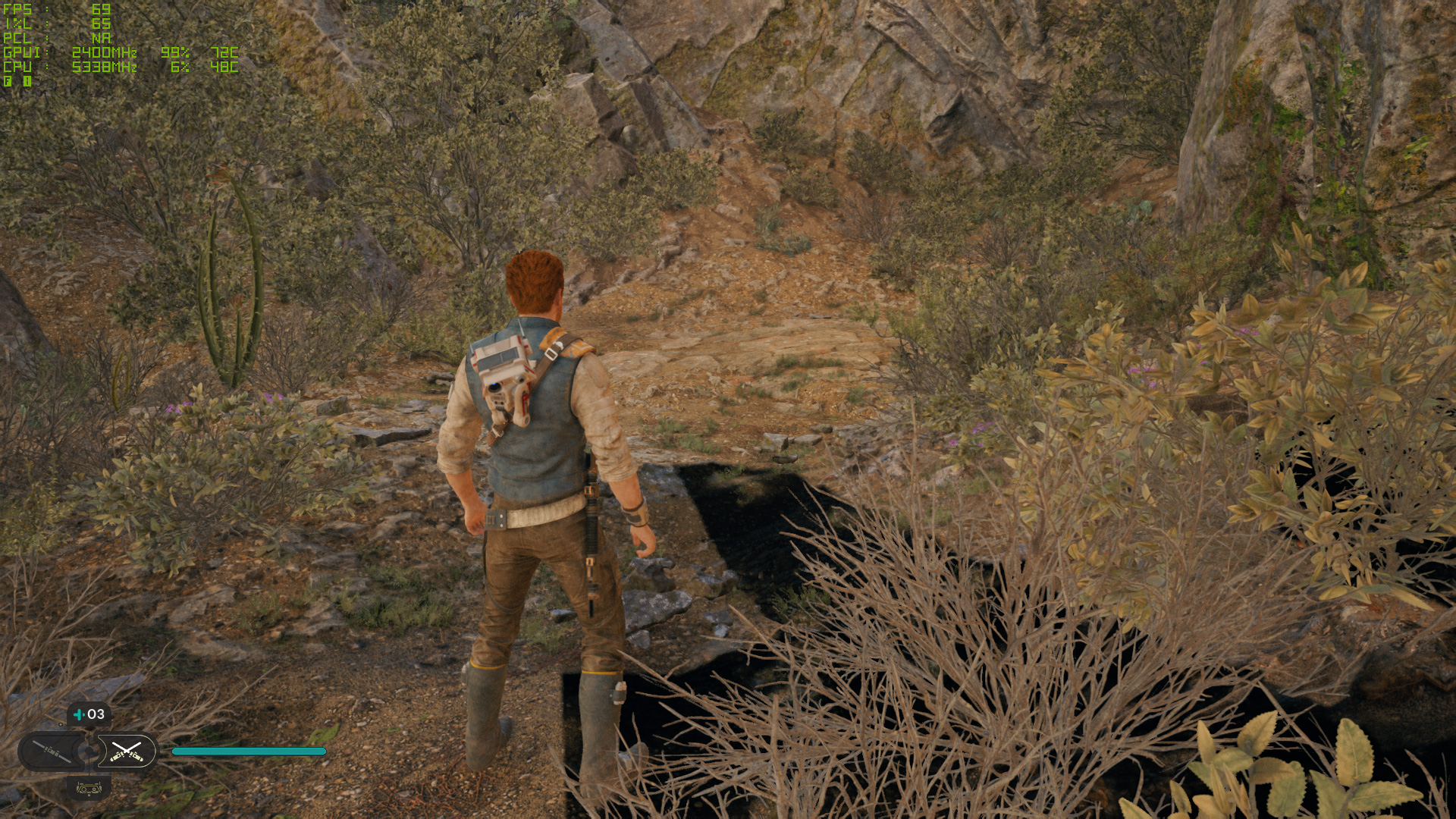

Our test sequence consists of running a loop through a section of the Koboh map. Each GPU gets one loop to "warm up," followed by two more loops while logging frametimes. We check the results to ensure consistency of performance, running additional loops if there's significant variability. (Framerates were very stable in our testing, so that generally wasn't necessary.)
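For readers who want to crunch their own logs, here's a minimal Python sketch of turning frametimes into an average fps and a 1% low figure. It assumes the frametimes are in milliseconds and defines the 1% low as the average fps over the slowest 1% of frames. That definition is an assumption for illustration (tools differ on this), not a statement of the exact method behind our charts, and the function name and sample data are hypothetical.

```python
def summarize_frametimes(frametimes_ms):
    """Return (average_fps, one_percent_low_fps) for frametimes given in ms."""
    if not frametimes_ms:
        raise ValueError("need at least one frametime")

    # Average fps is total frames over total time, not the mean of per-frame fps.
    total_seconds = sum(frametimes_ms) / 1000.0
    average_fps = len(frametimes_ms) / total_seconds

    # Assumed definition: average fps across the slowest 1% of frames (min. one frame).
    slowest_first = sorted(frametimes_ms, reverse=True)
    worst_count = max(1, len(slowest_first) // 100)
    worst = slowest_first[:worst_count]
    one_percent_low_fps = worst_count / (sum(worst) / 1000.0)

    return average_fps, one_percent_low_fps


# Hypothetical log: 198 smooth frames at 5 ms plus two 20 ms stutters.
sample = [5.0] * 198 + [20.0] * 2
avg, low = summarize_frametimes(sample)
print(f"average: {avg:.1f} fps, 1% low: {low:.1f} fps")
```

In this made-up log, two slow frames barely move the average but pull the 1% low down sharply, which is why we report both numbers.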

Now, a few caveats are in order. If you go to the Koboh village, performance is far, far worse than what we're showing here. From what I've seen, that's the most demanding area of the game, and you end up returning there on a fairly regular basis. There's no combat in the village for the most part, so it's not as critical to have higher and smoother fps, but it's very much a problem. (For reference, the 1% lows in Koboh village while running around are consistently in the 30 fps range, regardless of GPU.)

Outside of the initial run, where texture loads could cause some stuttering, performance was consistent. The latest updates to the game seem to have fixed most of the egregious problems, but further game and/or driver updates could continue to improve things. This is how performance looks about a week after launch.

Let's hit the charts.

Star Wars Jedi: Survivor GPU Performance

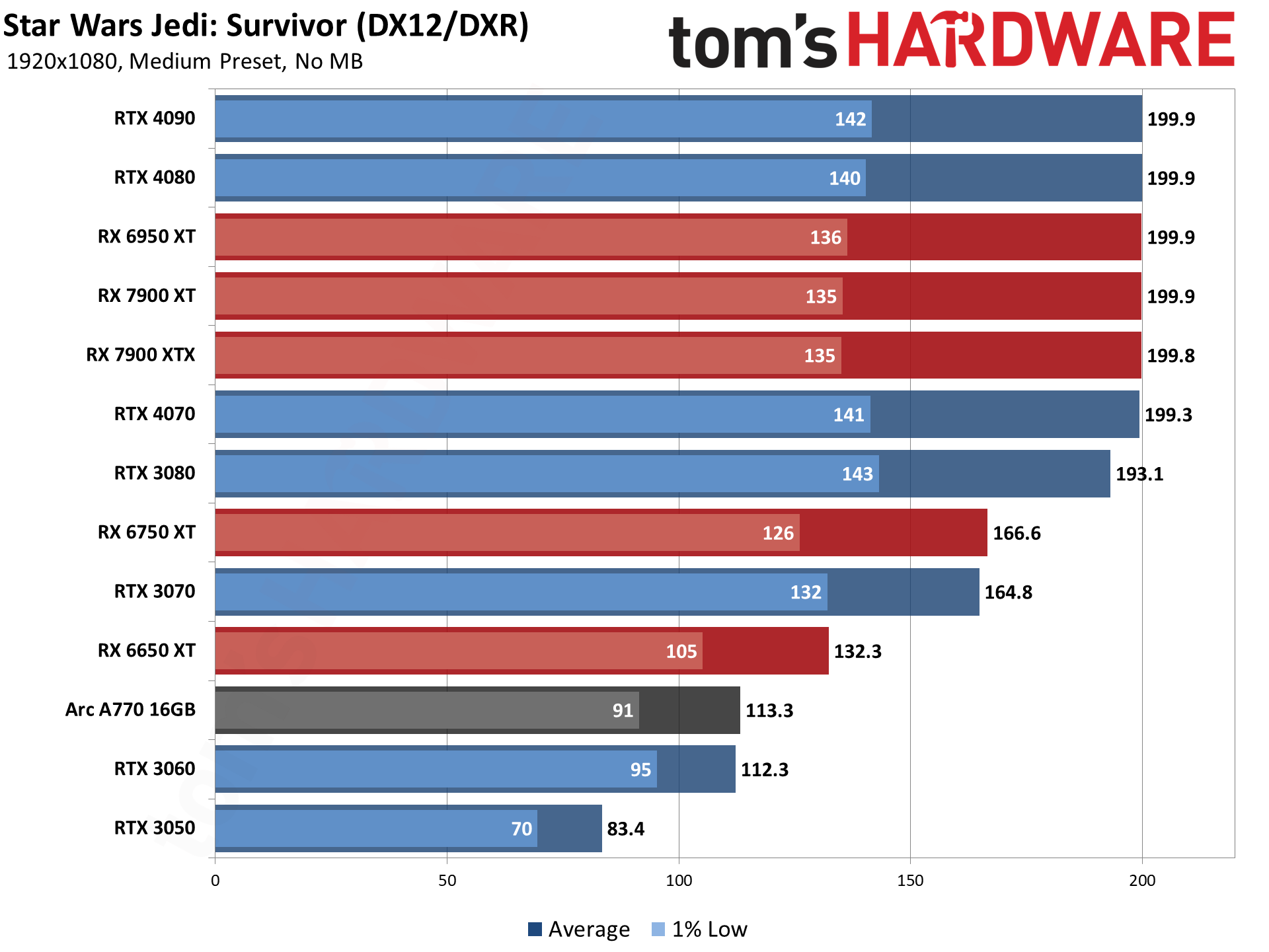

Our baseline testing at 1080p medium has every GPU in our test suite hitting well above 60 fps. Granted, the slowest GPU is the RTX 3050, which isn't exactly a low-end offering, but cards costing $200 these days like the RX 6600 should easily handle Star Wars Jedi: Survivor at medium settings — and probably a mix of high and epic settings is doable.

You can also see that there's a 200 fps cap in place. If there's a way to get around that, we didn't find it, but the RTX 3080 and above are all getting close to the limit. Note that there are more demanding areas of the game, but we selected a segment that seemed to be relatively typical — some areas will run much faster, and some will run slower, but a lot of the game should run roughly in line with our results. That's assuming you have a fast CPU and plenty of memory to go with the GPU, of course.

1% lows on all of the faster cards end up at around 140 fps, give or take. We saw similar results with Dead Island 2 and Redfall, and the somewhat larger gap between the average and minimum fps tends to be pretty typical of Unreal Engine 4 games. At the bottom of the chart, the RTX 3050 has minimums of 70 fps, which means even in the worst scenarios it should still be able to get above 60 fps (though occasional stutters while loading in new textures and data will occur).

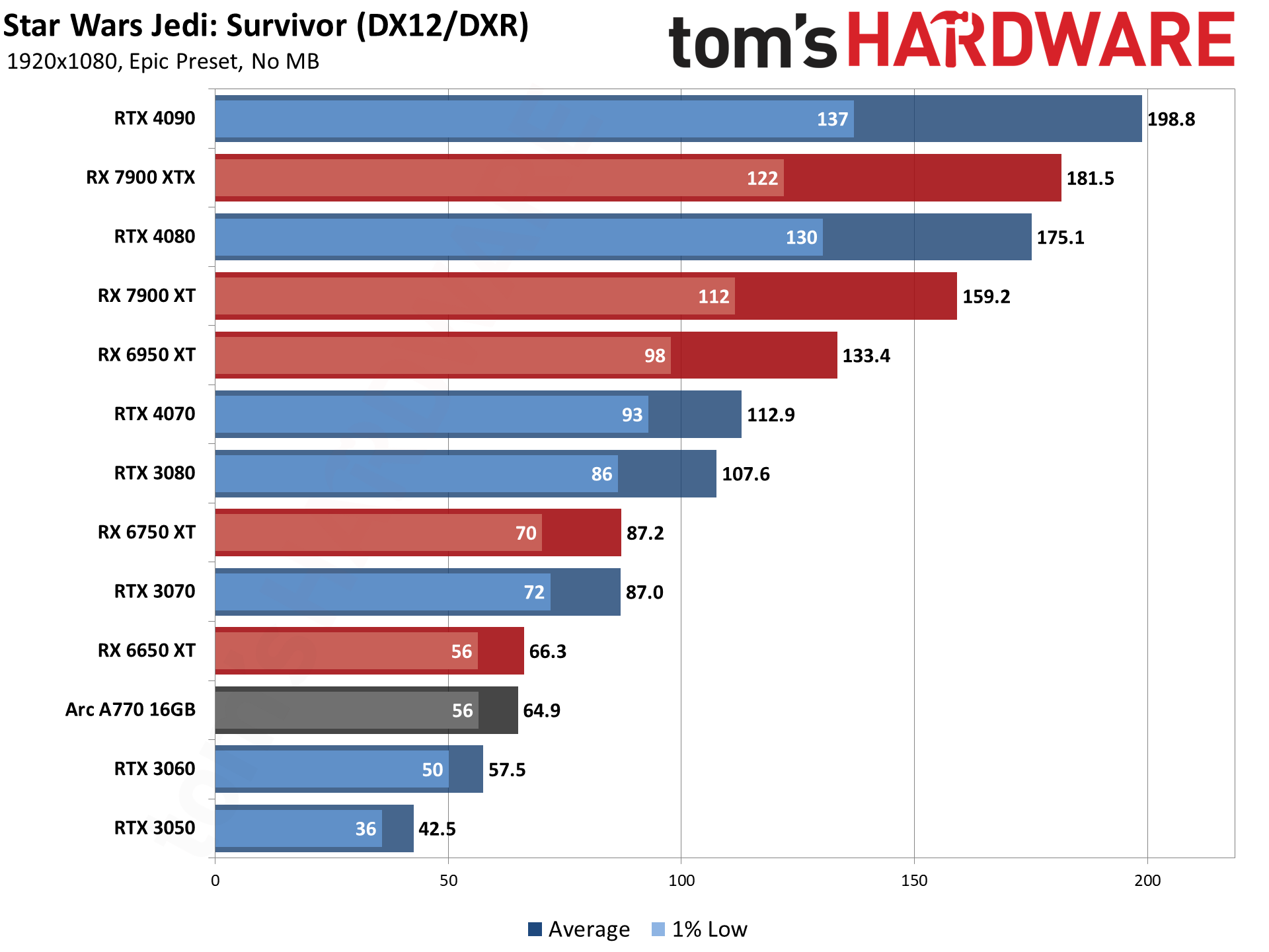

The jump from medium to epic quality causes a pretty massive performance hit, with only the RTX 4090 remaining close to the 200 fps limit. More critically, note that high-end GPUs like the RTX 4070 and RTX 3080 fall below 120 fps. If you're hoping to hit 144 fps or higher on your monitor, you'll need to lower some settings to high or else buy at least an RX 7900 XT / RTX 4080. You can imagine what will happen as we move up to 1440p epic...

At the bottom of the charts, the RTX 3050 now fails to come anywhere near 60 fps, and even the RTX 3060 comes up short. AMD's RX 6650 XT and Intel's Arc A770 16GB just barely edge past that mark, though Intel's current drivers have some rendering errors there.

The short summary for Intel Arc is that having Shadows on anything other than the low setting causes some graphics corruption, and turning on ray tracing also causes some severe rendering errors. Intel informed us it's aware of the problem and should have a fix with its next drivers. Most likely, changes in the latest patches and code caused some issues for Intel's drivers, as they're supposed to be "game ready."

As far as VRAM goes, the most recent patch seems to have fixed the problems with massive performance drops on modest GPUs. The RTX 3070 only has 8GB of VRAM, but it still stays above 60 fps and effectively matches the performance of the RX 6750 XT.

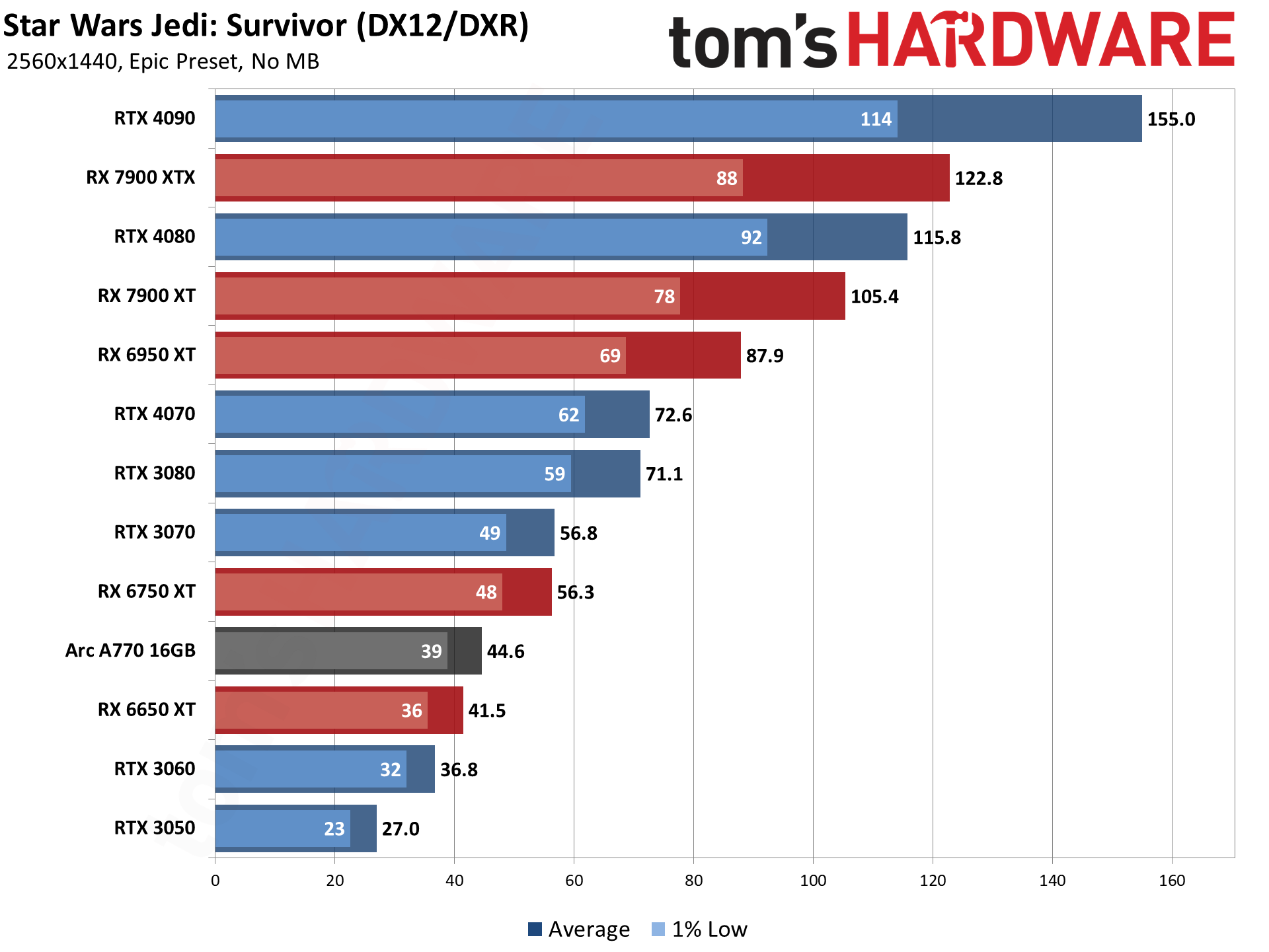

1440p epic now drops performance to 155 fps even on the RTX 4090. That's without any form of upscaling, and also without ray tracing, and we'll cover both of those in a bit. Given the game has a 200 fps limit, it's certainly not designed to run at extreme framerates.

If you're wanting to run Star Wars Jedi: Survivor at 1440p and at least 60 fps, you'll want an RTX 3080 / RTX 4080 or faster. While we didn't test it (due to the DRM limitations), we'd expect the RX 6800 XT to deliver similar performance to the RTX 3080.

Meanwhile, staying above 30 fps now requires at least an RTX 3060 or RX 6650 XT. (The RX 6600 would likely be borderline playable at these settings as well.) RTX 3050 clearly isn't sufficient for a good 1440p experience.

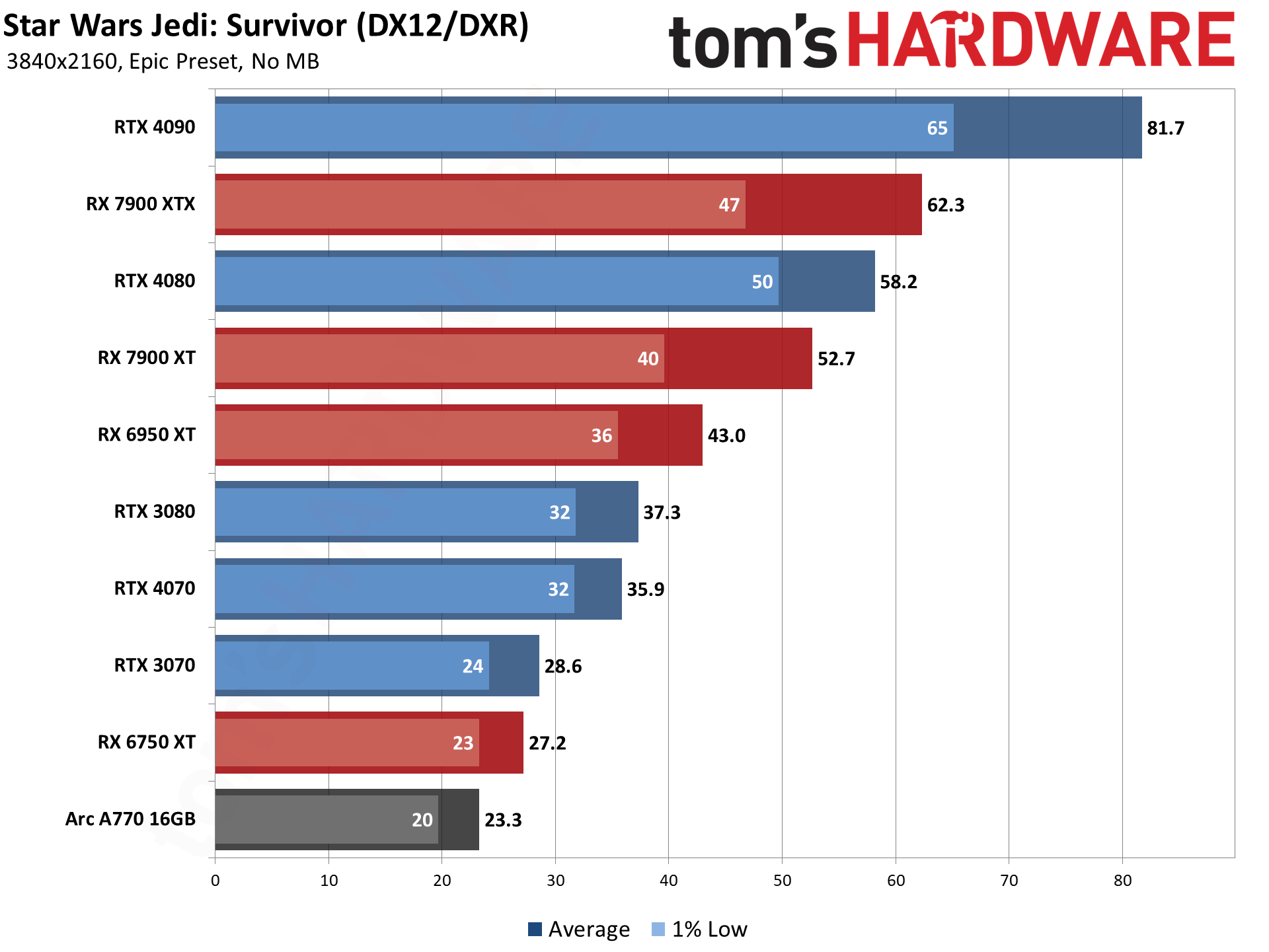

Finally, we have the 4K epic results. Considering the above benchmarks, the sub-60 fps results on most of the GPUs we tested shouldn't be too surprising. Some will call the game poorly optimized (and it clearly was at launch), but whatever the cause, only the RTX 4090 and RX 7900 XTX can break 60 fps at native 4K.

The RTX 3070 and RX 6750 XT now fall below 30 fps, and even the RTX 3080/4070 struggle to deliver a good experience. Dropping some settings to high would help, but you can also enable FSR2 upscaling. Before we get to the FSR2 results, however, we need to look at ray tracing.

Star Wars Jedi: Survivor Ray Tracing

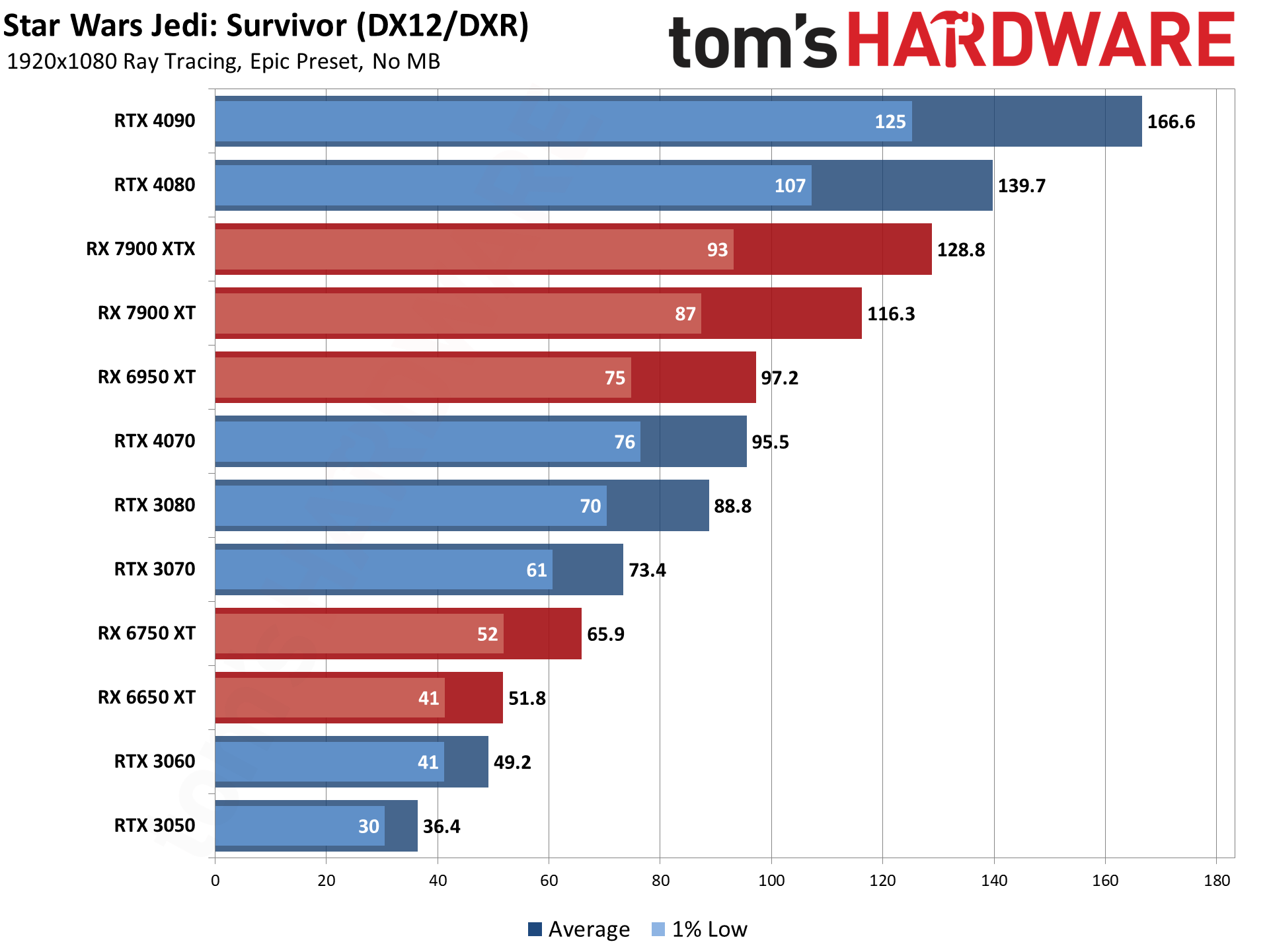

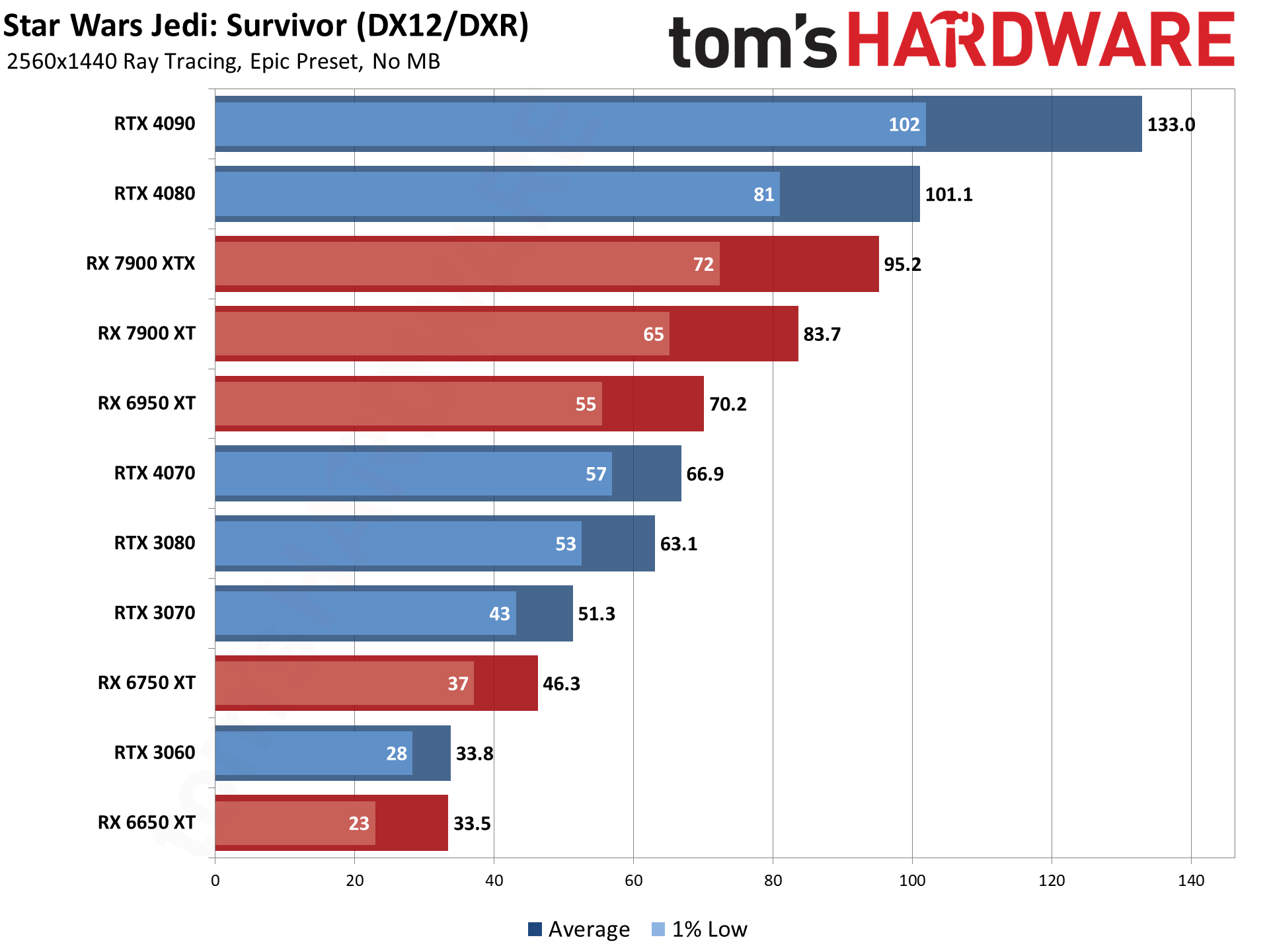

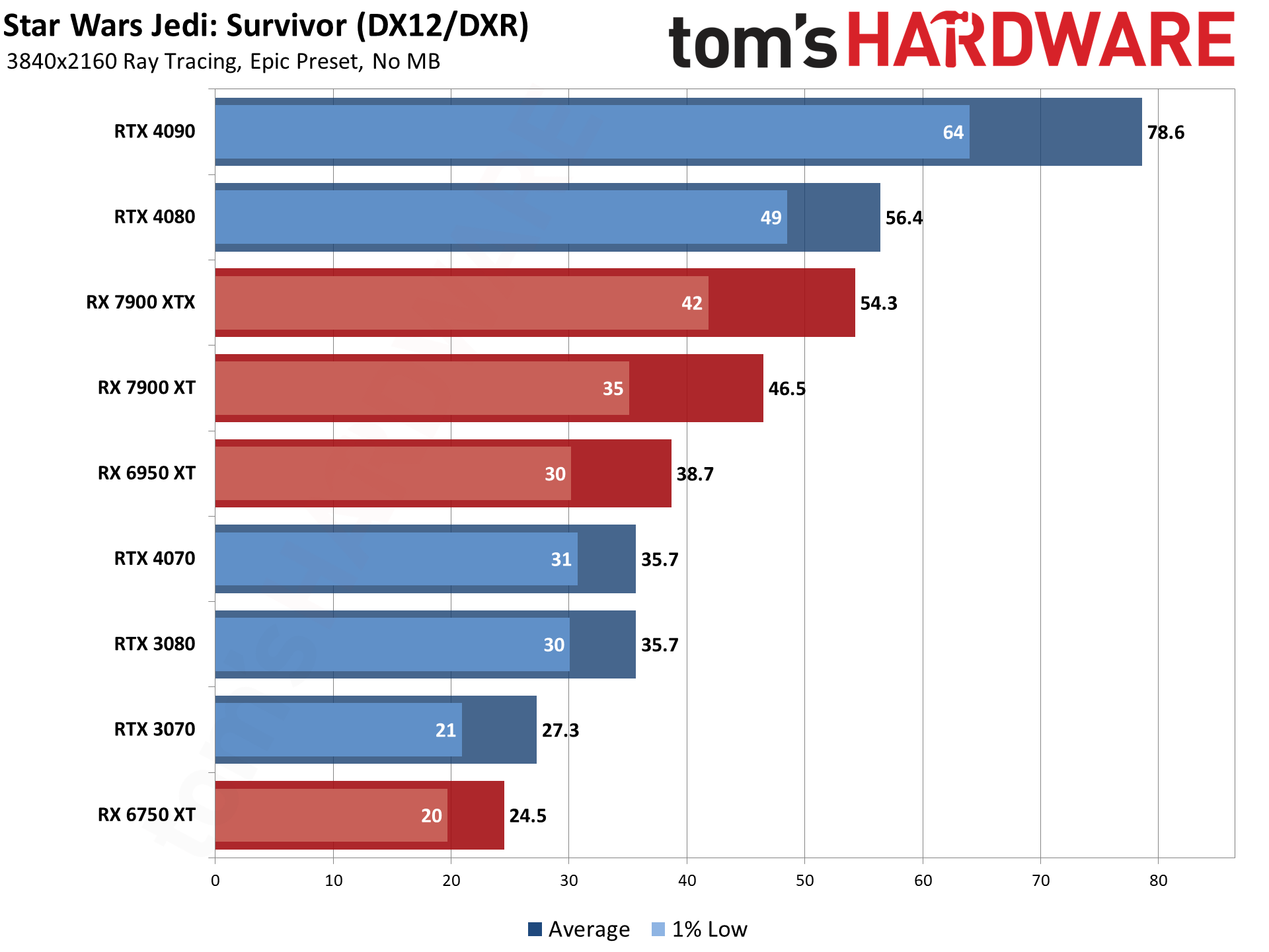

Love it or leave it, ray tracing is supported by Star Wars Jedi: Survivor. Depending on your graphics card, you'll see anywhere from a 10 to 30 percent drop in performance compared to having ray tracing off. One interesting side effect of ray tracing is that it can be less demanding on VRAM now, at least in some cases — the RTX 3050 for instance only drops by 14%, probably because it doesn't have to store as many high resolution shadow maps or something.

There's also the fact that Nvidia's ray tracing hardware is simply superior to AMD's solutions, so the AMD cards end up losing more like 25 to 30 percent, compared to 10 to 15 percent losses on the Nvidia side.

While there are definitely things that look better with ray tracing in Jedi: Survivor, it's most certainly not required to enjoy the game. The biggest improvement tends to be with things like the shadows under Cal's feet as well as some shadows on other objects (thanks to RTAO).

If you want to run Star Wars Jedi: Survivor with ray tracing turned on and epic quality — which is probably the only case where you'd consider it, as enabling RT while using a lower preset doesn't make much sense — you'll need at least an RX 6750 XT or RTX 3060 Ti for 60 fps at 1080p. For 1440p, the RTX 3080 will suffice, and probably an RX 6800 XT as well. 4K with maximum quality and ray tracing meanwhile can only break 60 fps on the RTX 4090. No surprise there.

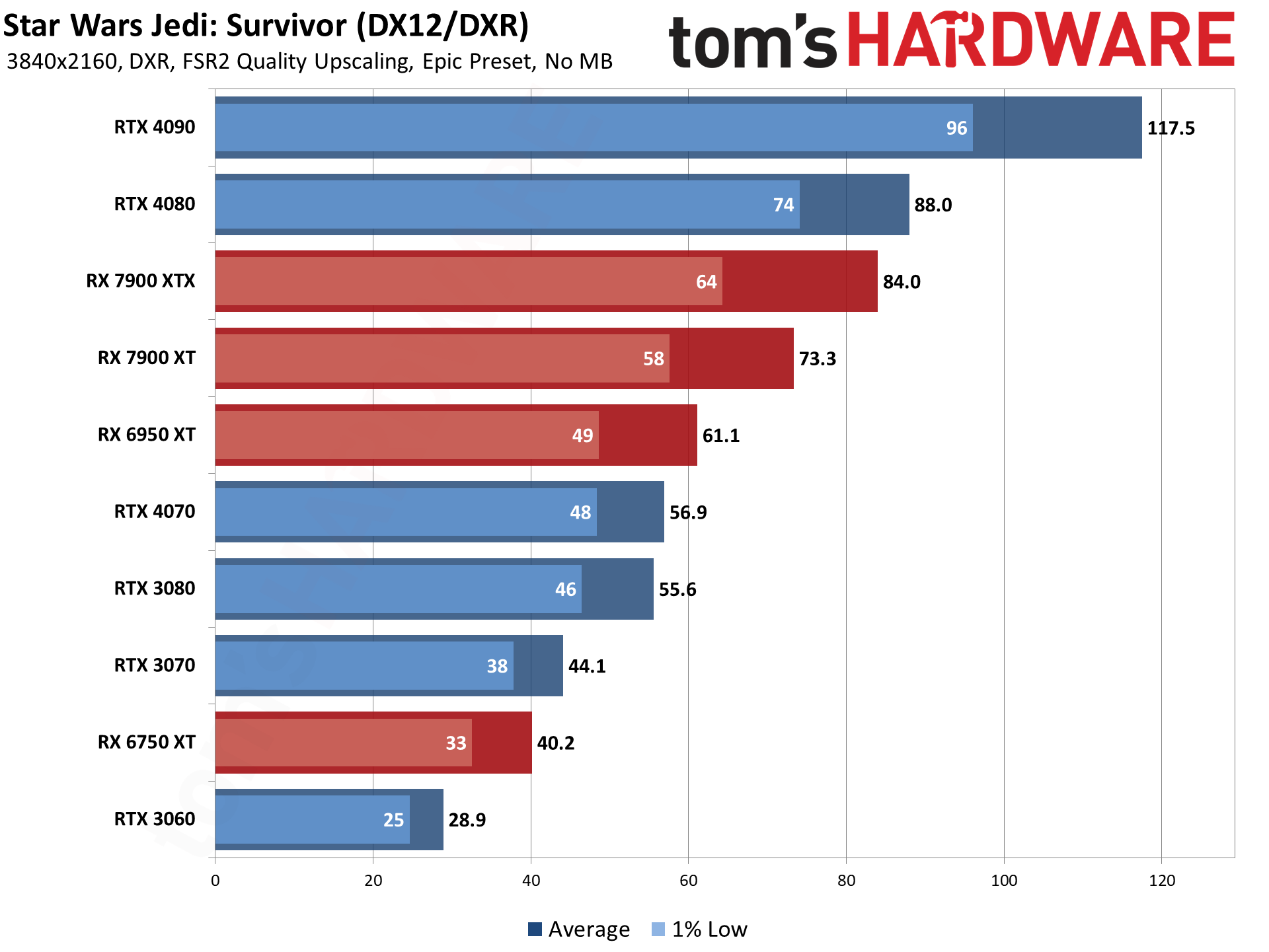

Star Wars Jedi: Survivor with RT and FSR2 Quality Upscaling

Native 4K with all the graphics settings maxed out isn't possible on most GPUs, but what about with upscaling? We used FSR2 Quality mode, which tends to look very close to native 4K in terms of image fidelity.

Even the mighty RTX 4090 gains a hefty 50% in performance thanks to FSR2. All of the other GPUs we tested improved by 55 to 65 percent with FSR2 Quality mode. And if you want even higher performance at the cost of some image fidelity, bumping to Balanced or Performance mode upscaling can increase framerates.

Quality mode upscaling wasn't enough to get the RTX 3060 above 30 fps, but the RX 6750 XT and above are now at least playable. The RX 6950 XT and above even manage to clear 60 fps, though really, you'd want at least the RX 7900 XT for a mostly stable 60 fps.
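To put the upscaling gains in context, here's a rough sketch of the internal render resolution behind each FSR2 mode. The per-axis scale factors (1.5x for Quality, 1.7x for Balanced, 2.0x for Performance) come from AMD's FSR 2 documentation; the function name and the rounding are our own assumptions, and the game may round slightly differently.

```python
# Per-axis scale factors from AMD's published FSR 2 quality modes.
FSR2_SCALE = {"Quality": 1.5, "Balanced": 1.7, "Performance": 2.0}


def fsr2_render_resolution(out_width, out_height, mode):
    """Approximate internal render resolution for an output size and FSR 2 mode."""
    scale = FSR2_SCALE[mode]
    # Rounding to the nearest pixel is an assumption; games may round differently.
    return round(out_width / scale), round(out_height / scale)


for mode in FSR2_SCALE:
    w, h = fsr2_render_resolution(3840, 2160, mode)
    share = (w * h) / (3840 * 2160)
    print(f"{mode}: {w}x{h} ({share:.0%} of native 4K pixels)")
```

At a 4K output, Quality mode renders internally at 2560x1440, well under half the pixels of native 4K, which goes a long way toward explaining the size of the gains.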

Star Wars Jedi: Survivor CPU Requirements

We did not perform a ton of CPU tests with Star Wars Jedi: Survivor, and in fact we only tested one other CPU: a Core i9-9900K. This was done because the CPU does appear to be a significant factor in some areas of the game (especially Koboh village). Here's the quick summary of results using an RTX 4070 Ti.

| Test area (settings) | Average (fps) | 1% Low (fps) |
|---|---|---|
| Koboh Wilds (1080p Med) | 147.8 | 109.7 |
| Koboh Wilds (1080p Epic) | 133.6 | 95.8 |
| Koboh Wilds (1440p Epic) | 91.6 | 64.6 |
| Koboh Town (1080p Med) | 74.7 | 37.0 |
| Koboh Town (1080p Epic) | 68.4 | 32.8 |
| Koboh Town (1440p Epic) | 60.9 | 32.3 |

Now, I realize we didn't do the other tests with the RTX 4070 Ti, but it should land roughly halfway between the 4070 and 4080. In our main test area, performance at 1080p medium was at least 25% lower — the 4070 and 4080 hit the 200 fps limit on the 13900K, while the 9900K only got 148 fps. Minimum fps was also over 20% lower.

Bumping to 1080p epic, things look a lot more GPU limited for the Koboh wilds, at least for average fps. The same goes for 1440p epic, which logically would be even more GPU limited — 92 fps is probably pretty close to what we'd get on the i9-13900K with the RTX 4070 Ti.

We've also included Koboh village testing results just to show what is probably the worst-case scenario for Star Wars Jedi: Survivor. Even at 1080p medium, the 9900K only managed 75 fps, and that dropped to 68 fps with 1080p epic. If you're running a CPU that's several generations old, you'll definitely encounter some slowdowns in the village area.
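The percentages above are easy to recompute from the table. Here's a small sketch that does so; the data is copied from the 9900K results, while the helper name and dictionary layout are ours for illustration:

```python
# Core i9-9900K + RTX 4070 Ti results from the table: (average fps, 1% low fps).
RESULTS_9900K = {
    ("Wilds", "1080p Med"): (147.8, 109.7),
    ("Wilds", "1080p Epic"): (133.6, 95.8),
    ("Wilds", "1440p Epic"): (91.6, 64.6),
    ("Town", "1080p Med"): (74.7, 37.0),
    ("Town", "1080p Epic"): (68.4, 32.8),
    ("Town", "1440p Epic"): (60.9, 32.3),
}

FPS_CAP = 200.0  # the in-game framerate limit noted earlier


def percent_lower(value, reference):
    """How far value falls below reference, as a percentage of reference."""
    return (reference - value) / reference * 100.0


wilds_avg, _ = RESULTS_9900K[("Wilds", "1080p Med")]
print(f"9900K vs. 200 fps cap at 1080p medium: {percent_lower(wilds_avg, FPS_CAP):.1f}% lower")

for settings in ("1080p Med", "1080p Epic", "1440p Epic"):
    wilds, _ = RESULTS_9900K[("Wilds", settings)]
    town, _ = RESULTS_9900K[("Town", settings)]
    print(f"{settings}: town average is {percent_lower(town, wilds):.1f}% below the wilds")
```

On these numbers, the 9900K sits about 26% below the 200 fps cap at 1080p medium, and the town area roughly halves the average framerate at 1080p.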

Star Wars Jedi: Survivor Closing Thoughts

Launch performance for Star Wars Jedi: Survivor was more than a bit questionable. Even with the latest patch in place, this is a demanding game. Don't go into it expecting to get 60 fps at 4K native with maxed-out settings and ray tracing, unless (maybe) you have an RTX 4090 and a top-tier CPU. Even then, places like Koboh village are going to stutter quite a bit. But at least based on our experience, the game runs decently if you temper your expectations in terms of settings and resolution.

And hey, it's May the Fourth, so if you're a Star Wars fan and haven't had a chance to try the game yet, maybe now is the time to take the plunge. You'll still want to pay attention to the recommended hardware, particularly the 8GB VRAM if you intend to run high or epic settings. Once we've got a chance to test a few other cards, we'll see about updating the charts with some 4GB and 6GB GPUs just to see what happens if you fall below the recommendations.

Image quality drops off quite a bit at medium rather than epic settings, but the high preset gets you most of epic without pounding your hardware as hard. Also, don't expect every area of the game to play perfectly smoothly, especially right when you load into a new area. On slower hardware, you'll definitely get some stuttering when new data needs to load from storage.

Outside of technical considerations, Star Wars Jedi: Survivor is proving to be enjoyable, at least to those of us on the Tom's Hardware staff who have tried it. (Shout out to Aaron for letting me borrow his account to get a few additional GPUs tested. And shame on EA for still putting in stupid DRM limits like the number of GPUs / PCs in a 24-hour period.) If you liked the previous installment, Star Wars Jedi: Fallen Order, it probably goes without saying that the sequel is more of the same. Our sister site PC Gamer scored the game an 80 if you're wondering, and we feel that's a pretty fair assessment.

The graphics are good and, at times, great, letting you explore new environments and interact with the Star Wars universe via a traditional third person platformer game. And yes, you get to wield a lightsaber and customize its appearance as you see fit, using force powers and all the other trimmings. If that's what you're looking for and you have a reasonably capable PC, you should be happy.

If you don't have a high-end PC, feelings are likely to be far more mixed. We haven't tried it on a system with 16GB of RAM, never mind 8GB. We haven't tried it running from a hard drive instead of an SSD. We didn't try it on a minimum-spec RX 580 or GTX 1070. Give us time, and we can look at those as well. So sound off in the forums to let us know what other GPUs you'd like us to test. But the bottom line is that you're not going to get the full Star Wars Jedi: Survivor experience on PC if your hardware is just scraping by — certainly not if you attempt to run at epic quality settings.

- MORE: Best Graphics Cards

- MORE: GPU Benchmarks and Hierarchy

- MORE: All Graphics Content

Jarred Walton is a senior editor at Tom's Hardware focusing on everything GPU. He has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.

JarredWaltonGPU

So, a few quick notes here, in case anyone skims the article.

No area represents the whole game, and it's impossible to test every hardware and software combination people are running. On our "extreme" test PC, the game generally runs fine. We don't have a bunch of other background tasks running, we don't have esoteric hardware and drivers installed, etc. This is a clean Windows install — not clean as in "brand new" but clean as in I don't install a bunch of cruft. Windows Defender is off, Virtualization Based Security is off, etc.

Because I run through an area multiple times while testing, stuttering caused by loading in new data isn't really an issue. The first run (that's discarded) gets everything into memory, and the subsequent runs generally don't stutter. If you're playing the game and running around between areas, you'll still get some stutters; how bad they are depends at least in part on your storage speed and other hardware.

"You didn't test on different CPUs!" I know. I'm not our CPU tester. This is only a look at GPUs while using a system that should remove all other bottlenecks as much as possible. (Yeah, Ryzen 7 7800X3D or Ryzen 9 7950X3D might be faster; I don't have one of those for testing either.)

"What about graphics card XYZ!?" I'll tell you what, if you post a GPU you want tested and get five people to like the comment (one GPU per comment!) I'll see about adding it to my test list. (Don't try to game the system.) The DRM means I can't possibly test more than eight GPUs per day, and honestly I don't want to do a ton more testing of this game. It's not going into my staple of benchmarks, in other words. Plus, give it another week and we'll probably have another patch that alters performance, hopefully for the better, which means these results are a snapshot in time.

That's all for now. Happy Star Warsing and May the Fourth be with you, alwayth.

luissantos

The most interesting thing for me would've been to see a chart comparing the pre-patch and post-patch performance, TBH. Right now I'm just looking at the numbers in a vacuum.

Also, I've seen some videos online (from Hardware Unboxed and others) where they show nVidia cards suffering from severe texture popping, meaning that raw FPS numbers no longer paint an accurate picture just by themselves, particularly when VRAM becomes the limiting factor. Would love to see this addressed in future performance comparison articles as well.

JarredWaltonGPU (replying to luissantos)

I chalk some of that up to guerilla marketing. AMD has pushed multiple games with higher than usual VRAM requirements, and often you'll get major outcry about poor performance on Nvidia (especially at launch) when that happens, anything to paint Intel or Nvidia in a bad light. The Last of Us Part 1, some of the Resident Evil games, Godfall, God of War... The list goes on. There are a lot of games where I can't help but question the "need" to push VRAM use beyond 8GB, particularly when the visual upgrades provided aren't actually noticeable.

That an AMD-promoted game had issues with Nvidia hardware at launch, and then those issues got (mostly/partially) fixed with driver and game updates within a week, is the real story IMO. We've seen the reverse as well, though not so much recently that I can think of unless you count Cyberpunk 2077 RT Overdrive. Actually, you could probably make the claim of Nvidia pushing stuff that tanks performance on AMD GPUs for any game that uses a lot of ray tracing, though in some cases the rendering does look clearly improved.

Part of this is probably also a rush to release the game ahead of May the 4th. Or at least that's the only thing that I can come up with. Was there any good reason to ship the game in a poorly running state, rather than spend a few more weeks? Or maybe EA just didn't realize how bad things were, and releasing it to the public provided the needed feedback. Whatever.

I did not get early access or launch access, so the first time I tried the game was with the patches already available (and there's no way with Steam/EA to stay with the original launch version). Aaron however has been playing on an RTX 2060 Super at 3440x1440 with FSR2 Quality and a mix of ~high settings since launch and hasn't had too many issues. If you try maxing out settings on hardware that can't really handle those settings, don't be surprised if performance is poor.

KyaraM

Which patch are you referring to and which area of Koboh if I may ask? Unfortunately, with the patch from Monday, Koboh is still not running all that well on my system with a 4070Ti so these results surprise me. Really can't confirm getting such high FPS anywhere near the settlement. Though the result for the 3070 with RT in 1080p looks a little lower than what I got pre-patch on my 3070Ti (55-56 FPS average around Koboh settlement, same for the 4070Ti in 1440p, ironically, even though that one also has a stronger CPU partner).

As for GPUs, personally I would have liked to see the 4070Ti. Ada has a big enough segmentation to warrant dropping a low-end card for, imho.

kerberos_20

fps looking fine, can confirm with rx6800 (non XT) 60 fps with vsync running fine 1440p epic no rt, there maybe few areas which drops fps a little to 50s, but that is cpu bottleneck (2 cpu threads maxed, 3rd thread with denuvo still have spare resources), most areas runs fine up to 8core load, but some just doesnt want to scale up

jeremyj_83

Is it just me or do newer RT games seem to not have the massive performance drop on Radeon GPUs as they did earlier? AMD's RT implementation isn't as fast as nVidia's but to me it doesn't look as bad as it did in 2020 when the 6000 series launched.

atomicWAR (replying to KyaraM)

I think there is some luck of the draw in hardware configs. I am running a RTX 4090 on a X670E Taichi with a 7950X, 64GB of DDR5 6000 CL 30 and my numbers match up well with Jarred's. Yet when I poke around the Steam forums there are still users having issues with low frame rates and/or bad frame times. Regardless many of us saw an over doubling of frame rate with frame times smoothing out as well in just three days time. While the game never should have been released in the fashion it was, how fast they released a patch that fixed many of the most glaring issues gamers were facing was nothing short of impressive. Now they need to focus on making these fixes work with more hardware configs.

cknobman

It really annoys me how everyone always complains about EA, their business practices, and crap product releases; YET THEY STILL BUY THE GAMES AT RELEASE (or shortly after).

Companies could care less about people who complain but still buy products.

It's insanity TBH. Complain, still buy, then hope for change.

Change will only happen when consumers voice with their wallet.

Makaveli

"If you liked the previous installment, Star Wars Jedi: Outcast"

I do not believe this was the last game in this series.

Should be this below.

Star Wars Jedi: Fallen Order

JarredWaltonGPU said: "You didn't test on different CPUs!" I know. I'm not our CPU tester. This is only a look at GPUs while using a system that should remove all other bottlenecks as much as possible. (Yeah, Ryzen 7 7800X3D or Ryzen 7950X3D might be faster; I don't have one of those for testing either.)

JarredWaltonGPU said: Star Wars Jedi: Survivor Closing Thoughts. Launch performance for Star Wars Jedi: Survivor was more than a bit questionable. Even with the latest patch in place, this is a demanding game. Don't go into it expecting to get 60 fps at 4K native with maxed-out settings and ray tracing, unless (maybe) you have an RTX 4090.

It has performed exceptionally on my 7950x3D with 4090... epic preset with RT. 60 fps at 4K native and any frame drops I've seen were down to like 55... nothing big.

Haven't played in a couple days so I have to download the patch but I haven't had any complaints thus far.

atomicWAR said: I think there is some luck of the draw in hardware configs. I am running a RTX 4090 on a X670E Taichi with a 7950X, 64GB of DDR5 6000 CL 30 and my numbers match up well with Jarred's.

Now they need to focus on making these fixes work with more hardware configs.

Yeah we have similar configs. Hopefully they'll get it squared away for lesser systems.