AMD Radeon RX 580 8GB Review

Power Consumption

Power Consumption Overview

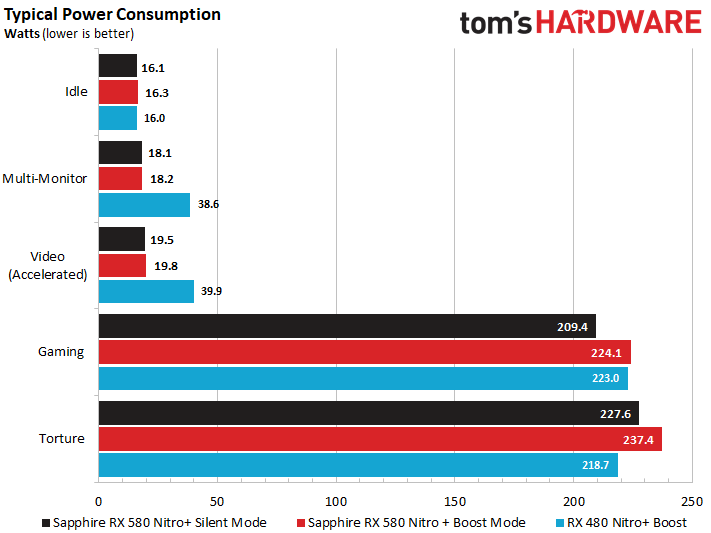

Our exploration of power consumption begins with a look at the loads while running different tasks. After all, this is where AMD made the most headway with Radeon RX 580.

Specifically, power consumption is way down with multiple monitors attached, so long as you're running them all at the same resolution. Moreover, power use drops while watching video using hardware acceleration. An aggressively overclocked card like Sapphire's Nitro+ Radeon RX 580 Limited Edition set to its O/C mode really shows off the effect of the lower GPU and memory clock rates used in these scenarios.

Of course, it'd be great to see AMD roll these enhancements out to older Polaris-based cards via firmware update, but it sounds like the company has no plans to do this.

At 1411 MHz in silent mode and 1450 MHz in boost mode, you're talking about much higher clock rates than Ellesmere was originally set to run at. Impressively, Sapphire enables these frequencies without using more power than the Radeon RX 480. In our gaming loop, its Nitro+ Radeon RX 580 operates at an almost-constant 1450 MHz, whereas the previous-gen card was limited to 1350 MHz and used just as much power. One explanation for this is lower leakage current. A much better cooling solution helps keep GPU temperatures down, and we estimate this provides an advantage of ~10W.

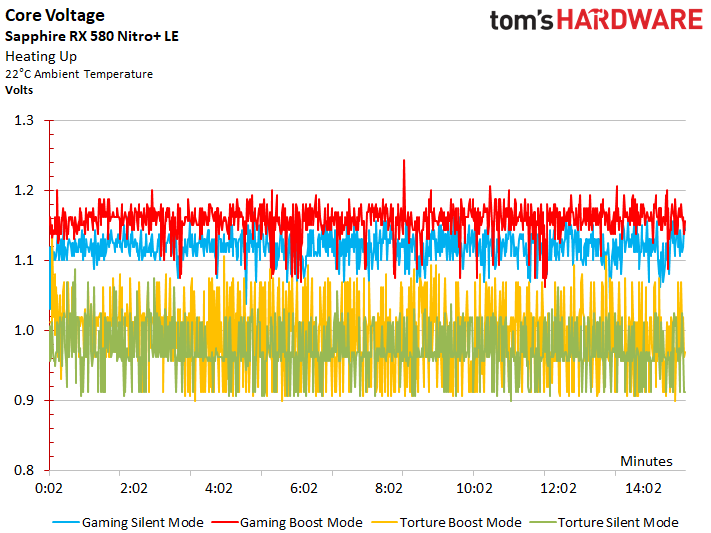

The Radeon RX 580's higher frequency does come at a price, though: it requires a higher voltage setting.

Sapphire's Nitro+ Radeon RX 480 averages 1.15V and peaks at 1.1563V. Those numbers increase to an average of 1.1688V and a peak of 1.19V for the company's Nitro+ Radeon RX 580 Limited Edition, creating more power loss. This doesn't show up in the graph, though, because of the RX 480 card's much higher leakage current.
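To get a rough feel for what the higher voltage costs, the common first-order approximation that dynamic power scales with frequency times voltage squared can be applied to the averages above. This is only a sketch under stated assumptions: it ignores leakage current entirely (which, as noted, works in the RX 580's favor here), it assumes the 1350 MHz and 1450 MHz gaming-loop clocks belong to the same cards as the quoted voltages, and the function name is ours, chosen for illustration.

```python
def relative_dynamic_power(f_old_mhz, v_old, f_new_mhz, v_new):
    """Ratio of new to old dynamic power, assuming P is proportional to f * V^2.

    This is a first-order approximation; leakage and static power are ignored.
    """
    return (f_new_mhz / f_old_mhz) * (v_new / v_old) ** 2


# Average voltages and gaming-loop clock rates quoted in the text.
# Assumption: both sets of numbers describe the same two Nitro+ cards.
ratio = relative_dynamic_power(
    f_old_mhz=1350, v_old=1.15,     # Nitro+ Radeon RX 480
    f_new_mhz=1450, v_new=1.1688,   # Nitro+ Radeon RX 580 Limited Edition
)

print(f"Estimated dynamic power ratio (RX 580 / RX 480): {ratio:.3f}")
```

Under these assumptions, the estimate lands at roughly a tenth more dynamic power for the RX 580, which is in the same ballpark as the ~10W leakage advantage we attribute to the better cooler. Treat it as a plausibility check, not a measurement.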

A Closer Look at Silent Mode

The Nitro+ Radeon RX 580 Limited Edition ships with a silent mode clock rate of 1411 MHz, which matches the boost mode setting of Sapphire's non-Limited Edition model. Consequently, our measurements should apply to that card as well.

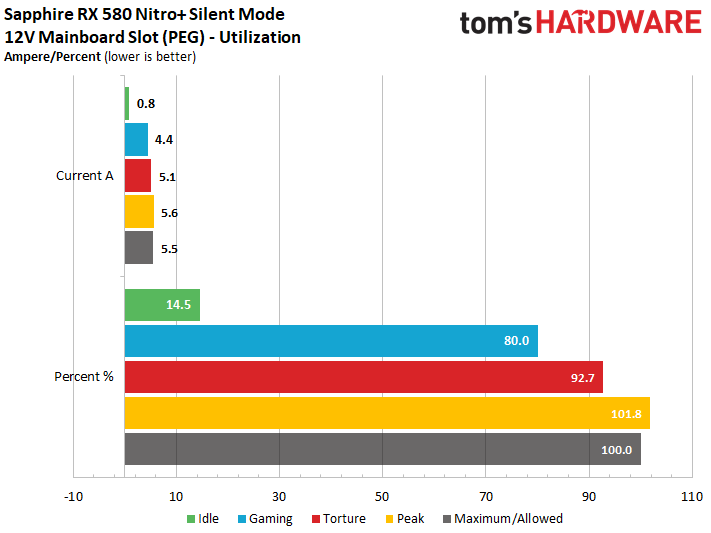

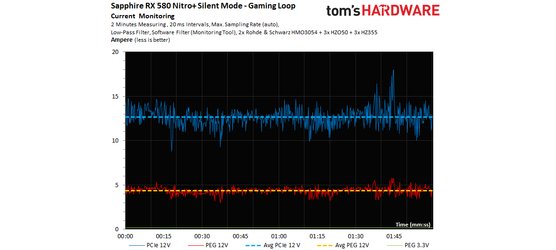

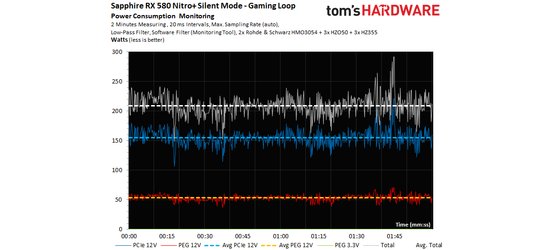

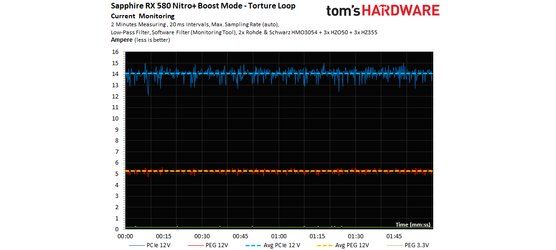

First, we present power consumption during our gaming loop:

The rails are balanced fairly well, though there are some brief peaks that exceed the motherboard slot’s maximum rating of 5.5A.

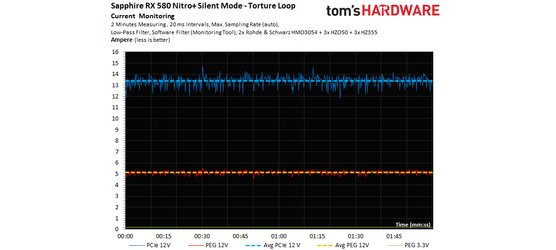

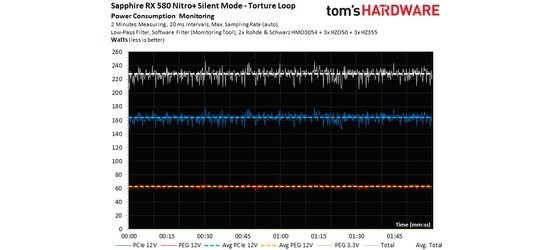

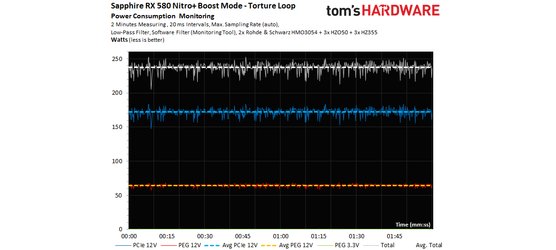

During our stress test, all of the numbers go up (some of them significantly). Now the motherboard slot consistently nudges up to the PCI-SIG specification's limits.

Illustrating the motherboard slot’s results with a bar graph shows just how close to the limit Sapphire gets with its Nitro+ Radeon RX 580 Limited Edition. The peaks aren’t particularly meaningful since they're brief; it's the constant loads you want to focus on. Those stay well within a safe range.
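The difference between brief peaks and constant loads can be made concrete with a small sketch. The snippet below is not our measurement pipeline: the current samples are made up for illustration, the five-sample window is an arbitrary choice, and the helper names are hypothetical. Only the 5.5A rating comes from the text, and we assume it refers to the slot's 12V rail.

```python
SLOT_12V_LIMIT_A = 5.5   # slot rating cited in the text
SLOT_RAIL_V = 12.0       # assumption: the rating applies to the slot's 12V rail


def rolling_means(samples, window):
    """Return the mean of every run of `window` consecutive samples.

    Expects len(samples) >= window.
    """
    return [
        sum(samples[i:i + window]) / window
        for i in range(len(samples) - window + 1)
    ]


def summarize(samples, window, limit=SLOT_12V_LIMIT_A):
    """Compare the highest single sample and the highest rolling mean to the limit."""
    peak = max(samples)
    sustained = max(rolling_means(samples, window))
    return {
        "peak_a": peak,
        "sustained_a": sustained,
        "peak_exceeds": peak > limit,
        "sustained_exceeds": sustained > limit,
    }


# Made-up slot current samples in amperes, NOT measured data.
samples = [5.0, 5.1, 6.2, 5.0, 4.9, 5.2, 6.0, 5.1, 5.0, 4.8]
result = summarize(samples, window=5)

print(f"Limit in watts: {SLOT_12V_LIMIT_A * SLOT_RAIL_V:.0f} W")
print(f"Peak: {result['peak_a']:.2f} A, exceeds limit: {result['peak_exceeds']}")
print(f"Sustained: {result['sustained_a']:.2f} A, exceeds limit: {result['sustained_exceeds']}")
```

With these invented samples, individual spikes cross the 5.5A line while the averaged load stays below it, which is the pattern described above.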

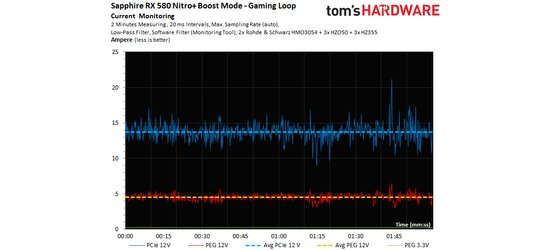

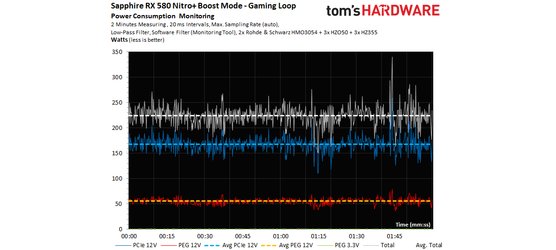

A Closer Look at Boost Mode

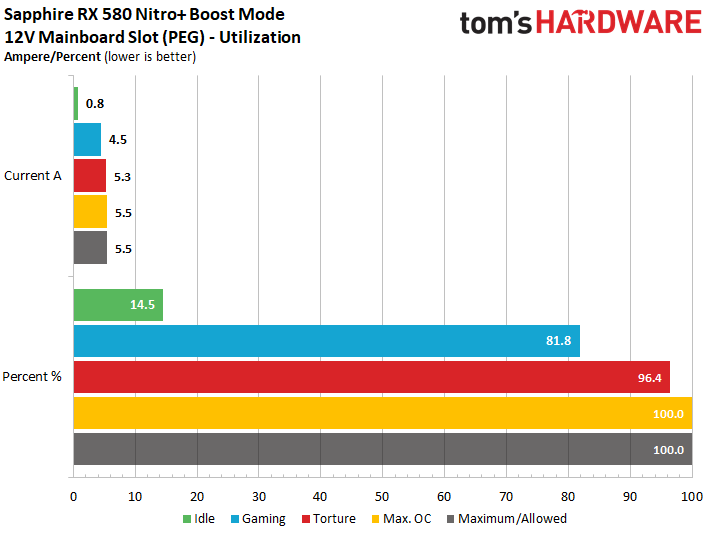

Switching over to boost mode pushes the Nitro+ Radeon RX 580 Limited Edition to a GPU clock rate of 1450 MHz. That's a big step up from the vanilla version's ceiling, but it should still represent what you might see after some manual overclocking. With this in mind, let’s take a look at power consumption during our gaming loop:

The rails are still balanced fairly well, even though we observe short peaks that exceed the motherboard slot’s maximum rating of 5.5A once again.

Again, our results increase significantly during the stress test, with the motherboard slot’s power consumption hovering around the PCI-SIG specification's limit.

The motherboard slot’s load doesn't increase, since the GPU is driven by the auxiliary power connectors. However, our numbers with manually overclocked memory, which is supplied by the PCIe slot, show Sapphire toeing the line.

Power Savings with Chill

AMD is making a big deal of its Chill technology with Radeon RX 580. This isn't new, though. Check out Benchmarking AMD Radeon Chill: Pumping The Brakes On Wasted Power from last December if you want more information.

Comparing To GeForce GTX 1060, 1070, and 1080

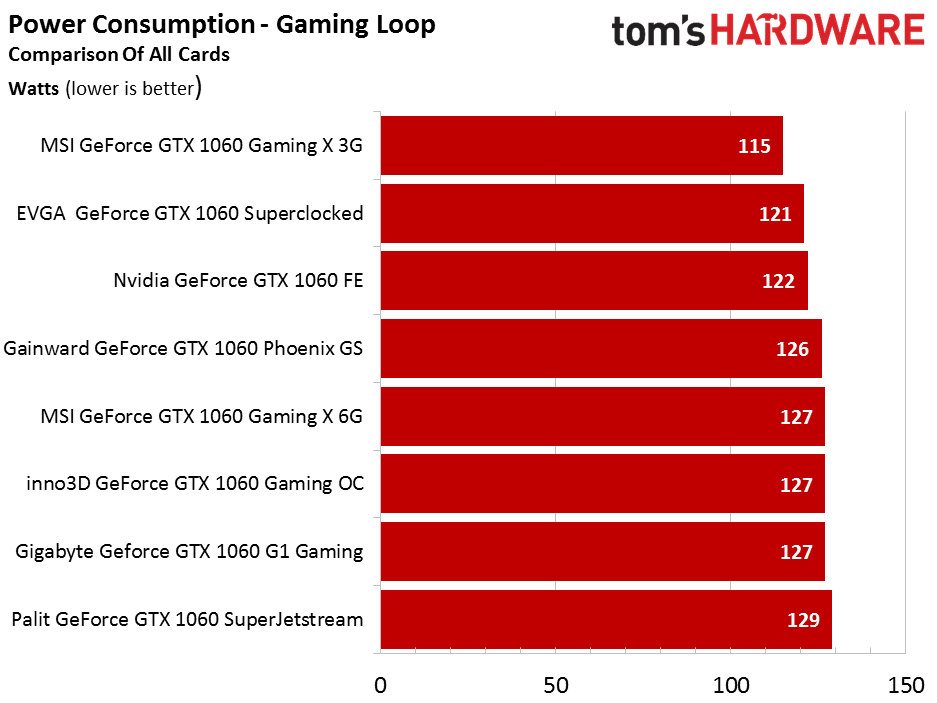

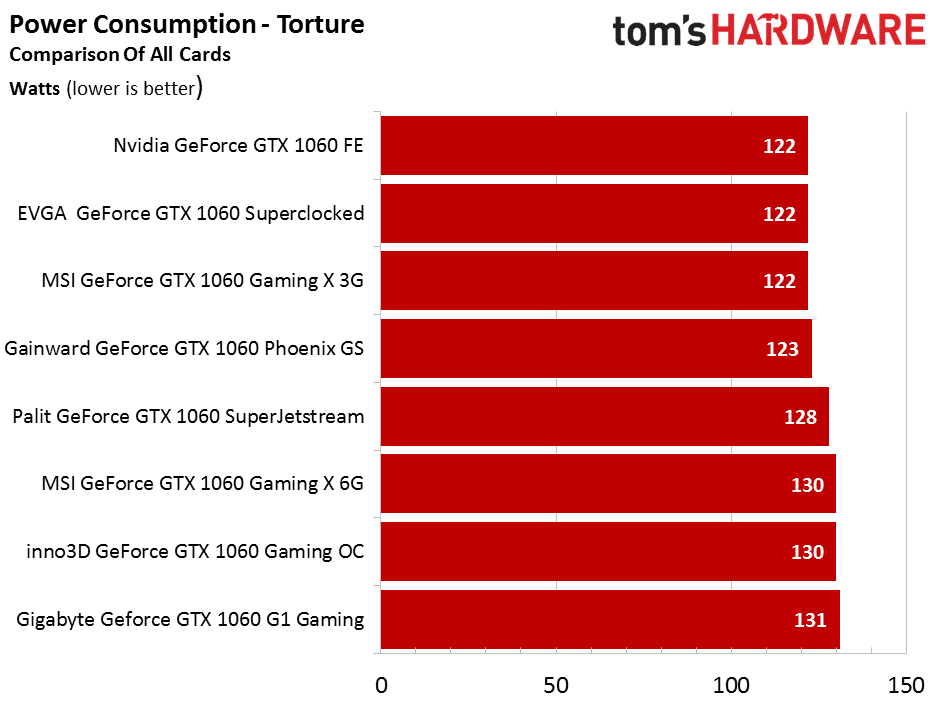

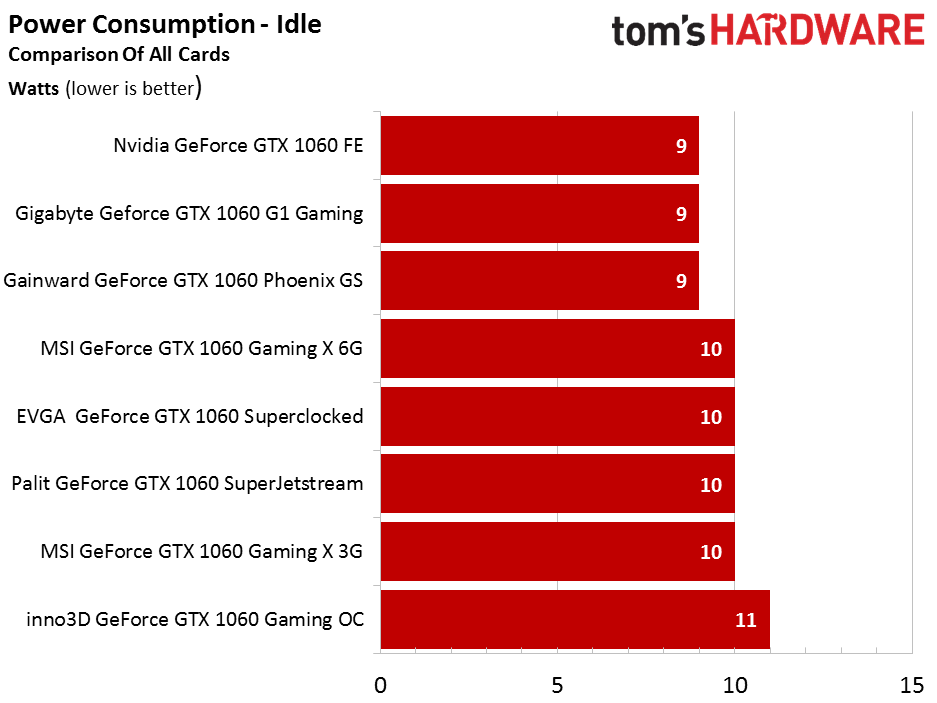

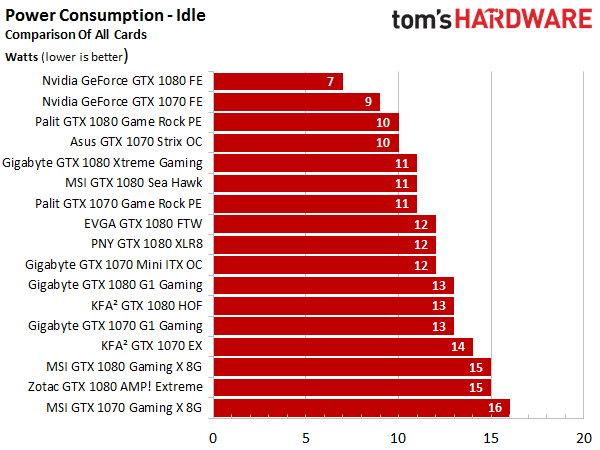

For a point of comparison to Radeon RX 580, check out the following power consumption measurements from several GeForce GTX 1060s. They fall well below Sapphire's overclocked board.

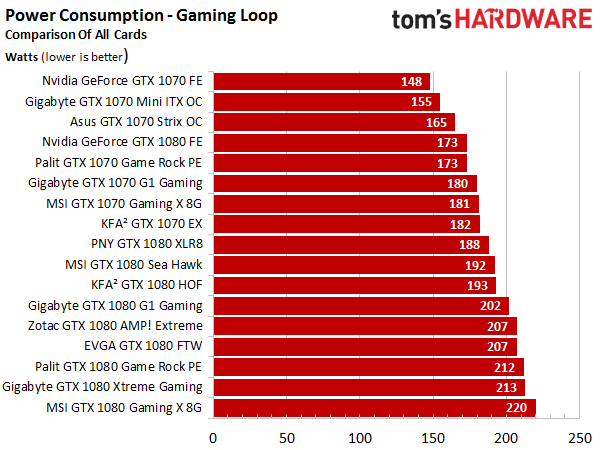

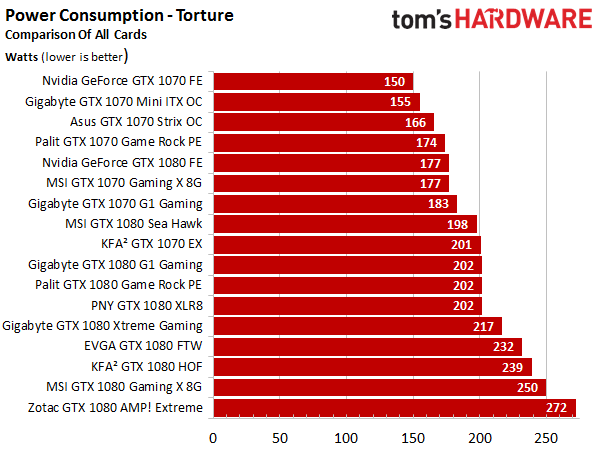

Why is it necessary to throw this information in as a postscript? Well, we think it's pretty important to mention that Sapphire's Nitro+ Radeon RX 580 Limited Edition uses as much power as overclocked third-party GeForce GTX 1080s in our gaming loop.

Bottom Line

The Radeon RX 580 shows us that when finesse isn't in the cards, brute force works, too. Dialing in a higher core voltage (and enduring its higher power losses) is the price you pay for a few extra megahertz of GPU clock rate. The improvements to get excited about are found elsewhere: AMD's Radeon RX 580 demonstrates lower power consumption in multi-monitor configurations and hardware-accelerated video playback thanks to a new memory clock state.

Igor Wallossek wrote a wide variety of hardware articles for Tom's Hardware, with a strong focus on technical analysis and in-depth reviews. His contributions have spanned a broad spectrum of PC components, including GPUs, CPUs, workstations, and PC builds. His insightful articles provide readers with detailed knowledge to make informed decisions in the ever-evolving tech landscape.

max0x7ba: Battlefield 1 2560x1440, Ultra benchmark Radeon RX 580 minimum fps does not look right.

lasik124: Unless I am really missing something, can't you just slightly overclock the 480 to match the slight performance boost the 580 has?

FormatC:

> Battlefield 1 2560x1440, Ultra benchmark Radeon RX 580 minimum fps does not look right.

Take a look at the frametimes at the start. I think it's a driver issue, because it was reproducible ;)

> Unless I am really missing something, can't you just slightly overclock the 480 to match the slight performance boost the 580 has?

No, it was less than 1375 MHz in each case. That is slower than the silent mode of this 580, and simply too hot for my taste. The problem is not the predefined clock rate itself but the real clocks, which PowerTune reduces due to temperature and voltage/power limitations ;)

lasik124: So I guess what I'm trying to ask is: is it worth buying a 580 (I'm currently on a 7870), or should I save a couple of bucks, pick up a 480 with a non-reference cooler, and slightly overclock it to get in-game benchmarks similar, if not nearly identical, to the current 580?

Math Geek: no, you'll get the 480 numbers with a 480. the tested card was already oc'ed and you won't get any better manually. the changes made to the 580 can't be done to the 480.

want the extra few fps, then you'll want to get a 580.

Tech_TTT: I don't get it. For sure AMD could have released this card as the original RX 480 long ago. Why did they allow Nvidia's GTX 1060 to steal the RX 480's share?

Very stupid strategy ... I know a lot of people who bought a GTX 1060 and wished AMD were better, just to take advantage of the cheaper FreeSync monitors.

AMD, you lost millions of buyers!!! For nothing!!!

turkey3_scratch: Don't think it's as simple as you make it out to be. They're a huge company with a ton of professionals, they know what they're doing.

madmatt30:

> I don't get it. For sure AMD could have released this card as the original RX 480 long ago. Why did they allow Nvidia's GTX 1060 to steal the RX 480's share?

Better binning, refinements on the power circuitry: something that's come with time after the initial production runs of the RX 470/480.

Fairly normal process for how AMD works, in all honesty.

Has it lost them some custom from prospective buyers in the last 6 months since the RX series was released? Maybe a few - not even 1% of the buyers they'd have lost if they'd actually held the RX series release back until now, though!

Tech_TTT: That's the job of R&D during the beta testing interval, not after release. I am not buying this explanation at all.