AMD Radeon RX 580 8GB Review

Ghost Recon, The Division, and The Witcher 3

Tom Clancy’s Ghost Recon Wildlands (DX11)

Our first experience with Ghost Recon Wildlands was in our Nvidia GeForce GTX 1080 Ti 11GB Review, right after the game launched.

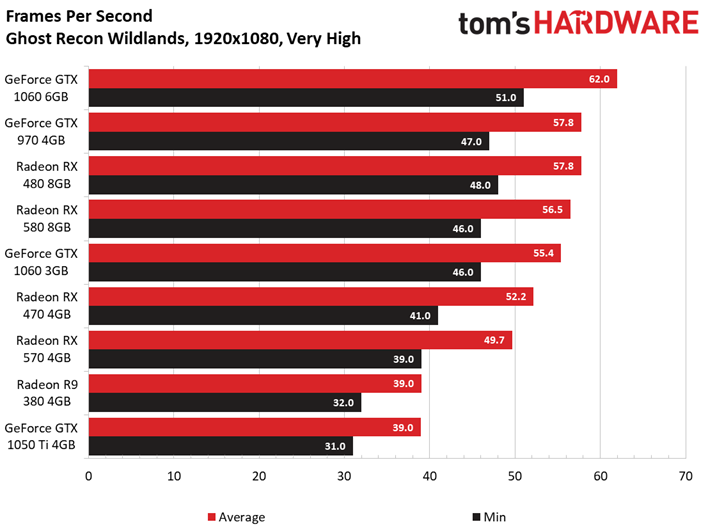

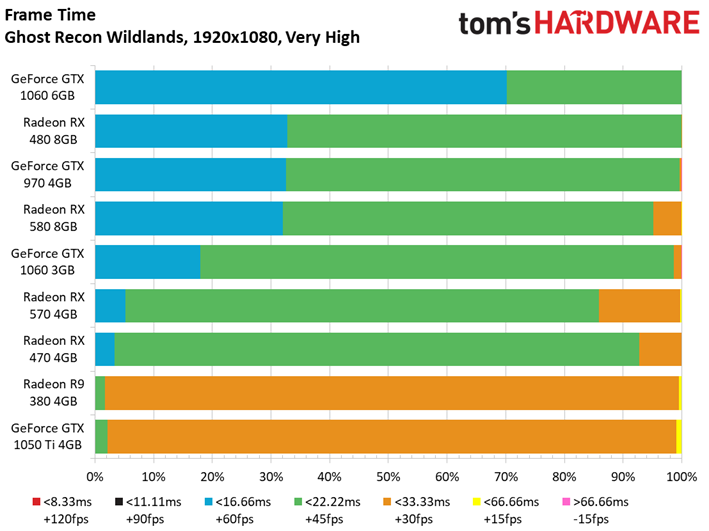

In that piece, we saw the GeForce GTX 980 Ti beat a Radeon R9 Fury X, so it’s not particularly surprising that GeForce GTX 1060 6GB does the same to Radeon RX 580. We weren’t, however, expecting Radeon RX 480 to land in front of AMD’s new RX 580.

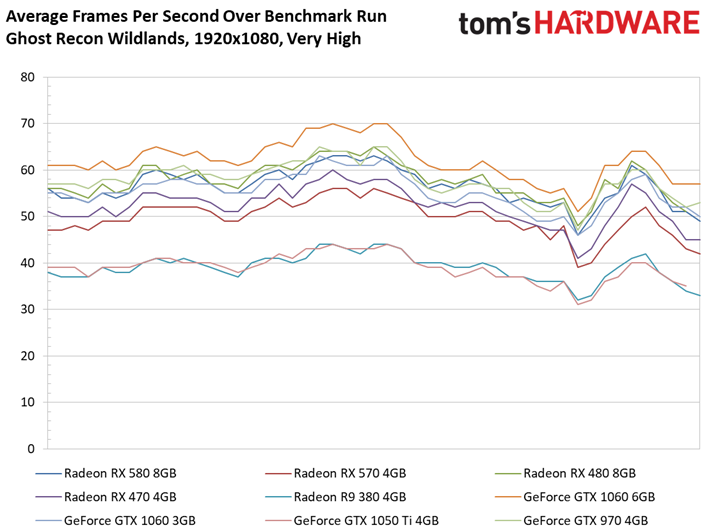

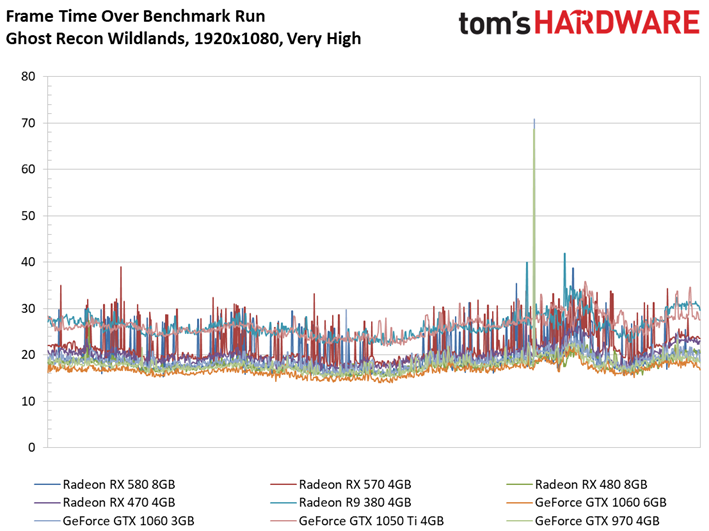

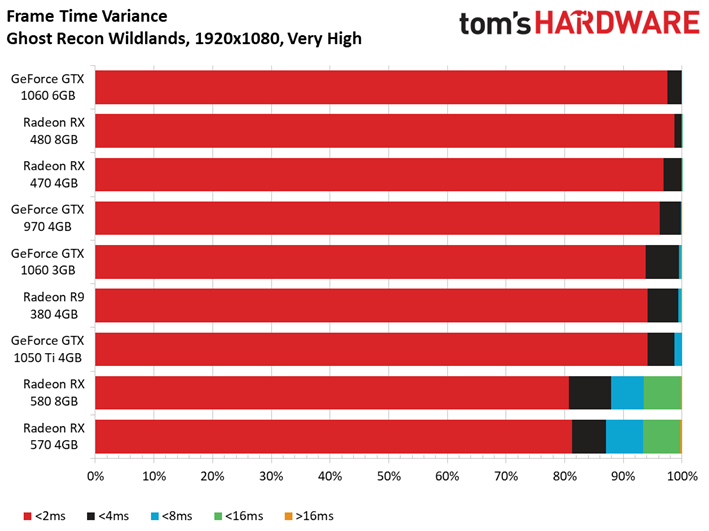

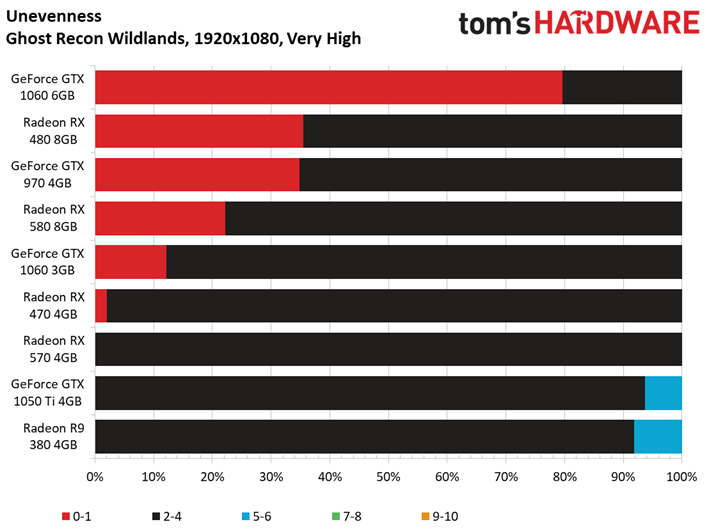

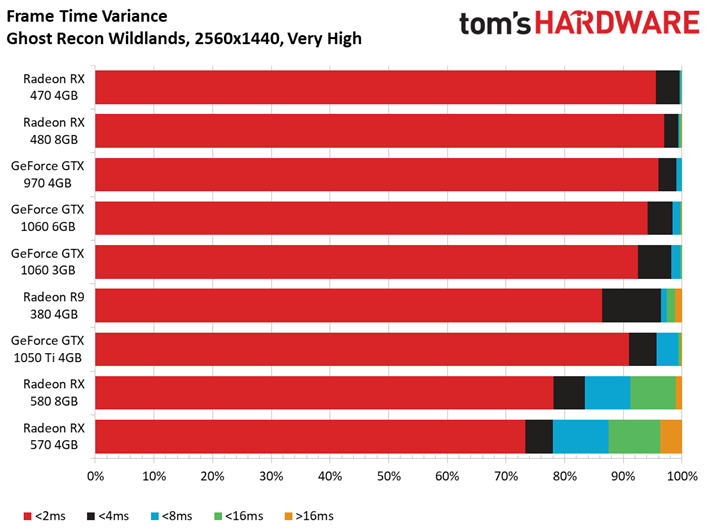

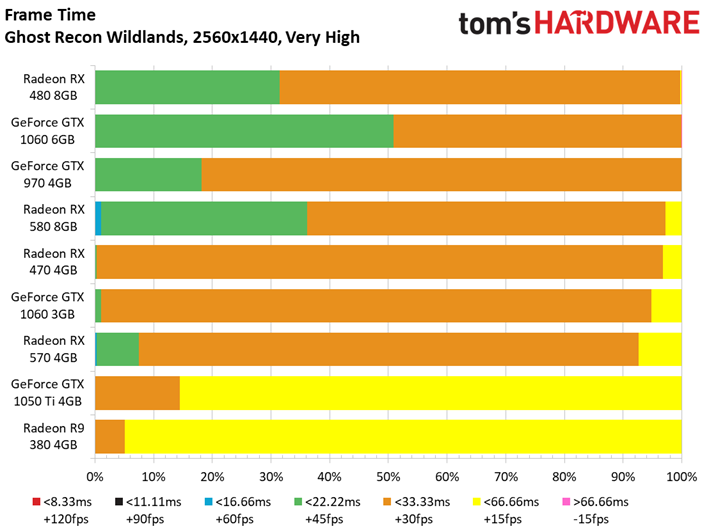

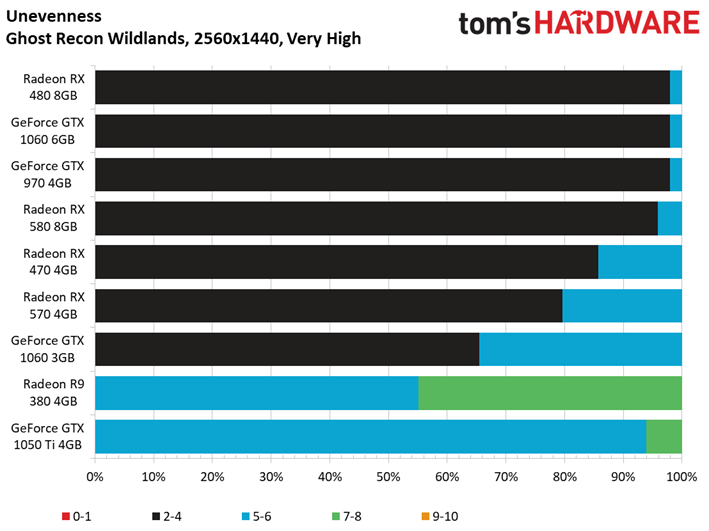

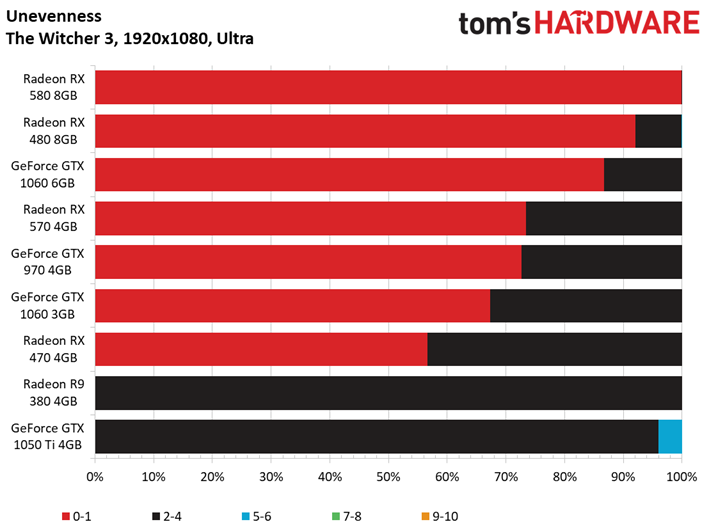

Drilling down into frame time over the benchmark run, it looks like both the Radeon RX 580 and 570 struggle with spikes throughout our benchmark. This manifests as uncommonly high variance and, ultimately, the two worst smoothness index ratings in our unevenness measurement. We used the Crimson ReLive Edition 17.4.2 driver for AMD’s Radeon RX 480, 470, and R9 380 and a special press driver for RX 580 and 570. Is there a regression of some sort that slipped through in an effort to get the RX 500-series ready?
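To make the idea of frame time variance concrete, here is a minimal Python sketch of how a frame time log could be summarized. It is an illustration under stated assumptions, not the method behind our charts: "variance" is taken to mean the absolute change in render time between consecutive frames, and the function name, the 16 ms spike threshold default, and the sample data are all hypothetical.

```python
from statistics import mean


def frame_time_summary(frame_times_ms, spike_threshold_ms=16.0):
    """Summarize a list of per-frame render times given in milliseconds."""
    if len(frame_times_ms) < 2:
        raise ValueError("need at least two frames")
    # Average frame rate: frames rendered divided by total elapsed seconds.
    avg_fps = 1000.0 * len(frame_times_ms) / sum(frame_times_ms)
    # Frame-to-frame variance, taken here as the absolute change in render
    # time between consecutive frames (an assumption, not the review's formula).
    deltas = [abs(b - a) for a, b in zip(frame_times_ms, frame_times_ms[1:])]
    # Count the frame-to-frame jumps larger than the spike threshold.
    spikes = sum(1 for d in deltas if d > spike_threshold_ms)
    return {
        "avg_fps": avg_fps,
        "mean_delta_ms": mean(deltas),
        "max_delta_ms": max(deltas),
        "spikes": spikes,
    }


# Hypothetical data: both runs take 120 ms for six frames, but one is spiky.
smooth = [20.0] * 6
spiky = [10.0, 10.0, 40.0, 10.0, 10.0, 40.0]

print(frame_time_summary(smooth))
print(frame_time_summary(spiky))
```

Both hypothetical runs report the same average frame rate, yet only the second one registers spikes. That is the kind of difference an average FPS bar cannot show and a frame time plot can.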

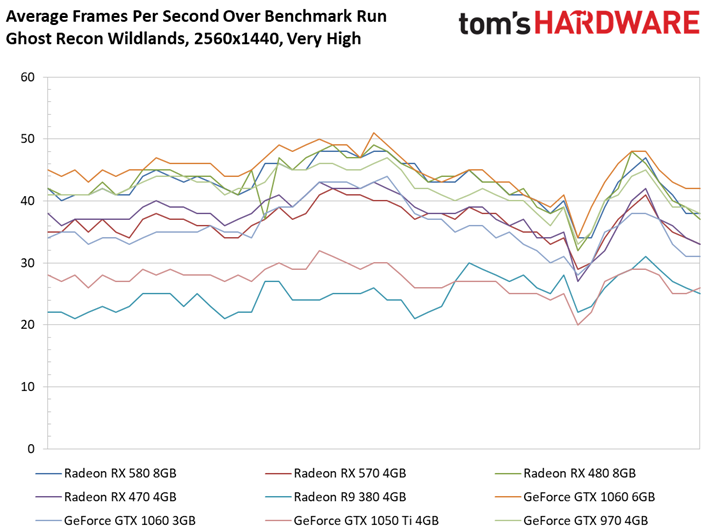

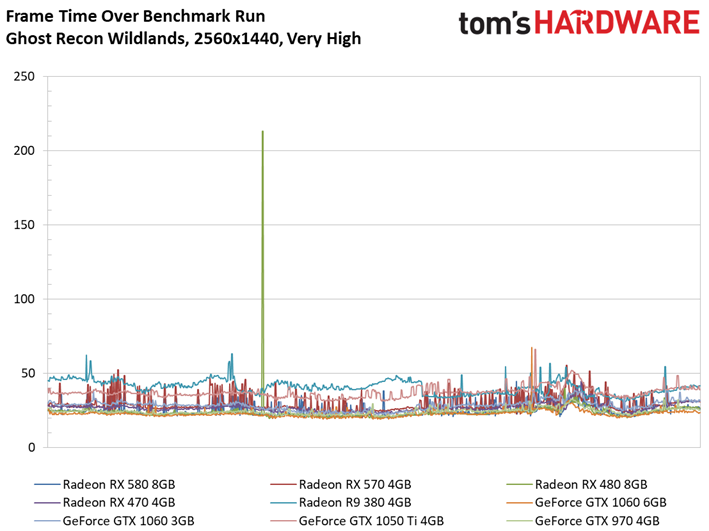

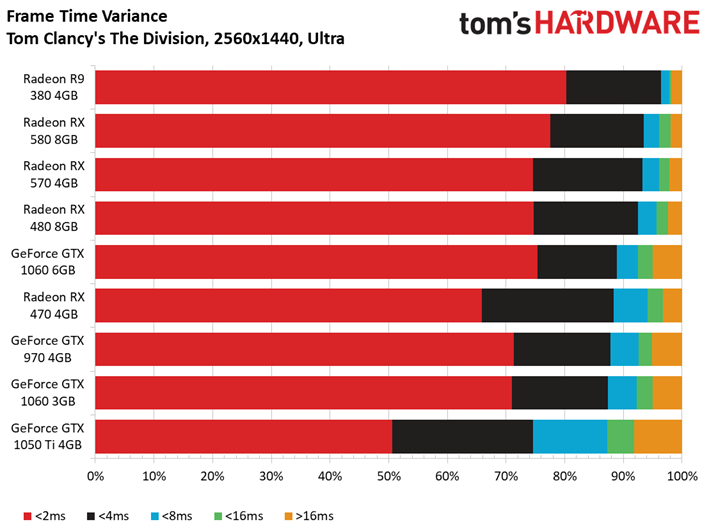

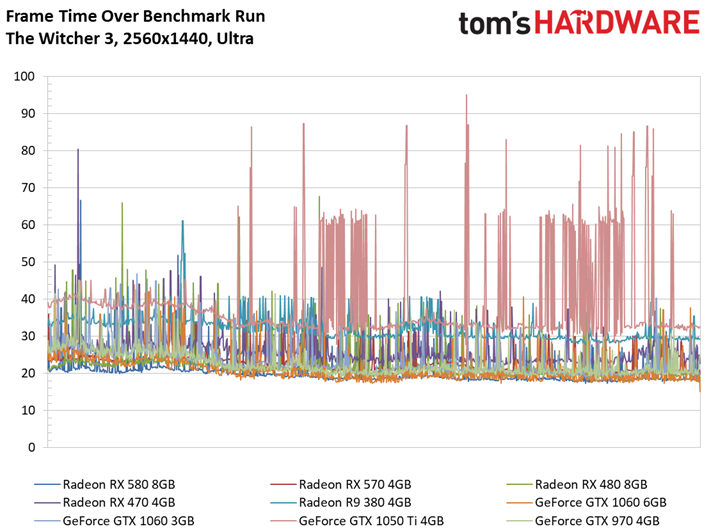

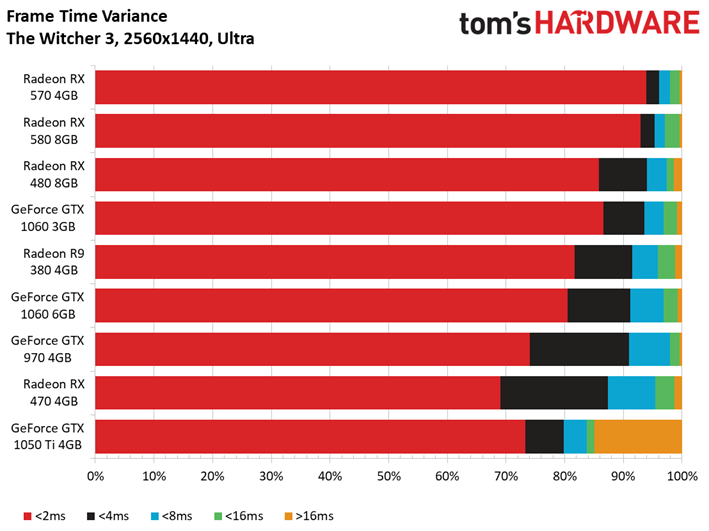

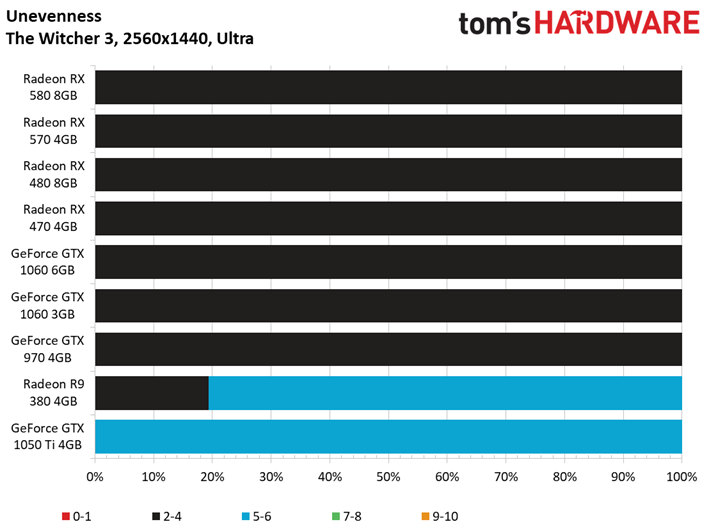

The same thing happens at 2560x1440. Radeon RX 580’s clock rate advantage is swallowed up by frame time issues only visible when we look at our data as granularly as possible. Only the last-place Radeon R9 380 rivals AMD’s new RX 580 and 570 when it comes to >16ms frames in our variance chart.

Tom Clancy’s The Division (DX12)

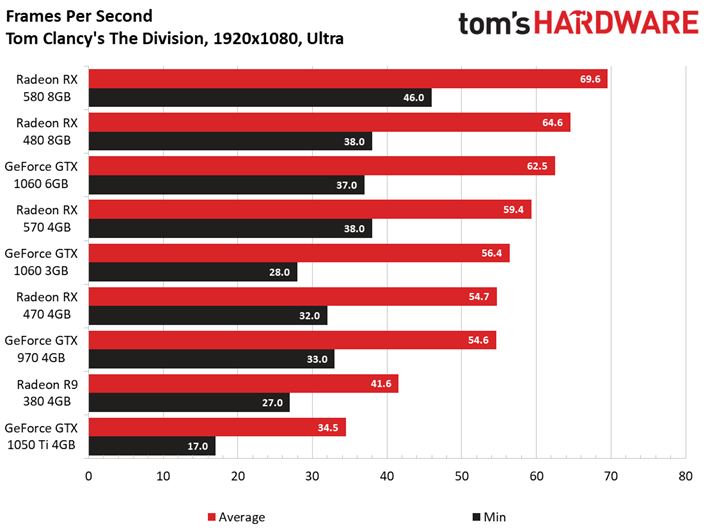

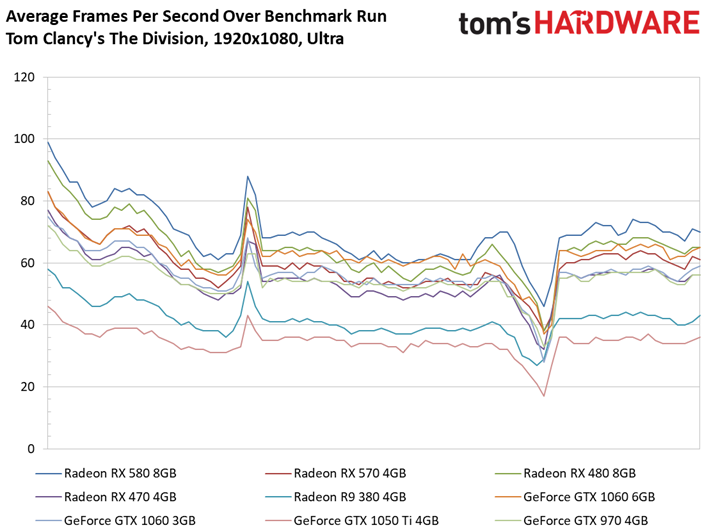

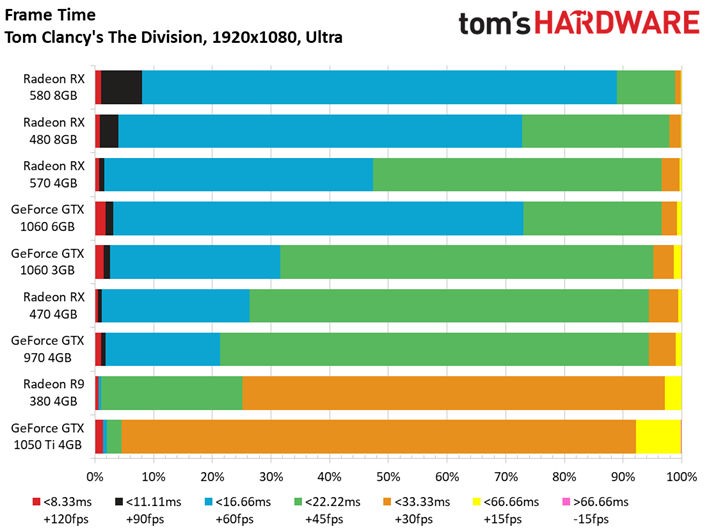

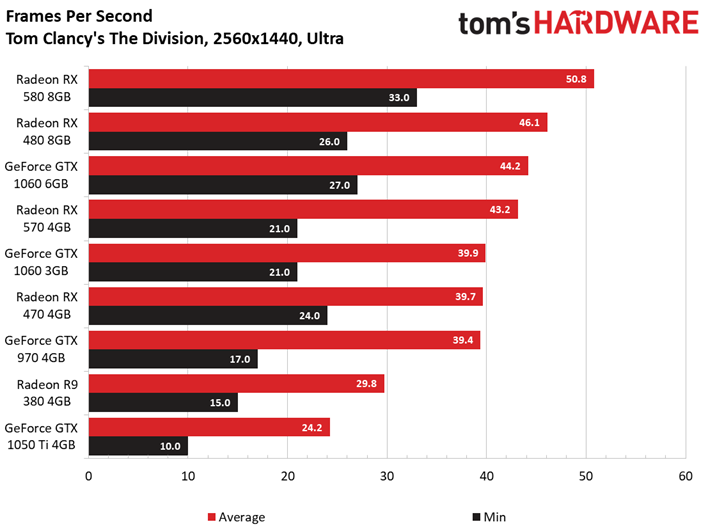

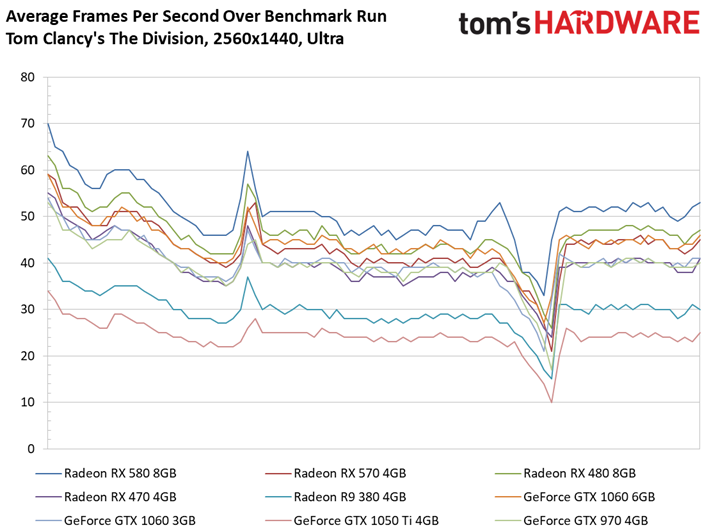

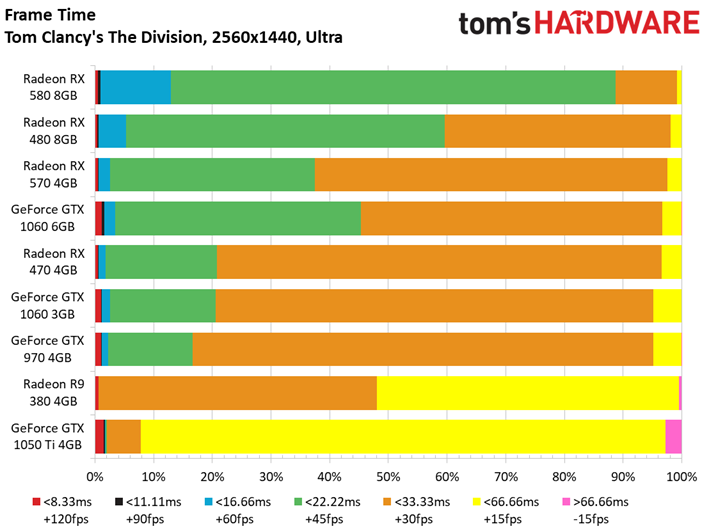

Whereas Ghost Recon Wildlands is based on the AnvilNext engine, The Division employs Ubisoft’s DirectX 12-enabled Snowdrop engine. AMD’s Radeon RX 580 extends a lead already enjoyed by its RX 480, putting the new card 11% ahead of GeForce GTX 1060 6GB’s average frame rate.
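For readers who want to reproduce a percentage lead from two average frame rates, the arithmetic can be sketched as below. The function name and the two frame rate values are hypothetical, chosen only so that the result works out to an 11% lead. They are not the measured averages from our charts.

```python
def relative_lead_percent(fps_a, fps_b):
    """Return how far card A's average frame rate is ahead of card B's, in percent."""
    if fps_b <= 0:
        raise ValueError("baseline frame rate must be positive")
    return (fps_a / fps_b - 1.0) * 100.0


# Hypothetical averages, picked only to illustrate an 11% lead.
card_a_fps = 55.5
card_b_fps = 50.0

print(round(relative_lead_percent(card_a_fps, card_b_fps), 1))
print(round(relative_lead_percent(card_b_fps, card_a_fps), 1))
```

Note that the comparison is not symmetric: with these example numbers, the slower card trails by a little under 10%, not 11%, because the baseline changes.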

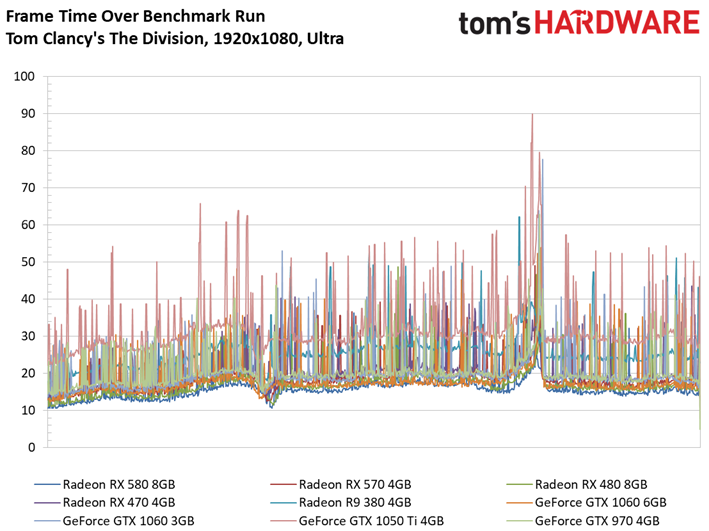

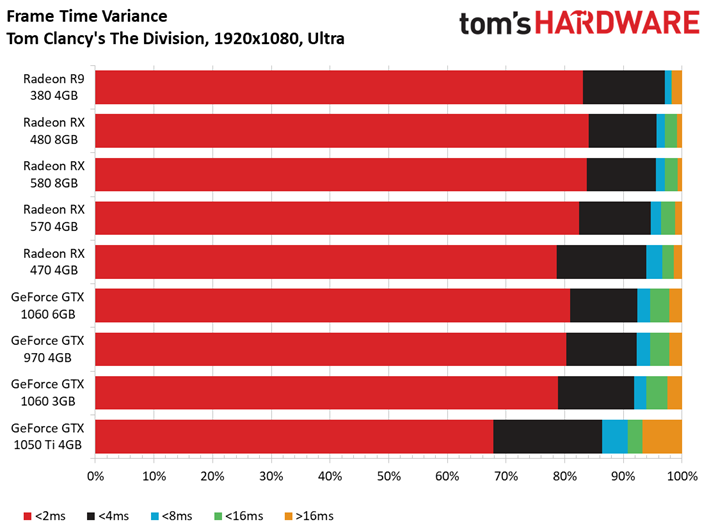

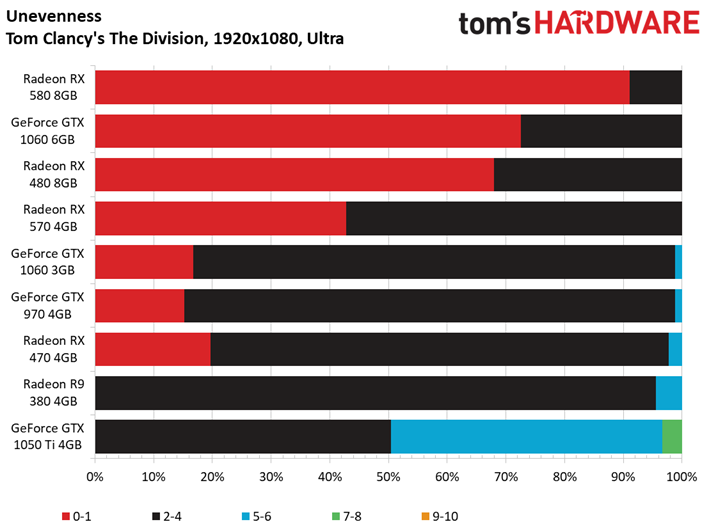

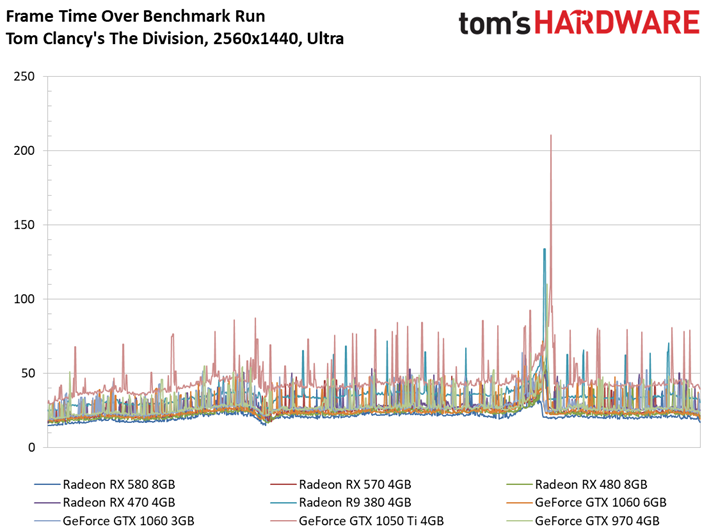

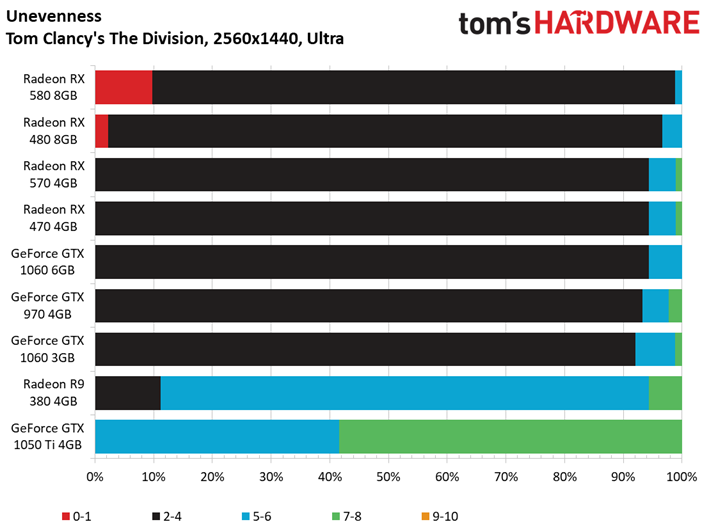

This time around, it’s the GeForce GTX 1050 Ti that demonstrates worrying frame time variance, resulting in a poor unevenness index score.

The RX 580’s lead over GeForce GTX 1060 6GB grows to 15% at 2560x1440, though even the Radeon RX 480 is faster than Nvidia’s board.

Frame time variance in general isn’t great in The Division, but the Radeon RX 580 definitely serves up frames most consistently, according to our measurements.

The Witcher 3 (DX11)

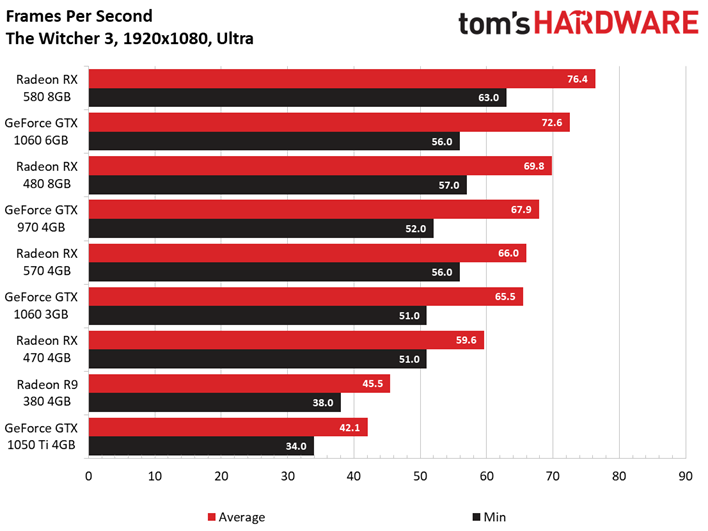

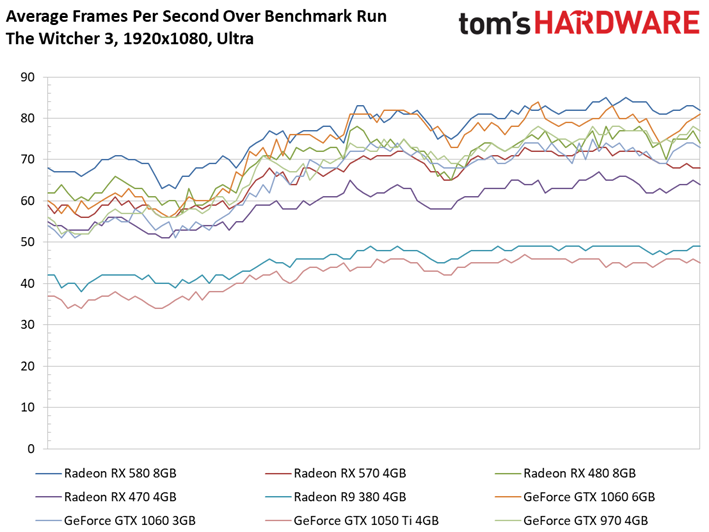

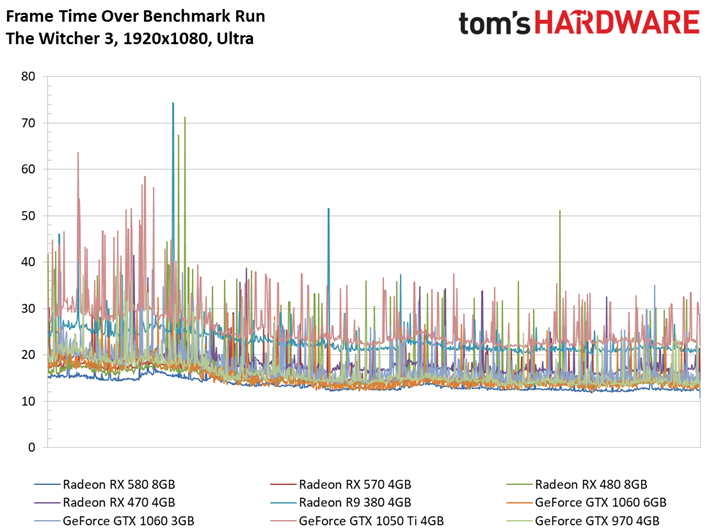

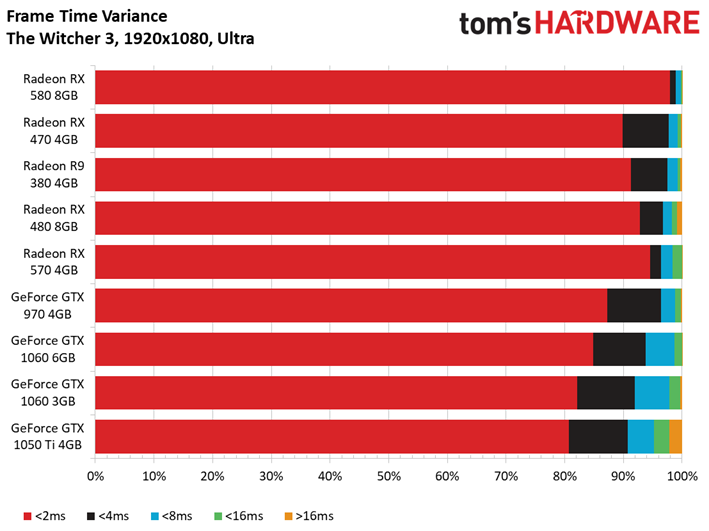

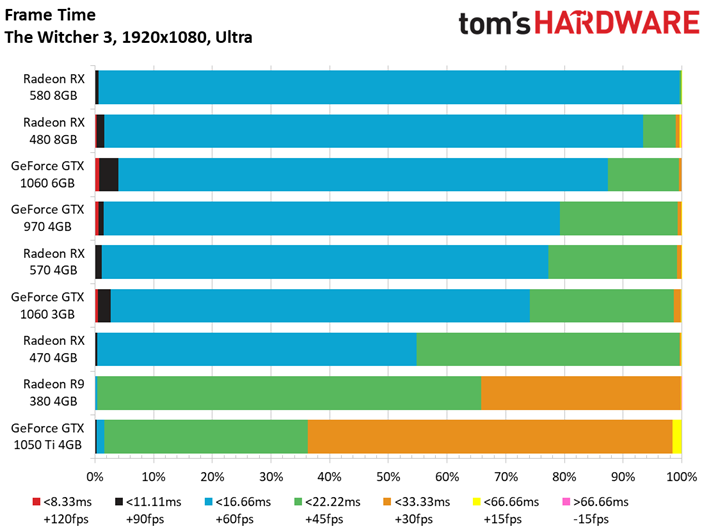

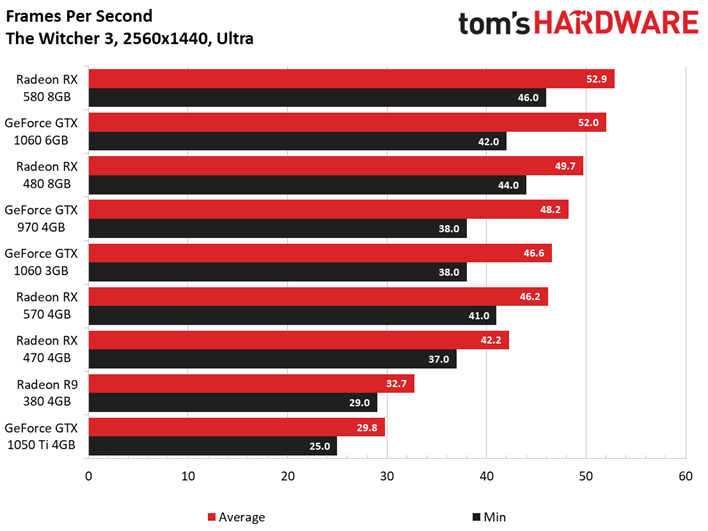

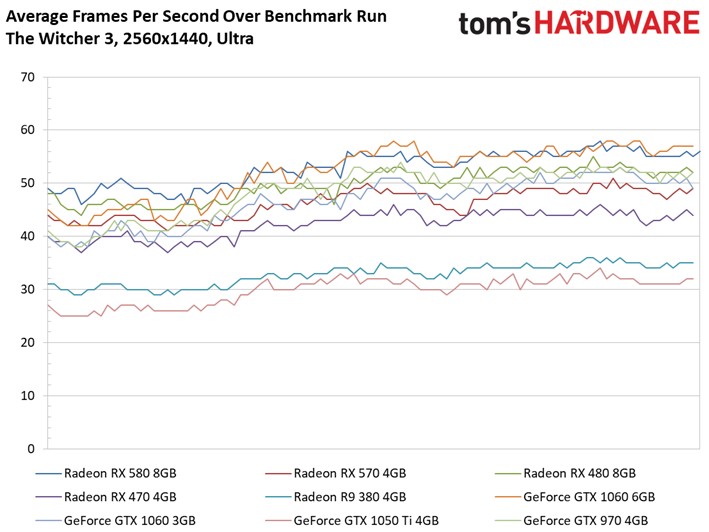

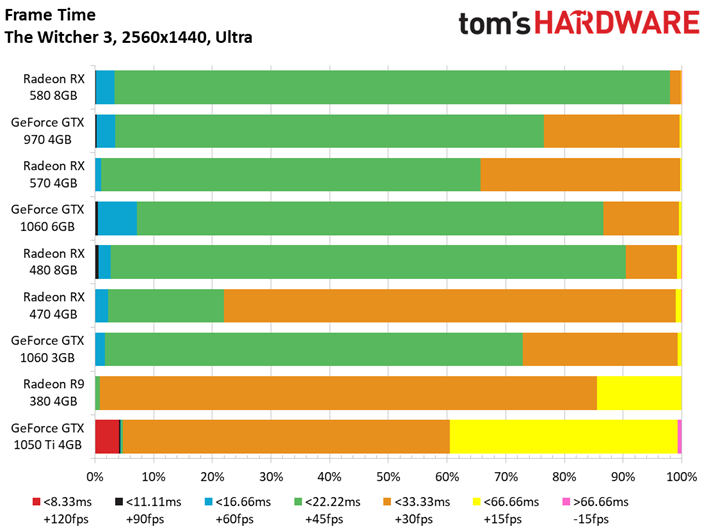

Although The Witcher 3 is a DirectX 11 game, its REDengine 3 runs well on Graphics Core Next products. Radeon RX 580 even secures a lead in our 100-second test sequence. The GeForce GTX 1060 6GB appears slightly faster than RX 480, though AMD’s card achieves better frame pacing.

Similarly, Radeon RX 570 ends up just ahead of the 3GB GeForce GTX 1060 in our average frame rate metric.

The Radeon RX 580 holds up well at 2560x1440 too, keeping its nose above 45 FPS through our run. Nvidia’s GeForce GTX 1060 6GB lands just behind the RX 580’s average frame rate. However, isolating frame time variance reveals how much smoother the RX 580 appears to be. Even AMD’s Radeon RX 570 looks more consistent than the 1060 6GB.
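A statement like "stays above 45 FPS through the run" can be checked against a frame time log by counting the frames completed in each one-second window. The sketch below shows one way to do that. The windowing approach, the function names, and the sample data are assumptions for illustration, not a description of our capture tooling.

```python
def fps_per_second(frame_times_ms):
    """Count frames completed in each full one-second window of a run."""
    counts = []
    elapsed_ms = 0.0
    frames_in_window = 0
    window_end_ms = 1000.0
    for frame_time in frame_times_ms:
        elapsed_ms += frame_time
        # Close every window that ended before this frame finished.
        while elapsed_ms > window_end_ms:
            counts.append(frames_in_window)
            frames_in_window = 0
            window_end_ms += 1000.0
        frames_in_window += 1
    # Keep the last window only if the run ended exactly on its boundary;
    # a trailing partial window is dropped.
    if elapsed_ms == window_end_ms:
        counts.append(frames_in_window)
    return counts


def stays_above(frame_times_ms, fps_floor):
    """Return True if every full one-second window exceeds fps_floor."""
    counts = fps_per_second(frame_times_ms)
    if not counts:
        raise ValueError("run is shorter than one second")
    return min(counts) > fps_floor


# Hypothetical runs: a steady 50 FPS, and one that dips to 25 FPS.
steady = [20.0] * 100
dipping = [20.0] * 50 + [40.0] * 25

print(stays_above(steady, 45))
print(stays_above(dipping, 45))
```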

Igor Wallossek wrote a wide variety of hardware articles for Tom's Hardware, with a strong focus on technical analysis and in-depth reviews. His contributions have spanned a broad spectrum of PC components, including GPUs, CPUs, workstations, and PC builds. His insightful articles provide readers with detailed knowledge to make informed decisions in the ever-evolving tech landscape.

max0x7ba: Battlefield 1 2560x1440, Ultra benchmark Radeon RX 580 minimum fps does not look right.

lasik124: Unless I am really missing something, can't you just slightly overclock the 480 to match the slight performance boost the 580 has?

FormatC:

> 19579183 said: Battlefield 1 2560x1440, Ultra benchmark Radeon RX 580 minimum fps does not look right.

Take a look at the frame times at the start. I think it's a driver issue, because it was reproducible ;)

> 19579237 said: Unless I am really missing something, can't you just slightly overclock the 480 to match the slight performance boost the 580 has?

No, in each case it was less than 1375 MHz. That is slower than the Silent Mode of this 580 and simply too hot for my taste. The problem is not the pre-defined clock rate itself but the reduced real clocks from PowerTune due to temperature and voltage/power limitations ;)

lasik124 (replying to FormatC): So I guess what I'm trying to ask is: is it worth buying a 580 (I'm currently on a 7870), or should I save a couple of bucks, pick up a 480 with a non-reference cooler, and slightly overclock it to get in-game benchmarks similar, if not nearly identical, to the current 580?

Math Geek: No, you'll get the 480 numbers with a 480. The tested card was already OC'ed and you won't get any better manually. The changes made to the 580 can't be done to the 480.

If you want the extra few fps, then you'll want to get a 580.

Tech_TTT: I don't get it. Surely AMD could have released this card as the original RX 480 long ago, so why did they allow Nvidia's GTX 1060 to steal the RX 480's share?

Very stupid strategy ... I know a lot of people who bought a GTX 1060 and wished AMD were better, just to take advantage of the cheaper FreeSync monitors.

AMD, you lost millions of buyers!!! For nothing!!!

turkey3_scratch (replying to Tech_TTT): Don't think it's as simple as you make it out to be. They're a huge company with a ton of professionals; they know what they're doing.

madmatt30 (replying to Tech_TTT): Better binning and refinements to the power circuitry, something that's come with time after the initial production runs of the RX 470/480.

It's a fairly normal process for how AMD works, in all honesty.

Has it lost them some custom from prospective buyers in the last 6 months since the RX series was released? Maybe a few, but not even 1% of the buyers they'd have lost if they'd actually held the RX series release back until now!

Tech_TTT (replying to madmatt30): That's the job of R&D during the beta testing interval, not after release. I am not buying this explanation at all.