AMD Radeon RX 580 8GB Review


Power Supply and Cooling

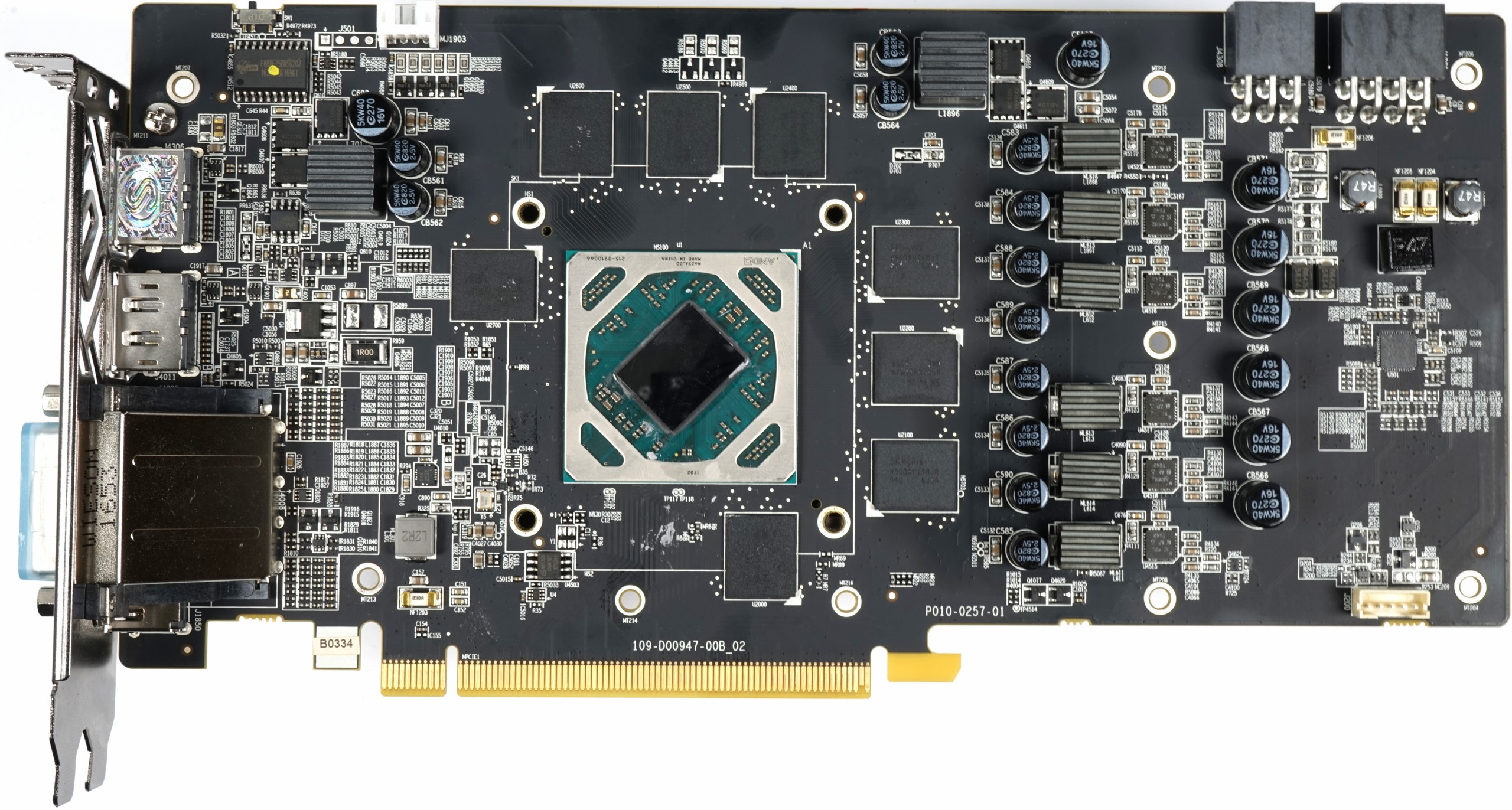

Power Supply and Board Layout

The board was designed by Sapphire, and its layout is quite a bit different from what we saw when AMD sent us the reference Radeon RX 480. A glance at the PCA reveals the GPU power supply’s six phases, which are prominently mentioned in the marketing material. Sapphire uses round polymer capacitors in all of the voltage regulator's critical places.

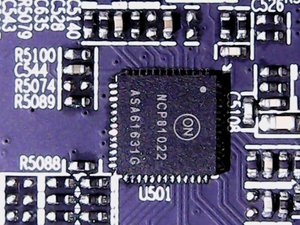

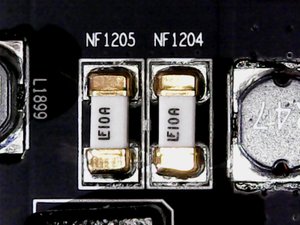

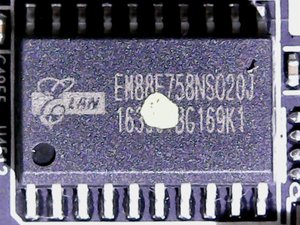

ON Semiconductor’s NCP81022 serves as the Nitro+ Radeon RX 580's PWM controller. But here's where the specs get a little confusing: this is a dual-output buck solution that can only control 4+1 phases, not six. If you need more evidence that Sapphire uses just three phases for the GPU, there are three 10A fuses on the right side of the board for the GPU and a fourth one on the left side for the memory’s single phase. At 12V, the three 10A fuses limit Ellesmere's power to 360W, which is far more than enough. They may save the board from total destruction, but you'd still have to RMA your card if any of them blew. A TI INA3221 voltage monitor combined with an emergency shutdown through the power supply would have worked just as well.
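The 360W ceiling is simple arithmetic, assuming the fuses sit on the 12V input rail. A minimal sketch of that calculation follows; the helper name is ours and purely illustrative.

```python
def fuse_power_limit_w(fuse_count: int, fuse_rating_a: float,
                       rail_voltage_v: float = 12.0) -> float:
    """Upper bound on input power before the fuses are overloaded.

    rail_voltage_v defaults to 12 V, which is an assumption about
    where the fuses sit (the 12 V supply input).
    """
    return fuse_count * fuse_rating_a * rail_voltage_v


gpu_limit_w = fuse_power_limit_w(fuse_count=3, fuse_rating_a=10.0)
memory_limit_w = fuse_power_limit_w(fuse_count=1, fuse_rating_a=10.0)

print(f"GPU fuses:    {gpu_limit_w:.0f} W")     # 360 W
print(f"Memory fuse:  {memory_limit_w:.0f} W")  # 120 W
```

The same arithmetic puts the single memory fuse at 120W, again only under the 12V assumption.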

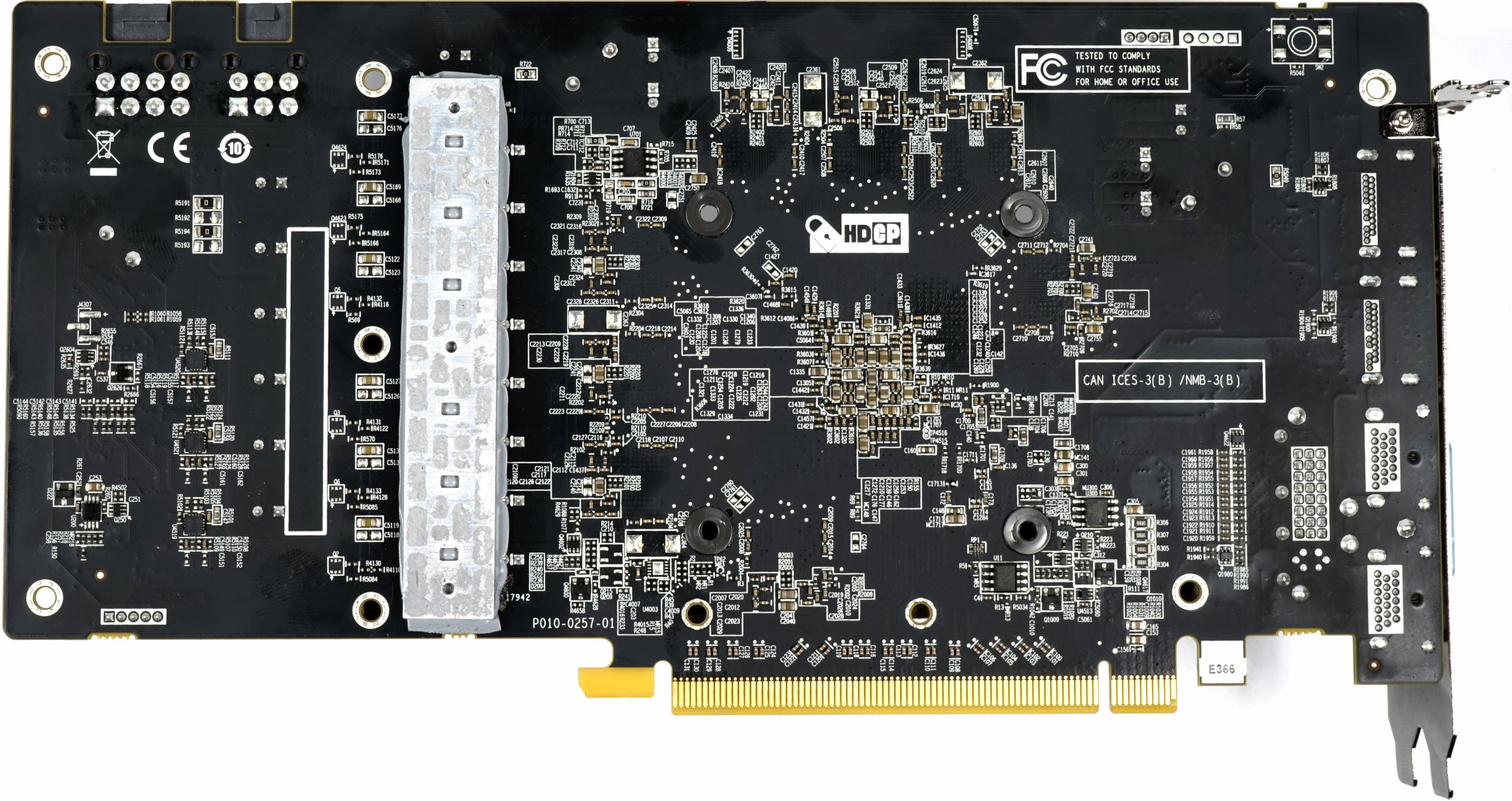

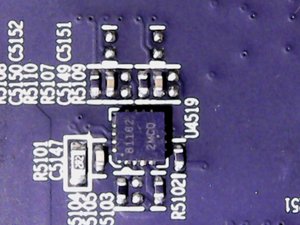

So, how do we get from three phases to the advertised six? We don’t, at least not really. There are three phases that each have two voltage converters running in parallel. This is called phase doubling, and it’s achieved using a trio of NCP81162 current-balancing phase doublers on the back of the board. In other words, there are six physical voltage regulator circuits, but they are organized as three pairs, and the two circuits in each pair run in parallel.
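To illustrate what doubling buys, here is a rough sketch of how the load current divides across the regulator circuits. It assumes ideal current balancing, and the 120A load is a made-up example value, not a measurement from this card.

```python
def regulator_circuits(controller_phases: int, circuits_per_phase: int) -> int:
    """Number of physical voltage regulator circuits on the board."""
    return controller_phases * circuits_per_phase


def per_circuit_current_a(total_current_a: float, controller_phases: int,
                          circuits_per_phase: int) -> float:
    """Current through each circuit, assuming perfectly even sharing."""
    return total_current_a / regulator_circuits(controller_phases,
                                                circuits_per_phase)


EXAMPLE_LOAD_A = 120.0  # hypothetical GPU load current, for illustration only

# Three controller phases without doubling vs. with one doubler per phase
plain = per_circuit_current_a(EXAMPLE_LOAD_A, controller_phases=3,
                              circuits_per_phase=1)
doubled = per_circuit_current_a(EXAMPLE_LOAD_A, controller_phases=3,
                                circuits_per_phase=2)

print(f"Without doubling: {plain:.0f} A per circuit")   # 40 A
print(f"With doubling:    {doubled:.0f} A per circuit")  # 20 A
```

The controller still sees three phases either way; doubling only halves the current each physical circuit has to carry.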

More phases make for better balancing, but because they all come from those two auxiliary power connectors anyway, three phases should be enough in this case. Each of the six supply rails (not phases) utilizes a Vishay SiC632 integrated power stage, which combines MOSFETs for the high and low side, a gate driver, a Schottky diode, and zero-current detection for improved light-load efficiency.

This configuration saves board space and money. In theory, each power stage can handle up to 50A, though that number is a bit lower in practice. With six of them in play, we're looking at power losses of between 6 and 8W per stage, adding up to as much as 50W of waste heat across Sapphire's board.
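As a quick sanity check on those numbers, the sketch below multiplies the quoted loss range by the six power stages. Reading the 6 to 8W figure as a per-stage loss is our interpretation; it is what makes the total land near 50W.

```python
POWER_STAGES = 6                 # six SiC632 power stages on the GPU rail
LOSS_PER_STAGE_W = (6.0, 8.0)    # quoted loss range, read here as per stage


def total_loss_range_w(stages: int, loss_range_w: tuple) -> tuple:
    """Scale a per-stage (low, high) loss range up to the whole regulator."""
    low, high = loss_range_w
    return stages * low, stages * high


low_w, high_w = total_loss_range_w(POWER_STAGES, LOSS_PER_STAGE_W)
print(f"Total regulator loss: {low_w:.0f} W to {high_w:.0f} W")  # 36 W to 48 W
```

The upper bound of 48W is in line with the rounded figure of up to 50W.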

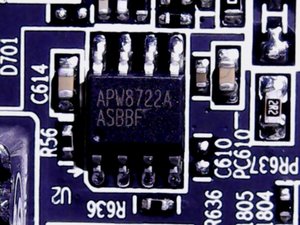

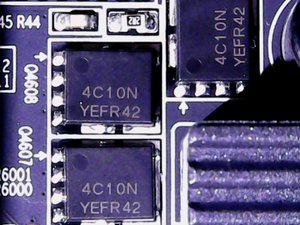

The memory’s power supply is comparatively simple. Its voltage converters are controlled by Anpec's APW8722 synchronous buck converter, and they consist of one ON Semiconductor NTMFS4C10N single N-channel MOSFET on the high side and two on the low side. They draw their power from the motherboard's PCIe slot.

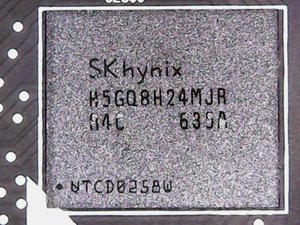

The memory is sourced from SK hynix, and just like Nvidia, AMD sells the GDDR5 with its GPUs. As a result, AMD’s partners don’t have the option to use faster memory.


These are 8Gb H5GQ8H24MJR modules (32x 256Mb) that can be operated at up to 1.55V, depending on clock rate. Just like Samsung’s K4G80325FB-HC25, they max out at 2000 MHz, or 8Gb/s. That's the fastest you can get from SK hynix, which doesn't leave much hope for aggressive overclocking. This is a shame, since the Radeon RX 480 benefited quite a bit from extra memory frequency.
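The module figures can be cross-checked with a little arithmetic. The sketch below makes two assumptions that go beyond the text: that GDDR5 moves four bits per pin per command clock, and that every chip runs in its full 32-bit mode, which yields the card's bus width. The function names are illustrative.

```python
# Figures quoted in the text
IO_WIDTH_BITS = 32         # "32x" organization of each module
DEPTH_MBIT = 256           # "256Mb" behind each I/O
MAX_CLOCK_MHZ = 2000       # maximum rated clock
CARD_CAPACITY_GB = 8       # from the card's name

# Assumption: GDDR5 transfers four bits per pin per command clock
TRANSFERS_PER_CLOCK = 4


def chip_density_gbit(io_width_bits: int, depth_mbit: int) -> float:
    """Capacity of one module in gigabits."""
    return io_width_bits * depth_mbit / 1024


def data_rate_gbps(clock_mhz: int, transfers_per_clock: int) -> float:
    """Per-pin data rate in Gb/s."""
    return clock_mhz * transfers_per_clock / 1000


def chip_count(card_capacity_gb: int, density_gbit: float) -> int:
    """Number of modules needed to reach the card's capacity."""
    return round(card_capacity_gb * 8 / density_gbit)


def peak_bandwidth_gbs(rate_gbps: float, bus_width_bits: int) -> float:
    """Theoretical peak memory bandwidth in GB/s."""
    return rate_gbps * bus_width_bits / 8


density = chip_density_gbit(IO_WIDTH_BITS, DEPTH_MBIT)
rate = data_rate_gbps(MAX_CLOCK_MHZ, TRANSFERS_PER_CLOCK)
chips = chip_count(CARD_CAPACITY_GB, density)
bus_width = chips * IO_WIDTH_BITS  # assumes all chips run in x32 mode

print(f"Density per module: {density:.0f} Gb")
print(f"Per-pin data rate:  {rate:.0f} Gb/s")
print(f"Modules on card:    {chips}")
print(f"Bus width:          {bus_width} bits")
print(f"Peak bandwidth:     {peak_bandwidth_gbs(rate, bus_width):.0f} GB/s")
```

Under these assumptions, the modules' rated maximum works out to a theoretical peak of 256 GB/s, which is why a part that tops out at 8Gb/s leaves so little room for memory overclocking.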

Sapphire delivers both versions of its Nitro+ Radeon RX 580 with two BIOS files, selectable through a switch on top of the card. By default, they're set to use the more conservative frequencies. This is a change from the Radeon RX 480 generation, where Sapphire shipped its cards set to more aggressive clock rates. Apparently, lessons were learned from the thermal and acoustic fallout of that decision.

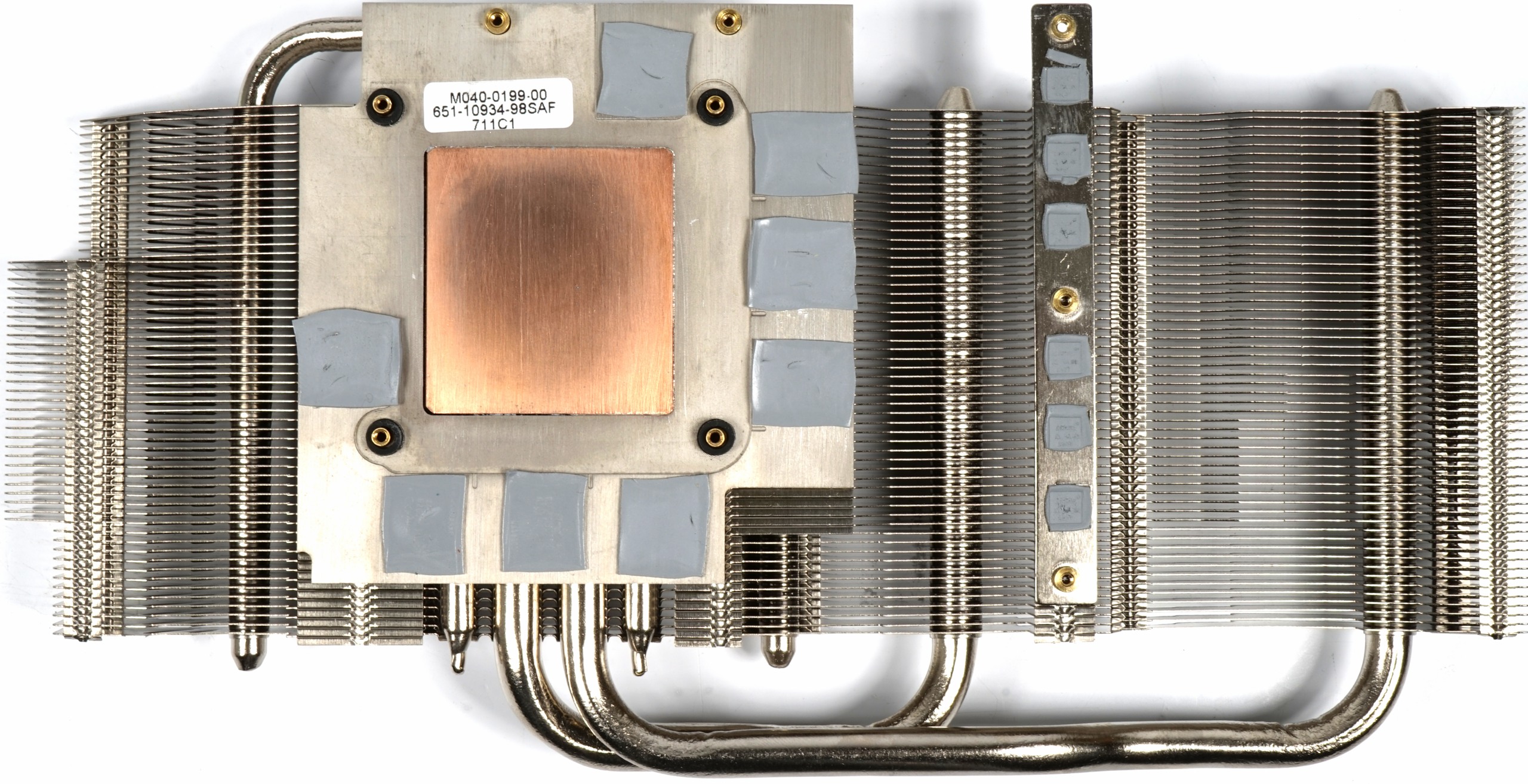

A Closer Look at the Cooler

The Nitro+ Radeon RX 580 Limited Edition's cooler needs enough headroom to handle at least 250W of waste heat without a problem. Below the shroud, two PWM-controlled axial fans from Dongguan Champion Electronics do their best to keep Sapphire's card cool. These are dual ball-bearing fans that top out in the 3250 to 3300 RPM range. The rotors have nine blades and a diameter of 95mm. They're designed to provide a broad and diffuse airflow, rather than a direct and targeted one.

Thermal density increases with fewer components shouldering the load. This creates hot-spots. Given what we saw from our walk-through of the Nitro+ Radeon RX 580's PCA, we know that just six components deal with as much as 50W of waste heat.

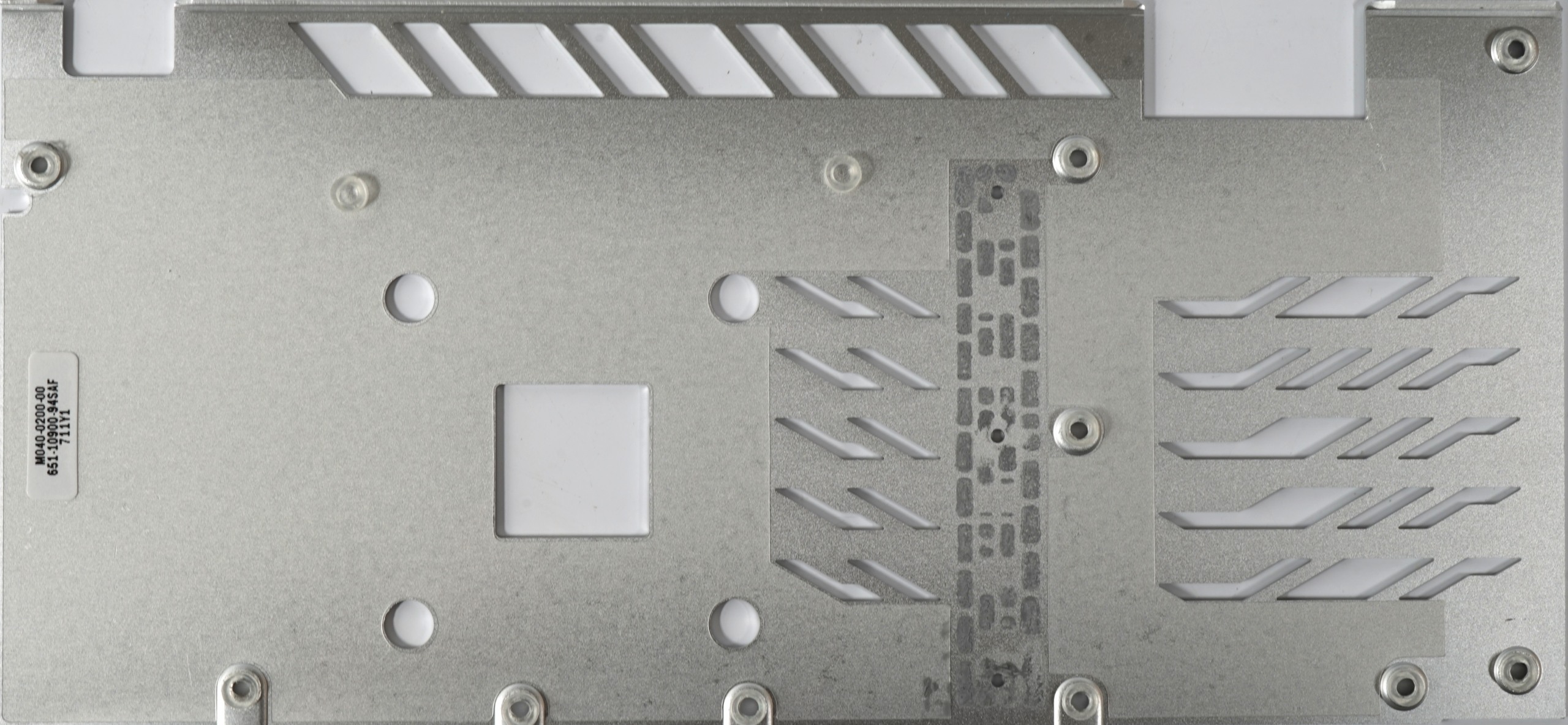

Consequently, Sapphire uses a backplate to help cool the card. It eschews the thick and inefficient thermal pads we dislike so much. Instead, there's a small aluminum heat sink positioned just below the hot spots on the board's back side. This makes it possible to go with a thin, much more effective thermal pad.

The heat sink makes direct contact with the backplate, leaving just a bit of thermal paste between them. This setup significantly improves cooling performance. Sapphire could have saved some money by stamping a depression into the backplate instead, though. Right next to all of that, on the left, we see the three chips responsible for phase doubling.

The cooler itself weighs 451g. It sports two 8mm and two 6mm heat pipes made of nickel-plated composite material. Those pipes are pressed into the back of a copper sink, which itself is surrounded by a light metal plate that makes direct contact with the heat sink and bottom of the cooler. It's responsible for drawing thermal energy away from SK hynix's memory modules.

The voltage converters get their own heat sink, which is integrated into the cooler. This should really help dissipate their significant waste heat. Moreover, this area receives lots of airflow. Consider us cautiously optimistic.

Sapphire's thermal solution isn't large or heavy, but its design looks good. The fins are relatively close to each other, providing lots of surface area. However, they aren't deep enough to necessitate lots of static air pressure. This makes the implementation less prone to annoying fan noise. Overall, Sapphire strikes a good compromise between all of the attributes we look at in a cooler.


Igor Wallossek wrote a wide variety of hardware articles for Tom's Hardware, with a strong focus on technical analysis and in-depth reviews. His contributions have spanned a broad spectrum of PC components, including GPUs, CPUs, workstations, and PC builds. His insightful articles provide readers with detailed knowledge to make informed decisions in the ever-evolving tech landscape.

-

max0x7ba

Battlefield 1 2560x1440, Ultra benchmark Radeon RX 580 minimum fps does not look right.

-

lasik124

Unless I am really missing something, can't you just slightly overclock the 480 to match the slight performance boost the 580 has?

-

FormatC

max0x7ba said: Battlefield 1 2560x1440, Ultra benchmark Radeon RX 580 minimum fps does not look right.

Take a look at the frame times at the start. I think it's a driver issue, because it was reproducible ;)

lasik124 said: Unless I am really missing something, can't you just slightly overclock the 480 to match the slight performance boost the 580 has?

No, in each case it was less than 1375 MHz. That's slower than the Silent Mode of this 580, and simply too hot for my taste. The problem is not the pre-defined clock rate itself, but the reduced real clocks from PowerTune due to temperatures and voltage/power limitations ;)

-

lasik124

So I guess what I'm trying to ask is: is it worth buying a 580 (I'm currently on a 7870), or should I save a couple of bucks, pick up a 480 with a non-reference cooler, and slightly overclock it to get in-game benchmarks similar, if not identical, to the current 580?

-

Math Geek

No, you'll get the 480 numbers with a 480. The tested card was already OC'ed, and you won't get any better manually. The changes made to the 580 can't be done to the 480.

If you want the extra few fps, then you'll want to get a 580.

-

Tech_TTT

I don't get it. For sure AMD could have released this card as the original RX 480 long ago. Why did they allow Nvidia's GTX 1060 to steal the RX 480's share?

Very stupid strategy ... I know a lot of people who bought a GTX 1060 and wished AMD were better, just to take advantage of the cheaper FreeSync monitors.

AMD, you lost millions of buyers!!! For nothing!!!

-

turkey3_scratch

Don't think it's as simple as you make it out to be. They're a huge company with a ton of professionals; they know what they're doing.

-

madmatt30

Better binning, refinements on the power circuitry - something that's come with time after the initial production runs of the RX 470/480.

Fairly normal process for how AMD works, in all honesty.

Has it lost them some custom from prospective buyers in the six months since the RX series was released? Maybe a few, but not even 1% of the buyers they'd have lost if they'd actually held the RX series release back until now!

-

Tech_TTT

That's the job of R&D during the beta-testing interval, not after release. I am not buying this explanation at all.