Revolutionary Samsung tech that enables stacking HBM memory on CPU or GPU arrives this year — SAINT-D HBM scheduled for 2024 rollout, says report

Tech to debut ahead of HBM4 (2025/26)

Samsung is set to introduce 3D packaging services for high-bandwidth memory (HBM) this year, according to a report from the Korea Economic Daily that cites the company's announcement at the Samsung Foundry Forum 2024 in San Jose, as well as 'industry sources.' The 3D packaging for HBM essentially paves the way for HBM4 integration in late 2025 – 2026, but we are not sure what kind of memory Samsung is set to package this year.

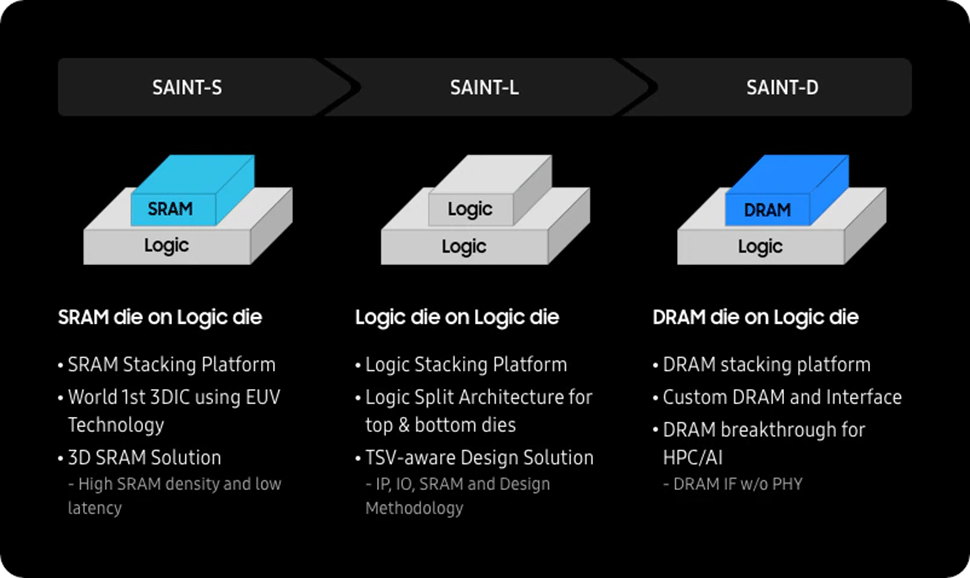

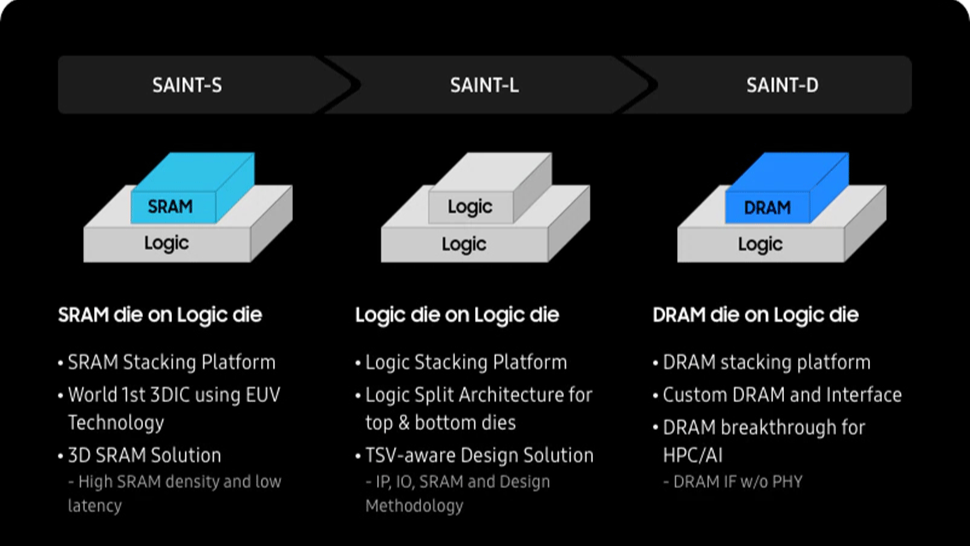

For 3D packaging, Samsung has a platform called SAINT (Samsung Advanced Interconnect Technology) that includes three distinct 3D stacking technologies: SAINT-S for SRAM, SAINT-L for logic, and SAINT-D for DRAM stacking on top of logic chips like CPUs or GPUs. The company has been working on SAINT-D for several years (and formally announced it in 2022) and it looks like the technology will be ready for prime time this year, which will be a notable milestone for the world's largest memory maker and a leading foundry.

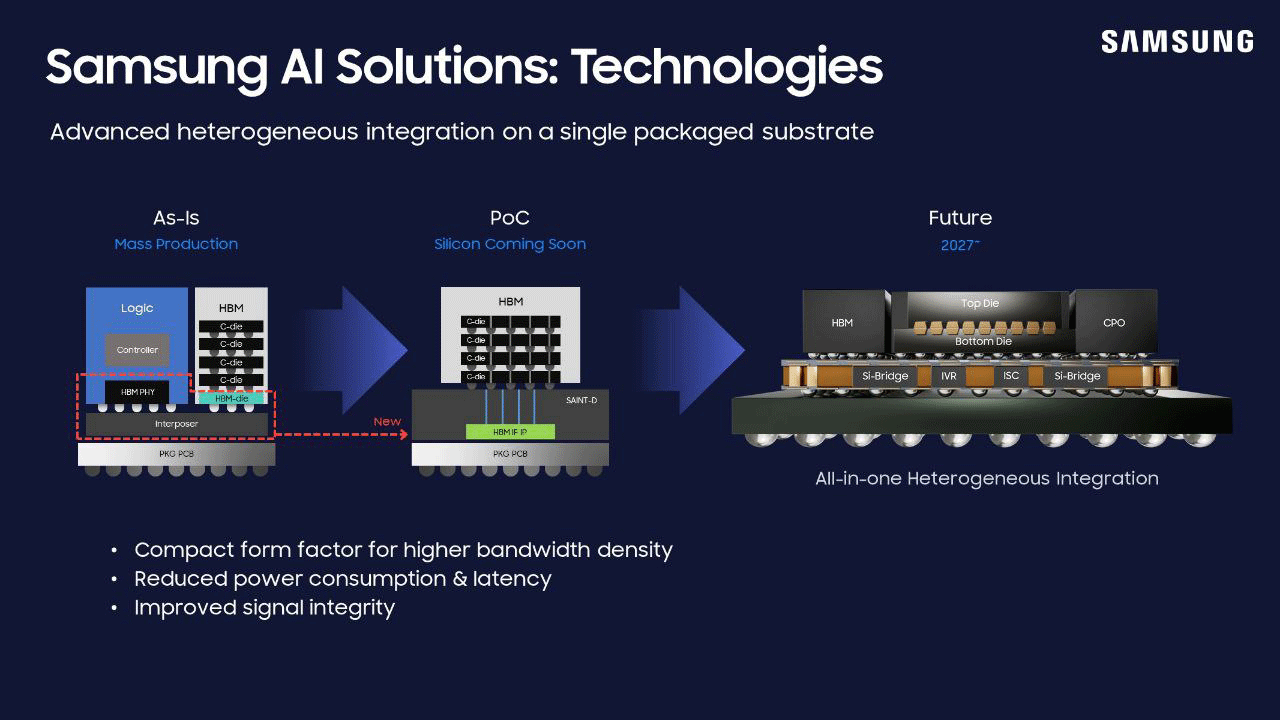

Samsung's new 3D packaging method involves stacking HBM chips vertically on top of processors, which differs from the existing 2.5D technology that connects HBM chips and GPUs horizontally via a silicon interposer. This vertical stacking approach eliminates the need for the silicon interposer but requires a new base die for HBM memory that is made using a sophisticated process technology.

The 3D packaging technology offers significant benefits for HBM, including faster data transfers, cleaner signals, reduced power consumption, and lower latencies, but at relatively high packaging costs. Samsung plans to offer this advanced 3D HBM packaging as a turnkey service, where its memory business division produces HBM chips, and the foundry unit assembles actual processors for fabless companies.

What remains unclear is what exactly Samsung plans to offer with SAINT-D this year. Putting HBM on a logic die requires an appropriate chip design and we are not aware of any processors from well-known companies that are designed to hold HBM on top and are set to launch in 2024 – 1H 2025.

Looking ahead, Samsung aims to introduce all-in-one heterogeneous integration technology by 2027. This future technology will enable the integration of two layers of logic chips, HBM memory (on an interposer), and even co-packaged optics (CPO).


Anton Shilov is a contributing writer at Tom’s Hardware. Over the past couple of decades, he has covered everything from CPUs and GPUs to supercomputers and from modern process technologies and latest fab tools to high-tech industry trends.


LabRat 891: Yet... we still won't see a return of HBM in the consumer space.

AMD gave us a taste, and then the industry yanked it away.

oofdragon: Revolutionary..... where? Will it make next year's processors take a revolutionary leap that will leave their predecessors eating dust? No? So it's not revolutionary.

hannibal:

> LabRat 891 said: Yet... we still won't see a return of HBM in the consumer space.
>
> AMD gave us a taste, and then the industry yanked it away.

Does not matter. This is good for datacenter products!

thestryker: I'm not sure if HBM is as sensitive to temperatures as DRAM is, but this still seems like something that couldn't effectively be implemented until BSPDN nodes go live. Seems like more of an investor-bait type of announcement than anything industry-impacting.

hotaru251:

> oofdragon said: Revolutionary..... where? Will it make next year's processors take a revolutionary leap that will leave their predecessors eating dust? No? So it's not revolutionary.

If the application is heavily RAM-influenced? Very much so.

(Not really useful, but it is an example.)

y-cruncher, for example, would (I believe) benefit, as it's actually bandwidth-bound, so higher-bandwidth memory (HBM) would give a significant performance increase.

I believe DDR5 caps out around 8 GT/s (64 GB/s per 64-bit DIMM) for bandwidth, compared to HBM3, which runs at about 6.4 GT/s per pin across a 1,024-bit interface (819 GB/s per stack), and as you can imagine that's a massive performance gain if the application can utilize it.

Will it likely ever replace RAM? No. However, you could (likely) easily add a small amount and then have it spill over into DRAM/VRAM as needed.
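The 64 GB/s and 819 GB/s figures in the comment above follow from a simple rule: peak bandwidth is the per-pin transfer rate multiplied by the bus width in bytes. The sketch below works through that arithmetic. The function name `peak_bandwidth_gb_s` is hypothetical, and the inputs (DDR5-8000 on a 64-bit DIMM, HBM3 at 6.4 GT/s per pin on a 1,024-bit stack interface) are assumed reference figures, not numbers from the article or from Samsung.

```python
def peak_bandwidth_gb_s(transfer_rate_gt_s: float, bus_width_bits: int) -> float:
    """Theoretical peak bandwidth in GB/s: transfers per second times bytes per transfer."""
    return transfer_rate_gt_s * bus_width_bits / 8


# Assumed inputs (not from the article):
#   DDR5-8000 on one 64-bit DIMM
#   HBM3 at 6.4 GT/s per pin on one 1,024-bit stack
ddr5 = peak_bandwidth_gb_s(8.0, 64)
hbm3 = peak_bandwidth_gb_s(6.4, 1024)

print(f"DDR5-8000, 64-bit DIMM:  {ddr5:.1f} GB/s")
print(f"HBM3, 1,024-bit stack:   {hbm3:.1f} GB/s")
print(f"HBM3 / DDR5 ratio:       {hbm3 / ddr5:.1f}x")
```

Under these assumptions, the advantage of HBM comes almost entirely from the much wider interface rather than from a higher per-pin rate. These are theoretical peaks; sustained bandwidth in real workloads is lower.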

thisisaname:

> hotaru251 said: If the application is heavily RAM-influenced? Very much so.
>
> (Not really useful, but it is an example.)
>
> y-cruncher, for example, would (I believe) benefit, as it's actually bandwidth-bound, so higher-bandwidth memory (HBM) would give a significant performance increase.
>
> I believe DDR5 caps out around 8 GT/s (64 GB/s per 64-bit DIMM) for bandwidth, compared to HBM3, which runs at about 6.4 GT/s per pin across a 1,024-bit interface (819 GB/s per stack), and as you can imagine that's a massive performance gain if the application can utilize it.
>
> Will it likely ever replace RAM? No. However, you could (likely) easily add a small amount and then have it spill over into DRAM/VRAM as needed.

Sounds to me more evolutionary than revolutionary?

DS426: 3D DRAM stacking on the CPU (or more specifically, on CPU logic dies) will eventually happen in the consumer world; just look at how much 3D V-Cache improved the Ryzen platform when utilized. I say g'luck on thermals, but I'm sure someone will figure it out.

Diogene7: It would be even more revolutionary if it used persistent, non-volatile memory (NVM) such as MRAM, like the SOT-MRAM developed by European research center IMEC: it would open up plenty of new opportunities!

https://www.imec-int.com/en/press/imecs-extremely-scaled-sot-mram-devices-show-record-low-switching-energy-and-virtually

usertests: The promise of X-Cube finally fulfilled?

https://semiconductor.samsung.com/news-events/news/samsung-announces-availability-of-its-silicon-proven-3d-ic-technology-for-high-performance-applications/