Intel's Sapphire Rapids to Have 64 Gigabytes of HBM2e Memory, More Ponte Vecchio Details

HBM2e comes to Xeon

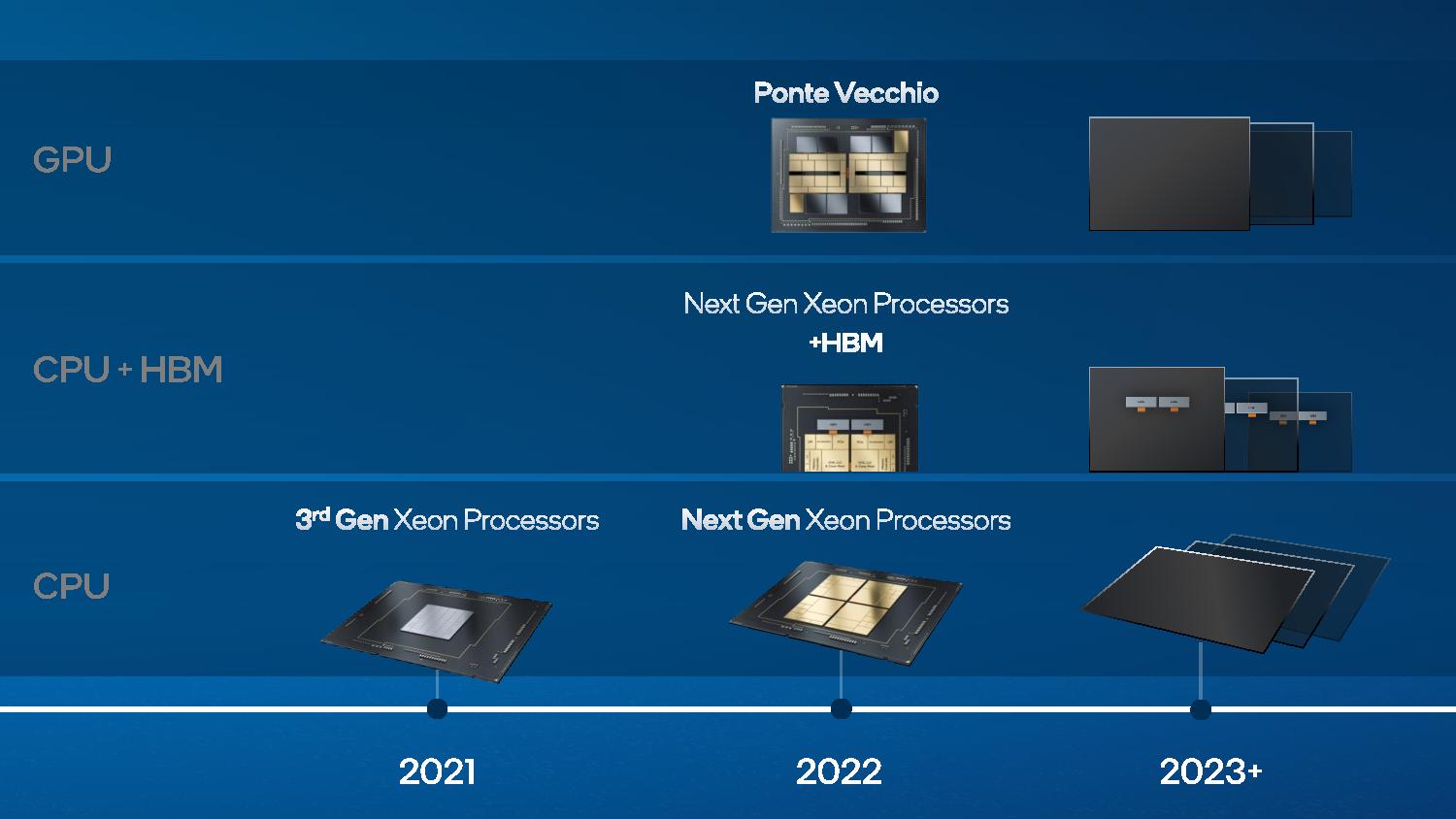

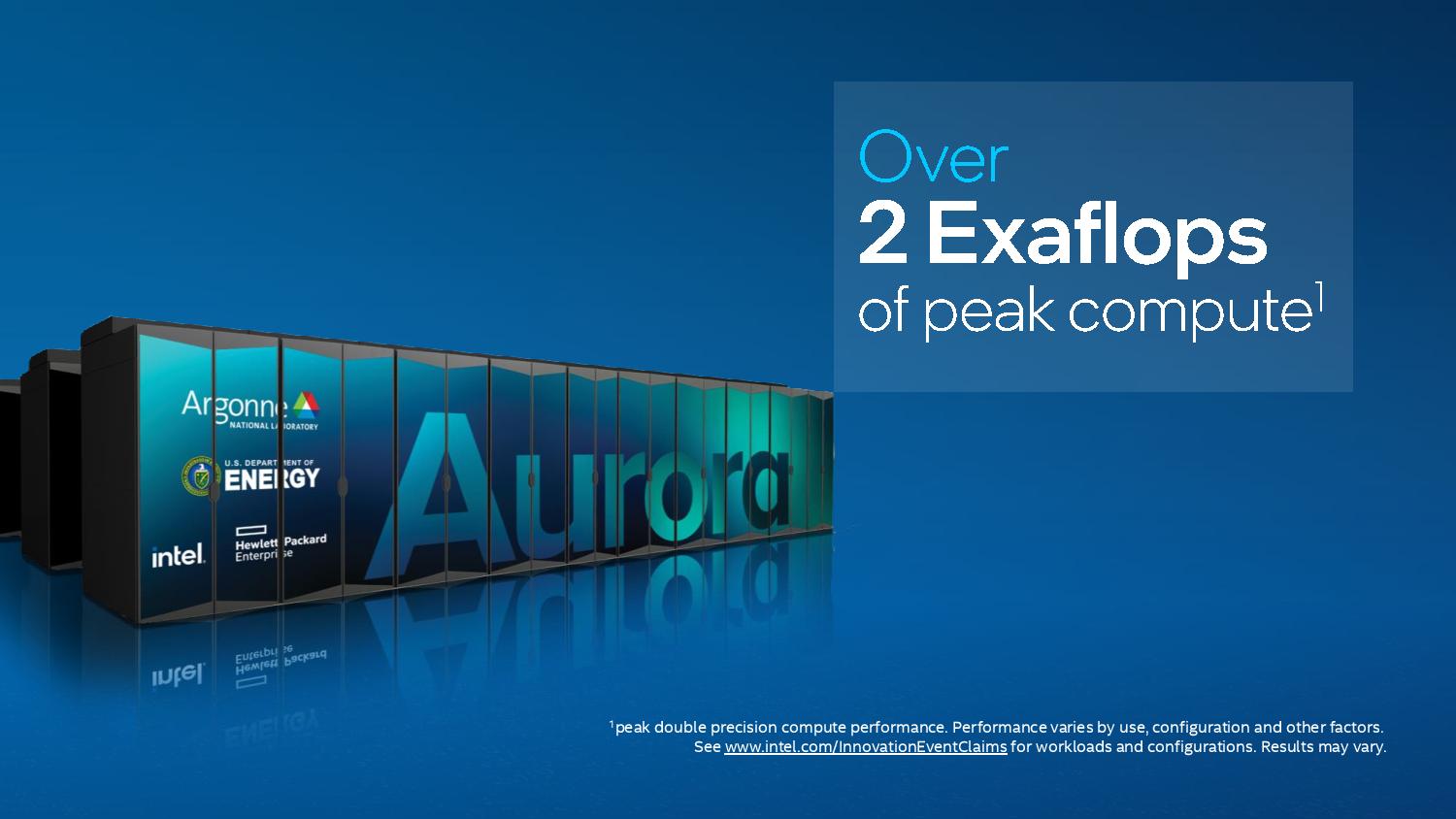

Intel announced at the Supercomputing 2021 tradeshow that its forthcoming Sapphire Rapids processors will come with up to 64GB of HBM2e memory. The company also pointed to brisk development on the platform side, with a broad range of partners working to bring OEM systems with both Sapphire Rapids and Ponte Vecchio to market. Intel further revealed that Ponte Vecchio GPUs come with 408MB of L2 cache and HBM2e memory, and that the upcoming 2-ExaFLOP Aurora supercomputer will pair 54,000 Ponte Vecchio GPUs with 18,000 Sapphire Rapids processors.

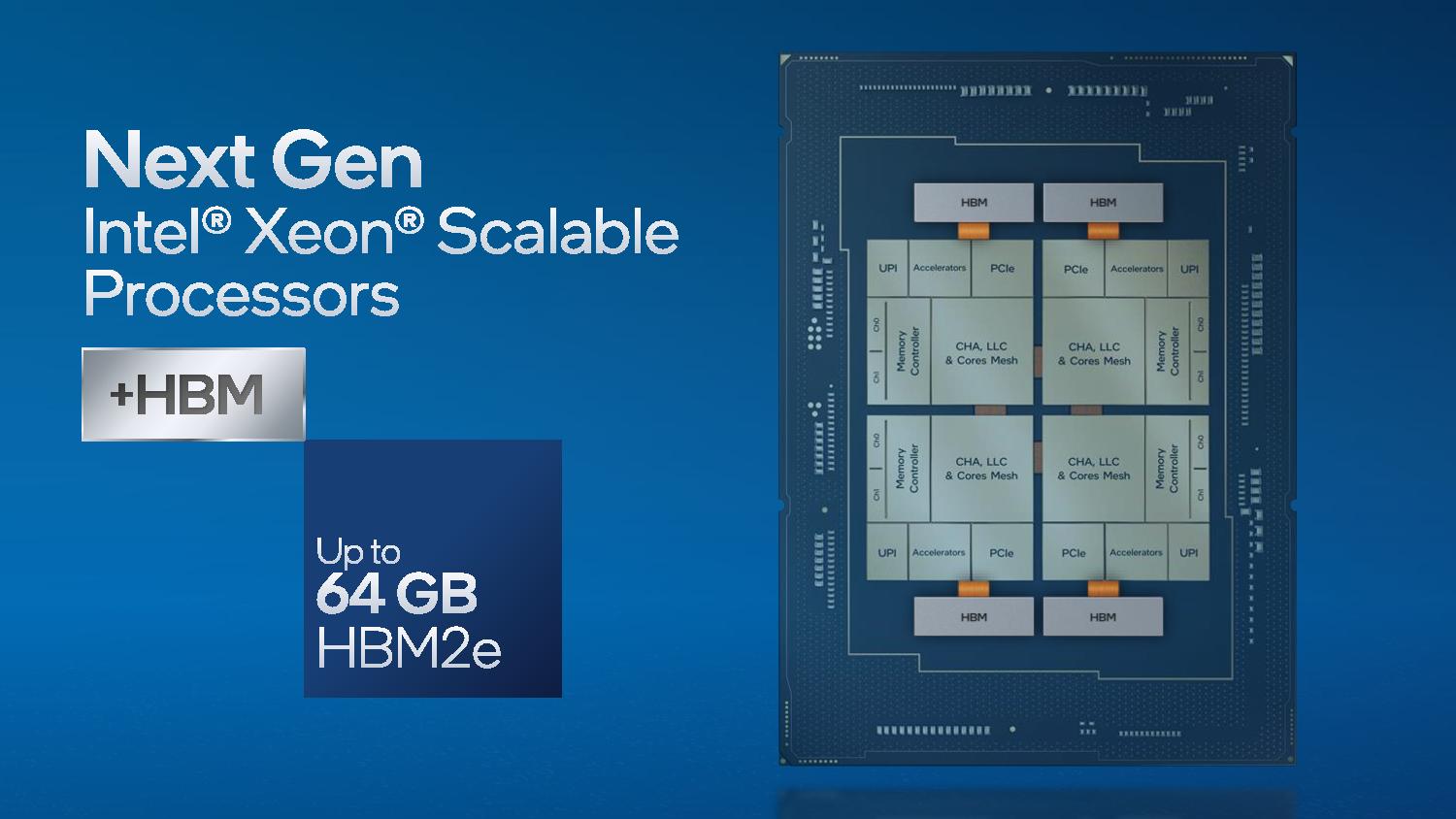

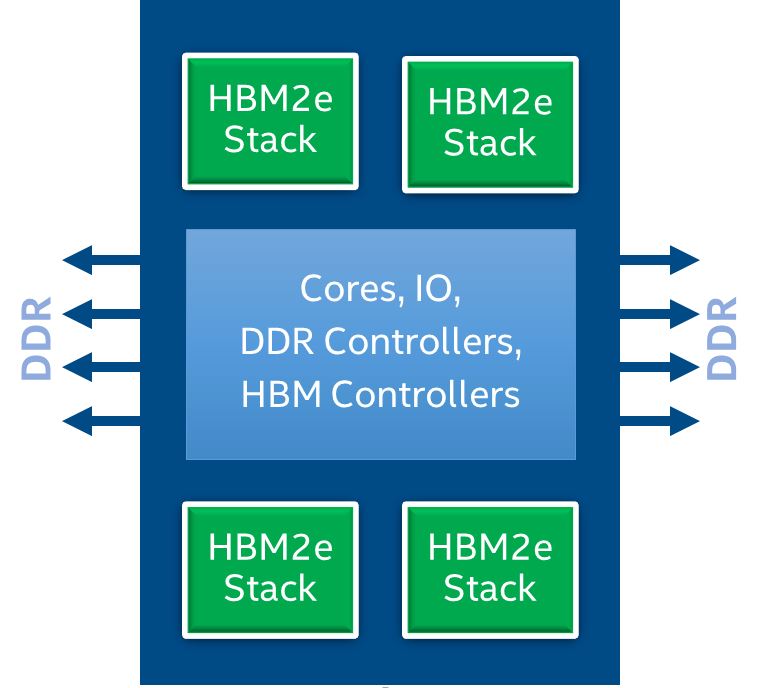

Intel had already shared a broad overview of its Sapphire Rapids platform, including that some models would come with on-package HBM, but hadn't elaborated. Today's news finds the company finally revealing that the chips will support up to 64GB of HBM2e, spread across four packages. HBM2e delivers up to 410 GBps of bandwidth per stack, so the four stacks together could reach a theoretical peak of roughly 1.6 TBps of throughput.

Each HBM2e package, consisting of eight 16Gb dies (8-Hi), is connected to one tile of the quad-tile Sapphire Rapids chip. This memory is on-package and will reside under the heatspreader.
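The capacity and bandwidth figures above follow from simple arithmetic. The sketch below uses only the numbers quoted in the article and assumes decimal units (1 GB = 8 Gb, 1 TBps = 1,000 GBps); it is a back-of-the-envelope check, not a measured result.

```python
# Back-of-the-envelope check of the HBM2e figures quoted in the article.
DIES_PER_STACK = 8      # 8-Hi stack
GBIT_PER_DIE = 16       # 16Gb dies
STACKS = 4              # one stack per Sapphire Rapids tile
GBPS_PER_STACK = 410    # peak HBM2e bandwidth per stack, in GBps


def stack_capacity_gb(dies: int, gbit_per_die: int) -> float:
    """Capacity of one stack in gigabytes (8 bits per byte)."""
    return dies * gbit_per_die / 8


per_stack_gb = stack_capacity_gb(DIES_PER_STACK, GBIT_PER_DIE)
total_gb = per_stack_gb * STACKS
total_gbps = GBPS_PER_STACK * STACKS

print(f"{per_stack_gb:.0f} GB per stack, {total_gb:.0f} GB total")
print(f"peak: {total_gbps} GBps (~{total_gbps / 1000:.1f} TBps)")
```

Eight 16Gb dies give 16GB per stack, four stacks give the 64GB total, and 4 x 410 GBps = 1,640 GBps, which rounds to the quoted 1.6 TBps.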

| Specification | HBM2 / HBM2e (Current) | HBM | HBM3 (Upcoming) |
| --- | --- | --- | --- |
| Max Pin Transfer Rate | 3.2 Gbps | 1 Gbps | 3.1 Gbps |
| Max Capacity (per stack) | 24GB | 4GB | 64GB |
| Max Bandwidth (per stack) | 410 GBps | 128 GBps | ~800 GBps |
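The HBM and HBM2/HBM2e bandwidth figures in the table come from the per-pin transfer rate multiplied by the width of each stack's interface. A minimal sketch of that conversion, assuming the standard 1024-bit interface per stack used by HBM through HBM2e (the bus width is not stated in the article) and decimal units:

```python
# Peak per-stack bandwidth = pin rate (Gbps) x interface width (bits) / 8.
BUS_WIDTH_BITS = 1024  # interface width per stack for HBM, HBM2 and HBM2e


def stack_bandwidth_gbps(pin_rate_gbps: float, bus_width_bits: int = BUS_WIDTH_BITS) -> float:
    """Return the peak bandwidth of one stack in GBps (gigabytes per second)."""
    return pin_rate_gbps * bus_width_bits / 8


# Pin rates taken from the table.
for name, pin_rate in [("HBM", 1.0), ("HBM2/HBM2e", 3.2)]:
    print(f"{name}: {stack_bandwidth_gbps(pin_rate):.1f} GBps per stack")
```

This yields 128 GBps for first-generation HBM and 409.6 GBps for HBM2e, which the table rounds to 410 GBps.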

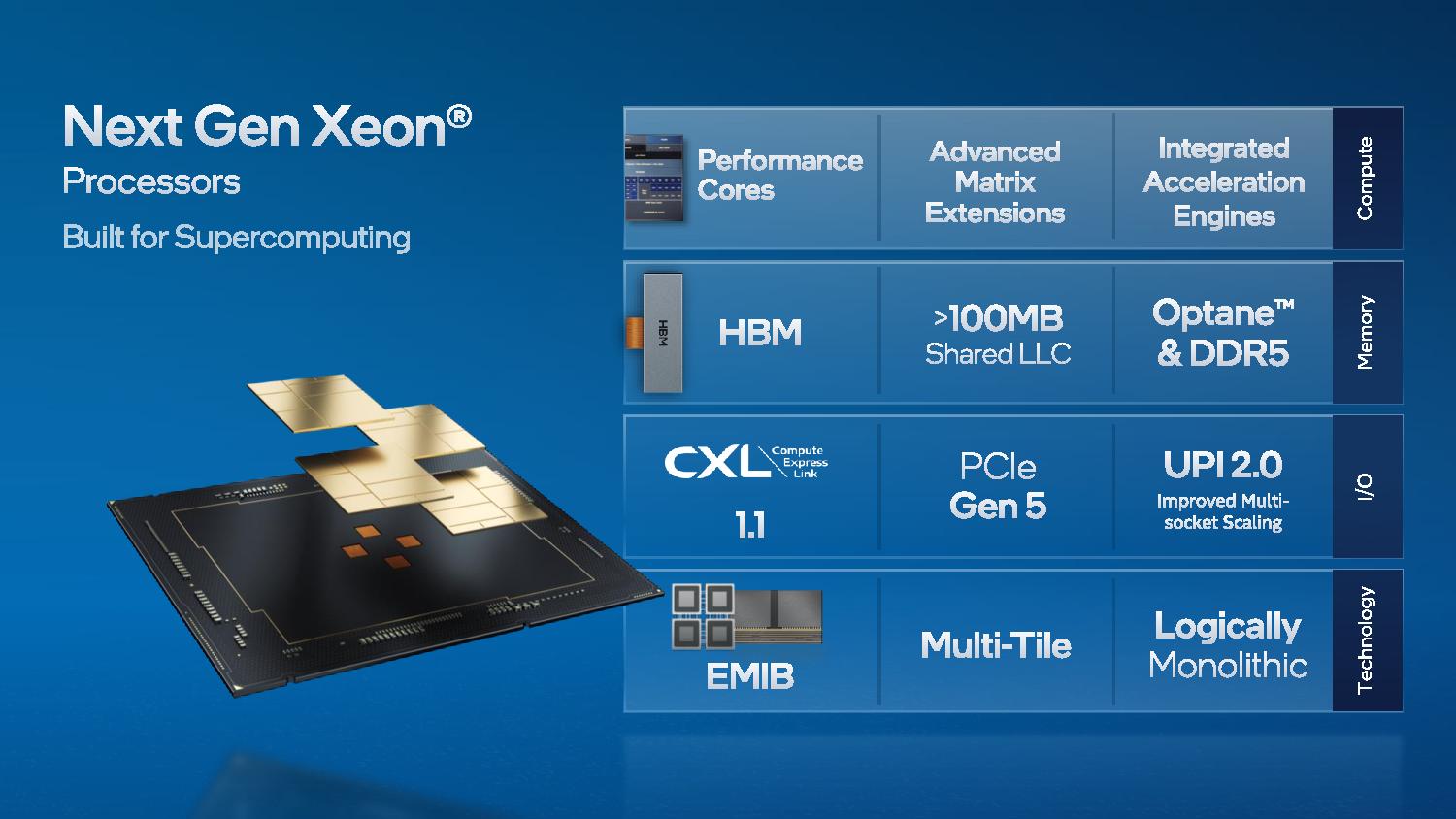

Sapphire Rapids uses Golden Cove cores fabbed on the Intel 7 process. The chips also support Intel Advanced Matrix Extensions (AMX) to improve performance in AI training and inference workloads.

All told, Intel's Sapphire Rapids data center chips come with up to 64GB of HBM2e memory, eight channels of DDR5, PCIe 5.0, and support for Optane memory and CXL 1.1, giving them a full roster of connectivity tech to compete with AMD's forthcoming Milan-X chips, which boost on-package memory capacity in a different way.

While Intel has gone with HBM2e for Sapphire Rapids, AMD has decided to boost L3 capacity using hybrid bonding technology to provide up to 768MB of L3 cache per chip. Sapphire Rapids will also grapple with AMD's forthcoming Zen 4 96-core Genoa and 128-core Bergamo chips, both fabbed on TSMC's 5nm process. Those chips also support DDR5, PCIe 5.0, and CXL interfaces.
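The 768MB figure breaks down per chiplet. This sketch assumes a top-end Milan-X part with eight core complex dies (CCDs), each carrying 32MB of on-die L3 plus a 64MB SRAM die hybrid-bonded on top; that is AMD's published configuration, but the breakdown is not given in this article. It also puts the two approaches side by side on capacity alone, using binary units (1 GB = 1024 MB) as an assumption for the comparison.

```python
# How Milan-X reaches 768MB of L3 per chip (assumed top-end configuration).
CCDS_PER_CHIP = 8            # core complex dies per chip
BASE_L3_MB_PER_CCD = 32      # L3 built into each CCD
STACKED_L3_MB_PER_CCD = 64   # SRAM hybrid-bonded on top of each CCD

total_l3_mb = CCDS_PER_CHIP * (BASE_L3_MB_PER_CCD + STACKED_L3_MB_PER_CCD)
print(f"Milan-X L3: {total_l3_mb} MB")  # 768 MB

# For scale: Sapphire Rapids' maximum HBM2e capacity in MB (binary units).
hbm_mb = 64 * 1024
print(f"HBM2e / L3 capacity ratio: {hbm_mb / total_l3_mb:.0f}x")
```

The HBM offers roughly 85 times more capacity, while the stacked SRAM sits much closer to the cores; the two designs target very different points on the capacity/latency curve.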

Intel says that the HBM-equipped Sapphire Rapids chips will be available to all of its customers as part of the standard product stack, and that they will drop into the same socket in general-purpose servers as the standard models. The HBM-equipped models will come to market a bit later than the HBM-less variants, though in the same rough time frame.

The Sapphire Rapids chip consists of four tiles interconnected via EMIB, but presents itself to the system as one monolithic die. HBM-equipped models can function without any DRAM present, or with both HBM and DDR5. The chip can also be subdivided into four NUMA nodes using Sub-NUMA Clustering (SNC).

The processor exposes the HBM in three modes: HBM-Only, Flat Mode, and Cache Mode. 'HBM-Only' mode lets the chip run without any DRAM in the system, and existing software works as normal.

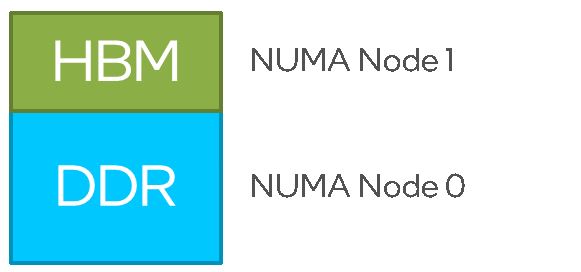

With DDR5 sticks in the system, the user can pick 'Flat Mode.' This makes both the DDR5 and HBM2e visible to the system, with each type of memory presented as its own NUMA node. This allows existing software to address the different memory types using standard NUMA programming approaches.
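Because Flat Mode exposes HBM as separate NUMA nodes, standard Linux tooling can locate it. A minimal sketch, assuming a Linux host with sysfs, and assuming (as is typical for memory-only nodes, but not stated in the article) that the HBM nodes show up with no CPUs attached. The helper names `classify_nodes`, `parse_mem_total_kb`, and `read_sysfs_nodes` are hypothetical.

```python
# List NUMA nodes and flag CPU-less ones, where Flat Mode HBM is assumed to appear.
import re
from pathlib import Path

SYSFS_NODE_ROOT = Path("/sys/devices/system/node")


def parse_mem_total_kb(meminfo: str) -> int:
    """Extract MemTotal (in kB) from a per-node meminfo file."""
    match = re.search(r"MemTotal:\s+(\d+)\s+kB", meminfo)
    return int(match.group(1)) if match else 0


def classify_nodes(nodes: dict[int, tuple[str, str]]) -> dict[str, list[int]]:
    """Split nodes into those with CPUs and memory-only (CPU-less) nodes.

    `nodes` maps node id -> (cpulist text, meminfo text).
    """
    result: dict[str, list[int]] = {"with_cpus": [], "memory_only": []}
    for node_id in sorted(nodes):
        cpulist, meminfo = nodes[node_id]
        if parse_mem_total_kb(meminfo) == 0:
            continue  # skip nodes that report no memory
        key = "with_cpus" if cpulist.strip() else "memory_only"
        result[key].append(node_id)
    return result


def read_sysfs_nodes(root: Path = SYSFS_NODE_ROOT) -> dict[int, tuple[str, str]]:
    """Read cpulist and meminfo for every nodeN directory under `root`."""
    nodes = {}
    for node_dir in root.glob("node[0-9]*"):
        node_id = int(node_dir.name[len("node"):])
        nodes[node_id] = (
            (node_dir / "cpulist").read_text(),
            (node_dir / "meminfo").read_text(),
        )
    return nodes


if __name__ == "__main__":
    if SYSFS_NODE_ROOT.exists():
        print(classify_nodes(read_sysfs_nodes()))
```

Once the HBM node is known, an unmodified program could be bound to it with `numactl --membind=<node> ./app`, or allowed to spill over into DDR5 with `numactl --preferred=<node> ./app`.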

'Cache Mode' uses HBM as a cache for the DDR5 memory. The memory controllers manage the HBM as a direct-mapped cache, which makes it transparent to software, so no code changes are required.
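In a direct-mapped cache, each block of DDR5 addresses maps to exactly one slot in the HBM, so lookups are cheap, but two hot addresses that map to the same slot keep evicting each other. The toy model below illustrates that behavior; the 64-byte line size and four-slot capacity are illustrative assumptions, not Intel's actual HBM cache parameters.

```python
# Toy direct-mapped cache: every block has exactly one slot it can occupy.
from typing import List, Optional


class DirectMappedCache:
    def __init__(self, num_lines: int, line_size: int = 64):
        self.num_lines = num_lines
        self.line_size = line_size
        # One tag per slot; None means the slot is empty.
        self.tags: List[Optional[int]] = [None] * num_lines
        self.hits = 0
        self.misses = 0

    def access(self, addr: int) -> bool:
        """Look up a byte address; return True on a hit, fill the slot on a miss."""
        block = addr // self.line_size
        index = block % self.num_lines  # the only slot this block may use
        tag = block // self.num_lines   # identifies which block occupies the slot
        if self.tags[index] == tag:
            self.hits += 1
            return True
        self.tags[index] = tag          # evict whatever was there
        self.misses += 1
        return False


cache = DirectMappedCache(num_lines=4, line_size=64)
conflict_stride = 4 * 64  # addresses this far apart map to the same slot
for _ in range(3):
    cache.access(0)
    cache.access(conflict_stride)
print(cache.hits, cache.misses)  # 0 6
```

The two addresses are exactly one cache size apart, so they alias to the same slot and never hit. A real 64GB HBM cache has a far larger stride, but the same aliasing effect means Cache Mode performance can still depend on memory access patterns.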


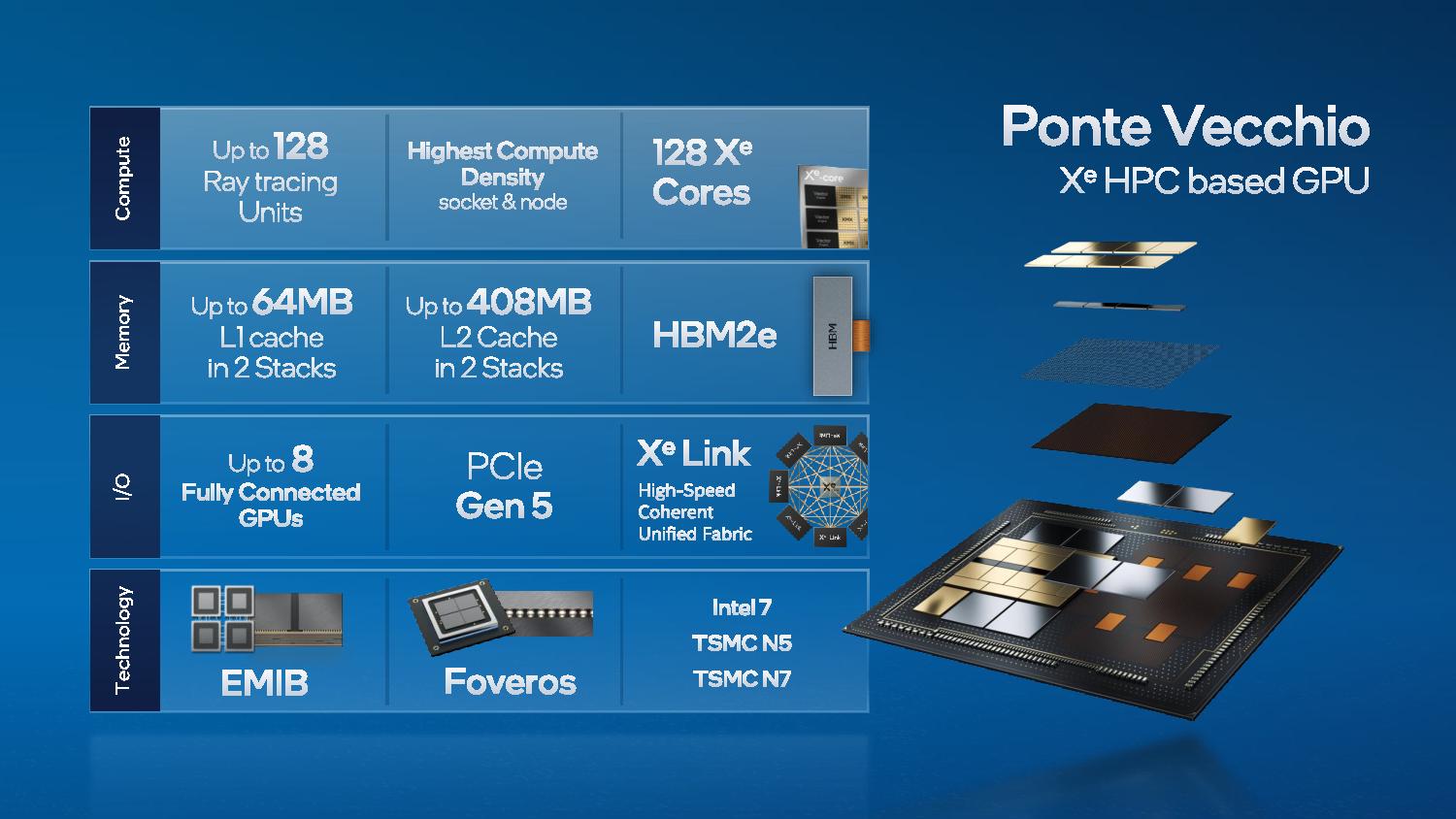

Intel also divulged that Ponte Vecchio GPUs will come with up to 408MB of L2 cache and 64MB of L1 cache in dual-stack models, along with HBM2e memory. These accelerators will power the forthcoming 2-ExaFLOP Aurora system, which pairs 54,000 Ponte Vecchio GPUs with 18,000 Sapphire Rapids processors, a three-to-one GPU-to-CPU ratio.

The Ponte Vecchio cards will come in both PCIe and OAM form factors. The PCIe versions can optionally link multiple cards over Xe Link through a separate bridge (similar to an Nvidia SLI bridge). Intel is using third-party contractors to build the PCIe cards, but they ship as Intel-branded products.

Intel is also bringing Sapphire Rapids systems with Ponte Vecchio GPUs to the general server market and is working with a broad spate of partners, including Atos, Dell, Lenovo, Supermicro, and HPE, among others, to provide qualified platforms at launch.

Intel is also talking up the EU's decision to use Ponte Vecchio GPUs with SiPearl's Rhea system-on-chip (SoC) for the European Processor Initiative. Rhea is an Arm chip that should feature up to 72 Arm Neoverse Zeus cores connected by a mesh interconnect, so making inroads into Arm-powered systems is an important win for Intel.

Intel seems to be running full force with its Sapphire Rapids-based systems. As reported by ServeTheHome, there were multiple sightings at the OCP Summit, including a Samsung system and a "Bodega Bay" 10U development platform.

Paul Alcorn is the Editor-in-Chief for Tom's Hardware US. He also writes news and reviews on CPUs, storage, and enterprise hardware.

dark_lord69: Haha 😂

AMD: "Check out our new 768MB L3 cache on our server CPUs"

Intel: "Hold my beer..."

Samipini (replying to dark_lord69): ?? What does HBM have to do with L3 cache?

dark_lord69: I should clarify: my post was more about the timing of Intel's announcement, not the tech.

JayNor: Intel also unveiled the 408MB L2 cache for Ponte Vecchio. No big announcement like AMD's V-Cache, but delivering it in the same timeframe.

Howardohyea (replying to Samipini): Technically, as the article said, the HBM can operate in "cache mode," functioning as a cache for the system. Assuming all 48GB of HBM, it can function like an extension of L3 or even as an L4 cache.

frogr (replying to Howardohyea): AMD claims a 10% access-time penalty compared to on-chip L3 cache. I think HBM access times would be significantly greater, so it could be an L4-like cache. Nonetheless, it will be interesting to see how much this can speed up certain applications. Blockchain mining, anyone? 😣