Neoverse Roadmap Helps Explain Why Nvidia Wants Arm

Nvidia recently announced its planned acquisition of ARM in a blockbuster $40 billion deal, and Nvidia CEO Jensen Huang's key messages include his belief in the future of ARM's data center architectures. Aside from leveraging the licensing model to broaden GeForce's penetration, Huang also says that Nvidia plans to accelerate ARM's CPU roadmap to deliver a faster cadence of innovation to licensees, and he even left the door open for Nvidia-branded ARM CPUs. Both moves could mark a fundamental shift in the balance of power in the data center.

Today, we can see why Huang is so bullish on ARM's future data center prospects. ARM unveiled its new V1 "Zeus" server core, touting up to a 50% gain in IPC over the current N1 core and the adoption of scalable vector extensions (SVE) and HBM2e, signaling that the Neoverse platform is moving ahead at breakneck speed on the performance side of the equation. We certainly don't typically see these types of generational leaps on the x86 side of the market.

ARM also unveiled its N2 platform, which is designed to pack as many cores as possible into a limited TDP while still improving IPC by 40%, showing that the company has options for scalability, too. The combination of up to 128 cores, HBM3, and the vastly improved single-threaded performance bodes well for high-performance scale-out implementations.

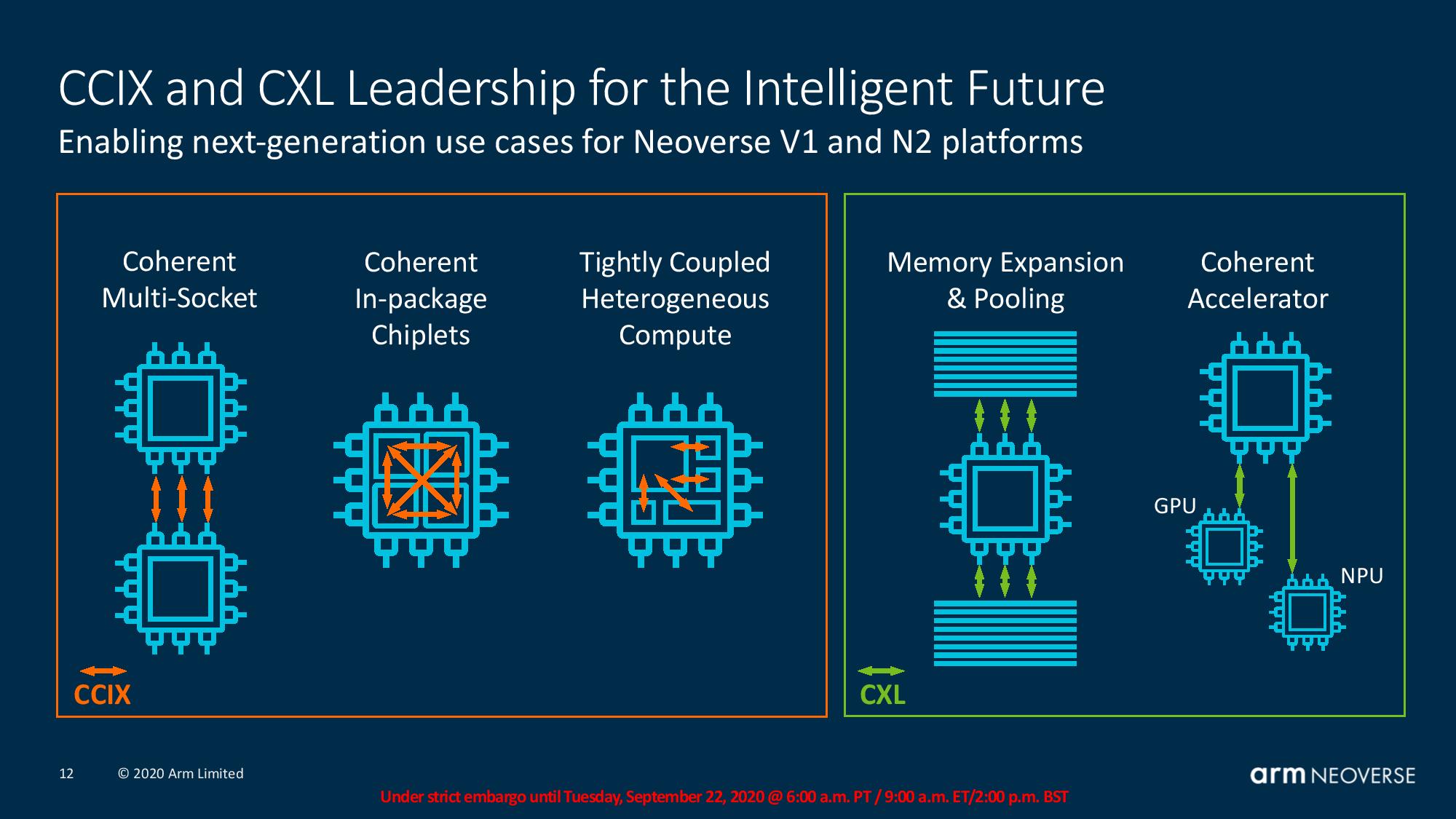

ARM also unveiled the broader strokes of its plans for next-gen coherent chiplet-based designs and heterogeneous compute solutions that leverage industry-standard protocols, showing that it also has its eyes further on the horizon and plans to match the leading-edge tech we see in AMD and Intel's x86 chips.

The two new designs bring in support for both 7nm and 5nm process nodes, PCIe 5.0, DDR5, and HBM2e/HBM3, all of which represent the bleeding edge of data center tech.

In light of the success of ARM's Neoverse platform, which has already achieved monumental feats over the last two years, ARM's roadmap vision, which stretches to 2022 in its public form, looks particularly threatening to the x86 data center duopoly of Intel and AMD. The roadmap also positions ARM for continued gen-on-gen performance gains that could soon outstrip x86 architectures.

Nvidia intends to further accelerate the already-impressive ARM roadmap when (and if) all goes to plan, taking the reins in ~2022. That would help enable the Neoverse platform to upset the industry in a shorter period of time.

Snapping Nvidia's IP into the ARM portfolio would enable new possibilities for both companies, such as tighter integration for Nvidia GPUs and networking with the ARM architecture. Nvidia's graphics and DPU IP could also serve as high-performance chiplets (possibly even in APU-style implementations) that would tie in well with ARM's chiplet-based ambitions.

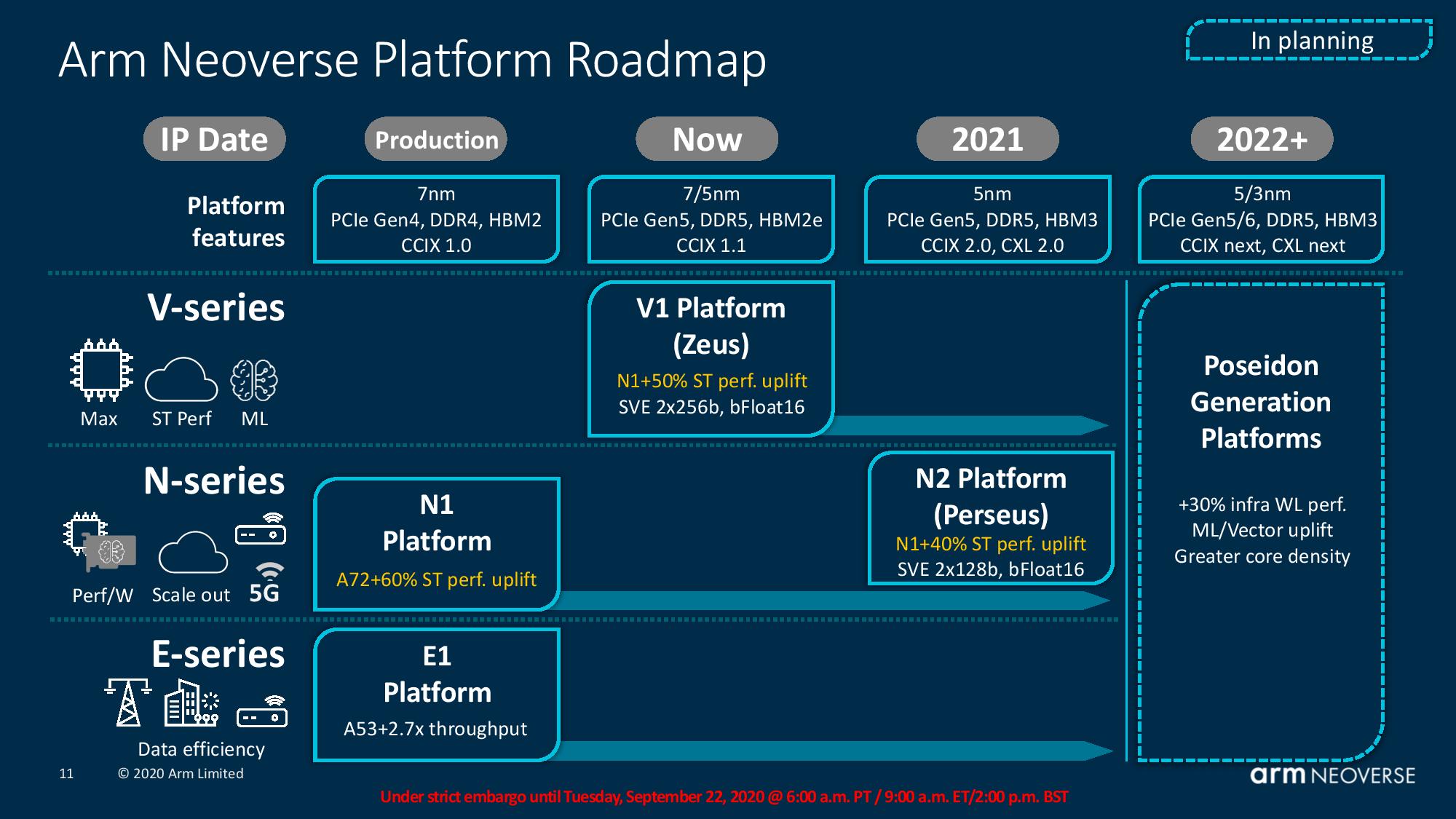

ARM Neoverse Roadmap

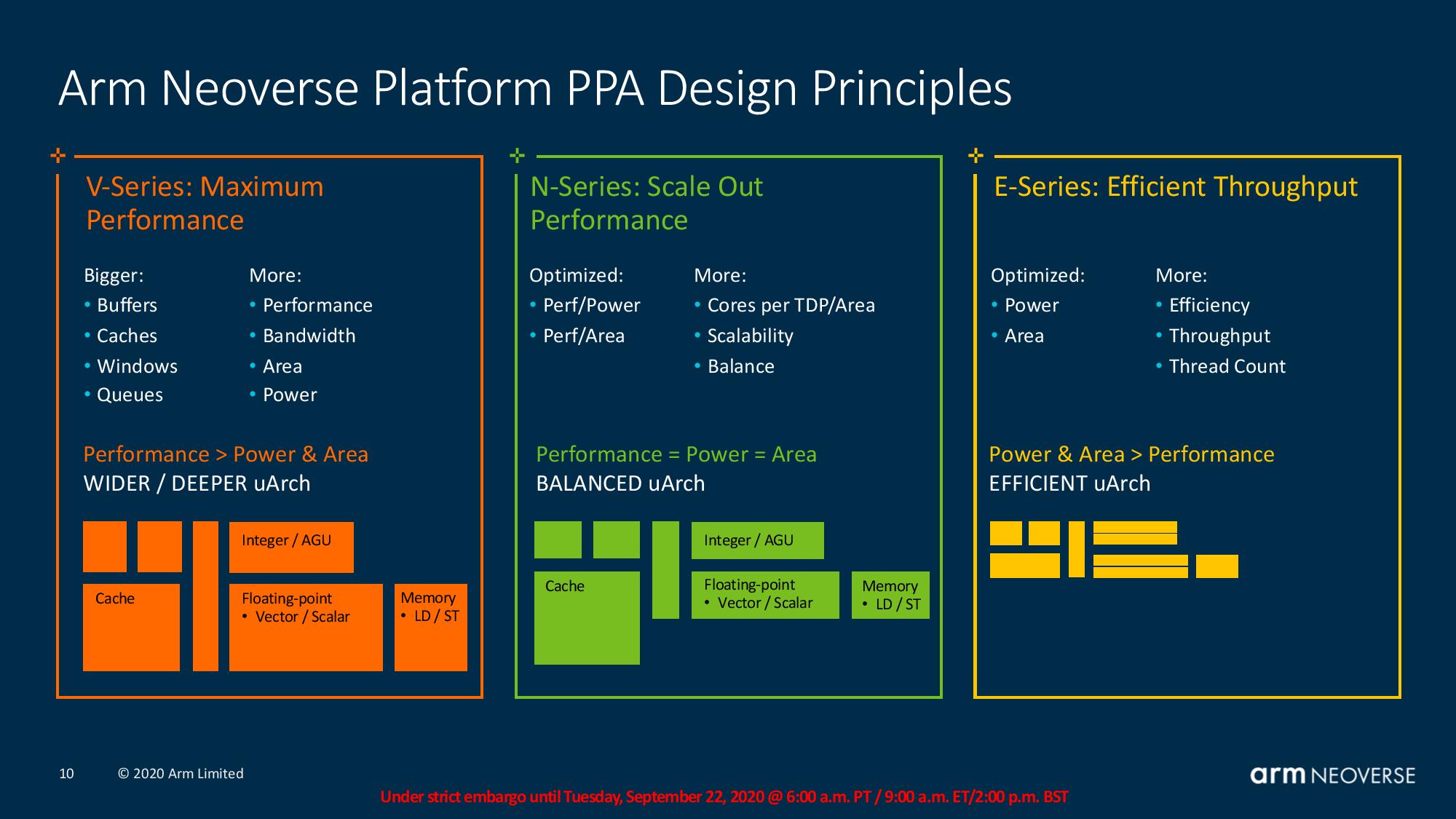

Like most semiconductor vendors, ARM designs its chips to meet specific power, performance and area targets (PPA), with adjustments on these axes defining the end solution. As such, each of ARM's three platforms is tuned for specific objectives:

- N-Series: Optimized for performance-per-power (watt) and performance-per-area

- E-Series: Optimized for power and area - efficiency-focused at the cost of performance

- V-Series: Optimized for maximum performance, at the cost of power and area

ARM's three-prong approach targets scale-out (N-Series), edge-type devices (E-Series), and high-performance compute, high-performance cloud, and machine learning (V-Series).

ARM also teased its next-gen Poseidon platforms that will come in 2022 and beyond. The company predicts a continued cadence of 30% IPC gains, and these designs will be based on either 5nm or 3nm process nodes to enable higher core counts. ARM also teased enhanced performance in vectorized/machine learning workloads. These chips will also adopt future tech, like PCIe 6.0, and as-yet-undefined new versions of the CCIX and CXL interfaces.

Chips based on "Zeus" V1 and N2 "Perseus" designs could come in either 7nm or 5nm flavors, support PCIe 5.0, DDR5, and either HBM2e or HBM3. In terms of coherent interconnects, V1 supports CCIX 1.1, while N2 steps forward to CCIX 2.0 and CXL 2.0.

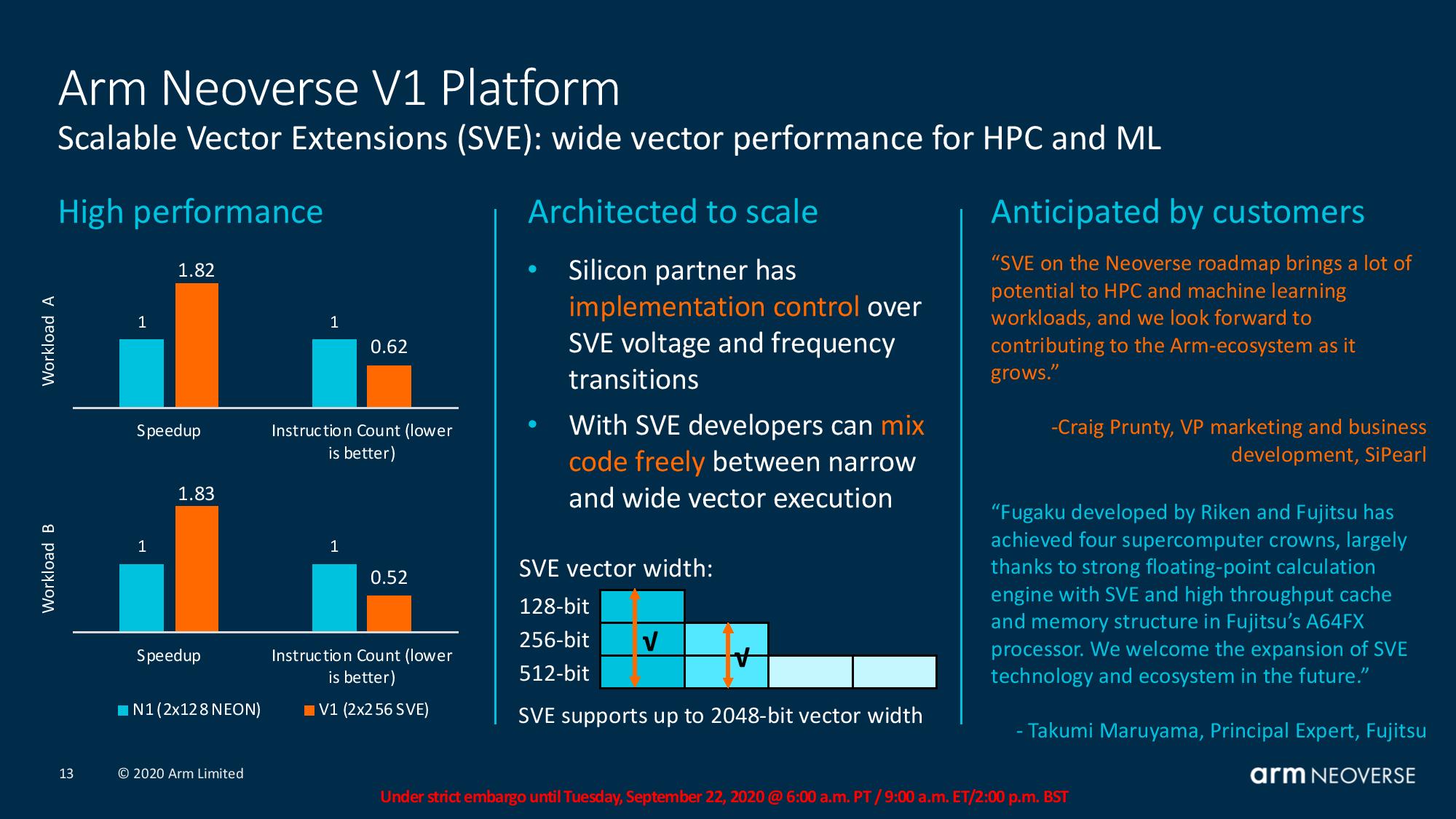

ARM Neoverse V1 Platform

ARM hasn't shared specific microarchitectural details yet, but predicts that V1 enables a massive 50% IPC (labeled as sT in the slides) uplift over the N1 platform. This exceeds the 30% target that ARM defined several years ago, and comes as the result of architectural optimizations like larger buffers, caches, windows and queues. Given possible increases in frequency due to process nodes and TDP limits, we could see even larger gains (more than 50%) when we zoom out to per-core performance in the final designs. These chips will top out at 96 single-threaded cores and support HBM2e.
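To put rough numbers on that last point, per-core performance scales approximately as IPC multiplied by clock frequency, so the two gains multiply rather than add. The sketch below is a back-of-the-envelope illustration only: the 50% IPC figure is ARM's projection, while the frequency gains and the `per_core_uplift` helper are hypothetical values and names chosen for the example, not figures ARM has shared.

```python
def per_core_uplift(ipc_gain: float, freq_gain: float) -> float:
    """Return the fractional per-core gain when IPC and clock speed both improve.

    Assumes performance ~ IPC x frequency, which ignores memory-bound
    behavior and other real-world effects.
    """
    return (1.0 + ipc_gain) * (1.0 + freq_gain) - 1.0


IPC_GAIN_V1 = 0.50  # ARM's projected V1 IPC uplift over N1

# Hypothetical clock-speed gains; ARM has not published frequency targets.
for freq_gain in (0.0, 0.05, 0.10):
    total = per_core_uplift(IPC_GAIN_V1, freq_gain)
    print(f"frequency +{freq_gain:.0%} -> per-core +{total:.1%}")
```

Under these assumptions, even a modest clock bump pushes the per-core gain noticeably past the 50% IPC figure.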

V1 is also ARM's first core design to implement SVE, which supports variable vector widths, like 2x256b, along with data types such as bFloat16. ARM charted out impressive gains relative to N1 in the slide above.

ARM also says that V1 allows licensees to have full control over SVE frequency and power targets. This will allow designers to avoid clock frequency reductions during vectorized workloads, unlike what we see with the various flavors of AVX on Intel platforms. Now architects can tune their chips for the expected amount of cooling, with water-cooled designs being able to unlock the heights of SVE performance (~500W). That said, ARM expects most of its customers to design for air cooling due to the high cost associated with liquid cooling.

ARM calls out Fujitsu as a good example of this type of tuning - the A64FX chips that power the world's fastest supercomputer, Fugaku, operate at their full frequency while executing SVE code. ARM's adoption of more diverse data types also isn't a problem with older code - SVE seamlessly transitions between different vector widths.

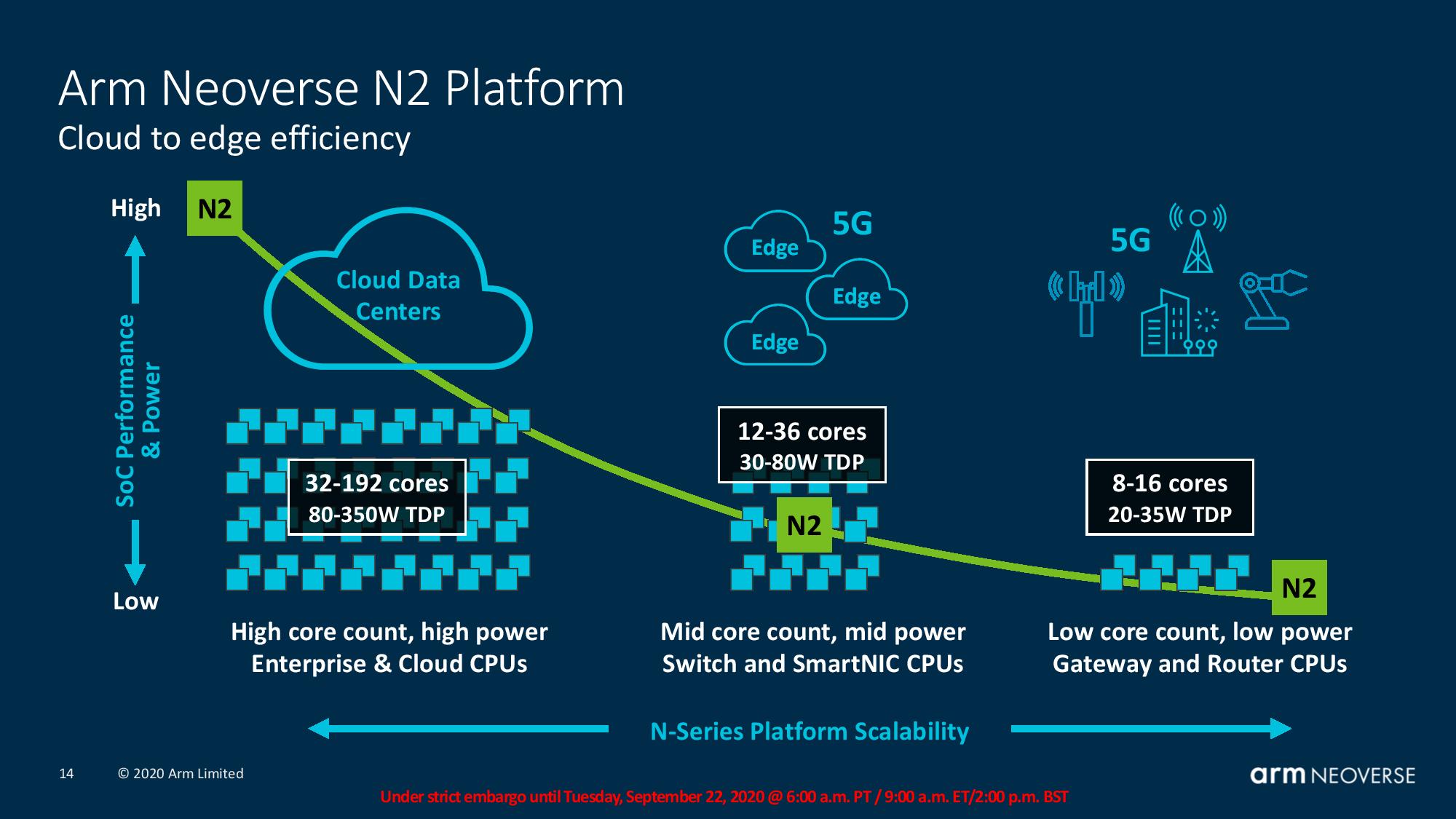

ARM Neoverse N2 Platform

N2 is designed to scale from large cloud applications down to edge devices. ARM says the N2 platform offers 40% higher IPC than the N1 platform, and again, frequency improvements could magnify the per-core performance uplift in the final designs. Notably, ARM says the N2 platform will retain the same power and area efficiency targets as N1.

This design, which also features single-threaded cores, is carved into three buckets (32-192 cores, 12-26 cores, and 8-16 cores) to satisfy the power and performance targets for different types of deployments. It also adopts the same SVE implementation as the V1 cores.

This platform will adopt a more advanced feature set than V1 - it steps up from HBM2e to HBM3 and also includes CCIX 2.0 and CXL 2.0 support.

ARM Neoverse Chiplet, CXL, CCIX Strategy

Both Intel and AMD are working furiously on next-gen interconnects and chiplet-based designs, but ARM is also developing its own approach to future chip design.

ARM painted its interconnect roadmap in broad strokes, but the company has already created a chip-level fabric that it says allows for linear core-count scaling in the N1 platform.

Beyond that on-chip fabric, the company is investing in the CCIX interface for bi-directional coherent communication between sockets and in-package chiplets, with the latter looking somewhat like AMD's Rome design. This will also expand to chip-to-chip coupling in the future, allowing the company to tie together accelerators (Nvidia graphics or DPU chiplets?) and memory into a single package.

ARM will turn to CXL, an Intel-spurred open industry standard that has garnered widespread adoption, for tying together coherent pools of memory across nodes, or intra-node persistent memory pools. The fabric will also enable connections between remote GPUs and NPUs, both of which slot nicely into Nvidia's oft-stated broader vision of data center architectures.

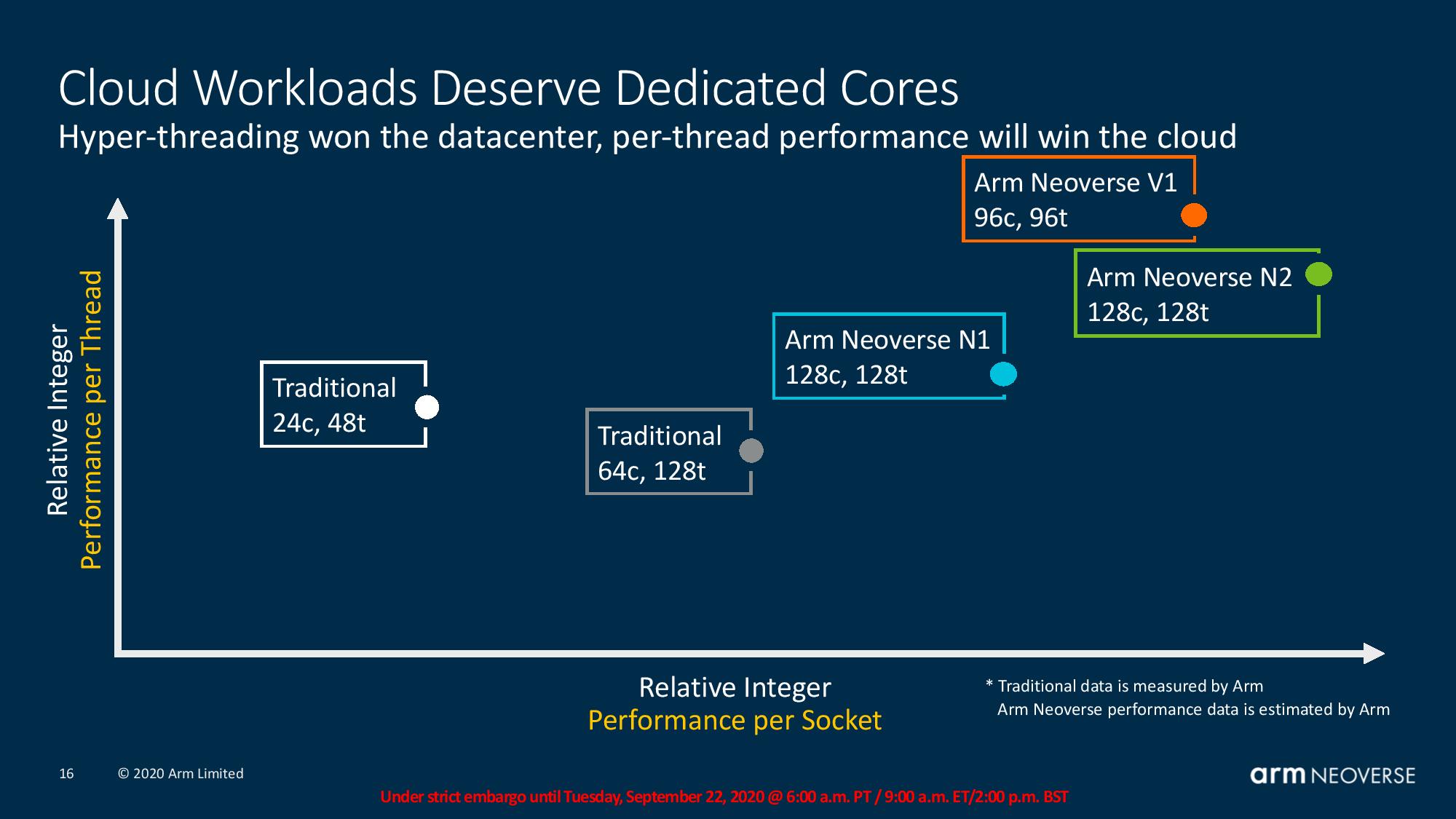

ARM hasn't provided firm projections for multi-threaded performance yet, but the first slide above shows how the family lines up when performance in integer workloads is plotted against performance-per-thread (remember, each core is single-threaded) and performance per socket. Note, these values are based on ARM's internal testing and/or emulations.

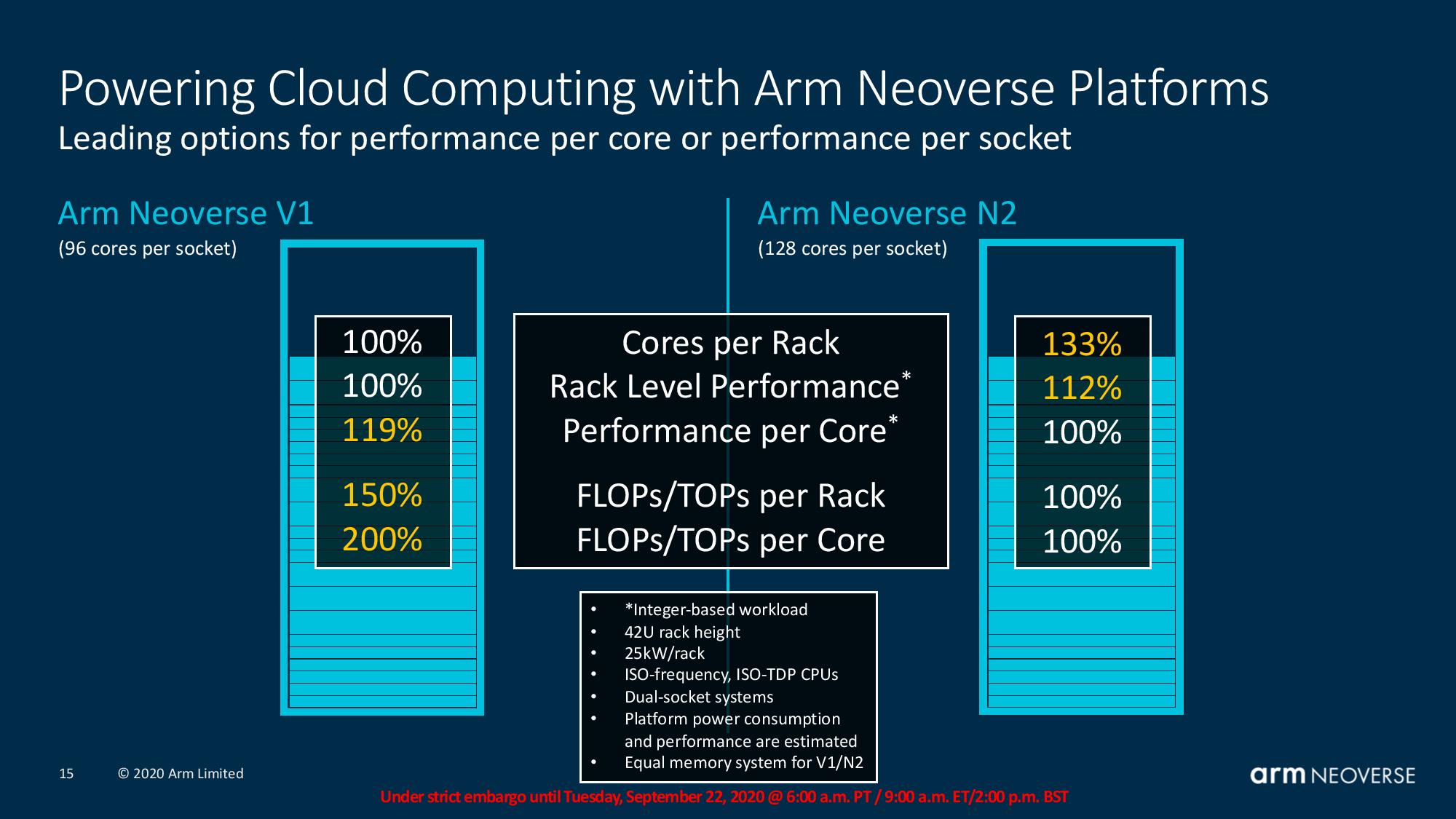

The second slide outlines a rack-level comparison between V1 and N2 in a typical big cloud deployment. ARM says N2 and V1 allow architects to design for different priorities within a standard 15kW rack power budget (42U) with dual-socket servers.
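A quick way to see the trade-off is to work out how many cores fit in such a rack under different per-server power draws. The sketch below is illustrative only: the 15kW budget, 42U height, dual-socket layout, and the 96-core (V1) and 128-core (N2) counts come from this article, while the per-server wattages, the 1U server height, and the function names are hypothetical assumptions, not ARM figures.

```python
RACK_POWER_W = 15_000     # rack power budget cited by ARM
RACK_UNITS = 42           # rack height in U
SOCKETS_PER_SERVER = 2    # dual-socket servers


def servers_per_rack(server_power_w: int, server_height_u: int = 1) -> int:
    """Servers that fit in the rack, limited by whichever runs out first: power or space."""
    by_power = RACK_POWER_W // server_power_w
    by_space = RACK_UNITS // server_height_u
    return min(by_power, by_space)


def cores_per_rack(cores_per_socket: int, server_power_w: int,
                   server_height_u: int = 1) -> int:
    """Total cores in a rack of identical dual-socket servers."""
    servers = servers_per_rack(server_power_w, server_height_u)
    return servers * SOCKETS_PER_SERVER * cores_per_socket


# Hypothetical per-server power draws, chosen only to show the trade-off.
print("V1, 96 cores/socket, 600 W server:", cores_per_rack(96, 600))
print("N2, 128 cores/socket, 400 W server:", cores_per_rack(128, 400))
```

With these made-up wattages, the higher-power design is limited by the rack's power budget well before it runs out of physical space, which is the kind of constraint the slide is describing.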

ARM has certainly gained meaningful traction with the N1 platform, particularly in the AWS Graviton2 chips, and Ampere has already announced new 128-core offerings coming this year. If ARM can deliver on its objectives with the V1 and N2 platforms, we're sure to see adoption accelerate in the future.

Snapping the Pieces Together

Nvidia's participation in fostering the ARM ecosystem has thus far consisted of enabling CUDA support on the platform. It's possible we could see increased collaboration between the two companies prior to the acquisition, and given Nvidia's existing licensing agreements with ARM, it wouldn't be entirely surprising if Nvidia begins working on new ARM-based hardware solutions of its own in the interim. Notably, Nvidia is already one of many industry stalwarts involved with the CXL interconnect, while Mellanox, now an Nvidia company, participates in the CCIX project.

ARM's plans for a 30%+ gen-on-gen IPC growth rate stretch into the next three iterations of its existing platforms (V1, N2, Poseidon), and will conceivably continue into the future. We haven't seen gen-on-gen gains in that range from Intel in recent history, and while AMD notched large gains with the first two Zen iterations, it might not be able to execute such large generational leaps in the future.
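Those gains compound. As a rough illustration, the sketch below takes the 30% figure at face value and treats the three iterations as successive steps, which is a simplification (V1 and N2 are sibling platforms that ARM measures against N1). The `compounded_ipc` helper is a hypothetical name for this example.

```python
def compounded_ipc(gain_per_gen: float, generations: int) -> float:
    """Cumulative IPC multiplier after a number of equal gen-on-gen gains."""
    return (1.0 + gain_per_gen) ** generations


GAIN = 0.30  # ARM's stated gen-on-gen IPC target

for gen in range(1, 4):
    multiplier = compounded_ipc(GAIN, gen)
    print(f"after {gen} generation(s): {multiplier:.2f}x baseline IPC")
```

Three 30% steps more than double the baseline IPC, which is why a sustained cadence matters more than any single generation's headline number.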

If ARM's projections play out in the real world, that puts the company not only on an intercept course with x86 (it's arguably already there in some aspects), but on a path to performance superiority.

The data center now comprises the majority of Nvidia's revenue, so naturally the company would like to acquire an asset with multiple architectures that could put it in the driver's seat for the future of compute, spanning from the phone in your hand to the edge, and then up to the Neoverse cores that could serve as the nerve center of the data center.

ARM is the only asset in the world that can fulfill those lofty goals, and that's why Nvidia is attempting to pull off the deal of the century to get it.

Paul Alcorn is the Editor-in-Chief for Tom's Hardware US. He also writes news and reviews on CPUs, storage, and enterprise hardware.