Nvidia Benchmarks Show 4080 16GB Up to 30% Faster Than 12GB Model

Nvidia's GeForce RTX 4080 16GB is 21% – 30% faster than the GeForce RTX 4080 12GB.

Nvidia has posted its first official performance numbers for its GeForce RTX 4080 12GB and GeForce RTX 4080 16GB graphics cards. The version with 16GB of memory appears to be 21% – 30% faster than its 12GB counterpart. Meanwhile, Nvidia's previous-generation flagship GeForce RTX 3090 Ti still matches or beats the GeForce RTX 4080 12GB, at least with DLSS disabled.

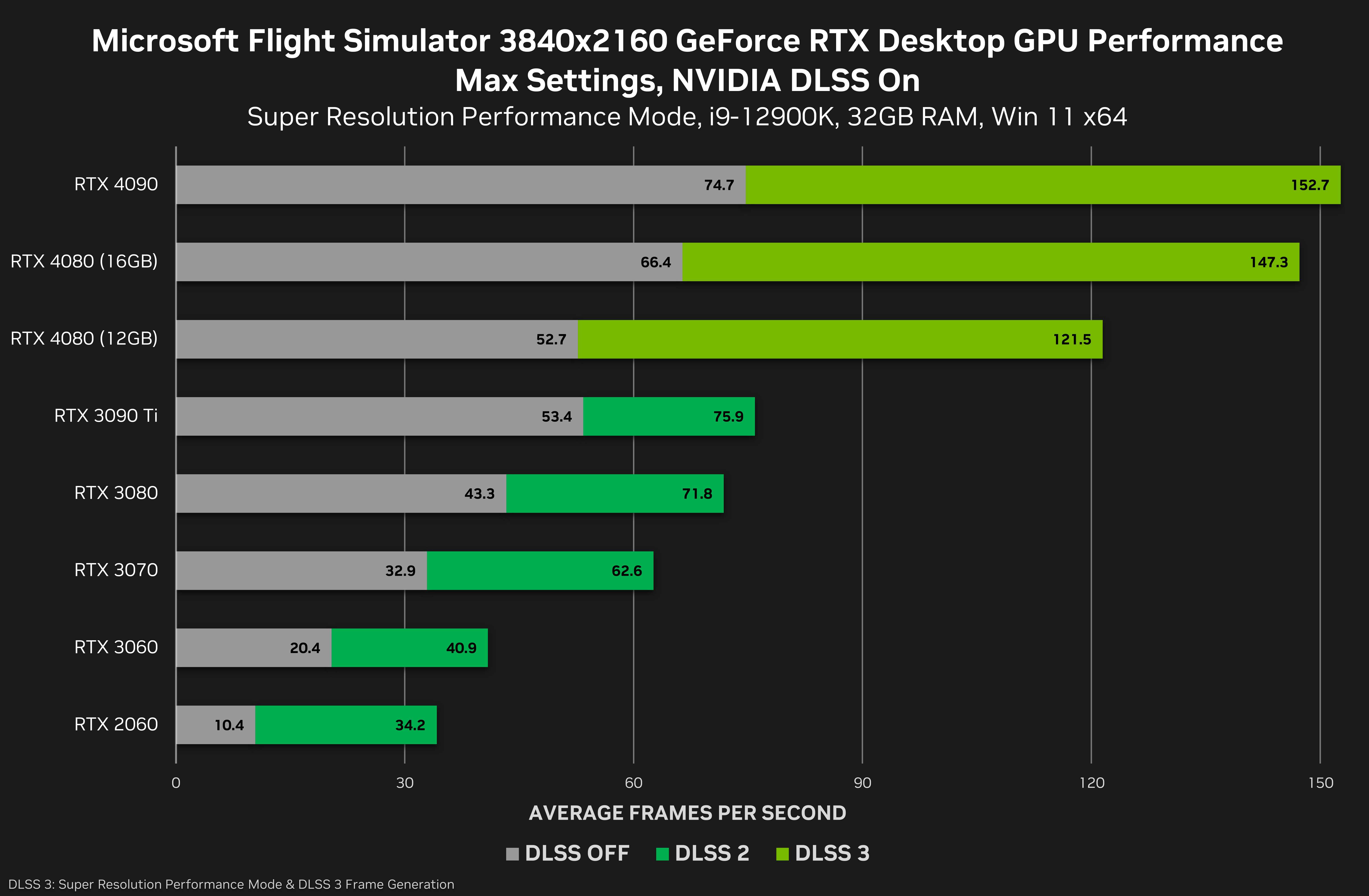

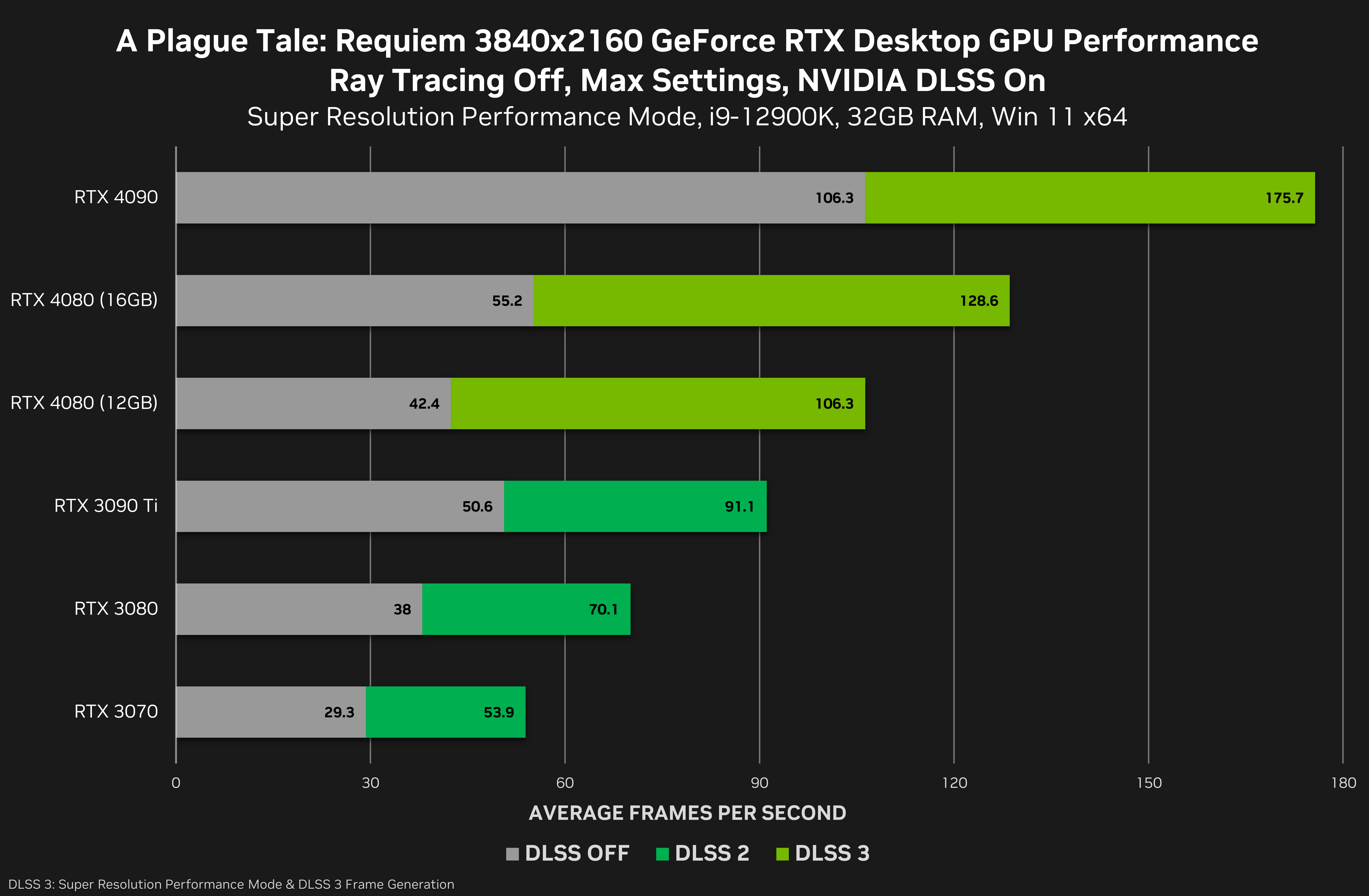

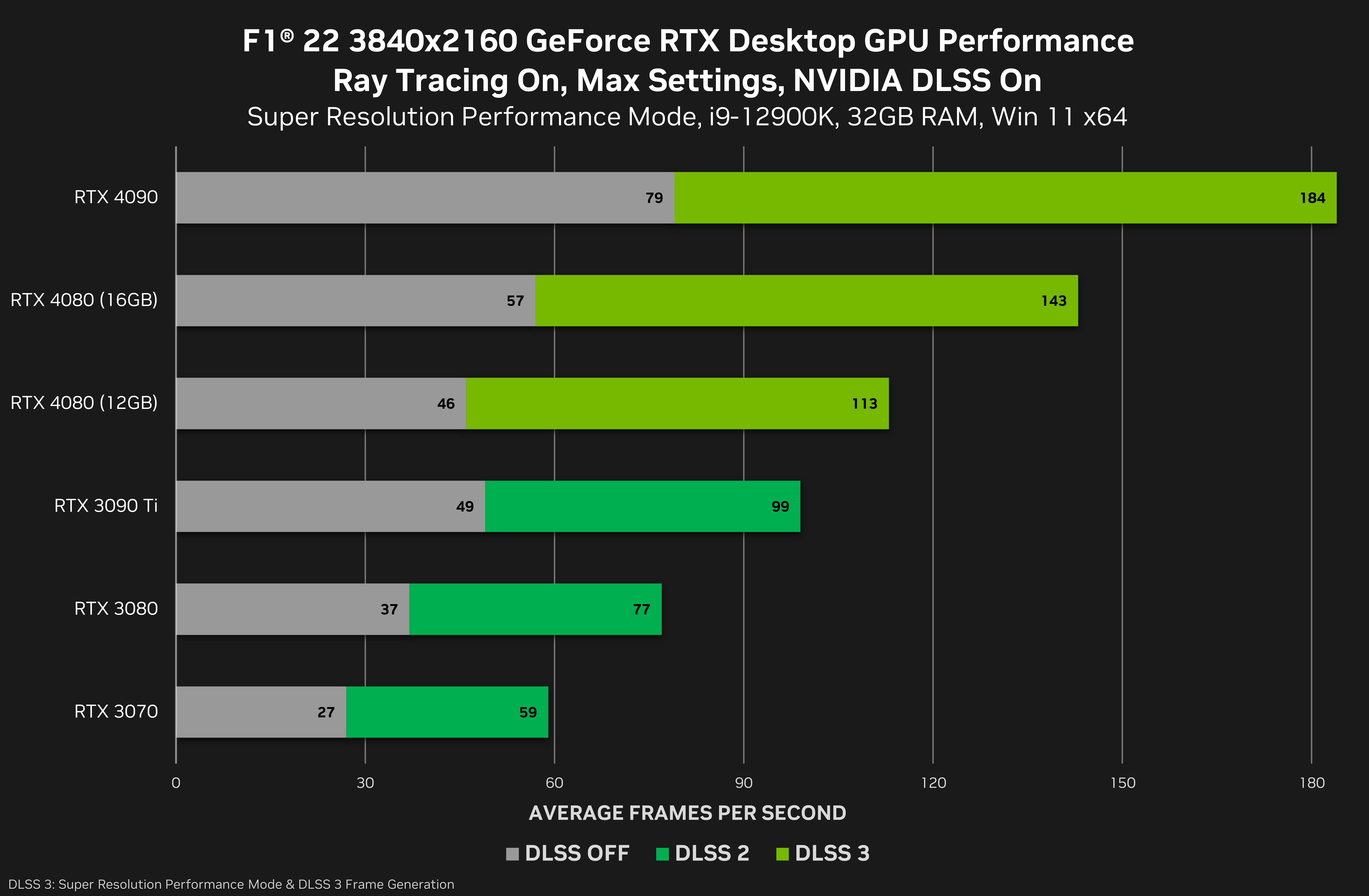

Nvidia compared its latest GeForce RTX 40-series graphics cards (including the flagship GeForce RTX 4090) against each other and against its previous-generation GeForce RTX 30-series products in Microsoft Flight Simulator, A Plague Tale: Requiem, and F1 22.

The comparison focused primarily on the performance advantages provided by the company's latest DLSS 3 technology (with optical multi-frame generation, resolution upscaling, and Nvidia Reflex), but Nvidia also included performance numbers without any enhancements. It also included its first official performance comparison of the GeForce RTX 4080 12GB and GeForce RTX 4080 16GB.

Traditionally, Microsoft's Flight Simulator games are tough on both CPUs and GPUs and the latest version is no exception. Both DLSS 2 and DLSS 3 bring significant performance uplift to the game, but so does Nvidia's new Ada Lovelace GPU architecture (perhaps courtesy of enhanced ray tracing performance).

With DLSS 3 on, the GeForce RTX 4080 16GB is 21% faster than the GeForce RTX 4080 12GB; without DLSS 3, the 16GB version is 26% faster than the 12GB. Without DLSS 3, the GeForce RTX 4080 12GB roughly matches the GeForce RTX 3090 Ti 24GB. That is not bad at all, given that the GeForce RTX 3090 Ti is still one of the best gaming graphics cards today.

For some reason Nvidia decided to test A Plague Tale: Requiem without enabling ray tracing, but with DLSS technologies enabled. DLSS 3 brings even more tangible performance enhancements than DLSS 2, so all GeForce RTX 40-series boards are substantially faster than their predecessors.

In this test, the GeForce RTX 4080 16GB is 21% faster than the GeForce RTX 4080 12GB with DLSS 3 enabled, and is 30% faster with DLSS 3 disabled. Meanwhile, the GeForce RTX 3090 Ti is 19% faster than the GeForce RTX 4080 12GB without DLSS enabled. Also, the new GeForce RTX 4080 12GB is only 4 frames per second (fps), or about 12%, faster than the original GeForce RTX 3080 in this test.


In F1 22 you want the highest framerate you can get, so both DLSS 2 and DLSS 3 are helpful. The new version is somewhat more efficient thanks to its optical multi-frame generation, and Ada Lovelace boards are clearly faster than Ampere boards in this title.

With DLSS 3 enabled, the GeForce RTX 4080 16GB is 27% faster than its 12GB sibling, and is 24% faster without DLSS 3 enabled. Also, the GeForce RTX 3090 Ti turns out to be 7% faster than the GeForce RTX 4080 12GB without DLSS enabled. Still, the GeForce RTX 4080 12GB is consistently faster than the original GeForce RTX 3080.

While Nvidia's GeForce RTX 4080 12GB and GeForce RTX 4080 16GB carry the same model number, they are based on completely different graphics processors (AD104 vs. AD103) and feature different CUDA core counts (7680 vs. 9728). Combined with different memory bandwidth (504 GB/s vs. 717 GB/s), this means the two models are much further apart in performance than you would typically expect from graphics boards carrying the same model number.
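To put the spec gap in perspective, here is a minimal Python sketch that turns the core counts and bandwidth figures quoted above into percentage differences. The dictionary layout and the `percent_more` helper are illustrative names, not anything published by Nvidia.

```python
# Spec figures as quoted in the article; the data layout is illustrative.
specs = {
    "RTX 4080 12GB": {"cuda_cores": 7680, "bandwidth_gb_s": 504},
    "RTX 4080 16GB": {"cuda_cores": 9728, "bandwidth_gb_s": 717},
}


def percent_more(larger, smaller):
    """Return how much bigger `larger` is than `smaller`, in percent."""
    return (larger / smaller - 1.0) * 100.0


small = specs["RTX 4080 12GB"]
big = specs["RTX 4080 16GB"]

core_gap = percent_more(big["cuda_cores"], small["cuda_cores"])
bandwidth_gap = percent_more(big["bandwidth_gb_s"], small["bandwidth_gb_s"])

print(f"CUDA cores:       +{core_gap:.1f}%")
print(f"Memory bandwidth: +{bandwidth_gap:.1f}%")
```

On these numbers the 16GB card has roughly 27% more CUDA cores and about 42% more memory bandwidth, which is in the same ballpark as the 24% – 30% gap Nvidia reports with DLSS disabled. Clock speeds and other factors are not modeled here.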

For an easier comparison, we've combined the relative performance differences between Nvidia's latest desktop GPUs into a table.

Relative Performance of GeForce RTX 40-Series and GeForce RTX 30-Series Graphics Cards (without DLSS)

| Game | GeForce RTX 4080 12GB | GeForce RTX 4080 16GB | GeForce RTX 3090 Ti 24GB | GeForce RTX 4090 24GB |
| --- | --- | --- | --- | --- |
| MS Flight Simulator | 100% | 126% | 101% | 142% |
| A Plague Tale: Requiem | 100% | 130% | 119% | 251% |
| F1 22 | 100% | 124% | 107% | 172% |
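Because every column is normalized to the GeForce RTX 4080 12GB, other pairings have to be derived by dividing one column by another. The short Python sketch below does that for the table's figures. The helper names `percent_faster` and `percent_slower` are our own, and the results inherit the rounding of the table's percentages.

```python
# Relative performance without DLSS, copied from the table (RTX 4080 12GB = 100).
relative = {
    "MS Flight Simulator": {"4080 12GB": 100, "4080 16GB": 126, "3090 Ti": 101, "4090": 142},
    "A Plague Tale: Requiem": {"4080 12GB": 100, "4080 16GB": 130, "3090 Ti": 119, "4090": 251},
    "F1 22": {"4080 12GB": 100, "4080 16GB": 124, "3090 Ti": 107, "4090": 172},
}


def percent_faster(a, b):
    """How much faster score `a` is than score `b`, in percent."""
    return (a / b - 1.0) * 100.0


def percent_slower(a, b):
    """How much slower score `a` is than score `b`, in percent."""
    return (1.0 - a / b) * 100.0


for game, scores in relative.items():
    # The same gap expressed from the slower card's point of view.
    deficit_12gb = percent_slower(scores["4080 12GB"], scores["4080 16GB"])
    # Step up from the 4080 16GB to the 4090.
    gain_4090 = percent_faster(scores["4090"], scores["4080 16GB"])
    print(f"{game}: 12GB is {deficit_12gb:.1f}% slower than 16GB; "
          f"4090 is {gain_4090:.1f}% faster than 16GB")
```

Note that "X% faster" and "X% slower" are not interchangeable: where the 16GB card is 30% faster, the 12GB card is about 23% slower, because the baseline changes.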

Anton Shilov is a contributing writer at Tom’s Hardware. Over the past couple of decades, he has covered everything from CPUs and GPUs to supercomputers and from modern process technologies and latest fab tools to high-tech industry trends.


blacknemesist: Damn, the 4080s really pale in comparison to the 4090 by quite a bit.

This really is the generation where diminishing returns are not a thing (apparently; still waiting on AMD and real 4080 benchmarks vs. selective ones).

Quite odd how MSFS is the only title where the 4080 16GB gains more over the 12GB than the 4090 does over the 4080 16GB, that being 26% and 16% respectively, or 0/26/16. Maybe the CPU is capped?

sizzling: I would be very interested to see how the 4080 16GB and 4090 do in 8K using medium, high and max settings. I get the feeling 60 fps may be feasible.

JarredWaltonGPU (replying to blacknemesist): MSFS is 100% CPU limited on a 4090, even at 4K. DLSS 3 doubles the performance because of this.

kal326: Now the only thing Nvidia needs to drop is the charade that there is such a thing as a 4080 12GB. A 4070 by any other name is still overpriced.

-Fran- (replying to JarredWaltonGPU): Hm... I'll nitpick this, sorry: "DLSS3 doubles the performance". No... Any form of interpolation doesn't increase "performance", it just hides bad/low framerates using the GPU's extra room and that's all. I get the idea behind simplifying it, but don't give nVidia's marketing any funny ideas, please. EDIT: No ascii faces; sadge.

As for the reporting itself... Well, nVidia thinks it's better to justify an 80-class at $900 instead of a 70-class, so nothing much to do there. That being said, it would've been nice to actually see a 4070 with 12GB beat the 3090 Ti for a repeat of the 2080 Ti memes. Such a missed opportunity, nVidia. For reals.

Looking forward to power numbers, as I'm suspecting these 2 SKUs, no matter how nVidia decided to call them, to be quite good performers per power unit (efficient?). Not sure if price-wise they'd be justified, but we'll see. Plus, these are the ones most people (maybe, kinda?) can get xD

Regards.

btmedic04: It's incredibly scummy of Nvidia to think we're dumb enough to buy into the 4080 12GB being anything other than a $900 70-class part, and the performance difference really proves it here.

helper800 (replying to kal326): In my opinion it's more like 4080 12GB = 3060 tier, 4080 16GB = 3070 tier, 4090 24GB = 3090 tier. This is on the premise that the elephant in the room, the 4090 Ti, is 15%+ more powerful than the 4090. I support my conclusion by comparing die sizing with the 3000 series equivalents and the performance disparity between the 4080 16GB and the 4090. The 4080 12GB comes in at 295 mm² and the 3060 is at 276 mm², the 4080 16GB comes in at 379 mm² and the 3060 Ti / 3070 is at 392 mm², the 4090 is a 608 mm² chip while the 3080 Ti / 3090 / 3090 Ti is at 628 mm². There is plenty of room for a 4080 Ti card and a 4090 Ti card.

helper800 (replying to -Fran-): Seems we have already been conditioned to pay double for similar tier parts of 7+ years ago. Gone are the days of getting an XX70 tier part like the 970 or 1070 for less than 400 dollars. Now we get similar tier parts for 700+ dollars. It's like Moore's law has infected not only the performance, but the price too.

-Fran- (replying to helper800): Well, in regards to the specific bit of Moore's Law: Intel was very explicit in telling nVidia/Jensen to STFU with that, no?

And this is not their first rodeo with the die naming/size and card naming relative to pricing tiers. They know they can get away with it.

I think it was Gordon from PCWorld* that said it best: "as long as you keep buying these at those price points, whining won't do diddily squat". And he's absolutely right. It appears to me there's plenty of people with enough money to just accept these price hikes like nothing, as nVidia keeps doing it, so... Not sure what else to say or do here. Sadge.

Regards.