Tom's Hardware Verdict

The EVGA GeForce GTX 1650 GDDR6 is faster than the GTX 1650 GDDR5, but the 1650 Super is 20% faster for basically the same price, making it the superior option.

Pros

- Same price as the GTX 1650 GDDR5
- 14% faster than GTX 1650 GDDR5
- Efficient Turing architecture

Cons

- Basically same price as GTX 1650 Super
- 16% slower than GTX 1650 Super
- TU117 lacks enhanced Turing NVENC


The EVGA GeForce GTX 1650 GDDR6 is a better card in every way compared to the original GeForce GTX 1650 cards with GDDR5. With the same basic GPU (and slightly slower clocks) but 50% more memory bandwidth thanks to the switch to GDDR6, it's an easy win in a head-to-head comparison. The problem is that the GTX 1650 doesn't exist in a vacuum, and with the GTX 1650 Super essentially selling for the same price (maybe $10 more), the Super is clearly the budget pick for the best graphics card. We've added the GTX 1650 GDDR6 to our GPU hierarchy, and it lands near the bottom of the charts in both performance and price, much as you'd expect.

Put simply, the current budget GPU market is both confusing and underwhelming. Where have all the good budget cards gone? The previous generation had the GeForce GTX 1050 and GTX 1050 Ti from Nvidia, priced around $110 and $140, respectively. Meanwhile, AMD offered the Radeon RX 560 4GB at prices ranging from $100 to $120. More recently, the Radeon RX 570 4GB basically killed off demand for most other ultra-budget GPUs, assuming your PC could handle the 6-pin or 8-pin PEG power requirement.

The latest AMD and Nvidia budget GPUs cost nearly 50% more than the previous generation, and they're potentially up to 65% faster, mostly thanks to the GTX 1050 only having 2GB of VRAM. But it's been 3.5 years since the 1050 and 1050 Ti launched, and one year since the GTX 1650 landed. Prices have stagnated, and neither AMD nor Nvidia appears willing to target the sub-$150 market with new GPUs right now.

A few recent cards might get there with mail-in rebates, but the true budget graphics cards are uninspiring. You're far better off spending up a bit for a $200-$230 GPU like the GeForce GTX 1660 and GeForce GTX 1660 Super. Or maybe in a few months we'll see the GTX 1650 (both GDDR5 and GDDR6 variants) drop in pricing, which would help tremendously. Right now, they're basically a $20-$30 price cut away from being 'great' budget cards.

EVGA GTX 1650 GDDR6 Specifications

| Graphics Card | GTX 1660 | GTX 1650 Super | GTX 1650 GDDR6 | GTX 1650 |
|---|---|---|---|---|
| GPU | TU116 | TU116 | TU117 | TU117 |
| Process (nm) | 12 | 12 | 12 | 12 |
| Transistors (Billion) | 6.6 | 6.6 | 4.7 | 4.7 |
| Die Size (mm²) | 284 | 284 | 200 | 200 |
| SMs / CUs | 22 | 20 | 14 | 14 |
| GPU Cores | 1408 | 1280 | 896 | 896 |
| Base Clock (MHz) | 1530 | 1530 | 1410 | 1485 |
| Boost Clock (MHz) | 1785 | 1725 | 1590 | 1665 |
| VRAM Speed (Gbps) | 8 | 12 | 12 | 8 |
| VRAM (GB) | 6 | 4 | 4 | 4 |
| VRAM Bus Width (bits) | 192 | 128 | 128 | 128 |
| ROPs | 48 | 48 | 32 | 32 |
| TMUs | 88 | 80 | 56 | 56 |
| GFLOPS (Boost) | 5027 | 4416 | 2849 | 2984 |
| Bandwidth (GBps) | 192 | 192 | 192 | 128 |
| TDP (watts) | 120 | 100 | 75 | 75 |
| Launch Date | Mar 2019 | Nov 2019 | Apr 2020 | Apr 2019 |
| Launch Price | $219 | $159 | $149 | $149 |

Nvidia currently offers four different GPUs that generally fall in the sub-$200 range: the GTX 1650, GTX 1650 GDDR6, GTX 1650 Super, and GTX 1660. All are manufactured using TSMC's 12nm FinFET lithography, and we'll have to wait for Nvidia's Ampere GPUs before Nvidia shifts to 7nm or 8nm lithography. The TU117 GPU in the GTX 1650 (both GDDR5 and GDDR6) supports up to 16 SMs (Streaming Multiprocessors), each with 64 CUDA cores. The full TU117 so far has only shown up in the mobile GTX 1650 Ti, however, with the desktop GTX 1650 models enabling 14 SMs. That means 896 FP32 CUDA cores and 56 TMUs (texture mapping units).
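The derived rows in the specifications table follow from the SM count, boost clock, memory speed, and bus width. The Python sketch below shows that arithmetic for the four cards. The `GpuSpec` class and its field names are hypothetical, the four-TMUs-per-SM figure is inferred from the table (56 TMUs across 14 SMs), and the GFLOPS formula assumes the usual two FP32 operations per core per clock.

```python
from dataclasses import dataclass

CORES_PER_SM = 64  # from the text: each Turing SM has 64 FP32 CUDA cores
TMUS_PER_SM = 4    # assumption inferred from the table (56 TMUs / 14 SMs)


@dataclass
class GpuSpec:
    """Hypothetical container for the table inputs of one card."""
    name: str
    sms: int
    boost_mhz: int
    vram_gbps: float
    bus_width_bits: int

    @property
    def cuda_cores(self) -> int:
        return self.sms * CORES_PER_SM

    @property
    def tmus(self) -> int:
        return self.sms * TMUS_PER_SM

    @property
    def gflops(self) -> float:
        # Assumes 2 FP32 operations per core per clock (one fused multiply-add).
        return self.cuda_cores * 2 * self.boost_mhz / 1000

    @property
    def bandwidth_gbytes(self) -> float:
        # Per-pin speed (Gbps) times bus width (bits), divided by 8 bits per byte.
        return self.vram_gbps * self.bus_width_bits / 8


CARDS = [
    GpuSpec("GTX 1660", 22, 1785, 8, 192),
    GpuSpec("GTX 1650 Super", 20, 1725, 12, 128),
    GpuSpec("GTX 1650 GDDR6", 14, 1590, 12, 128),
    GpuSpec("GTX 1650", 14, 1665, 8, 128),
]

for card in CARDS:
    print(
        f"{card.name}: {card.cuda_cores} cores, {card.tmus} TMUs, "
        f"{card.gflops:.0f} GFLOPS, {card.bandwidth_gbytes:.0f} GBps"
    )
```

Under these assumptions, the GDDR6 card's bandwidth works out to 1.5 times that of the GDDR5 card (192 GBps versus 128 GBps), while its lower reference boost clock leaves it with slightly less theoretical compute.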

Clock speeds also vary slightly among the models, and as usual, the AIB partners are free to deviate. Officially, the reference spec on the GTX 1650 GDDR5 is a 1485 MHz base clock and 1665 MHz boost clock, while the GTX 1650 GDDR6 has a 1410 MHz base clock and 1590 MHz boost clock. The EVGA GTX 1650 GDDR6 card, on the other hand, has a 1710 MHz boost clock, because it's the SC Ultra Gaming edition — the other EVGA option being an SC Ultra Black edition that has a 1605 MHz boost clock and currently costs $10 more.

One key difference not listed in the above table is video codec support. The GTX 1650 and 1650 GDDR6 use the Turing TU117 GPU, while the GTX 1650 Super and GTX 1660 use the TU116 GPU. Besides offering more cores and performance, TU116 also includes the latest NVENC video block that supports encoding and decoding a variety of video formats. Generally, it delivers equivalent or superior quality to CPU-based encoding. Even the lowly GTX 1650 Super has the same capabilities as the RTX 2080 Ti in this area. TU117, on the other hand, uses the same NVENC as the previous-generation Pascal GPUs. It's not terrible, but it's definitely not as good as the Turing encoder.


EVGA GTX 1650 GDDR6 SC Ultra: A Closer Look

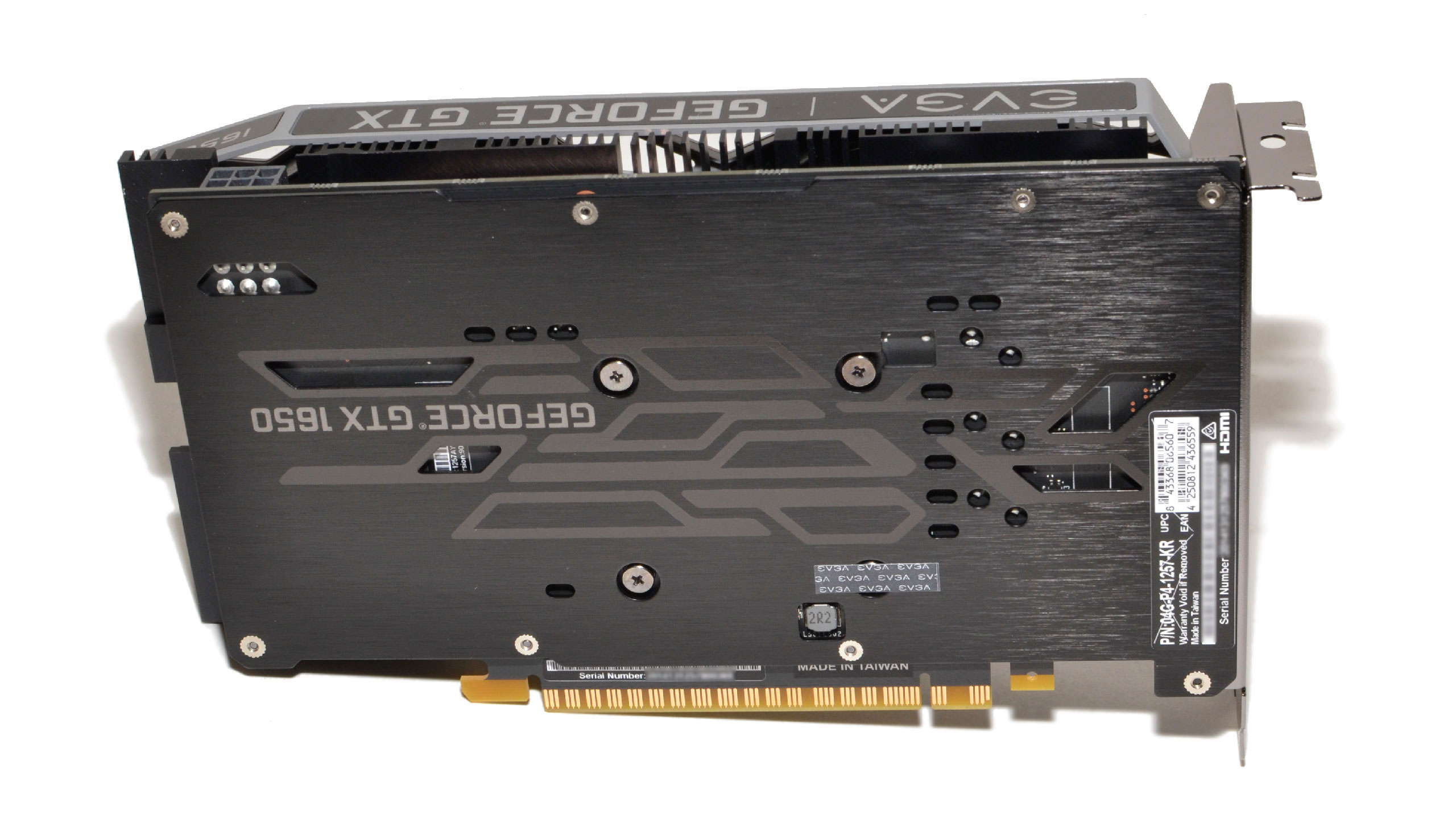

The EVGA GTX 1650 SC Ultra GDDR6 variant uses a relatively compact design. It measures 202.2 x 111.2 x 37.3 mm (7.96 x 4.38 x 1.47 inches) and weighs 565g (1.24 lbs). It's a full 2-slot card and extends about 5cm past the end of the PCIe slot, but it should fit in nearly any PC case that's designed to work with a dedicated GPU.

It's nice to see that, even on a budget GPU, EVGA still includes a full-coverage metal backplate. It might theoretically help with cooling the card, but that seems unnecessary. The main benefit we see is that it protects the delicate components on the back of the graphics card from accidental damage. I'm not going to name names, but I have a 'friend' who may have damaged an R9 290X back in the day when a small component (resistor or capacitor) got hit by a screwdriver while he was putting together a PC. Oops.

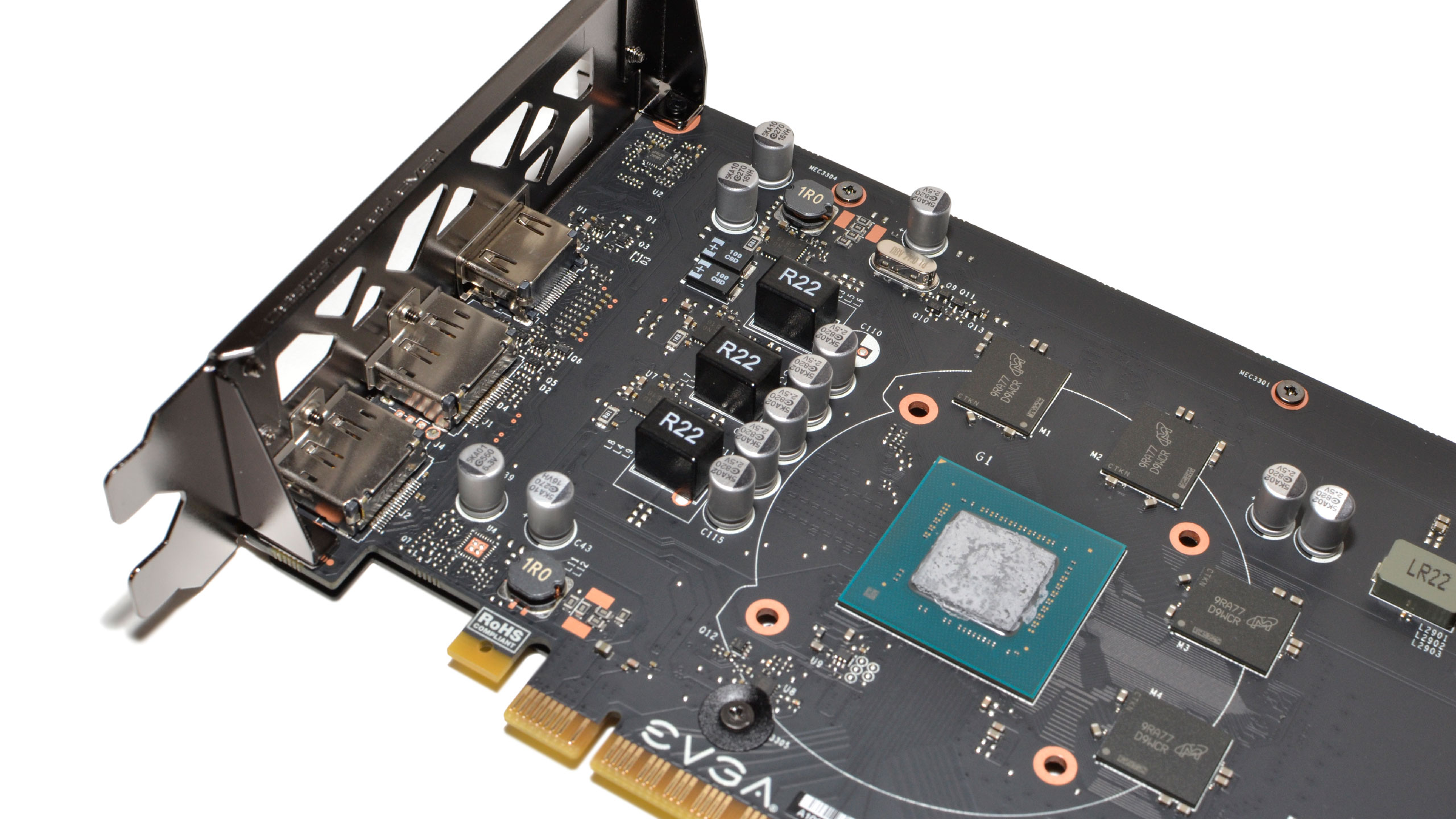

The cooler is relatively simple with a single heatpipe wrapping around to help disperse heat to the heatsink's fin array. Two 85mm axial fans provide airflow, with a plastic shroud helping to direct the air across the heatsink fins. While EVGA makes no mention of the fact, the card does appear to have 0dB fan tech that shuts off the fans when the GPU is idle. Even under load, the fans typically spin at less than 2000 RPM and are very quiet. Video ports consist of two DisplayPort 1.4 outputs and a single HDMI 2.0b port.

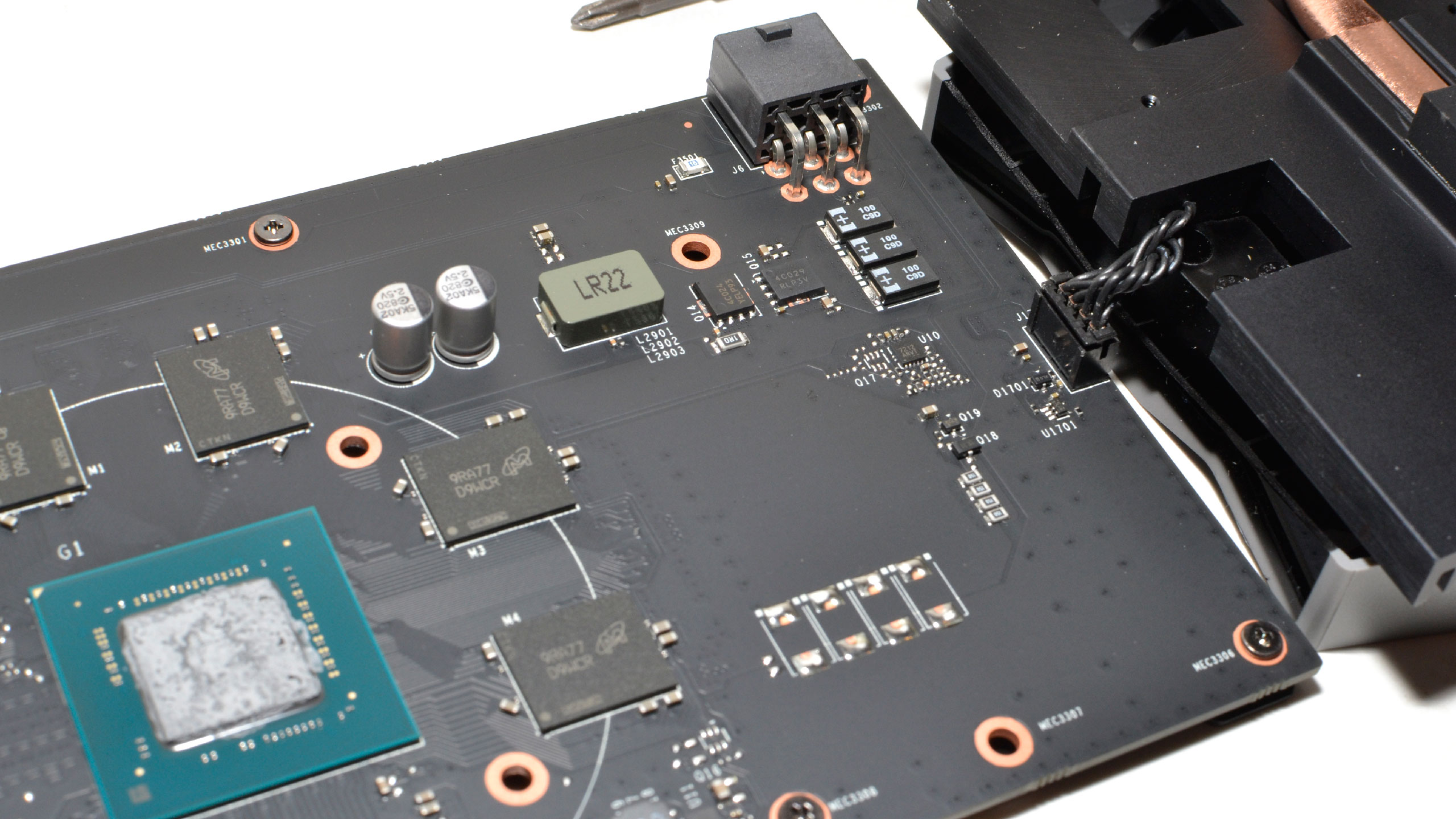

Popping off the cooler, the PCB and power circuitry are pretty tame compared to what we see on larger, higher performance graphics cards. And rightly so. There's a single 6-pin PEG (PCI Express Graphics) power connector providing extra power, probably thanks to the factory overclock. Theoretically, the GTX 1650 could run off just the PCIe x16 slot's 75W power, but EVGA tacks on a 6-pin PEG to ensure there's more than enough power on tap.

EVGA GeForce GTX 1650 GDDR6 SC Ultra: How We Test

Our current graphics card test system consists of Intel's Core i9-9900K, an 8-core/16-thread CPU that routinely ranks as the fastest overall gaming CPU. The MSI MEG Z390 Ace motherboard is paired with 2x16GB Corsair Vengeance Pro RGB DDR4-3200 CL16 memory (CMW32GX4M2C3200C16). Keeping the CPU cool is a Corsair H150i Pro RGB AIO. OS and gaming suite storage comes via a single XPG SX8200 Pro 2TB M.2 SSD.

The motherboard runs BIOS version 7B12v17. Optimized defaults were applied to set up the system, after which we enabled the memory's XMP profile to get the memory running at the rated 3200 MHz CL16 specification. No other BIOS changes or performance enhancements were enabled. The latest version of Windows 10 (1909) is used and is fully updated as of May 2020.

Our current list of test games consists of Borderlands 3 (DX12), The Division 2 (DX12), Far Cry 5 (DX11), Final Fantasy XIV: Shadowbringers (DX11), Forza Horizon 4 (DX12), Metro Exodus (DX12), Red Dead Redemption 2 (Vulkan), Shadow of the Tomb Raider (DX12), and Strange Brigade (Vulkan). These titles represent a broad spectrum of genres and APIs, which gives us a good idea of the relative performance differences between the cards. We're using driver build 445.87 for the Nvidia cards and Adrenalin 20.4.2 drivers for AMD. We've provided a selection of competing GPUs from both AMD and Nvidia for this review.

We capture our frames per second (fps) and frame time information by running OCAT during most of our benchmarks, and use the .csv files the built-in benchmarks create for The Division 2 and Metro Exodus. For GPU clocks, fan speed, and temperature data, we use GPU-Z's logging capabilities in conjunction with Powenetics software from Cybenetics that collects accurate graphics card power consumption.
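As a rough illustration of how a frame time log can be turned into fps figures, here is a minimal Python sketch. It is not the review's actual tooling: the function names are hypothetical, the `MsBetweenPresents` column name is an assumption based on PresentMon-style capture files, and the nearest-rank 99th percentile is just one common way to summarize the slowest frames.

```python
import csv
import io
import math


def read_frame_times(csv_file, column="MsBetweenPresents"):
    """Read per-frame times in milliseconds from a capture CSV.

    The column name is an assumption; adjust it to match the actual log.
    """
    reader = csv.DictReader(csv_file)
    return [float(row[column]) for row in reader]


def fps_summary(frame_times_ms):
    """Return (average fps, fps at the 99th-percentile frame time)."""
    times = sorted(frame_times_ms)
    if not times:
        raise ValueError("no frame times supplied")
    # Average fps is total frames divided by total elapsed time.
    avg_fps = 1000 * len(times) / sum(times)
    # Nearest-rank 99th percentile of frame time (slower frames sort last).
    rank = max(1, math.ceil(0.99 * len(times)))
    p99_ms = times[rank - 1]
    return avg_fps, 1000 / p99_ms


# Tiny made-up sample standing in for a real capture file.
sample = io.StringIO("MsBetweenPresents\n16.0\n16.0\n16.0\n32.0\n")
avg, p99 = fps_summary(read_frame_times(sample))
print(f"average: {avg:.2f} fps, 99th percentile: {p99:.2f} fps")
```

Dividing total frames by total time, rather than averaging per-frame fps values, keeps short bursts of fast frames from inflating the average.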


Jarred Walton is a senior editor at Tom's Hardware focusing on everything GPU. He has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.

NightHawkRMX

Cool, maybe Nvidia's new card is now only a few percent behind a 3-year-old RX570 that costs $40 less.

Hey at least it's not 20-25% slower and $40 more than the 3-year-old RX570 this time around.

Not impressed at all. My $80 used Sapphire Pulse RX570 4g would humiliate this card that costs double. So would my $89 Sapphire Nitro RX480 8g used.

King_V

Agreed - this is a little bit of a strange decision for Nvidia. I mean, maybe the switch to GDDR6 is just so they don't have to deal with GDDR5 anymore?

In any case, this really only slightly improves the poor standing of the 1650. It's still less capable than the RX 570 4GB. The only saving grace is significantly lower power draw. But "higher price and lower performance" tends to not be a strong selling point at this level, especially if a PCIe connector is going to be needed anyway.

EDIT: also, I didn't realize that the 5500 XT 8GB was as close to the 1660's performance as that. I had for some reason thought the gap between them was wider.

JarredWaltonGPU

> NightHawkRMX said: Cool, maybe Nvidia's new card is now only a few percent behind a 3-year-old RX570 that costs $40 less. Hey at least it's not 20-25% slower and $40 more than the 3-year-old RX570 this time around.
>
> Not impressed at all. My $80 used Sapphire Pulse RX570 4g would humiliate this card that costs double. So would my $89 Sapphire Nitro RX480 8g used.

Mostly agreed, though I have to say. I am super tired of the RX 570 4GB as well. It's not an efficient card, and the overall experience is underwhelming, but that's the case for just about every budget GPU. I'm not saying people should upgrade from 570 to a 1650 Super or whatever, but I wouldn't buy a 570 these days, unless it was under $100.

Really, for AMD GPUs, you want 8GB (or RX 5600 XT 6GB) -- stay away from 4GB cards. I generally recommend that same attitude for Nvidia, but Nvidia does a bit better with 4GB overall. Not with an underpowered GPU like TU117, though. Realistically, GTX 1650 GDDR5 should cost $120 now to warrant a recommendation, GTX 1650 GDDR6 for $140 would be fine, and GTX 1650 Super at $160 is good. But I'm not sure there's any margin left trying to sell 1650 at those prices.

> King_V said: Agreed - this is a little bit of a strange decision for Nvidia. I mean, maybe the switch to GDDR6 is just so they don't have to deal with GDDR5 anymore?
>
> In any case, this really only slightly improves the poor standing of the 1650. It's still less capable than the RX 570 4GB. The only saving grace is significantly lower power draw. But "higher price and lower performance" tends to not be a strong selling point at this level, especially if a PCIe connector is going to be needed anyway.
>
> EDIT: also, I didn't realize that the 5500 XT 8GB was as close to the 1660's performance as that. I had for some reason thought the gap between them was wider.

GTX 1660 is mostly tied with RX 590 (just a hair faster overall), which is also just a hair faster than the RX 5500 XT 8GB. It can vary by game -- Metro Exodus, RDR2, and Strange Brigade all seem to like the extra VRAM bandwidth of 590 more than other games -- but they're all very close. The 590 does use quite a bit more power, though.

King_V

> JarredWaltonGPU said: Mostly agreed, though I have to say. I am super tired of the RX 570 4GB as well. It's not an efficient card, and the overall experience is underwhelming, but that's the case for just about every budget GPU. I'm not saying people should upgrade from 570 to a 1650 Super or whatever, but I wouldn't buy a 570 these days, unless it was under $100.

Yeah, agreed there. Still, at one point a couple of months ago, a new RX 570 4GB was going for $99.99... considering that's with a full warranty, it was a pretty impressive deal. I haven't seen it less than $119.99 these days. Ironically, it's Nvidia's unreasonable 1650 pricing that's keeping the RX 570 viable. Though I suppose a GDDR5 version of the 1650 that has no need for a PCIe connector might get sales just based on that for smaller systems with smaller PSUs.

> JarredWaltonGPU said: GTX 1660 is mostly tied with RX 590 (just a hair faster overall), which is also just a hair faster than the RX 5500 XT 8GB. It can vary by game -- Metro Exodus, RDR2, and Strange Brigade all seem to like the extra VRAM bandwidth of 590 more than other games -- but they're all very close. The 590 does use quite a bit more power, though.

Yep - and, I'd have to say that, at, assuming the same price at a 5500XT 8GB, the R590 is a really hard sell given that high level (officially 225W) of draw.

King_V

> NightHawkRMX said: AMD's older cards are competing with their own cards like 5500xt.

I wholeheartedly agree, and that is a problem that AMD brought on themselves. But, at least for AMD, they're getting a sale either way.

Nvidia is almost driving people away from the 1650 and toward the AMD Polaris cards. It seems like they don't HAVE to lose the low-end, but are choosing to do so.

The saving grace for them is the 1650 Super, which both cannibalizes the 1650/GDDR5 and 1650/GDDR6, along with giving a better price/performance than the Polaris cards and direct competition against the 5500XT

Also, the 1660 (when discounted). Though, that might be considered straddling between low-and-mid range.

yeti_yeti

It's honestly not that bad of a card, however I feel like that 6-Pin power connector kind of ruins the entire purpose of the 1650, which is to be a power-efficient, plug-and-play gpu that could be used for upgrading older pcs with sub-par power supplies, that would otherwise be unable to handle a card like rx 570, that requires a power connector. That said, the price could have also been a bit more generous (something like $115-120 would seem pretty reasonable to me).

JarredWaltonGPU

> King_V said: The saving grace for them is the 1650 Super, which both cannibalizes the 1650/GDDR5 and 1650/GDDR6, along with giving a better price/performance than the Polaris cards and direct competition against the 5500XT
>
> Also, the 1660 (when discounted). Though, that might be considered straddling between low-and-mid range.

Yeah, 1650 Super is currently my top budget pick, but it's still in a precarious position. The 1660 can be found for under $200 at times and it's 15% faster, but then the proximity to the 1660 Super ($230 and another 15% faster) makes that questionable as well... but then 5600 XT is 23% faster and another $40. And then you hit diminishing returns.

5700 is only 10% faster than the 5600 XT (and costs 13% more, so still reasonable). 2060 is 4% slower but costs 11% more. And it only gets worse from there. 5700 XT is probably the next best step, and it's 10% faster than the 5700 but costs 21% more. 2060 Super costs 5% more than 5700 XT and is about 11% slower. Or 2070 Super is 5% faster than the 5700 XT but costs 36% more!

Honestly, right now it's hard to get excited about anything below the RX 5600 XT -- it offers a tremendous value proposition at the latest prices. You can make a case for just about any GPU with the right price, but performance and price combined with efficiency I'd definitely consider the 5600 XT, especially if you can find it on sale for $250 or so. Same with RX 5700. Of course, right now I'd also just wait and see what Ampere and RDNA2 bring to the party.

srfdude44

None of these can top the GTX1070. When I can afford a 2080, then I can upgrade, by then the 2080 will be where the 1070 is....sigh.

JarredWaltonGPU

> srfdude44 said: None of these can top the GTX1070. When I can afford a 2080, then I can upgrade, by then the 2080 will be where the 1070 is....sigh.

Yeah, if you have a 1070, your only real upgrade path is to move to at least a 2070, probably faster. Best bet is to wait and see what the RTX 3060 and AMD equivalent (RX 6600?) can offer in performance and price. Hopefully by next year you'll be able to get 2080 levels of performance for under $300. Maybe.